
5 software tools to support your systematic review processes

By Dr. Mina Kalantar on 19-Jan-2021 13:01:01

Systematic reviews are a reassessment of scholarly literature to facilitate decision making. This methodical approach of re-evaluating evidence was initially applied in healthcare, to set policies, create guidelines and answer medical questions.

Systematic reviews are large, complex projects and, depending on the purpose, they can be quite expensive to conduct. A team of researchers, data analysts and experts from various fields may collaborate to review and examine very large numbers of research articles for evidence synthesis. Depending on the scope, systematic reviews often take at least 6 months, and sometimes upwards of 18 months, to complete.

The main principles of transparency and reproducibility require a pragmatic approach in the organisation of the required research activities and detailed documentation of the outcomes. As a result, many software tools have been developed to help researchers with some of the tedious tasks required as part of the systematic review process.


The first generation of these software tools was produced to accommodate and manage collaborations, and the tools gradually developed to help with screening literature and reporting outcomes. Some of these software packages were initially designed for medical and healthcare studies and have specific protocols and customised steps integrated for various types of systematic reviews. Others are designed for general processing and, as the systematic review approach has been extended to other fields, they are increasingly being adopted in software engineering, health-related nutrition, agriculture, environmental science, social sciences and education.

Software tools

There are various free and subscription-based tools to help with conducting a systematic review. Many of these tools are designed to assist with the key stages of the process, including title and abstract screening, data synthesis, and critical appraisal. Some are designed to facilitate the entire process of review, including protocol development, reporting of the outcomes and help with fast project completion.

As time goes on, more functions are being integrated into such software tools. Technological advancement has allowed for more sophisticated and user-friendly features, including visual graphics for pattern recognition and linking multiple concepts. The idea is to digitalise the cumbersome parts of the process to increase efficiency, thus allowing researchers to focus their time and efforts on assessing the rigour and robustness of the research articles.

This article introduces commonly used systematic review tools that are relevant to food research and related disciplines, which can be used in a similar context to the process in healthcare disciplines.

These reviews are based on IFIS' internal research and are therefore unbiased; IFIS is not affiliated with the companies.

Covidence

This online platform is a core component of the Cochrane toolkit, supporting parts of the systematic review process, including title/abstract and full-text screening, documentation, and reporting.

The Covidence platform enables collaboration of the entire systematic reviews team and is suitable for researchers and students at all levels of experience.

From a user perspective, the interface is intuitive, and the citation screening is directed step-by-step through a well-defined workflow. Imports and exports are straightforward, with easy export options to Excel and CSV.

Access is free for Cochrane authors (a single reviewer), and Cochrane provides a free trial to other researchers in healthcare. Universities can also subscribe on an institutional basis.

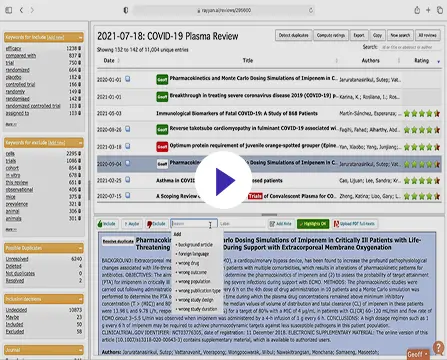

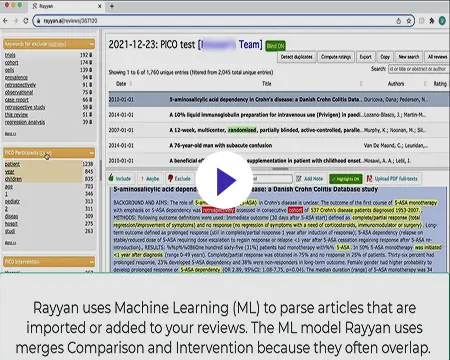

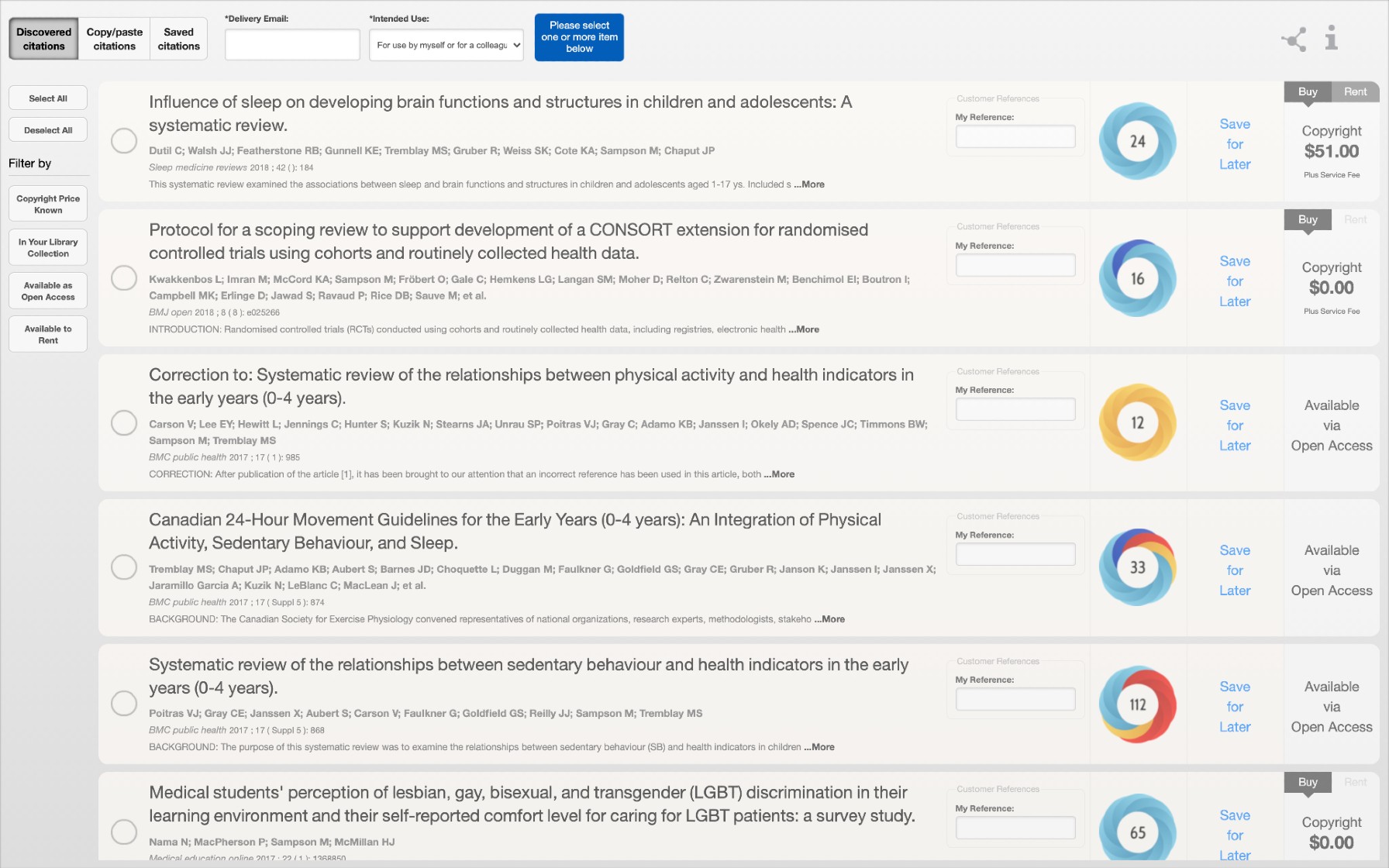

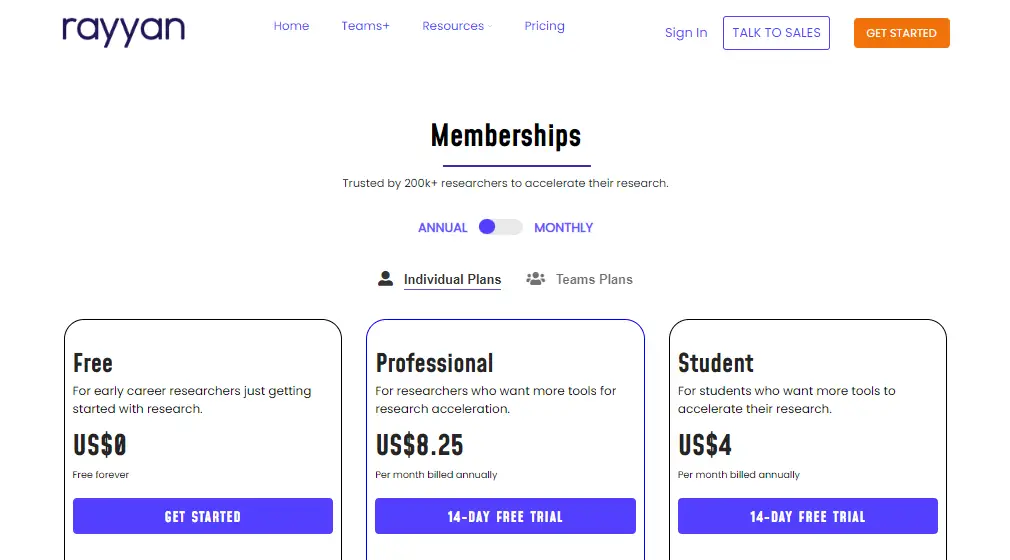

Rayyan

Rayyan is a free and open access web-based platform funded by the Qatar Foundation, a non-profit organisation supporting education and community development initiatives. Rayyan is used to screen and code literature through a systematic review process.

Unlike Covidence, Rayyan does not follow a standard SR workflow and simply helps with citation screening. It is accessible through a mobile application with compatibility for offline screening. The web-based platform is known for its accessible user interface, with easy and clear export options.

Function comparison of 5 software tools to support the systematic review process

EPPI-Reviewer

EPPI-Reviewer is a web-based software programme developed by the Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre) at the UCL Institute of Education, London.

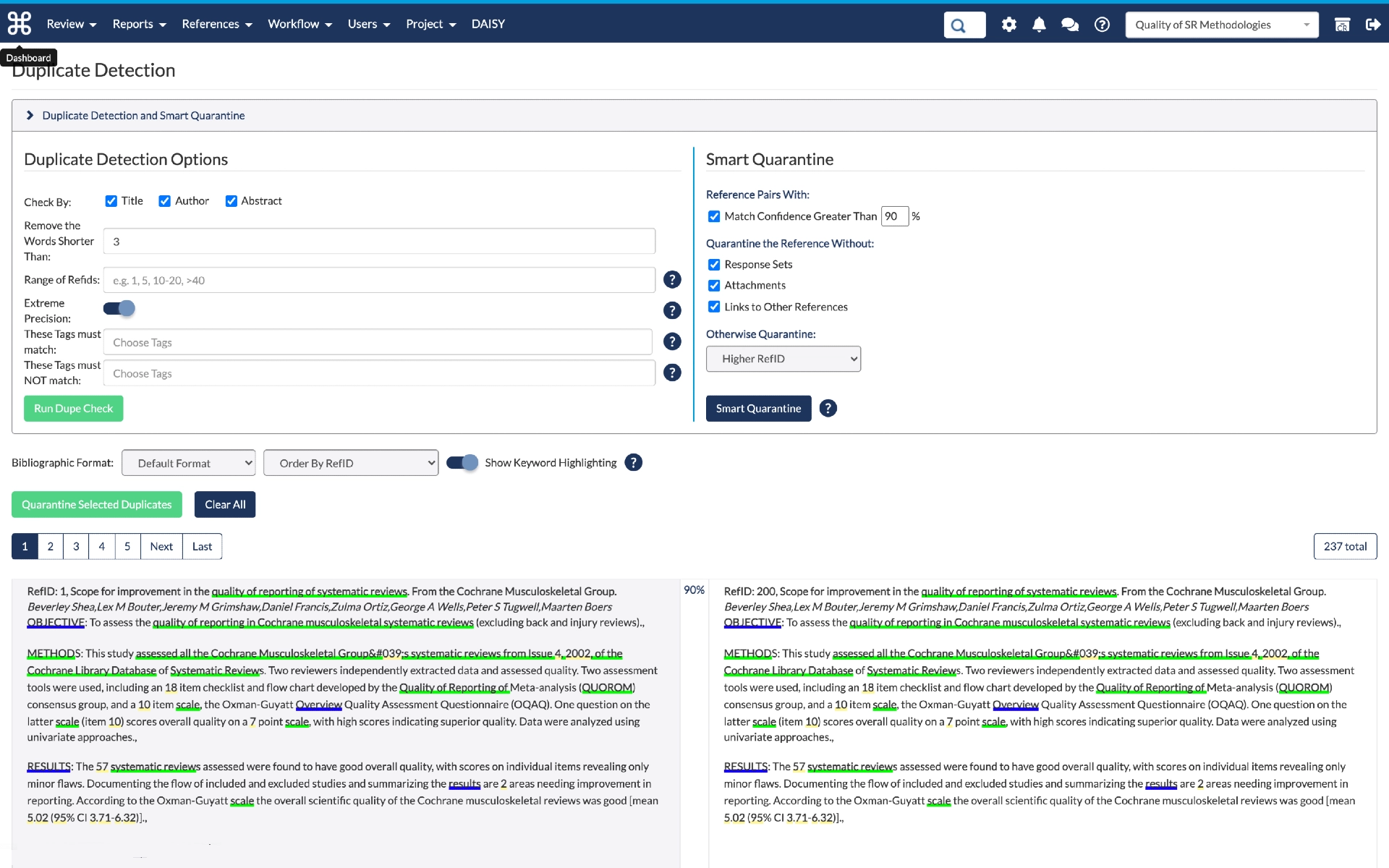

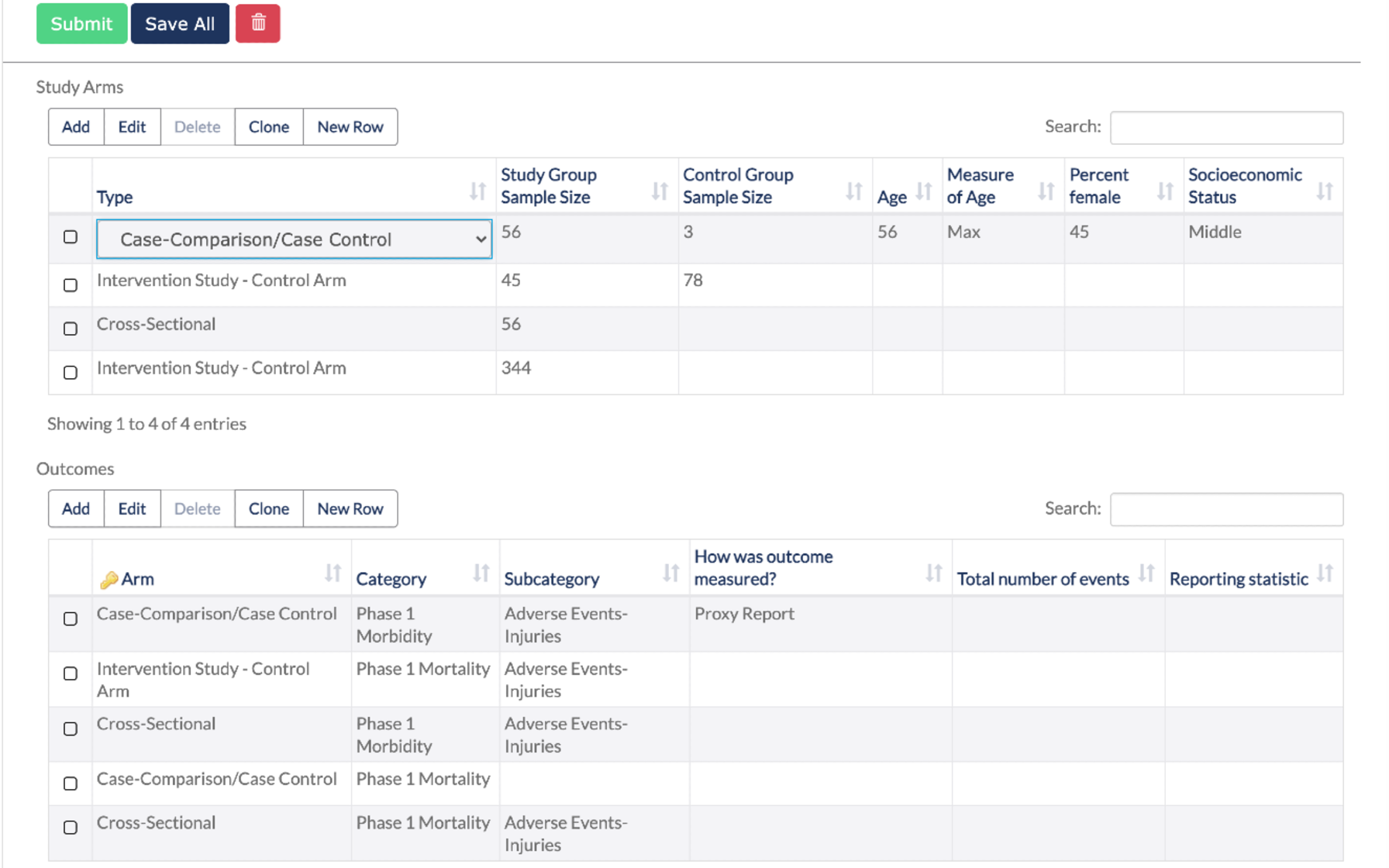

It provides comprehensive functionalities for coding and screening. Users can create different levels of coding in a code set tool for clustering, screening, and administration of documents. EPPI-Reviewer allows direct search and import from PubMed. Search results from other databases can be imported in different formats. It stores references, and identifies and removes duplicates automatically. EPPI-Reviewer allows full-text screening, text mining, meta-analysis and the export of data into different types of reports.
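The automatic duplicate removal mentioned above can be illustrated with a small sketch. How EPPI-Reviewer actually matches records is not described here, so the rule below (match on DOI when present, otherwise on a normalised title plus year) and the names `Record` and `deduplicate` are assumptions made purely for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    # Hypothetical minimal reference record; real exports carry many more fields.
    title: str
    year: int
    doi: Optional[str] = None


def _key(rec: Record) -> tuple:
    """Build a matching key: DOI if available, else normalised title + year."""
    if rec.doi:
        return ("doi", rec.doi.strip().lower())
    # Lower-case the title and collapse punctuation and whitespace differences.
    title = re.sub(r"[^a-z0-9]+", " ", rec.title.lower()).strip()
    return ("title", title, rec.year)


def deduplicate(records: list[Record]) -> list[Record]:
    """Keep the first occurrence of each record, preserving input order."""
    seen = set()
    unique = []
    for rec in records:
        key = _key(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique
```

A rule this simple will miss a duplicate pair in which only one copy carries a DOI, which is one reason dedicated tools tend to combine several matching strategies.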

There is no limit on concurrent use of the software or on the number of articles being reviewed. Cochrane reviewers can access EPPI-Reviewer using their Cochrane subscription details.

EPPI-Centre has other tools for facilitating the systematic review process, including coding guidelines and data management tools.

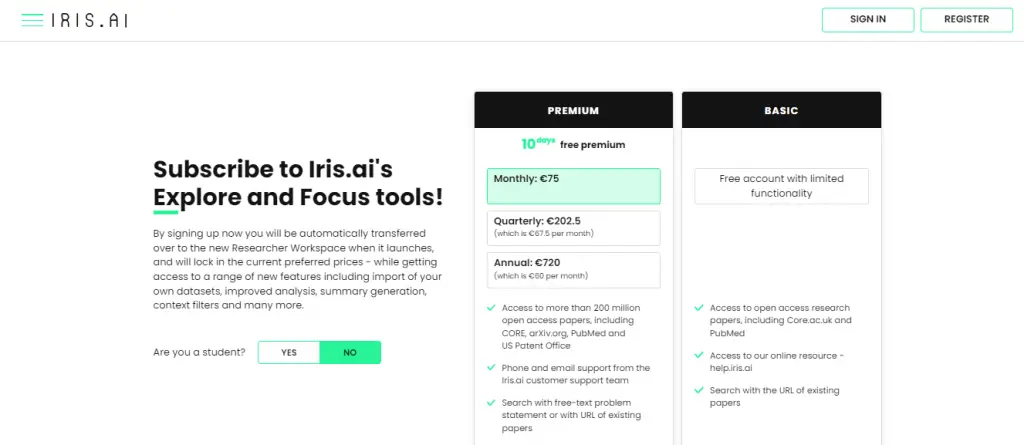

CADIMA

CADIMA is a free, online, open access review management tool, developed to facilitate research synthesis and structure documentation of the outcomes.

The Julius Kühn Institute (JKI) and the Collaboration for Environmental Evidence established the software programme to support and guide users through the entire systematic review process, including protocol development, literature searching, study selection, critical appraisal, and documentation of the outcomes. The flexibility in choosing the steps also makes CADIMA suitable for conducting systematic mapping and rapid reviews.

CADIMA was initially developed for research questions in agriculture and environment but it is not limited to these, and as such, can be used for managing review processes in other disciplines. It enables users to export files and work offline.

The software allows for statistical analysis of the collated data using the R statistical software. Unlike EPPI-Reviewer, CADIMA does not have a built-in search engine to allow for searching in literature databases like PubMed.

DistillerSR

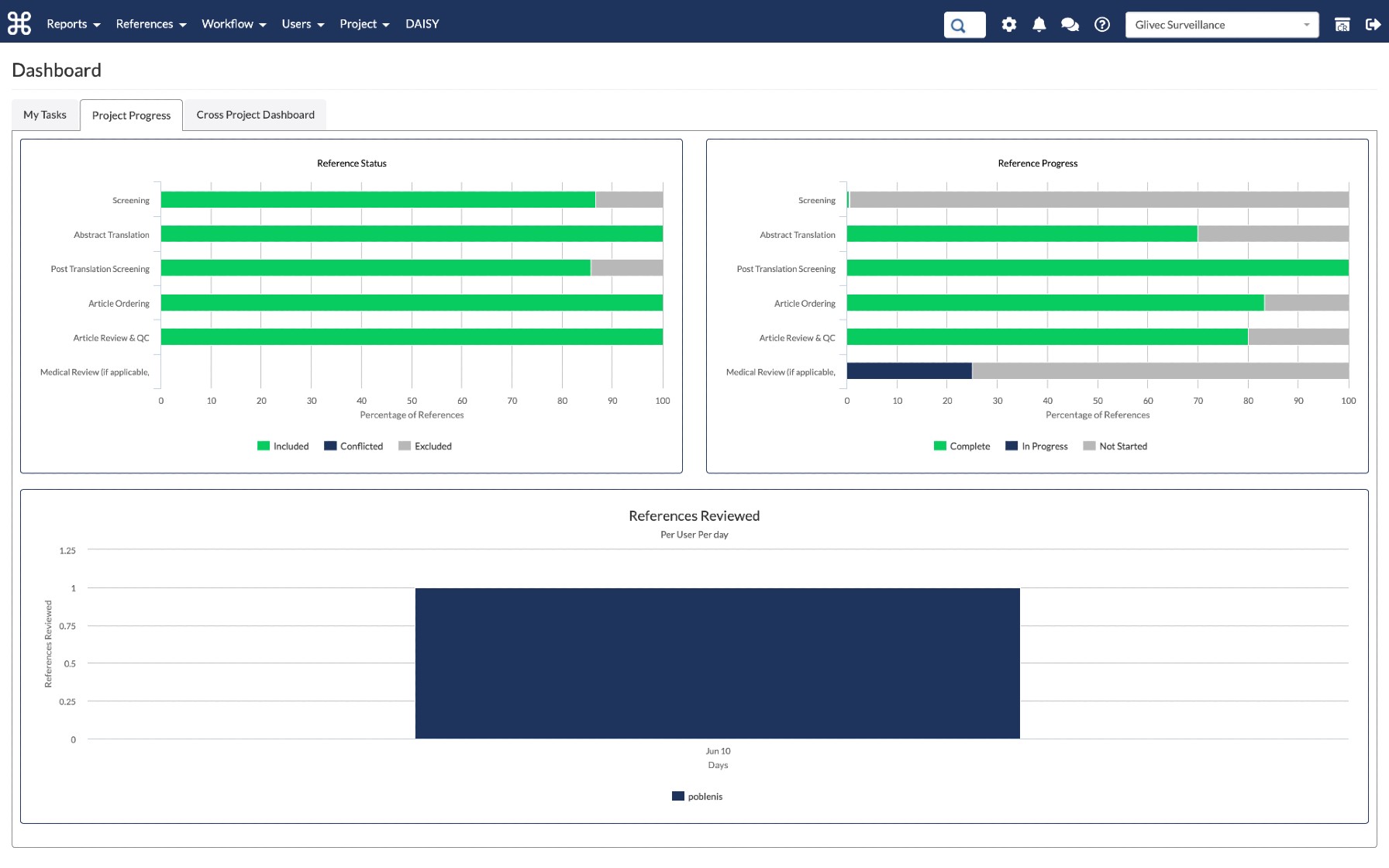

DistillerSR is online software maintained by the Canadian company Evidence Partners, which specialises in literature review automation. It provides a collaborative platform for every stage of literature review management. The framework is flexible and can accommodate literature reviews of different sizes, and it is configurable to different data curation procedures, workflows and reporting standards. The platform integrates the features needed for screening, quality assessment, data extraction and reporting, and provides configurable forms in standard formats for data extraction. The software does not support statistical analyses.

DistillerSR is used to manage systematic reviews in various medical disciplines, as well as surveillance, pharmacovigilance and public health reviews, including food and nutrition topics.

The software uses artificial intelligence (AI)-enabled technologies in priority screening, which shortens the screening process by re-ranking the references so that the most relevant appear nearer the top. AI can also act as a second reviewer in quality control checks of studies screened by human reviewers.
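The idea behind priority screening, re-ranking unscreened references so that likely-relevant ones surface first, can be sketched in a few lines. DistillerSR's own model is not described in this article, so the word-overlap (Jaccard) score and the function names below are a deliberately simple stand-in, assumed for illustration only.

```python
import re


def tokens(text: str) -> set[str]:
    """Split text into a set of lower-case word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def relevance(candidate: str, included: list[str]) -> float:
    """Highest Jaccard similarity between a candidate and any included abstract."""
    cand = tokens(candidate)
    best = 0.0
    for text in included:
        ref = tokens(text)
        union = cand | ref
        if union:
            best = max(best, len(cand & ref) / len(union))
    return best


def prioritise(unscreened: list[str], included: list[str]) -> list[str]:
    """Return unscreened abstracts ordered from most to least similar."""
    return sorted(unscreened, key=lambda t: relevance(t, included), reverse=True)
```

In practice the ranking would be refreshed as reviewers make more include and exclude decisions, and a production system would use a trained classifier rather than raw word overlap.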

DistillerSR allows direct search and import of references from PubMed. It provides an add-on feature called LitConnect, which can be set to automatically import newly published references from data providers to keep reviews up to date while they are in progress.

Systematic Review Toolbox

The Systematic Review Toolbox is a web-based catalogue of various tools, including software packages, which can assist with single or multiple tasks within the evidence synthesis process. Researchers can run a quick search or tailor a more sophisticated search by choosing their approach, budget, discipline, and preferred support features, to find the right tools for their research.

If you enjoyed this blog post, you may also be interested in our recently published blog post addressing the difference between a systematic review and a systematic literature review.


COLLABORATE ON YOUR REVIEWS WITH ANYONE, ANYWHERE, ANYTIME

Save precious time and maximize your productivity with a Rayyan membership. Receive training, priority support, and access features to complete your systematic reviews efficiently.

Rayyan Teams+ makes your job easier. It includes VIP Support, AI-powered in-app help, and powerful tools to create, share and organize systematic reviews, review teams, searches, and full-texts.

RESEARCHERS

Rayyan makes collaborative systematic reviews faster, easier, and more convenient. Training, VIP support, and access to new features maximize your productivity. Get started now!

Over 1 billion reference articles reviewed by research teams, and counting...

Intelligent, scalable and intuitive.

Rayyan understands language, learns from your decisions and helps you work quickly through even your largest systematic literature reviews.


Solutions for Organizations and Businesses

Rayyan Enterprise and Rayyan Teams+ make it faster, easier and more convenient for you to manage your research process across your organization.

- Accelerate your research across your team or organization and save valuable researcher time.

- Build and preserve institutional assets, including literature searches, systematic reviews, and full-text articles.

- Onboard team members quickly with access to group trainings for beginners and experts.

- Receive priority support to stay productive when questions arise.


Join now to learn why Rayyan is already trusted by more than 500,000 researchers

Individual plans, teams plans.

For early career researchers just getting started with research.

Free forever

- 3 Active Reviews

- Invite Unlimited Reviewers

- Import Directly from Mendeley

- Industry Leading De-Duplication

- 5-Star Relevance Ranking

- Advanced Filtration Facets

- Mobile App Access

- 100 Decisions on Mobile App

- Standard Support

- Revoke Reviewer

- Online Training

- PICO Highlights & Filters

- PRISMA (Beta)

- Auto-Resolver

- Multiple Teams & Management Roles

- Monitor & Manage Users, Searches, Reviews, Full Texts

- Onboarding and Regular Training

Professional

For researchers who want more tools for research acceleration.

Per month billed annually

- Unlimited Active Reviews

- Unlimited Decisions on Mobile App

- Priority Support

- Auto-Resolver

For currently enrolled students with valid student ID.

Per month billed annually

Billed monthly

For a team that wants professional licenses for all members.

Per-user, per month, billed annually

- Single Team

- High Priority Support

For teams that want support and advanced tools for members.

- Multiple Teams

- Management Roles

For organizations who want access to all of their members.

Annual Subscription

Contact Sales

- Organizational Ownership

- For an organization or a company

- Access to all the premium features such as PICO Filters, Auto-Resolver, PRISMA and Mobile App

- Store and Reuse Searches and Full Texts

- A management console to view, organize and manage users, teams, review projects, searches and full texts

- Highest tier of support – Support via email, chat and AI-powered in-app help

- GDPR Compliant

- Single Sign-On

- API Integration

- Training for Experts

- Training Sessions for Students Each Semester

- More options for secure access control


Great usability and functionality. Rayyan has saved me countless hours. I even received timely feedback from staff when I did not understand the capabilities of the system, and was pleasantly surprised with the time they dedicated to my problem. Thanks again!

This is a great piece of software. It has made the independent viewing process so much quicker. The whole thing is very intuitive.

Rayyan makes ordering articles and extracting data very easy. A great tool for undertaking literature and systematic reviews!

Excellent interface to do title and abstract screening. Also helps to keep a track on the reasons for exclusion from the review. That too in a blinded manner.

Rayyan is a fantastic tool to save time and improve systematic reviews!!! It has changed my life as a researcher!!! thanks

Easy to use, friendly, has everything you need for cooperative work on the systematic review.

Rayyan makes life easy in every way when conducting a systematic review and it is easy to use.


The World's #1 Systematic Review Tool

See your systematic reviews like never before

Faster reviews.

An average 35% reduction in time spent per review, saving an average of 71 hours per review.

Expert, online support

Easy to learn and use, with 24/7 support from product experts who are also seasoned reviewers!

Seamless collaboration

Enable the whole review team to collaborate from anywhere.

Suits all levels of experience and sectors

Suitable for reviewers in a variety of sectors including health, education, social science and many others.

Supporting the world's largest systematic review community

See how it works.

Step inside Covidence to see a more intuitive, streamlined way to manage systematic reviews.

Unlimited use for every organization

With no restrictions on reviews and users, Covidence gets out of the way so you can bring the best evidence to the world, more quickly.

Covidence is used by world-leading evidence organizations

Whether you’re an academic institution, a hospital or society, Covidence is working for organizations like yours right now.


How Covidence has enabled living guidelines for Australians impacted by stroke

Clinical guidelines took 7 years to update prior to moving to a living evidence approach. Learn how Covidence streamlined workflows and created real time savings for the guidelines team.

University of Ottawa Drives Systematic Review Excellence Across Many Academic Disciplines

Top Ranked U.S. Teaching Hospital Delivers Effective Systematic Review Management

Better systematic review management

Head office, working for an institution or organisation.

Find out why over 350 of the world’s leading institutions are seeing a surge in publications since using Covidence!

Request a consultation with one of our team members and start empowering your researchers:


Cochrane RevMan

RevMan: Systematic review and meta-analysis tool for researchers worldwide

Pricing & subscription

Intuitive and easy to use

High quality support and guidance

Promotes high quality research

Collaborate on the same project

Systematic Reviews and Meta Analysis


Software and Tools


Covidence is a web-based tool for managing the review workflow. Tools for screening records, managing full-text articles, and extracting data make the process much less burdensome. Covidence is currently available to Harvard investigators with an hms.harvard.edu, hsdm.harvard.edu, or hsph.harvard.edu email address. To make use of Harvard's institutional account:

1. If you don't already have a Covidence account, sign up for one at: https://www.covidence.org/signups/new Make sure you use your hms, hsph, or hsdm Harvard email address.

2. Then associate your account with Harvard's institutional access at: https://www.covidence.org/organizations/58RXa/signup Use the same address you used in step 1 and follow the instructions in the resulting email.

3. To set up a project, go to your account dashboard page and click the 'Start a new review' button. Make sure you choose "Harvard University Libraries" under "which account" on the project creation page.

Rayyan is an alternative review manager that has a free option. It has ranking and sorting options lacking in Covidence but takes more time to learn. We do not provide support for Rayyan.

Other Review Software Systems

There are a number of tools available to help a team manage the systematic review process. Notable examples include Eppi-Reviewer, DistillerSR, and PICO Portal. These are subscription-based services but in some cases offer a trial project. Use the Systematic Review Toolbox to explore more options.

Citation Managers

Citation managers like EndNote or Zotero can be used to collect, manage and de-duplicate bibliographic records and full-text documents but are considerably more painful to use than specialized systematic review applications. Of course, they are handy for writing up your report.

Need more, or looking for alternatives? See the SR Toolbox, a searchable database of tools to support systematic reviews and meta-analysis.


- Last Updated: Feb 26, 2024 3:17 PM

- URL: https://guides.library.harvard.edu/meta-analysis

Accelerate your research with the best systematic literature review tools

The ideal literature review tool helps you make sense of the most important insights in your research field. ATLAS.ti empowers researchers to perform powerful and collaborative analysis using the leading software for literature review.

Finalize your literature review faster with comfort

ATLAS.ti makes it easy to manage, organize, and analyze articles, PDFs, excerpts, and more for your projects. Conduct a deep systematic literature review and get the insights you need with a comprehensive toolset built specifically for your research projects.

Figure out the "why" behind your participant's motivations

Understand the behaviors and emotions that are driving your focus group participants. With ATLAS.ti, you can transform your raw data and turn it into qualitative insights you can learn from. Easily determine user intent in the same spot you're deciphering your overall focus group data.

Visualize your research findings like never before

We make it simple to present your analysis results with meaningful charts, networks, and diagrams. Instead of figuring out how to communicate the insights you just unlocked, we enable you to leverage easy-to-use visualizations that support your goals.

Everything you need to elevate your literature review

Import and organize literature data.

Import and analyze any type of text content – ATLAS.ti supports all standard text and transcription files such as Word and PDF.

Analyze with ease and speed

Utilize easy-to-learn workflows that save valuable time, such as auto coding, sentiment analysis, team collaboration, and more.

Leverage AI-driven tools

Make efficiency a priority and let ATLAS.ti do your work with AI-powered research tools and features for faster results.

Visualize and present findings

With just a few clicks, you can create meaningful visualizations like charts, word clouds, tables, networks, among others for your literature data.

The faster way to make sense of your literature review. Try it for free, today.

A literature review analyzes the most current research within a research area. A literature review consists of published studies from many sources:

- Peer-reviewed academic publications

- Full-length books

- University bulletins

- Conference proceedings

- Dissertations and theses

Literature reviews allow researchers to:

- Summarize the state of the research

- Identify unexplored research inquiries

- Recommend practical applications

- Critique currently published research

Literature reviews are either standalone publications or part of a paper as background for an original research project. A literature review, as a section of a more extensive research article, summarizes the current state of the research to justify the primary research described in the paper.

For example, a researcher may have reviewed the literature on a new supplement's health benefits and concluded that more research needs to be conducted on those with a particular condition. This research gap warrants a study examining how this understudied population reacted to the supplement. Researchers need to establish this research gap through a literature review to persuade journal editors and reviewers of the value of their research.

Consider a literature review as a typical research publication presenting a study, its results, and the salient points scholars can infer from the study. The only significant difference is that a literature review treats existing literature as the research data to collect and analyze. From that analysis, a literature review can suggest new inquiries to pursue.

Identify a focus

Similar to a typical study, a literature review should have a research question or questions that analysis can answer. This sort of inquiry typically targets a particular phenomenon, population, or even research method to examine how different studies have looked at the same thing differently. A literature review, then, should center the literature collection around that focus.

Collect and analyze the literature

With a focus in mind, a researcher can collect studies that provide relevant information for that focus. They can then analyze the collected studies by finding and identifying patterns or themes that occur frequently. This analysis allows the researcher to point out what the field has frequently explored or, on the other hand, overlooked.

Suggest implications

The literature review allows the researcher to argue a particular point through the evidence provided by the analysis. For example, suppose the analysis makes it apparent that the published research on people's sleep patterns has not adequately explored the connection between sleep and a particular factor (e.g., television-watching habits, indoor air quality). In that case, the researcher can argue that further study can address this research gap.

External requirements aside (e.g., many academic journals have a word limit of 6,000-8,000 words), a literature review as a standalone publication is as long as necessary to allow readers to understand the current state of the field. Even if it is just a section in a larger paper, a literature review is long enough to allow the researcher to justify the study that is the paper's focus.

Note that a literature review needs only to incorporate a representative number of studies relevant to the research inquiry. For term papers in university courses, 10 to 20 references might be appropriate for demonstrating analytical skills. Published literature reviews in peer-reviewed journals might have 40 to 50 references. One of the essential goals of a literature review is to persuade readers that you have analyzed a representative segment of the research you are reviewing.

Researchers can find published research from various online sources:

- Journal websites

- Research databases

- Search engines (Google Scholar, Semantic Scholar)

- Research repositories

- Social networking sites (Academia, ResearchGate)

Many journals make articles freely available under the term "open access," meaning that there are no restrictions to viewing and downloading such articles. Otherwise, collecting research articles from restricted journals usually requires access from an institution such as a university or a library.

Evidence of a rigorous literature review is more important than the word count or the number of articles that undergo data analysis. Especially when writing for a peer-reviewed journal, it is essential to consider how to demonstrate research rigor in your literature review to persuade reviewers of its scholarly value.

Select field-specific journals

The most significant research relevant to your field is concentrated in a narrow set of journals similar in aims and scope. Consider who the most prominent scholars in your field are and determine which journals publish their research or have them as editors or reviewers. Journals tend to look favorably on systematic reviews that include articles they have published.

Incorporate recent research

Recently published studies have greater value in determining the gaps in the current state of research. Older research is likely to have encountered challenges and critiques that may render its findings outdated or refuted. What counts as recent differs by field; start by looking for research published within the last three years and gradually expand to older research when you need to collect more articles for your review.

Consider the quality of the research

Literature reviews are only as strong as the quality of the studies that the researcher collects. You can judge any particular study by many factors, including:

- the quality of the article's journal

- the article's research rigor

- the timeliness of the research

The critical point here is that you should consider more than just a study's findings or research outputs when including research in your literature review.

Narrow your research focus

Ideally, the articles you collect for your literature review have something in common, such as a research method or research context. For example, if you are conducting a literature review about teaching practices in high school contexts, it is best to narrow your literature search to studies focusing on high school. You should consider expanding your search to junior high school and university contexts only when there are not enough studies that match your focus.

You can create a project in ATLAS.ti for keeping track of your collected literature. ATLAS.ti allows you to view and analyze full text articles and PDF files in a single project. Within projects, you can use document groups to separate studies into different categories for easier and faster analysis.

For example, a researcher with a literature review that examines studies across different countries can create document groups labeled "United Kingdom," "Germany," and "United States," among others. A researcher can also use ATLAS.ti's global filters to narrow analysis to a particular set of studies and gain insights about a smaller set of literature.

ATLAS.ti allows you to search, code, and analyze text documents and PDF files. You can treat a set of research articles like other forms of qualitative data. The codes you apply to your literature collection allow for analysis through many powerful tools in ATLAS.ti:

- Code Co-Occurrence Explorer

- Code Co-Occurrence Table

- Code-Document Table
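To make the idea of code co-occurrence concrete, here is a minimal sketch that counts how often two codes are applied to the same coded segment. It is not ATLAS.ti's implementation; the input structure (a mapping from a segment identifier to the set of codes applied to it) and the function name `co_occurrence` are assumptions for illustration.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(coded_segments: dict[str, set[str]]) -> Counter:
    """Count how many segments each unordered pair of codes shares."""
    counts = Counter()
    for codes in coded_segments.values():
        # Sort so that each pair is always stored in the same order.
        for pair in combinations(sorted(codes), 2):
            counts[pair] += 1
    return counts
```

Calling `co_occurrence(...).most_common()` on a coded literature set lists the code pairs that appear together most often, which is one way to spot themes that studies tend to treat jointly.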

Other tools in ATLAS.ti employ machine learning to facilitate parts of the coding process for you. Some of our software tools that are effective for analyzing literature include:

- Named Entity Recognition

- Opinion Mining

- Sentiment Analysis

As long as your documents are text documents or text-enabled PDF files, ATLAS.ti's automated tools can provide essential assistance in the data analysis process.


JMIR Med Inform. 2022 May; 10(5).

Web-Based Software Tools for Systematic Literature Review in Medicine: Systematic Search and Feature Analysis

Kathryn Cowie, Asad Rahmatullah, Nicole Hardy, Kevin Kallmes

Nested Knowledge, Saint Paul, MN, United States

Associated Data

Supplementary Table 1: Screening Decisions for SR (systematic review) Tools Reviewed in Full.

Supplementary Table 2: Inter-observer Agreement across (1) Systematic Review (SR) Tools and (2) Features Assessed.

Background

Systematic reviews (SRs) are central to evaluating therapies but have high costs in terms of both time and money. Many software tools exist to assist with SRs, but most tools do not support the full process, and transparency and replicability of SR depend on performing and presenting evidence according to established best practices.

Objective

This study aims to provide a basis for comparing and selecting between web-based software tools that support SR, by conducting a feature-by-feature comparison of SR tools.

Methods

We searched for SR tools by reviewing any such tool listed in the SR Toolbox, previous reviews of SR tools, and qualitative Google searching. We included all SR tools that were currently functional and required no coding, and excluded reference managers, desktop applications, and statistical software. The list of features to assess was populated by combining all features assessed in 4 previous reviews of SR tools; we also added 5 features (manual addition, screening automation, dual extraction, living review, and public outputs) that were independently noted as best practices or enhancements of transparency and replicability. Then, 2 reviewers assigned binary present or absent assessments to all SR tools with respect to all features, and a third reviewer adjudicated all disagreements.
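The dual-assessment procedure described above (two reviewers rate each tool and feature as present or absent, and a third settles disagreements) can be sketched as follows. The data layout, with ratings keyed by hypothetical `(tool, feature)` pairs, and the function names are assumptions for illustration and are not taken from the study's materials.

```python
def percent_agreement(a: dict, b: dict) -> float:
    """Share of (tool, feature) pairs on which both reviewers gave the same rating."""
    keys = a.keys() & b.keys()
    if not keys:
        raise ValueError("the reviewers have no rated items in common")
    matches = sum(a[k] == b[k] for k in keys)
    return 100 * matches / len(keys)


def adjudicate(a: dict, b: dict, third: dict) -> dict:
    """Keep ratings the two reviewers agree on; otherwise use the third reviewer's rating."""
    return {k: a[k] if a[k] == b[k] else third[k] for k in a.keys() & b.keys()}
```

Simple percent agreement of this kind does not correct for agreement expected by chance; a statistic such as Cohen's kappa would be needed for that.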

Results

Of the 53 SR tools found, 55% (29/53) were excluded, leaving 45% (24/53) for assessment. In total, 30 features were assessed across 6 classes, and the interobserver agreement was 86.46%. Giotto Compliance (27/30, 90%), DistillerSR (26/30, 87%), and Nested Knowledge (26/30, 87%) support the most features, followed by EPPI-Reviewer Web (25/30, 83%), LitStream (23/30, 77%), JBI SUMARI (21/30, 70%), and SRDB.PRO (VTS Software) (21/30, 70%). Fewer than half of all the features assessed are supported by 7 tools: RobotAnalyst (National Centre for Text Mining), SRDR (Agency for Healthcare Research and Quality), SyRF (Systematic Review Facility), Data Abstraction Assistant (Center for Evidence Synthesis in Health), SR Accelerator (Institute for Evidence-Based Healthcare), RobotReviewer (RobotReviewer), and COVID-NMA (COVID-NMA). Notably, of the 24 tools, only 10 (42%) support direct search, only 7 (29%) offer dual extraction, and only 13 (54%) offer living/updatable reviews.
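The percentages reported above follow directly from the counts. As a quick check, the sketch below recomputes them, using only the figures quoted in this paragraph; the helper name `coverage` is an assumption for illustration.

```python
TOTAL_FEATURES = 30  # number of features assessed, as stated in the text

# Supported-feature counts as reported in the paragraph above.
supported = {
    "Giotto Compliance": 27,
    "DistillerSR": 26,
    "Nested Knowledge": 26,
    "EPPI-Reviewer Web": 25,
    "LitStream": 23,
    "JBI SUMARI": 21,
    "SRDB.PRO": 21,
}


def coverage(count: int, total: int = TOTAL_FEATURES) -> int:
    """Percentage of the total, rounded to the nearest whole number."""
    return round(100 * count / total)


for tool, count in supported.items():
    print(f"{tool}: {count}/{TOTAL_FEATURES} = {coverage(count)}%")
```

The same helper reproduces the tool-level figures, for example `coverage(29, 53)` for the excluded tools and `coverage(10, 24)` for tools supporting direct search.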

Conclusions

DistillerSR, Nested Knowledge, and EPPI-Reviewer Web each offer a high density of SR-focused web-based tools. By transparent comparison and discussion regarding SR tool functionality, the medical community can both choose among existing software offerings and note the areas of growth needed, most notably in the support of living reviews.

Introduction

Systematic review costs and gaps.

According to the Centre for Evidence-Based Medicine, systematic reviews (SRs) of high-quality primary studies represent the highest level of evidence for evaluating therapeutic performance [ 1 ]. However, although vital to evidence-based medical practice, SRs are time-intensive, taking an average of 67.3 weeks to complete [ 2 ] and costing leading research institutions over US $141,000 in labor per published review [ 3 ]. Owing to the high costs in researcher time and complexity, up-to-date reviews cover only 10% to 17% of primary evidence in a representative analysis of the lung cancer literature [ 4 ]. Although many qualitative and noncomprehensive publications provide some level of summative evidence, SRs—defined as reviews of “evidence on a clearly formulated question that use systematic and explicit methods to identify, select and critically appraise relevant primary research, and to extract and analyze data from the studies that are included” [ 5 ]—are distinguished by both their structured approach to finding, filtering, and extracting from underlying articles and the resulting comprehensiveness in answering a concrete medical question.

Software Tools for Systematic Review

Software tools that assist with central SR activities—retrieval (searching or importing records), appraisal (screening of records), synthesis (content extraction from underlying studies), and documentation/output (presentation of SR outputs)—have shown promise in reducing the amount of effort needed in a given review [ 6 ]. Because of the time savings of web-based software tools, institutions and individual researchers engaged in evidence synthesis may benefit from using these tools in the review process [ 7 ].

Existing Studies of Software Tools

However, choosing among the existing software tools presents a further challenge to researchers; in the SR Toolbox [ 8 ], there are >240 tools indexed, of which 224 support health care reviews. Vitally, few of these tools can be used for each of the steps of SR, so comparing the features available through each tool can assist researchers in selecting an SR tool to use. This selection can be informed by feature analysis; for example, a previously published feature analysis compared 15 SR tools [ 9 ] across 21 subfeatures of interest and found that DistillerSR (Evidence Partners), EPPI-Reviewer (EPPI-Centre), SWIFT-Active Screener (Sciome), and Covidence (Cochrane) support the greatest number of features as of 2019. Harrison et al [ 10 ], Marshall et al [ 11 ], and Kohl et al [ 12 ] have completed similar analyses, but each feature assessment selected a different set of features and used different qualitative feature assessment methods, and none covered all SR tools currently available.

The SR tool landscape continues to evolve: existing tools are updated, new software is made available to researchers, and new feature classes are developed. For instance, despite growing calls for living SRs (that is, reviews whose outputs are updated as new primary evidence becomes available), no feature analysis has yet covered this novel capability. Furthermore, the leading feature analyses [ 9 - 12 ] have focused on the screening phase of review, meaning that no comparison of data extraction capabilities has yet been published.

Feature Analysis of Systematic Review Tools

The authors, who are also the developers of the Nested Knowledge platform for SR and meta-analysis (Nested Knowledge, Inc) [ 13 ], have noted the lack of SR feature comparison among new tools and across all feature classes (retrieval, appraisal, synthesis, documentation/output, administration of reviews, and access/support features). To provide an updated feature analysis comparing SR software tools, we performed a feature analysis covering the full life cycle of SR across software tools.

Search Strategy

We searched for SR tools to assess in 3 ways. First, we identified any SR tool that was published in existing reviews of SR tools (Table S1 in Multimedia Appendix 1 ). Second, we reviewed SR Toolbox [ 8 ], a repository of indexed software tools that support the SR process. Third, we performed a Google search for “Systematic review software” and identified any software tool that was among the first 5 pages of results. Furthermore, for any library resource pages that were among the search results, we included any SR tools mentioned by the library resource page that met our inclusion criteria. The search was completed between June and August 2021. Four additional tools, namely SRDR+ (Agency for Healthcare Research and Quality), Systematic Review Assistant-Deduplication Module (Institute for Evidence-Based Healthcare), Giotto Compliance, and Robotsearch (Robotsearch), were assessed in December 2021 following reviewer feedback.

Selection of Software Tools

The inclusion and exclusion criteria were determined by 3 authors (KK, KH, and KC). Among our search results, we queued up all software tools that had descriptions meeting our inclusion criteria for full examination of the software in a second round of review. We included any that were functioning web-based tools that require no coding by the user to install or operate, so long as they were used to support the SR process and can be used to review clinical or preclinical literature. The no coding requirement was established because the target audience of this review is medical researchers who are selecting a review software to use; thus, we aim to review only tools that this broad audience is likely to be able to adopt. We also excluded desktop applications, statistical packages, and tools built for reviewing software engineering and social sciences literature, as well as reference managers, to avoid unfairly casting these tools as incomplete review tools (as they would each score quite low in features that are not related to reference management). All software tools were screened by one reviewer (KC), and inclusion decisions were reviewed by a second (KK).

Selection of Features of Interest

We built on the previous comparisons of SR tools published by Van der Mierden et al [ 9 ], Harrison et al [ 10 ], Marshall et al [ 11 ], and Kohl et al [ 12 ], which assign features a level of importance and evaluate each feature in reference screening tools. As the studies by Van der Mierden et al [ 9 ] and Harrison et al [ 10 ] focus on reference screening, we supplemented the features with features identified in related reviews of SR tools (Table S1 in Multimedia Appendix 1 ). From a study by Kohl et al [ 12 ], we added database search, risk of bias assessment (critical appraisal), and data visualization. From Marshall et al [ 11 ], we added report writing.

We added further features based on their importance to software-based SR: manual addition of records, automated full-text retrieval, dual extraction of studies, risk of bias (critical appraisal), living SR, and public outputs. Each addition represents either a best practice in SR [ 14 ] or a key feature for the accuracy, replicability, and transparency of SR. Thus, in total, we assessed the presence or absence of 30 features across 6 categories: retrieval, appraisal, synthesis, documentation/output, administration/project management, and access/support.

We adopted each feature unless it was outside of the SR process, it was required for inclusion in the present review, it duplicated another feature, it was not a discrete step for comparison, it was not necessary for English language reviews, it was not necessary for a web-based software, or it related to reference management (as we excluded reference managers from the present review). Table 1 shows all features not assessed, with rationale.

Features from systematic reviews not assessed in this review, with rationale.

Feature Assessment

To minimize bias concerning the subjective assessment of the necessity or desirability of features or of the relative performance of features, we used a binary assessment where each SR tool was scored 0 if a given feature was not present or 1 if a feature was present. Tools were assessed between June and August 2021. We assessed 30 features, divided into 6 feature classes. Of the 30 features, 77% (23/30) were identified in existing literature, and 23% (7/30) were added by the authors ( Table 2 ).

The criteria for each selected feature, as well as the rationale.

a API: application programming interface.

b Rationale only provided for features added in this review; all other features were drawn from existing feature analyses of Systematic Review Software Tools.

c RIS: Research Information System.

d PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses.

e AI: artificial intelligence.

Evaluation of Tools

For tools with free versions available, each of the researchers created an account and tested the program to determine feature presence. We also referred to user guides, publications, and training tutorials. For proprietary software, we gathered information on feature offerings from marketing webpages, training materials, and video tutorials. We also contacted all proprietary software providers to give them the opportunity to comment on feature offerings that may have been left out of those materials. Of the 8 proprietary software providers contacted, 38% (3/8) did not respond, 50% (4/8) provided feedback on feature offerings, and 13% (1/8) declined to comment. When providers gave feedback, we re-reviewed the features in question and altered the assessment as appropriate. One provider gave feedback after initial publication, prompting issuance of a correction.

Feature assessment was completed independently by 2 reviewers (KC and AR), and all disagreements were adjudicated by a third (KK). Interobserver agreement was calculated using standard methods [ 19 ] as applied to binary assessments. First, the 2 independent assessments were compared, and the number of disagreements was counted per feature, per software. For each feature, the total number of disagreements was counted and divided by the number of software tools assessed. This provided a per-feature variability percentage; these percentages were averaged across all features to provide a cumulative interobserver agreement percentage.
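The agreement calculation described above can be sketched as follows. This is a minimal illustration rather than the authors' actual script: the tool names and scores are hypothetical toy data, and it assumes that agreement is the complement of the per-feature variability (1 minus disagreements divided by tools), which the text implies but does not spell out.

```python
from typing import Dict, List

# Hypothetical layout: assessment[tool][i] is 1 if feature i was judged present, else 0.
Assessment = Dict[str, List[int]]


def interobserver_agreement(reviewer_a: Assessment, reviewer_b: Assessment) -> float:
    """Return the mean per-feature agreement between two reviewers, in percent."""
    tools = sorted(reviewer_a)
    if sorted(reviewer_b) != tools:
        raise ValueError("both reviewers must assess the same tools")
    n_features = len(reviewer_a[tools[0]])

    per_feature_agreement = []
    for i in range(n_features):
        # Count the tools on which the two reviewers disagree for feature i.
        disagreements = sum(reviewer_a[t][i] != reviewer_b[t][i] for t in tools)
        variability = disagreements / len(tools)
        # Assumption: agreement is taken as the complement of variability.
        per_feature_agreement.append(1.0 - variability)

    # Average across all features to get one cumulative percentage.
    return 100.0 * sum(per_feature_agreement) / n_features


# Toy example with 3 hypothetical tools and 4 features.
reviewer_a = {"ToolA": [1, 1, 0, 1], "ToolB": [0, 1, 1, 1], "ToolC": [1, 0, 0, 1]}
reviewer_b = {"ToolA": [1, 0, 0, 1], "ToolB": [0, 1, 1, 1], "ToolC": [1, 0, 1, 1]}
agreement = interobserver_agreement(reviewer_a, reviewer_b)
print(f"{agreement:.2f}%")
```

Because every feature is assessed for the same number of tools, averaging the per-feature percentages gives the same result as dividing total agreements by total assessments.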

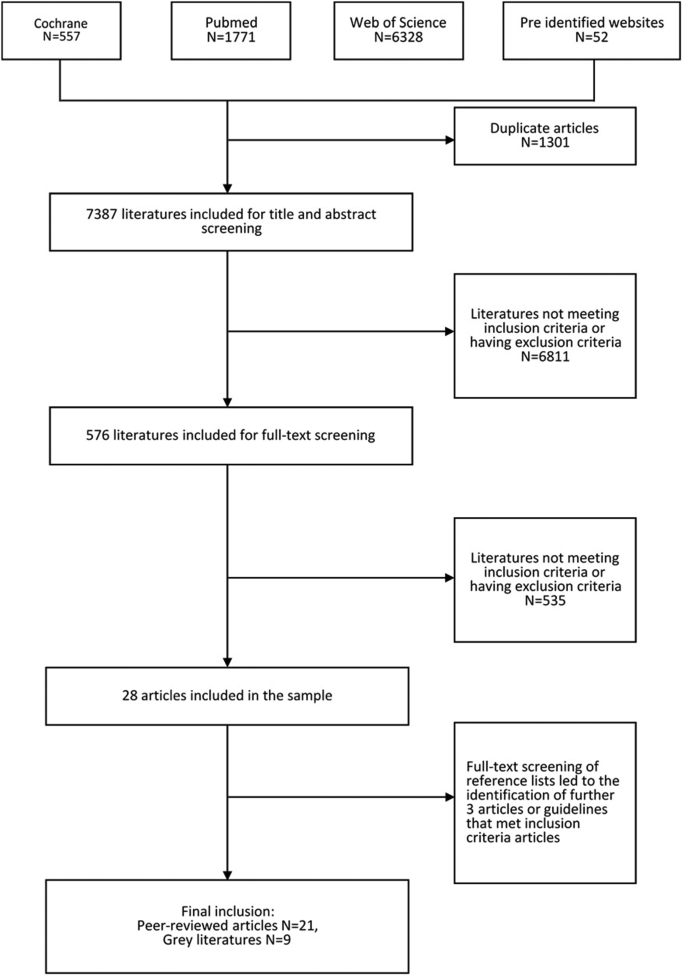

Identification of SR Tools

We reviewed all 240 software tools offered on SR Toolbox and sent forward all tools that, based on the software descriptions, could meet our inclusion criteria; we then added in all software tools found on Google Scholar. This strategy yielded 53 software tools that were reviewed in full ( Figure 1 shows the PRISMA [Preferred Reporting Items for Systematic Reviews and Meta-Analyses]-based chart). Of these 53 software tools, 55% (29/53) were excluded. Of the 29 excluded tools, 17% (5/29) were built to review software engineering literature, 10% (3/29) were not functional as of August 2021, 7% (2/29) were citation managers, and 7% (2/29) were statistical packages. Other excluded tools included tools not designed for SRs (6/29, 21%), desktop applications (4/29, 14%), tools requiring users to code (3/29, 10%), a search engine (1/29, 3%), and a social science literature review tool (1/29, 3%). One tool, Research Screener [ 20 ], was excluded owing to insufficient information available on supported features. Another tool, the Health Assessment Workspace Collaborative, was excluded because it is designed to assess chemical hazards.
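As a quick consistency check, the exclusion counts reported above can be tallied as in the sketch below. The counts are taken from the text; the dictionary keys are shorthand labels chosen for this illustration.

```python
TOOLS_REVIEWED_IN_FULL = 53

# Exclusion reasons and counts as reported in the paragraph above.
exclusions = {
    "software engineering literature": 5,
    "not functional as of August 2021": 3,
    "citation manager": 2,
    "statistical package": 2,
    "not designed for SRs": 6,
    "desktop application": 4,
    "requires coding": 3,
    "search engine": 1,
    "social science literature review tool": 1,
    "insufficient information (Research Screener)": 1,
    "chemical hazard assessment (Health Assessment Workspace Collaborative)": 1,
}

total_excluded = sum(exclusions.values())
included = TOOLS_REVIEWED_IN_FULL - total_excluded

for reason, count in exclusions.items():
    # Percentages are expressed relative to all excluded tools.
    print(f"{reason}: {count}/{total_excluded} ({round(100 * count / total_excluded)}%)")
print(f"excluded: {total_excluded}, included: {included}")
```

The reasons sum to the 29 exclusions stated in the text, leaving the 24 tools that were assessed.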

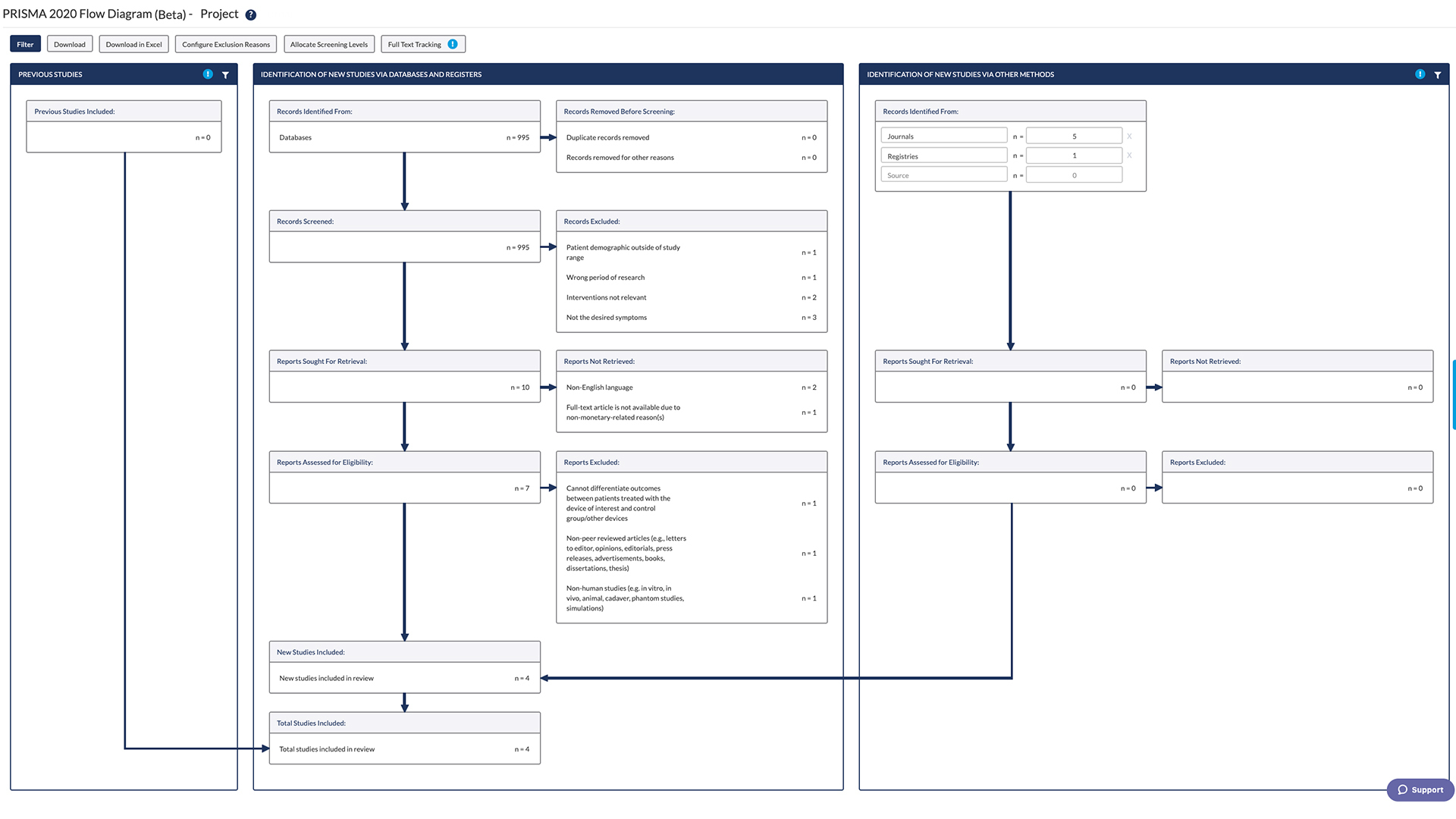

PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses)-based chart showing the sources of all tools considered for inclusion, including 2-phase screening and reasons for all exclusions made at the full software review stage. SR: systematic review.

Overview of SR Tools

We assessed the presence of features in 24 software tools, of which 71% (17/24) are designed for health care or biomedical sciences. In addition, 63% (15/24) of the analyzed tools support the full SR process, meaning they enable search, screening, extraction, and export, as these are the basic capabilities necessary to complete a review in a single software tool. Furthermore, 21% (5/24) of the tools support the screening stage ( Table 3 ).

Breakdown of software tools for systematic review by process type (full process, screening, extraction, or visualization; n=24).

Data Gathering

Interobserver agreement between the 2 reviewers gathering data features was 86.46%, meaning that across all feature assessments, the 2 reviewers disagreed on <15% of the applications. Final assessments are summarized in Table 4 , and Table S2 in Multimedia Appendix 2 shows the interobserver agreement on a per–SR tool and per-feature basis. Interobserver agreement was ≥70% for every feature assessed and for all SR tools except 3: LitStream (ICF; 53.3%), RevMan Web (Cochrane; 50%), and SR Accelerator (Institute for Evidence-Based Healthcare; 53.3%); on investigation, these low rates of agreement were found to be due to name changes and versioning (LitStream and RevMan Web) and due to the modular nature of the subsidiary offerings (SR Accelerator). An interactive, updatable visualization of the features offered by each tool is available in the Systematic Review Methodologies Qualitative Synthesis.

Feature assessment scores by feature class for each systematic review tool analyzed. The total number of features across all feature classes is presented in descending order.

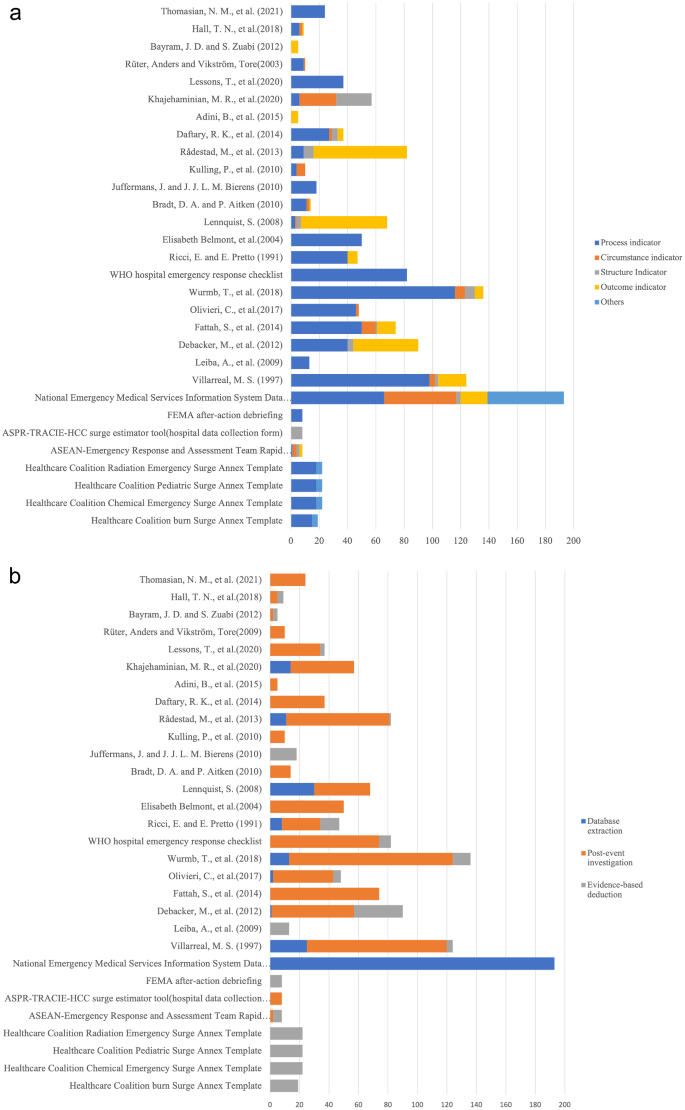

Giotto Compliance (27/30, 90%), DistillerSR (26/30, 87%), and Nested Knowledge (26/30, 87%) support the most features, followed by EPPI-Reviewer Web (25/30, 83%), LitStream (23/30, 77%), JBI SUMARI (21/30, 70%), and SRDB.PRO (VTS Software) (21/30, 70%).
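The percentages above follow directly from the binary scoring, in which each tool's score is the number of features marked present out of 30. A minimal sketch of the ranking, using only the counts reported in this paragraph (the function name `rank_tools` is an illustrative choice):

```python
from typing import Dict, List, Tuple

TOTAL_FEATURES = 30

# Feature counts as reported in the text for the highest-scoring tools.
feature_counts: Dict[str, int] = {
    "Giotto Compliance": 27,
    "DistillerSR": 26,
    "Nested Knowledge": 26,
    "EPPI-Reviewer Web": 25,
    "LitStream": 23,
    "JBI SUMARI": 21,
    "SRDB.PRO": 21,
}


def rank_tools(counts: Dict[str, int], total: int) -> List[Tuple[str, int, int]]:
    """Sort tools by feature count (descending), breaking ties alphabetically."""
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(name, n, round(100 * n / total)) for name, n in ranked]


ranking = rank_tools(feature_counts, TOTAL_FEATURES)
for name, n, pct in ranking:
    print(f"{name}: {n}/{TOTAL_FEATURES} ({pct}%)")
```

As the Study Limitations section notes, a ranking like this reflects feature presence only and says nothing about quality or usability.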

The top 16 software tools are ranked by percent of features from highest to lowest in Figure 2 . Fewer than half of all features are supported by 7 tools: RobotAnalyst (National Centre for Text Mining), SRDR (Agency for Healthcare Research and Quality), SyRF (Systematic Review Facility), Data Abstraction Assistant (Center for Evidence Synthesis in Health), SR Accelerator (Institute for Evidence-Based Healthcare), RobotReviewer (RobotReviewer), and COVID-NMA (COVID-NMA; Table 3 ).

Stacked bar chart comparing the percentage of supported features, broken down by their feature class (retrieval, appraisal, extraction, output, admin, and access), among all analyzed software tools.

Feature Assessment: Breakout by Feature Class

Of all 6 feature classes, administrative features are the most supported, and output and extraction features are the least supported ( Figure 3 ). Only 3 tools, Covidence (Cochrane), EPPI-Reviewer, and Giotto Compliance, offer all 4 extraction features ( Table 4 ). DistillerSR and Giotto support all 5 retrieval features, while Nested Knowledge supports all 5 documentation/output features. Colandr, DistillerSR, EPPI-Reviewer, Giotto Compliance, and PICOPortal support all 6 appraisal features.

Heat map of features observed in 24 analyzed software tools. Dark blue indicates that a feature is present, and light blue indicates that a feature is not present.

Feature Class 1: Retrieval

The ability to search directly within the SR tool was only present for 42% (10/24) of the software tools, meaning that for all other SR tools, the user is required to search externally and import records. The only SR tool that did not enable importing of records was COVID-NMA, which supplies studies directly from the providers of the tool but does not enable the user to do so.

Feature Class 2: Appraisal

Among the 19 tools that have title/abstract screening, all tools except for RobotAnalyst and SRDR+ enable dual screening and adjudication. Reference deduplication is less widespread, with 58% (14/24) of the tools supporting it. A form of machine learning/automation during the screening stage is present in 54% (13/24) of the tools.

Feature Class 3: Extraction

Although 75% (18/24) of the tools offer data extraction, only 29% (7/24) offer dual data extraction (Giotto Compliance, DistillerSR, SRDR+, Cadima [Cadima], Covidence, EPPI-Reviewer, and PICOPortal [PICOPortal]). A total of 54% (13/24) of the tools enable risk of bias assessments.

Feature Class 4: Output

Exporting references or collected data is available in 71% (17/24) of the tools. Of the 24 tools, 54% (13/24) generate figures or tables, 42% (10/24) generate PRISMA flow diagrams, 33% (8/24) have report writing, and only 13% (3/24) have in-text citations.

Feature Class 5: Admin

Protocols, customer support, and training materials are available in 71% (17/24), 79% (19/24), and 83% (20/24) of the tools, respectively. Of all administrative features, the least well developed are progress/activity monitoring, which is offered by 67% (16/24) of the tools, and comments, which are available in 58% (14/24) of the tools.

Feature Class 6: Access

Access features cover collaboration during the review, cost, and availability of outputs. Of the 24 software tools, 83% (20/24) permit collaboration by allowing multiple users to work on a project. COVID-NMA, RobotAnalyst, RobotReviewer, and SR-Accelerator do not allow multiple users. In addition, of the 24 tools, 71% (17/24) offer a free subscription, whereas 29% (7/24) require paid subscriptions or licenses (Covidence, DistillerSR, EPPI-Reviewer Web, Giotto Compliance, JBI Sumari, SRDB.PRO, and SWIFT-Active Screener). Only 54% (13/24) of the software tools support living, updatable reviews.

Principal Findings

Our review found a wide range of options in the SR software space; however, among these tools, many lacked features that are either crucial to the completion of a review or recommended as best practices. Only 63% (15/24) of the SR tools covered the full process from search/import through to extraction and export. Among these 15 tools, only 67% (10/15) had a search functionality directly built in, and only 47% (7/15) offered dual data extraction (which is the gold standard in quality control). Notable strengths across the field include collaborative mechanisms (offered by 20/24, 83% of tools) and easy, free access (17/24, 71% of tools are free). Indeed, the top 4 software tools in terms of number of features offered (Giotto Compliance, DistillerSR, Nested Knowledge, and EPPI-Reviewer) all offered between 83% and 90% of the features assessed. However, major remaining gaps include a lack of automation of any step other than screening (automated screening offered by 13/24, 54% of tools) and underprovision of living, updatable outputs.

Major Gaps in the Provision of SR Tools

Marshall et al [ 11 ] have previously noted that “the user should be able to perform an automated search from within the tool which should identify duplicate papers and handle them accordingly.” Fewer than a third of tools (7/24, 29%) support search, reference import, and manual reference addition.

Study Selection

Screening of references is the most commonly offered feature and has the strongest offerings across features. All software tools that offer screening also support dual screening (with the exception of RobotAnalyst and SRDR+). This demonstrates adherence to SR best practices during the screening stage.
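Dual screening with adjudication can be sketched as below. This is a generic illustration and not the workflow of any specific tool assessed here: the record identifiers, the decisions, and the `adjudicate` callback are hypothetical.

```python
from typing import Callable, Dict


def dual_screen(
    decisions_a: Dict[str, bool],
    decisions_b: Dict[str, bool],
    adjudicate: Callable[[str], bool],
) -> Dict[str, bool]:
    """Combine two independent include/exclude decisions per record.

    Records on which both reviewers agree keep that decision; disagreements
    are passed to a third reviewer (the `adjudicate` callback).
    """
    if decisions_a.keys() != decisions_b.keys():
        raise ValueError("both reviewers must screen the same records")
    final = {}
    for record_id, a in decisions_a.items():
        b = decisions_b[record_id]
        final[record_id] = a if a == b else adjudicate(record_id)
    return final


# Toy example: True means include, False means exclude.
reviewer_a = {"rec1": True, "rec2": False, "rec3": True}
reviewer_b = {"rec1": True, "rec2": True, "rec3": False}
adjudicated = []


def third_reviewer(record_id: str) -> bool:
    adjudicated.append(record_id)   # Track which records needed adjudication.
    return record_id == "rec2"      # Hypothetical ruling: include only rec2.


final_decisions = dual_screen(reviewer_a, reviewer_b, third_reviewer)
```

Keeping the list of adjudicated records, as done here, also gives the raw material for reporting reviewer agreement alongside the screening results.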

Automation and Machine Learning

Automation in medical SR screening has been growing. Some form of machine learning or other automation for screening literature is present in over half (13/24, 54%) of all the tools analyzed. Machine learning/screening includes reordering references, topic modeling, and predicting inclusion rates.

Data Extraction

In contrast to screening, extraction is underdeveloped. Although extraction is offered by 75% (18/24) of the tools, few tools adhere to the SR best practice of dual extraction. This is a deep problem in the methods of review, as the error rate for manual extraction without dual extraction is highly variable and has even reached 50% in independent tests [ 16 ].

Although single extraction continues to be the only commonly offered method, the scientific community has noted that automating extraction would have value in both time savings and improved accuracy, but the field is as of yet underdeveloped. To quote a recent review on the subject of automated extraction, “[automation] techniques have not been fully utilized to fully or even partially automate the data extraction step of systematic review” [ 21 ]. The technologies to automate extraction have not achieved partial extraction at a sufficiently high accuracy level to be adopted; therefore, dual extraction is a pressing software requirement that is unlikely to be surpassed in the near future.

Project Management

Administrative features are well supported by SR software. However, there is a need for improved monitoring of review progress. Project monitoring is offered by 67% (16/24) of the tools, which is among the lowest of all admin features and likely the feature most closely associated with the quality of the outputs. As collaborative access is common and highly prized, SR software providers should recognize the barriers to collaboration in medical research; lack of mutual awareness, inertia in communication, and time management and capacity constraints are among the leading reasons for failure in interinstitutional research [ 22 ]. Project monitoring tools could assist with each of these pain points and improve the transparency and accountability within the research team.

Living Reviews

The scientific community has made consistent demands for SR processes to be rendered updatable, with the goal of improving the quality of evidence available to clinicians, health policymakers, and the medical public [ 23 , 24 ]. Despite these ongoing calls for change, living, updatable reviews are not yet standard in SR software tools. Only 54% (13/24) of the tools support living reviews, largely because living review depends on providing updatability at each step up through to outputs. However, until greater provision of living review tools is achieved, reviews will continue to fall out of date and out of sync with clinical practice [ 24 ].

Study Limitations

In our study design, we elected to use a binary assessment, which limited the bias induced by the subjective appeal of any given tool. Therefore, these assessments did not include any comparison of quality or usability among the SR tools. This also meant that we did not use the Desmet [ 25 ] method, which ranks features by level of importance. We also excluded certain assessments that may impact user choices such as language translation features or translated training documentation, which are supported by some technologies, including DistillerSR. We completed the review in August 2021 but added several software tools following reviewer feedback; by adding expert additions without repeating the entire search strategy, we may have missed SR tools that launched between August and December 2021. Finally, the authors of this study are the designers of one of the leading SR tools, Nested Knowledge, which may have led to tacit bias toward this tool as part of the comparison.

By assessing features offered by web-based SR applications, we have identified gaps in current technologies and areas in need of development. Feature count does not equate to value or usability; it fails to capture benefits of simple platforms, such as ease of use, effective user interface, alignment with established workflows, or relative costs. The authors make no claim about superiority of software based on feature prevalence.

Future Directions

We invite and encourage independent researchers to assess the landscape of SR tools and build on this review. We expect the list of features to be assessed will evolve as research changes. For example, this review did not include features such as the ability to search included studies, reuse of extracted data, and application programming interface calls to read data, which may grow in importance. Furthermore, this review assessed the presence of automation at a high level without evaluating details. A future direction might be characterizing specific types of automation models used in screening, as well as in other stages, for software applications that support SR of biomedical research.

The highest-performing SR tools were DistillerSR, EPPI-Reviewer Web, and Nested Knowledge, each of which offers >80% of features. The most commonly offered and robust feature class was screening, whereas extraction (especially quality-controlled dual extraction) was underprovided. Living reviews, although strongly advocated for in the scientific community, were similarly underprovided by the SR tools reviewed here. This review enables the medical community to complete transparent and comprehensive comparison of SR tools and may also be used to identify gaps in technology for further development by the providers of these or novel SR tools.

This review of web-based SR software tools represents an attempt to best capture information from software providers’ websites, free trials, peer-reviewed publications, training materials, or software tutorials. The review is based primarily on publicly available information and may not accurately reflect feature offerings, as relevant information was not always available or clear to interpret. This evaluation does not represent the views or opinions of any of the software developers or service providers, except those of the authors. The review was completed in August 2021, and readers should refer to the respective software providers’ websites to obtain updated information on feature offerings.

Acknowledgments

The authors acknowledge the software development team from Nested Knowledge, Stephen Mead, Jeffrey Johnson, and Darian Lehmann-Plantenberg for their input in designing Nested Knowledge. The authors thank the independent software providers who provided feedback on our feature assessment, which increased the quality and accuracy of the results.

Abbreviations

Multimedia appendix 1, multimedia appendix 2.

Authors' Contributions: All authors participated in the conception, drafting, and editing of the manuscript.

Conflicts of Interest: KC, NH, and KH work for and hold equity in Nested Knowledge, which provides a software application included in this assessment. AR worked for Nested Knowledge. KL works for and holds equity in Nested Knowledge, Inc, and holds equity in Superior Medical Experts, Inc. KK works for and holds equity in Nested Knowledge, and holds equity in Superior Medical Experts.

Join the movement towards fast, open, and transparent systematic reviews

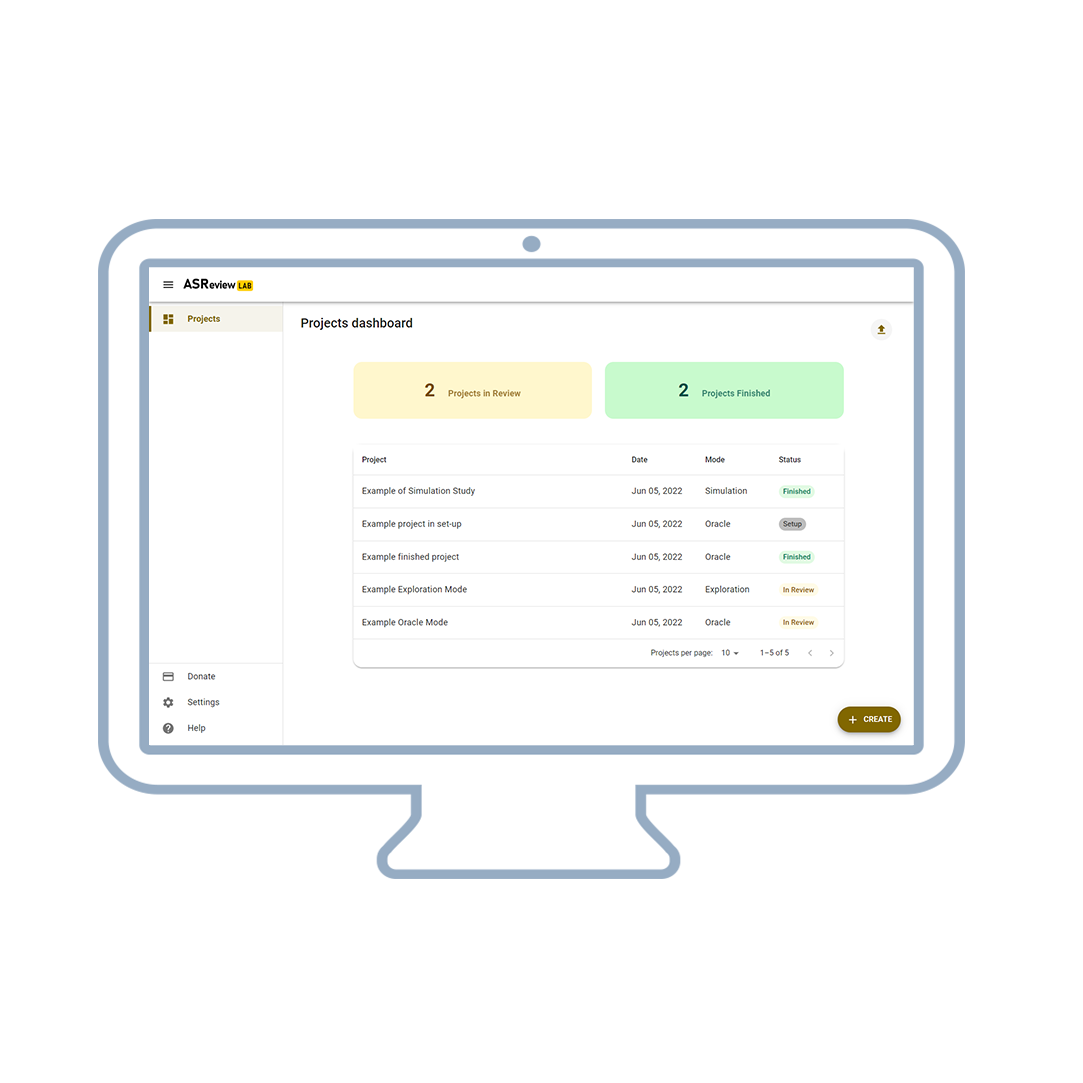

ASReview LAB v1.5 is out!

ASReview uses state-of-the-art active learning techniques to solve one of the most interesting challenges in systematically screening large amounts of text: there’s not enough time to read everything!
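To make the idea of active learning for screening concrete, here is a minimal, self-contained sketch. It is not ASReview's implementation or API: the word-count scorer, the `screen` function, and the toy records are all assumptions made for illustration. The loop retrains on the labels collected so far, shows the reviewer the record currently ranked most likely to be relevant, and repeats.

```python
import math
from collections import Counter
from typing import Callable, Dict, List


def tokenize(text: str) -> List[str]:
    return text.lower().split()


def relevance_score(text: str, relevant: Counter, irrelevant: Counter) -> float:
    """Sum of smoothed log-odds that each word comes from a relevant record."""
    rel_total = sum(relevant.values())
    irr_total = sum(irrelevant.values())
    score = 0.0
    for word in tokenize(text):
        p_rel = (relevant[word] + 1) / (rel_total + 2)
        p_irr = (irrelevant[word] + 1) / (irr_total + 2)
        score += math.log(p_rel / p_irr)
    return score


def screen(
    records: Dict[int, str],
    prior: Dict[int, bool],
    oracle: Callable[[int], bool],
    budget: int,
) -> List[int]:
    """Return the order in which records were shown to the reviewer."""
    labels = dict(prior)  # Prior knowledge: a few records labelled up front.
    order: List[int] = []
    for _ in range(budget):
        unlabeled = [rid for rid in records if rid not in labels]
        if not unlabeled:
            break
        # "Retrain": rebuild word counts from every label collected so far.
        relevant, irrelevant = Counter(), Counter()
        for rid, is_relevant in labels.items():
            (relevant if is_relevant else irrelevant).update(tokenize(records[rid]))
        # Query the record the model currently ranks highest (ties: lowest id).
        best = max(
            unlabeled,
            key=lambda rid: (relevance_score(records[rid], relevant, irrelevant), -rid),
        )
        labels[best] = oracle(best)  # The human reviewer supplies the label.
        order.append(best)
    return order


# Hypothetical toy dataset; records 0, 2, and 4 are the truly relevant ones.
records = {
    0: "randomized trial of stroke thrombectomy outcomes",
    1: "deep learning for image classification",
    2: "thrombectomy versus medical therapy in stroke trial",
    3: "image segmentation with neural networks",
    4: "stroke rehabilitation randomized outcomes",
    5: "classification of galaxy images",
}
truly_relevant = {0, 2, 4}
order = screen(records, prior={0: True, 1: False},
               oracle=lambda rid: rid in truly_relevant, budget=2)
```

With one relevant and one irrelevant record as prior knowledge, the two remaining relevant records are presented before any irrelevant one, which is the time saving that active learning aims for. A real screening tool would use stronger feature extraction and classifiers than this toy scorer.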

The project has grown into a vibrant worldwide community of researchers, users, and developers. ASReview is coordinated at Utrecht University and is part of the official AI-labs at the university.

Free, Open and Transparent

The software is installed on your device locally. This ensures that nobody else has access to your data, except when you share it with others. Nice, isn’t it?

- Free and open source

- Local or server installation

- Full control over your data

- Follows the Reproducibility and Data Storage Checklist for AI-Aided Systematic Reviews

Up and running in 2 minutes

With the smart project setup features, you can start a new project in minutes. Ready, set, start screening!

- Create as many projects as you want

- Choose your own or an existing dataset

- Select prior knowledge

- Select your favorite active learning algorithm

Three modes to choose from

ASReview LAB can be used for:

- Screening with the Oracle Mode , including advanced options

- Teaching using the Exploration Mode

- Validating algorithms using the Simulation Mode

We also offer an open-source research infrastructure to run large-scale simulation studies for validating newly developed AI algorithms.

Follow the development

Open-source means:

- All annotated source code is available

- You can see the developers at work in open Pull Requests

- Open Pull Requests show in what direction the project is developing

- Anyone can contribute!

Give a GitHub repo a star if you like our work.

Join the community

A community-driven project means:

- The project is a joint endeavor

- Your contribution matters!

Join the movement towards transparent AI-aided reviewing

Beginner -> User -> Developer -> Maintainer

Join the ASReview Development Fund

Many users donate their time to continue the development of the different software tools that are part of the ASReview universe. Also, donations and research grants make innovations possible!

Navigating the Maze of Models in ASReview

Starting a systematic review can feel like navigating through a maze, with countless articles and endless…

ASReview LAB Class 101

Welcome to ASReview LAB class 101, an introduction to the most important…

Introducing the Noisy Label Filter (NLF) procedure in systematic reviews

The ASReview team developed a procedure to overcome replication issues in creating a dataset for simulation…

Seven ways to integrate ASReview in your systematic review workflow

Systematic reviewing using software implementing Active…

Active Learning Explained

The rapidly evolving field of artificial intelligence (AI) has allowed the development of…

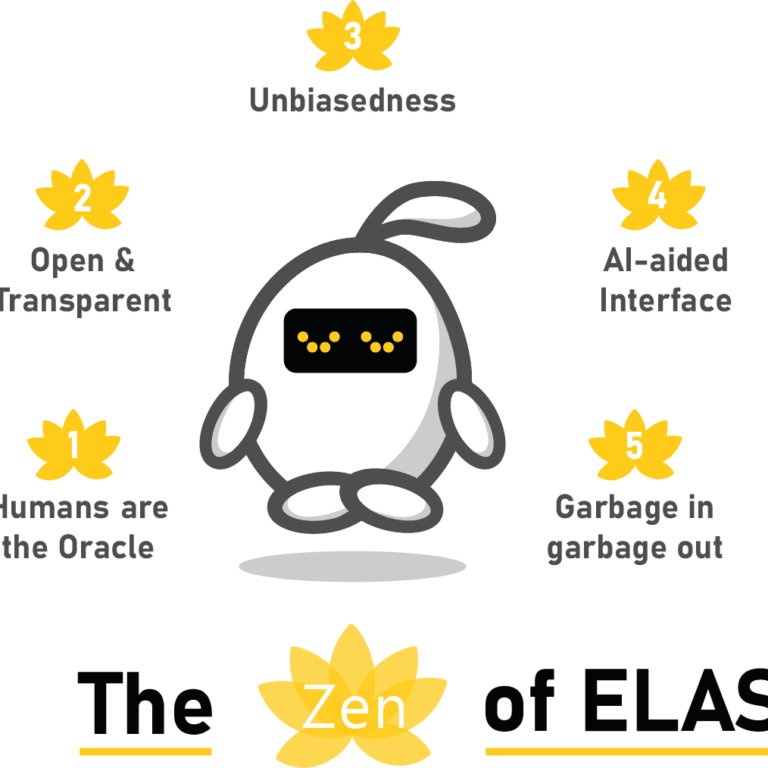

The Zen of Elas

Elas is the mascot of ASReview and your Electronic Learning Assistant who…

Five ways to get involved in ASReview

ASReview LAB is open and free (Libre) software, maintained…

Connecting RIS import to export functionalities

What’s new in v0.19? Download ASReview LAB 0.19. Update to ASReview…

Meet the new ASReview Maintainer: Yongchao Ma

As a user of ASReview, you are probably…

UPDATED: ASReview Hackathon for Follow the Money

This event has passed The winners of the hackathon were: Data pre-processing: Teamwork by: Raymon van…

What’s new in release 0.18?

More models more options, available now! Version 0.18 slowly opens ways to the soon to be…

Simulation Mode Class 101

Have you ever done a systematic review manually, but wondered how much…

MSU Libraries

Systematic & Advanced Evidence Synthesis Reviews

Online Toolkits & Workbooks

This page lists commonly used software for systematic reviews (SRs) and other advanced evidence synthesis reviews and should not be taken as MSU Libraries endorsing one program over another. The sections of the guide list fee-based as well as free and open-source software for different aspects of the review workflow. All-inclusive workflow products are listed in this section.

- Wanner, Amanda. 2019. Getting started with your systematic or scoping review: Workbook & Endnote Instructions. Open Science Framework. This librarian-created workbook on OSF is fairly comprehensive and walks you through all the steps and stages of creating a systematic or scoping review.

- What review is right for you? This tool is designed to provide guidance and supporting material to reviewers on methods for the conduct and reporting of knowledge synthesis. As a pilot project, the current version of the tool only identifies methods for knowledge synthesis of quantitative studies. A future iteration will be developed for qualitative evidence synthesis.

- Systematic Review Toolkit The Systematic Review Toolbox is a web-based catalogue of tools that support various tasks within the systematic review and wider evidence synthesis process. The toolbox aims to help researchers and reviewers find the following: Software tools, Quality assessment / critical appraisal checklists, Reporting standards, and Guidelines.

It is highly recommended that researchers partner with the academic librarian for their specialty to create search strategies for systematic and advanced reviews. Many guidance organizations recognize the invaluable contributions of information professionals to creating search strategies - the bedrock of synthesis reviews.

- Visualising systematic review search strategies to assist information specialists

- Gusenbauer, M., & Haddaway, N. R. (2019). Which Academic Search Systems are Suitable for Systematic Reviews or Meta‐Analyses? Evaluating Retrieval Qualities of Google Scholar, PubMed and 26 other Resources. Research Synthesis Methods.

- Citation Chaser Forward citation chasing looks for all records citing one or more articles of known relevance; backward citation chasing looks for all records referenced in one or more articles. This tool automates the process by making use of the Lens.org API. An input article list can be used to return a list of all referenced records and/or all citing records in the Lens.org database (consisting of PubMed, PubMed Central, CrossRef, Microsoft Academic Graph and CORE).
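To make the two directions of citation chasing concrete, here is a minimal sketch on a toy in-memory citation graph. It does not call the Lens.org API and is not how Citation Chaser is implemented; the record IDs and the `references` mapping are hypothetical.

```python
from collections import defaultdict

# Hypothetical toy data: each record ID maps to the record IDs it references.
references = {
    "A": ["B", "C"],
    "B": ["C"],
    "D": ["A", "C"],
    "E": ["B"],
}


def backward_chase(seeds, references):
    """Return every record referenced by at least one seed article."""
    found = set()
    for seed in seeds:
        found.update(references.get(seed, []))
    return found - set(seeds)


def forward_chase(seeds, references):
    """Return every record that cites at least one seed article."""
    # Invert the mapping so each record points to the records citing it.
    cited_by = defaultdict(set)
    for citing, cited_list in references.items():
        for cited in cited_list:
            cited_by[cited].add(citing)
    found = set()
    for seed in seeds:
        found.update(cited_by[seed])
    return found - set(seeds)


backward = backward_chase(["A"], references)
forward = forward_chase(["A"], references)
```

With "A" as the only article of known relevance, backward chasing returns the records "A" references, and forward chasing returns the records that cite "A".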

How do you track your integration and resourcing for projects that require systematic searching, like systematic or scoping reviews? What, where, and how should you be tracking? Using a tool like Airtable can help you stay organized.

- Airtable for Systematic Search Tracking Talk from Whitney Townsend at the University of Michigan - April 6, 2022

A software program that can store citations from databases, deduplicate your results, and automate the creation and formatting of citations and a bibliography through a cite-while-you-write plugin will save a lot of time in any literature review. The software listed below can perform all of these functions, which are not found in the fee-based total systematic review workflow products.

You can also carry out most components of an SR, including screening, in these programs.

- Endnote Guide Endnote Online is free and has basic functionality like importing citations and cite-while-you-write for Microsoft Word. The desktop version of Endnote is a separate individual purchase and is more robust than the online version, particularly for organization of citations and ease of use with large citation libraries.

- Mendeley Guide Mendeley has all the standard features of a citation manager with the addition of a social community of scholars. Mendeley can be sluggish with large file sizes of multiple thousands of citations and the free version has limited collaborative features.
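As a rough illustration of what deduplication involves, the sketch below matches records on DOI when one is present and otherwise on a normalized title plus year. This is an assumed, simplified rule, not the matching logic used by Endnote, Mendeley, or any other citation manager; the record fields and sample data are hypothetical.

```python
import re


def normalize_title(title):
    """Lowercase a title and collapse punctuation and whitespace for matching."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def dedup_key(record):
    """Prefer the DOI as the key; fall back to normalized title plus year."""
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return ("doi", doi)
    return ("title", normalize_title(record.get("title", "")), record.get("year"))


def deduplicate(records):
    """Keep the first record seen for each key, preserving input order."""
    seen = set()
    unique = []
    for record in records:
        key = dedup_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical records, as they might arrive from two database exports.
records = [
    {"title": "Diet and Health: A Review", "year": 2020, "doi": "10.1000/xyz1"},
    {"title": "Diet and health - a review.", "year": 2020, "doi": "10.1000/XYZ1"},
    {"title": "Screening Tools Compared", "year": 2019, "doi": ""},
    {"title": "Screening tools compared", "year": 2019, "doi": None},
]
unique = deduplicate(records)
```

A rule this simple will miss a duplicate pair in which only one copy carries a DOI, which is one reason to review the output of automated deduplication by hand.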

Screening the titles, abstracts, and full text of your results is one of the most time-consuming components of any review. There is easy-to-use free software for this process, but it won't have features like automatically creating the flow charts and calculating the inter-rater reliability kappa coefficient that you need to report in your methodology section. You will have to do this by hand.

Deduplication of results before importation into one of these tools and screening should be done in a citation management program like Endnote, Mendeley, or Zotero.

- Abstrackr Created by Brown University, Abstrackr is one of the best known and easiest to use free tools for screening results.

- Colandr Colandr is an open source screening tool. Like Abstrackr, deduplication is best done in a citation manager and then results imported into Colandr. There is a learning curve to this tool but a vibrant user community does exist for troubleshooting.

- Rayyan Built by the Qatar Foundation. It is a free web-tool (Beta) designed to help researchers working on systematic reviews and other knowledge synthesis projects. It has a simple interface and a mobile app for screening-on-the-go.
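If your screening tool does not report inter-rater reliability, Cohen's kappa for two screeners can be computed from their decisions. The sketch below is a minimal example under that assumption (exactly two raters, one decision each per citation); the decisions shown are invented for illustration.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's label proportions, summed over labels.
    labels = set(rater_a) | set(rater_b)
    expected = sum(
        (rater_a.count(label) / n) * (rater_b.count(label) / n) for label in labels
    )
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Invented title/abstract decisions for ten citations.
screener_1 = ["include", "include", "include", "exclude", "exclude",
              "exclude", "exclude", "exclude", "include", "exclude"]
screener_2 = ["include", "include", "exclude", "exclude", "exclude",
              "exclude", "exclude", "include", "include", "exclude"]
kappa = cohens_kappa(screener_1, screener_2)
```

Here the screeners agree on 8 of 10 citations (0.80) against a chance agreement of 0.52, so kappa is (0.80 - 0.52) / (1 - 0.52), or about 0.58.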

- PRISMA Diagram Generator Using the PRISMA Diagram Generator, you can produce a diagram easily in any of 10 different formats. The official PRISMA website only offers the diagram as a .docx or .pdf file. The generator produces the diagram with the open-source dot program (part of Graphviz) and provides the source for your diagram if you wish to tweak it further.

- PRISMA 2020: R Package and ShinyApp This free, online tool makes use of the DiagrammeR R package to develop a customisable flow diagram that conforms to PRISMA 2020 standards. It allows the user to specify whether previous and other study arms should be included, and allows interactivity to be embedded through the use of mouseover tooltips and hyperlinks on box clicks.
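For readers curious what dot source for a flow diagram looks like, the following sketch builds a simplified PRISMA-style diagram as a DOT string. It is not the output of either tool above and does not reproduce the full PRISMA 2020 template; the function name, box wording, and counts are all hypothetical.

```python
def prisma_dot(identified, duplicates, excluded_at_screening, excluded_at_full_text):
    """Build Graphviz DOT source for a simplified PRISMA-style flow diagram."""
    screened = identified - duplicates
    assessed = screened - excluded_at_screening
    included = assessed - excluded_at_full_text
    if min(screened, assessed, included) < 0:
        raise ValueError("exclusions exceed the records available at a stage")
    boxes = [
        ("identified", f"Records identified (n = {identified})"),
        ("screened", f"Records screened after deduplication (n = {screened})"),
        ("assessed", f"Full texts assessed (n = {assessed})"),
        ("included", f"Studies included (n = {included})"),
        ("dupes", f"Duplicates removed (n = {duplicates})"),
        ("out_screen", f"Excluded at title/abstract (n = {excluded_at_screening})"),
        ("out_full", f"Excluded at full text (n = {excluded_at_full_text})"),
    ]
    edges = [
        ("identified", "screened"), ("screened", "assessed"), ("assessed", "included"),
        ("identified", "dupes"), ("screened", "out_screen"), ("assessed", "out_full"),
    ]
    lines = ["digraph prisma {", "  node [shape=box];"]
    lines += [f'  {name} [label="{label}"];' for name, label in boxes]
    lines += [f"  {src} -> {dst};" for src, dst in edges]
    lines.append("}")
    return "\n".join(lines)


# Invented counts for illustration only.
dot_source = prisma_dot(1200, 200, 850, 110)
```

Saving `dot_source` to a file such as `prisma.dot` and running `dot -Tpng prisma.dot -o prisma.png` should render it, assuming Graphviz is installed.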

Tools for data analysis can help you categorize results such as outcomes of studies and perform meta-analyses. The SRDR tool may be the easiest to use and has frequent functionality updates.

- OpenMeta[Analyst] Developed by Brown University using an AHRQ grant, OpenMeta[Analyst] is a no-frills approach to data analysis.

- SRDR Developed by the AHRQ, the Systematic Review Data Repository (SRDR) is a powerful and easy-to-use tool for the extraction and management of data for systematic reviews or meta-analyses. It is also an open and searchable archive of systematic reviews and their data.

Data abstraction commonly refers to the extraction, synthesis, and structured visualization of evidence characteristics. Evidence tables (also called table shells or reading grids) are the core way extracted article data are displayed. An evidence table lists all the included data sources and their characteristics according to your inclusion/exclusion criteria. Tools like Covidence have modules to create your own data extraction form and export a table when finished.

- OpenAcademics: Reading Grid Template

- The National Academies Press: Sample Literature Table Shells

- Campbell Collaboration: Data Extraction Tips
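An evidence table is, in essence, one row per included source and one column per extracted characteristic. The sketch below shows one possible way to hold such rows and export them as CSV; the column names and the two placeholder studies are hypothetical and should be replaced with the fields your protocol calls for.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ExtractionRow:
    """One included source and the characteristics extracted from it."""
    study: str
    year: int
    design: str
    population: str
    outcome: str


def evidence_table_csv(rows):
    """Serialize extraction rows to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ExtractionRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


# Placeholder entries, not real studies.
rows = [
    ExtractionRow("Study A", 2018, "RCT", "Adults", "Outcome 1"),
    ExtractionRow("Study B", 2021, "Cohort", "Adolescents", "Outcome 2"),
]
table = evidence_table_csv(rows)
```

The resulting text can be written to a `.csv` file and opened in a spreadsheet program for formatting.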

There are several fee-based products that are a one-stop shop for systematic reviews. They cover all the steps, including importing citations, deduplicating results, screening, and bibliography management, and some even perform meta-analyses. These are best used by teams that have grant or departmental funding because they can be rather expensive.

None of these tools offers a robust bibliography creation function or cite-while-you-write option. You will still need to use a separate citation manager for these aspects of review writing. We list commonly used citation management tools on this page.

- EPPI-Reviewer 4 EPPI-Reviewer 4 is a web-based software program for managing and analysing data in literature reviews. It has been developed for all types of systematic review (meta-analysis, framework synthesis, thematic synthesis, etc.) but also has features that would be useful in any literature review. It manages references, stores PDF files, and facilitates qualitative and quantitative analyses such as meta-analysis, empirical synthesis, and thematic synthesis. It also contains ‘text mining’ technology that promises to make systematic reviewing more efficient. It does not have a bibliographic manager or cite-while-you-write feature.

- JBI-SUMARI Currently, this tool can only accept Endnote XML files for citation import. So you would need to download citations to Endnote, import them into SUMARI, and when screening is complete use Endnote as your bibliographic manager for any writing. SUMARI supports 10 review types, including reviews of effectiveness, qualitative research, economic evaluations, prevalence/incidence, aetiology/risk, mixed methods, umbrella/overviews, text/opinion, diagnostic test accuracy and scoping reviews. It facilitates the entire review process, from protocol development, team management, study selection, critical appraisal, data extraction, data synthesis and writing your systematic review report.

Using Excel

Some teams may choose to use Excel for their systematic review. This is not recommended because it can be extremely time-consuming and is more prone to error. However, there is a good-quality basic template for Excel-based SRs online that walks you through the entire workflow of completing an SR (excluding bibliography creation and citation management).

- PIECES Workbook This link will open an Excel workbook designed to help conduct, document, and manage a systematic review. Made by Margaret J. Foster, MS, MPH, AHIP, Systematic Reviews Coordinator and Associate Professor, Medical Sciences Library, Texas A&M University.

- Systematic Review Accelerator: Methods Wizard A tool to help you write consistent, reproducible methods sections according to common reporting structures.

- PRISMA Extensions Each PRISMA reporting extension has a manuscript checklist that lays out exactly how to write/report your review and what information to include.


- Last Updated: May 14, 2024 2:50 PM

- URL: https://libguides.lib.msu.edu/systematic_reviews


Tools & Resources

Software Tools

There are a variety of fee-based and open-source (i.e., free) tools available for conducting the various steps of your scoping or systematic review.

The NIH Library currently provides free access for NIH customers to Covidence. At least one user must be from NIH in order to request access and use Covidence. Please contact the NIH Library's Systematic Review Service to request access.

You can use Covidence to import citations from any citation management tool and then screen your citations at the title and abstract level and then at the full-text level. Covidence keeps track of who voted and manages the flow of the citations to ensure the correct number of screeners review each citation. It can support single or dual screening. In the full-text screening step, you can upload PDFs into Covidence, and it will keep track of your excluded citations and reasons for exclusion. Later, export this information to help you complete the PRISMA flow diagram. If you choose, you can also complete your data extraction and risk of bias assessments in Covidence by creating templates based on your needs and type of risk of bias tool. Finally, export all of your results for data management purposes or export your data into another data analysis tool for further work.
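The vote-tracking idea described above can be sketched in a few lines. This is an illustrative model only, not Covidence's implementation or API; the function name, status strings, and screener names are hypothetical.

```python
def screening_status(votes, required_screeners=2):
    """Resolve one citation's status from its screeners' votes.

    `votes` maps a screener's name to "include" or "exclude".
    Use required_screeners=1 for single screening, 2 for dual screening.
    """
    if len(votes) < required_screeners:
        return "awaiting votes"
    decisions = set(votes.values())
    if len(decisions) == 1:
        return decisions.pop()  # all screeners agree
    return "conflict"  # disagreement needs resolution before moving on


# Hypothetical votes on three citations under dual screening.
one_vote = screening_status({"screener_1": "include"})
agreed = screening_status({"screener_1": "exclude", "screener_2": "exclude"})
disputed = screening_status({"screener_1": "include", "screener_2": "exclude"})
```

Under dual screening, a citation advances only once both screeners have voted and agree; a disagreement is flagged for resolution rather than silently decided.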

Other tools available for conducting scoping or systematic reviews are:

- DistillerSR (fee-based)

- EPPI-Reviewer (fee-based)

- JBI SUMARI (from the Joanna Briggs Institute for their reviews) (fee-based)

- LitStream (from ICF International)

- Systematic Review Data Repository (SRDR+) (from AHRQ) (free)

- Abstrackr (open source)

- Colandr (open source)

- Google Sheets and Forms

- HAWC (Health Assessment Workspace Collaborative) (free)

- Rayyan (open source)

And check out the Systematic Review Toolbox for additional software suggestions for conducting your review.

Quality Assessment Tools (i.e., risk of bias, critical appraisal)

- 2022 Repository of Quality Assessment and Risk of Bias Tools - A comprehensive resource for finding and selecting a risk of bias or quality assessment tool for evidence synthesis projects. Continually updated.

- AMSTAR 2 - AMSTAR 2 (A MeaSurement Tool to Assess systematic Reviews). Use for critically appraising ONLY systematic reviews of healthcare interventions including randomised controlled clinical trials.