The Ultimate Guide to Qualitative Research - Part 1: The Basics


Case studies

Case studies are essential to qualitative research, offering a lens through which researchers can investigate complex phenomena within their real-life contexts. This chapter explores the concept, purpose, applications, examples, and types of case studies and provides guidance on how to conduct case study research effectively.

Whereas quantitative methods look at phenomena at scale, case study research looks at a concept or phenomenon in considerable detail. While analyzing a single case can help understand one perspective regarding the object of research inquiry, analyzing multiple cases can help obtain a more holistic sense of the topic or issue. Let's provide a basic definition of a case study, then explore its characteristics and role in the qualitative research process.

Definition of a case study

A case study in qualitative research is a strategy of inquiry that involves an in-depth investigation of a phenomenon within its real-world context. It gives researchers the opportunity to understand intricate details that might not be as apparent or accessible through other methods of research. The specific case or cases being studied can be a single person, group, or organization. Demarcating what constitutes a relevant case worth studying depends on the researcher and their research question.

Among qualitative research methods, a case study relies on multiple sources of evidence, such as documents, artifacts, interviews, or observations, to present a complete and nuanced understanding of the phenomenon under investigation. The objective is to illuminate the readers' understanding of the phenomenon beyond its abstract statistical or theoretical explanations.

Characteristics of case studies

Case studies typically possess a number of distinct characteristics that set them apart from other research methods. These characteristics include a focus on holistic description and explanation, flexibility in the design and data collection methods, reliance on multiple sources of evidence, and emphasis on the context in which the phenomenon occurs.

Furthermore, case studies can often involve a longitudinal examination of the case, meaning they study the case over a period of time. These characteristics allow case studies to yield comprehensive, in-depth, and richly contextualized insights about the phenomenon of interest.

The role of case studies in research

Case studies hold a unique position in the broader landscape of research methods aimed at theory development. They are instrumental when the primary research interest is to gain an intensive, detailed understanding of a phenomenon in its real-life context.

In addition, case studies can serve different purposes within research: they can be used for exploratory, descriptive, or explanatory purposes, depending on the research question and objectives. This flexibility and depth make case studies a valuable tool in the toolkit of qualitative researchers.

Remember, a well-conducted case study can offer a rich, insightful contribution to both academic and practical knowledge through theory development or theory verification, thus enhancing our understanding of complex phenomena in their real-world contexts.

What is the purpose of a case study?

Case study research aims for a more comprehensive understanding of phenomena, requiring various research methods to gather information for qualitative analysis. Ultimately, a case study can allow the researcher to gain insight into a particular object of inquiry and develop a theoretical framework relevant to the research inquiry.

Why use case studies in qualitative research?

Using case studies as a research strategy depends mainly on the nature of the research question and the researcher's access to the data.

Case study research provides a level of detail and contextual richness that other research methods might not offer. It is beneficial when there's a need to understand complex social phenomena within their natural contexts.

The explanatory, exploratory, and descriptive roles of case studies

Case studies can take on various roles depending on the research objectives. They can be exploratory when the research aims to discover new phenomena or define new research questions; they are descriptive when the objective is to depict a phenomenon within its context in a detailed manner; and they can be explanatory if the goal is to understand specific relationships within the studied context. Thus, the versatility of case studies allows researchers to approach their topic from different angles, offering multiple ways to uncover and interpret the data.

The impact of case studies on knowledge development

Case studies play a significant role in knowledge development across various disciplines. Analysis of cases provides an avenue for researchers to explore phenomena within their context based on the collected data.

This can result in the production of rich, practical insights that can be instrumental in both theory-building and practice. Case studies allow researchers to delve into the intricacies and complexities of real-life situations, uncovering insights that might otherwise remain hidden.

Types of case studies

In qualitative research, a case study is not a one-size-fits-all approach. Depending on the nature of the research question and the specific objectives of the study, researchers might choose to use different types of case studies. These types differ in their focus, methodology, and the level of detail they provide about the phenomenon under investigation.

Understanding these types is crucial for selecting the most appropriate approach for your research project and effectively achieving your research goals. Let's briefly look at the main types of case studies.

Exploratory case studies

Exploratory case studies are typically conducted to develop a theory or framework around an understudied phenomenon. They can also serve as a precursor to a larger-scale research project. Exploratory case studies are useful when a researcher wants to identify the key issues or questions which can spur more extensive study or be used to develop propositions for further research. These case studies are characterized by flexibility, allowing researchers to explore various aspects of a phenomenon as they emerge, which can also form the foundation for subsequent studies.

Descriptive case studies

Descriptive case studies aim to provide a complete and accurate representation of a phenomenon or event within its context. These case studies are often based on an established theoretical framework, which guides how data is collected and analyzed. The researcher is concerned with describing the phenomenon in detail, as it occurs naturally, without trying to influence or manipulate it.

Explanatory case studies

Explanatory case studies are focused on explanation: they seek to clarify how or why certain phenomena occur. Often used in complex, real-life situations, they can be particularly valuable in clarifying causal relationships among concepts and understanding the interplay between different factors within a specific context.

Intrinsic, instrumental, and collective case studies

These three categories of case studies focus on the nature and purpose of the study. An intrinsic case study is conducted when a researcher has an inherent interest in the case itself. Instrumental case studies are employed when the case is used to provide insight into a particular issue or phenomenon. A collective case study, on the other hand, involves studying multiple cases simultaneously to investigate some general phenomena.

Each type of case study serves a different purpose and has its own strengths and challenges. The selection of the type should be guided by the research question and objectives, as well as the context and constraints of the research.

Applications for case study research

The flexibility, depth, and contextual richness offered by case studies make this approach an excellent research method for various fields of study. They enable researchers to investigate real-world phenomena within their specific contexts, capturing nuances that other research methods might miss. Across numerous fields, case studies provide valuable insights into complex issues.

Critical information systems research

Case studies provide a detailed understanding of the role and impact of information systems in different contexts. They offer a platform to explore how information systems are designed, implemented, and used and how they interact with various social, economic, and political factors. Case studies in this field often focus on examining the intricate relationship between technology, organizational processes, and user behavior, helping to uncover insights that can inform better system design and implementation.

Health research

Health research is another field where case studies are highly valuable. They offer a way to explore patient experiences, healthcare delivery processes, and the impact of various interventions in a real-world context.

Case studies can provide a deep understanding of a patient's journey, giving insights into the intricacies of disease progression, treatment effects, and the psychosocial aspects of health and illness.

Asthma research studies

Specifically within medical research, studies on asthma often employ case studies to explore the individual and environmental factors that influence asthma development, management, and outcomes. A case study can provide rich, detailed data about individual patients' experiences, from the triggers and symptoms they experience to the effectiveness of various management strategies. This can be crucial for developing patient-centered asthma care approaches.

Other fields

Apart from the fields mentioned, case studies are also extensively used in business and management research, education research, and political sciences, among many others. They provide an opportunity to delve into the intricacies of real-world situations, allowing for a comprehensive understanding of various phenomena.

Case studies, with their depth and contextual focus, offer unique insights across these varied fields. They allow researchers to illuminate the complexities of real-life situations, contributing to both theory and practice.

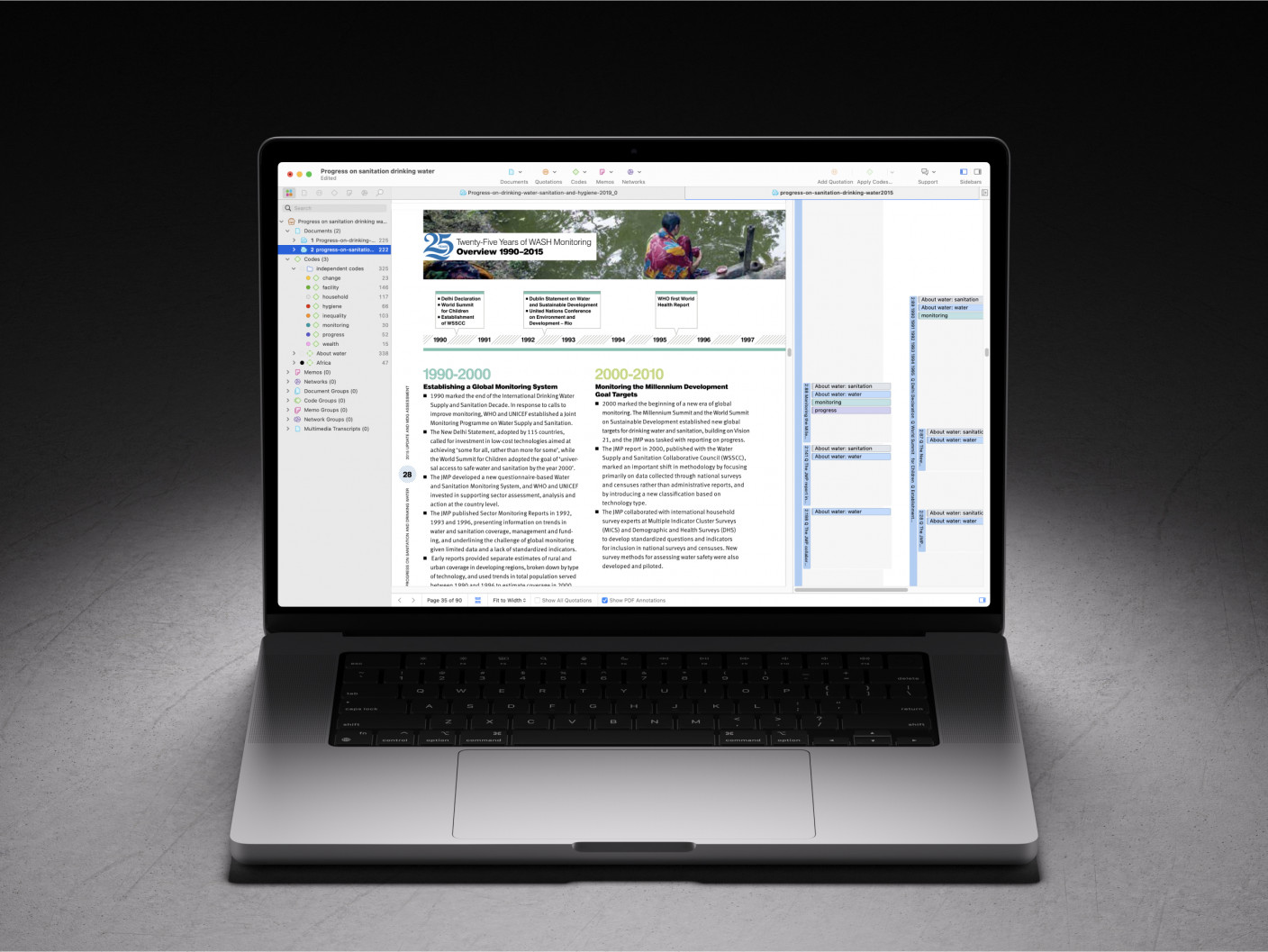

Whatever field you're in, ATLAS.ti puts your data to work for you

Download a free trial of ATLAS.ti to turn your data into insights.

What is a good case study?

Understanding the key elements of case study design is crucial for conducting rigorous and impactful case study research. A well-structured design guides the researcher through the process, ensuring that the study is methodologically sound and its findings are reliable and valid. The main elements of case study design include the research question, propositions, units of analysis, and the logic linking the data to the propositions.

Research question

The research question is the foundation of any research study. A good research question guides the direction of the study and informs the selection of the case, the methods of collecting data, and the analysis techniques. A well-formulated research question in case study research is typically clear, focused, and complex enough to merit further detailed examination of the relevant case(s).

Propositions

Propositions, though not necessary in every case study, provide a direction by stating what we might expect to find in the data collected. They guide how data is collected and analyzed by helping researchers focus on specific aspects of the case. They are particularly important in explanatory case studies, which seek to understand the relationships among concepts within the studied phenomenon.

Units of analysis

The unit of analysis refers to the case, or the main entity or entities that are being analyzed in the study. In case study research, the unit of analysis can be an individual, a group, an organization, a decision, an event, or even a time period. It's crucial to clearly define the unit of analysis, as it shapes the qualitative data analysis process by allowing the researcher to analyze a particular case and synthesize analysis across multiple case studies to draw conclusions.

Argumentation

This refers to the inferential model that allows researchers to draw conclusions from the data. The researcher needs to ensure that there is a clear link between the data, the propositions (if any), and the conclusions drawn. This argumentation is what enables the researcher to make valid and credible inferences about the phenomenon under study.

Understanding and carefully considering these elements in the design phase of a case study can significantly enhance the quality of the research. It can help ensure that the study is methodologically sound and its findings contribute meaningful insights about the case.


Process of case study design

Conducting a case study involves several steps, from defining the research question and selecting the case to collecting and analyzing data. This section outlines these key stages, providing a practical guide on how to conduct case study research.

Defining the research question

The first step in case study research is defining a clear, focused research question. This question should guide the entire research process, from case selection to analysis. It's crucial to ensure that the research question is suitable for a case study approach. Typically, such questions are exploratory or descriptive in nature and focus on understanding a phenomenon within its real-life context.

Selecting and defining the case

The selection of the case should be based on the research question and the objectives of the study. It involves choosing a unique example or a set of examples that provide rich, in-depth data about the phenomenon under investigation. After selecting the case, it's crucial to define it clearly, setting the boundaries of the case, including the time period and the specific context.

Previous research can help guide the case study design. For example, a case examined in previous case study research can serve as a model for defining cases in a new research inquiry. Reviewing recently published examples can help researchers understand how to select and define cases effectively.

Developing a detailed case study protocol

A case study protocol outlines the procedures and general rules to be followed during the case study. This includes the data collection methods to be used, the sources of data, and the procedures for analysis. Having a detailed case study protocol ensures consistency and reliability in the study.

The protocol should also consider how to work with the people involved in the research context so that the research team can gain access to collect data. As mentioned in previous sections of this guide, establishing rapport is an essential component of qualitative research as it shapes the overall potential for collecting and analyzing data.
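As a rough illustration, the elements of a protocol described above could be recorded in a small structure and checked for gaps before fieldwork begins. This is only a sketch: the `CaseStudyProtocol` class, its field names, and the rule that at least two distinct data sources should be listed are assumptions made for this example, not part of any standard.

```python
from dataclasses import dataclass, field


@dataclass
class CaseStudyProtocol:
    """Hypothetical container for the elements a protocol might record."""
    research_question: str
    case_definition: str
    time_period: str
    data_sources: list = field(default_factory=list)
    analysis_procedures: list = field(default_factory=list)

    def gaps(self):
        """Return human-readable gaps found in the protocol."""
        problems = []
        if not self.research_question.strip():
            problems.append("research question is empty")
        # Assumption for this sketch: "multiple sources" means at least two.
        if len(set(self.data_sources)) < 2:
            problems.append("fewer than two distinct data sources")
        if not self.analysis_procedures:
            problems.append("no analysis procedures listed")
        return problems


# Invented example values, for illustration only.
protocol = CaseStudyProtocol(
    research_question="How do staff adapt to a new scheduling system?",
    case_definition="One outpatient clinic",
    time_period="First six months after rollout",
    data_sources=["interviews"],
)
protocol_gaps = protocol.gaps()
print(protocol_gaps)
```

A check like this does not replace the researcher's judgment; it only makes omissions in the written protocol easier to spot.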

Collecting data

Gathering data in case study research often involves multiple sources of evidence, including documents, archival records, interviews, observations, and physical artifacts. This allows for a comprehensive understanding of the case. The process for gathering data should be systematic and carefully documented to ensure the reliability and validity of the study.

Analyzing and interpreting data

The next step is analyzing the data. This involves organizing the data, categorizing it into themes or patterns, and interpreting these patterns to answer the research question. The analysis might also involve comparing the findings with prior research or theoretical propositions.

Writing the case study report

The final step is writing the case study report. This should provide a detailed description of the case, the data, the analysis process, and the findings. The report should be clear, organized, and carefully written to ensure that the reader can understand the case and the conclusions drawn from it.

Each of these steps is crucial in ensuring that the case study research is rigorous, reliable, and provides valuable insights about the case.

Data collection

The type, depth, and quality of data in your study can significantly influence the validity and utility of the study. In case study research, data is usually collected from multiple sources to provide a comprehensive and nuanced understanding of the case. This section will outline the various methods of collecting data used in case study research and discuss considerations for ensuring the quality of the data.

Interviews

Interviews are a common method of gathering data in case study research. They can provide rich, in-depth data about the perspectives, experiences, and interpretations of the individuals involved in the case. Interviews can be structured, semi-structured, or unstructured, depending on the research question and the degree of flexibility needed.

Observations

Observations involve the researcher observing the case in its natural setting, providing first-hand information about the case and its context. Observations can provide data that might not be revealed in interviews or documents, such as non-verbal cues or contextual information.

Documents and artifacts

Documents and archival records provide a valuable source of data in case study research. They can include reports, letters, memos, meeting minutes, email correspondence, and various public and private documents related to the case.

These records can provide historical context, corroborate evidence from other sources, and offer insights into the case that might not be apparent from interviews or observations.

Physical artifacts refer to any physical evidence related to the case, such as tools, products, or physical environments. These artifacts can provide tangible insights into the case, complementing the data gathered from other sources.

Ensuring the quality of data collection

Ensuring the quality of data in case study research requires careful planning and execution. It's crucial to ensure that the data is reliable, accurate, and relevant to the research question. This involves selecting appropriate methods of collecting data, properly training interviewers or observers, and systematically recording and storing the data. It also includes considering ethical issues related to collecting and handling data, such as obtaining informed consent and ensuring the privacy and confidentiality of the participants.

Data analysis

Analyzing case study data involves making sense of rich, detailed material to answer the research question. This process can be challenging due to the volume and complexity of case study data. However, a systematic and rigorous approach to analysis can ensure that the findings are credible and meaningful. This section outlines the main steps and considerations in analyzing data in case study research.

Organizing the data

The first step in the analysis is organizing the data. This involves sorting the data into manageable sections, often according to the data source or the theme. This step can also involve transcribing interviews, digitizing physical artifacts, or organizing observational data.
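The sorting step described above can be sketched in a few lines of Python. The `DataItem` structure, the source labels, and the sample excerpts are all invented for illustration; a real project would organize its data according to its own protocol.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DataItem:
    """One piece of case study evidence (hypothetical structure)."""
    item_id: str
    source: str  # e.g. "interview", "observation", "document"
    text: str


def organize_by_source(items):
    """Group data items by source type, preserving the input order."""
    grouped = defaultdict(list)
    for item in items:
        grouped[item.source].append(item)
    return dict(grouped)


# Invented sample data, for illustration only.
items = [
    DataItem("i1", "interview", "The new clinic schedule is confusing."),
    DataItem("o1", "observation", "Patients waited near the entrance."),
    DataItem("i2", "interview", "Staff explained the changes clearly."),
]

by_source = organize_by_source(items)
for source, group in by_source.items():
    print(source, [item.item_id for item in group])
```

The same function could group by theme instead of source if each item carried a theme label.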

Categorizing and coding the data

Once the data is organized, the next step is to categorize or code the data. This involves identifying common themes, patterns, or concepts in the data and assigning codes to relevant data segments. Coding can be done manually or with the help of software tools; qualitative analysis software can greatly facilitate the coding process. Coding helps to reduce the data to a set of themes or categories that can be more easily analyzed.
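To make the idea of coded segments concrete, here is a minimal sketch of how excerpts and their researcher-assigned codes might be stored and tallied. The excerpts, source names, and code labels are invented, and the dictionary layout is just one possible representation, not how any particular software stores codes.

```python
from collections import Counter

# Hypothetical coded segments: each excerpt carries the codes a researcher assigned.
coded_segments = [
    {"source": "interview_01",
     "text": "I never know which inhaler to use.",
     "codes": ["medication confusion"]},
    {"source": "interview_02",
     "text": "Dust at work sets off my symptoms.",
     "codes": ["environmental trigger", "workplace"]},
    {"source": "observation_01",
     "text": "Patient asked the nurse to repeat the dosage.",
     "codes": ["medication confusion"]},
]


def code_frequencies(segments):
    """Count how many segments each code was applied to."""
    counts = Counter()
    for segment in segments:
        counts.update(set(segment["codes"]))  # count each code once per segment
    return counts


frequencies = code_frequencies(coded_segments)
print(frequencies.most_common())
```

Frequency counts only summarize where codes were applied; deciding which code fits a segment remains an interpretive task for the researcher.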

Identifying patterns and themes

After coding the data, the researcher looks for patterns or themes in the coded data. This involves comparing and contrasting the codes and looking for relationships or patterns among them. The identified patterns and themes should help answer the research question.
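One simple way to look for relationships among codes is to count how often two codes are applied to the same segment. The sketch below assumes each segment is represented as a list of code labels; the labels are invented, and co-occurrence counting is only one of many possible starting points for identifying patterns.

```python
from collections import Counter
from itertools import combinations


def code_cooccurrence(segment_codes):
    """Count how often each pair of codes appears on the same segment."""
    pairs = Counter()
    for codes in segment_codes:
        # Sort so that each pair is always stored in the same order.
        for first, second in combinations(sorted(set(codes)), 2):
            pairs[(first, second)] += 1
    return pairs


# Invented code assignments for three segments.
segment_codes = [
    ["trigger", "workplace"],
    ["trigger", "workplace", "coping"],
    ["coping"],
]

cooccurrence = code_cooccurrence(segment_codes)
for pair, count in cooccurrence.most_common():
    print(pair, count)
```

Pairs that co-occur frequently are candidates for closer reading, not findings in themselves.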

Interpreting the data

Once patterns and themes have been identified, the next step is to interpret these findings. This involves explaining what the patterns or themes mean in the context of the research question and the case. This interpretation should be grounded in the data, but it can also involve drawing on theoretical concepts or prior research.

Verification of the data

The last step in the analysis is verification. This involves checking the accuracy and consistency of the analysis process and confirming that the findings are supported by the data. This can involve re-checking the original data, checking the consistency of codes, or seeking feedback from research participants or peers.
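When two people code the same segments, one common way to check the consistency of codes is Cohen's kappa, which compares observed agreement with the agreement expected by chance. The text above does not prescribe any particular statistic, so this is offered only as one possible check; the labels and the assumption of exactly one code per segment per coder are simplifications for the sketch.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each gave one label per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(coder_a)
    # Proportion of segments on which the two coders agree.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each coder's label proportions.
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used the same single label throughout
    return (observed - expected) / (1 - expected)


# Invented labels from two hypothetical coders for four segments.
coder_a = ["trigger", "trigger", "coping", "coping"]
coder_b = ["trigger", "coping", "coping", "coping"]

kappa = cohens_kappa(coder_a, coder_b)
print(round(kappa, 2))  # observed 0.75, expected 0.5, so kappa is 0.5
```

A low value is a prompt to revisit code definitions together rather than a verdict on either coder.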

Benefits and limitations of case studies

Like any research method, case study research has its strengths and limitations. Researchers must be aware of these, as they can influence the design, conduct, and interpretation of the study.

Understanding the strengths and limitations of case study research can also guide researchers in deciding whether this approach is suitable for their research question. This section outlines some of the key strengths and limitations of case study research.

Benefits include the following:

- Rich, detailed data: One of the main strengths of case study research is that it can generate rich, detailed data about the case. This can provide a deep understanding of the case and its context, which can be valuable in exploring complex phenomena.

- Flexibility: Case study research is flexible in terms of design, data collection, and analysis. A sufficient degree of flexibility allows the researcher to adapt the study according to the case and the emerging findings.

- Real-world context: Case study research involves studying the case in its real-world context, which can provide valuable insights into the interplay between the case and its context.

- Multiple sources of evidence: Case study research often involves collecting data from multiple sources, which can enhance the robustness and validity of the findings.

On the other hand, researchers should consider the following limitations:

- Generalizability: A common criticism of case study research is that its findings might not be generalizable to other cases due to the specificity and uniqueness of each case.

- Time- and resource-intensive: Case study research can demand considerable time and resources due to the depth of the investigation and the amount of collected data.

- Complexity of analysis: The rich, detailed data generated in case study research can make analyzing the data challenging.

- Subjectivity: Given the nature of case study research, there may be a higher degree of subjectivity in interpreting the data, so researchers need to reflect on this and transparently convey to audiences how the research was conducted.

Being aware of these strengths and limitations can help researchers design and conduct case study research effectively and interpret and report the findings appropriately.


- Gastro-intestinal and Colorectal Surgery

- General Surgery

- Neurosurgery

- Paediatric Surgery

- Peri-operative Care

- Plastic and Reconstructive Surgery

- Surgical Oncology

- Transplant Surgery

- Trauma and Orthopaedic Surgery

- Vascular Surgery

- Browse content in Science and Mathematics

- Browse content in Biological Sciences

- Aquatic Biology

- Biochemistry

- Bioinformatics and Computational Biology

- Developmental Biology

- Ecology and Conservation

- Evolutionary Biology

- Genetics and Genomics

- Microbiology

- Molecular and Cell Biology

- Natural History

- Plant Sciences and Forestry

- Research Methods in Life Sciences

- Structural Biology

- Systems Biology

- Zoology and Animal Sciences

- Browse content in Chemistry

- Analytical Chemistry

- Computational Chemistry

- Crystallography

- Environmental Chemistry

- Industrial Chemistry

- Inorganic Chemistry

- Materials Chemistry

- Medicinal Chemistry

- Mineralogy and Gems

- Organic Chemistry

- Physical Chemistry

- Polymer Chemistry

- Study and Communication Skills in Chemistry

- Theoretical Chemistry

- Browse content in Computer Science

- Artificial Intelligence

- Computer Architecture and Logic Design

- Game Studies

- Human-Computer Interaction

- Mathematical Theory of Computation

- Programming Languages

- Software Engineering

- Systems Analysis and Design

- Virtual Reality

- Browse content in Computing

- Business Applications

- Computer Games

- Computer Security

- Computer Networking and Communications

- Digital Lifestyle

- Graphical and Digital Media Applications

- Operating Systems

- Browse content in Earth Sciences and Geography

- Atmospheric Sciences

- Environmental Geography

- Geology and the Lithosphere

- Maps and Map-making

- Meteorology and Climatology

- Oceanography and Hydrology

- Palaeontology

- Physical Geography and Topography

- Regional Geography

- Soil Science

- Urban Geography

- Browse content in Engineering and Technology

- Agriculture and Farming

- Biological Engineering

- Civil Engineering, Surveying, and Building

- Electronics and Communications Engineering

- Energy Technology

- Engineering (General)

- Environmental Science, Engineering, and Technology

- History of Engineering and Technology

- Mechanical Engineering and Materials

- Technology of Industrial Chemistry

- Transport Technology and Trades

- Browse content in Environmental Science

- Applied Ecology (Environmental Science)

- Conservation of the Environment (Environmental Science)

- Environmental Sustainability

- Environmentalist Thought and Ideology (Environmental Science)

- Management of Land and Natural Resources (Environmental Science)

- Natural Disasters (Environmental Science)

- Nuclear Issues (Environmental Science)

- Pollution and Threats to the Environment (Environmental Science)

- Social Impact of Environmental Issues (Environmental Science)

- History of Science and Technology

- Browse content in Materials Science

- Ceramics and Glasses

- Composite Materials

- Metals, Alloying, and Corrosion

- Nanotechnology

- Browse content in Mathematics

- Applied Mathematics

- Biomathematics and Statistics

- History of Mathematics

- Mathematical Education

- Mathematical Finance

- Mathematical Analysis

- Numerical and Computational Mathematics

- Probability and Statistics

- Pure Mathematics

- Browse content in Neuroscience

- Cognition and Behavioural Neuroscience

- Development of the Nervous System

- Disorders of the Nervous System

- History of Neuroscience

- Invertebrate Neurobiology

- Molecular and Cellular Systems

- Neuroendocrinology and Autonomic Nervous System

- Neuroscientific Techniques

- Sensory and Motor Systems

- Browse content in Physics

- Astronomy and Astrophysics

- Atomic, Molecular, and Optical Physics

- Biological and Medical Physics

- Classical Mechanics

- Computational Physics

- Condensed Matter Physics

- Electromagnetism, Optics, and Acoustics

- History of Physics

- Mathematical and Statistical Physics

- Measurement Science

- Nuclear Physics

- Particles and Fields

- Plasma Physics

- Quantum Physics

- Relativity and Gravitation

- Semiconductor and Mesoscopic Physics

- Browse content in Psychology

- Affective Sciences

- Clinical Psychology

- Cognitive Psychology

- Cognitive Neuroscience

- Criminal and Forensic Psychology

- Developmental Psychology

- Educational Psychology

- Evolutionary Psychology

- Health Psychology

- History and Systems in Psychology

- Music Psychology

- Neuropsychology

- Organizational Psychology

- Psychological Assessment and Testing

- Psychology of Human-Technology Interaction

- Psychology Professional Development and Training

- Research Methods in Psychology

- Social Psychology

- Browse content in Social Sciences

- Browse content in Anthropology

- Anthropology of Religion

- Human Evolution

- Medical Anthropology

- Physical Anthropology

- Regional Anthropology

- Social and Cultural Anthropology

- Theory and Practice of Anthropology

- Browse content in Business and Management

- Business Ethics

- Business History

- Business Strategy

- Business and Technology

- Business and Government

- Business and the Environment

- Comparative Management

- Corporate Governance

- Corporate Social Responsibility

- Entrepreneurship

- Health Management

- Human Resource Management

- Industrial and Employment Relations

- Industry Studies

- Information and Communication Technologies

- International Business

- Knowledge Management

- Management and Management Techniques

- Operations Management

- Organizational Theory and Behaviour

- Pensions and Pension Management

- Public and Nonprofit Management

- Strategic Management

- Supply Chain Management

- Browse content in Criminology and Criminal Justice

- Criminal Justice

- Criminology

- Forms of Crime

- International and Comparative Criminology

- Youth Violence and Juvenile Justice

- Development Studies

- Browse content in Economics

- Agricultural, Environmental, and Natural Resource Economics

- Asian Economics

- Behavioural Finance

- Behavioural Economics and Neuroeconomics

- Econometrics and Mathematical Economics

- Economic History

- Economic Methodology

- Economic Systems

- Economic Development and Growth

- Financial Markets

- Financial Institutions and Services

- General Economics and Teaching

- Health, Education, and Welfare

- History of Economic Thought

- International Economics

- Labour and Demographic Economics

- Law and Economics

- Macroeconomics and Monetary Economics

- Microeconomics

- Public Economics

- Urban, Rural, and Regional Economics

- Welfare Economics

- Browse content in Education

- Adult Education and Continuous Learning

- Care and Counselling of Students

- Early Childhood and Elementary Education

- Educational Equipment and Technology

- Educational Strategies and Policy

- Higher and Further Education

- Organization and Management of Education

- Philosophy and Theory of Education

- Schools Studies

- Secondary Education

- Teaching of a Specific Subject

- Teaching of Specific Groups and Special Educational Needs

- Teaching Skills and Techniques

- Browse content in Environment

- Applied Ecology (Social Science)

- Climate Change

- Conservation of the Environment (Social Science)

- Environmentalist Thought and Ideology (Social Science)

- Natural Disasters (Environment)

- Social Impact of Environmental Issues (Social Science)

- Browse content in Human Geography

- Cultural Geography

- Economic Geography

- Political Geography

- Browse content in Interdisciplinary Studies

- Communication Studies

- Museums, Libraries, and Information Sciences

- Browse content in Politics

- African Politics

- Asian Politics

- Chinese Politics

- Comparative Politics

- Conflict Politics

- Elections and Electoral Studies

- Environmental Politics

- European Union

- Foreign Policy

- Gender and Politics

- Human Rights and Politics

- Indian Politics

- International Relations

- International Organization (Politics)

- International Political Economy

- Irish Politics

- Latin American Politics

- Middle Eastern Politics

- Political Behaviour

- Political Economy

- Political Institutions

- Political Theory

- Political Methodology

- Political Communication

- Political Philosophy

- Political Sociology

- Politics and Law

- Politics of Development

- Public Policy

- Public Administration

- Quantitative Political Methodology

- Regional Political Studies

- Russian Politics

- Security Studies

- State and Local Government

- UK Politics

- US Politics

- Browse content in Regional and Area Studies

- African Studies

- Asian Studies

- East Asian Studies

- Japanese Studies

- Latin American Studies

- Middle Eastern Studies

- Native American Studies

- Scottish Studies

- Browse content in Research and Information

- Research Methods

- Browse content in Social Work

- Addictions and Substance Misuse

- Adoption and Fostering

- Care of the Elderly

- Child and Adolescent Social Work

- Couple and Family Social Work

- Developmental and Physical Disabilities Social Work

- Direct Practice and Clinical Social Work

- Emergency Services

- Human Behaviour and the Social Environment

- International and Global Issues in Social Work

- Mental and Behavioural Health

- Social Justice and Human Rights

- Social Policy and Advocacy

- Social Work and Crime and Justice

- Social Work Macro Practice

- Social Work Practice Settings

- Social Work Research and Evidence-based Practice

- Welfare and Benefit Systems

- Browse content in Sociology

- Childhood Studies

- Community Development

- Comparative and Historical Sociology

- Economic Sociology

- Gender and Sexuality

- Gerontology and Ageing

- Health, Illness, and Medicine

- Marriage and the Family

- Migration Studies

- Occupations, Professions, and Work

- Organizations

- Population and Demography

- Race and Ethnicity

- Social Theory

- Social Movements and Social Change

- Social Research and Statistics

- Social Stratification, Inequality, and Mobility

- Sociology of Religion

- Sociology of Education

- Sport and Leisure

- Urban and Rural Studies

- Browse content in Warfare and Defence

- Defence Strategy, Planning, and Research

- Land Forces and Warfare

- Military Administration

- Military Life and Institutions

- Naval Forces and Warfare

- Other Warfare and Defence Issues

- Peace Studies and Conflict Resolution

- Weapons and Equipment

- < Previous chapter

- Next chapter >

6 Case Study and Selection

- Published: March 2024


This chapter presents and critically examines best practices for case study research and case selection. In reviewing prominent case study criteria that prioritize objective, analytical needs of research design (“appropriateness”), the chapter argues that overlooked considerations of feasibility (including positionality, resources, and skills) and interest play equally important roles when selecting and studying cases. The chapter concludes by arguing for an iterative process of research design, one that equally weighs pragmatic considerations and academic concerns of best fit. This strategy hopes to make qualitative research more accessible and transparent and to diversify the types of cases we study and questions we ask.


Oxford University Press is a department of the University of Oxford. It furthers the University's objective of excellence in research, scholarship, and education by publishing worldwide

- Copyright © 2024 Oxford University Press


Case Selection Techniques in Case Study Research: A Menu of Qualitative and Quantitative Options

- Related Documents

Case Selection for Case‐Study Analysis: Qualitative and Quantitative Techniques

This article presents some guidance by cataloging nine different techniques for case selection: typical, diverse, extreme, deviant, influential, crucial, pathway, most similar, and most different. It also indicates that if the researcher is starting from a quantitative database, then methods for finding influential outliers can be used. In particular, the article clarifies the general principles that might guide the process of case selection in case-study research. Cases are more or less representative of some broader phenomenon and, on that score, may be considered better or worse subjects for intensive analysis. The article then draws attention to two ambiguities in case-selection strategies in case-study research. The first concerns the admixture of several case-selection strategies. The second concerns the changing status of a case as a study proceeds. Some case studies follow only one strategy of case selection.
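When the starting point is a quantitative database, three of the techniques named above can be illustrated with a simple regression. The sketch below is a simplified reading, not the article's own procedure: it assumes a "typical" case is the one closest to the fitted line, a "deviant" case is the one farthest from it, and an "extreme" case is the one farthest from the mean of the explanatory variable. The function names and the five cases are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares with one predictor; returns (intercept, slope)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def select_cases(cases):
    """cases maps a case name to an (x, y) pair.

    Returns one candidate per strategy under the simplified definitions
    assumed here (an illustration, not a standard implementation).
    """
    names = list(cases)
    xs = [cases[n][0] for n in names]
    ys = [cases[n][1] for n in names]
    intercept, slope = fit_line(xs, ys)
    # Absolute residual: distance of each case from the fitted line.
    residual = {
        n: abs(cases[n][1] - (intercept + slope * cases[n][0])) for n in names
    }
    x_bar = mean(xs)
    return {
        "typical": min(names, key=residual.get),   # best explained by the model
        "deviant": max(names, key=residual.get),   # worst explained by the model
        "extreme": max(names, key=lambda n: abs(cases[n][0] - x_bar)),
    }


# Hypothetical data: five cases with one explanatory and one outcome variable.
example_cases = {"A": (1, 2), "B": (2, 3), "C": (3, 5), "D": (4, 9), "E": (10, 11)}
picks = select_cases(example_cases)
print(picks)
```

A shortlist produced this way is only a starting point. The abstract above notes that strategies are often mixed and that a case's status can change as the study proceeds, so the final choice still needs a substantive justification.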

Case Selection and Hypothesis Testing

This chapter discusses quantitative and qualitative practices of case-study selection when the goal of the analysis is to evaluate causal hypotheses. More specifically, it considers how the different causal models used in the qualitative and quantitative research cultures shape the kind of cases that provide the most leverage for hypothesis testing. The chapter examines whether one should select cases based on their value on the dependent variable. It also evaluates the kinds of cases that provide the most leverage for causal inference when conducting case-study research. It shows that differences in research goals between quantitative and qualitative scholars yield distinct ideas about best strategies of case selection. Qualitative research places emphasis on explaining particular cases; quantitative research does not.

Paying Attention to the Trees in the Forest, or a Call to Examine Agency-Specific Stories

Public administration scholarship needs to strike a better balance between large sample studies and in-depth case studies. The availability of large data sets has led us to engage in empirical research that is broad in scope but is frequently devoid of rich context. In-depth case studies can help to explain why we observe particular relationships and can help us to clarify gaps and inconsistencies in theory. Our argument for more case studies aims to encourage researchers to bridge insights from qualitative and quantitative research through triangulation. We describe the value of case study research, and qualitative and quantitative design options. We then propose opportunities for case study research in public personnel scholarship on patronage pressures, performance management, and diversity management.

Case Selection Techniques in Case Study Research

Blending the focused ethnographic method and case study research: implications regarding case selection and generalization of results.

In this article, we present the benefits of blending the methodological characteristics of the focused ethnographic method (FEM) and case study research (CSR) for a study on auxiliary work processes in a hospital in Barcelona, Spain. We argue that incorporating CSR logic and principles in the FEM produces a better form of inquiry, as this combination improves the quality of data and the ability to make comparisons in addition to enhancing the transferability of findings. These better outcomes are achieved because the characteristics of the case study methodological design reinforce many of the strengths of the FEM, such as its thematic concreteness and its targeted data collection. The role played by the theoretical framework of the study thus makes it easier to focus on the key variables of the initial theoretical model and to introduce a logic of multiple case comparison.

Qualitative Case Study Research Design

Qualitative case study research can be a valuable tool for answering complex, real-world questions. This method is often misunderstood or neglected due to a lack of understanding by researchers and reviewers. This tutorial defines the characteristics of qualitative case study research and its application to a broader understanding of stuttering that cannot be defined through other methodologies. This article will describe ways that data can be collected and analyzed.

Intersecting Mixed Methods and Case Study Research: Design Possibilities and Challenges

Robert K. Yin. (2014). Case Study Research Design and Methods (5th ed.). Thousand Oaks, CA: Sage. 282 pages.

Cooperative Banks & Local Government Units - Areas of Collaboration (Poland Case Study - Research Results)

From the ‘Frying-Pan’ into the ‘Fire’ Antidotes to Confirmatory Bias in Case-Study Research

Organizing Your Social Sciences Research Assignments


A case study research paper examines a person, place, event, condition, phenomenon, or other type of subject of analysis in order to extrapolate key themes and results that help predict future trends, illuminate previously hidden issues that can be applied to practice, and/or provide a means for understanding an important research problem with greater clarity. A case study research paper usually examines a single subject of analysis, but case study papers can also be designed as a comparative investigation that shows relationships between two or more subjects. The methods used to study a case can rest within a quantitative, qualitative, or mixed-method investigative paradigm.

Case Studies. Writing@CSU. Colorado State University; Mills, Albert J., Gabrielle Durepos, and Elden Wiebe, editors. Encyclopedia of Case Study Research. Thousand Oaks, CA: SAGE Publications, 2010; “What is a Case Study?” In Swanborn, Peter G. Case Study Research: What, Why and How? London: SAGE, 2010.

How to Approach Writing a Case Study Research Paper

General information about how to choose a topic to investigate can be found under the "Choosing a Research Problem" tab in the Organizing Your Social Sciences Research Paper writing guide. Review this page because it may help you identify a subject of analysis that can be investigated using a case study design.

However, identifying a case to investigate involves more than choosing the research problem. A case study encompasses a problem contextualized around the application of in-depth analysis, interpretation, and discussion, often resulting in specific recommendations for action or for improving existing conditions. As Seawright and Gerring note, practical considerations such as time and access to information can influence case selection, but these issues should not be the sole factors used in describing the methodological justification for identifying a particular case to study. Given this, selecting a case includes considering the following:

- Does the case represent an unusual or atypical example of a research problem that requires more in-depth analysis? Cases often represent a topic that rests on the fringes of prior investigations because the case may provide new ways of understanding the research problem. For example, if the research problem is to identify strategies to improve policies that support girls' access to secondary education in predominantly Muslim nations, you could consider using Azerbaijan as a case study rather than selecting a more obvious nation in the Middle East. Doing so may reveal important new insights into recommending how governments in other predominantly Muslim nations can formulate policies that support improved access to education for girls.

- Does the case provide important insight or illuminate a previously hidden problem? In-depth analysis of a case can be based on the hypothesis that the case study will reveal trends or issues that have not been exposed in prior research or will reveal new and important implications for practice. For example, anecdotal evidence may suggest drug use among homeless veterans is related to their patterns of travel throughout the day. Assuming prior studies have not looked at individual travel choices as a way to study access to illicit drug use, a case study that observes a homeless veteran could reveal how personal mobility choices facilitate regular access to illicit drugs. Note that it is important to conduct a thorough literature review to ensure that your assumption about the need to reveal new insights or previously hidden problems is valid and evidence-based.

- Does the case challenge and offer a counterpoint to prevailing assumptions? Over time, research on any given topic can fall into the trap of relying on assumptions drawn from outdated studies that are still applied to new or changing conditions, or of accepting something as "common sense" even though it has not been thoroughly tested in current practice. A case study analysis may offer an opportunity to gather evidence that challenges prevailing assumptions about a research problem and to provide a new set of recommendations for practice that have not been tested previously. For example, perhaps there has been a long practice among scholars to apply a particular theory in explaining the relationship between two subjects of analysis. Your case could challenge this assumption by applying an innovative theoretical framework [perhaps borrowed from another discipline] to explore whether this approach offers new ways of understanding the research problem. Taking a contrarian stance is one of the most important ways that new knowledge and understanding develop from existing literature.

- Does the case provide an opportunity to pursue action leading to the resolution of a problem? Another way to think about choosing a case to study is to consider how investigating a particular case may produce findings that reveal ways to resolve an existing or emerging problem. For example, studying the case of an unforeseen incident, such as a fatal accident at a railroad crossing, can reveal hidden issues that could be applied to preventative measures that contribute to reducing the chance of accidents in the future. In this example, a case study investigating the accident could lead to a better understanding of where to strategically locate additional signals at other railroad crossings so as to better warn drivers of an approaching train, particularly when visibility is hindered by heavy rain, fog, or darkness.

- Does the case offer a new direction for future research? A case study can be used as a tool for an exploratory investigation that highlights the need for further research about the problem. A case can be used when there are few studies that help predict an outcome or that establish a clear understanding about how best to proceed in addressing a problem. For example, after conducting a thorough literature review [very important!], you discover that little research exists showing the ways in which women contribute to promoting water conservation in rural communities of east central Africa. A case study of how women contribute to saving water in a rural village of Uganda can lay the foundation for understanding the need for more thorough research that documents how women in their roles as cooks and family caregivers think about water as a valuable resource within their community. This example of a case study could also point to the need for scholars to build new theoretical frameworks around the topic [e.g., applying feminist theories of work and family to the issue of water conservation].

Eisenhardt, Kathleen M. “Building Theories from Case Study Research.” Academy of Management Review 14 (October 1989): 532-550; Emmel, Nick. Sampling and Choosing Cases in Qualitative Research: A Realist Approach. Thousand Oaks, CA: SAGE Publications, 2013; Gerring, John. “What Is a Case Study and What Is It Good for?” American Political Science Review 98 (May 2004): 341-354; Mills, Albert J., Gabrielle Durepos, and Elden Wiebe, editors. Encyclopedia of Case Study Research. Thousand Oaks, CA: SAGE Publications, 2010; Seawright, Jason and John Gerring. "Case Selection Techniques in Case Study Research." Political Research Quarterly 61 (June 2008): 294-308.

Structure and Writing Style

The purpose of a paper in the social sciences designed around a case study is to thoroughly investigate a subject of analysis in order to reveal a new understanding about the research problem and, in so doing, contribute new knowledge to what is already known from previous studies. In applied social sciences disciplines [e.g., education, social work, public administration], case studies may also be used to reveal best practices, highlight key programs, or investigate interesting aspects of professional work.

In general, the structure of a case study research paper is not all that different from a standard college-level research paper. However, there are subtle differences you should be aware of. Here are the key elements to organizing and writing a case study research paper.

I. Introduction

As with any research paper, your introduction should serve as a roadmap for your readers to ascertain the scope and purpose of your study. The introduction to a case study research paper, however, should not only describe the research problem and its significance but also succinctly explain why the case is being used and how it relates to addressing the problem. The two elements should be linked. With this in mind, a good introduction answers these four questions:

- What is being studied? Describe the research problem and describe the subject of analysis [the case] you have chosen to address the problem. Explain how they are linked and what elements of the case will help to expand knowledge and understanding about the problem.

- Why is this topic important to investigate? Describe the significance of the research problem and state why a case study design and the subject of analysis that the paper is designed around are appropriate in addressing the problem.

- What did we know about this topic before I did this study? Provide background that helps lead the reader into the more in-depth literature review to follow. If applicable, summarize prior case study research applied to the research problem and why it fails to adequately address the problem. Describe why your case will be useful. If no prior case studies have been used to address the research problem, explain why you have selected this subject of analysis.

- How will this study advance new knowledge or new ways of understanding? Explain why your case study will be suitable in helping to expand knowledge and understanding about the research problem.

Each of these questions should be addressed in no more than a few paragraphs. Exceptions to this can be when you are addressing a complex research problem or subject of analysis that requires more in-depth background information.

II. Literature Review

The literature review for a case study research paper is generally structured the same as it is for any college-level research paper. The difference, however, is that the literature review is focused on providing background information and enabling historical interpretation of the subject of analysis in relation to the research problem the case is intended to address. This includes synthesizing studies that help to:

- Place relevant works in the context of their contribution to understanding the case study being investigated. This would involve summarizing studies that have used a similar subject of analysis to investigate the research problem. If there is literature using the same or a very similar case to study, you need to explain why duplicating past research is important [e.g., conditions have changed; prior studies were conducted long ago].

- Describe the relationship each work has to the others under consideration that informs the reader why this case is applicable. Your literature review should include a description of any works that support using the case to investigate the research problem and the underlying research questions.

- Identify new ways to interpret prior research using the case study. If applicable, review any research that has examined the research problem using a different research design. Explain how your use of a case study design may reveal new knowledge or a new perspective, or how it can redirect research in an important new direction.

- Resolve conflicts amongst seemingly contradictory previous studies. This refers to synthesizing any literature that points to unresolved issues of concern about the research problem and describing how the subject of analysis that forms the case study can help resolve these existing contradictions.

- Point the way in fulfilling a need for additional research. Your review should examine any literature that lays a foundation for understanding why your case study design and the subject of analysis around which you have designed your study may reveal a new way of approaching the research problem or offer a perspective that points to the need for additional research.

- Expose any gaps that exist in the literature that the case study could help to fill. Summarize any literature that shows not only how your subject of analysis contributes to understanding the research problem, but also how your case contributes a new way of understanding the problem that prior research has failed to provide.

- Locate your own research within the context of existing literature [very important!]. Collectively, your literature review should always place your case study within the larger domain of prior research about the problem. The overarching purpose of reviewing pertinent literature in a case study paper is to demonstrate that you have thoroughly identified and synthesized prior studies in relation to explaining the relevance of the case in addressing the research problem.

III. Method

In this section, you explain why you selected a particular case [i.e., subject of analysis] and the strategy you used to identify and ultimately decide that your case was appropriate in addressing the research problem. The way you describe the methods used varies depending on the type of subject of analysis that constitutes your case study.

If your subject of analysis is an incident or event. In the social and behavioral sciences, the event or incident that represents the case to be studied is usually bounded by time and place, with a clear beginning and end and with an identifiable location or position relative to its surroundings. The subject of analysis can be a rare or critical event or it can focus on a typical or regular event. The purpose of studying a rare event is to illuminate new ways of thinking about the broader research problem or to test a hypothesis. Critical incident case studies must describe the method by which you identified the event and explain the process by which you determined the validity of this case to inform broader perspectives about the research problem or to reveal new findings. However, the event does not have to be rare or uniquely significant to support new thinking about the research problem or to challenge an existing hypothesis. For example, Walo, Bull, and Breen conducted a case study to identify and evaluate the direct and indirect economic benefits and costs of a local sports event in the City of Lismore, New South Wales, Australia. The purpose of their study was to provide new insights from measuring the impact of a typical local sports event that prior studies could not measure well because they focused on large "mega-events." Whether the event is rare or not, the methods section should explain the following characteristics of the event: a) when it took place; b) what underlying circumstances led to the event; and c) what the consequences of the event were in relation to the research problem.

If your subject of analysis is a person. Explain why you selected this particular individual to be studied and describe what experiences they have had that provide an opportunity to advance new understandings about the research problem. Mention any background about this person that might help the reader understand the significance of their experiences and why those experiences make them worthy of study. This includes describing the relationships this person has had with other people, institutions, and/or events that support using them as the subject for a case study research paper. It is particularly important to differentiate the person as the subject of analysis from others and to succinctly explain how the person relates to examining the research problem [e.g., why one politician in a particular local election, rather than any other candidate running in that election, is used to show an increase in voter turnout]. Note that these issues also apply to a specific group of people used as a case study unit of analysis [e.g., a classroom of students].

If your subject of analysis is a place. In general, a case study that investigates a place suggests a subject of analysis that is unique or special in some way, and this uniqueness can be used to build new understanding or knowledge about the research problem. A case study of a place must not only describe its various attributes relevant to the research problem [e.g., physical, social, historical, cultural, economic, political], but also state the method by which you determined that this place will illuminate new understandings about the research problem. It is also important to articulate why a particular place is being used as the case for study if similar places also exist [e.g., if you are studying patterns of homeless encampments of veterans in open spaces, explain why you are studying Echo Park in Los Angeles rather than Griffith Park]. If applicable, describe what type of human activity involving this place makes it a good choice to study [e.g., prior research suggests Echo Park has more homeless veterans].

If your subject of analysis is a phenomenon. A phenomenon refers to a fact, occurrence, or circumstance that can be studied or observed but whose cause or explanation is in question. In this sense, a phenomenon that forms your subject of analysis can encompass anything that can be observed or presumed to exist but is not fully understood. In the social and behavioral sciences, the case usually focuses on human interaction within a complex physical, social, economic, cultural, or political system. For example, the phenomenon could be the observation that many vehicles used by ISIS fighters are small trucks with English language advertisements on them. The research problem could be that ISIS fighters are difficult to combat because they are highly mobile. The research questions could be: how and by what means are these vehicles being supplied to the militants, and how might the supply lines for these vehicles be cut off? How might knowing the suppliers of these trucks reveal larger networks of collaborators and financial support? A case study of a phenomenon most often encompasses an in-depth analysis of a cause and effect that is grounded in an interactive relationship between people and their environment in some way.

NOTE: The choice of the case or set of cases to study cannot appear random. Evidence that supports the method by which you identified and chose your subject of analysis should clearly support investigation of the research problem and be linked to key findings from your literature review. Be sure to cite any studies that helped you determine that the case you chose was appropriate for examining the problem.

IV. Discussion

The main elements of your discussion section are generally the same as in any research paper, but they are centered around interpreting and drawing conclusions about the key findings from your analysis of the case study. Note that a general social sciences research paper may contain a separate section to report findings. However, in a paper designed around a case study, it is common to combine a description of the results with the discussion about their implications. The objectives of your discussion section should include the following:

Reiterate the Research Problem/State the Major Findings. Briefly reiterate the research problem you are investigating and explain why the subject of analysis around which you designed the case study was used. You should then describe the findings revealed from your study of the case in direct, declarative, and succinct statements of the study results. Highlight any findings that were unexpected or especially profound.