Deloitte Data Scientist Interview Guide

Detailed, specific guidance on the Deloitte Data Scientist interview process - with a breakdown of different stages and interview questions asked at each stage

The role of a Deloitte Data Scientist

A Deloitte Data Scientist is a professional who uses data to drive business decisions and solve complex problems. They are responsible for collecting, analyzing, and interpreting large sets of data to identify trends and patterns. They use this information to make predictions and recommendations that help organizations improve their performance and achieve their goals.

Deloitte Data Scientists work in a variety of industries, including finance, healthcare, and retail. They are typically part of a team that includes other data scientists, analysts, and business consultants. They usually also collaborate with other teams such as IT, operations, and marketing to ensure that data is being used effectively across the organization.

Deloitte has several data science positions across its various practice areas, including Data Scientist, Advanced Analytics Consultant, ML Engineer, and Analytics Manager. These positions can vary in terms of specific responsibilities and requirements depending on the industry, practice area, and level of seniority.

For example, a Data Scientist in the financial services industry may focus on developing predictive models to identify potential fraud or risk, while a Data Scientist in the healthcare industry may focus on analyzing patient data to improve treatment outcomes.

Similarly, an Advanced Analytics Consultant in the manufacturing industry may work on improving supply chain efficiency, while an Advanced Analytics Consultant in the retail industry may work on optimizing pricing strategies.

How to Apply for a Data Scientist Job at Deloitte?

Visit the careers page on Deloitte’s website, where you can browse open roles and apply directly. However, we would highly recommend taking the referral route if you know someone at the company, as it increases your chances significantly. Before you hit the apply button, read the job requirements thoroughly to check whether your skills and experience match the role; nothing's more frustrating than getting caught off guard during an interview. To increase your chances further, tailor your resume to the qualifications and experience listed in the job posting, which will help you stand out. If you're not sure how to do that, Prepfully offers a resume review service, where actual recruiters will give you feedback on your resume.

It's also important to mention that Deloitte has a strong emphasis on diversity and inclusion, and they encourage candidates from under-represented groups to apply.

Responsibilities of a Data Scientist at Deloitte

The responsibilities of a data scientist at Deloitte across roles can broadly be summarized as:

- Using statistical, machine learning, and other advanced techniques to analyze data and identify trends, patterns, and insights that inform business decisions and solve complex problems.

- Collaborating with other data scientists, analysts, and business consultants to deliver data-driven solutions to clients.

- Communicating results and recommendations to clients and stakeholders in a clear and compelling manner, including visualizing and presenting data in a way that is easy to understand.

- Designing and implementing experiments and testing hypotheses to validate findings and improve models.

- Continuously improving processes and techniques by staying current with the latest industry trends and technologies.

- Creating and maintaining a positive work environment and fostering team dynamics.

It's important to note that the responsibilities may vary depending on the level of seniority, the specific practice area, the specific industry, and the specific project.

Skills and Qualifications needed for Data Scientists at Deloitte

Here are some skills and qualifications that will help you excel in your Data Science interviews at Deloitte.

- It's important to have a strong background in statistics, mathematics, and computer science.

- Experience with programming languages such as Python and R, as well as experience working with databases and big data platforms, is important to be successful as a Data Scientist at Deloitte.

- It's important to have experience with machine learning and statistical modeling. This will allow you to develop and implement advanced models that drive business decisions.

- A strong understanding of data visualization and presentation techniques will allow you to communicate results and recommendations to clients and stakeholders clearly and compellingly.

- Experience with cloud-based computing platforms such as AWS or Azure is useful, since it allows you to have constructive conversations with engineers about use cases such as deploying your models to production.

- Strong business acumen - you’ll be translating complex data insights into actionable recommendations for clients, so it’s important to be able to tie your thinking back into business outcomes and impact.

As a part of the Deloitte Data Scientist interview, you will need to go through multiple interview rounds:

1. HR interview - The first round is an HR interview. This session takes place so that the HR team can better understand your background and can help you understand the role. Many candidates reported getting a take home case study or assignment after this round.

2. Technical Interview Rounds - The second round of the interview process will consist of two technical rounds. These rounds will focus on assessing the candidate's competency and technical skills.

3. Final Round Interviews - The final stage of the interview process comprises multiple interview rounds. Example rounds include a Behavioral interview, a Business case study interview, and a Techno Managerial interview.

Deloitte Data Scientist - HR Interview

During the HR interview, the focus is typically on assessing if your abilities align with the position being applied for. This may include informal queries about your experiences and qualifications. The goal of this session is to provide the HR team with a deeper understanding of your background and to assist you in understanding the role. Many candidates reported getting a take home case study or assignment after this round.

Interview Questions

- Why do you want to join Deloitte?

- Why do you think you will be a good fit for the role?

- What responsibilities do you expect to have from your job at Deloitte?

- Describe a previous project of your choice, then frame and solve a problem in a given scenario.

Deloitte Data Scientist - Technical Interview Rounds

The second stage of the interview process consists of two technical rounds that assess your competency and technical skills. Both rounds are technical, but each covers a different part of the data science craft. Expect questions and assessments that test your understanding of specific technical skills such as SQL, Python, and machine learning. Be prepared to provide examples of past projects or experiences that demonstrate your competency in these areas.

Interview Questions

- How do you view the frequentist vs bayesian approach to probability? Can you give an example of a situation where one approach would be more suitable than the other?

- Can you tell us about your experience using Tableau? How have you used it in the past and what types of data have you visualized with it?

- What is embedding?

- What is the normal distribution?

- Can you compare and contrast the training and loss graphs for neural networks? How do they inform the optimization and performance of a model?

- Can you discuss the use of confusion matrix and ROC curve in evaluating a model's performance? How do they differ and when would you use one over the other?

- Can you explain what DMatrix is in XGBoost? How does it differ from other data structures used in gradient boosting?

- What is heteroscedasticity?

- What is multicollinearity?

- Can you explain techniques to overcome imbalanced datasets?

- Can you discuss the effects of an imbalanced dataset on model metrics?

- Which is faster, joins or subqueries?
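For the two questions on imbalanced datasets, it can help to have a concrete example in mind. The following is a minimal sketch, not a recommended production approach: it uses made-up rows (95 negatives, 5 positives), shows why a majority-class baseline already reaches high accuracy, and applies random oversampling, one of several possible techniques. The helper name `oversample_minority` is hypothetical.

```python
import random

def oversample_minority(rows, label_index, seed=0):
    """Duplicate minority-class rows at random until every class is the same size."""
    rng = random.Random(seed)
    by_label = {}
    for row in rows:
        by_label.setdefault(row[label_index], []).append(row)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Draw extra copies (with replacement) to reach the majority size.
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced

# Made-up dataset: (feature, label) pairs, 95 negatives and 5 positives.
rows = [(x, 0) for x in range(95)] + [(x, 1) for x in range(5)]

# Effect on metrics: always predicting the majority class looks accurate
# while catching none of the positives.
majority_baseline_accuracy = sum(1 for _, label in rows if label == 0) / len(rows)

balanced = oversample_minority(rows, label_index=1)
counts = {label: sum(1 for r in balanced if r[1] == label) for label in (0, 1)}
print(majority_baseline_accuracy, counts)
```

In practice, resampling like this is usually applied to the training split only, so that evaluation still reflects the real class balance.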

Deloitte Data Scientist - Final Round Interviews

The final stage of the interview process will be a virtual on-site interview comprising multiple different interview rounds. Some of the rounds you can expect to face are:

- Behavioral Interview: This interview round mostly focuses on conversational questions. The focus of this interview will be on understanding your values and how they align with the company's culture.

- Business Case Study: This interview will be a business case study where you will be asked hypothetical questions to test your understanding of the business or domain related to the position you're interviewing for.

- Techno Managerial Interview: In this round, you will be interviewed by either a Data Science manager or a Project manager. The focus of this interview will be on your experience handling projects and clients. Expect to be asked about how you handle client relationships, how you manage projects, and how you handle any challenges that may arise during the course of a project. This will be an opportunity for the interviewer to get a sense of your ability to work with others, as well as your technical skills and knowledge.

Please note that these are just some examples of the rounds you could face. Additional rounds specific to certain roles and positions are sometimes scheduled.

Interview Questions

- Can you provide an example of a project you have managed and the challenges you faced?

- How do you ensure effective communication with clients and stakeholders during a project?

- How do you prioritize and manage competing project demands?

- Can you give an example of a situation where you had to make a difficult decision related to a project?

- Can you walk me through how you would approach a hypothetical business problem?

- How would you analyze market trends and competition for a new product launch?

Interview Tips

When preparing for a Deloitte Data Science interview, we’d recommend keeping the following in mind:

- Be prepared to discuss specific examples of past projects or experiences that demonstrate your competency in the technical skills related to the role. Highlight your achievements and explain how you applied your technical skills to solve problems.

- Brush up on your knowledge of specific technical skills such as SQL, Python, and machine learning. Be familiar with the latest trends and techniques in these areas.

- Be prepared to provide examples of how you handle client relationships, manage projects, and handle challenges that may arise during the course of a project. Show how you are able to work well with others and how you handle difficult situations.

- Practice answering business case study questions and be prepared to walk through your thought process and approach to solving hypothetical business problems. This will demonstrate your problem-solving skills and ability to think strategically.

- Prepare answers to common interview questions, such as “Why do you want to join Deloitte?” and “Why do you think you will be a good fit for the role?”. Make sure you have a clear and compelling answer that showcases your qualifications and enthusiasm for the role.

The interview process for a Data Scientist role at Deloitte typically includes three primary stages: an HR interview, technical interview rounds, and the final interview rounds. The first round is an HR interview, which lets the HR team better understand your background and helps you understand the role. The second stage consists of two technical rounds that assess your competency and technical skills. The final stage comprises multiple interview rounds, such as a Behavioral interview, a Business case study interview, and a Techno Managerial interview.

2024 Guide: 20+ Essential Data Science Case Study Interview Questions

Case studies are often the most challenging aspect of data science interview processes. They are crafted to resemble a company’s existing or previous projects, assessing a candidate’s ability to tackle prompts, convey their insights, and navigate obstacles.

To excel in data science case study interviews, practice is crucial. It will enable you to develop strategies for approaching case studies, asking the right questions to your interviewer, and providing responses that showcase your skills while adhering to time constraints.

The best way of doing this is by using a framework for answering case studies. For example, you could use the product metrics framework and the A/B testing framework to answer most case studies that come up in data science interviews.

There are four main types of data science case studies:

- Product Case Studies - This type of case study tackles a specific product or feature offering, often tied to the interviewing company. Interviewers are generally looking for business sense geared towards product metrics.

- Data Analytics Case Study Questions - Data analytics case studies ask you to propose possible metrics in order to investigate an analytics problem. Additionally, you must write a SQL query to pull your proposed metrics, and then perform analysis using the data you queried, just as you would do in the role.

- Modeling and Machine Learning Case Studies - Modeling case studies are more varied and focus on assessing your intuition for building models around business problems.

- Business Case Questions - Similar to product questions, business cases tackle issues or opportunities specific to the organization that is interviewing you. Often, candidates must assess the best option for a certain business plan being proposed, and formulate a process for solving the specific problem.

How Case Study Interviews Are Conducted

Oftentimes as an interviewee, you want to know the setting and format in which to expect the above questions to be asked. Unfortunately, this is company-specific: Some prefer real-time settings, where candidates actively work through a prompt after receiving it, while others offer some period of days (say, a week) before settling in for a presentation of your findings.

It is therefore important to have a system for answering these questions that will accommodate all possible formats, such that you are prepared for any set of circumstances (we provide such a framework below).

Why Are Case Study Questions Asked?

Case studies assess your thought process in answering data science questions. Specifically, interviewers want to see that you have the ability to think on your feet, and to work through real-world problems that likely do not have a right or wrong answer. Real-world case studies that are affecting businesses are not binary; there is no black-and-white, yes-or-no answer. This is why it is important that you can demonstrate decisiveness in your investigations, as well as show your capacity to consider impacts and topics from a variety of angles. Once you are in the role, you will be dealing directly with the ambiguity at the heart of decision-making.

Perhaps most importantly, case interviews assess your ability to effectively communicate your conclusions. On the job, data scientists exchange information across teams and divisions, so a significant part of the interviewer’s focus will be on how you process and explain your answer.

Quick tip: Because case questions in data science interviews tend to be product- and company-focused, it is extremely beneficial to research current projects and developments across different divisions, as these initiatives might end up as the case study topic.

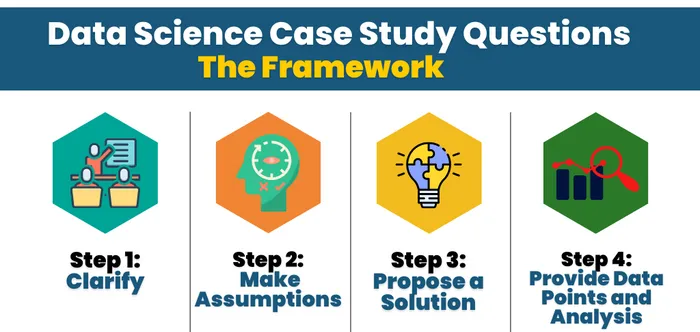

How to Answer Data Science Case Study Questions (The Framework)

There are four main steps to tackling case questions in data science interviews, regardless of the type: clarify, make assumptions, propose a solution, and provide data points and analysis.

Step 1: Clarify

Clarifying is used to gather more information. More often than not, these case studies are designed to be confusing and vague. There will be unorganized data intentionally supplemented with extraneous or omitted information, so it is the candidate’s responsibility to dig deeper, filter out bad information, and fill gaps. Interviewers will be observing how an applicant asks questions and reaches their solution.

For example, with a product question, you might take into consideration:

- What is the product?

- How does the product work?

- How does the product align with the business itself?

Step 2: Make Assumptions

Once you have evaluated and understood the dataset, start investigating and discarding possible hypotheses. Developing insights on the product at this stage complements your ability to glean information from the dataset, and the exploration of your ideas is paramount to forming a successful hypothesis. You should be communicating your hypotheses to the interviewer, so that they can provide clarifying remarks on how the business views the product and help you discard unworkable lines of inquiry. If we continue to think about a product question, some important questions to evaluate and draw conclusions from include:

- Who uses the product? Why?

- What are the goals of the product?

- How does the product interact with other services or goods the company offers?

The goal of this is to reduce the scope of the problem at hand, and ask the interviewer questions upfront that allow you to tackle the meat of the problem instead of focusing on less consequential edge cases.

Step 3: Propose a Solution

Now that you have formed a hypothesis that incorporates the dataset and an understanding of the business context, it is time to apply that knowledge in forming a solution. Remember, the hypothesis is simply a refined version of the problem that uses the data on hand as its basis for being solved. The solution you create can target this narrow problem, and you can have full faith that it is addressing the core of the case study question.

Keep in mind that there isn’t a single expected solution, and as such, there is a certain freedom here to determine the exact path for investigation.

Step 4: Provide Data Points and Analysis

Finally, providing data points and analysis in support of your solution involves choosing and prioritizing a main metric. As with all prior factors, this step must be tied back to the hypothesis and the main goal of the problem. From that foundation, it is important to trace through and analyze different examples, starting from the main metric, in order to validate the hypothesis.

Quick tip: Every case question tends to have multiple solutions. Therefore, you should absolutely consider and communicate any potential trade-offs of your chosen method. Be sure you are communicating the pros and cons of your approach.

Note: In some special cases, solutions will also be assessed on the ability to convey information in layman’s terms. Regardless of the structure, applicants should always be prepared to solve through the framework outlined above in order to answer the prompt.

The Role of Effective Communication

There have been multiple articles and discussions conducted by interviewers behind the Data Science Case Study portion, and they all boil down success in case studies to one main factor: effective communication.

All the analysis in the world will not help if interviewees cannot verbally work through and highlight their thought process within the case study. Again, interviewers are keyed at this stage of the hiring process to look for well-developed “soft-skills” and problem-solving capabilities. Demonstrating those traits is key to succeeding in this round.

To this end, the best advice possible would be to practice actively going through example case studies, such as those available in the Interview Query questions bank. Exploring different topics with a friend in an interview-like setting with cold recall (no Googling in between!) will be uncomfortable and awkward, but it will also help reveal weaknesses in fleshing out the investigation.

Don’t worry if the first few times are terrible! Developing a rhythm will help with gaining self-confidence as you become better at assessing and learning through these sessions.

Product Case Study Questions

With product data science case questions, the interviewer wants to get an idea of your product sense. Specifically, these questions assess your ability to identify which metrics should be proposed in order to understand a product.

1. How would you measure the success of private stories on Instagram, where only certain close friends can see the story?

Start by answering: What is the goal of the private story feature on Instagram? You can’t evaluate “success” without knowing what the initial objective of the product was, to begin with.

One specific goal of this feature would be to drive engagement. A private story could potentially increase interactions between users, and grow awareness of the feature.

Now, what types of metrics might you propose to assess user engagement? For a high-level overview, we could look at:

- Average stories per user per day

- Average Close Friends stories per user per day

However, we would also want to further bucket our users to see the effect that Close Friends stories have on user engagement. By bucketing users by age, date joined, or another metric, we could see how engagement is affected within certain populations, giving us insight on success that could be lost if looking at the overall population.

2. How would you measure the success of acquiring new users through a 30-day free trial at Netflix?

More context: Netflix is offering a promotion where users can enroll in a 30-day free trial. After 30 days, customers will automatically be charged based on their selected package. How would you measure acquisition success, and what metrics would you propose to measure the success of the free trial?

One way we can frame the concept specifically to this problem is to think about controllable inputs, external drivers, and then the observable output. Start with the major goals of Netflix:

- Acquiring new users to their subscription plan.

- Decreasing churn and increasing retention.

Looking at acquisition output metrics specifically, there are several top-level stats that we can look at, including:

- Conversion rate percentage

- Cost per free trial acquisition

- Daily conversion rate

With these conversion metrics, we would also want to bucket users by cohort. This would help us see the percentage of free users who were acquired, as well as retention by cohort.
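The acquisition metrics above can be sketched with a few lines of code. The records, cohort labels, and marketing spend below are invented purely for illustration, and `conversion_by_cohort` is a hypothetical helper rather than anything Netflix uses.

```python
from collections import defaultdict

# Hypothetical free-trial records: (signup_month, converted_to_paid)
trials = [
    ("2024-01", True), ("2024-01", False), ("2024-01", True), ("2024-01", False),
    ("2024-02", True), ("2024-02", True), ("2024-02", True), ("2024-02", False),
]
marketing_spend = 400.0  # hypothetical total spend on the promotion

def conversion_by_cohort(records):
    """Return {cohort: share of trial users who became paying subscribers}."""
    totals = defaultdict(int)
    converted = defaultdict(int)
    for cohort, did_convert in records:
        totals[cohort] += 1
        converted[cohort] += int(did_convert)
    return {cohort: converted[cohort] / totals[cohort] for cohort in totals}

rates = conversion_by_cohort(trials)
overall_rate = sum(did_convert for _, did_convert in trials) / len(trials)
cost_per_trial = marketing_spend / len(trials)

print(rates, overall_rate, cost_per_trial)
```

Splitting by cohort matters here because the overall rate can hide the fact that one signup month converts much better than another.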

3. How would you measure the success of Facebook Groups?

Start by considering the key function of Facebook Groups. You could say that Groups are a way for users to connect with other users through a shared interest or real-life relationship. Therefore, the user’s goal is to experience a sense of community, which will also drive our business goal of increasing user engagement.

What general engagement metrics can we associate with this value? An objective metric like Groups monthly active users would help us see if Facebook Groups user base is increasing or decreasing. Plus, we could monitor metrics like posting, commenting, and sharing rates.

There are other products that Groups impact, however, specifically the Newsfeed. We need to consider Newsfeed quality and examine if updates from Groups clog up the content pipeline and if users prioritize those updates over other Newsfeed items. This evaluation will give us a better sense of if Groups actually contribute to higher engagement levels.

4. How would you analyze the effectiveness of a new LinkedIn chat feature that shows a “green dot” for active users?

Note: Given engineering constraints, the new feature is impossible to A/B test before release. When you approach case study questions, remember always to clarify any vague terms. In this case, “effectiveness” is very vague. To help you define that term, you would want first to consider what the goal is of adding a green dot to LinkedIn chat.

5. How would you diagnose why weekly active users are up 5%, but email notification open rates are down 2%?

What assumptions can you make about the relationship between weekly active users and email open rates? With a case question like this, you would want to first answer that line of inquiry before proceeding.

Hint: Open rate can decrease when its numerator decreases (fewer people open emails) or its denominator increases (more emails are sent overall). Taking these two factors into account, what are some hypotheses we can make about our decrease in the open rate compared to our increase in weekly active users?
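A quick worked example makes the numerator and denominator effects in the hint concrete. The counts are made up and are not meant to reproduce the 5% and 2% figures from the prompt.

```python
def open_rate(opens, emails_sent):
    """Open rate = emails opened / emails sent."""
    return opens / emails_sent

baseline = open_rate(opens=200, emails_sent=1000)     # 0.20

# Scenario 1: the numerator shrinks (fewer people open the same emails).
fewer_opens = open_rate(opens=180, emails_sent=1000)  # 0.18

# Scenario 2: the denominator grows (more active users trigger more
# notification emails, but opens stay flat).
more_sent = open_rate(opens=200, emails_sent=1250)    # 0.16

print(baseline, fewer_opens, more_sent)
```

The second scenario is the one that links the two metrics: growth in weekly active users could itself generate more emails and therefore depress the open rate.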

Data Analytics Case Study Questions

Data analytics case studies ask you to dive into analytics problems. Typically these questions ask you to examine metrics trade-offs or investigate changes in metrics. In addition to proposing metrics, you also have to write SQL queries to generate the metrics, which is why they are sometimes referred to as SQL case study questions.

6. Using the provided data, generate some specific recommendations on how DoorDash can improve.

In this DoorDash analytics case study take-home question you are provided with the following dataset:

- Customer order time

- Restaurant order time

- Driver arrives at restaurant time

- Order delivered time

- Customer ID

- Amount of discount

- Amount of tip

With a dataset like this, there are numerous recommendations you can make. A good place to start is by thinking about the DoorDash marketplace, which includes drivers, customers, and merchants. How could you analyze the data to increase revenue, driver/user retention, and engagement in that marketplace?
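One possible first step with the timestamp columns is to turn them into durations, which can then be compared against tips, discounts, or repeat orders. The sketch below assumes a timestamp format and uses two invented orders; the field names are placeholders, not the actual column names of the take-home dataset.

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M"  # assumed timestamp format

# Invented orders using three of the timestamp fields listed above.
orders = [
    {"customer_order": "2024-01-01 18:00",
     "driver_at_restaurant": "2024-01-01 18:20",
     "delivered": "2024-01-01 18:45"},
    {"customer_order": "2024-01-01 19:00",
     "driver_at_restaurant": "2024-01-01 19:10",
     "delivered": "2024-01-01 19:50"},
]

def minutes_between(start, end):
    """Elapsed minutes between two timestamp strings."""
    delta = datetime.strptime(end, FMT) - datetime.strptime(start, FMT)
    return delta.total_seconds() / 60

# Total time the customer waited, and the part after the driver reached
# the restaurant (waiting for the food plus driving to the customer).
total_minutes = [minutes_between(o["customer_order"], o["delivered"]) for o in orders]
pickup_to_dropoff = [minutes_between(o["driver_at_restaurant"], o["delivered"]) for o in orders]
avg_total = sum(total_minutes) / len(total_minutes)

print(total_minutes, pickup_to_dropoff, avg_total)
```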

7. After implementing a notification change, the total number of unsubscribes increases. Write a SQL query to show how unsubscribes are affecting login rates over time.

This is a Twitter data science interview question. Let’s say you implemented this new feature using an A/B test. You are provided with two tables: events (which includes login, nologin, and unsubscribe) and variants (which includes control or variant).

We are tasked with comparing multiple different variables at play here. There is the new notification system, along with its effect of creating more unsubscribes. We can also see how login rates compare for unsubscribes for each bucket of the A/B test.

Given that we want to measure two different changes, we know we have to use GROUP BY for the two variables: date and bucket variant. What comes next?
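One way the grouping could look is sketched below, run against an in-memory SQLite database so the query can be tried end to end. The column names (`user_id`, `created_at`, `action`, `variant`) and the sample rows are assumptions, since the prompt only names the two tables, and this is one possible reading of the question rather than the expected answer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE events (user_id INTEGER, created_at TEXT, action TEXT);
CREATE TABLE variants (user_id INTEGER, variant TEXT);
INSERT INTO variants VALUES (1, 'control'), (2, 'control'), (3, 'variant'), (4, 'variant');
INSERT INTO events VALUES
    (1, '2024-01-01', 'login'),
    (2, '2024-01-01', 'nologin'),
    (3, '2024-01-01', 'login'),
    (4, '2024-01-01', 'unsubscribe'),
    (4, '2024-01-01', 'nologin'),
    (1, '2024-01-02', 'login'),
    (2, '2024-01-02', 'login'),
    (3, '2024-01-02', 'nologin'),
    (4, '2024-01-02', 'nologin');
""")

# Daily unsubscribe count and login rate for each A/B bucket.
query = """
SELECT e.created_at AS day,
       v.variant,
       SUM(CASE WHEN e.action = 'unsubscribe' THEN 1 ELSE 0 END) AS unsubscribes,
       1.0 * SUM(CASE WHEN e.action = 'login' THEN 1 ELSE 0 END)
           / SUM(CASE WHEN e.action IN ('login', 'nologin') THEN 1 ELSE 0 END) AS login_rate
FROM events AS e
JOIN variants AS v ON v.user_id = e.user_id
GROUP BY e.created_at, v.variant
ORDER BY e.created_at, v.variant;
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

A natural follow-up would be to split the login rate further by whether the user has already unsubscribed, which needs a per-user unsubscribe date joined back onto the events.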

8. Write a query to disprove the hypothesis: Data scientists who switch jobs more often end up getting promoted faster.

More context: You are provided with a table of user experiences representing each person’s past work experiences and timelines.

This question requires a bit of creative problem-solving to understand how we can prove or disprove the hypothesis. The hypothesis is that a data scientist that ends up switching jobs more often gets promoted faster.

Therefore, in analyzing this dataset, we can test this hypothesis by separating the data scientists into segments based on how often they switch jobs in their careers.

For example, among data scientists who have been in the field for five years, the hypothesis would be supported if the share who have become managers rose with the number of job switches, as in this illustrative breakdown:

- Never switched jobs: 10% are managers

- Switched jobs once: 20% are managers

- Switched jobs twice: 30% are managers

- Switched jobs three times: 40% are managers
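A segmentation like the one above could be produced with a query along these lines. This is a sketch under several assumptions: the table is called `user_experiences` with columns `user_id`, `company`, and `title`; a job switch is approximated as a change of company; and a promotion is approximated as holding the title 'data science manager'. The rows are invented, and a fuller analysis would also use the timeline columns to measure time to promotion.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE user_experiences (user_id INTEGER, company TEXT, title TEXT);
INSERT INTO user_experiences VALUES
    (1, 'A', 'data scientist'),
    (2, 'A', 'data scientist'),
    (2, 'A', 'data science manager'),
    (3, 'A', 'data scientist'),
    (3, 'B', 'data scientist'),
    (4, 'A', 'data scientist'),
    (4, 'B', 'data science manager'),
    (5, 'A', 'data scientist'),
    (5, 'B', 'data scientist'),
    (5, 'C', 'data science manager');
""")

query = """
WITH per_user AS (
    SELECT user_id,
           -- Assumes nobody returns to a former employer.
           COUNT(DISTINCT company) - 1 AS job_switches,
           MAX(CASE WHEN title = 'data science manager' THEN 1 ELSE 0 END) AS is_manager
    FROM user_experiences
    GROUP BY user_id
)
SELECT job_switches,
       AVG(is_manager) AS manager_share,
       COUNT(*) AS n_users
FROM per_user
GROUP BY job_switches
ORDER BY job_switches;
"""
segments = conn.execute(query).fetchall()
for segment in segments:
    print(segment)
```

If the manager share does not rise with the number of switches, the data gives no support for the hypothesis.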

9. Write a SQL query to investigate the hypothesis: Click-through rate is dependent on search result rating.

More context: You are given a table with search results on Facebook, which includes query (search term), position (the search position), and rating (human rating from 1 to 5). Each row represents a single search and includes a column has_clicked that represents whether a user clicked or not.

This question requires us to formulaically do two things: create a metric that can analyze a problem that we face and then actually compute that metric.

Think about the data we want to display to support or refute the hypothesis. Our output metric is CTR (click-through rate). If CTR is high when search result ratings are high and low when ratings are low, the data supports our hypothesis. However, if the opposite is true (CTR is low when ratings are high), or there is no clear correlation between the two, the data does not support it.

With that structure in mind, we can then look at the results split into different search rating buckets. If we measure the CTR for queries that all have results rated at 1 and then measure CTR for queries that have results rated at lower than 2, etc., we can measure to see if the increase in rating is correlated with an increase in CTR.
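A minimal version of that bucketing, run against an in-memory SQLite database, might look like the following. The table name `search_results` is an assumption, the rows are invented, and grouping directly on the row-level rating is a simplification of the per-query bucketing described above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE search_results (
    query TEXT, position INTEGER, rating INTEGER, has_clicked INTEGER
);
INSERT INTO search_results VALUES
    ('cat', 1, 5, 1), ('cat', 2, 5, 1), ('dog', 1, 5, 0), ('dog', 2, 5, 1),
    ('car', 1, 3, 1), ('car', 2, 3, 0),
    ('tv',  1, 1, 0), ('tv',  2, 1, 0), ('pc',  1, 1, 1), ('pc',  2, 1, 0);
""")

# CTR per rating bucket: has_clicked is 0 or 1, so its average is the CTR.
ctr_by_rating = conn.execute("""
SELECT rating,
       AVG(has_clicked) AS ctr,
       COUNT(*) AS n_results
FROM search_results
GROUP BY rating
ORDER BY rating;
""").fetchall()

for bucket in ctr_by_rating:
    print(bucket)
```

Reporting the row count next to each CTR helps flag buckets that are too small to draw conclusions from.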

10. How would you help a supermarket chain determine which product categories should be prioritized in their inventory restructuring efforts?

You’re working as a Data Scientist in a local grocery chain’s data science team. The business team has decided to allocate store floor space by product category (e.g., electronics, sports and travel, food and beverages). Help the team understand which product categories to prioritize as well as answering questions such as how customer demographics affect sales, and how each city’s sales per product category differs.

Modeling and Machine Learning Case Questions

Machine learning case questions assess your ability to build models to solve business problems. These questions can range from applying machine learning to solve a specific case scenario to assessing the validity of a hypothetical existing model. The modeling case study requires a candidate to evaluate and explain a particular part of the model building process.

11. Describe how you would build a model to predict Uber ETAs after a rider requests a ride.

Common machine learning case study problems like this are designed to have you explain how you would build a model. Many times this can be scoped down to specific parts of the model building process. Examining the example above, we could break it up into:

- How would you evaluate the predictions of an Uber ETA model?

- What features would you use to predict the Uber ETA for ride requests?

Our recommended framework breaks down a modeling and machine learning case study to individual steps in order to tackle each one thoroughly. In each full modeling case study, you will want to go over:

- Data processing

- Feature Selection

- Model Selection

- Cross Validation

- Evaluation Metrics

- Testing and Roll Out
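For the evaluation metrics step, and for the narrower question of how to evaluate an ETA model's predictions, two common regression metrics are mean absolute error (MAE) and root mean squared error (RMSE). The sketch below is only an illustration: the choice of these two metrics and the sample arrival times are assumptions, not something the question prescribes.

```python
import math

def mae(actual, predicted):
    """Mean absolute error: the average size of the miss, in minutes."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root mean squared error: penalizes large misses more heavily than MAE."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))

# Made-up arrival times in minutes, purely for illustration.
actual_minutes = [5.0, 10.0, 15.0, 20.0]
predicted_minutes = [6.0, 9.0, 15.0, 24.0]

eta_mae = mae(actual_minutes, predicted_minutes)
eta_rmse = rmse(actual_minutes, predicted_minutes)
print(f"MAE = {eta_mae:.2f} min, RMSE = {eta_rmse:.2f} min")
```

In an interview it is worth saying which one you would optimize and why. RMSE punishes the occasional very late pickup more than MAE does, which may matter if a few large misses hurt rider trust more than many small ones.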

12. How would you build a model that sends bank customers a text message when fraudulent transactions are detected?

Additionally, the customer can approve or deny the transaction via text response.

Let’s start out by understanding what kind of model would need to be built. Since we are working with fraud, each transaction either is or is not fraudulent.

Hint: This problem is a binary classification problem. Given the problem scenario, what considerations do we have to think about when first building this model? What would the bank fraud data look like?

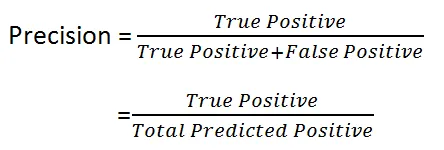

13. How would you design the inputs and outputs for a model that detects potential bombs at a border crossing?

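Additional questions. How would you test the model and measure its accuracy? Remember the equations for precision and recall:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

Because we cannot afford a high number of false negatives (a missed bomb is far costlier than a false alarm), recall should be high when assessing the model.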
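Both precision and recall can be computed directly from confusion-matrix counts, where precision is TP / (TP + FP) and recall is TP / (TP + FN). The snippet below is a small sketch: the function name `precision_recall` and the counts passed to it are invented for illustration.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from confusion-matrix counts.

    tp: true positives, fp: false positives, fn: false negatives.
    A ratio with a zero denominator is reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Made-up counts: the detector flags many harmless items (low precision)
# but misses few real threats (high recall).
precision, recall = precision_recall(tp=8, fp=32, fn=2)
print(f"precision = {precision:.2f}, recall = {recall:.2f}")
```

With these counts the detector raises many false alarms but still catches most real threats, which is usually the acceptable side of the trade-off in a safety setting.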

14. Which model would you choose to predict Airbnb booking prices: Linear regression or random forest regression?

Start by answering this question: What are the main differences between linear regression and random forest?

Random forest regression is based on the ensemble machine learning technique of bagging . The two key concepts of random forests are:

- Random sampling of training observations when building trees.

- Random subsets of features for splitting nodes.
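The two sources of randomness listed above can be illustrated without any machine learning library. This is only a sketch of the sampling steps, not a working random forest: the helper names and the feature list are hypothetical, and a real implementation would draw a fresh feature subset at every split of every tree.

```python
import random

def bootstrap_sample(rows, rng):
    """Draw len(rows) observations with replacement (the bagging step)."""
    return [rng.choice(rows) for _ in rows]

def random_feature_subset(features, k, rng):
    """Pick k distinct candidate features to consider for one split."""
    return rng.sample(features, k)

rng = random.Random(0)  # seeded so repeated runs draw the same samples
rows = list(range(10))  # stand-ins for ten training observations
features = ["location", "season", "bedrooms", "room_type", "events"]

tree_rows = bootstrap_sample(rows, rng)
split_features = random_feature_subset(features, 2, rng)
print("rows used by this tree:", tree_rows)
print("features considered at this split:", split_features)
```

Because each tree sees a different resample and each split sees a different feature subset, the trees make partly uncorrelated errors, and averaging them reduces variance.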

Random forest regressions also discretize continuous variables, since they are based on decision trees and can split categorical and continuous variables.

Linear regression, on the other hand, is the standard regression technique in which relationships are modeled using a linear predictor function, the most common example represented as y = Ax + B.

Let’s see how each model is applicable to Airbnb’s bookings. One thing we need to do in the interview is to understand more context around the problem of predicting bookings. To do so, we need to understand which features are present in our dataset.

We can assume the dataset will have features like:

- Location features.

- Seasonality.

- Number of bedrooms and bathrooms.

- Private room, shared, entire home, etc.

- External demand (conferences, festivals, sporting events).

Which model would be the best fit for this feature set?

15. Using a binary classification model that pre-approves candidates for a loan, how would you give each rejected application a rejection reason?

More context: You do not have access to the feature weights. Start by thinking about the problem like this: How would the problem change if we had ten, one thousand, or ten thousand applicants that had gone through the loan qualification program?

Pretend that we have three people: Alice, Bob, and Candace, who have all applied for a loan. Simplifying the financial lending loan model, let us assume the only features are the total number of credit cards, the dollar amount of current debt, and credit age. Here is a scenario:

Alice: 10 credit cards, 5 years of credit age, $\$20K$ in debt

Bob: 10 credit cards, 5 years of credit age, $\$15K$ in debt

Candace: 10 credit cards, 5 years of credit age, $\$10K$ in debt

If Candace is approved, we can logically point to the fact that Candace’s $\$10K$ in debt swung the model to approve her for a loan. How did we reason this out?

If the sample size analyzed was instead thousands of people who had the same number of credit cards and credit age with varying levels of debt, we could figure out the model’s average loan acceptance rate for each numerical amount of current debt. Then we could plot these on a graph to model the y-value (average loan acceptance) versus the x-value (dollar amount of current debt). These graphs are called partial dependence plots.
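A partial dependence curve can be computed against any black-box model by overwriting one feature with each grid value for every applicant and averaging the model's output. The sketch below assumes a hypothetical `toy_model` standing in for the real loan model, whose internals we cannot see. The approval rule inside it is invented purely so the example runs.

```python
def partial_dependence(model, applicants, feature, grid):
    """Average model output over all applicants for each grid value of one feature."""
    curve = []
    for value in grid:
        # Copy every applicant, replacing only the feature under study.
        modified = [{**a, feature: value} for a in applicants]
        average = sum(model(a) for a in modified) / len(modified)
        curve.append((value, average))
    return curve

def toy_model(applicant):
    """Hypothetical stand-in for the black-box model: 1 = approved, 0 = rejected."""
    return 1 if applicant["debt"] <= 12_000 and applicant["cards"] <= 10 else 0

applicants = [
    {"name": "Alice", "cards": 10, "credit_age": 5, "debt": 20_000},
    {"name": "Bob", "cards": 10, "credit_age": 5, "debt": 15_000},
    {"name": "Candace", "cards": 10, "credit_age": 5, "debt": 10_000},
]

curve = partial_dependence(toy_model, applicants, "debt", [10_000, 15_000, 20_000])
for debt, approval_rate in curve:
    print(f"debt ${debt:,}: average approval = {approval_rate:.2f}")
```

For a rejected applicant, the feature whose curve shows the largest gain in approval when moved to a more favorable value is a reasonable candidate for the rejection reason.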

Business Case Questions

In data science interviews, business case study questions task you with addressing problems as they relate to the business. You might be asked about topics like estimation and calculation, as well as applying problem-solving to a larger case. One tip: Be sure to read up on the company’s products and ventures before your interview to expose yourself to possible topics.

16. How would you estimate the average lifetime value of customers at a business that has existed for just over one year?

More context: You know that the product costs $\$100$ per month, averages 10% in monthly churn, and the average customer stays for 3.5 months.

Remember that lifetime value is defined as the predicted net revenue attributed to the entire future relationship with a customer, averaged over all customers. Therefore, $\$100$ * 3.5 = $\$350$… But is it that simple?

Because this company is so new, our average customer length (3.5 months) is biased from the short possible length of time that anyone could have been a customer (one year maximum). How would you then model out LTV knowing the churn rate and product cost?
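One common way to model this, assuming churn stays constant at 10% per month, is to treat customer lifetime as geometric: the expected number of paid months is 1 / churn. The sketch below works through that arithmetic. The constant-churn assumption and the function names are illustrative choices, not the only valid approach.

```python
def expected_lifetime_months(monthly_churn):
    """Expected paid months under constant churn: sum of (1 - c)^t for t >= 0 = 1 / c."""
    return 1.0 / monthly_churn

def expected_months_within(monthly_churn, horizon):
    """Expected paid months if a customer can be observed for at most `horizon` months."""
    return sum((1.0 - monthly_churn) ** t for t in range(horizon))

def lifetime_value(monthly_price, monthly_churn):
    """Revenue-based LTV: price per month times expected paid months."""
    return monthly_price * expected_lifetime_months(monthly_churn)

ltv = lifetime_value(100.0, 0.10)
capped_months = expected_months_within(0.10, 12)
print(f"expected lifetime: {expected_lifetime_months(0.10):.1f} months")
print(f"LTV under constant churn: ${ltv:,.0f}")
print(f"expected months if capped at one year: {capped_months:.2f}")
```

Under this assumption the expected lifetime is 10 months and LTV is about $\$1000$, well above the naive $\$350$. The capped figure shows why any average measured inside a one-year window understates true tenure: customers who are still active have not finished their lifetimes yet.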

17. How would you go about removing duplicate product names (e.g. iPhone X vs. Apple iPhone 10) in a massive database?

18. What metrics would you monitor to know if a 50% discount promotion is a good idea for a ride-sharing company?

This question has no correct answer and is rather designed to test your reasoning and communication skills related to product/business cases. First, start by stating your assumptions. What are the goals of this promotion? It is likely that the goal of the discount is to grow revenue and increase retention. A few other assumptions you might make include:

- The promotion will be applied uniformly across all users.

- The 50% discount can only be used for a single ride.

How would we be able to evaluate this pricing strategy? An A/B test between the control group (no discount) and test group (discount) would allow us to evaluate long-term revenue against the average cost of the promotion. Using these two metrics, how could we measure if the promotion is a good idea?

19. A bank wants to create a new partner card (e.g., a Whole Foods Chase credit card). How would you determine what the next partner card should be?

More context: Say you have access to all customer spending data. With this question, there are several approaches you can take. As your first step, think about the business reason for credit card partnerships: they help increase acquisition and customer retention.

One of the simplest solutions would be to sum all transactions grouped by merchants. This would identify the merchants who see the highest spending amounts. However, the one issue might be that some merchants have a high-spend value but low volume. How could we counteract this potential pitfall? Is the volume of transactions even an important factor in our credit card business? The more questions you ask, the more may spring to mind.
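The grouping step, and the high-spend versus low-volume pitfall, can be seen by tracking both total spend and transaction count per merchant. In this sketch the merchant names, the amounts, and the `(merchant, amount)` row layout are all made up for illustration.

```python
from collections import defaultdict

def spend_by_merchant(transactions):
    """Sum spend and count transactions per merchant.

    transactions: iterable of (merchant, amount) pairs (an assumed layout).
    """
    totals = defaultdict(lambda: {"total": 0.0, "count": 0})
    for merchant, amount in transactions:
        totals[merchant]["total"] += amount
        totals[merchant]["count"] += 1
    return dict(totals)

# Made-up transactions: one merchant with frequent small purchases,
# one with a single large purchase.
transactions = [
    ("GroceryCo", 50.0),
    ("GroceryCo", 70.0),
    ("GroceryCo", 30.0),
    ("JewelerCo", 900.0),
]

summary = spend_by_merchant(transactions)
ranked_by_total = sorted(summary, key=lambda m: summary[m]["total"], reverse=True)
ranked_by_count = sorted(summary, key=lambda m: summary[m]["count"], reverse=True)
print("by total spend:", ranked_by_total)
print("by transaction count:", ranked_by_count)
```

The two rankings disagree here, which is exactly the pitfall: a merchant can top the spend ranking on a handful of large purchases while another sees far more frequent use.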

20. How would you assess the value of keeping a TV show on a streaming platform like Netflix?

Say that Netflix is working on a deal to renew the streaming rights for a show like The Office, which has been on Netflix for one year. Your job is to value the benefit of keeping the show on Netflix.

Start by trying to understand the reasons why Netflix would want to renew the show. Netflix mainly has three goals for what their content should help achieve:

- Acquisition: To increase the number of subscribers.

- Retention: To increase the retention of active subscribers and keep them on as paying members.

- Revenue: To increase overall revenue.

One solution to value the benefit would be to estimate a lower and upper bound to understand the percentage of users that would be affected by The Office being removed. You could then run these percentages against your known acquisition and retention rates.

21. How would you determine which products are to be put on sale?

Let’s say you work at Amazon. It’s nearing Black Friday, and you are tasked with determining which products should be put on sale. You have access to historical pricing and purchasing data from items that have been on sale before. How would you determine what products should go on sale to best maximize profit during Black Friday?

To start with this question, aggregate data from previous years for products that have been on sale during Black Friday or similar events. You can then compare elements such as historical sales volume, inventory levels, and profit margins.

Deloitte US

Deloitte Consulting, Government & Public Services - Data Summer Scholar

Atlanta, Georgia, United States

Rosslyn, Virginia, United States

Sacramento, California, United States

Position Summary

When organizations undergo significant changes, face difficult roadblocks, seek process efficiency or identify technological opportunities, they need a trusted advisor to help them drive decisions and navigate challenges. Our Summer Scholars collaborate with our clients to deliver strategies to help them adapt to their unique challenges, opportunities, and meet their objectives. We are seeking candidates with outstanding leadership experience, strong academic performance, and excellent communication skills to join our Government and Public Services practice.

Work You’ll Do

As a Summer Scholar, you will be an integral member of a client service team, collaborating with diverse and talented team members to help solve multidimensional problems, improve performance, and generate value for our clients. You can capitalize on our cross-industry presence to find your niche and build your individual brand within the organization. This person should have strong analytical and critical thinking skills with the ability to solve complex problems and communicate findings.

While a career in consulting is dynamic and evolving, we look for people who will perform in specific areas, grow those related skills, and deliver exceptional results to our clients. We recognize that you have unique skills, experiences, and interests, so we divide the broad scope of the Summer Scholar role into skills-based profiles in order to best align each Summer Scholar with a focus area. Setting our people up for success is our highest priority. We are currently recruiting Data Summer Scholars whose skills and interests align with the below description:

Data Summer Scholar

- This Summer Scholar leverages data analytics and technology skills to enable the use of data in decision making. This Summer Scholar designs and develops cloud-based data processing pipelines, machine learning predictive models, data visualization dashboards and other data-driven systems. Majors: Data Science, Computer Science, Business Analytics, Mathematics, Statistics, and other Quantitative Fields

The Team

Our practitioners will be able to maintain the specialization they have built to date in certain areas while also learning how it connects to broader issues in the market. We are committed to continuously supporting our practitioners as they build skills in either one specific part of our business or across the business – our Portfolio is structured to facilitate this learning. Engagement teams at Deloitte drive value for our clients but also understand the importance of developing resources and contributing to the communities in which we work. We make it our business to take issue to impact, both within and beyond a client setting.

Required Qualifications

- Data Science, Computer Science, Business Analytics, Mathematics, Statistics, and other quantitative fields

- Must be legally authorized to work in the United States without the need for employer sponsorship now or at any time in the future

- Ability to obtain a U.S. Security Clearance

- Strong academic track record (3.0 cumulative GPA required, 3.4 cumulative GPA preferred).

- Ability to travel up to 50%, on average, based on the work you do and the clients and industries/sectors you serve

- Candidates must be at least 18 years of age at the time of employment

The wage range for this role takes into account the wide range of factors that are considered in making compensation decisions including but not limited to skill sets; experience and training; licensure and certifications; and other business and organizational needs. The disclosed range estimate has not been adjusted for the applicable geographic differential associated with the location at which the position may be filled. At Deloitte, it is not typical for an individual to be hired at or near the top of the range for their role and compensation decisions are dependent on the facts and circumstances of each case. A reasonable estimate of the current range is $38/hour.

Information for applicants with a need for Accommodation

https://www2.deloitte.com/us/en/pages/careers/articles/join-deloitte-assistance-for-disabled-applicants.html

How You’ll Grow

Throughout the course of the internship program, you’ll receive formal and informal mentorship and training. Our Summer Scholar training program provides a view both into the technical skills as well as critical professional behaviors, standards, and mindsets required to make the most of your internship. The program includes a variety of team-based, hands-on exercises and activities. Beyond the formal training, you will work with talented consulting professionals, granting you access to ask questions and gain valuable real world experience while working on client engagements. Explore Deloitte University, The Leadership Center.

As used in this posting, "Deloitte" means Deloitte Consulting LLP, a subsidiary of Deloitte LLP. Please see www.deloitte.com/us/about for a detailed description of the legal structure of Deloitte LLP and its subsidiaries.

All qualified applicants will receive consideration for employment without regard to race, color, religion, sex, sexual orientation, gender identity, national origin, age, disability or protected veteran status, or any other legally protected basis, in accordance with applicable law.

Requisition code: 182226

Caution against fraudulent job offers!

We have been informed of instances where jobseekers are led to believe in fictitious job opportunities with Deloitte US (“Deloitte”). In one or more such cases, false promises of actual or potential selection, or initiation or completion of the recruitment formalities appear to have been or are being made. Some jobseekers appear to have been asked to pay money to specified bank accounts of individuals or entities as a condition of their selection for a ‘job’ with Deloitte. These individuals or entities are in no way connected with Deloitte and do not represent or otherwise act on behalf of Deloitte.

We would like to clarify that:

- At Deloitte, ethics and integrity are fundamental and not negotiable.

- We are against corruption and neither offer bribes nor accept them, nor induce or permit any other party to make or receive bribes on our behalf.

- We have not authorized any party or person to collect any money from jobseekers in any form whatsoever for promises of getting jobs in Deloitte.

- We consider candidates on merit and provide an equal opportunity to eligible applicants.

- No one other than designated Deloitte personnel (e.g., a Deloitte recruiter or Deloitte hiring partner) is permitted to extend any job offer from Deloitte.

Anyone who at any time has made or makes any payment to any party in exchange for promises of job or selection for a job with Deloitte or any matter related to this (including those for ‘registration’, ‘verification’ or ‘security deposit’) or otherwise engages with any such person who has made or makes fraudulent promises or offers, does so (or has done so) entirely at their own risk. Deloitte takes no responsibility or liability for any such unauthorized or fraudulent actions or engagements. We encourage jobseekers to exercise caution.

The Apple Vision Pro may have tanked — but spatial computing is still the future, Deloitte says

The tech world couldn’t wait for the rollout of the Apple Vision Pro — but the response has been underwhelming and blasé, with users complaining about dizziness, headaches and the overall goofy feeling they get wearing it.

Reviews have also been mixed about the Meta Quest Pro, Ray-Ban Meta smart glasses and rabbit R1, while the Humane AI pin is said to be “bad at almost everything.”

All of this might make it seem like spatial computing, or the seamless convergence of physical and digital worlds, is just a sci-fi pipe dream.

Not true, argues Deloitte. Spatial computing will be the next level of interaction, the firm says in a new Dichotomies whitepaper out today.

“The idea of headsets and wearable displays has tended to be all about virtual reality — that is, we’re going to escape the place we’re in so that we might go to an altogether new place that’s better and or more exciting,” Mike Bechtel, Deloitte’s chief futurist, told VentureBeat.

But now and going forward, the mindset is shifting to, “Let’s not reject reality, let’s enrich it. Let’s paint pixels over our lived reality.”

We’re not quite there yet (but might be in five-plus years?)

Although Apple has popularized the term, “spatial computing” existed long before the Vision Pro came along. It was coined in 2003 in a thesis by MIT graduate researcher Simon Greenwold.

Deloitte identifies three components of spatial computing: physical (wearables and sensors), bridging (network infrastructure) and digital (interactive digital objects, holographs, avatars).

But based on the response to Vision Pro 1.0 and other new wearables, it’s clear the hardware is still struggling to get there. Bechtel recalled a recent interaction with a client demoing one of the new technologies; he turned to him and said, “There is no way I’m going to wear a toaster on my face to the office.”

Existing spatial computing technologies can be compared to the 8-track cartridges of the 1970s and 80s: They were also bulky and not all that user-friendly. But they did provide a gateway to traditional cassettes, then minidiscs, CDs and eventually “every song ever in your pocket all at once,” said Bechtel.

For devices to be normalized and accepted, they need to feel like they’re simplifying experiences — say, a good-looking pair of glasses or contacts removes the need for everyone to carry a phone, tablet and laptop everywhere they go.

The hardware right now can “feel like a long putt,” and there’s a discrepancy when it comes to acceptance in different segments of the workforce. White-collar technologists, for one, already have a multitude of available technologies: 4K webcams, high-fidelity microphones, giant monitors, high-connection bandwidth.

“To imagine leaving those tools for a bulky headset — it doesn’t feel like an upgrade,” said Bechtel. “It feels like a side grade or even maybe a downgrade.”

On the other hand, there’s early traction among traditional blue-collar workers in factory, energy and field service settings. They’ve been sold laptops, tablets and PDAs for 25 years, but those can all be dangerous and distracting in their work settings. As Bechtel put it, “I have to wear safety glasses anyway, and so I guess I’d rather have smart ones than dumb ones.”

Spatial computing has essential plumbing needs

The Internet isn’t just about tubes and monitors; it wouldn’t exist without transmission lines, antennas, switches and other critical infrastructure. The same goes for spatial computing, Bechtel noted — there must be a “spatial web,” that bridges the gap between physical and digital.

“The nerd word here is something called ‘sensor fusion,’ which is the idea of, you’ve got all this info, but it’s all rows in a spreadsheet until thoughtful techies roll it into a fabric that makes sense,” said Bechtel.

Some of the critical technology components that will make spatial computing a reality include lidar, micro-LEDs, computer vision, advanced motion sensors, accelerometers, heat sensors and smart IoT devices (among others).

GPS and spatial mapping software will also be important to offer instantaneous mapping of public spaces and physical objects, as will spatial cameras that record in three dimensions, audio capabilities that can simulate realistic soundscapes and haptic feedback (physical stimuli) through gloves and other clothing.

Bechtel pointed out that early technologies such as Google Glass have felt like a computer screen floating in space. But in the future, we’ll have transparent screens that allow us to look “through the glass.”

Eventually, we will have a “flotilla of sensor technology and software that can allow the digital to overlay the physical in a way that’s increasingly believable and seamless.”

Over time, digital experiences will continue to become multisensory, someday replicating the five senses and even introducing a “sixth sense” through neurotechnology. Deloitte also predicts that we will interact with public digital objects and hyper-personalized ads, and will be able to “edit” reality by cutting out certain people or objects.

Enabling real-time digital twinning

Digital twins have been around for a little over two decades now (data scientist Michael Grieves introduced the first model in 2002). The original concept is creating a visual representation of a physical object (such as a human body or jet engine).

“Let’s have that in virtual, so we can study and look at it, rather than have to cut open the human or open up the jet engine,” Bechtel explained.

But, he pointed out, there’s a big difference between a true digital twin and just a digital copy. A copy is a model of a jet engine that allows scientists to see how it works (or at least how it worked three months ago when data was captured).

A real digital twin, by contrast, is actively in a “state of twinning.” For instance, a virtual jet engine is getting up-to-the-second, real-time data from the physical world, and vice versa. With wearables and spatial computing, the two can be overlaid, allowing for much more utility and enabling a multitude of experimental use cases.

“The idea is that they’re entangled and intertwined,” said Bechtel.

The good and bad of emerging technologies

The notion of the Dichotomies series, Bechtel explained, is to dive into what could go right and wrong with certain technologies, providing nuanced positives and negatives. In the case of spatial computing, the biggest negatives revolve around privacy and security, he said. “This could be a vector for unintentionally invasive surveillance and monitoring.”

For instance, a manager looking to ensure workplace productivity at a factory has to be able to punch into her subordinates’ smart glasses to make sure they’re doing the right things at the right time. But this could mean she unintentionally overhears them talking about a sensitive personal or family matter.

In some cases, “efficiency and optimization is at odds with empathy and personal respect,” said Bechtel. While there are malicious actors, “the more pernicious threat is just scaled mindlessness, like oops, accidental surveilling.”

Another concern is reality distortion, such as when smart contacts can render ever more believable deepfakes. This can muddy what’s real and what’s imagined.

On the flip side, though, the “good” of spatial computing is its ability to revolutionize accessibility, efficiency and communication and enhance personalization, said Bechtel.

“There’s an unhelpful tendency to canonize or demonize emerging tech, this feeling that for it to be worth our attention, it either needs to be a 10X hero or a 10X villain,” he said. “You’re limited only by your imagination, but just so long as you don’t treat it as something to be feared or revered.”

Study links organization of neurotypical brains to genes involved in autism and schizophrenia

Three patterns of gene expression each align with distinct features of known neurobiology: C1 aligns with functional connectivity strength, C2 with brain oscillations in the theta-band range, and C3 with adolescent brain plasticity. Below, we show heatmaps (corrected for multiple comparisons) of the convergent associations between the three patterns C1-C3 and three mental health conditions – autism spectrum disorder (ASD), major depressive disorder (MDD), and schizophrenia (SCZ) – across three previously unrelated methods of analysis (cortical shrinkage from neuroimaging, differential gene expression from postmortem RNA sequencing, and genetic risk from genome-wide association studies), revealing autism to be consistently associated with C1 and C2, and schizophrenia to be consistently associated with C3. Credit: Nature Neuroscience (2024). DOI: 10.1038/s41593-024-01624-4.

The organization of the human brain develops over time, following the coordinated expression of thousands of genes. Linking the development of healthy brain organization to genes involved in mental health conditions such as autism and schizophrenia could help to reveal the biological causes of these disorders.

Researchers at the University of Cambridge and other institutes worldwide recently carried out a study that linked gene expression in healthy brains to the imaging, transcriptomics and genetics of autism spectrum disorder and schizophrenia. Their paper, published in Nature Neuroscience, unveiled three distinct spatial patterns of cortical gene expression, each with specific associations to autism and schizophrenia.

“Prior work had shown that all human brains have the same primary spatial pattern of gene expression (C1) reflecting the hierarchy of connections between neurons,” Richard Dear, co-author of the paper, told Medical Xpress. “Our hypothesis was that there is probably more than one pattern organizing how the twenty-thousand human genes are expressed across our brains.”

The primary objective of the recent study by Dear and his colleagues was to uncover new spatial patterns of gene expression. They hoped that these new patterns would shed light on so-called “transcriptional programs,” the biological mechanisms through which the human brain develops both in health and disease.

“The key challenge for this study was the limited data available,” Dear said. “The Allen Human Brain Atlas remains the only gene expression dataset with high spatial resolution across the brain, and it was collected from only six healthy donors. We therefore had to find a way to identify patterns in these limited data that we were confident represent general transcriptional programs shared across all human brains.”

As part of their study, the researchers ran various computational analyses to validate their results, including a permutation test for robustness and validation in three distinct types of independent data. They also searched for associations between the new transcriptional programs and previously published data related to brain organization, development, gene expression, and genetic variation associated with brain function in both health and disease.

“What was most striking was how each new analysis that we performed converged on the same consistent story,” Dear explained. “The second pattern of gene expression (C2) relates to cognitive metabolism and autism, while the third pattern (C3) relates to brain plasticity in adolescence and schizophrenia. Critically, both patterns were identified and validated entirely in brains from neurotypical donors.”

Dear and his collaborators are the first to link neurotypical brain gene expression to autism and schizophrenia across three common types of prior results (i.e., case-control neuroimaging, differential gene expression and genome-wide association studies). Their results suggest that data collected using these distinct techniques, which previously appeared to be unrelated, can in fact be traced back to the same transcriptional programs that guide the development of healthy human brains.

“The next step for us will be to dive deeper into the biology of the three patterns to understand them at a more mechanistic level, leveraging recently published single-nucleus RNA sequencing data,” Dear added. “For example, we hope to discover the key genes or transcription factors guiding the expression of the C1–C3 patterns early in development across different cell types. We also hope that these three patterns and the optimized analysis code we developed will be a resource for other scientists seeking to understand the genetic underpinnings of the brain’s spatial organization.”

More information: Richard Dear et al, Cortical gene expression architecture links healthy neurodevelopment to the imaging, transcriptomics and genetics of autism and schizophrenia, Nature Neuroscience (2024). DOI: 10.1038/s41593-024-01624-4

© 2024 Science X Network

Citation : Study links organization of neurotypical brains to genes involved in autism and schizophrenia (2024, May 11) retrieved 11 May 2024 from https://medicalxpress.com/news/2024-05-links-neurotypical-brains-genes-involved.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

Global Strategy, Analytics and M&A Leader | Consulting. [email protected]. +1 617 831 4128.

Deloitte has several data science positions across its various practice areas, including - Data Scientist, Advanced Analytics Consultant, ML Engineer and Analytics Manager. These positions can vary in terms of specific responsibilities and requirements depending on the industry, practice area, and level of seniority. ... Business Case Study: ...

Nestlé USA client spotlight. Deloitte and Nestlé collaborated on a modernization program to develop and maintain a data lake in the cloud, tearing down siloes and providing reusable data assets that can be used by all business functions. Print. Nestlé creates centralized data lake. 463 KB PDF.

So, I wanted to share my experience with the Deloitte data science department. It's quite the ride ... technical assessment, a case study, face-to-face cognitive and analytical test (honestly ...

93 Deloitte Data Science interview questions and 77 interview reviews. Free interview details posted anonymously by Deloitte interview candidates. ... Case study followed by technical interviewing. Also had an HR round. Another technical round. Questions were short, lengthy. Some involved explanations.

Costi Perricos. Global Artificial Intelligence & Data Lead Partner. +44 (0)20 7007 8206. Harness the power of analytics, automation, and AI to implement strategy and technology that will balance speed, cost, and quality to deliver business value.

Pivoting from product seller to service provider. Find out how Deloitte is working with Dell in its transformation from a product seller to a services and solutions provider. A range of case studies that explore how Deloitte creates an unprecedented impact using teamwork, cutting-edge technology and strategic thinking.

10 Deloitte Data Science Consultant interview questions and 10 interview reviews. ... Second round - Case Study on a machine learning approach , third round - Technical mostly based on the project , fourth round - partner interview. ... Technical/Case is all about making assumptions and decomposing the problem effectively (DS or otherwise ...

Learn how Deloitte helps clients around the globe solve their biggest challenges, ranging from digital transformation to climate & sustainability initiatives. Real Connections. Real Impact. As the world rapidly evolves, we help organizations successfully navigate their unique business challenges. Explore these stories that illustrate how we ...

The average base salary for a Data Scientist at Deloitte is $112,463, based on 43 data points. Adjusting the average for more recent salary data points, the average recency-weighted base salary is $112,569. The estimated average total compensation is $117,445, based on 33 data points.

Step 1: Clarify. Clarifying is used to gather more information. More often than not, these case studies are designed to be confusing and vague. There will be unorganized data intentionally supplemented with extraneous or omitted information, so it is the candidate's responsibility to dig deeper, filter out bad information, and fill gaps.

Home. Connections that matter. See how we elevate the human experience for our clients. Ongoing disruption and heightened uncertainty are testing our humanity with the hope of elevating it. We are in the midst of a global pandemic, economic turbulence, and an overdue reckoning with systemic racism. These crises call into question what we value ...

Familiar-Half2517: This. I do case interviews and case prep - the candidates I meet with are VERY well prepared and know how to analyze a case. You do need to ask clarifying questions. The cases I have worked through are always missing a key piece of data you need to formulate the correct response. Definitely prep.

He asked a few questions about things on my resume to make sure I wasn't bs'ing and then some conceptual questions about how to do things in Python and SQL. The hardest part is that he goes through a case study and you have to talk your way through your thought process. There's examples on the Deloitte website about these case studies ...

This Summer Scholar designs and develops cloud-based data processing pipelines, machine learning predictive models, data visualization dashboards and other data driven systems. Majors: Data Science, Computer Science, Business Analytics, Mathematics, Statistics, and other Quantitative Fields. The Team. Our practitioners will be able to maintain ...

Deloitte identifies three components of spatial computing: physical (wearables and sensors), bridging (network infrastructure) and digital (interactive digital objects, holographs, avatars).

Dear and his collaborators are the first to link neurotypical brain gene expression to autism and schizophrenia across three common types of prior results (i.e., case-control neuroimaging, differential gene expression, and genome-wide association studies). Their results suggest that data collected using these distinct techniques, which previously ...