Qualitative case study data analysis: an example from practice

Affiliation: School of Nursing and Midwifery, National University of Ireland, Galway, Republic of Ireland.

- PMID: 25976531

- DOI: 10.7748/nr.22.5.8.e1307

Aim: To illustrate an approach to data analysis in qualitative case study methodology.

Background: There is often little detail in case study research about how data were analysed. However, it is important that comprehensive analysis procedures are used because there are often large sets of data from multiple sources of evidence. Furthermore, the ability to describe in detail how the analysis was conducted ensures rigour in reporting qualitative research.

Data sources: The research example used is a multiple case study that explored the role of the clinical skills laboratory in preparing students for the real world of practice. Data analysis was conducted using a framework guided by the four stages of analysis outlined by Morse (1994): comprehending, synthesising, theorising and recontextualising. The specific strategies for analysis in these stages centred on the work of Miles and Huberman (1994), which has been successfully used in case study research. The data were managed using NVivo software.

Review methods: Literature examining qualitative data analysis was reviewed and strategies illustrated by the case study example provided.

Discussion: Each stage of the analysis framework is described with illustration from the research example for the purpose of highlighting the benefits of a systematic approach to handling large data sets from multiple sources.

Conclusion: By providing an example of how each stage of the analysis was conducted, it is hoped that researchers will be able to consider the benefits of such an approach to their own case study analysis.

Implications for research/practice: This paper illustrates specific strategies that can be employed when conducting data analysis in case study research and other qualitative research designs.

Keywords: Case study data analysis; case study research methodology; clinical skills research; qualitative case study methodology; qualitative data analysis; qualitative research.

- Case-Control Studies*

- Data Interpretation, Statistical*

- Nursing Research / methods*

- Qualitative Research*

- Research Design


Qualitative Data Analysis: Step-by-Step Guide (Manual vs. Automatic)

When we conduct qualitative research, need to explain changes in metrics, or want to understand people's opinions, we turn to qualitative data. Qualitative data is typically generated through:

- Interview transcripts

- Surveys with open-ended questions

- Contact center transcripts

- Texts and documents

- Audio and video recordings

- Observational notes

Compared to quantitative data, which captures structured information, qualitative data is unstructured and has more depth. It can answer our questions, help formulate hypotheses and build understanding.

It's important to understand the differences between quantitative data and qualitative data. Unfortunately, analyzing qualitative data is difficult. While tools like Excel, Tableau and Power BI crunch and visualize quantitative data with ease, there are a limited number of mainstream tools for analyzing qualitative data. The majority of qualitative data analysis still happens manually.

That said, two new trends are changing this. First, there have been advances in natural language processing (NLP), the field focused on understanding human language. Second, there has been an explosion of user-friendly software designed for both researchers and businesses. Both help automate the qualitative data analysis process.

In this post we want to teach you how to conduct a successful qualitative data analysis. There are two primary approaches to qualitative data analysis: manual and automatic. We’ll guide you through the steps of a manual analysis, look at what each step involves, and show how software solutions powered by NLP can automate the process.

More businesses are switching to fully-automated analysis of qualitative customer data because it is cheaper, faster, and just as accurate. Primarily, businesses purchase subscriptions to feedback analytics platforms so that they can understand customer pain points and sentiment.

We’ll take you through 5 steps to conduct a successful qualitative data analysis. Within each step we will highlight the key differences between the manual and automated approaches. Here's an overview of the steps:

The 5 steps to doing qualitative data analysis

- Gathering and collecting your qualitative data

- Connecting and organizing your qualitative data

- Coding your qualitative data

- Analyzing the qualitative data for insights

- Reporting on the insights derived from your analysis

What is Qualitative Data Analysis?

Qualitative data analysis is a process of gathering, structuring and interpreting qualitative data to understand what it represents.

Qualitative data is non-numerical and unstructured. Qualitative data generally refers to text, such as open-ended responses to survey questions or user interviews, but also includes audio, photos and video.

Businesses often perform qualitative data analysis on customer feedback. And within this context, qualitative data generally refers to verbatim text data collected from sources such as reviews, complaints, chat messages, support centre interactions, customer interviews, case notes or social media comments.

How is qualitative data analysis different from quantitative data analysis?

Understanding the differences between quantitative & qualitative data is important. When it comes to analyzing data, Qualitative Data Analysis serves a very different role to Quantitative Data Analysis. But what sets them apart?

Qualitative Data Analysis dives into the stories hidden in non-numerical data such as interviews, open-ended survey answers, or notes from observations. It uncovers the ‘whys’ and ‘hows’, giving a deep understanding of people’s experiences and emotions.

Quantitative Data Analysis on the other hand deals with numerical data, using statistics to measure differences, identify preferred options, and pinpoint root causes of issues. It steps back to address questions like "how many" or "what percentage" to offer broad insights we can apply to larger groups.

In short, Qualitative Data Analysis is like a microscope, helping us understand specific detail, while Quantitative Data Analysis is like a telescope, giving us a broader perspective. Both are important, and they work together to decode data for different objectives.

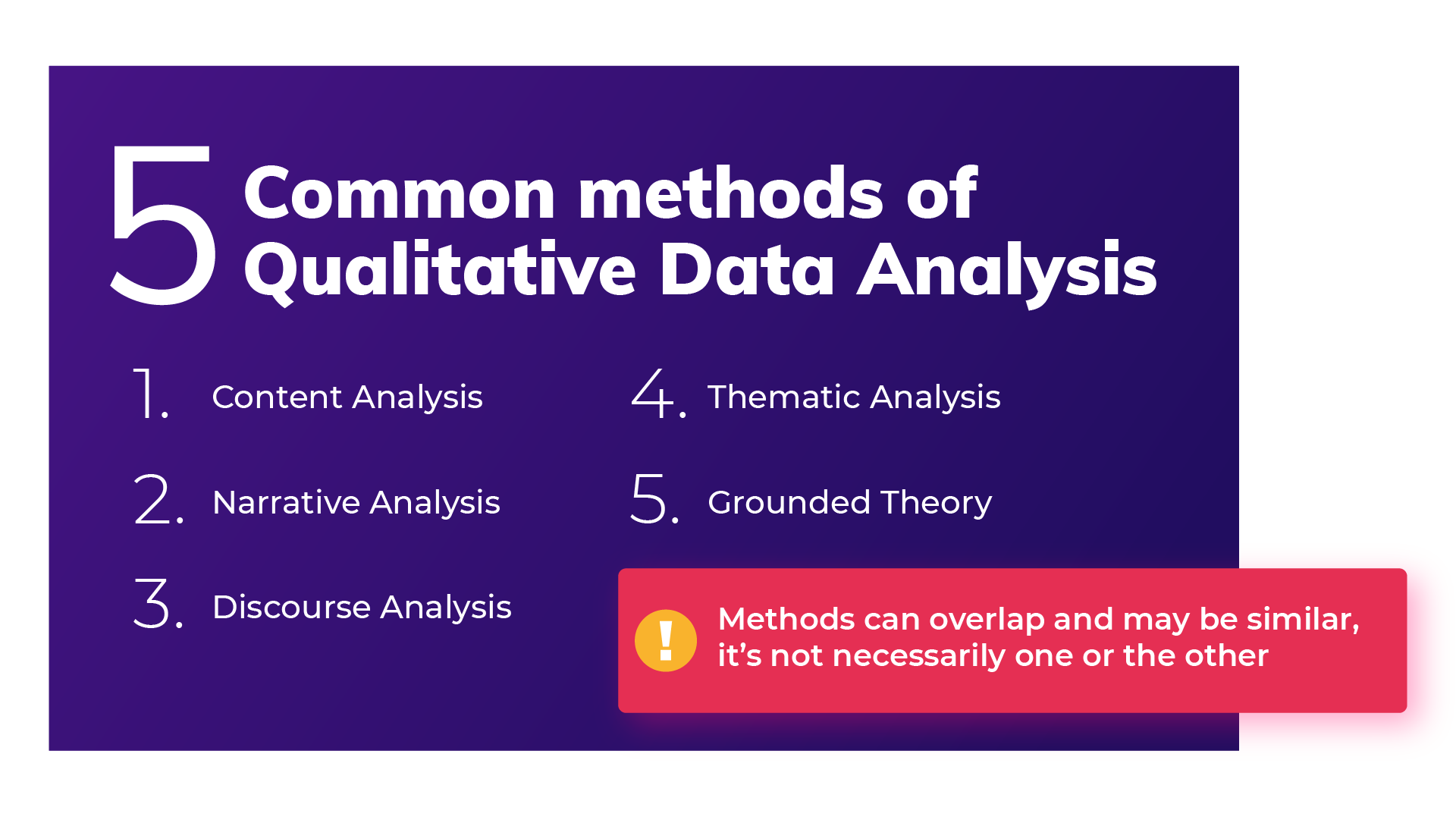

Qualitative Data Analysis methods

Once all the data has been captured, there are a variety of analysis techniques available and the choice is determined by your specific research objectives and the kind of data you’ve gathered. Common qualitative data analysis methods include:

Content Analysis

This is a popular approach to qualitative data analysis, and other techniques, including thematic analysis, can fit within its broad scope. Content analysis identifies the patterns that emerge from text by grouping content into words, concepts, and themes. It is also useful for quantifying the relationships between the grouped content. The Columbia School of Public Health has a detailed breakdown of content analysis.
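To make the "grouping and quantifying" idea concrete, here is a minimal sketch of concept counting in Python. The concept dictionary, its word lists and the sample documents are hypothetical; a real content analysis would build and validate its own coding scheme. Each document is counted at most once per concept, so the result reads as "how many documents mention this concept".

```python
import re
from collections import Counter

# Hypothetical concept dictionary: each concept groups several surface words.
CONCEPTS = {
    "price": {"price", "cost", "expensive", "cheap"},
    "speed": {"slow", "fast", "quick", "lag"},
    "support": {"support", "agent", "help"},
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def count_concepts(documents: list[str]) -> Counter:
    """Count how many documents mention each concept at least once."""
    counts: Counter = Counter()
    for doc in documents:
        words = set(tokenize(doc))
        for concept, vocabulary in CONCEPTS.items():
            if words & vocabulary:  # any overlap between the doc and the concept's words
                counts[concept] += 1
    return counts


docs = [
    "The app is too expensive and support was slow.",
    "Great price, quick delivery.",
    "The agent could not help me.",
]
print(count_concepts(docs))
```

Counting documents rather than raw word occurrences keeps one long, repetitive response from inflating a concept's weight.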

Narrative Analysis

Narrative analysis focuses on the stories people tell and the language they use to make sense of them. It is particularly useful in qualitative research methods where customer stories are used to get a deep understanding of customers’ perspectives on a specific issue. A narrative analysis might enable us to summarize the outcomes of a focused case study.

Discourse Analysis

Discourse analysis is used to get a thorough understanding of the political, cultural and power dynamics that exist in specific situations. The focus of discourse analysis here is on the way people express themselves in different social contexts. Discourse analysis is commonly used by brand strategists who hope to understand why a group of people feel the way they do about a brand or product.

Thematic Analysis

Thematic analysis is used to deduce the meaning behind the words people use. This is accomplished by discovering repeating themes in text. These meaningful themes reveal key insights into data and can be quantified, particularly when paired with sentiment analysis. Often, the outcome of thematic analysis is a code frame that captures themes in terms of codes, also called categories, so the process of thematic analysis is also referred to as “coding”. A common use case for thematic analysis in companies is the analysis of customer feedback.

Grounded Theory

Grounded theory is a useful approach when little is known about a subject. It starts by formulating a theory around a single data case. Because the analysis is based on actual data rather than on speculation, the theory is “grounded”. Additional cases can then be examined to see whether they are relevant and can add to the original theory.

Challenges of Qualitative Data Analysis

While Qualitative Data Analysis offers rich insights, it comes with its challenges. Each QDA method has its own hurdles. Let’s take a look at the challenges researchers and analysts might face, depending on the chosen method.

- Time and Effort (Narrative Analysis): Narrative analysis, which focuses on personal stories, demands patience. Sifting through lengthy narratives to find meaningful insights can be time-consuming and requires dedicated effort.

- Being Objective (Grounded Theory): Grounded theory, which builds theories from data, faces the challenge of personal bias. Staying objective while interpreting data is crucial to ensure conclusions are rooted in the data itself.

- Complexity (Thematic Analysis): Thematic analysis involves identifying themes within data, a process that can be intricate. Categorizing and understanding themes can be complex, especially when each piece of data varies in context and structure. Thematic Analysis software can simplify this process.

- Generalizing Findings (Narrative Analysis): Narrative analysis, dealing with individual stories, makes drawing broad conclusions challenging. Extending findings from a single narrative to a broader context requires careful consideration.

- Managing Data (Thematic Analysis): Thematic analysis involves organizing and managing vast amounts of unstructured data, like interview transcripts. Managing this can be a hefty task, requiring effective data management strategies.

- Skill Level (Grounded Theory): Grounded theory demands specific skills to build theories from the ground up. Finding or training analysts with these skills poses a challenge, requiring investment in building expertise.

Benefits of qualitative data analysis

Qualitative Data Analysis (QDA) is like a versatile toolkit, offering a tailored approach to understanding your data. The benefits it offers are as diverse as the methods. Let’s explore why choosing the right method matters.

- Tailored Methods for Specific Needs: QDA isn't one-size-fits-all. Depending on your research objectives and the type of data at hand, different methods offer unique benefits. If you want emotive customer stories, narrative analysis paints a strong picture. When you want to explain a score, thematic analysis reveals insightful patterns.

- Flexibility with Thematic Analysis: Thematic analysis is like a chameleon in the toolkit of QDA. It adapts well to different types of data and research objectives, making it a top choice for many qualitative analyses.

- Deeper Understanding, Better Products: QDA helps you dive into people's thoughts and feelings. This deep understanding helps you build products and services that truly match what people want, ensuring satisfied customers.

- Finding the Unexpected: Qualitative data often reveals surprises that quantitative data misses. QDA offers new ideas and perspectives, surfacing insights we might otherwise overlook.

- Building Effective Strategies: Insights from QDA are like strategic guides. They help businesses craft plans that match people’s desires.

- Creating Genuine Connections: Understanding people’s experiences lets businesses connect on a real level. This genuine connection helps build trust and loyalty, priceless for any business.

How to do Qualitative Data Analysis: 5 steps

Now we are going to show how you can do your own qualitative data analysis. We will guide you through this process step by step. As mentioned earlier, you will learn how to do qualitative data analysis manually, and also automatically using modern qualitative data and thematic analysis software.

To get the best value from the research and analysis process, it’s important to be very clear about the nature and scope of the question being researched. This will help you select the data collection channels that are most likely to help you answer your question.

Depending on whether you are a business looking to understand customer sentiment or an academic surveying a school, your approach to qualitative data analysis will differ.

Once you’re clear, there’s a sequence to follow. And, though there are differences in the manual and automatic approaches, the process steps are mostly the same.

The use case for our step-by-step guide is a company that collects customer feedback data and analyzes it in order to improve customer experience. By analyzing the feedback, the company derives insights about its business and its customers. You can follow these same steps regardless of the nature of your research. Let’s get started.

Step 1: Gather your qualitative data and conduct research

The first step of qualitative research is data collection. Put simply, data collection is gathering all of your data for analysis. Often, the qualitative data you need is spread across various sources.

Classic methods of gathering qualitative data

Most companies use traditional methods for gathering qualitative data: conducting interviews with research participants, running surveys, and running focus groups. This data is typically stored in documents, CRMs, databases and knowledge bases. It’s important to examine which data is available and needs to be included in your research project, based on its scope.

Using your existing qualitative feedback

As it becomes easier for customers to engage across a range of different channels, companies are gathering increasingly large amounts of both solicited and unsolicited qualitative feedback.

Most organizations have now invested in Voice of Customer programs, support ticketing systems, chatbot and support conversations, emails and even customer Slack chats.

These new channels provide companies with new ways of getting feedback, and also allow the collection of unstructured feedback data at scale.

The great thing about this data is that it contains a wealth of valuable insights and that it’s already there! When you have a new question about user behavior or your customers, you don’t need to create a new research study or set up a focus group. You can find most answers in the data you already have.

Typically, this data is stored in third-party solutions or a central database, but there are ways to export it or connect to a feedback analysis solution through integrations or an API.

Utilize untapped qualitative data channels

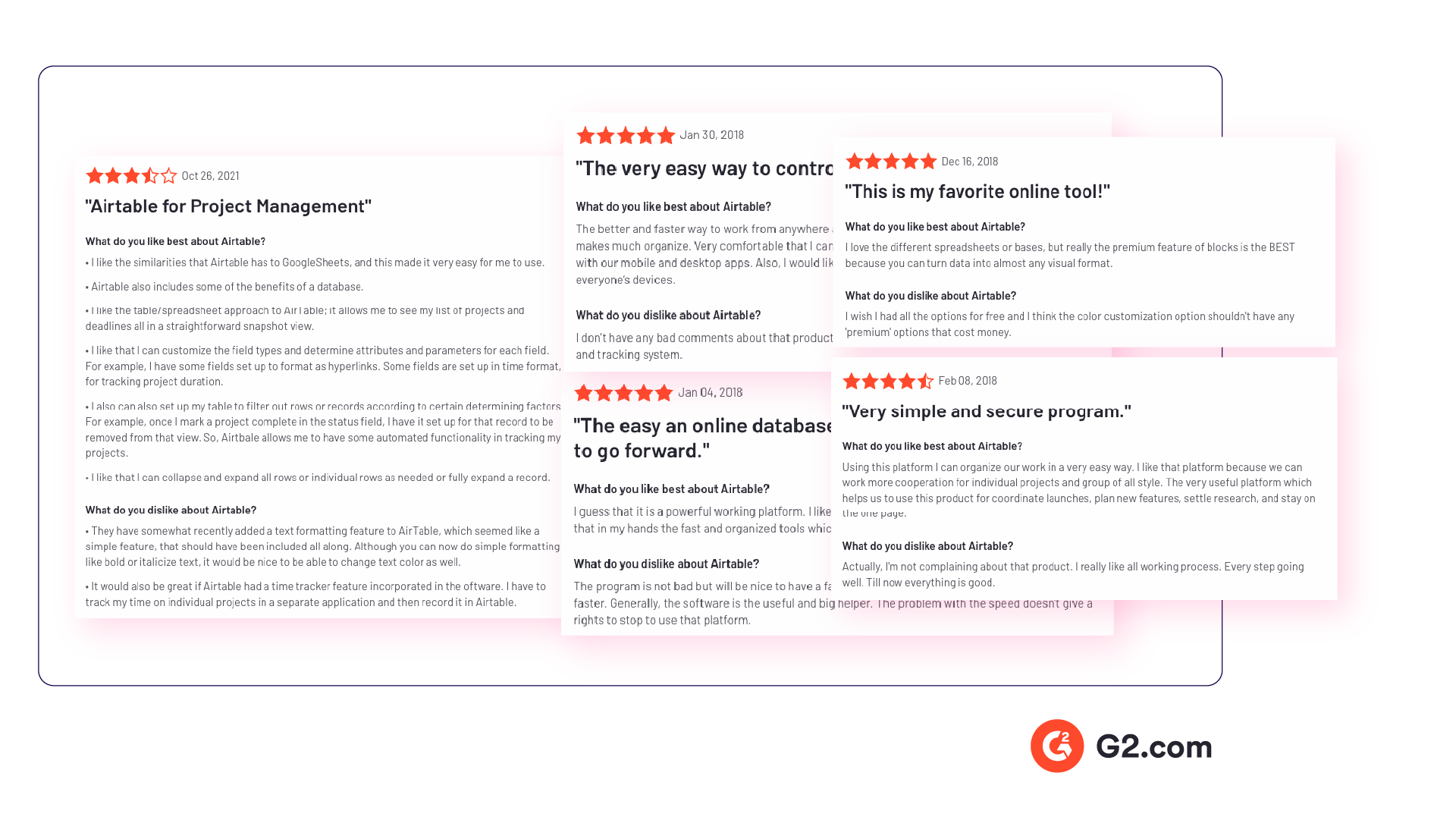

There are many online qualitative data sources you may not have considered. For example, you can find useful qualitative data in social media channels like Twitter or Facebook. Online forums, review sites, and online communities such as Discourse or Reddit also contain valuable data about your customers, or research questions.

If you are considering a qualitative benchmark analysis against competitors, the internet is your best friend, and review analysis is a great place to start. Gathering feedback from competitor reviews on sites like Trustpilot, G2, Capterra, Better Business Bureau or app stores is a great way to benchmark against competitors.

Customer feedback analysis software often has integrations into social media and review sites, or you could use a solution like DataMiner to scrape the reviews.

Step 2: Connect & organize all your qualitative data

Now you have all this qualitative data, but there’s a problem: the data is unstructured. Before feedback can be analyzed and assigned any value, it needs to be organized in a single place. Why is this important? Consistency!

If all data is easily accessible in one place and analyzed in a consistent manner, you will have an easier time summarizing and making decisions based on this data.

The manual approach to organizing your data

The classic method of structuring qualitative data is to put all the raw data you’ve gathered into a spreadsheet.

Typically, research and support teams would share large Excel sheets and different business units would make sense of the qualitative feedback data on their own. Each team collects and organizes the data in a way that best suits them, which means the feedback tends to be kept in separate silos.

An alternative and more robust solution is to store feedback in a central database, like Snowflake or Amazon Redshift.

Keep in mind that when you organize your data in this way, you are often preparing it to be imported into another software tool. If you go the database route, you would need to use an API to push the feedback into third-party software.
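Whichever destination you choose, the core task is mapping every source onto one consistent set of columns. The sketch below, using only Python's standard `csv` module, shows that step under stated assumptions: the two CSV exports, their column names and the shared schema (`id`, `text`, `date`, `source`) are all hypothetical stand-ins for your real survey and support-ticket exports.

```python
import csv
import io

# Hypothetical exports from two sources, each with its own column names.
SURVEY_CSV = """respondent,comment,submitted
r1,Checkout kept failing,2024-01-05
r2,Love the new dashboard,2024-01-07
"""
TICKETS_CSV = """ticket_id,body,created_at
t9,Cannot reset my password,2024-01-06
"""

# Map each source's columns onto one shared schema: id, text, date (+ source tag).
COLUMN_MAPS = {
    "survey": {"id": "respondent", "text": "comment", "date": "submitted"},
    "support": {"id": "ticket_id", "text": "body", "date": "created_at"},
}


def normalize(source: str, raw_csv: str) -> list[dict]:
    """Rename a source's columns to the shared schema and tag each row with its source."""
    mapping = COLUMN_MAPS[source]
    rows = []
    for record in csv.DictReader(io.StringIO(raw_csv)):
        row = {field: record[column].strip() for field, column in mapping.items()}
        row["source"] = source
        rows.append(row)
    return rows


feedback = normalize("survey", SURVEY_CSV) + normalize("support", TICKETS_CSV)
feedback.sort(key=lambda row: row["date"])  # ISO-8601 dates sort correctly as strings

# Write one consolidated CSV that a spreadsheet, CAQDAS tool or analytics platform can import.
out = io.StringIO()
writer = csv.DictWriter(out, fieldnames=["id", "text", "date", "source"])
writer.writeheader()
writer.writerows(feedback)
print(out.getvalue())
```

Keeping a `source` column preserves where each comment came from, which becomes useful later when you compare codes across channels.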

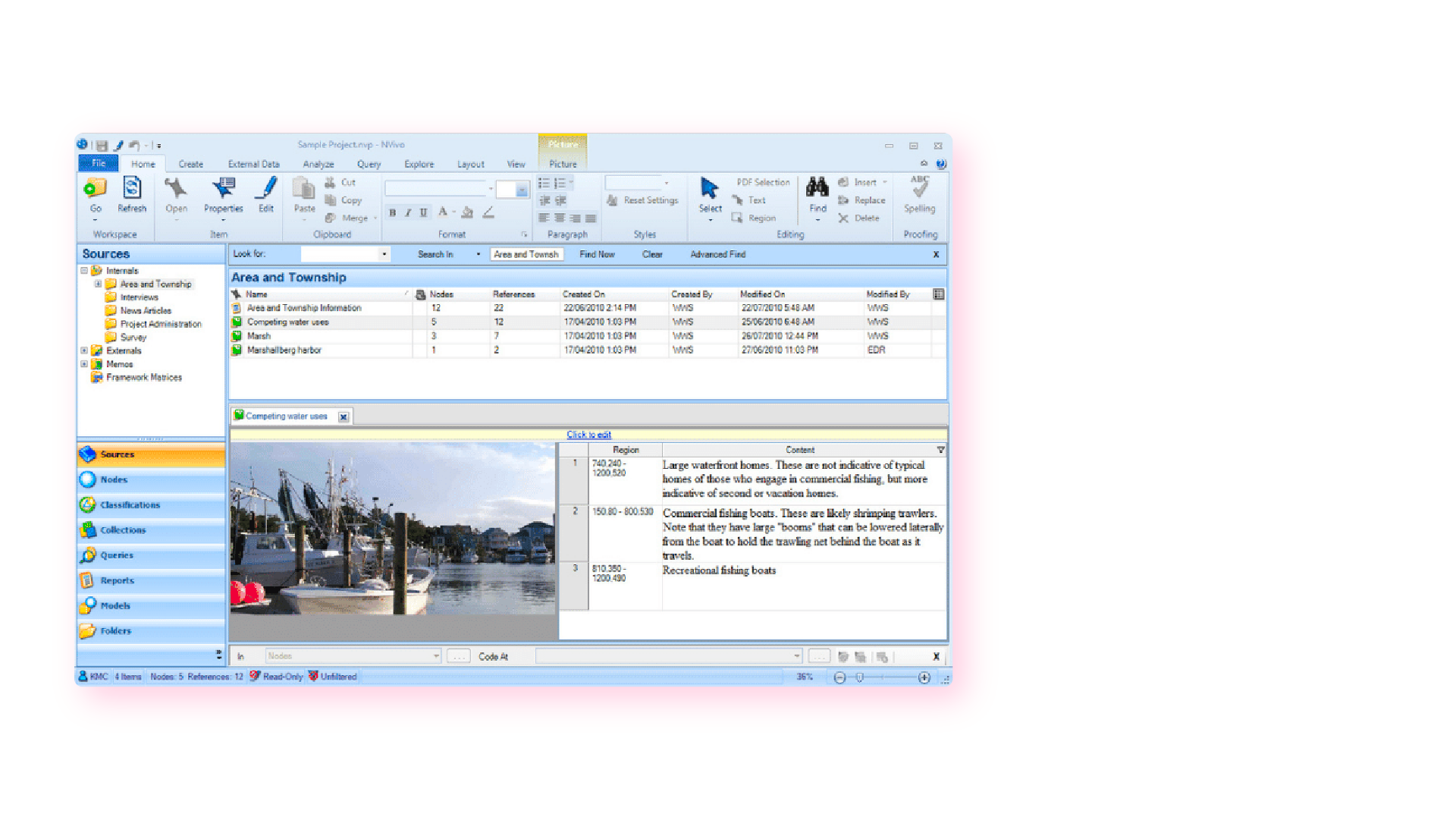

Computer-assisted qualitative data analysis software (CAQDAS)

Traditionally within the manual analysis approach (but not always), qualitative data is imported into CAQDAS software for coding.

In the early 2000s, CAQDAS products such as ATLAS.ti, NVivo and MAXQDA became popular and were eagerly adopted by researchers to assist with organizing and coding data.

The benefits of using computer-assisted qualitative data analysis software:

- Assists in the organizing of your data

- Opens you up to exploring different interpretations of your data analysis

- Allows you to share your dataset more easily and supports group collaboration (allowing for secondary analysis)

However, you still need to code the data, uncover the themes and do the analysis yourself. Therefore it is still a manual approach.

Organizing your qualitative data in a feedback repository

Another solution to organizing your qualitative data is to upload it into a feedback repository, where it can be unified with your other data and easily searched and tagged. A number of software solutions act as a central repository for your qualitative research data. Here are a couple of solutions you could investigate:

- Dovetail: Dovetail is a research repository with a focus on video and audio transcriptions. You can tag your transcriptions within the platform for theme analysis. You can also upload your other qualitative data such as research reports, survey responses, support conversations, and customer interviews. Dovetail acts as a single, searchable repository and makes it easier to collaborate with other people on your qualitative research.

- EnjoyHQ: EnjoyHQ is another research repository with similar functionality to Dovetail. It boasts a more sophisticated search engine, but it has a higher starting subscription cost.

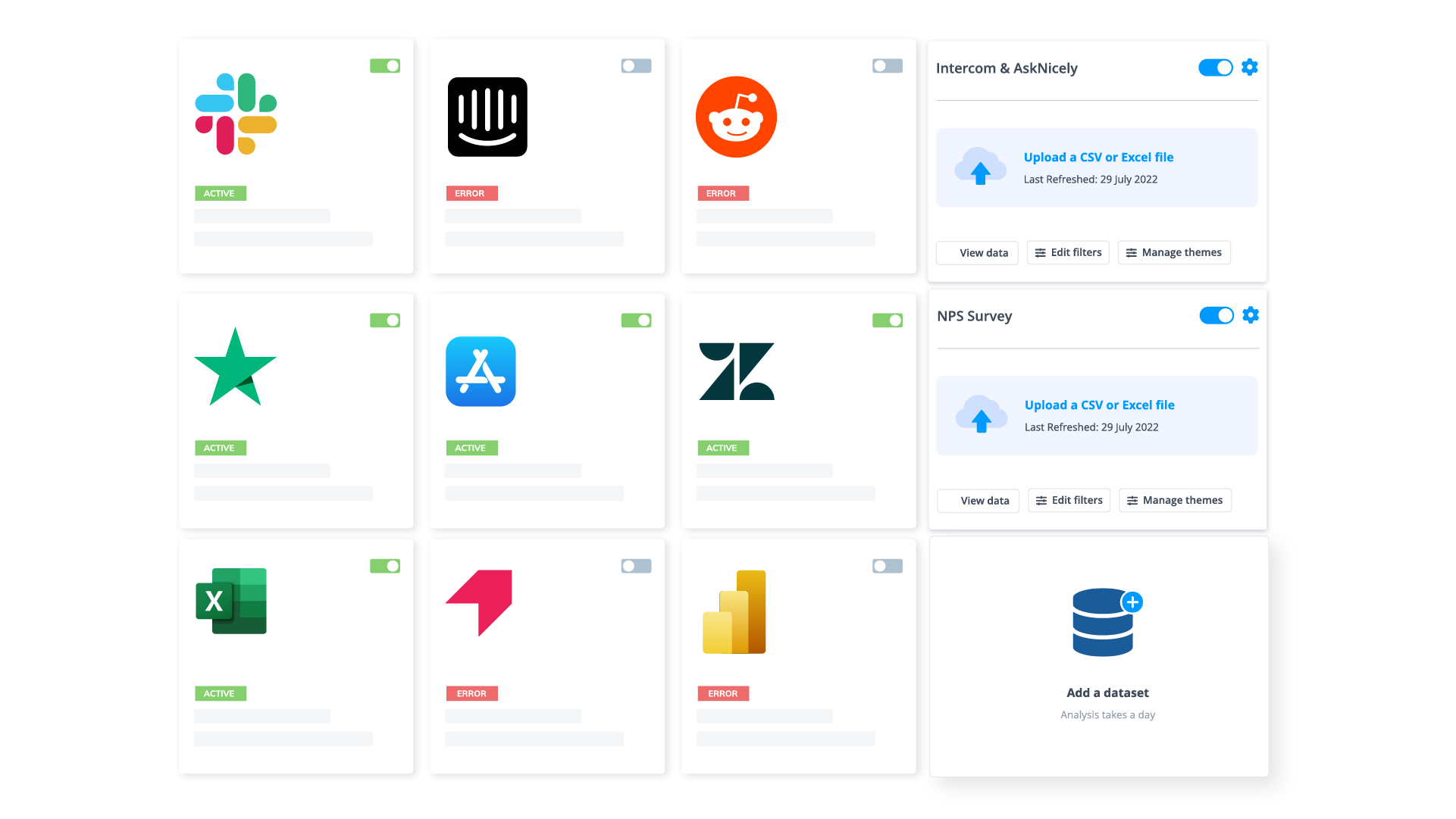

Organizing your qualitative data in a feedback analytics platform

If you have a lot of qualitative customer or employee feedback, from the likes of customer surveys or employee surveys, you will benefit from a feedback analytics platform. A feedback analytics platform is software that automates both sentiment analysis and thematic analysis. Companies use the integrations offered by these platforms to tap directly into their qualitative data sources (review sites, social media, survey responses, etc.). The collected data is then organized and analyzed consistently within the platform.

If you have data prepared in a spreadsheet, it can also be imported into feedback analytics platforms.

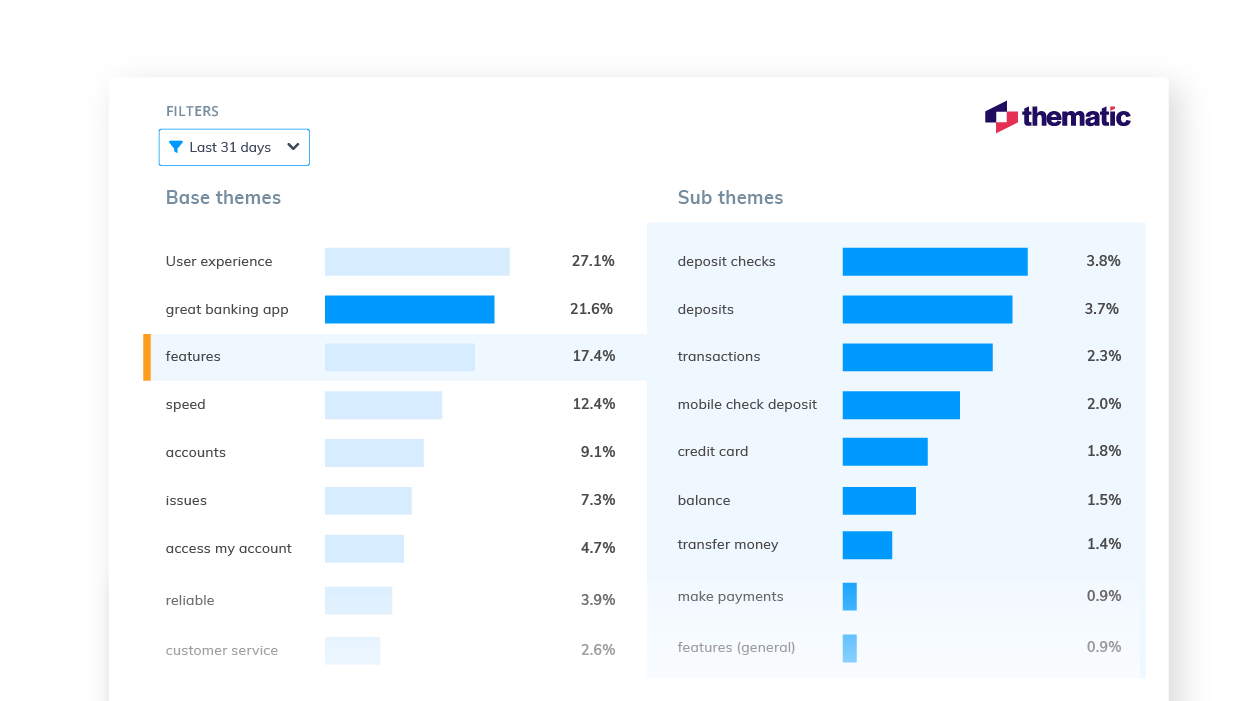

Once all this rich data has been organized within the feedback analytics platform, it is ready to be coded and themed, within the same platform. Thematic is a feedback analytics platform that offers one of the largest libraries of integrations with qualitative data sources.

Step 3: Coding your qualitative data

Your feedback data is now organized in one place, whether that is a spreadsheet, CAQDAS, a feedback repository or a feedback analytics platform. The next step is to code your feedback data so that you can extract meaningful insights in the next step.

Coding is the process of labelling and organizing your data in such a way that you can then identify themes in the data, and the relationships between these themes.

To simplify the coding process, you will take small samples of your customer feedback data, come up with a set of codes, or categories capturing themes, and label each piece of feedback, systematically, for patterns and meaning. Then you will take a larger sample of data, revising and refining the codes for greater accuracy and consistency as you go.

If you choose to use a feedback analytics platform, much of this process will be automated and accomplished for you.

The terms to describe different categories of meaning (‘theme’, ‘code’, ‘tag’, ‘category’ etc) can be confusing as they are often used interchangeably. For clarity, this article will use the term ‘code’.

To code means to identify key words or phrases and assign them to a category of meaning. “I really hate the customer service of this computer software company” would be coded as “poor customer service”.

How to manually code your qualitative data

- Decide whether you will use deductive or inductive coding. Deductive coding is when you create a list of predefined codes and then assign them to the qualitative data. Inductive coding is the opposite: you create codes based on the data itself. Codes arise directly from the data and you label them as you go. You need to weigh up the pros and cons of each coding method and select the most appropriate.

- Read through the feedback data to get a broad sense of what it reveals. Now it’s time to start assigning your first set of codes to statements and sections of text.

- Keep repeating the previous step, adding new codes and revising the code descriptions as often as necessary. Once everything has been coded, go through it all again to make sure there are no inconsistencies and that nothing has been overlooked.

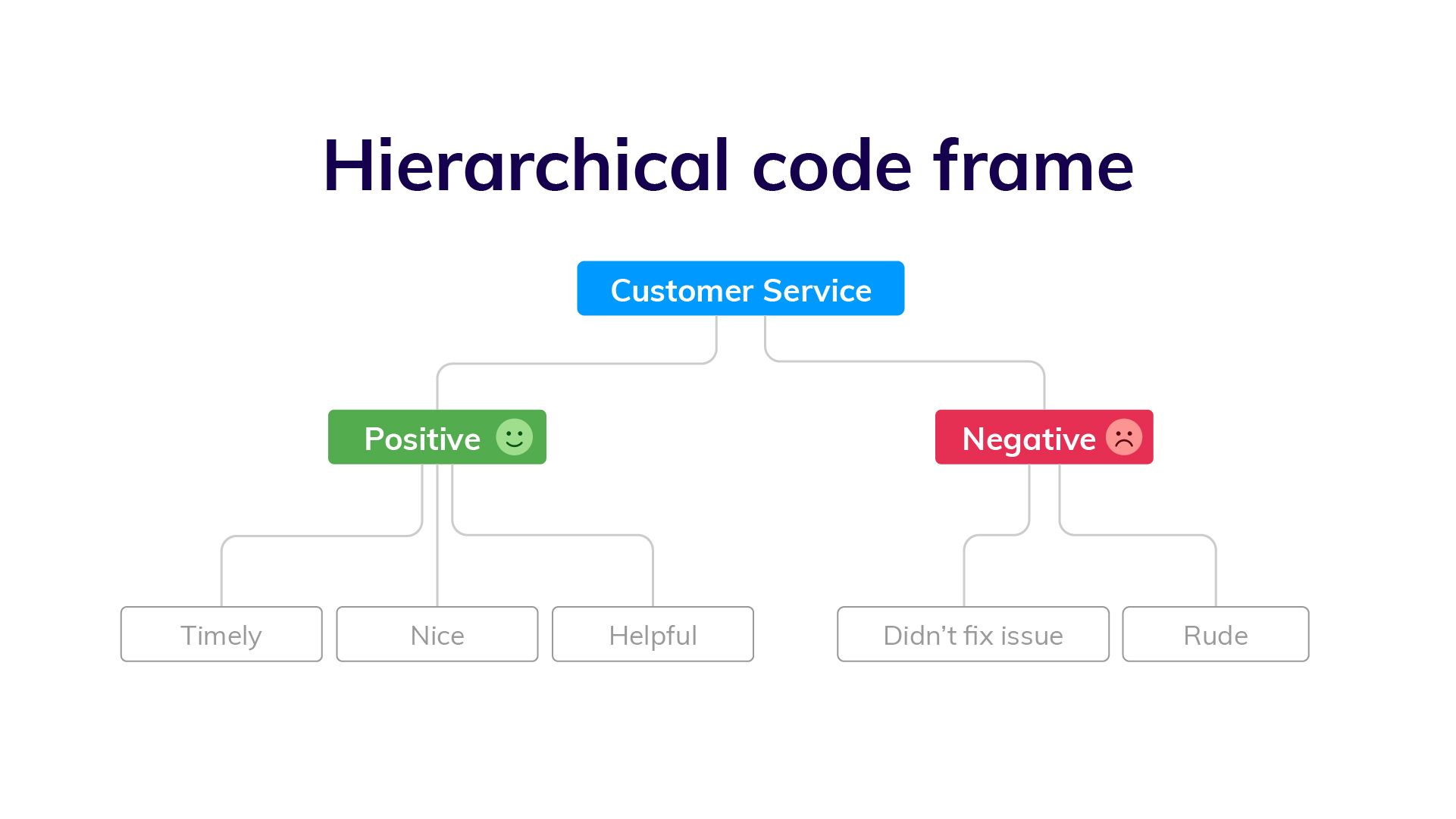

- Create a code frame to group your codes. The code frame is the organizational structure of all your codes. There are two commonly used types of code frame: flat and hierarchical. A hierarchical code frame will make it easier for you to derive insights from your analysis.

- Based on the number of times a particular code occurs, you can now see the common themes in your feedback data. This is insightful! If ‘bad customer service’ is a common code, it’s time to take action.
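The last two steps, building a hierarchical code frame and counting how often codes occur, can be sketched in a few lines of Python. The code frame and the coded responses below are hypothetical. Sub-codes are counted directly, and each parent code is counted at most once per response, so a response that mentions two customer service problems does not count twice toward "customer service".

```python
from collections import Counter

# Hypothetical hierarchical code frame: parent code -> sub-codes.
CODE_FRAME = {
    "customer service": ["slow response", "unhelpful staff"],
    "product": ["bugs", "missing features"],
}
# Reverse lookup so each sub-code knows its parent.
PARENT = {sub: parent for parent, subs in CODE_FRAME.items() for sub in subs}

# Each coded response carries one or more sub-codes (hypothetical data).
coded_responses = [
    ["slow response"],
    ["slow response", "bugs"],
    ["unhelpful staff"],
    ["missing features"],
]

# Frequency of each sub-code across all responses.
sub_counts = Counter(code for codes in coded_responses for code in codes)

# Roll sub-codes up to parent codes, counting each parent once per response.
parent_counts = Counter(
    parent
    for codes in coded_responses
    for parent in {PARENT[code] for code in codes}
)

print(sub_counts.most_common())
print(parent_counts.most_common())
```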

We have a detailed guide dedicated to manually coding your qualitative data.

Using software to speed up manual coding of qualitative data

An Excel spreadsheet is still a popular method for coding. But various software solutions can help speed up this process. Here are some examples.

- CAQDAS / NVivo - CAQDAS software has built-in functionality that allows you to code text within their software. You may find the interface the software offers easier for managing codes than a spreadsheet.

- Dovetail/EnjoyHQ - You can tag transcripts and other textual data within these solutions. As they are also repositories you may find it simpler to keep the coding in one platform.

- IBM SPSS - SPSS is a statistical analysis package that may make coding easier than in a spreadsheet.

- Ascribe - Ascribe’s ‘Coder’ is a coding management system. Its user interface will make it easier for you to manage your codes.

Automating the qualitative coding process using thematic analysis software

In solutions which speed up the manual coding process, you still have to come up with valid codes and often apply codes manually to pieces of feedback. But there are also solutions that automate both the discovery and the application of codes.

Advances in machine learning have now made it possible to read, code and structure qualitative data automatically. This type of automated coding is offered by thematic analysis software.

Automation makes it far simpler and faster to code the feedback and group it into themes. Software that incorporates natural language processing (NLP) looks across sentences and phrases to identify common themes and meaningful statements. Some automated solutions detect repeating patterns and assign codes to them; others require you to train the AI by providing examples. In the first case, you could say that the AI learns the meaning of the feedback on its own.
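To give a feel for "detecting repeating patterns", here is a deliberately simple Python sketch that surfaces recurring word pairs (bigrams) as candidate codes. This is a toy stand-in, not how Thematic or other commercial platforms work; they rely on much richer NLP models. The stopword list, the `min_count` threshold and the sample comments are assumptions chosen for illustration.

```python
import re
from collections import Counter

# Small, hypothetical stopword list; real tools use far larger ones.
STOPWORDS = {"the", "a", "is", "was", "and", "to", "my", "it", "too", "i", "of"}


def candidate_codes(comments: list[str], min_count: int = 2) -> list[tuple[str, int]]:
    """Return word pairs (bigrams) that recur across comments, most frequent first."""
    counts: Counter = Counter()
    for comment in comments:
        words = [w for w in re.findall(r"[a-z]+", comment.lower()) if w not in STOPWORDS]
        # Count each bigram once per comment so one long comment cannot dominate.
        counts.update(set(zip(words, words[1:])))
    return [(" ".join(pair), n) for pair, n in counts.most_common() if n >= min_count]


comments = [
    "Delivery time was too long",
    "The delivery time is terrible",
    "Customer support never answered my email",
    "Customer support was great",
]
print(candidate_codes(comments))
```

A human would still review the output: frequent pairs like "delivery time" make sensible codes, but the method knows nothing about meaning, so synonyms and paraphrases end up in separate candidates.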

Thematic automates the coding of qualitative feedback regardless of source. There’s no need to set up themes or categories in advance. Simply upload your data and wait a few minutes. You can also manually edit the codes to further refine their accuracy. Experiments conducted by Thematic indicate that its automated coding is just as accurate as manual coding.

Paired with sentiment analysis and advanced text analytics, these automated solutions become powerful tools for deriving quality business or research insights.

You could also build your own, if you have the resources!

The key benefits of using an automated coding solution

Automated analysis can often be set up fast and there’s the potential to uncover things that would never have been revealed if you had given the software a prescribed list of themes to look for.

Because the model applies a consistent rule to the data, it captures phrases or statements that a human eye might have missed.

Complete and consistent analysis of customer feedback enables more meaningful findings, which leads us into Step 4.

Step 4: Analyze your data: Find meaningful insights

Now we are going to analyze our data to find insights. This is where we start to answer our research questions. Keep in mind that Step 4 and Step 5 (tell the story) overlap somewhat, because creating visualizations is part of both the analysis and the reporting process.

The task of uncovering insights is to scour through the codes that emerge from the data and draw meaningful correlations from them. It is also about making sure each insight is distinct and has enough data to support it.

Part of the analysis is to establish how much each code relates to different demographics and customer profiles, and identify whether there’s any relationship between these data points.

Manually create sub-codes to improve the quality of insights

If your code frame only has one level, you may find that your codes are too broad to be able to extract meaningful insights. This is where it is valuable to create sub-codes to your primary codes. This process is sometimes referred to as meta coding.

Note: If you take an inductive coding approach, you can create sub-codes as you are reading through your feedback data and coding it.

While time-consuming, this exercise will improve the quality of your analysis. For example, a broad ‘customer service’ code could be split into sub-codes such as ‘slow response times’, ‘unhelpful staff’ and ‘issue not resolved’.

You need to carefully read your qualitative data to create quality sub-codes, but the depth of analysis is greatly improved. By calculating the frequency of these sub-codes, you can see which customer service problems you can address immediately.

Correlate the frequency of codes to customer segments

Many businesses use customer segmentation, and you may have your own respondent segments that you can apply to your qualitative analysis. Segmentation is the practice of dividing customers or research respondents into subgroups.

Segments can be based on:

- Demographics

- Any other data type that you care to segment by

It is particularly useful to see the occurrence of codes within your segments. If one of your customer segments is considered unimportant to your business, but they are the cause of nearly all customer service complaints, it may be in your best interest to focus attention elsewhere. This is a useful insight!
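One way to see how codes occur within segments is a simple cross-tabulation. The Python sketch below uses hypothetical segments, codes and responses. It reports each code as a share of a segment's responses rather than a raw count, because segments usually differ in size and raw counts would favor the largest segment.

```python
from collections import Counter, defaultdict

# Hypothetical coded feedback: each response has a segment and its codes.
responses = [
    {"segment": "free plan", "codes": ["poor customer service"]},
    {"segment": "free plan", "codes": ["poor customer service", "pricing"]},
    {"segment": "enterprise", "codes": ["missing features"]},
    {"segment": "enterprise", "codes": []},
]


def code_share_by_segment(rows: list[dict]) -> dict[str, dict[str, float]]:
    """For each segment, return the share of its responses that mention each code."""
    totals: Counter = Counter(row["segment"] for row in rows)
    hits: dict[str, Counter] = defaultdict(Counter)
    for row in rows:
        hits[row["segment"]].update(set(row["codes"]))  # count a code once per response
    return {
        segment: {code: n / totals[segment] for code, n in counts.items()}
        for segment, counts in hits.items()
    }


print(code_share_by_segment(responses))
```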

Manually visualizing coded qualitative data

There are formulas you can use to visualize key insights in your data. The formulas we suggest are essential if you are measuring a score alongside your feedback.

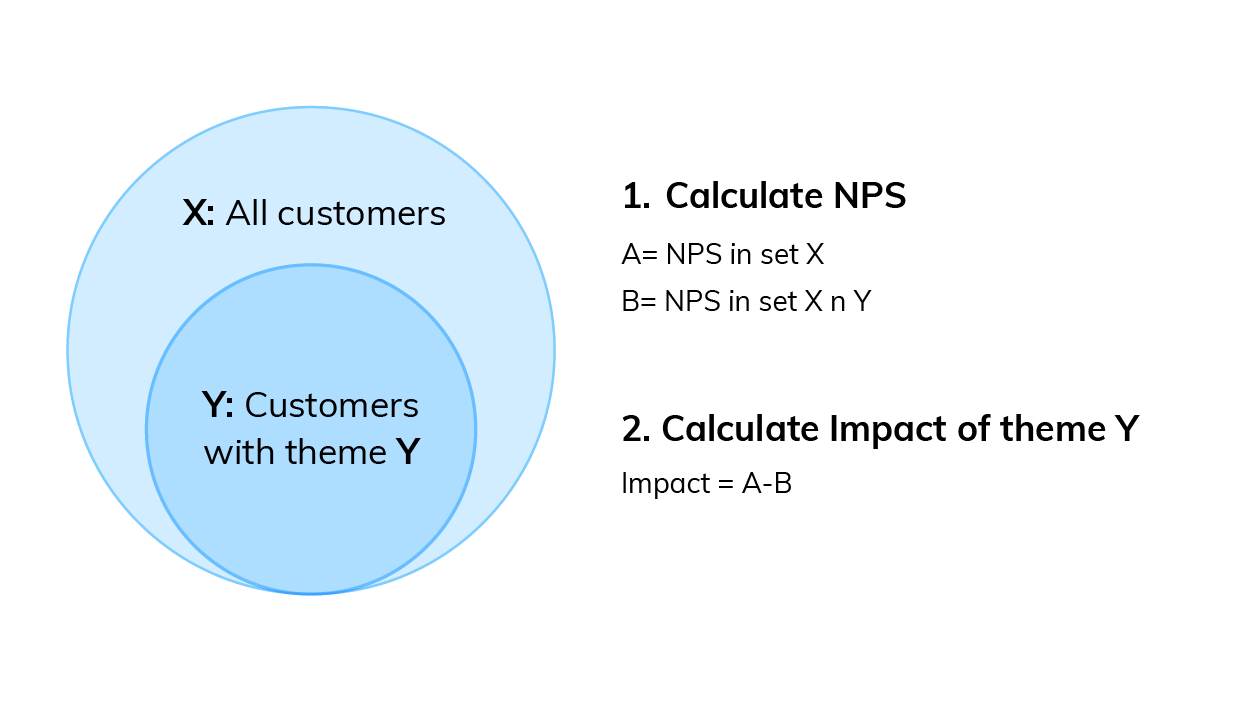

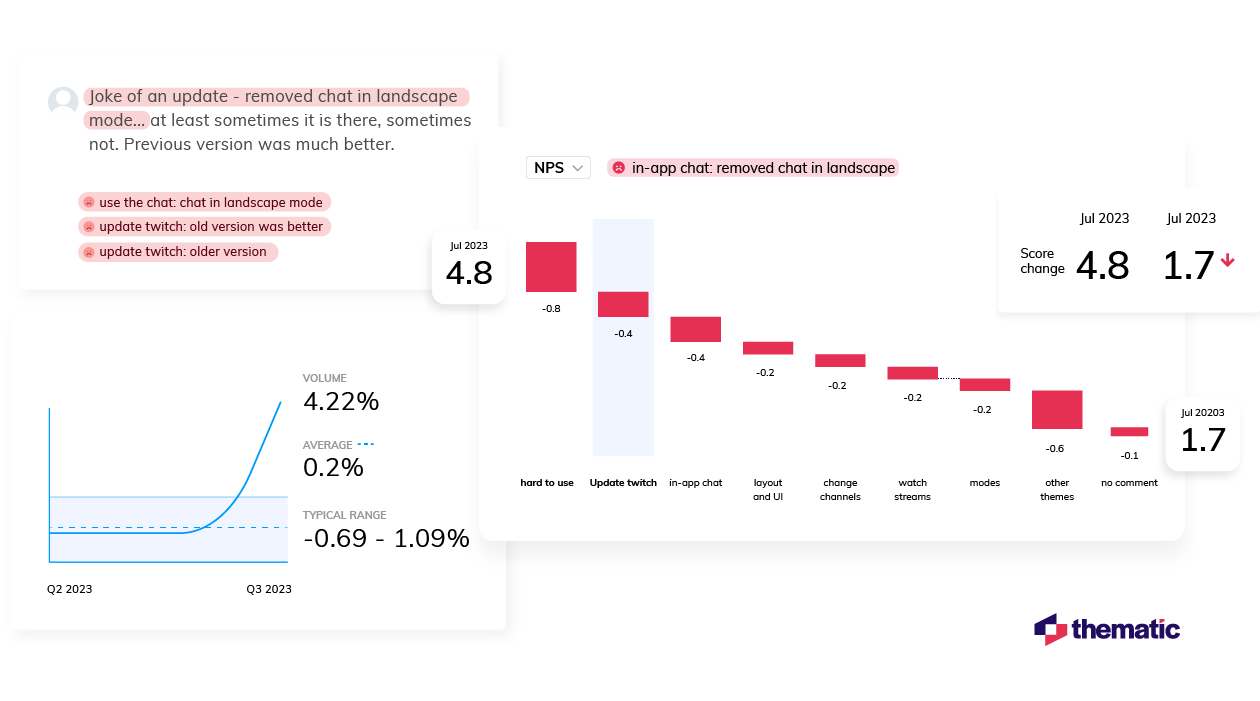

If you are collecting a metric alongside your qualitative data, this is a key visualization. Impact answers the question: “What’s the impact of a code on my overall score?” Using Net Promoter Score (NPS) as an example, first you need to:

- Calculate overall NPS (A)

- Calculate NPS in the subset of responses that do not contain that code (B)

- Subtract B from A

The result, A minus B, is the code’s impact on NPS. A negative value means the code pulls the overall score down.

You can then visualize this data using a bar chart.
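The impact calculation above is easy to script. This Python sketch assumes the standard NPS definition (percentage of promoters scoring 9 or 10, minus percentage of detractors scoring 0 to 6) and uses hypothetical (score, codes) pairs. `code_impact` returns A minus B exactly as in the steps above.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("NPS is undefined for an empty set of responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def code_impact(responses: list[tuple[int, set[str]]], code: str) -> float:
    """Impact of a code on NPS: overall NPS (A) minus NPS without that code (B)."""
    overall = nps([score for score, _ in responses])                           # A
    without = nps([score for score, codes in responses if code not in codes])  # B
    return overall - without


# Hypothetical (score, codes) pairs.
responses = [
    (10, {"easy to use"}),
    (9, set()),
    (3, {"poor customer service"}),
    (5, {"poor customer service", "pricing"}),
    (8, {"pricing"}),
]
for code in ["poor customer service", "pricing", "easy to use"]:
    print(code, round(code_impact(responses, code), 1))
```

Sorting codes by these impact values gives the ordering for the bar chart.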

You can download our CX toolkit - it includes a template to recreate this.

Trends over time

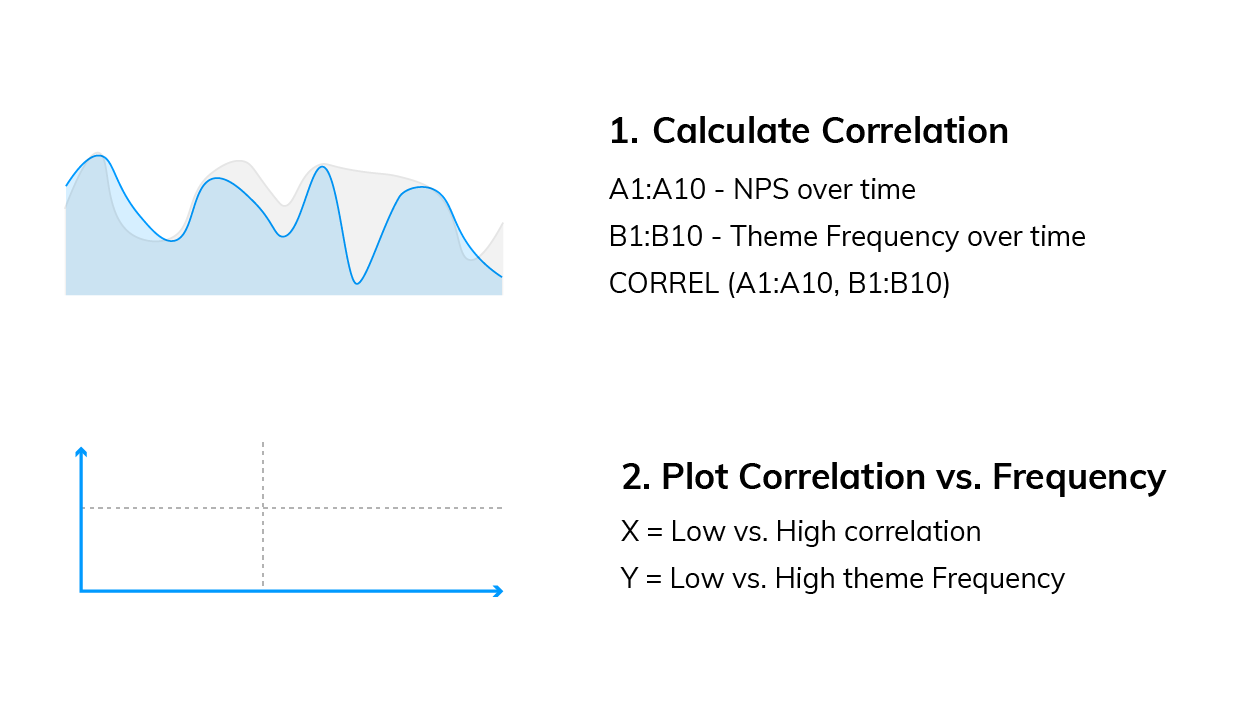

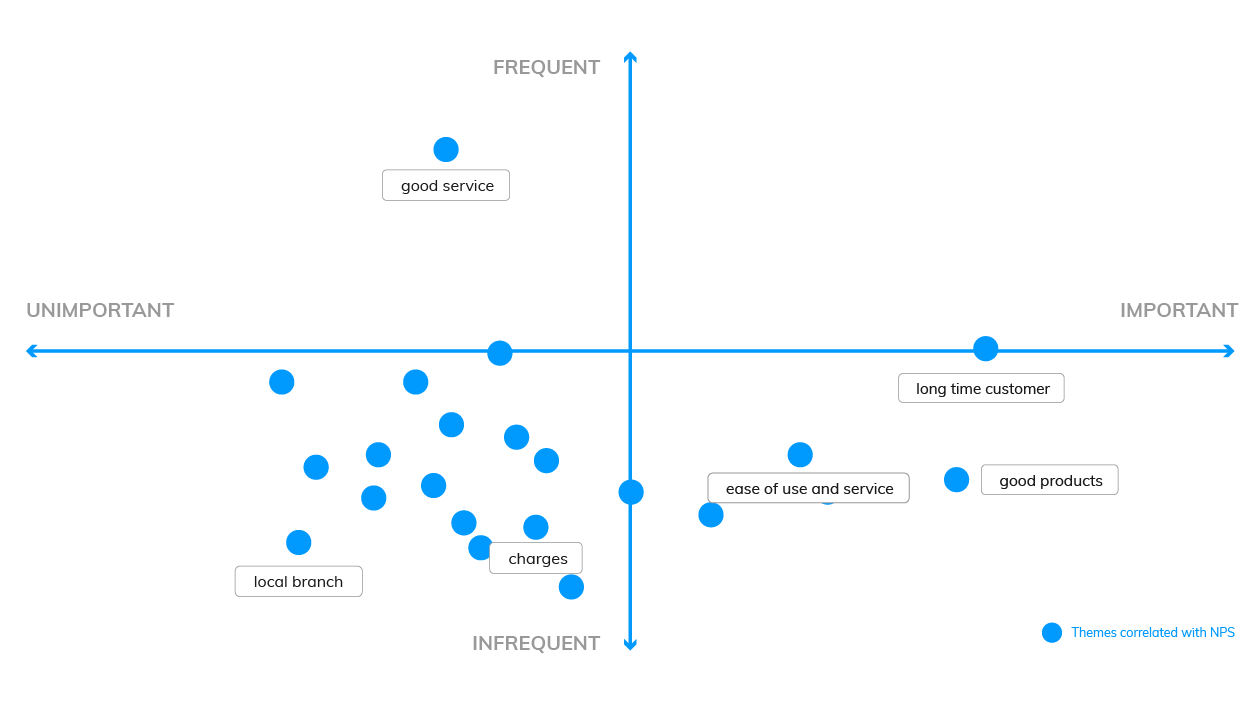

This analysis can help you answer questions like: “Which codes are linked to decreases or increases in my score over time?”

We need to compare two sequences of numbers: NPS over time and code frequency over time. Using Excel (for example, the CORREL function), calculate the correlation between the two sequences, which can be either positive (the more often a code appears, the higher the NPS) or negative (the more often a code appears, the lower the NPS).

Now plot each code’s frequency against the absolute value of its correlation with NPS. Codes that are both frequent and strongly correlated are the ones most closely linked to changes in your score.
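If you prefer a script to a spreadsheet, this Python sketch computes the same Pearson correlation that Excel's CORREL returns, then pairs each code's mean frequency with the absolute value of its correlation, which are the two axes of the plot. The monthly NPS values and code frequencies are hypothetical. Keep in mind that correlation alone does not prove a code causes the change in score.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient (the statistic Excel's CORREL computes)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least 2 points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical monthly NPS and monthly frequency (share of responses) of two codes.
nps_by_month = [32, 30, 25, 21, 24, 28]
code_freq_by_month = {
    "slow delivery": [0.05, 0.08, 0.11, 0.16, 0.14, 0.09],
    "easy to use": [0.20, 0.21, 0.19, 0.20, 0.22, 0.18],
}

# Each code becomes one point: (mean frequency, |correlation with NPS|).
for code, freq in code_freq_by_month.items():
    r = pearson(freq, nps_by_month)
    print(f"{code}: mean frequency {sum(freq) / len(freq):.2f}, r = {r:+.2f}, |r| = {abs(r):.2f}")
```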

These are two examples, but there are more. For a third manual formula, and to learn why word clouds are not an insightful form of analysis, read our visualizations article.

Using a text analytics solution to automate analysis

Automated text analytics solutions enable codes and sub-codes to be pulled out of the data automatically. This makes it far faster and easier to identify what’s driving negative or positive results, to pick up emerging trends, and to find all manner of rich insights in the data.

Another benefit of AI-driven text analytics software is its built-in capability for sentiment analysis, which provides the emotional context behind your feedback and other qualitative text data.

Thematic provides text analytics that goes further by allowing users to apply their expertise on business context to edit or augment the AI-generated outputs.

Since the move away from manual research is generally about reducing the human element, adding human input to the technology might sound counter-intuitive. However, this is mostly to make sure important business nuances in the feedback aren’t missed during coding. The result is higher accuracy of analysis. This is sometimes referred to as augmented intelligence.

Step 5: Report on your data: Tell the story

The last step of analyzing your qualitative data is to report on it, to tell the story. At this point, the codes are fully developed and the focus is on communicating the narrative to the audience.

A coherent outline of the qualitative research, the findings and the insights is vital for stakeholders to discuss and debate before they can devise a meaningful course of action.

Creating graphs and reporting in PowerPoint

Typically, qualitative researchers take the tried and tested approach of distilling their report into a series of charts, tables and other visuals, which are woven into a narrative for presentation in PowerPoint.

Using visualization software for reporting

With data transformation and APIs, the analyzed data can be shared with data visualization software such as Power BI, Tableau, Google Data Studio or Looker. Power BI and Tableau are among the most preferred options.

Visualizing your insights inside a feedback analytics platform

Feedback analytics platforms, like Thematic, incorporate visualization tools that intuitively turn key data and insights into graphs. This removes the time-consuming work of constructing charts to identify patterns visually. It leaves more time for building a compelling narrative that highlights the insights, in bite-size chunks, for executive teams to review.

Using a feedback analytics platform with visualization tools means you don’t need a separate product for visualizations. You can export graphs into PowerPoint straight from the platform.

Conclusion - Manual or Automated?

Some researchers remain deeply invested in the manual approach, whether because it’s familiar, because they’re reluctant to spend money and time learning new software, or because they’ve been burned by the overpromises of AI.

For projects that involve small datasets, manual analysis makes sense. An example is when the objective is simply to answer a question like “Do customers prefer concept X to concept Y?” If the findings are being extracted from a small set of focus groups and interviews, it is sometimes easier just to read them.

However, as new generations come into the workplace, technology-driven solutions feel more comfortable and practical. And the merits are undeniable, especially if the objective is to go deeper and understand the ‘why’ behind customers’ preference for X or Y, and even more so if time and money are considerations.

The ability to collect a free flow of qualitative feedback data at the same time as the metric means AI can cost-effectively scan, crunch, score and analyze a ton of feedback from one system in one go. And time-intensive processes like focus groups, or coding, that used to take weeks, can now be completed in a matter of hours or days.

But aside from the ever-present business case to speed things up and keep costs down, there are also powerful research imperatives for automated analysis of qualitative data: namely, accuracy and consistency.

Finding insights hidden in feedback requires consistency, especially in coding. It also requires catching all the ‘unknown unknowns’ that can skew research findings, and steering clear of cognitive bias.

Some say that without manual data analysis, researchers won’t get an accurate “feel” for the insights. However, the larger the dataset, the harder it is to sort through the feedback and organize feedback that has been pulled from different places. And the more difficult it is to stay on course, the greater the risk of drawing incorrect or incomplete conclusions.

Though the process steps for qualitative data analysis have remained largely unchanged since psychologist Paul Felix Lazarsfeld paved the path a hundred years ago, digital technology has had a profound impact on the types of qualitative feedback data and on the approach to analysis.

If you want to try an automated feedback analysis solution on your own qualitative data, you can get started with Thematic.

Community & Marketing

Tyler manages our community of CX, insights & analytics professionals. Tyler's goal is to help unite insights professionals around common challenges.


Writing a Case Study

What is a case study?

A case study is:

- An in-depth research design that primarily uses a qualitative methodology but sometimes includes quantitative methodology.

- Used to examine an identifiable problem confirmed through research.

- Used to investigate an individual, group of people, organization, or event.

- Used mainly to answer "how" and "why" questions.

What are the different types of case studies?

Note: These are the primary types of case study. As you continue to research and learn about case studies, you will find many other types.

Who are your case study participants?

What is triangulation?

Validity and credibility are essential parts of a case study. The researcher should therefore use triangulation, drawing on multiple data sources, methods, investigators or theories to corroborate findings. This ensures trustworthiness and helps the study reflect accurately what the researcher seeks to investigate.
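Data-source triangulation can be tracked with a simple evidence log. The sketch below assumes that each finding is recorded alongside the source that supports it. It treats a finding as corroborated when at least two distinct sources support it. The findings, the source names and the two-source threshold are illustrative assumptions, not a standard rule.

```python
from collections import defaultdict

# Hypothetical evidence log: (finding, data source) pairs noted during analysis.
evidence = [
    ("staff value the mentoring scheme", "interview"),
    ("staff value the mentoring scheme", "observation"),
    ("staff value the mentoring scheme", "meeting minutes"),
    ("workload limits training time", "interview"),
]


def triangulate(evidence, min_sources=2):
    """Split findings into those backed by at least `min_sources` distinct
    data sources and those resting on fewer (assumed threshold: 2)."""
    sources = defaultdict(set)
    for finding, source in evidence:
        sources[finding].add(source)
    corroborated = {f: s for f, s in sources.items() if len(s) >= min_sources}
    needs_support = {f: s for f, s in sources.items() if len(s) < min_sources}
    return corroborated, needs_support


corroborated, needs_support = triangulate(evidence)
for finding, srcs in corroborated.items():
    print(f"corroborated: {finding} ({', '.join(sorted(srcs))})")
for finding, srcs in needs_support.items():
    print(f"needs more evidence: {finding} ({', '.join(sorted(srcs))})")
```

A finding that rests on a single source is not necessarily wrong. It is a prompt to look for further evidence, or to examine why the sources disagree.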

How do you write a case study?

When developing a case study, there are different ways to present the information, but remember to include the five parts of a case study.


- Last Updated: May 29, 2024 8:05 AM

- URL: https://resources.nu.edu/researchtools


Qualitative Secondary Analysis: A Case Exemplar

Judith Ann Tate

The Ohio State University, College of Nursing

Mary Beth Happ

Qualitative secondary analysis (QSA) is the use of qualitative data that were collected by someone else or to answer a different research question. Secondary analysis of qualitative data provides an opportunity to maximize data utility, particularly with difficult-to-reach patient populations. However, QSA methods require careful consideration and explicit description to best understand, contextualize, and evaluate the research results. In this paper, we describe methodologic considerations using a case exemplar to illustrate challenges specific to QSA and strategies to overcome them.

Health care research requires significant time and resources. Secondary analysis of existing data provides an efficient alternative to collecting data from new groups or the same subjects. Secondary analysis, defined as the reuse of existing data to investigate a different research question (Heaton, 2004), has a similar purpose whether the data are quantitative or qualitative. Common goals are to (1) perform additional analyses on the original dataset, (2) analyze a subset of the original data, (3) apply a new perspective or focus to the original data, or (4) validate or expand findings from the original analysis (Hinds, Vogel, & Clarke-Steffen, 1997). Synthesis of knowledge from meta-analysis or aggregation may be viewed as an additional purpose of secondary analysis (Heaton, 2004).

Qualitative studies use several different data sources, such as interviews, observations, field notes, archival meeting minutes or clinical record notes, to produce rich descriptions of human experiences within a social context. The work typically requires significant resources (e.g., personnel effort and time) for data collection and analysis. When feasible, qualitative secondary analysis (QSA) can be a useful and cost-effective alternative to designing and conducting redundant primary studies. With advances in computerized data storage and analysis programs, sharing qualitative datasets has become easier. However, little guidance is available on how to conduct QSA, structure its procedures, or evaluate it (Szabo & Strang, 1997).

QSA has been described as “an almost invisible enterprise in social research” (Fielding, 2004). Primary data are often re-used. However, descriptions of this practice are embedded within the methods sections of qualitative research reports rather than explicitly identified as QSA. Moreover, searching for or classifying reports as QSA is difficult because many researchers refrain from identifying their work as secondary analyses (Hinds et al., 1997; Thorne, 1998a). In this paper, we provide an overview of QSA, its purposes, its modes of data sharing and its approaches. A unique, expanded QSA approach is presented as a methodological exemplar to illustrate these considerations.

QSA Typology

Heaton (2004) classified QSA studies based on the relationship between the secondary and primary questions and the scope of data analyzed. Types of QSA included studies that (1) investigated questions different from the primary study, (2) applied a unique theoretical perspective, or (3) extended the primary work. Heaton’s literature review (2004) showed that studies varied in the choice of data used, from selected portions to entire or combined datasets.

Modes of Data Sharing

Heaton (2004) identified three modes of data sharing: formal, informal and auto-data. Formal data sharing involves accessing and analyzing deposited or archived qualitative data by an independent group of researchers. Historical research often uses formal data sharing. Informal data sharing refers to requests for direct access to an investigator’s data for use alone or to pool with other data, usually as a result of informal networking. In some instances, the primary researchers may be invited to collaborate. The most common mode of data sharing is auto-data, defined as further exploration of a qualitative dataset by the primary research team. Due to the iterative nature of qualitative research, when using auto-data, it may be difficult to determine where the original study questions end and discrete, distinct analysis begins (Heaton, 1998).

An Exemplar QSA

Below we describe a QSA exemplar conducted by the primary author of this paper (JT), a member of the original research team, who used a supplementary approach to examine concepts revealed but not fully investigated in the primary study. First, we give an overview of the original study on which the QSA was based. Then, the exemplar QSA is presented to illustrate: (1) the use of auto-data when the new research questions are closely related to or extend the original study aims (Table 1), (2) the collection of additional clinical record data to supplement the original dataset, and (3) the performance of separate member checking in the form of expert review and opinion. Considerations and recommendations for the use of QSA are reviewed with illustrations taken from the exemplar study (Table 2). Finally, we discuss conclusions and implications to assist with planning and implementing QSA studies.

Table 1. Research question comparison

Table 2. Application of the Exemplar Qualitative Secondary Analysis (QSA)


The Primary Study

Briefly, the original study was a micro-level ethnography designed to describe the processes of care and communication with patients weaning from prolonged mechanical ventilation (PMV) in a 28-bed Medical Intensive Care Unit (Broyles, Colbert, Tate, & Happ, 2008; Happ, Swigart, Tate, Arnold, Sereika, & Hoffman, 2007; Happ et al., 2007, 2010). Both the primary study and the QSA were approved by the Institutional Review Board at the University of Pittsburgh. Data were collected by two experienced investigators and a PhD student who served as research project coordinator. Data sources consisted of sustained field observations; interviews with patients, family members and clinicians; and clinical record review, including all narrative clinical documentation recorded by direct caregivers.

During iterative data collection and analysis in the original study, it became apparent that anxiety and agitation affected the duration of ventilator weaning episodes. This observation helped to formulate the questions for the QSA (Tate, Dabbs, Hoffman, Milbrandt, & Happ, 2012). Thus, the secondary topic was closely aligned with the primary phenomenon as an important facet of it. The close, natural relationship between the primary and QSA research questions is demonstrated in the side-by-side comparison in Table 1. This QSA focused on new questions that extended the original study to the recognition and management of anxiety or agitation. These behaviors often accompany mechanical ventilation and weaning but occur throughout the trajectory of critical illness and recovery.

Considerations when Undertaking QSA (Table 2)

Practical Advantages

A key practical advantage of QSA is maximizing use of existing data. Data collection efforts represent a significant percentage of the research budget in terms of cost and labor (Coyer & Gallo, 2005). This is particularly important in view of the competition for research funding. Planning and implementing a qualitative study involves considerable time and expertise. This is needed not only for data collection (e.g., interviews, participant observation or focus groups) but also for establishing access, credibility and relationships (Thorne, 1994) and for conducting the analysis. The cost of QSA is often seen as negligible because the outlay of resources for data collection is assumed by the original study. However, QSA incurs costs related to storage, the researcher’s effort in reviewing existing data, analysis, and any further data collection that may be necessary.

Another advantage of QSA is access to data from an assembled cohort. In conducting original primary research, practical concerns arise when participants are difficult to locate or reluctant to divulge sensitive details to a researcher. In the case of vulnerable critically ill patients, participation in research may seem an unnecessary burden to family members, who may be unwilling to provide proxy consent (Fielding, 2004). QSA permits new questions to be asked of data collected previously from these vulnerable groups (Rew, Koniak-Griffin, Lewis, Miles, & O'Sullivan, 2000), or from groups or events that occur rarely (Thorne, 1994). Participants’ time and effort in the primary study therefore become more worthwhile. In fact, it is recommended that data already collected from existing studies of vulnerable populations or about sensitive topics be analyzed before new participants are engaged. In this way, QSA becomes a cumulative rather than a repetitive process (Fielding, 2004).

Data Adequacy and Congruency

Secondary researchers must determine that the primary dataset meets the needs of the QSA. Data may be insufficient to answer a new question, or the focus of the QSA may be so different as to render the pursuit of a QSA impossible (Heaton, 1998). The underlying assumptions, sampling plan, research questions, and conceptual framework selected to answer the original study question may not fit the question posed during QSA (Coyer & Gallo, 2005). The researchers of the primary study may have selectively sampled participants and analyzed the resulting data in a manner that produced a narrow or uneven scope of data (Hinds et al., 1997). Thus, the primary study may not contain the data needed to fully answer the questions posed by the QSA. A critical review of the existing dataset is an important first step in determining whether the primary data fit the secondary questions (Hinds et al., 1997).

Passage of Time

The timing of the QSA is another important consideration. If the primary study and secondary study are performed sequentially, findings of the original study may influence the secondary study. On the other hand, studies performed concurrently offer the benefit of access to both the primary research team and participants for member checking (Hinds et al., 1997).

The passage of time since the primary study was conducted can also have a distinct effect on the usefulness of the primary dataset. Data may be outdated or contain a historical bias (Coyer & Gallo, 2005). Since context changes over time, characteristics of the phenomena of interest may have changed. Analysis of older datasets may not illuminate the phenomena as they exist today (Hinds et al., 1997). Even if participants could be re-contacted, their perspectives, memories and experiences change. The passage of time also affects the relationship of the primary researchers to the data, so auto-data may be interpreted differently by the same researcher over time. Data are bound by time and history and may therefore pose a threat to internal validity unless a new investigator is able to account for these effects when interpreting the data (Rew et al., 2000).

Researcher stance/Context involvement

Issues related to context are a major source of criticism of QSA (Gladstone, Volpe, & Boydell, 2007). One of the hallmarks of qualitative research is the relationship of the researcher to the participants. It can be argued that removing active contact with participants violates this premise. Tacit understandings developed in the field may be difficult or impossible to reconstruct (Thorne, 1994). Qualitative fieldworkers often react to and redirect the data collection based on a growing knowledge of the setting. The setting may change as a result of external or internal factors. Interpretation of researchers as participants in a unique time and social context may be impossible to reconstruct, even if the secondary researchers were members of the primary team (Mauthner, Parry, & Milburn, 1998). Because the context in which the data were originally produced cannot be recovered, the ability of the researcher to react to the lived experience may be curtailed in QSA (Gladstone et al., 2007). Researchers use a number of tactics to filter and prioritize what to include as data, and these tactics may not be apparent in either the written or spoken records of those events (Thorne, 1994). Reflexivity between the researcher, participants and setting is impossible to recreate when examining pre-existing data.

Relationship of QSA Researcher to Primary Study

The relationship of the QSA researcher to the primary study is an important consideration. When the QSA researcher is not part of the original study team, contractual arrangements detailing access to data, its format, access to the original team, and authorship are required (Hinds et al., 1997). The QSA researcher should assess the condition of the data and documents, including transcripts, memos and notes, as well as the clarity and flow of interactions (Hinds et al., 1997). An outline of the original study and data collection procedures should be critically reviewed (Heaton, 1998). If the secondary researcher was not a member of the original study team, access to the original investigative team for the purpose of ongoing clarification is essential (Hinds et al., 1997).

Membership on the original study team may, however, offer the secondary researcher little advantage depending on their role in the primary study. Some research team members may have had responsibility for only one type of data collection or data source. There may be differences in involvement with analysis of the primary data.

Informed Consent of Participants

Thorne (1998) questioned whether data collected for one study purpose can ethically be re-examined to answer another question without participants’ consent. Many institutional review boards permit consent forms to include language about the possibility of future use of existing data. While this mechanism is becoming routine and is welcomed by researchers, concerns have been raised that a generic consent cannot possibly address all future secondary questions and may violate the principle of full informed consent (Gladstone et al., 2007). Local variations in study approval practices by institutional review boards may influence the ability of researchers to conduct a QSA.

Rigor of QSA

The primary standards for evaluating the rigor of qualitative studies are trustworthiness (the logical relationship between the data and the analytic claims), fit (the context within which the findings are applicable), transferability (the overall generalizability of the claims) and auditability (the transparency of the procedural steps and the analytic moves) (Lincoln & Guba, 1991). Thorne suggests that standard procedures for assuring rigor can be modified for QSA (Thorne, 1994). For instance, the original researchers may be viewed as sources of confirmation. New informants, other related datasets and validation by clinical experts are sources of triangulation that may overcome the lack of access to primary subjects (Heaton, 2004; Thorne, 1994).

Our observations, derived from the experience of posing a new question of existing qualitative data, serve as a template for researchers considering QSA. Considerations regarding the quality, availability and appropriateness of existing data are of primary importance. A realistic plan for collecting additional data to answer questions posed in QSA should consider the burden and resources required for data collection, analysis, storage and maintenance. Researchers should consider context as a potential limitation to new analyses. Finally, the cost of QSA should be fully evaluated before deciding to pursue QSA.

Acknowledgments

This work was funded by the National Institute of Nursing Research (RO1-NR07973, M Happ PI) and a Clinical Practice Grant from the American Association of Critical Care Nurses (JA Tate, PI).


Disclosure statement: Drs. Tate and Happ have no potential conflicts of interest to disclose that relate to the content of this manuscript and do not anticipate conflicts in the foreseeable future.

Contributor Information

Judith Ann Tate, The Ohio State University, College of Nursing.

Mary Beth Happ, The Ohio State University, College of Nursing.

- Broyles L, Colbert A, Tate J, Happ MB. Clinicians’ evaluation and management of mental health, substance abuse, and chronic pain conditions in the intensive care unit. Critical Care Medicine. 2008;36(1):87–93.

- Coyer SM, Gallo AM. Secondary analysis of data. Journal of Pediatric Health Care. 2005;19(1):60–63.

- Fielding N. Getting the most from archived qualitative data: Epistemological, practical and professional obstacles. International Journal of Social Research Methodology. 2004;7(1):97–104.

- Gladstone BM, Volpe T, Boydell KM. Issues encountered in a qualitative secondary analysis of help-seeking in the prodrome to psychosis. Journal of Behavioral Health Services & Research. 2007;34(4):431–442.

- Happ MB, Swigart VA, Tate JA, Arnold RM, Sereika SM, Hoffman LA. Family presence and surveillance during weaning from prolonged mechanical ventilation. Heart & Lung: The Journal of Acute and Critical Care. 2007;36(1):47–57.

- Happ MB, Swigart VA, Tate JA, Hoffman LA, Arnold RM. Patient involvement in health-related decisions during prolonged critical illness. Research in Nursing & Health. 2007;30(4):361–72.

- Happ MB, Tate JA, Swigart V, DiVirgilio-Thomas D, Hoffman LA. Wash and wean: Bathing patients undergoing weaning trials during prolonged mechanical ventilation. Heart & Lung: The Journal of Acute and Critical Care. 2010;39(6 Suppl):S47–56.

- Heaton J. Secondary analysis of qualitative data. Social Research Update. 1998;(22).

- Heaton J. Reworking Qualitative Data. London: SAGE Publications; 2004.

- Hinds PS, Vogel RJ, Clarke-Steffen L. The possibilities and pitfalls of doing a secondary analysis of a qualitative data set. Qualitative Health Research. 1997;7(3):408–424.

- Lincoln YS, Guba EG. Naturalistic inquiry. Beverly Hills, CA: Sage Publishing; 1991.

- Mauthner N, Parry O, Milburn K. The data are out there, or are they? Implications for archiving and revisiting qualitative data. Sociology. 1998;32:733–745.

- Rew L, Koniak-Griffin D, Lewis MA, Miles M, O'Sullivan A. Secondary data analysis: new perspective for adolescent research. Nursing Outlook. 2000;48(5):223–229.

- Szabo V, Strang VR. Secondary analysis of qualitative data. Advances in Nursing Science. 1997;20(2):66–74.

- Tate JA, Dabbs AD, Hoffman LA, Milbrandt E, Happ MB. Anxiety and agitation in mechanically ventilated patients. Qualitative Health Research. 2012;22(2):157–173.

- Thorne S. Secondary analysis in qualitative research: Issues and implications. In: Morse JM, editor. Critical Issues in Qualitative Research. 2nd ed. Thousand Oaks, CA: SAGE; 1994.

- Thorne S. Ethical and representational issues in qualitative secondary analysis. Qualitative Health Research. 1998;8(4):547–555.

What Is a Research Design? | Definition, Types & Guide

- Introduction

- Parts of a research design

- Types of research methodology in qualitative research

- Narrative research designs

- Phenomenological research designs

- Grounded theory research designs

- Ethnographic research designs

- Case study research design

- Important reminders when designing a research study

A research design in qualitative research is a critical framework that guides the methodological approach to studying complex social phenomena. Qualitative research designs determine how data is collected, analyzed, and interpreted, ensuring that the research captures participants' nuanced and subjective perspectives. Research designs also address ethical considerations such as obtaining informed consent, ensuring confidentiality, and handling sensitive topics with the utmost respect and care. These considerations are crucial in qualitative research and in other contexts where participants may share personal or sensitive information. A research design should be coherent, because coherence is essential for producing high-quality qualitative research. Developing the design is often a recursive and evolving process.

Theoretical concepts and research question

The first step in creating a research design is identifying the main theoretical concepts. To identify these concepts, a researcher should ask which theoretical keywords are implicit in the investigation. The next step is to develop a research question using these theoretical concepts. This can be done by identifying the relationship of interest among the concepts that are the focus of the investigation. The question should address aspects of the topic that need more knowledge, shed light on new information, and specify which aspects should be prioritized. This step is essential in identifying which participants to include and which data collection methods to use. Research questions also put the conceptual framework into practice and make the initial theoretical concepts more explicit. Once the research question has been established, the main objectives of the research can be specified. For example, these objectives may involve identifying shared experiences around a phenomenon or evaluating perceptions of a new treatment.

Methodology

After identifying the theoretical concepts, research question, and objectives, the next step is to determine the methodology that will be implemented. This is the lifeline of a research design and should be coherent with the objectives and questions of the study. The methodology will determine how data is collected, analyzed, and presented. Popular qualitative research methodologies include case studies, ethnography, grounded theory, phenomenology, and narrative research. Each methodology is tailored to specific research questions and facilitates the collection of rich, detailed data. For example, a narrative approach may focus on only one individual and their story, while phenomenology seeks to understand participants' common lived experiences. Qualitative research designs differ significantly from quantitative research. Quantitative research often uses experimental designs, correlational designs, or analysis of variance to test hypotheses about relationships between variables, such as an independent and a dependent variable, while controlling for confounding variables.

Literature review

After the methodology is identified, conducting a thorough literature review is integral to the research design. This review identifies gaps in knowledge, positioning the new study within the larger academic dialogue and underlining its contribution and relevance. Meta-analysis, a form of secondary research, can be particularly useful in synthesizing findings from multiple studies to provide a clear picture of the research landscape.

Data collection

The sampling method in qualitative research is designed to delve deeply into specific phenomena rather than to generalize findings across a broader population. The data collection methods—whether interviews, focus groups, observations, or document analysis—should align with the chosen methodology, ethical considerations, and other factors such as sample size. In some cases, repeated measures may be collected to observe changes over time.

Data analysis

Analysis in qualitative research typically involves methods such as coding and thematic analysis to distill patterns from the collected data. This part of the design specifies how the research results will be systematically derived from the data. It is recommended that the researcher ensure that the final interpretations are coherent with the observations and analyses, making clear connections between the data and the conclusions drawn. Reporting should be narrative-rich, offering a comprehensive view of the context and findings.

Overall, a coherent qualitative research design that incorporates these elements produces a study that not only adds theoretical and practical value to the field but also meets high standards of quality. This methodological thoroughness is essential for achieving significant, insightful findings. Examples of well-executed research designs can be valuable references for other researchers conducting qualitative or quantitative investigations. An effective research design is critical for producing robust and impactful research outcomes.

Each qualitative research design is unique and carefully tailored to answer specific research questions, meet distinct objectives, and explore the particular nature of the phenomenon under investigation. The methodology is the wider framework that a research design follows. Each methodology consists of methods, tools, or techniques for collecting and analyzing data according to a specific approach.

These methods enable researchers to collect data effectively from individuals, groups, or observations in line with the research design. The following list describes the methodologies most commonly used in qualitative research designs, highlighting how they serve different purposes and use distinct methods to gather and analyze data.

The narrative approach in research focuses on the collection and detailed examination of life stories, personal experiences, or narratives to gain insights into individuals' lives as told from their perspectives. It involves constructing a cohesive story out of the diverse experiences shared by participants, often using chronological accounts. It seeks to understand human experience and social phenomena through the form and content of the stories. These can include spontaneous narrations such as memoirs or diaries from participants or diaries solicited by the researcher. Narration helps construct the identity of an individual or a group and can rationalize, persuade, argue, entertain, confront, or make sense of an event or tragedy. To conduct a narrative investigation, it is recommended that researchers follow these steps:

Identify if the research question fits the narrative approach. Its methods are best employed when a researcher wants to learn about the lifestyle and life experience of a single participant or a small number of individuals.

Select the best-suited participants for the research design and spend time compiling their stories using different methods such as observations, diaries, interviewing their family members, or compiling related secondary sources.

Compile the information related to the stories. Narrative researchers collect data based on participants' stories about their personal experiences, for example, about their workplaces or homes, their racial or ethnic culture, and the historical context in which the stories occur.

Analyze the participant stories and "restory" them within a coherent framework. This involves collecting the stories, analyzing them based on key elements such as time, place, plot, and scene, and then rewriting them in a chronological sequence (Ollerenshaw & Creswell, 2000). The framework may also include elements such as a predicament, conflict, or struggle; a protagonist; and a sequence with implicit causality, in which the predicament is somehow resolved (Carter, 1993).

Collaborate with participants by actively involving them in the research. Both the researcher and the participant negotiate the meaning of their stories, adding a credibility check to the analysis (Creswell & Miller, 2000).

A narrative investigation involves collecting a large amount of data from participants, and the researcher needs to understand the context of each individual's life. A keen eye is needed to identify the particular stories that capture individual experiences. Active collaboration with the participant is necessary, and researchers need to discuss and reflect on their own beliefs and backgrounds. Several questions may arise in the collection, analysis, and telling of individual stories, such as: Whose story is it? Who can tell it? Who can change it? Which version is compelling? What happens when narratives compete? In a community, what do the stories do among them? (Pinnegar & Daynes, 2006).


A research design based on phenomenology aims to understand the essence of the lived experiences of a group of people regarding a particular concept or phenomenon. Researchers gather deep insights from individuals who have experienced the phenomenon, striving to describe "what" they experienced and "how" they experienced it. This approach typically involves detailed interviews and aims to reach a deep existential understanding. The purpose is to reduce individual experiences to a description of the universal essence, that is, an understanding of the phenomenon's nature (van Manen, 1990). In phenomenology, the following steps are usually followed:

Identify a phenomenon of interest. For example, the phenomenon might be anger, professionalism in the workplace, or what it means to be a fighter.

Recognize and specify the philosophical assumptions of phenomenology. For example, one could reflect on the nature of objective reality and individual experiences.

Collect data from individuals who have experienced the phenomenon. This typically involves conducting in-depth interviews, including multiple sessions with each participant. Additionally, other forms of data may be collected using several methods, such as observations, diaries, art, poetry, music, recorded conversations, written responses, or other secondary sources.

Ask participants two general questions that encompass the phenomenon and how they experienced it (Moustakas, 1994). For example: What have you experienced in terms of this phenomenon? What contexts or situations have typically influenced your experiences of it? Other open-ended questions may also be asked, but these two questions focus on collecting data that will lead to a textural description and a structural description of the experiences, and ultimately provide an understanding of the participants' common experiences.

Review data from the questions posed to participants. It is recommended that researchers review the answers and highlight "significant statements," phrases, or quotes that explain how participants experienced the phenomenon. The researcher can then group these significant statements into clusters of meaning, that is, patterns or key elements shared across participants.

Write a textural description of what the participants experienced, based on the answers and themes from the two main questions. The answers are also used to describe the characteristics and context that influenced how the participants experienced the phenomenon; this is called imaginative variation or structural description. Researchers should also write about their own experiences and the contexts or situations that influenced them.

Write a composite description from the structural and textural descriptions that presents the "essence" of the phenomenon, called the essential, invariant structure.
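For researchers who keep coded excerpts in a spreadsheet or export them from analysis software, the clustering step can be pictured in a few lines of Python. This is a minimal sketch under stated assumptions: the `SignificantStatement` record, the `cluster_statements` and `shared_themes` functions, the two-participant threshold, and the sample quotes about anger are all hypothetical, and each theme label is assumed to have already been assigned by the researcher. The code only organizes statements; it does not interpret them, and it does not reproduce the behavior of ATLAS.ti or any other package.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SignificantStatement:
    participant: str  # hypothetical participant ID, e.g. "P1"
    text: str         # the highlighted phrase or quote
    theme: str        # cluster of meaning assigned by the researcher (assumed)


def cluster_statements(statements):
    """Group significant statements by the theme (cluster of meaning) assigned to them."""
    clusters = defaultdict(list)
    for statement in statements:
        clusters[statement.theme].append(statement)
    return dict(clusters)


def shared_themes(clusters, min_participants=2):
    """Return, sorted, the themes voiced by at least `min_participants` distinct participants."""
    return sorted(
        theme
        for theme, items in clusters.items()
        if len({s.participant for s in items}) >= min_participants
    )


# Hypothetical statements about the phenomenon of anger, for illustration only.
statements = [
    SignificantStatement("P1", "I felt my chest tighten before I spoke", "physical response"),
    SignificantStatement("P2", "My hands were shaking", "physical response"),
    SignificantStatement("P2", "I kept replaying what he said", "rumination"),
]
clusters = cluster_statements(statements)
print(shared_themes(clusters))  # ['physical response']
```

Raising or lowering `min_participants` changes how strictly "shared" is defined; that threshold is a judgment the researcher should justify rather than a fixed rule.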

A phenomenological approach to a research design requires strict and careful selection of participants, and bracketing personal experiences can be difficult to implement. The researcher must decide how, and to what extent, their own knowledge will be introduced. The approach also requires some understanding and identification of its broader philosophical assumptions.

Grounded theory is used in a research design when the goal is to inductively develop a theory "grounded" in data that has been systematically gathered and analyzed. Starting from data collection, researchers identify characteristics, patterns, themes, and relationships, gradually forming a theoretical framework that explains relevant processes, actions, or interactions grounded in the observed reality. A grounded theory study goes beyond description; its objective is to generate a theory, an abstract analytical scheme of a process. A theory does not come "out of nothing"; it is constructed from systematically collected data. We suggest the following steps when using a grounded theory approach in a research design:

Determine whether grounded theory is best suited to your research problem. Grounded theory is a good choice when no existing theory explains the process of interest.

Develop questions that aim to understand how individuals experienced or enacted the process (e.g., What was the process? How did it unfold?). Data collection and analysis occur in tandem, so that researchers can ask more detailed questions that shape further analysis, such as: What was the focal point of the process (central phenomenon)? What influenced or caused this phenomenon to occur (causal conditions)? What strategies were employed during the process? What effect did it have (consequences)?

Gather relevant data about the topic in question. Data gathering usually involves interview questions, although other forms of data can also be collected, such as observations, documents, and audio-visual materials from different groups.

Carry out the analysis in stages. Grounded theory analysis begins with open coding, in which the researcher forms codes that emerge inductively from the data rather than from preconceived categories. Researchers can thus identify specific properties and dimensions relevant to their research question.

Assemble the data in new ways and proceed to axial coding . Axial coding involves using a coding paradigm or logic diagram, such as a visual model, to systematically analyze the data. Begin by identifying a central phenomenon, which is the main category or focus of the research problem. Next, explore the causal conditions, which are the categories of factors that influence the phenomenon. Specify the strategies, which are the actions or interactions associated with the phenomenon. Then, identify the context and intervening conditions—both narrow and broad factors that affect the strategies. Finally, delineate the consequences, which are the outcomes or results of employing the strategies.

Use selective coding to construct a "storyline" that links the categories together. Alternatively, the researcher may formulate propositions or theory-driven questions that specify predicted relationships among these categories.

Develop and visually present a matrix that clarifies the social, historical, and economic conditions influencing the central phenomenon. This optional step encourages viewing the model from the narrowest to the broadest perspective.

Write a substantive-level theory that is closely related to a specific problem or population. This step is optional but provides a focused theoretical framework that can later be tested with quantitative data to explore its generalizability to a broader sample.

Allow theory to emerge through the memo-writing process, where ideas about the theory evolve continuously throughout the stages of open, axial, and selective coding.
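The axial coding paradigm described in the steps above is essentially a structured template, so it can be sketched as a small data structure. The example below is a hypothetical illustration, not part of Strauss and Corbin's method or of any analysis software: the `AxialCodingModel` class, its field names, the `missing_components` check, the template sentence in `draft_storyline`, and the workplace categories are all assumptions made for demonstration. The draft storyline is only a starting point for selective coding; the actual storyline comes from the researcher's analysis and memos.

```python
from dataclasses import dataclass, field


@dataclass
class AxialCodingModel:
    """Hypothetical record of one paradigm model used during axial coding.

    Each list holds category labels produced during open coding; choosing
    and relating those labels remains the researcher's interpretive work.
    """
    central_phenomenon: str
    causal_conditions: list[str] = field(default_factory=list)
    strategies: list[str] = field(default_factory=list)
    context: list[str] = field(default_factory=list)
    intervening_conditions: list[str] = field(default_factory=list)
    consequences: list[str] = field(default_factory=list)

    def missing_components(self) -> list[str]:
        """Return the paradigm components that have no categories assigned yet."""
        components = {
            "causal_conditions": self.causal_conditions,
            "strategies": self.strategies,
            "context": self.context,
            "intervening_conditions": self.intervening_conditions,
            "consequences": self.consequences,
        }
        return [name for name, categories in components.items() if not categories]

    def draft_storyline(self) -> str:
        """Join the categories into a rough storyline to revise during selective coding."""
        return (
            f"{' and '.join(self.causal_conditions)} lead to {self.central_phenomenon}; "
            f"in a context of {' and '.join(self.context)}, and shaped by "
            f"{' and '.join(self.intervening_conditions)}, people respond by "
            f"{' and '.join(self.strategies)}, resulting in {' and '.join(self.consequences)}."
        )


# Hypothetical categories, for illustration only.
model = AxialCodingModel(
    central_phenomenon="adapting to a new workplace",
    causal_conditions=["unfamiliar routines"],
    strategies=["seeking a mentor"],
    context=["a busy hospital ward"],
    intervening_conditions=["staff shortages"],
)
print(model.missing_components())  # ['consequences']
model.consequences.append("growing confidence")
print(model.draft_storyline())
```

Keeping each component as a separate list makes gaps in the model visible, which can prompt the researcher to return to the data, since collection and analysis proceed in tandem.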

The researcher should initially set aside any preconceived theoretical ideas to allow for the emergence of analytical and substantive theories. This is a systematic research approach, particularly when following the methodological steps outlined by Strauss and Corbin (1990). For those seeking more flexibility in their research process, the approach suggested by Charmaz (2006) might be preferable.