Open Computer Vision Library

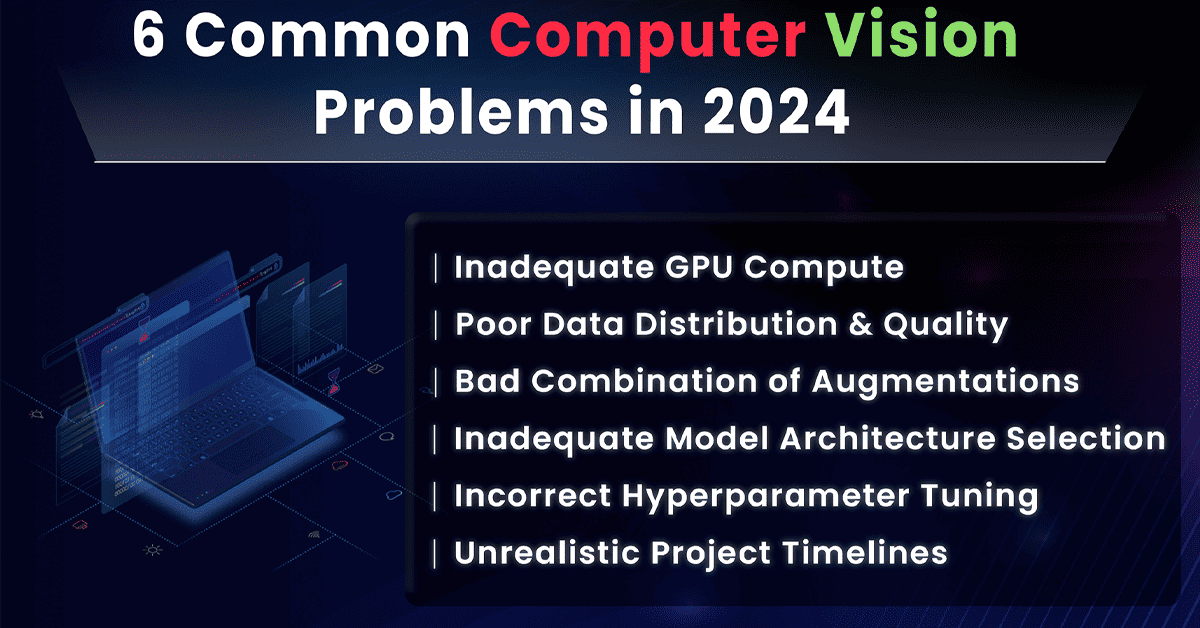

Your 2024 Guide to the Top 6 Computer Vision Problems

bharat | February 14, 2024 | AI Careers

Tags: common computer vision problems, computer vision, computer vision problems, deep learning

Introduction

Computer Vision is a field of Artificial Intelligence that has seen a huge surge in demand in recent years. We owe this to the incredible computing power we have today and the vast availability of data. We’ve all used a Computer Vision application in some form or another in our daily lives, say the face unlock on our mobile devices or the filters we use on Instagram and Snapchat. But despite such awesome capabilities, numerous factors constrain its implementation. In this read, we discuss the common Computer Vision problems, why they arise, and how they can be tackled.

Table of Contents

- Introduction

- Why do problems arise in Computer Vision?

- Common Computer Vision Problems

- Conclusion

Why do problems arise in Computer Vision?

Computer Vision systems pose many technical problems, for instance, the inherent complexity of interpreting visual data. Overcoming such issues helps us develop robust and adaptable vision systems. In this section, we’ll delve into why computer vision problems arise.

Visual Data Diversity

The diversity in visual representation, say variations in illumination, perspective, or occlusion of objects, poses a big challenge. Models need to be robust to these variations so that the same object is recognized consistently across conditions.

Dimensional Complexity

With an image often composed of millions of pixels, dimensional complexity becomes another barrier one needs to cross. This could be done through techniques such as downsampling, feature extraction, or dimensionality reduction.

Dataset Integrity

The integrity of visual data can be compromised by compression artifacts or sensor noise. A balance needs to be struck between noise reduction and the preservation of features.

Internal Class Variations

Then, there is variability within the same classes. What does that mean? Well, the diversity of object categories poses a challenge for algorithms to identify unifying characteristics amongst a ton of variations. This requires distilling the quintessential attributes that define a category while disregarding superficial differences.

Real-time Decision Making

Real-time processing can be demanding. This comes into play when making decisions for autonomous navigation or interactive augmented reality, which need computational frameworks and algorithms optimized for swift and accurate analysis.

Perception in Three Dimensions

This is not a problem per se but rather a crucial task: inferring three-dimensionality. This involves extracting three-dimensional insights from two-dimensional images. Here, algorithms must resolve the ambiguity of depth and spatial relationships.

Labeled Dataset Scarcity

The scarcity of annotated data or extensively labeled datasets poses another problem while training state-of-the-art models. This can be eased using unsupervised and semi-supervised learning. Another reason why a computer vision problem could arise is that vision systems are susceptible to making wrong predictions, which can go unnoticed by researchers. While we are on the topic of labeled dataset scarcity, we must also be familiar with improper labeling. This occurs when the label attached to an object is incorrect. It can result in inaccurate predictions during model deployment.

Ethical Considerations

Ethical considerations are paramount in Artificial Intelligence, and it is no different in Computer Vision. Concerns include biases in deep learning models and discriminatory outcomes. This emphasizes the need for a careful approach to dataset curation and algorithm development.

Multi-modal Implementation

Integrating computer vision into broader technological ecosystems like NLP or Robotics requires not just technical compatibility but also a shared understanding. We’ve only scratched the surface of the causes of different machine vision issues. Now, we will move into the common computer vision problems and their solutions.

Common Computer Vision Problems

When working with deep learning algorithms and models, one tends to run into multiple problems before robust and efficient systems can be brought to life. In this section, we’ll discuss the common computer vision problems one encounters and their solutions.

Inadequate GPU Compute

GPUs, or Graphics Processing Units, were initially designed for accelerated graphical processing. Nvidia has been at the top of the leaderboard in the GPU scene. So what does a GPU have to do with Computer Vision? Well, this past decade has seen a surge in demand for GPUs to accelerate machine learning and deep learning training.

Finding the right GPU can be a daunting task. Big GPUs come at a premium price, and if you are thinking of moving to the cloud, cloud GPUs see frequent shortages. GPU usage needs to be optimized since most of us do not have access to clusters of machines.

Memory is one of the most crucial aspects when choosing the proper GPU. Low vRAM (Low memory GPUs) can severely hinder the progress of big computer vision and deep learning projects.
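To get a feel for why memory matters, here is a rough back-of-the-envelope sketch. The model size of 25 million parameters is a hypothetical example, and the estimate deliberately ignores activations, which often dominate memory use in vision models. It assumes an Adam-style optimizer that keeps two extra buffers per parameter.

```python
MIB = 1024 ** 2  # bytes in one mebibyte


def param_memory_mib(n_params, bytes_per_param):
    """Memory needed to store n_params values of the given width, in MiB."""
    return n_params * bytes_per_param / MIB


n_params = 25_000_000  # hypothetical model size, for illustration only

fp32_weights = param_memory_mib(n_params, 4)  # 32-bit floats use 4 bytes
fp16_weights = param_memory_mib(n_params, 2)  # 16-bit floats use 2 bytes

# Training in FP32 with an Adam-style optimizer keeps roughly four copies:
# weights, gradients, and two moment buffers (activations not included).
adam_training_state = 4 * fp32_weights

print(f"FP32 weights:        {fp32_weights:8.1f} MiB")
print(f"FP16 weights:        {fp16_weights:8.1f} MiB")
print(f"FP32 training state: {adam_training_state:8.1f} MiB (before activations)")
```

Treat these numbers as a lower bound: batch size and image resolution determine how much activation memory comes on top.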

Besides memory, another aspect to watch is GPU utilization, which is the percentage of the graphics card in use at a particular point in time.

So, what are some of the causes of poor GPU utilization?

- Some vision applications may need large amounts of memory bandwidth, meaning the GPU may have a long wait time for the data to be transferred to or from the memory. This can be mitigated by optimizing memory access patterns.

- A few computational tasks can be less intensive, meaning the GPU may not be used to the fullest. This could be conditional logic or other operations which are not apt for parallel processing.

- Another issue is the CPU not being able to supply data to the GPU fast enough, resulting in GPU idling. Asynchronous data transfers can fix this.

- Some operations like memory allocation or explicit synchronization can stop the GPU altogether and cause it to idle, which is, again, poor GPU utilization.

- Another cause of poor GPU utilization is inefficient parallelization of threads where the workload is not evenly distributed across all the cores of the GPU.
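The data-starvation cause in the list above can be illustrated with a small prefetching sketch: a background thread prepares the next batches while the consumer (standing in for the GPU) works on the current one. The names `prefetch` and `slow_loader` are hypothetical, and this is only a minimal illustration of the idea, not how any particular framework implements it.

```python
import queue
import threading

_SENTINEL = object()  # marks the end of the stream


def prefetch(batches, buffer_size=2):
    """Yield items from `batches` while a background thread loads ahead."""
    q = queue.Queue(maxsize=buffer_size)

    def producer():
        for batch in batches:
            q.put(batch)  # blocks when the buffer is full
        q.put(_SENTINEL)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = q.get()  # blocks until the producer has something ready
        if item is _SENTINEL:
            break
        yield item


def slow_loader(n):
    """Hypothetical stand-in for reading and decoding images on the CPU."""
    for i in range(n):
        yield [i] * 4  # a fake "batch"


loaded = list(prefetch(slow_loader(5)))
print(loaded)
```

In practice, deep learning frameworks ship their own loaders for this purpose, for example PyTorch’s `DataLoader` with worker processes, so a hand-rolled version like this is rarely needed. Note that this sketch does not forward exceptions raised inside the producer thread.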

We need to effectively monitor and control GPU utilization, as it can significantly improve the model’s training performance. This can be made possible using tools like the NVIDIA System Management Interface (nvidia-smi), which offers real-time data on multiple aspects of the GPU, like memory consumption, power usage, and temperature. Let us look at how we can better optimize GPU usage.

- Batch size adjustments: Larger batch sizes consume more memory but can also improve overall throughput. One step to boost GPU utilization is modifying the batch size while training the model. Testing various batch sizes helps us strike the right balance between memory usage and performance.

- Mixed precision training: Another solution to enhance the efficiency of the GPU is mixed precision training. It uses lower-precision data types, such as 16-bit floats, for calculations that can run on Tensor Cores. This method reduces computation time and memory demands, usually with little or no loss in accuracy.

- Distributed Training: Another way to handle heavy workloads is to distribute them across multiple GPUs. Tools such as MirroredStrategy in TensorFlow or DistributedDataParallel in PyTorch simplify the implementation of distributed training.
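To check whether adjustments like these actually raise utilization, the nvidia-smi readings mentioned earlier can be polled from a script. The sketch below assumes `nvidia-smi` is on the PATH and that every queried field is numeric (fields can come back as `[N/A]` on some devices, which this parser does not handle). The helper names are hypothetical.

```python
import subprocess

QUERY = "utilization.gpu,memory.used,memory.total"


def parse_gpu_stats(csv_text):
    """Parse the output of `--format=csv,noheader,nounits` into dicts."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, used, total = (float(value) for value in line.split(","))
        stats.append(
            {"util_pct": util, "mem_used_mib": used, "mem_total_mib": total}
        )
    return stats


def read_gpu_stats():
    """Query nvidia-smi once; requires an NVIDIA driver to be installed."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_stats(result.stdout)


# Example with made-up readings for two GPUs, so it runs without a GPU.
sample = "87, 10240, 24576\n12, 512, 24576\n"
parsed = parse_gpu_stats(sample)
for index, gpu in enumerate(parsed):
    print(index, gpu)
```

Calling `read_gpu_stats()` in a loop during training, once per few seconds, gives a simple log to compare different batch sizes or precision settings against.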

Two standard series of GPUs are the RTX and the GTX series, where RTX is the newer, more powerful series while GTX is the older one. Before investing in either of them, it is essential to research them. A few factors to note when choosing the right GPU include the project requirements and the memory needed for the computations. A good starting point is to have at least 8GB of video RAM for seamless deep learning model training.

GeForce RTX 20-Series

If you are on a budget, then there are alternatives like Google Colab or Azure that offer free access to GPUs for a limited time period. So you can complete your vision projects without needing to invest in a GPU.

As seen, hardware constraints around GPUs are pretty common when training models, but there are loads of ways to work around them.

Poor Data Distribution and Quality

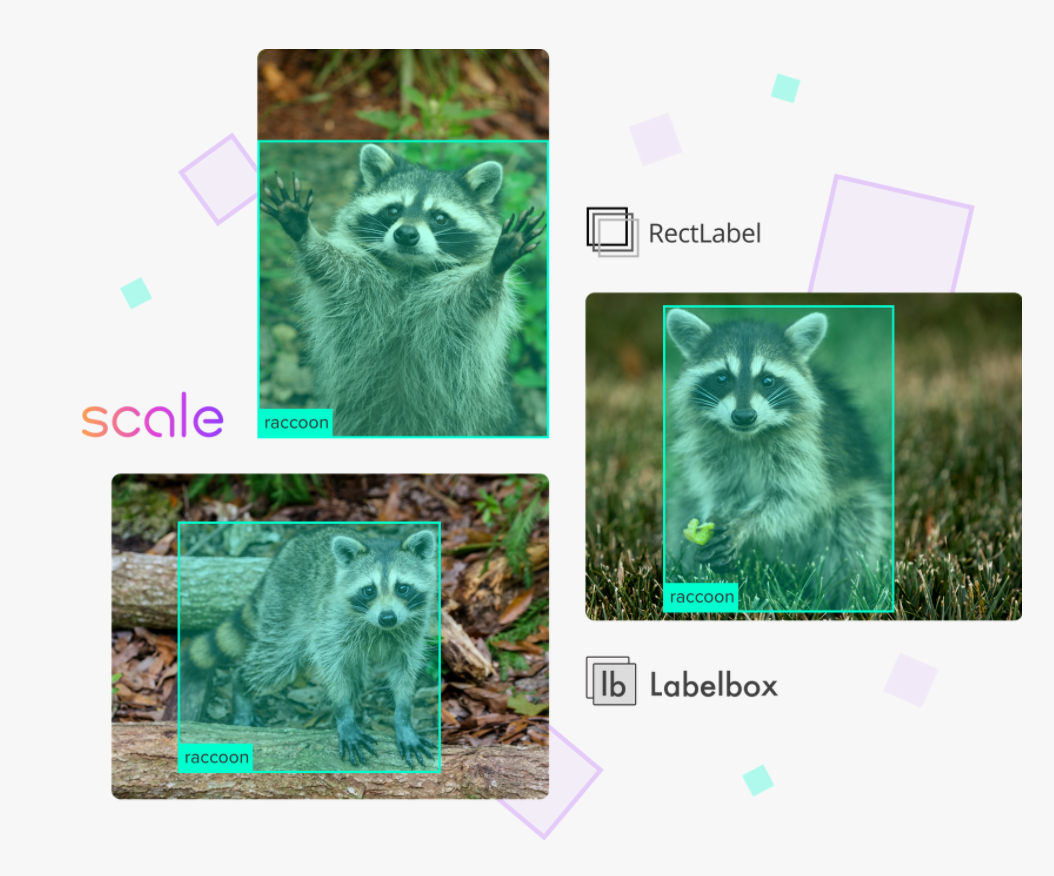

The quality of the dataset being fed into your vision model is essential. Every change made to the annotations should translate to better performance in the project. Rectifying inaccuracies in labels and annotations can drastically improve the overall accuracy of production models.

Poor quality data within image or video datasets can pose a big problem to researchers. Another issue can be not having access to quality data, which will cause us to be unable to produce the desired output.

Although there are AI-assisted automation tools for labeling data, improving the quality of these datasets can be time-consuming. With thousands of images and videos in a dataset, looking through each of them on a granular level for inaccuracies can be a painstaking task.

Suboptimal data distribution can significantly undermine the performance and generalization capabilities of these models. Let us look at some causes of poor data distribution or errors and their solutions.

Mislabeled Images

Mislabeled images occur when there is a conflict between the assigned categorical or continuous label and the actual visual content depicted within the image. This could stem from

- Manual annotation processes

- Algorithmic misclassifications in automated labeling systems, or

- Ambiguous visual representations susceptible to subjective interpretations

If mislabeled images exist within training datasets, it can lead to incorrect feature-label associations within the learning algorithms. This could cause degradation in model accuracy and a diminished capacity for the model to generalize from the training data to novel, unseen datasets.

To overcome mislabeled images, we can

- Implement rigorous dataset auditing protocols

- Leverage consensus labeling through multiple annotators to ensure label accuracy

- Implement advanced machine learning algorithms that can identify and correct mislabeled instances through iterative refinement processes
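Consensus labeling from the list above can be sketched as a simple majority vote that also flags images where annotators disagree too much. The file names, labels, and the 0.6 agreement threshold are made-up assumptions for illustration.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.6):
    """Return (label, agreement); label is None when agreement is too low."""
    counts = Counter(votes)
    label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(votes)
    return (label if agreement >= min_agreement else None), agreement


# Hypothetical annotations from three annotators per image.
annotations = {
    "img_001.jpg": ["cat", "cat", "cat"],
    "img_002.jpg": ["cat", "dog", "cat"],
    "img_003.jpg": ["dog", "cat", "fox"],
}

needs_review = [
    name
    for name, votes in annotations.items()
    if consensus_label(votes)[0] is None
]
print(needs_review)
```

Images that land in `needs_review` are good candidates for a manual audit, since disagreement often points to ambiguous content rather than careless annotators.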

Missing Labels

Another issue one can face is when a subset of images within a dataset does not have any labels. This could be due to

- oversight in the annotation process

- the prohibitive scale of manual labeling efforts, or

- failures in automated detection algorithms to identify relevant features within the images

Missing labels can create biased training processes when a portion of a dataset is void of labels. Here, deep learning models are exposed to an incomplete representation of the data distribution, resulting in models performing poorly when applied to unlabeled data.

By leveraging semi-supervised learning techniques, we can mitigate the impact of missing labels. By utilizing both labeled and unlabeled data in model training, we can enhance the model’s exposure to the underlying data distribution. Also, by deploying more efficient detection algorithms, we can reduce the incidence of missing labels.

Unbalanced Data

Unbalanced data can take the form of certain classes that are significantly more prevalent than others, resulting in the disproportionate representation of classes.

Much like missing labels, training on unbalanced datasets can bias machine learning models towards the more frequently represented classes. This can drastically affect the model’s ability to accurately recognize and classify instances of underrepresented classes and can severely limit its applicability in scenarios requiring equitable performance across various classes.

Unbalanced data can be counteracted through techniques like

- Oversampling of minority classes

- Undersampling of majority classes

- Synthetic data generation via techniques such as Generative Adversarial Networks (GANs), or

- Implementation of custom loss functions
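Two of the techniques above can be sketched in a few lines: inverse-frequency class weights (one common ingredient of a custom weighted loss) and random oversampling of minority classes. The class names and counts are made up, and the weighting formula `n / (k * count)` is one widely used heuristic rather than the only option.

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}


def oversample_minority(samples, labels, seed=0):
    """Duplicate random minority samples until all classes match the largest."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    balanced = []
    for label, items in by_class.items():
        extra = rng.choices(items, k=target - len(items))
        balanced.extend((sample, label) for sample in items + extra)
    return balanced


# Hypothetical dataset: 90 "car" images and 10 "bicycle" images.
labels = ["car"] * 90 + ["bicycle"] * 10
samples = [f"img_{i:03d}.jpg" for i in range(len(labels))]

weights = balanced_class_weights(labels)
balanced = oversample_minority(samples, labels)
print(weights)
print(Counter(label for _, label in balanced))
```

Oversampling by duplication is best combined with augmentation, otherwise the model sees the exact same minority images many times.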

It is paramount that we address the challenges associated with poor data distribution and quality, as they can lead to poor model performance or biases. One can develop robust, accurate, and fair computer vision models by incorporating advanced algorithmic strategies and continuous model evaluation.

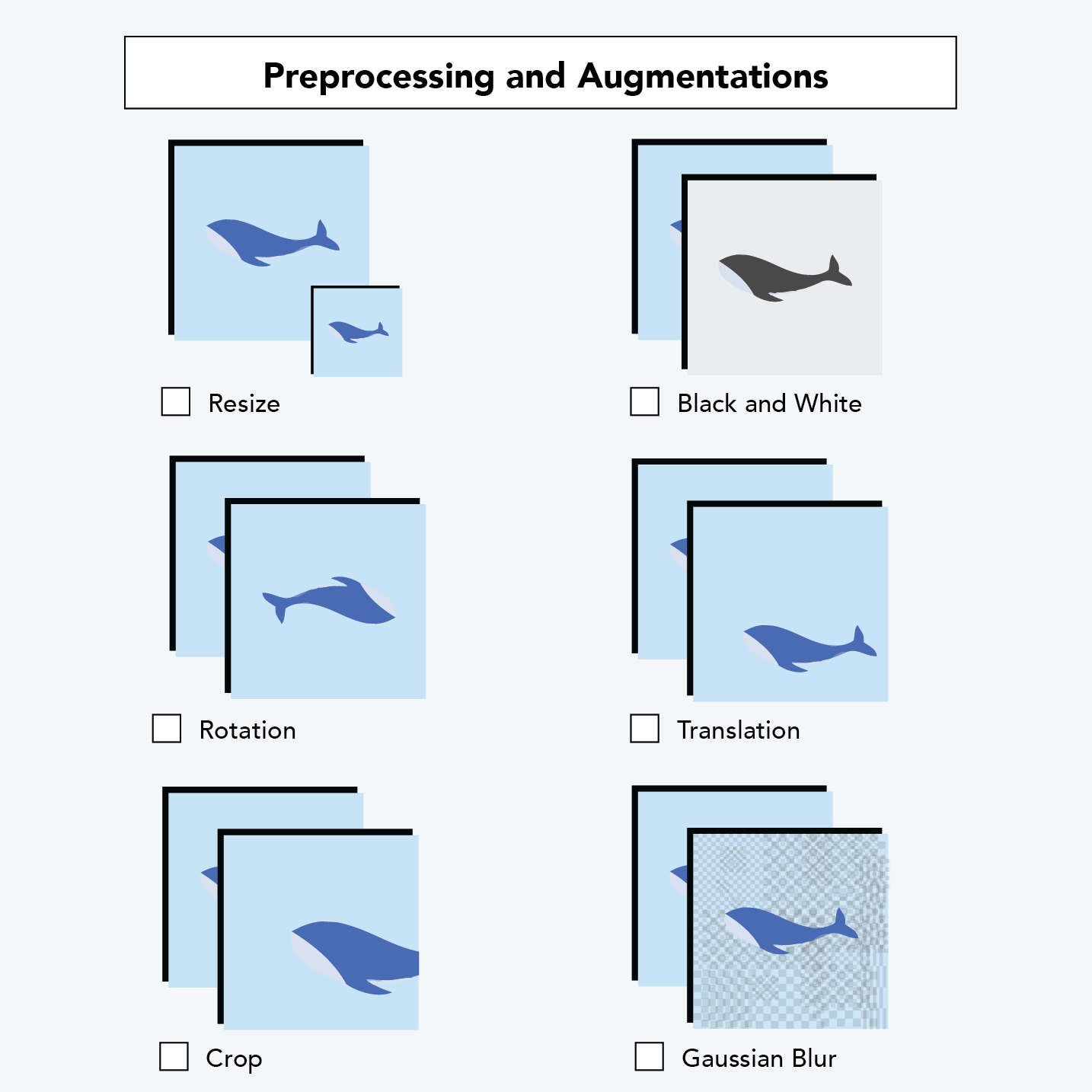

Bad Combination of Augmentations

A huge limiting factor while training deep learning models is the lack of large-scale labeled datasets. This is where Data Augmentation comes into the picture.

What is Data Augmentation? Data augmentation is the process of using image processing-based algorithms to distort data within certain limits and increase the number of available data points. It aids not only in increasing the data size but also in the model generalization for images it has not seen before. By leveraging Data Augmentation, we can limit data issues to some extent. A few data augmentation techniques include

- Image Shifts

- Horizontal Flips

- Translation

- Vertical Flips

- Gaussian noise

Data augmentation is done to generate a synthetic dataset that is larger than the original dataset. If the model encounters any issues in production, then augmenting the images to create a more extensive dataset will help it generalize better.

Augmented Images

Let us explore some of the reasons why bad combinations of augmentations in computer vision occur based on tasks.

Excessive Rotation

Excessive rotation can pose a problem for the model to learn the correct orientation of objects. This can mainly be seen with tasks like object detection when the objects are typically found in standard orientations (e.g., street signs) or some orientations are unrealistic.

Heavy Noise

Excessive addition of noise to images can be counterproductive for tasks that require recognizing subtle differences between classes, for instance, the classification of species in biology. The noise can conceal essential features.

Random Cropping

Random cropping can lead to the removal of some essential parts of the image that are critical for correct classification or detection. For instance, randomly cropping parts of medical images might remove pathological features critical for diagnosis.

Excessive Brightness

Making extreme adjustments to brightness or contrast can alter the appearance of critical features, for instance in diagnostic images, leading to misinterpretation by the model.

Aggressive Distortion

Suppose we apply aggressive geometric distortions (like extreme skewing or warping). In that case, they can significantly alter the appearance of text in images, making it difficult for models to recognize the characters accurately in optical character recognition (OCR) tasks.

Color Jittering

Color jittering is another issue one can come across when dealing with data augmentation. For any task where the key distinguishing feature is color, excessive modifications to color, like brightness, contrast, or saturation, can distort the natural color distribution of the objects and mislead the model.

Avoiding such excessive augmentations needs a good understanding of the needs and limitations of the models. Let us explore some standard guidelines to help avoid bad augmentation practices.

Understand the Task and Data

First, we need to understand the task at hand, for instance, whether it is classification or detection, and also the nature of the images. Then, we need to pick the apt form of augmentation. It is also good to understand the characteristics of your dataset. If your dataset already includes images in various orientations, excessive rotation might not be necessary.

Use of Appropriate Augmentation Libraries

Try utilizing libraries like Albumentations, imgaug, or TensorFlow’s and PyTorch’s built-in augmentation functionalities. They offer extensive control over the augmentation process, allowing us to specify the degree of augmentation that is applied.

Implement Conditional Augmentation

Dynamically adjust the intensity of augmentations based on the model’s performance or during different training phases.

Augmentation Parameters Fine-tuning

Find the right balance that improves model robustness without distorting the data beyond recognition. This can be achieved by carefully tuning the parameters.

Make incremental changes, start with minor augmentations, and gradually increase their intensity, monitoring the impact on model performance.

Optimize Augmentation Pipelines

When multiple augmentations are chained in a pipeline, the pipeline as a whole must be optimized. We must also ensure that combining augmentations does not lead to unrealistic images.

Use random parameters within reasonable bounds to ensure diversity without extreme distortion.
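The guidelines above (start small, increase gradually, stay within reasonable bounds) can be sketched as a parameter sampler with a single `strength` knob. The bounds below are illustrative assumptions, not recommended values, and the resulting parameters would be passed to whichever augmentation library you use.

```python
import random

# Illustrative upper limits at full strength; tune these for your own task.
BOUNDS = {
    "rotation_deg": (-15.0, 15.0),
    "brightness_delta": (-0.2, 0.2),
    "noise_std": (0.0, 0.05),
}


def sample_params(rng, strength=1.0):
    """Draw augmentation parameters, scaled by strength in [0, 1]."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return {
        name: rng.uniform(low * strength, high * strength)
        for name, (low, high) in BOUNDS.items()
    }


rng = random.Random(42)
# Ramp the strength up over training phases instead of starting at the maximum.
for strength in (0.25, 0.5, 1.0):
    print(strength, sample_params(rng, strength))
```

Tying `strength` to the training epoch, or to the validation score, is one simple way to implement the conditional augmentation described earlier.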

Validation and Experimentation

Regularly validate the model on a non-augmented validation set to ensure that augmentations are improving the model’s ability to generalize rather than memorize noise.

Experiment with different augmentation strategies in parallel to compare their impact on model performance.

As seen above, a ton of issues arise when dealing with data augmentation, like excessive brightness, color jittering, or heavy noise. But by applying augmentations such as cropping, image shifts, horizontal flips, and Gaussian noise in moderation and with the task in mind, we can curb bad combinations of augmentations.

Inadequate Model Architecture Selection

Selecting an inadequate model architecture is another common computer vision problem that can be attributed to many factors. The choice affects the overall performance, efficiency, and applicability of the model for specific computational tasks.

Let us discuss some of the common causes of poor model architecture selection.

Deep Neural Network Model Architecture Selection

Lack of Domain Understanding

A common issue is the lack of knowledge of the problem space or the requirements for the task. Different architectures suit different kinds of data. For instance, Convolutional Neural Networks (CNNs) are a natural fit for image data, whereas Recurrent Neural Networks (RNNs) are designed for sequential data. Having only a superficial understanding of the task nuances can lead to the selection of an architecture that is not aligned with the task requirements.

Computational Limitations

We must always keep in mind the computational resources we have available. Models that require high computational power and memory may not be viable for deployment. This could force the selection of simpler and less accurate models.

Data Constraints

Choosing the right architecture heavily depends on the volume and integrity of available data. Intricate models require voluminous datasets of high-quality, labeled data for effective training. In scenarios with data paucity, noise, or imbalance, a model with greater sophistication might not yield superior performance and could overfit.

Limited Familiarity with Architectural Paradigms

A lot of novel architectures and models are emerging with the huge strides made in deep learning. However, researchers often default to models they are familiar with, which may not be optimal for their desired outcomes. One must stay updated with the latest contributions in the realm of deep learning and computer vision to analyze the advantages and limitations of the new architectures.

Task Complexity Underestimation

Another cause for poor architecture selection is failing to accurately assess the complexity of the task. This may result in adopting simpler models that lack the ability to capture the essential features within the data. This can be attributed to an incomplete or missing exploratory data analysis, or to not fully acknowledging the data’s subtleties and variances.

Overlooking Deployment Constraints

The deployment environment has a significant influence on the architecture selection process. For real-time applications or deployment on devices with limited processing capabilities (e.g., smartphones, IoT devices), architectures optimized for memory and computation efficiency are necessary.

Managing these poor architectural selections requires being updated on the latest architectures, as well as a thorough understanding of the problem domain and data characteristics and a careful consideration of the pragmatic constraints associated with model deployment and functionality.

Now that we’ve explored the possible causes of inadequate model architecture, let us see how to avoid them.

Balanced Model

Two common challenges one could face are having an overfitting model, which is too complex and overfits the data, or having an underfitting model, which is too simple and fails to infer patterns from the data. We can leverage techniques like regularization or cross-validation to optimize the models’ performance and avoid overfitting or underfitting.
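As a minimal sketch of the cross-validation idea mentioned above, the helper below splits sample indices into k folds so that every sample is used for validation exactly once. The function name and the choice of 10 samples and 3 folds are assumptions for illustration; the training and scoring of a model on each fold is left out.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, val_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once so folds are not ordered
    base, remainder = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < remainder else 0)
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


folds = list(k_fold_indices(n_samples=10, k=3))
for train, val in folds:
    print("train:", sorted(train), "val:", sorted(val))
```

A large gap between the training score and the average validation score across folds hints at overfitting, while poor scores on both hint at underfitting.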

Understanding Model Limitations

Next, we need to be well aware of the limitations and assumptions of the different algorithms and models. Different models have different strengths and weaknesses. They all require different conditions or properties of the data for optimal performance. For instance, some models are sensitive to noise or outliers, and some are better suited to particular tasks like detection, segmentation, or classification. We must know the theory and logic behind every model and check if the data fulfills the desired conditions.

Curbing Data Leakage

Data leakage occurs when information from the test dataset is used to train the model. This can result in biased estimates of the model’s accuracy and performance. A good rule of thumb is to split the data into training and test datasets before moving to any of the steps like preprocessing or feature engineering. One should also avoid using features that are influenced by the target variable.
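The "split first, preprocess second" rule can be sketched with a simple normalization step. The per-image values below are made up; the point is that the mean and standard deviation are computed from the training split only and then reused unchanged on the test split.

```python
import random
import statistics


def train_test_split(values, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and split it into (train, test)."""
    rng = random.Random(seed)
    shuffled = values[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical feature: mean pixel intensity of each image.
pixel_means = [float(v) for v in range(100)]

# 1. Split BEFORE computing any statistics.
train, test = train_test_split(pixel_means)

# 2. Fit the normalization on the training split only.
mu = statistics.fmean(train)
sigma = statistics.pstdev(train)

# 3. Apply the same training statistics to both splits.
train_norm = [(v - mu) / sigma for v in train]
test_norm = [(v - mu) / sigma for v in test]

print(f"train mean after normalization: {statistics.fmean(train_norm):.3f}")
print(f"test mean after normalization:  {statistics.fmean(test_norm):.3f}")
```

Computing `mu` and `sigma` on the full dataset instead would let information about the test images leak into training, which is exactly what we want to avoid.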

Continual Assessment

A common misunderstanding is when researchers assume that deployment is the last stage of the project. We need to continually monitor, analyze, and improve on the deployed models. The accuracy of vision models can decline over time as they generalize based on a subset of data. Additionally, they can struggle to adapt to complex user inputs. These reasons further emphasize the need to monitor models post-deployment.

A few steps for continual assessment and improvement include

- Implementation of a robust monitoring system

- Gathering user feedback

- Leveraging the right tools for optimal monitoring

- Referring to real-world scenarios

- Addressing underlying issues by analyzing the root cause of loss of model efficiency or accuracy
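A monitoring system along the lines of the steps above can start out very small. The sketch below keeps a rolling window of prediction outcomes and raises a flag when accuracy drops below a baseline. The class name, window size, baseline, and tolerance are all assumptions, and it presumes that ground-truth feedback is available for at least a sample of production predictions.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy over the most recent `window` predictions."""

    def __init__(self, window=100, baseline=0.90, tolerance=0.05):
        self.results = deque(maxlen=window)
        self.baseline = baseline
        self.tolerance = tolerance

    def record(self, correct):
        """Store whether one production prediction was correct."""
        self.results.append(bool(correct))

    def rolling_accuracy(self):
        """Return accuracy over the window, or None if nothing is recorded."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def degraded(self):
        """True once the window is full and accuracy fell below the limit."""
        if len(self.results) < self.results.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(window=10)
for _ in range(10):
    monitor.record(True)
print(monitor.rolling_accuracy(), monitor.degraded())

for _ in range(3):
    monitor.record(False)  # recent predictions start to fail
print(monitor.rolling_accuracy(), monitor.degraded())
```

When `degraded()` turns true, that is the cue to start the root-cause analysis from the last step in the list.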

Much like other computer vision problems, one must be diligent in selecting the right model architecture by assessing the computing resources at one’s disposal and the data constraints, possessing good domain expertise, and finding a model that neither overfits nor underfits. Following all these steps will curb poor selections in model architecture.

Incorrect Hyperparameter Tuning

Before we delve into the reasons behind poor hyperparameter tuning and its solutions, let us look at what it is.

What is a Hyperparameter?

Hyperparameters are the configurations of a model that are not learned from the data but are instead set before training. They steer the learning process and affect how the model behaves during training and prediction. Learning rate, batch size, and number of layers are a few examples of hyperparameters. They can be set based on the computational resources, the complexity of the task, and the characteristics of the dataset.

Incorrect hyperparameter tuning in deep learning can adversely affect model performance, training efficiency, and generalization ability, since hyperparameters are critical to both the behavior of the training algorithm and the performance of the trained model. Here are some of the downsides of incorrect hyperparameter tuning.

Overfitting or Underfitting

If hyperparameters are not tuned correctly, a model may overfit, that is, capture noise in the training data as a legitimate pattern. Examples include too many layers or neurons without appropriate regularization, which gives the model too high a capacity.

Underfitting, on the other hand, can result when the model is too simple to capture the underlying structure of the data due to incorrect tuning. Alternatively, the training process might halt before the model has learned enough from the data due to a low model capacity or a low learning rate.

Underfitting & Overfitting

Poor Generalization

Incorrectly tuned hyperparameters can lead to a model that performs well on the training data but poorly on unseen data. This indicates that the model has not generalized well, which is often a result of overfitting.

Inefficient Training

A number of hyperparameters control the efficiency of the training process, including batch size and learning rate. If these parameters are not adjusted appropriately, the model will take much longer to train, requiring more computational resources than necessary. If the learning rate is too small, convergence might be slowed down, but if it is too large, the training process may oscillate or diverge.

Difficulty in Convergence

An incorrect setting of the hyperparameters can make convergence difficult. For example, an excessively high learning rate can cause the model’s loss to fluctuate rather than decrease steadily.
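The effect of the learning rate on convergence can be seen on a toy problem. The sketch below runs plain gradient descent on f(x) = x², whose gradient is 2x, with three illustrative learning rates. This is a deliberately simple stand-in for a real loss surface.

```python
def gradient_descent(lr, steps=20, x0=1.0):
    """Minimize f(x) = x**2 from x0 and return the final x."""
    x = x0
    for _ in range(steps):
        grad = 2.0 * x  # derivative of x**2
        x -= lr * grad
    return x


# Each update multiplies x by (1 - 2 * lr), so:
#   lr = 0.01 shrinks x slowly, lr = 0.4 shrinks it quickly,
#   lr = 1.1 flips the sign and grows it at every step (divergence).
results = {lr: gradient_descent(lr) for lr in (0.01, 0.4, 1.1)}
for lr, final_x in results.items():
    print(f"lr={lr:<5} final x = {final_x:.6g}")
```

On real models the thresholds are not known in advance, which is why learning rate schedules and short trial runs are commonly used to find a workable range.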

Resource Wastage

It takes considerable computational power and time to train deep learning models. Incorrect hyperparameter tuning can lead to a number of unnecessary training runs.

Model Instability

In some cases, hyperparameter configurations can lead to model instability, where small changes in the data or initialization of the model can lead to large variations in performance.

Systematic hyperparameter optimization strategies are crucial to mitigate these issues, since hyperparameters significantly affect the performance and the accuracy of the model.

Let us explore some of the hyperparameters that are commonly tuned.

- Learning Rate: Finding an optimal learning rate is crucial to prevent the model from updating its parameters too fast or too slowly during training, which helps avoid both unstable training and underfitting.

- Batch Size: During model training, batch size determines how many samples are processed during each iteration. This influences the training dynamics, memory requirements, and generalization capability of the model. The batch size should be selected in accordance with the computational resources and the characteristics of the dataset on which the model will be trained.

- Network Architecture: Network architecture outlines the blueprint of a neural network, detailing the arrangement and connection of its layers. This includes specifying the total number of layers, identifying the variety of layers (like convolutional, pooling, or fully connected layers), and how they’re set up. The choice of network architecture is crucial and should be tailored to the task’s complexity and the computational resources at hand.

- Kernel Size: In the realm of convolutional neural networks (CNNs), kernel size is pivotal as it defines the scope of the receptive field for extracting features. This choice influences how well the model can discern detailed and spatial information. Adjusting the kernel size is a balancing act to ensure the model effectively captures both local and broader features.

- Dropout Rate: Dropout is a strategy to prevent overfitting by randomly omitting a proportion of the neural network’s units during the training phase. The dropout rate is the likelihood of each unit being omitted. By doing this, it pushes the network to learn more generalized features and lessens its reliance on any single unit.

- Activation Functions: These functions bring non-linearity into the neural network, deciding the output for each node. Popular options include ReLU (Rectified Linear Unit), sigmoid, and tanh. The selection of an activation function is critical as it influences the network’s ability to learn complex patterns and affects the stability of its training.

- Data Augmentation Techniques: Techniques like rotation, scaling, and flipping are used to introduce more diversity to the training data, enhancing its range. Adjusting hyperparameters related to data augmentation, such as the range of rotation angles, scaling factors, and the probability of flipping, can fine-tune the augmentation process. This, in turn, aids the model in generalizing better to new, unseen data.

Data Augmentation

- Optimization Algorithm: The selection of an optimization algorithm affects how quickly and smoothly the model learns during training. Popular algorithms include stochastic gradient descent (SGD), Adam, and RMSprop. Adjusting hyperparameters associated with these algorithms, such as momentum, learning rate decay, and weight decay, plays a significant role in optimizing the training dynamics.
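One simple systematic strategy for tuning hyperparameters like the ones listed above is random search. The sketch below samples configurations from assumed ranges and keeps the best one. The search ranges are illustrative, and `toy_evaluate` is a hypothetical stand-in for "train the model and return a validation score".

```python
import math
import random


def sample_config(rng):
    """Draw one configuration; the learning rate is sampled on a log scale."""
    return {
        "learning_rate": 10 ** rng.uniform(-5, -1),
        "batch_size": rng.choice([16, 32, 64, 128]),
        "dropout_rate": rng.uniform(0.0, 0.5),
    }


def random_search(evaluate, n_trials=20, seed=0):
    """Return the best (config, score) found over n_trials random draws."""
    rng = random.Random(seed)
    best_config, best_score = None, -math.inf
    for _ in range(n_trials):
        config = sample_config(rng)
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_evaluate(config):
    """Hypothetical score that peaks at lr = 1e-3 and dropout = 0.2."""
    lr_penalty = abs(math.log10(config["learning_rate"]) + 3)
    dropout_penalty = abs(config["dropout_rate"] - 0.2)
    return -(lr_penalty + dropout_penalty)


best_config, best_score = random_search(toy_evaluate)
print(best_config, best_score)
```

Sampling the learning rate on a log scale is a deliberate choice here, since useful values typically span several orders of magnitude.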

Unrealistic Project Timelines

This is a broader topic that affects all fields of study and does not pertain only to Computer Vision and Deep Learning. Unrealistic timelines not only affect our psychological state of mind but also destroy our morale. One main reason could be the individual setting unrealistic deadlines, often without being able to gauge the time or effort needed to complete the project or task at hand. This can lead to low morale and lower self-esteem.

Now, bringing our attention to the realm of Computer Vision, deadlines could range from time taken for collecting the data to deploying models. How do we tackle this? Let us look at a few steps we can take not only to keep us on time but also to deploy robust and accurate vision systems.

Define your Goals

Before we get into the nitty gritty of a Computer Vision project, we need to have a clear understanding of what we wish to achieve through it. This means identifying and defining the end goal, objectives, and milestones. This also needs to be communicated to the concerned team, which could be our colleagues, clients, and sponsors. This will eliminate any unrealistic timelines or misalignments.

Once we set our objectives, we come to our second step, planning and prioritization. This involves understanding and visualizing our workflow, choosing the appropriate tools, estimating costs and timelines, and analyzing the available resources, be they hardware or software. We must allocate them optimally, managing any dependencies or risks and questioning any assumptions that may affect the project.

Once we’ve got our workflow down, we begin the implementation and testing phase, where we code, debug, and validate the inferences made. One must remember the best practices of model development, documentation, code review, and framework testing. This could involve the appropriate usage of tools and libraries like OpenCV, PyTorch, TensorFlow, or Keras to build models for the tasks we train them for, which could be segmentation, detection, or classification, and to evaluate the models and their accuracy.

This brings us to our final step, project review. We make inferences from the results, analyze the feedback, and make improvements to the system. We also need to check how aligned it is with the suggestions given by sponsors or users and make iterations, if any.

Keeping up with project deadlines can be a daunting task at first, but with more experience and the right mindset, we’ll have better time management and greater success in every upcoming project.

We’ve come to the end of this fun read. We’ve covered the six most common computer vision problems one encounters on their journey, ranging from the inadequacies of GPU computing all the way to incorrect hyperparameter tuning. We’ve comprehensively delved into their causes and how they can all be overcome by leveraging different methods and techniques. More fun reads in the realm of Artificial Intelligence, Deep Learning, and Computer Vision are coming your way. See you guys in the next one!

Guide to Computer Vision: Why It Matters and How It Helps Solve Problems

This post was written to enable the beginner developer community, especially those new to computer vision and computer science. NVIDIA recognizes that solving the world’s visual computing challenges through computer vision and artificial intelligence, and benefiting from the results, requires all of us. NVIDIA is excited to partner with and dedicate this post to Black Women in Artificial Intelligence.

Computer vision’s real world use and reach is growing and its applications in turn are challenging and changing its meaning. Computer vision, which has been in some form of its present existence for decades, is becoming an increasingly common phrase littered in conversation, across the world and across industries: computer vision systems, computer vision software, computer vision hardware, computer vision development, computer vision pipelines, computer vision technology.

What is computer vision?

There is more to the term and field of computer vision than meets the eye, both literally and figuratively. Computer vision is also referred to as vision AI and traditional image processing in specific non-AI instances, and machine vision in manufacturing and industrial use cases.

Simply put, computer vision enables devices, including laptops, smartphones, self-driving cars, robots, drones, satellites, and x-ray machines to perceive, process, analyze, and interpret data in digital images and video.

In other words, computer vision fundamentally intakes image data or image datasets as inputs, including both still images and moving frames of a video, either recorded or from a live camera feed. Computer vision enables devices to have and use human-like vision capabilities just like our human vision system. In human vision, your eyes perceive the physical world around you as different reflections of light in real-time.

Similarly, computer vision devices perceive pixels of images and videos, detecting patterns and interpreting image inputs that can be used for further analysis or decision making. In this sense, computer vision “sees” just like human vision and uses intelligence and compute power to process input visual data to output meaningful insights, like a robot detecting and avoiding an obstacle in its path.

Different computer vision tasks mimic the human vision system, performing, automating, and enhancing functions similar to the human vision system.

How does computer vision relate to other forms of AI?

Computer vision is helping to teach and master seeing, just like conversational AI is helping teach and master the sense of sound through speech, in applications of recognizing, translating, and verbalizing text: the words we use to define and describe the physical world around us.

Similarly, computer vision helps teach and master the sense of sight through digital image and video. More broadly, the term computer vision can also be used to describe how device sensors, typically cameras, perceive and work as vision systems in applications of detecting, tracking and recognizing objects or patterns in images.

Multimodal conversational AI combines the capabilities of conversational AI with computer vision in multimedia conferencing applications, such as NVIDIA Maxine.

Computer vision can also be used broadly to describe how other types of sensors like light detection and ranging (LiDAR) and radio detection and ranging (RADAR) perceive the physical world. In self-driving cars, computer vision is used to describe how LiDAR and RADAR sensors work, often together and in tandem with cameras, to recognize and classify people, objects, and debris.

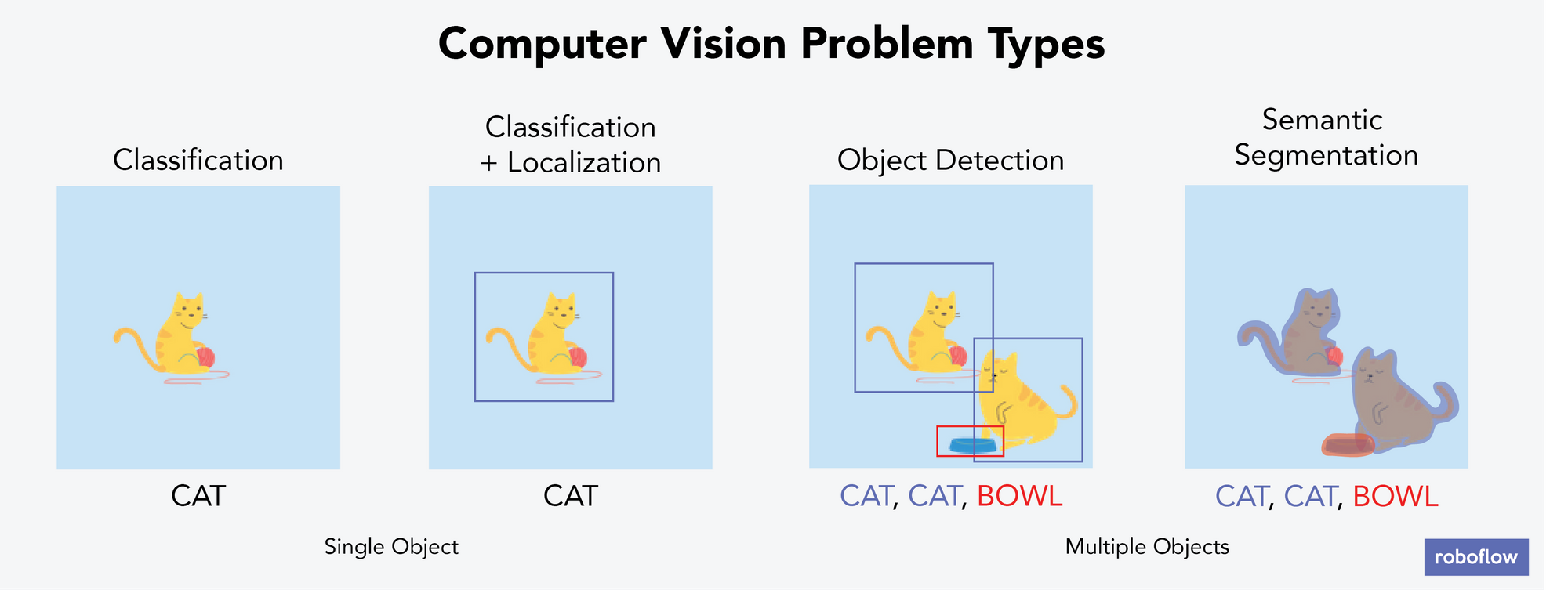

What are some common tasks?

While computer vision tasks cover a wide breadth of perception capabilities and the list continues to grow, the latest techniques support and help solve use cases involving detection, classification, segmentation, and image synthesis.

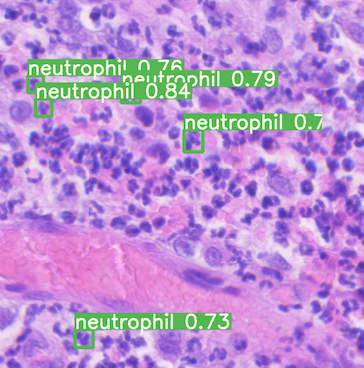

Detection tasks locate, and sometimes track, where an object exists in an image. For example, in healthcare for digital pathology, detection could involve identifying cancer cells through medical imaging. In robotics, software developers are using object detection to avoid obstacles on the factory floor.

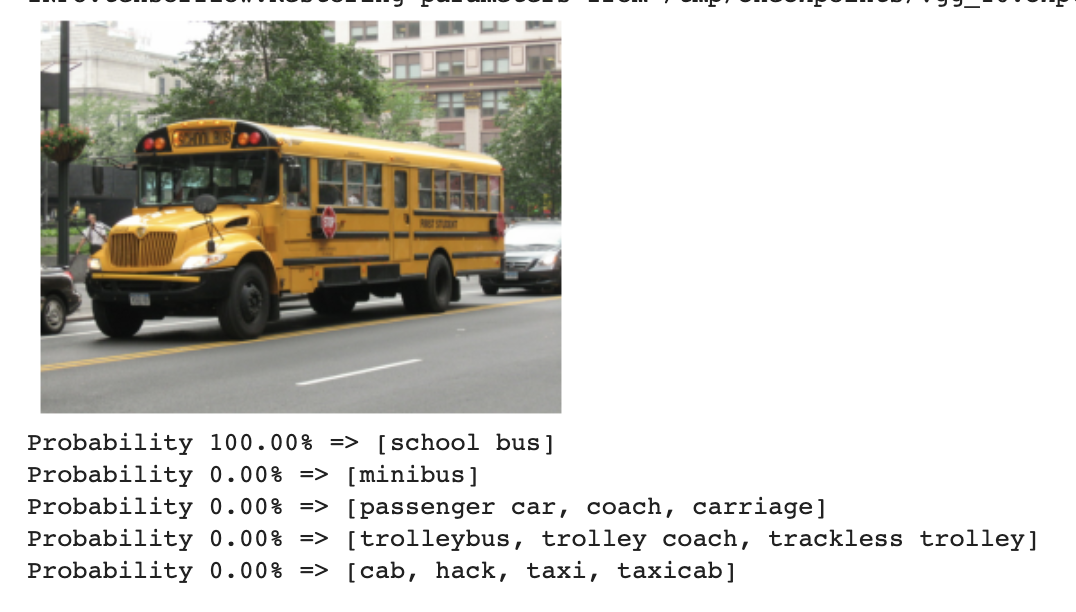

Classification techniques determine what object exists within the visual data. For example, in manufacturing, an object recognition system classifies different types of bottles to package. In agriculture, farmers are using classification to identify weeds among their crops.

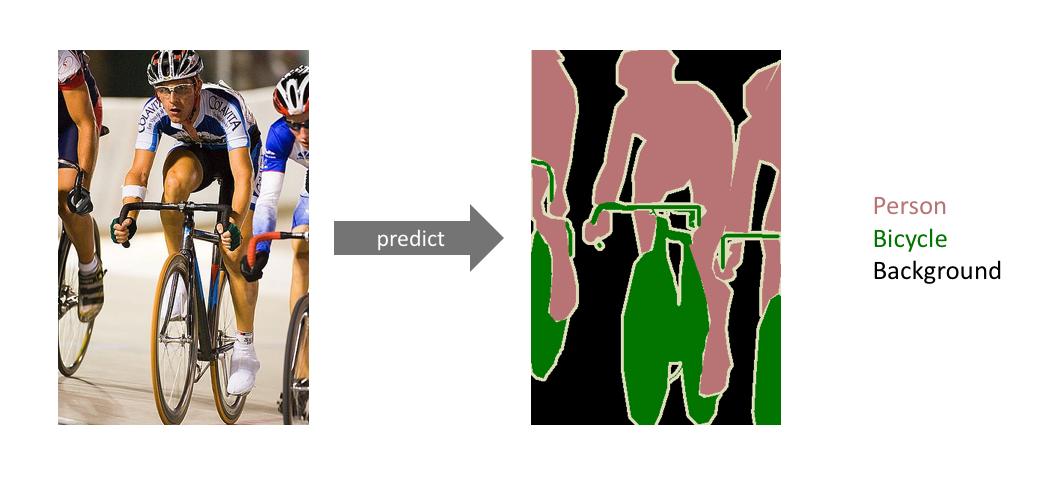

Segmentation tasks classify pixels belonging to a certain category, either pixel by pixel without separating objects (semantic image segmentation) or by also distinguishing multiple objects of the same class as individual instances (instance image segmentation). For example, a self-driving car segments parts of a road scene as drivable and non-drivable space.
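To make the difference between the two kinds of segmentation concrete, here is a minimal sketch in plain Python. It assumes a tiny hand-written binary mask (1 means the pixel belongs to the class) and separates instances with a simple connected-components pass. The function name `label_instances` and the data are hypothetical, and real instance segmentation models predict instances directly rather than relying on this shortcut, which only works when objects do not touch.

```python
from collections import deque

def label_instances(mask):
    """Give each 4-connected region of 1s its own instance id (1, 2, ...)."""
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    next_id = 0
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] != 1 or labels[sy][sx] != 0:
                continue  # background, or already assigned to an instance
            next_id += 1
            labels[sy][sx] = next_id
            queue = deque([(sy, sx)])
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny][nx] == 1 and labels[ny][nx] == 0):
                        labels[ny][nx] = next_id
                        queue.append((ny, nx))
    return labels, next_id

# Semantic view: every object pixel carries the same class label (1).
semantic_mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

# Instance view: the two separate blobs receive different ids.
instance_map, count = label_instances(semantic_mask)
for row in instance_map:
    print(row)
print("instances found:", count)
```

The semantic mask only says which pixels belong to the class, while the instance map also says which object each of those pixels belongs to.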

Image synthesis techniques create synthetic data by morphing existing digital images to contain desired content. Generative adversarial networks (GANs), such as EditGAN, enable generating synthetic visual information from text descriptions and existing images of landscapes and people. Using synthetic data to complement and simulate real data is an emerging computer vision use case in logistics using vision AI for applications like smart inventory control.

What are the different types of computer vision?

To understand the different domains within computer vision, it is important to understand the techniques on which computer vision tasks are based. Most computer vision techniques begin with a model, or mathematical algorithm, that performs a specific elementary operation, task, or combination. While we classify traditional image processing and AI-based computer vision algorithms separately, most computer vision systems rely on a combination depending on the use case, complexity, and performance required.

Traditional computer vision

Traditional, non-deep learning-based computer vision can refer to both computer vision and image processing techniques.

In traditional computer vision, a specific set of instructions performs a specific task, like detecting corners or edges in an image to identify windows in an image of a building.

On the other hand, image processing performs a specific manipulation of an image that can be then used for further processing with a vision algorithm. For instance, you may want to smooth or compress an image’s pixels for display or reduce its overall size. This can be likened to bending the light that enters the eye to adjust focus or viewing field. Other examples of image processing include adjusting, converting, rescaling, and warping an input image.
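As a rough illustration of such hard-coded image manipulations, the sketch below smooths and rescales a tiny grayscale image using only plain Python lists. The helper names `box_blur` and `downscale_2x` and the sample data are made up for this example. A production pipeline would normally use an optimized library instead.

```python
def box_blur(image):
    """3x3 mean filter; border pixels average over the neighbors that exist."""
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            neighbors = [
                image[ny][nx]
                for ny in range(max(0, y - 1), min(h, y + 2))
                for nx in range(max(0, x - 1), min(w, x + 2))
            ]
            row.append(sum(neighbors) / len(neighbors))
        out.append(row)
    return out

def downscale_2x(image):
    """Halve width and height by averaging each 2x2 block (assumes even dimensions)."""
    return [
        [
            (image[y][x] + image[y][x + 1]
             + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, len(image[0]), 2)
        ]
        for y in range(0, len(image), 2)
    ]

# Hypothetical 4x4 grayscale image with one bright, noisy pixel.
noisy = [
    [10, 10, 10, 10],
    [10, 90, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]

smoothed = box_blur(noisy)    # the bright pixel is spread over its neighborhood
small = downscale_2x(noisy)   # 4x4 image reduced to 2x2
print(smoothed[1][1])
print(small)
```

Both functions follow fixed rules and involve no training, which is what separates this kind of processing from the AI-based approaches described next.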

AI-based computer vision

AI-based computer vision or vision AI relies on algorithms that have been trained on visual data to accomplish a specific task, as opposed to programmed, hard-coded instructions like that of image processing.

The detection, classification, segmentation, and synthesis tasks mentioned earlier typically are AI-based computer vision algorithms because of the accuracy and robustness that can be achieved. In many instances, AI-based computer vision algorithms can outperform traditional algorithms in terms of these two performance metrics.

AI-based computer vision algorithms mimic the human vision system more closely by learning from and adapting to visual data inputs, making them the computer vision models of choice in most cases. That being said, AI-based computer vision algorithms require large amounts of data, and the quality of that data directly drives the quality of the model’s output. In many cases, though, the performance gain outweighs that cost.

AI-based neural networks teach themselves, depending on the data the algorithm was trained on. AI-based computer vision is like learning from experience and making predictions based on context apart from explicit direction. The learning process is akin to when your eye sees an unfamiliar object and the brain tries to learn what it is and stores it for future predictions.

Machine learning compared to deep learning in AI-based computer vision

Machine learning computer vision is a type of AI-based computer vision. AI-based computer vision based on machine learning has artificial neural networks or layers, similar to that seen in the human brain, to connect and transmit signals about the visual data ingested. In machine learning, computer vision neural networks have separate and distinct layers, explicitly-defined connections between the layers, and predefined directions for visual data transmission.

Deep learning-based computer vision models are a subset of machine learning-based computer vision. The “deep” in deep learning derives its name from the depth or number of the layers in the neural network. Typically, a neural network with three or more layers is considered deep.

AI-based computer vision based on deep learning is trained on volumes of data. It is not uncommon to see hundreds of thousands or even millions of digital images used to train and develop deep neural network models. For more information, see What’s the Difference Between Artificial Intelligence, Machine Learning, and Deep Learning?

Get started developing computer vision

Now that we have covered the fundamentals of computer vision, we encourage you to get started developing computer vision. We recommend that beginners get started with the Vision Programming Interface (VPI) Computer Vision and Image Processing Library for non-AI algorithms or one of the TAO Toolkit fully-operational, ready-to-use, pretrained AI models.

To see how NVIDIA enables the end-to-end computer vision workflow, see the Computer Vision Solutions page. NVIDIA provides models plus computer vision and image-processing tools. We also provide AI-based software application frameworks for training visual data, testing and evaluation of image datasets, deployment and execution, and scaling.

To help enable emerging computer vision developers everywhere, NVIDIA is curating a series of paths to mastery to chart and nurture next-generation leaders. Stay tuned for the upcoming release of the computer vision path to mastery to self-pace your learning journey and showcase your #NVCV progress on social media.

MIT News | Massachusetts Institute of Technology

When computer vision works more like a brain, it sees more like people do

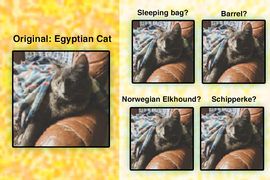

From cameras to self-driving cars, many of today’s technologies depend on artificial intelligence to extract meaning from visual information. Today’s AI technology has artificial neural networks at its core, and most of the time we can trust these AI computer vision systems to see things the way we do — but sometimes they falter. According to MIT and IBM research scientists, one way to improve computer vision is to instruct the artificial neural networks that they rely on to deliberately mimic the way the brain’s biological neural network processes visual images.

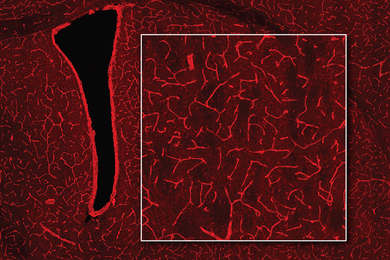

Researchers led by MIT Professor James DiCarlo, the director of MIT’s Quest for Intelligence and member of the MIT-IBM Watson AI Lab, have made a computer vision model more robust by training it to work like a part of the brain that humans and other primates rely on for object recognition. This May, at the International Conference on Learning Representations, the team reported that when they trained an artificial neural network using neural activity patterns in the brain’s inferior temporal (IT) cortex, the artificial neural network was more robustly able to identify objects in images than a model that lacked that neural training. And the model’s interpretations of images more closely matched what humans saw, even when images included minor distortions that made the task more difficult.

Comparing neural circuits

Many of the artificial neural networks used for computer vision already resemble the multilayered brain circuits that process visual information in humans and other primates. Like the brain, they use neuron-like units that work together to process information. As they are trained for a particular task, these layered components collectively and progressively process the visual information to complete the task — determining, for example, that an image depicts a bear or a car or a tree.

DiCarlo and others previously found that when such deep-learning computer vision systems establish efficient ways to solve visual problems, they end up with artificial circuits that work similarly to the neural circuits that process visual information in our own brains. That is, they turn out to be surprisingly good scientific models of the neural mechanisms underlying primate and human vision.

That resemblance is helping neuroscientists deepen their understanding of the brain. By demonstrating ways visual information can be processed to make sense of images, computational models suggest hypotheses about how the brain might accomplish the same task. As developers continue to refine computer vision models, neuroscientists have found new ideas to explore in their own work.

“As vision systems get better at performing in the real world, some of them turn out to be more human-like in their internal processing. That’s useful from an understanding-biology point of view,” says DiCarlo, who is also a professor of brain and cognitive sciences and an investigator at the McGovern Institute for Brain Research.

Engineering a more brain-like AI

While their potential is promising, computer vision systems are not yet perfect models of human vision. DiCarlo suspected one way to improve computer vision may be to incorporate specific brain-like features into these models.

To test this idea, he and his collaborators built a computer vision model using neural data previously collected from vision-processing neurons in the monkey IT cortex — a key part of the primate ventral visual pathway involved in the recognition of objects — while the animals viewed various images. More specifically, Joel Dapello, a Harvard University graduate student and former MIT-IBM Watson AI Lab intern; and Kohitij Kar, assistant professor and Canada Research Chair (Visual Neuroscience) at York University and visiting scientist at MIT; in collaboration with David Cox, IBM Research’s vice president for AI models and IBM director of the MIT-IBM Watson AI Lab; and other researchers at IBM Research and MIT asked an artificial neural network to emulate the behavior of these primate vision-processing neurons while the network learned to identify objects in a standard computer vision task.

“In effect, we said to the network, ‘please solve this standard computer vision task, but please also make the function of one of your inside simulated “neural” layers be as similar as possible to the function of the corresponding biological neural layer,’” DiCarlo explains. “We asked it to do both of those things as best it could.” This forced the artificial neural circuits to find a different way to process visual information than the standard, computer vision approach, he says.
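One way to picture "doing both things at once" is a training objective with two terms: one for the vision task and one that penalizes mismatch between a model layer and recorded neural responses. The sketch below is only an illustration under stated assumptions. The article does not describe the team's actual loss, so the mean-squared penalty, the `weight` parameter, and all function names here are hypothetical stand-ins.

```python
import math

def cross_entropy(class_probs, true_class):
    """Standard classification loss for one image."""
    return -math.log(class_probs[true_class])

def alignment_penalty(model_layer, recorded_neurons):
    """Mean squared difference between a model layer's activations and
    recorded neural responses to the same image (assumed similarity measure)."""
    return sum(
        (m - r) ** 2 for m, r in zip(model_layer, recorded_neurons)
    ) / len(model_layer)

def combined_loss(class_probs, true_class, model_layer, recorded_neurons, weight=1.0):
    """Task loss plus a weighted neural-alignment term (hypothetical form)."""
    return (cross_entropy(class_probs, true_class)
            + weight * alignment_penalty(model_layer, recorded_neurons))

# Toy numbers: a two-class prediction and a two-unit "IT-like" layer.
loss = combined_loss(
    class_probs=[0.5, 0.5],
    true_class=0,
    model_layer=[1.0, 2.0],
    recorded_neurons=[1.0, 4.0],
)
print(loss)
```

Minimizing such a sum pushes the network toward solutions that classify well while keeping the chosen layer close to the biological data, which matches the trade-off DiCarlo describes.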

After training the artificial model with biological data, DiCarlo’s team compared its activity to a similarly-sized neural network model trained without neural data, using the standard approach for computer vision. They found that the new, biologically informed model IT layer was — as instructed — a better match for IT neural data. That is, for every image tested, the population of artificial IT neurons in the model responded more similarly to the corresponding population of biological IT neurons.

The researchers also found that the model IT was also a better match to IT neural data collected from another monkey, even though the model had never seen data from that animal, and even when that comparison was evaluated on that monkey’s IT responses to new images. This indicated that the team’s new, “neurally aligned” computer model may be an improved model of the neurobiological function of the primate IT cortex — an interesting finding, given that it was previously unknown whether the amount of neural data that can be currently collected from the primate visual system is capable of directly guiding model development.

With their new computer model in hand, the team asked whether the “IT neural alignment” procedure also leads to any changes in the overall behavioral performance of the model. Indeed, they found that the neurally-aligned model was more human-like in its behavior — it tended to succeed in correctly categorizing objects in images for which humans also succeed, and it tended to fail when humans also fail.

Adversarial attacks

The team also found that the neurally aligned model was more resistant to “adversarial attacks” that developers use to test computer vision and AI systems. In computer vision, adversarial attacks introduce small distortions into images that are meant to mislead an artificial neural network.

“Say that you have an image that the model identifies as a cat. Because you have the knowledge of the internal workings of the model, you can then design very small changes in the image so that the model suddenly thinks it’s no longer a cat,” DiCarlo explains.
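The idea in the quote can be sketched with a toy model. The example below assumes a tiny linear "cat detector" whose weights are known to the attacker, and nudges every pixel by at most `epsilon` in the direction that lowers the cat score, in the spirit of the fast gradient sign method. All names and numbers are hypothetical, and this is not the specific attack used in the study.

```python
import math

def cat_probability(weights, bias, pixels):
    """Logistic model: probability that the image shows a cat."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-score))

def sign(value):
    return (value > 0) - (value < 0)

def perturb_against_cat(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon against the gradient of the score.
    For a linear score, the gradient with respect to a pixel is its weight."""
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]

# Hypothetical 4-pixel image and model parameters.
weights = [2.0, -3.0, 1.5, -2.5]
bias = 0.0
image = [0.6, 0.3, 0.5, 0.2]

attacked = perturb_against_cat(weights, image, epsilon=0.1)
before = cat_probability(weights, bias, image)
after = cat_probability(weights, bias, attacked)
print(f"before: {before:.3f}, after: {after:.3f}")
```

No pixel changes by more than 0.1, yet the prediction moves from above 0.5 to below it, because every small change is aligned with what the model is most sensitive to.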

These minor distortions don’t typically fool humans, but computer vision models struggle with these alterations. A person who looks at the subtly distorted cat still reliably and robustly reports that it’s a cat. But standard computer vision models are more likely to mistake the cat for a dog, or even a tree.

“There must be some internal differences in the way our brains process images that lead to our vision being more resistant to those kinds of attacks,” DiCarlo says. And indeed, the team found that when they made their model more neurally aligned, it became more robust, correctly identifying more images in the face of adversarial attacks. The model could still be fooled by stronger “attacks,” but so can people, DiCarlo says. His team is now exploring the limits of adversarial robustness in humans.

A few years ago, DiCarlo’s team found they could also improve a model’s resistance to adversarial attacks by designing the first layer of the artificial network to emulate the early visual processing layer in the brain. One key next step is to combine such approaches — making new models that are simultaneously neurally aligned at multiple visual processing layers.

The new work is further evidence that an exchange of ideas between neuroscience and computer science can drive progress in both fields. “Everybody gets something out of the exciting virtuous cycle between natural/biological intelligence and artificial intelligence,” DiCarlo says. “In this case, computer vision and AI researchers get new ways to achieve robustness, and neuroscientists and cognitive scientists get more accurate mechanistic models of human vision.”

This work was supported by the MIT-IBM Watson AI Lab, Semiconductor Research Corporation, the U.S. Defense Advanced Research Projects Agency, the MIT Shoemaker Fellowship, the U.S. Office of Naval Research, the Simons Foundation, and the Canada Research Chair Program.

What Is Computer Vision?

Computer vision is a field of artificial intelligence (AI) that applies machine learning to images and videos to understand media and make decisions about them. With computer vision, we can, in a sense, give vision to software and technology.

How Does Computer Vision Work?

Computer vision programs use a combination of techniques to process raw images and turn them into usable data and insights.

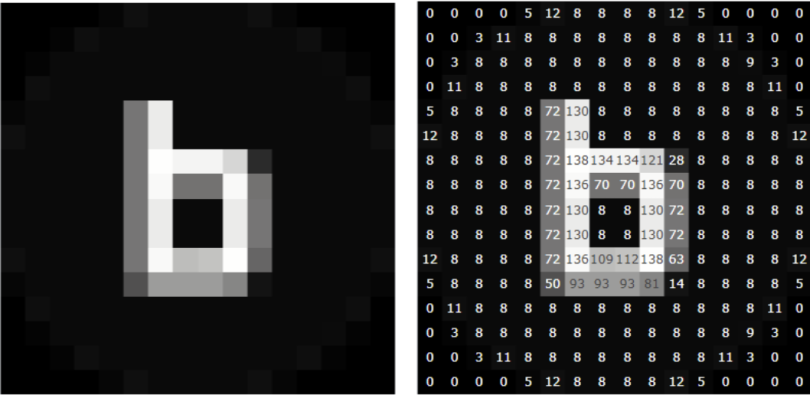

The basis for much computer vision work is 2D images, as shown below. While images may seem like a complex input, we can decompose them into raw numbers. Images are really just a combination of individual pixels, and each pixel can be represented by a single number (grayscale) or a combination of numbers, such as (255, 0, 0) in RGB.
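Here is a small sketch of what "an image is just numbers" looks like in code. It assumes a made-up 2x2 image stored as nested Python lists and converts it to grayscale with the commonly used ITU-R BT.601 luma weights, which are an assumption of this example (other weightings exist).

```python
# A 2x2 RGB image: rows of pixels, each pixel an (R, G, B) tuple in 0..255.
image = [
    [(255, 0, 0), (0, 255, 0)],      # red, green
    [(0, 0, 255), (255, 255, 255)],  # blue, white
]

def to_grayscale(rgb_image):
    """Collapse each RGB pixel to one intensity value."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]

gray = to_grayscale(image)
print(gray)
```

Each color pixel is three numbers, and each grayscale pixel is one, so the whole image is a grid of numbers that an algorithm can process.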

Once we’ve translated an image to a set of numbers, a computer vision algorithm processes them. One classic way to do this is with a convolutional neural network (CNN), which uses layers to group pixels together and create successively more meaningful representations of the data. A CNN may first translate pixels into lines, which are then combined to form features such as eyes and finally combined to create more complex items such as face shapes.
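To give a feel for how one layer turns pixels into "lines", the sketch below applies a single small filter to a toy grayscale patch. The filter values are hand-picked for illustration, whereas a real CNN learns them during training, and the function name `conv2d` is just a label for this example.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers
    conventionally call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hypothetical 4x4 grayscale patch: dark left half, bright right half.
patch = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked filter that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(patch, vertical_edge)
for row in feature_map:
    print(row)
```

The output is large only in the column where the vertical edge sits. Stacking many such filters in successive layers is how a CNN can build up from edges to more complex shapes.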

Why Is Computer Vision Important?

Computer vision has been around since as early as the 1950s and continues to be a popular field of research with many applications. According to the deep learning research group BitRefine, we should expect the computer vision industry to grow to nearly 50 billion USD in 2022, with 75 percent of the revenue deriving from hardware.

The importance of computer vision comes from the increasing need for computers to be able to understand the human environment. To understand the environment, it helps if computers can see what we do, which means mimicking the sense of human vision. This is especially important as we develop more complex AI systems that are more human-like in their abilities.

Computer Vision Examples

Computer vision is often used in everyday life and its applications range from simple to very complex.

Optical character recognition (OCR) is one of the most widespread applications of computer vision. The most well-known case of this today is Google Translate, which can take an image of anything, from menus to signboards, and convert it into text that the program then translates into the user’s native language. We can also apply OCR in other use cases such as automated tolling of cars on highways and translating handwritten documents into digital counterparts.

A more recent application, which is still under development and will play a big role in the future of transportation, is object recognition. In object recognition, an algorithm takes an input image and searches for a set of objects within the image, drawing boundaries around each object and labelling it. This application is critical in self-driving cars, which need to quickly identify their surroundings in order to decide on the best course of action.

Computer Vision Applications

- Facial recognition

- Self-driving cars

- Robotic automation

- Medical anomaly detection

- Sports performance analysis

- Manufacturing fault detection

- Agricultural monitoring

- Plant species classification

- Text parsing

What Are the Risks of Computer Vision?

As with all technology, computer vision is a tool, which means that it can have benefits, but also risks. Computer vision has many applications in everyday life that make it a useful part of modern society but recent concerns have been raised around privacy. The issue that we see most often in the media is around facial recognition. Facial recognition technology uses computer vision to identify specific people in photos and videos. In its lightest form it’s used by companies such as Meta or Google to suggest people to tag in photos, but it can also be used by law enforcement agencies to track suspicious individuals. Some people feel facial recognition violates privacy, especially when private companies may use it to track customers to learn their movements and buying patterns.

Built In’s expert contributor network publishes thoughtful, solutions-oriented stories written by innovative tech professionals. It is the tech industry’s definitive destination for sharing compelling, first-person accounts of problem-solving on the road to innovation.

PyImageSearch

Announcing “Case Studies: Solving real world problems with computer vision”

by Adrian Rosebrock on June 26, 2014

I have some big news to announce today…

Besides writing a ton of blog posts about computer vision, image processing, and image search engines, I’ve been behind the scenes, working on a second book.

And you may be thinking, hey, didn’t you just finish up Practical Python and OpenCV?

Yep. I did.

Now, don’t get me wrong. The feedback for Practical Python and OpenCV has been amazing. And it’s done exactly what I thought it would: teach developers, programmers, and students just like you the basics of computer vision in a single weekend.

But now that you know the fundamentals of computer vision and have a solid starting point, it’s time to move on to something more interesting…

Let’s take your knowledge of computer vision and solve some actual, real world problems.

What type of problems?

I’m happy you asked. Read on and I’ll show you.

What does this book cover?

This book covers five main topics related to computer vision in the real world. Check out each one below, along with a screenshot of each.

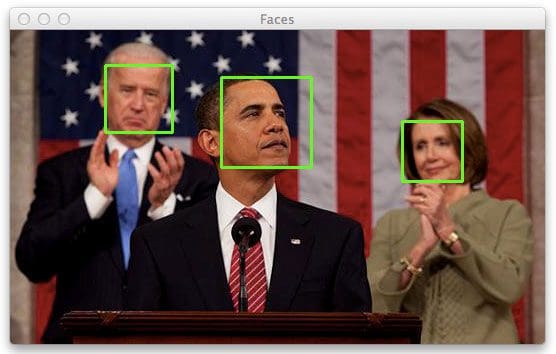

#1. Face detection in photos and video

By far, the most requested tutorial of all time on this blog has been “How do I find faces in images?” If you’re interested in face detection and finding faces in images and video, then this book is for you.

#2. Object tracking in video

Another common question I get asked is “How can I track objects in video?” In this chapter, I discuss how you can use the color of an object to track its trajectory as it moves in the video.
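To give a flavor of color-based tracking, here is a minimal sketch that is not taken from the book. It assumes frames are small grids of RGB tuples, keeps the pixels whose color falls inside a chosen range, and reports their centroid in each frame. The function name `track_by_color`, the color bounds, and the frames are all hypothetical. With OpenCV you would work on real video frames instead of nested lists.

```python
def track_by_color(frames, lower, upper):
    """Return the (row, col) centroid of in-range pixels for each frame,
    or None when no pixel matches."""
    trajectory = []
    for frame in frames:
        hits = [
            (y, x)
            for y, row in enumerate(frame)
            for x, pixel in enumerate(row)
            if all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper))
        ]
        if hits:
            cy = sum(y for y, _ in hits) / len(hits)
            cx = sum(x for _, x in hits) / len(hits)
            trajectory.append((cy, cx))
        else:
            trajectory.append(None)
    return trajectory

BG, BALL = (0, 0, 0), (20, 30, 220)  # black background, blue object (RGB)

# Two tiny 2x3 frames in which the blue object moves down and to the right.
frames = [
    [[BALL, BG, BG], [BG, BG, BG]],
    [[BG, BG, BG], [BG, BALL, BALL]],
]

path = track_by_color(frames, lower=(0, 0, 150), upper=(80, 80, 255))
print(path)
```

The list of centroids is the object's trajectory. Choosing the color range well is the hard part in practice, since lighting changes shift the observed colors.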

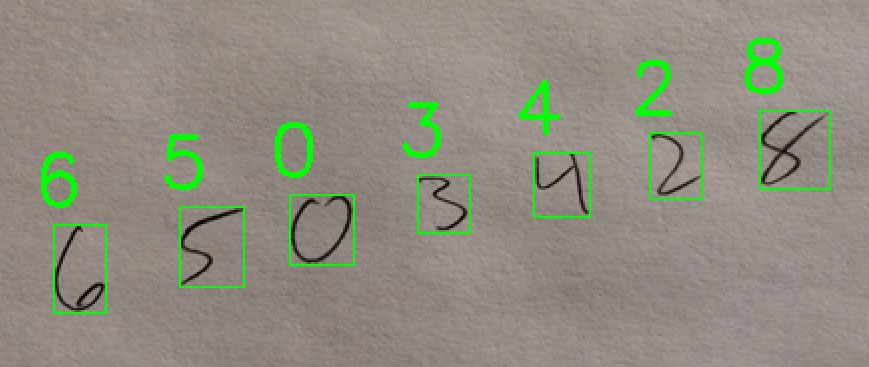

#3. Handwriting recognition with Histogram of Oriented Gradients (HOG)

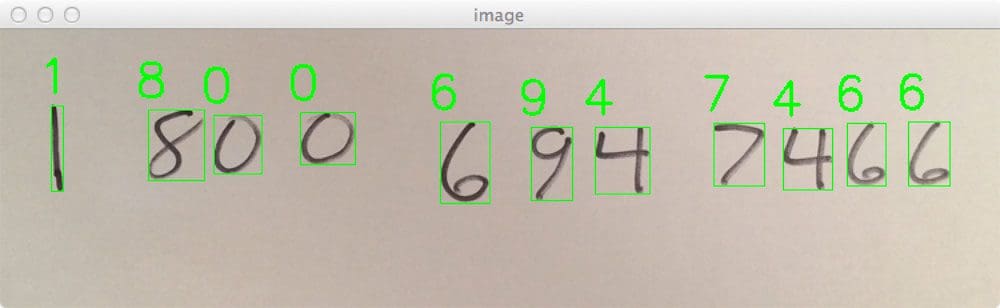

This is probably my favorite chapter in the entire Case Studies book, simply because it is so practical and useful.

Imagine you’re at a bar or pub with a group of friends, when all of a sudden a beautiful stranger comes up to you and hands you their phone number written on a napkin.

Do you stuff the napkin in your pocket, hoping you don’t lose it? Do you take out your phone and manually create a new contact?

Well you could. Or. You could take a picture of the phone number and have it automatically recognized and stored safely.

In this chapter of my Case Studies book, you’ll learn how to use the Histogram of Oriented Gradients (HOG) descriptor and Linear Support Vector Machines to classify digits in an image.
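For intuition about what a HOG descriptor measures, the sketch below computes a magnitude-weighted histogram of gradient orientations for a single small cell. It is a simplified piece of HOG written for this post, not the book's code: it skips block normalization and bin interpolation, and the function name and toy cells are hypothetical.

```python
import math

def orientation_histogram(cell, bins=9):
    """Histogram of unsigned gradient orientations (0 to 180 degrees) over the
    interior pixels of one cell, weighted by gradient magnitude."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, len(cell) - 1):
        for x in range(1, len(cell[0]) - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]   # horizontal change
            gy = cell[y + 1][x] - cell[y - 1][x]   # vertical change
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[min(int(angle // bin_width), bins - 1)] += magnitude
    return hist

# A vertical stroke edge (as in the digit 1) and a horizontal stroke edge.
vertical_edge = [[0, 0, 10, 10] for _ in range(4)]
horizontal_edge = [[0] * 4, [0] * 4, [10] * 4, [10] * 4]

v_hist = orientation_histogram(vertical_edge)
h_hist = orientation_histogram(horizontal_edge)
print(v_hist)
print(h_hist)
```

The two strokes land in different orientation bins, which is what lets a classifier such as a linear SVM tell differently shaped digits apart once histograms from many cells are concatenated into one feature vector.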

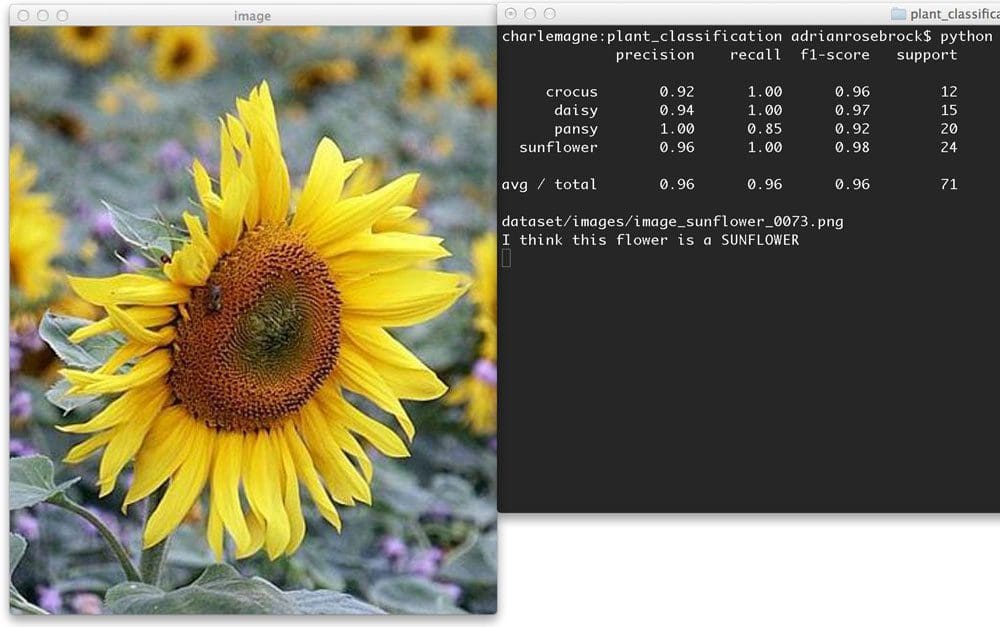

#4. Plant classification using color histograms and machine learning

A common use of computer vision is to classify the contents of an image. In order to do this, you need to utilize machine learning. This chapter explores how to extract color histograms using OpenCV and then train a Random Forest Classifier using scikit-learn to classify the species of a flower.
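Here is a rough idea of what a color histogram feature looks like, written in plain Python rather than OpenCV so it runs anywhere. It assumes pixels are RGB tuples and that `bins_per_channel` is a power of two no larger than 256. The function name and the sample pixels are made up for illustration.

```python
def color_histogram(pixels, bins_per_channel=2):
    """Flattened, normalized 3D RGB histogram.
    Assumes bins_per_channel is a power of two no larger than 256."""
    bin_width = 256 // bins_per_channel
    hist = [0] * bins_per_channel ** 3
    for r, g, b in pixels:
        index = ((r // bin_width) * bins_per_channel
                 + (g // bin_width)) * bins_per_channel + (b // bin_width)
        hist[index] += 1
    total = len(pixels)
    return [count / total for count in hist]

# Four hypothetical flower pixels: two reddish, one green, one blue.
petal_pixels = [(255, 0, 0), (250, 10, 5), (0, 255, 0), (0, 0, 255)]

features = color_histogram(petal_pixels)
print(features)
```

A vector like `features`, computed per image, is the kind of input you could then hand to a classifier such as a Random Forest, which learns which color distributions go with which species.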

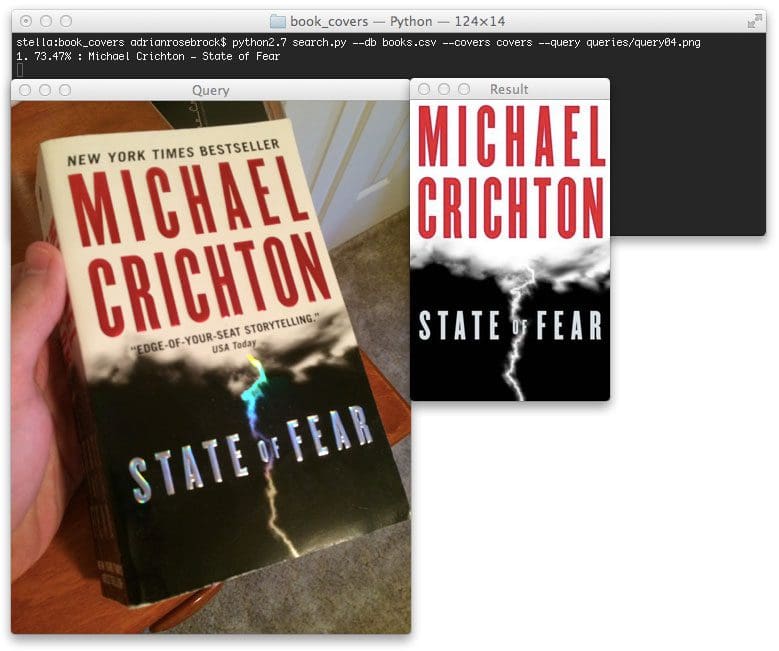

#5. Building an Amazon.com book cover search

Three weeks ago, I went out to have a few beers with my friend Gregory, a hot shot entrepreneur in San Francisco who has been developing a piece of software to instantly recognize and identify book covers — using only an image. Using this piece of software, users could snap a photo of books they were interested in, and then have them automatically added to their cart and shipped to their doorstep — at a substantially cheaper price than your standard Barnes & Noble!

Anyway, I guess Gregory had one too many beers, because guess what?

He clued me in on his secrets.

Gregory begged me not to tell…but I couldn’t resist.

In this chapter you’ll learn how to utilize keypoint extraction and SIFT descriptors to perform keypoint matching.

The end result is a system that can recognize and identify the cover of a book in a snap…of your smartphone!
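The matching step can be illustrated without any real images. The sketch below assumes descriptors are already extracted as 128-dimensional vectors (the size SIFT produces) and applies a brute-force nearest-neighbor search with a ratio test; the random "descriptors", the 0.75 threshold, and the name `match_descriptors` are assumptions for illustration, not Gregory's system or the chapter's code.

```python
import numpy as np

def match_descriptors(query, train, ratio=0.75):
    """Return (query_index, train_index) pairs that pass the ratio test."""
    # Pairwise Euclidean distances, shape (len(query), len(train)).
    dists = np.linalg.norm(query[:, None, :] - train[None, :, :], axis=2)
    matches = []
    for qi, row in enumerate(dists):
        order = np.argsort(row)
        best, second = order[0], order[1]
        # Keep the match only if it is clearly better than the runner-up.
        if row[best] < ratio * row[second]:
            matches.append((qi, int(best)))
    return matches

rng = np.random.default_rng(0)
cover = rng.random((20, 128))                        # stand-in for a stored cover's descriptors
photo = cover[[3, 7, 11]] + 0.01 * rng.standard_normal((3, 128))  # noisy re-observations
stranger = rng.random((1, 128))                      # descriptor from an unrelated image

print(match_descriptors(photo, cover))
print(match_descriptors(stranger, cover))
```

The noisy re-observations match their original keypoints, while the unrelated descriptor is rejected because its best and second-best distances are nearly equal. Counting surviving matches per stored cover is one simple way to pick the most likely book.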

All of these examples are covered in detail, from front to back, with lots of code.

By the time you finish reading my Case Studies book, you’ll be a pro at solving real world computer vision problems.

So who is this book for?

This book is for people like yourself who have a solid foundation of computer vision and image processing. Ideally, you have already read through Practical Python and OpenCV and have a strong grasp on the basics (if you haven’t had a chance to read Practical Python and OpenCV, definitely pick up a copy).

I consider my new Case Studies book to be the next logical step in your journey to learn computer vision.

You see, this book focuses on taking the fundamentals of computer vision and then applying them to solve actual real-world problems.

So if you’re interested in applying computer vision to solve real world problems, you’ll definitely want to pick up a copy.

Reserve your spot in line to receive early access

If you sign up for my newsletter, I’ll be sending out previews of each chapter so you can see firsthand how you can use computer vision techniques to solve real world problems.

But if you simply can’t wait and want to lock in your spot in line to receive early access to my new Case Studies eBook, just click here.

Sound good?

Sign up now to receive an exclusive pre-release deal when the book launches.

About the Author

Hi there, I’m Adrian Rosebrock, PhD. All too often I see developers, students, and researchers wasting their time, studying the wrong things, and generally struggling to get started with Computer Vision, Deep Learning, and OpenCV. I created this website to show you what I believe is the best possible way to get your start.

What Is Computer Vision and How It Works

We perceive and interpret visual information from the world around us automatically. So implementing computer vision might seem like a trivial task. But is it really that easy to artificially model a process that took millions of years to evolve?

Read this post if you want to learn more about what is behind computer vision technology and how ML engineers teach machines to see things.

What is computer vision?

Computer vision is a field of artificial intelligence and machine learning that studies the technologies and tools that allow for training computers to perceive and interpret visual information from the real world.

‘Seeing’ the world is the easy part: for that, you just need a camera. However, simply connecting a camera to a computer is not enough. The challenging part is to classify and interpret the objects in images and videos, the relationship between them, and the context of what is going on. What we want computers to do is to be able to explain what is in an image, video footage, or real-time video stream.

That means that the computer must effectively solve these three tasks:

- Automatically understand what the objects in the image are and where they are located.

- Categorize these objects and understand the relationships between them.

- Understand the context of the scene.

In other words, a general goal of this field is to ensure that a machine understands an image as well as or better than a human. As you will see later on, this is quite challenging.

How does computer vision work?

In order to make the machine recognize visual objects, it must be trained on hundreds of thousands of examples. For example, suppose you want someone to be able to distinguish between cars and bicycles. How would you describe this task to a human?

Normally, you would say that a bicycle has two wheels, and a car has four. Or that a bicycle has pedals, and a car doesn’t. In machine learning, this is called feature engineering.

However, as you might already notice, this method is far from perfect. Some bicycles have three or four wheels, and some cars have only two. Also, motorcycles and mopeds exist that can be mistaken for bicycles. How will the algorithm classify those?
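A few lines of code make the weakness of hand-written rules visible. The sketch below encodes the two rules from the text (wheel count and pedals); the function name, the feature tuples, and the example vehicles are invented for illustration.

```python
def classify_vehicle(wheels, has_pedals):
    """Hand-engineered rules: pedals or two wheels means bicycle, four wheels means car."""
    if has_pedals or wheels == 2:
        return "bicycle"
    if wheels == 4:
        return "car"
    return "unknown"

# (number of wheels, has pedals) for a handful of vehicles.
examples = {
    "city bicycle": (2, True),
    "sedan": (4, False),
    "motorcycle": (2, False),        # two wheels, no pedals
    "three-wheeled car": (3, False),  # fits neither rule
}

predictions = {name: classify_vehicle(*features) for name, features in examples.items()}
for name, label in predictions.items():
    print(f"{name}: {label}")
```

The rules handle the textbook cases but label the motorcycle a bicycle and give up on the three-wheeled car. Every patch for such a case adds another rule, and the rule set grows faster than its accuracy.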

When you are building more and more complicated systems (for example, facial recognition software), cases of misclassification become more frequent. Simply stating the eye or hair color of every person won’t do: the ML engineer would have to conduct hundreds of measurements, such as the distance between the eyes or between the eyes and the corners of the mouth, to be able to describe a person’s face.

Moreover, the accuracy of such a model would leave much to be desired: change the lighting, facial expression, or angle and you have to start the measurements all over again.

Here are several common obstacles to solving computer vision problems.

- Different lighting

For computer vision, it is very important to collect training data that shows objects under different kinds of lighting. A filter might make a ball look blue or yellow while in fact it is still white. A red object under a red lamp becomes almost invisible.

- Noise

If the image has a lot of noise, it is hard for computer vision to recognize objects. Noise in computer vision is when individual pixels in the image appear brighter or darker than they should be. For example, video cameras that detect violations on the road are much less effective when it is raining or snowing outside.

- Unfamiliar angles

It’s important to have pictures of the object from several angles. Otherwise, a computer won’t be able to recognize it if the angle changes.

- Overlapping

When there is more than one object in the image, they can overlap. This way, some characteristics of the objects might remain hidden, which makes it even more difficult for the machine to recognize them.

- Different types of objects

Things that belong to the same category may look totally different. For example, there are many types of lamps, but the algorithm must successfully recognize both a nightstand lamp and a ceiling lamp.

- Fake similarity

Items from different categories can sometimes look similar. For example, you have probably met people who remind you of a celebrity in photos taken from a certain angle but not so much in real life. Cases of misrecognition are common in CV. For example, Samoyed puppies can be easily mistaken for little polar bears in some pictures.
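Of the obstacles listed above, pixel noise is one that simple preprocessing can sometimes reduce before recognition starts. The sketch below is a plain 3x3 median filter written in NumPy; it assumes a grayscale image and isolated "salt and pepper" outliers, and the name `median_filter3` is made up for this example. It will not help with weather, occlusion, or the other obstacles.

```python
import numpy as np

def median_filter3(image):
    """Replace each pixel with the median of its 3x3 neighborhood (edges replicated)."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Stack the nine shifted views that together cover every 3x3 neighborhood.
    windows = np.stack([padded[dy:dy + h, dx:dx + w]
                        for dy in range(3) for dx in range(3)])
    return np.median(windows, axis=0).astype(image.dtype)

clean = np.full((20, 20), 128, dtype=np.uint8)
noisy = clean.copy()
noisy[5, 5] = 255   # a pixel that is brighter than it should be
noisy[12, 7] = 0    # a pixel that is darker than it should be

restored = median_filter3(noisy)
print(np.array_equal(restored, clean))
```

Because each neighborhood contains at most one outlier among nine values, the median ignores it and the flat image is recovered. On real images the same filter also blurs fine detail, so the noise reduction has to be balanced against preserving features.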

It’s almost impossible to think about all of these cases and prevent them via feature engineering. That is why today, computer vision is dominated almost entirely by deep artificial neural networks.

Convolutional neural networks are very efficient at extracting features and allow engineers to save time on manual work. VGG-16 and VGG-19 are among the most prominent CNN architectures. It is true that deep learning demands a lot of examples, but that is not a problem: approximately 657 billion photos are uploaded to the internet each year!
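The basic operation behind that feature extraction is small enough to write out. The sketch below slides a 3x3 kernel over an image and applies a ReLU, as a single convolutional filter would; the kernel weights are set by hand here purely for illustration, whereas a CNN learns them from data, and `conv2d` is a hypothetical helper rather than any library's API.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation, the core operation of a convolutional layer."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for y in range(oh):
        for x in range(ow):
            out[y, x] = np.sum(image[y:y + kh, x:x + kw] * kernel)
    return out

# 6x6 image: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-set vertical-edge kernel; in a trained CNN these nine numbers are learned.
vertical_edge = np.array([[-1.0, 0.0, 1.0]] * 3)

response = np.maximum(conv2d(image, vertical_edge), 0.0)  # ReLU keeps positive responses
print(response)
```

The response is zero over flat regions and positive only where the window straddles the dark-to-bright boundary. Stacking many such learned filters in layers is what lets a network build up from edges to textures to object parts without manual measurements.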

Uses of computer vision

Interpreting digital images and videos comes in handy in many fields. Let us look at some of the use cases:

Medical diagnosis. Image classification and pattern detection are widely used to develop software systems that assist doctors with the diagnosis of dangerous diseases such as lung cancer. A group of researchers has trained an AI system to analyze CT scans of oncology patients. The algorithm showed 95% accuracy, while humans achieved only 65%.

Factory management. It is important to detect manufacturing defects with maximum accuracy, but this is challenging because it often requires monitoring on a micro-scale, for example, when you need to check the threading of hundreds of thousands of screws. A computer vision system uses real-time data from cameras and applies ML algorithms to analyze the data streams. This way it is easy to find low-quality items.

Retail. Amazon was the first company to open a store that runs without any cashiers or cashier machines. Amazon Go is fitted with hundreds of computer vision cameras. These devices track the items customers put in their shopping carts. The cameras can also detect when a customer returns a product to the shelf and remove it from the virtual shopping cart. Customers are charged through the Amazon Go app, eliminating the need to wait in line. The cameras also help prevent shoplifting and out-of-stock shelves.

Security systems. Facial recognition is used in enterprises, schools, factories, and basically anywhere security is important. Schools in the United States apply facial recognition technology to identify sex offenders and other criminals and reduce potential threats. Such software can also recognize weapons to prevent acts of violence in schools. Meanwhile, some airlines use face recognition for passenger identification and check-in, saving time and reducing the cost of checking tickets.

Animal conservation. Ecologists benefit from the use of computer vision to get data about wildlife, including tracking the movements of rare species and their patterns of behavior, without disturbing the animals. CV increases the efficiency and accuracy of image review for scientific discoveries.

Self-driving vehicles. By using sensors and cameras, cars have learned to recognize bumpers, trees, poles, and parked vehicles around them. Computer vision enables them to freely move in the environment without human supervision.

Main problems in computer vision

Computer vision already aids humans across a variety of fields, and there is still plenty of room for development. Here are some areas that have yet to be improved.

- Scene understanding