

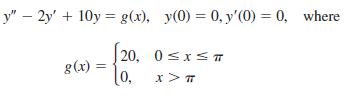


Solve the given initial-value problem in which the input function g(x) is discontinuous. Solve each problem on two intervals, and then find a solution so that y and y' are continuous at x = π/2 (Problem 41) and at x = π (Problem 42).

y'' − 2y' + 10y = g(x), y(0) = 0, y'(0) = 0, where g(x) = 20 for 0 ≤ x ≤ π and g(x) = 0 for x > π.

Step-by-step answer: We can solve this initial-value problem by using the method of Laplace transforms, which is especially useful for solving differential equations with di...
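As a hedged check of the two-interval approach that the problem statement asks for, the sketch below assumes the forcing is g(x) = 20 on 0 ≤ x ≤ π and g(x) = 0 for x > π. Under that assumption, solving on each interval with the characteristic roots 1 ± 3i and matching y and y' at x = π gives the candidate solution coded in `y`. The function names are illustrative, and the residual is checked numerically rather than proved.

```python
import math

def g(x):
    # Assumed forcing: 20 on [0, pi], 0 afterwards.
    return 20.0 if 0.0 <= x <= math.pi else 0.0

# Scale factor fixed by requiring y and y' to be continuous at x = pi.
K = 1.0 + math.exp(-math.pi)

def y(x):
    # Candidate solution obtained by solving on each interval separately.
    osc = math.exp(x) * (-2.0 * math.cos(3 * x) + (2.0 / 3.0) * math.sin(3 * x))
    return 2.0 + osc if x <= math.pi else K * osc

def residual(x, h=1e-4):
    # y'' - 2y' + 10y - g(x), using central finite differences.
    d1 = (y(x + h) - y(x - h)) / (2 * h)
    d2 = (y(x + h) - 2 * y(x) + y(x - h)) / (h * h)
    return d2 - 2 * d1 + 10 * y(x) - g(x)

# Residuals away from the switching point should be close to zero.
for x in (1.0, 2.5, 4.0):
    print(x, residual(x))
```

The residual is only evaluated away from x = π, because the finite-difference stencil would otherwise straddle the two branches.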

A First Course in Differential Equations with Modeling Applications

ISBN: 978-1111827052

10th edition

Authors: Dennis G. Zill



Task-Based Design and Policy Co-Optimization for Tendon-driven Underactuated Kinematic Chains

Underactuated manipulators reduce the number of bulky motors, thereby enabling compact and mechanically robust designs. However, fewer actuators than joints means that the manipulator can only access a specific manifold within the joint space, which is particular to a given hardware configuration and can be low-dimensional and/or discontinuous. Determining an appropriate set of hardware parameters for this class of mechanisms, therefore, is difficult - even for traditional task-based co-optimization methods. In this paper, our goal is to implement a task-based design and policy co-optimization method for underactuated, tendon-driven manipulators. We first formulate a general model for an underactuated, tendon-driven transmission. We then use this model to co-optimize a three-link, two-actuator kinematic chain using reinforcement learning. We demonstrate that our optimized tendon transmission and control policy can be transferred reliably to physical hardware with real-world reaching experiments.

I Introduction

Underactuated manipulators require a carefully designed transmission, often tendon-driven, to take advantage of a reduced number of actuators in the robot. Such designs range from planar serial chains with relatively few links to complex, hyper-redundant continuum robots [HIROSE1978351, matei2010data, matei2013kinetic, rojas2021force, jbk2021frontiers, continuumReview, yi2023simultaneous]. In all of these cases, being able to reduce the number of actuators means that we can build smaller and more lightweight designs, place actuation at more proximal locations in the chain, and take advantage of passive compliance in the un-actuated degrees of freedom.

However, the compromise of such designs is that, with fewer actuators than degrees of freedom, underactuated manipulators can directly access only a certain manifold within the overall state space. This manifold, which contains the set of obtainable states for a particular hardware configuration, can be low-dimensional and/or discontinuous. These limitations affect our ability to plan controllers that smoothly transition between various states to accomplish a desired task. The process of tuning the hardware parameters to ensure that the set of accessible states matches the desired task can be slow and cumbersome, particularly if, as is often the case, changes in the design parameters of the underactuated transmission have a counter-intuitive effect on the overall behavior of the robot.

Co-optimization, or the process of simultaneously searching the space of both hardware and control, is a possible solution to the problem of ensuring that a given hardware design is capable of a desired task. Such methods have been attempted in the context of underactuated kinematic chains, but they are often restricted to simulation [ma2013, Deimel2017AutomatedCO, Barbazza2017], offer limited validation of the simulated controller on real hardware [Xu2021AnED], or are only implemented on single-actuator systems [chen2020hwasp]. The fundamental difficulty of such approaches lies in formulating a co-design problem that a) enables the use of non-trivial controllers or policies, b) can be solved to an acceptable optimum point, c) guarantees that the final result can be physically realized in real hardware, and finally d) ensures that the optimized control policy also transfers to real hardware without substantial loss of performance. This is a difficult set of goals to achieve simultaneously, and, to the best of our knowledge, no current method has done so for underactuated, tendon-driven transmissions with multiple actuators.

In this paper, we start with an underactuated tendon transmission model for general planar kinematic chains, which can capture a diverse set of hardware configurations. We use a forward model that captures the behavior of the system as a function of design parameters and control inputs. We then show that this model enables end-to-end co-optimization of design and control policies for a specified task. To solve the co-optimization problem, we adopt MORPH [He2023MORPH], a recently introduced end-to-end co-optimization framework that uses a proxy model to mimic the effect of hardware and to generate gradients of task performance w.r.t. hardware parameters. This allows us to learn both control and hardware parameters to accomplish desired tasks. We then show that it is possible to build a real robotic manipulator with this optimized transmission, and validate that our optimized control policy can be transferred reliably to real hardware.

An additional feature of our hardware design is that some design parameters can be modified without requiring re-assembly of the manipulator, using the same set of fabricated components. This allows for different levels of flexibility in robot behaviors. If the task requires exploration of diverse behaviors, we can optimize all hardware parameters to explore a large solution space. If the task is simpler, we can optimize only the easily adaptable parameters to avoid reassembly. While in this paper we focus on a single kinematic chain optimized for simple reaching tasks, our directional goal is to enable underactuated, tendon transmission models that can be co-optimized along with their control policies, which can in turn transfer to the real world. Overall, the main contributions of this paper include:

- We formulate a model of underactuated, tendon-driven, passively-compliant planar kinematic chains that enables efficient end-to-end task-based optimization of the design and control parameters.

- We show that the results of the co-optimization process can be transferred to real hardware implementing the optimized design parameters. The resulting robots can then run the optimized control policy, which also achieves sim2real transfer in task performance.

To the best of our knowledge, this is the first time that task-based, policy and design co-optimization methods have been demonstrated for underactuated manipulators with multi-dimensional manifolds.

II Related Work

An underactuated manipulator is a mechanical system composed of links and joints that has fewer actuators than degrees of freedom [Jiang2011]. The transmissions of these manipulators are often tendon-driven, as a single actuated tendon can be routed to control multiple joints. Reducing the number of bulky actuators enables designers to build more compact and lightweight manipulators. Moreover, tendon transmissions allow designers to relocate the motors away from the joints. Relocating the actuators reduces the inertia of the links and makes it easier to make the manipulator robust to water, dust, and other difficult environmental conditions [ozawa2009design]. With so many practical benefits, a myriad of diverse underactuated, tendon-driven manipulators have been proposed over the past several decades.

There is an extensive history of underactuated manipulator design. The first underactuated manipulator, Hirose’s soft gripper introduced in 1978, was designed to softly capture a diverse range of objects with uniform pressure [HIROSE1978351]. Over time, these manipulator designs became much more advanced, and their applications now extend to more robust and intricate grasping behaviors [dollar2009, su2012, Grioli2012, santina2018, chen2021]. Currently, there is extensive research in the design and control of underactuated manipulators for tasks even more dexterous than grasping, such as in-hand manipulation [Udawatta2003, odhner2011, morgan2022]. To realize these more advanced capabilities, the tendon transmission of these manipulators had to be carefully designed, optimized, and further hand-tuned. This process is cumbersome and time-consuming, but necessary. Co-optimization methods are a possible tool to help design these complicated mechanisms. With recent advancements in reinforcement learning, co-optimization is now more powerful than ever.

Recently, researchers have considered reinforcement learning for task-driven design and control co-optimization [jackson2021orchid, Ha2018ReinforcementLF, schaff2019jointly, wang2018neural]. The key to this line of work is deriving policy gradients w.r.t. both the control and design parameters. Chen and He et al. [chen2020hwasp] propose to integrate a differentiable model of the hardware with a control policy to adapt hardware design via policy gradients. A key limitation of this approach is the requirement of differentiable physics simulation. In cases where the forward transition cannot be modeled in a differentiable manner, researchers propose methods to learn design parameters in the input or output space of a policy. For instance, Luck et al. [Luck2019DataefficientCO] propose to learn an expressive latent space to represent the design parameters and condition a policy on a latent design representation. Transform2Act [Yuan2021Transform2ActLA] proposes a transform stage in the policy that estimates actions to modify a robot’s design and a control stage that computes control sequences given a specific design. Our hardware design is not differentiable. Hence, in this work, we apply MORPH [He2023MORPH], a method that co-optimizes design and control in parameter space and does not require differentiable physics.

We formalize our problem as follows. Our goal is to build a kinematic chain (i.e., tentacle/trunk) robot that can achieve flexible behaviors, such as reaching desired parts of the workspace, while maintaining the practical design benefits of underactuation. For this class of mechanisms, the design parameters and controller for a given task are innately coupled. Therefore, we take a task-based hardware optimization approach to search for hardware parameters that yield manifolds such that we can smoothly transition between desired states.

Our method has three main components: 1) an under-actuated, tendon-driven transmission design for compliant kinematic chains; 2) a model that captures the forward dynamics of our designed robot; and 3) an end-to-end co-optimization pipeline that can directly learn the parameters of the proposed design along with a control policy for a given reaching task.

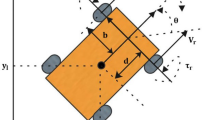

III-A Transmission design

Our design, shown in Figs. 1 and 2, assumes a kinematic chain with $N$ links driven by $M$ motors. All motors are located inside the base and actuate winches that are connected to the joints via tendons. For each motor $i$, we denote the radius of the actuator as $R^{A}_{i}$. We assume that each motor drives a tendon that traverses the entire length of the chain, thereby helping flex every joint. We denote the length of each link $j$ as $L_{j}$. At the corresponding joint $j$, the tendon of each motor $i$ wraps around a pulley. We use ${R_{ij}}^{f}$ to denote the radius of the flexion pulley for this joint and motor pair.

We assume all actuators provide flexion forces, and the transmission uses purely passive extension mechanisms. At each joint, the mechanism features a passive elastic tendon that stretches over a pulley of constant radius (denoted by $R^{e}$) to provide a restoring extension torque. Each elastic tendon can be pretensioned individually; we use ${l_{j}}^{pre}$ to denote the pretensioning elongation of the passive tendon at joint $j$.

This mechanism has a number of desirable characteristics. Underactuation leads to a small number of motors, and placing all motors inside the base frees the links of the kinematic chain from any motors or electronics. However, the movement of the robot is non-trivial to define or control. Critically, robot movement is determined not only by the actuators but also by a number of design parameters. These include all flexion tendon radii, extension tendon radii, and elastic tendon pre-elongations. Furthermore, the ability of a robot to reach specific points in its workspace is clearly also determined by the lengths of the links. Our goal is to devise a procedure capable of optimizing all these design parameters while providing a policy for controlling motor movements in order to achieve a specific task.

In addition, our proposed design makes some of the design parameters easier to change than others. In particular, we mount each elastic tendon on a linear slider mechanism. The position of this slider is set by rotating a lead-screw, thereby controlling the pre-tension elongations of the elastic extension tendons to within 1 mm. This means that some of our design parameters (pulley radii, link lengths) are more difficult to change, as they require a full reassembly, while others (elastic tendon elongations) are easier to change depending on the task. We want to leverage this ability in our co-optimization procedure.
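To make the role of the link lengths concrete, the following minimal sketch computes the end-effector position of a planar serial chain from link lengths and joint angles. It assumes relative joint angles, a base at the origin, and a first link along the +x axis at zero angle; these conventions and the function name are illustrative and are not taken from the paper.

```python
import math

def forward_kinematics(link_lengths, joint_angles):
    """End-effector (x, y) of a planar serial chain with relative joint angles."""
    x = y = 0.0
    heading = 0.0  # absolute orientation of the current link
    for length, angle in zip(link_lengths, joint_angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y

# A straight three-link chain reaches the sum of its link lengths along +x.
print(forward_kinematics([0.1, 0.1, 0.1], [0.0, 0.0, 0.0]))
```

Changing a link length shifts every reachable point for the same joint angles, which is why link lengths belong to the design parameters alongside the pulley radii and pre-tension elongations.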

III-B Forward actuation model for our transmission design

In order to enable a co-optimization routine for our transmission design, we first need a forward actuation model that relates the hardware parameters and the actuator inputs to the resulting state of the manipulator. The input to this model consists of the servo angles of the winches that control the flexion tendons, which we write as a column vector $\theta_{A} \in \mathbb{R}^{M\times 1}$. The next state is defined by the set of joint angles $\theta_{J} \in \mathbb{R}^{N\times 1}$. In other words, the actuation model must predict joint angles $\theta_{J}$ as a function of the actuator commands $\theta_{A}$, as well as all design parameters described in the previous section. We formulate this actuation model by searching for the $\theta_{J}$ that minimizes the total stored energy, denoted by $U$, while still meeting the constraints imposed by the rigid flexion tendons.

We begin our model by first formulating this constraint: since the flexion tendons cannot extend, we know that the tendons will either be in tension or accumulate slack. This slack is the difference between the collective change in length of the tendon due to the motion of the joints and the change in length due to the servo-driven winch. The slack, therefore, is a function of the flexion pulley radii, actuated winch radii, and joint angles.

The flexion radii can be composed into a matrix in $\mathbb{R}^{M\times N}$ as follows:

The radii of the actuated winches can be similarly composed into a diagonal matrix in $\mathbb{R}^{M\times M}$.

With these matrices, we can now define a column vector of the slack collected in the tendons, $w$.
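A form consistent with the definitions above can be written as follows. The matrix label $\mathbf{R^{A}}$ and the sign convention for $\vec{w}$ are assumptions, chosen so that slack is non-negative when joint flexion frees at least as much tendon as the winch reels in.

```latex
\mathbf{R^{F}} =
\begin{bmatrix}
R^{f}_{11} & \cdots & R^{f}_{1N} \\
\vdots     & \ddots & \vdots     \\
R^{f}_{M1} & \cdots & R^{f}_{MN}
\end{bmatrix},
\qquad
\mathbf{R^{A}} = \mathrm{diag}\left(R^{A}_{1}, \dots, R^{A}_{M}\right),
\qquad
\vec{w} = \mathbf{R^{F}}\,\theta_{J} - \mathbf{R^{A}}\,\theta_{A}
```

Row $i$ of $\mathbf{R^{F}}\theta_{J}$ is the tendon length taken up by joint flexion along tendon $i$, and row $i$ of $\mathbf{R^{A}}\theta_{A}$ is the length wound onto winch $i$.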

The slack is important as it limits the set of obtainable states for any given action. We know that the robot will settle at a configuration in this set of states that minimizes the overall stored elastic energy $U$ [Jiang2011]. This stored energy comes from the preloaded elongation $l_{pre}$ of the elastic tendons, but also from the elongation due to joint flexion. The total elongation for any $j$-th link, $l_{j}$, can be written as follows:
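One form consistent with this description, assuming each elastic tendon behaves as a linear spring, is given below. The per-joint stiffness $k_{j}$ is a hypothetical symbol introduced here for illustration, and $\theta_{J,j}$ denotes the $j$-th entry of $\theta_{J}$.

```latex
l_{j} = {l_{j}}^{pre} + R^{e}\,\theta_{J,j},
\qquad
U = \frac{1}{2}\sum_{j=1}^{N} k_{j}\, l_{j}^{2}
```

Under this assumption the energy grows quadratically with joint flexion, so the pre-tension elongation sets how strongly each joint resists being flexed away from its rest position.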

We can now pose the forward actuation model to solve for the next state as $\theta_{J}^{\prime} = f(\theta_{J}, \theta_{A})$, relating the state to the action as a numerical optimization problem: given $\theta_{A}$, $\theta_{J}$,

Since the flexion tendons are inextensible, the change in length of the tendon at the joints must always be at least as large as the change in length of the tendon due to the actuator. The slack, therefore, must always be non-negative, as shown in Eq. 6. Additionally, if the joint angles are non-zero, the tendon must always be in tension, and therefore at least one element in $\vec{w}$ must be zero. This constraint is shown in Eq. 7. We enforce these constraints while minimizing the total stored energy given in Eq. 5.
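The constrained minimization described above can be sketched in a few lines. This is not the authors' solver: it assumes identical linear springs and a single extension radius `R_e`, so that the energy is proportional to the squared distance from the rest angles `-l_pre / R_e`, and it assumes hard joint stops at zero angle. Under those assumptions the problem becomes a projection onto a set of half-spaces, solved here with a Hildreth-style cyclic projection. All names and numbers are illustrative.

```python
def forward_actuation(R_F, R_A, theta_A, l_pre, R_e, sweeps=500):
    """Joint angles minimising elastic energy subject to non-negative slack."""
    n = len(l_pre)
    # Half-spaces a . theta >= b: one per flexion tendon, one per joint stop.
    rows = [list(r) for r in R_F]
    rows += [[1.0 if k == j else 0.0 for k in range(n)] for j in range(n)]
    rhs = [ra * ta for ra, ta in zip(R_A, theta_A)] + [0.0] * n
    theta = [-lp / R_e for lp in l_pre]  # unconstrained energy minimum
    lam = [0.0] * len(rows)              # multipliers, kept non-negative
    for _ in range(sweeps):
        for i, (a, b) in enumerate(zip(rows, rhs)):
            gap = b - sum(ak * tk for ak, tk in zip(a, theta))
            step = max(-lam[i], gap / sum(ak * ak for ak in a))
            lam[i] += step
            theta = [tk + step * ak for tk, ak in zip(theta, a)]
    return theta

def slack(R_F, R_A, theta_A, theta_J):
    """Slack per tendon: length freed by the joints minus length wound in."""
    return [sum(r * t for r, t in zip(row, theta_J)) - ra * ta
            for row, ra, ta in zip(R_F, R_A, theta_A)]

# Three joints, two tendons; radii in mm, winch angles in rad.
R_F = [[10.0, 10.0, 10.0], [10.0, 6.0, 3.0]]
R_A = [10.0, 10.0]
theta = forward_actuation(R_F, R_A, [0.6, 0.3], [2.0, 2.0, 2.0], 15.0)
print(theta)                               # approximately [0.2, 0.2, 0.2]
print(slack(R_F, R_A, [0.6, 0.3], theta))  # first tendon taut, second slack
```

In this example only the first tendon is taut at the solution, which illustrates the requirement that at least one slack entry is zero whenever the joints are flexed.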

Actions and hardware parameters do not change the global energy landscape, but instead change the section of the landscape that satisfies our constraints (see Fig. 3). Hence, optimizing the control $\theta_{A}$ and hardware parameters (e.g., $\mathbf{R_{F}}$ and $R_{e}$) amounts to finding appropriate energy manifolds whose minimum-energy states are the ones we want to visit for task completion. There exists some combination of hardware parameters that ensures these manifolds are continuous and have a clear energy minimum. Arriving at this specific set of parameters relies on observing how changes in the parameters affect the most suitable control strategy for solving a given task. Finally, in this work, we focus on optimizing the following set of hardware parameters: $\phi = (\mathbf{R^{F}}, l_{pre}, L)$.

III-C Task-aware co-optimization of design and control

The state transition model $\mathcal{F}$ requires additional considerations. As described in Section III-B, it consists of an optimization-based model $f$ whose behaviors are determined by some of the hardware parameters from $\phi$. However, this model is non-differentiable and, therefore, cannot be used in a standard policy gradient optimization routine.

To co-optimize the hardware design with control, we apply MORPH [He2023MORPH], an end-to-end co-optimization method that uses a neural network proxy model $h^{nn}$ to approximate the forward transition $\mathcal{F}$. The proxy model and a control policy are co-optimized with task performance while asking $h^{nn}$ to be close to $\mathcal{F}$. In this work, instead of mimicking just the forward transition, we consider the effect of hardware design parameters in task space. Hence, we ask the hardware proxy to approximate both the forward transition and forward kinematics: $q_{\phi} = f \circ T_{FK}$. Note that our hardware parameters $\phi$ are encapsulated in different parts of $q$. For example, link lengths only affect forward kinematics, while pulley radii and preloaded tension govern the behaviors of $f$. Hence, the overall optimization objective is:

where $\alpha$ is a constant.

To derive explicit hardware design parameters, every $N$ epochs we also use CMA-ES [hansen2001completely] to search for the $\phi$ that matches the updated hardware proxy $h^{nn}$:

The final algorithm is an iterative process: we first co-optimize the hardware proxy and the control policy to improve task performance, then extract explicit hardware parameters that match the current version of the hardware proxy.
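The parameter-extraction step can be illustrated with a toy example. The sketch below is not CMA-ES: it swaps in a much simpler evolution strategy with a fixed step-size decay, and it uses a hypothetical one-dimensional `proxy` and `model` in place of the trained network and the physical forward model. It only shows the idea of searching for parameters whose model output matches the proxy on sampled inputs.

```python
import random

def extract_parameters(proxy, model, inputs, dim,
                       generations=100, pop=20, elite=5, seed=0):
    """Search phi so that model(phi, x) matches proxy(x) on the sampled inputs."""
    rng = random.Random(seed)

    def loss(phi):
        return sum((proxy(x) - model(phi, x)) ** 2 for x in inputs)

    mean, sigma = [0.0] * dim, 1.0
    for _ in range(generations):
        # Sample candidates around the current mean and keep the best few.
        candidates = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                      for _ in range(pop)]
        candidates.sort(key=loss)
        best = candidates[:elite]
        mean = [sum(c[k] for c in best) / elite for k in range(dim)]
        sigma *= 0.9  # fixed decay; CMA-ES adapts a full covariance instead
    return mean

# Toy stand-ins: the "proxy" is a fixed line, the "model" a line with free phi.
proxy = lambda x: 2.0 * x - 1.0
model = lambda phi, x: phi[0] * x + phi[1]
inputs = [i / 5.0 - 1.0 for i in range(11)]
print(extract_parameters(proxy, model, inputs, dim=2))
```

In the real pipeline the loss would compare the proxy network against the physical model over states and actions visited by the policy, and the search would run over the full design vector rather than two toy coefficients.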

IV Experimental Set-up

IV-A Design and control co-optimization

To evaluate our method, we optimize the aforementioned design with several goal-reaching tasks, i.e., reaching a goal location in 2D with its end-effector. We have three sets of experiments demonstrating three ways to utilize our co-optimization pipeline for flexible motor skills:

Goal reaching via re-fabrication: We adapt all design and control parameters to different goals but only train each policy for a single goal. In this specific experiment, reaching multiple goals requires the re-fabrication of a real robot since the link length and pulley radii cannot be changed after assembly without fabricating new parts and reassembling the manipulator.

Goal reaching via online hardware updates: In this experiment, we optimize the hardware design in two stages: we first optimize design parameters that require re-fabrication (i.e., pulley radii) for a specific goal. Then, we fix all parameters except the pre-tension elongation and co-optimize for a different goal.

Goal reaching via multi-goal control policies: We optimize all parameters to learn a shared design and control that can achieve multiple goals with a single control policy. In this case, we do not need to adapt any of the hardware parameters to reach multiple goals after the policy is trained.

The first experiment is our design’s most limited application due to the need for re-fabrication. However, it demonstrates that our transmission can be optimized to reach different areas of the fully actuated workspace. We also train control policies with fixed initial design parameters to compare task performance. Fig. 5 shows the goal distribution for the first experiment set. The second set of experiments leverages a key aspect of our design: the ability to easily adapt some design parameters without having to go through tedious re-design, fabrication, and assembly. For this second set, we keep the same pulley radii and only optimize the pretension elongation for a goal different from the first experiment. In the first and second experiments, we use the state space described in Section III as the input to our control policy.

The last experiment demonstrates that our robot, while being underactuated, can achieve different goals with the same design parameters and control policy, provided the desired goals are not too far away. The state space is extended from the first two experiment sets with the addition of the goal locations. To establish the specific set of goals we hope to achieve, we sample goals randomly around a center location (−0.16, 0) within a radius of 50 mm. For evaluation, we randomly sample 20 goals and execute the policy 10 times for each goal. This experiment requires re-assembly of the entire tentacle as we optimize all the design parameters. Therefore, we only run this experiment in simulation.
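The evaluation protocol above can be sketched as follows. This is a minimal illustration, not the authors' code: the text only says the goals are sampled "randomly", so the uniform-over-the-disk distribution, the assumption that the center coordinates are in meters, and the helper name `sample_goals` are all assumptions made here.

```python
import math
import random

def sample_goals(center, radius, n, rng):
    """Draw n goals uniformly from a disk of the given radius around center."""
    goals = []
    for _ in range(n):
        # The square root spreads samples uniformly over the disk area
        # instead of clustering them near the center.
        r = radius * math.sqrt(rng.random())
        phi = 2.0 * math.pi * rng.random()
        goals.append((center[0] + r * math.cos(phi),
                      center[1] + r * math.sin(phi)))
    return goals

rng = random.Random(0)
CENTER = (-0.16, 0.0)  # assumed to be expressed in meters
RADIUS = 0.05          # 50 mm expressed in meters

goals = sample_goals(CENTER, RADIUS, 20, rng)
# Evaluation schedule: 10 policy executions for each of the 20 goals.
schedule = [(goal, run) for goal in goals for run in range(10)]
```

With 20 goals and 10 executions each, the schedule contains 200 rollouts.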

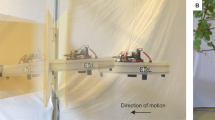

IV-B Hardware implementation and sim-to-real transfer

As shown in Figs. 1 and 6, we physically build the manipulator with the optimized pulley radii from the first two experiment sets. To simplify both fabrication and assembly, we set \(R_e = 15\,\mathrm{mm}\). The transmission in this robot is driven by two Dynamixel XM-430 servos that sense and control the angle \(\theta_a\) of the actuated winches. During our experiments, we fix the manipulator to an experimental rig, which has a mounted camera looking down on the robot. The camera is used in lieu of joint angle encoders: we calculate the joint angles of our robot \(\theta_J\) by tracking the pose of AR tags attached to the robot, as shown in Fig. 6. We use the joint angles collected from the AR tags to execute closed-loop policies for the first two experiment sets. In this case, we close the loop by taking observations from the real robot during policy execution and using our optimized control policy to determine the next action. In addition to closed-loop policies, we also train a control policy to reach the goal in simulation and then directly transfer this policy to the real robot without adjusting any of the actions during runtime.

We run both the first and second experiment set on the real robot hardware for both closed-loop and open-loop policies. In the next section, we evaluate the accuracy and precision over 20 samples on the real robot for each set of data.

V Results and Analysis

V-A Co-optimization results

Our results from the first set of experiments show that our model learns different design parameters for different goal locations with an error of 1.80 mm. As shown in Fig. 5, our model can adapt hardware parameters to different goals. When we only learn a control policy with a fixed design, the initial design can achieve only 1 out of 9 goals, with much lower average returns than our method (Fig. 4). Although the robot’s workspace may contain the goal, the initial hardware parameters make learning a stable control policy difficult. After the design parameters are optimized, the control policy can learn a stable action sequence to arrive at the goal position.

In the second experiment set, our results show we can optimize only the pulley radii to achieve one goal (0.13, 0.3) with 1.8 mm error in simulation. Then, we fix the pulley radii and only optimize the preloaded extension for another goal (0.16, −0.08) with 3.2 mm error in simulation. Using the derived design parameters, we build a real tentacle robot and directly transfer the control policy from simulation to the real world.

Our results show that the transfer policy achieves, on average, 6.3 mm error to the goal location. During training, since the control policy is co-optimized with the non-stationary hardware proxy, it is robust to perturbations of the hardware parameters. We test both open- and closed-loop performance on the real robot. In the open-loop test case, we record action sequences executed in simulation and directly execute them on the real robot. The robot can reach the goal with 12.97 mm error.

V-B Sim-to-real accuracy

A key focus of our paper is the ability to transfer the optimized design and control policy to physical robotic hardware. We achieve accurate transfer in terms of task performance, as shown in Table I. Our closed-loop results show that taking observations directly from the real robot improves task performance substantially. The closed-loop policies’ standard deviation of the distance to the goal is much lower, which indicates that they yield more consistent results in the real world. Additionally, the average final distance to the goal for the closed-loop policies is much lower, indicating higher accuracy. Our policy is robust to actuation errors by reasoning about the current robot state and producing actions that correct its errors. In Table II, we calculate the average difference between the real robot state and the simulated robot state for both the closed-loop and open-loop sets of experiments. The average error in joint space for closed-loop policy execution is about half the error for open-loop execution. Similarly, the average distance between the real and simulated end-effector positions for the entire action sequence of each experiment is much lower for closed-loop policies.
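The two statistics discussed above, average final distance to the goal (accuracy) and its standard deviation (consistency), can be computed as in the sketch below. The function names are hypothetical, and the positions are made-up placeholder values chosen only to exercise the code; they are not measurements from the paper.

```python
import math
import statistics

def goal_errors(final_positions, goal):
    """Euclidean distance from each final end-effector position to the goal."""
    return [math.dist(p, goal) for p in final_positions]

def summarize(errors):
    """Return (mean, sample standard deviation) of the distances."""
    return statistics.mean(errors), statistics.stdev(errors)

# Placeholder data in meters, for illustration only.
goal = (0.0, 0.0)
final_positions = [(0.003, 0.004), (0.0, 0.01)]

errors = goal_errors(final_positions, goal)
mean_error, std_error = summarize(errors)
```

A lower mean indicates higher accuracy, and a lower standard deviation indicates more repeatable behavior across trials.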

V-C Energy manifold optimization

Our forward model is an optimization-based model. Each forward step requires many optimization steps to find a global energy minimum, which is crucial for accurately simulating our robot. Each set of hardware parameters, along with a control action, corresponds to a manifold in the energy landscape. Although the global energy landscape does not have any local optima, a manifold can be discontinuous and have multiple local minima. This introduces difficulties for optimization and constrains our choice of optimization algorithm to global optimization (e.g., genetic algorithms). However, given a fixed time budget, global optimization can be inefficient and slow down our simulated robot, since it generally searches a large state space.

As mentioned in Section III-B, when we optimize the control and hardware parameters of this robot, we also optimize the manifold for energy optimization. Our experiments show that our co-optimization process results in an energy manifold that is easy to learn by task. As shown in Fig. 7, the original hardware design parameters provide manifolds that are discontinuous, spread across different regions of the energy landscape, and have several local minima. After MORPH, the resulting manifold (see Fig. 7b) is continuous and smooth. To further analyze the optimized manifold’s effect, we apply the Sequential Least Squares Programming optimizer (SLSQP) [kraft1988software], a local optimization algorithm, with a time budget of 500 optimization steps and 100 random actions, to both the unoptimized and optimized manifolds and compare the results to those of a global search algorithm implemented in-house. Given the same time budget, the simulated results have a much higher error with the unoptimized hardware (0.42) than with the optimized hardware design (0.18). This means that the manifold resulting from our optimization is more suitable for fast simulation with local optimization.

VI Conclusion

In this work, we present a method for co-optimizing the design and control of underactuated kinematic chains. The key to our approach is an optimization-based forward actuation model that effectively captures the behavior of our robot design, together with a co-optimization pipeline capable of learning with non-differentiable physics. Our experimental results from simulation and the real world demonstrate that our design, along with the flexibility provided by hardware optimization, results in flexible robot capabilities while enjoying the benefits of underactuation. A key limitation of our current work is the task complexity. While being general, our forward actuation model assumes quasi-static motion, making contact-rich tasks difficult. In future work, we aim to extend our approach to more complex tasks. Another future direction is to further utilize our online adaptable design and explore novel mechanisms to make part of the design adaptable.


- Open access

- Published: 25 May 2024

Constrained trajectory optimization and force control for UAVs with universal jamming grippers

- Paul Kremer 1 ,

- Hamed Rahimi Nohooji 1 &

- Holger Voos 1 , 2

Scientific Reports volume 14, Article number: 11968 (2024)


- Aerospace engineering

- Computational science

This study presents a novel framework that integrates the universal jamming gripper (UG) with unmanned aerial vehicles (UAVs) to enable automated grasping with no human operator in the loop. Grounded in the principles of granular jamming, the UG exhibits remarkable adaptability and proficiency, navigating the complexities of soft aerial grasping with enhanced robustness and versatility. Central to this integration is a uniquely formulated constrained trajectory optimization using model predictive control, coupled with a robust force control strategy, increasing the level of automation and operational reliability in aerial grasping. This control structure, while simple, is a powerful tool for various applications, ranging from material handling to disaster response, and marks an advancement toward genuine autonomy in aerial manipulation tasks. The key contribution of this research is the combination of a UG with a suitable control strategy, that can be kept relatively straightforward thanks to the mechanical intelligence built into the UG. The algorithm is validated through numerical simulations and virtual experiments.


Introduction

The evolution of UAVs over the past decade has significantly transformed the landscape of numerous scientific and industrial domains 1 , 2 , 3 , 4 . Initially envisioned as aerial sensors, UAVs demonstrated unparalleled utility in surveillance 5 , 6 , forest fire monitoring 7 , building inspection 8 , 9 , forestry 10 and terrain mapping 11 due to their agility and accessibility to challenging environments 12 , 13 . However, despite their remarkable success in sensing and monitoring, UAVs initially struggled in scenarios that demanded physical interaction with the environment.

Emerging as a solution, the field of aerial manipulation sought to equip UAVs with robotic manipulators 14 , 15 , 16 and claw-like grippers 17 , 18 , 19 . This marked the beginning of a new era where UAVs could engage in tasks requiring direct physical contact 20 . However, these traditional claw-like rigid grippers required precise alignment and positioning relative to the target object 21 . Even minor deviations could prevent successful grasping, making them susceptible to inaccuracies and necessitating exhaustive grasp analysis.

Soft aerial grasping emerged as an evolution to address the challenges posed by traditional grasping mechanisms. Soft grippers, with their adaptability and compliance, can seamlessly fit various object geometries, significantly minimizing the need for detailed grasp analysis 22 , 23 , 24 , 25 , 26 . Inherent to soft robotics is their lightness and versatility thanks to the passive intelligence baked into their mechanical structure. Not only does this reduce the mechanical impact during interactions but it also makes the grippers particularly suitable for aerial applications. The inherent compliance of soft robotics presents an innovative solution to the difficulties related to tolerance in object grasping 25 . Their ability to passively conform to objects leverages the concept of morphological computation, where the gripper’s passive mechanical structures supplement the role of active controls 27 , 28 .

A particular type of soft gripper was introduced in 29 , called the universal jamming gripper (UG). The UG is based on the principle of granular jamming where certain materials transition from a fluid- to a solid-like state when subjected to external pressure 30 . This unique mechanism allows particles to flow and take arbitrary shapes, then solidify in place under external pressure 31 . The UG’s lightweight composition features a membrane filled with such a material that hardens under vacuum, establishing a firm grasp on the englobed object via a combination of suction, physical interlocking, and friction. Unlike many other soft grippers, the UG is specially crafted to engage with a wide array of object geometries 32 , 33 , 34 and delicate manipulations 35 . Its symmetric design, omnidirectional grasping capabilities, and passive compliance sidestep some of the complexities inherent to traditional grippers, giving room to simpler control strategies.

Guidance and control strategies are the foundational pillars in aerial manipulation literature. Typically, trajectory generation and low-level tracking control are used to meet the mission requirements 36, 37. The complexity of the tracking controller generally depends on the type of aerial manipulator (AM). AMs equipped with heavy serial link manipulators require their dynamics to be taken into account 38. In contrast, simple claw-like grippers can often be treated as an unknown disturbance, effectively handled by common model-free robust control schemes such as PID or sliding mode control (SMC). Trajectory generation often distinguishes between global and local trajectories, where global trajectories require path planning utilizing some form of map representation 37. Local, short-term trajectory generation is frequently formulated as an optimization problem, specifically adapted to the nature of the mission. For instance, B-splines and sequential quadratic programming were used in 39 to generate minimum-time trajectories. Real-time minimal jerk trajectories for aerial perching were shown in 40, while multi-objective optimization-based trajectory planning was demonstrated in 41. Dynamic artificial potential fields were used to follow a moving ground target in 42. Lastly, stochastic model predictive control (MPC) and dynamic movement primitives were applied in 43 and 44.

MPC is a control strategy that leverages online optimization techniques to compute optimal control inputs over a receding horizon according to a given objective function. It has been used with great success in many domains 45, 46 thanks to its ability to work with non-linear system models and to respect arbitrary constraints. A common problem of this technique is the computational burden of the optimization step, which was especially problematic for systems exhibiting fast dynamics with limited compute resources (e.g., UAVs). However, in recent years, embedded systems have become more powerful, and optimization algorithms have become more efficient 47. In UAV trajectory generation, MPC can fully exploit the system’s dynamics while respecting constraints imposed by the environment and the manipulation task. Its ability to handle multiple (potentially conflicting) objectives and its robustness to dynamical uncertainties, thanks to the receding horizon approach, make it a prime candidate for local trajectory generation.

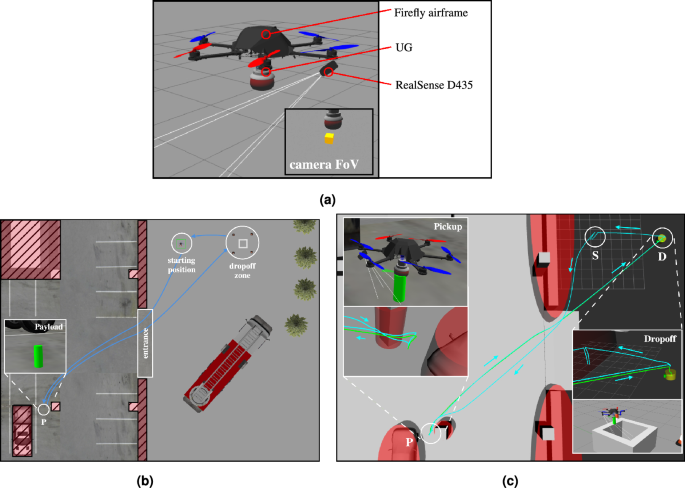

Our previous work 34 experimentally validated the UG’s grasping capabilities on a real UAV in an open-loop setup, highlighting the benefits of an automated approach. The reliance on a human in the loop inherently limits the scalability and adaptability of UAV operations in grasping tasks. Addressing this shortcoming, this study melds the inherent passive intelligence of the gripper with a simple, robust control scheme, marking a significant leap towards fully automated grasping.

The main objective of this paper is to introduce a novel control architecture for aerial grasping with soft jamming grippers, addressing the prevalent challenges in UAV deployments for material handling and grasping tasks. This initiative is realized by customizing a soft universal jamming gripper for aerial grasping and developing a model predictive controller to constrain the UAV’s motion while enhancing operational autonomy and safety. The contributions of this research encompass:

- Formulation and implementation of a constrained path planning algorithm, specifically designed for navigating UAVs in complex aerial environments, utilizing model predictive control (MPC) adapted to the UG.

- Integration and application of a force control strategy, ensuring both efficacy and robustness in grasping operations.

- Presentation and validation of a scenario that showcases the framework’s efficacy, contributing to elevating the level of autonomy in aerial grasping tasks, verified through simulations and virtual experiments.

The salient features of this framework are its simple structure, straightforward architecture, and the synergetic effects between the control system and the passive intelligence of the UG to solve an otherwise complex problem.

The paper is structured as follows: We begin by articulating the problem statement and outlining the necessary technical background, followed by a deep dive into MPC where we elaborate on its formulation and adaptations tailored for the proposed grasping mechanism. Next, we introduce the force control strategy that dictates the gripping actions, ensuring both secure and reliable grasping. After this, we present the numerical simulation process, followed by a description of the virtual experiments conducted to validate our framework, highlighting the simulations and results that underscore the effectiveness of our approach. We then discuss and interpret the findings, explore their implications, and suggest future research directions. Finally, we conclude by summarizing the key takeaways of our research.

Problem statement and preliminaries

Problem statement

The main objective of this research is to enhance autonomy in aerial grasping tasks involving a UAV equipped with a UG. Achieving this autonomy necessitates overcoming several challenges:

Optimal approach planning. The UAV, armed with a UG, must efficiently navigate toward the target object in cluttered and potentially changing environments. The planning algorithm should be robust, adaptable, and capable of handling real-time environmental perturbations, ensuring that the UAV can modify its trajectory dynamically to avoid obstacles and save energy.

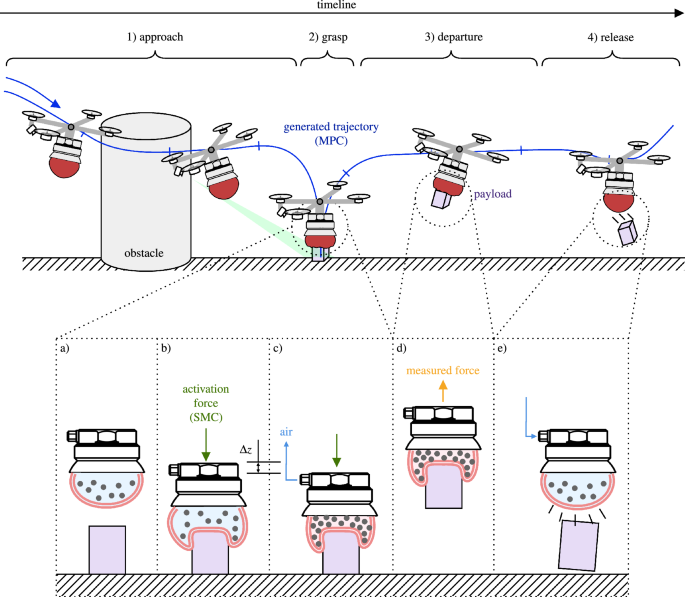

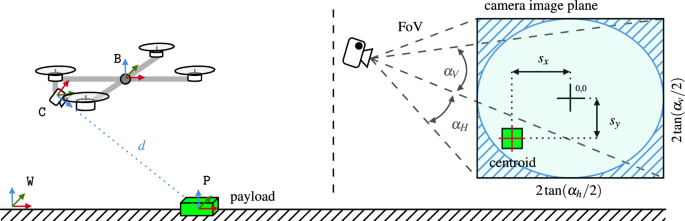

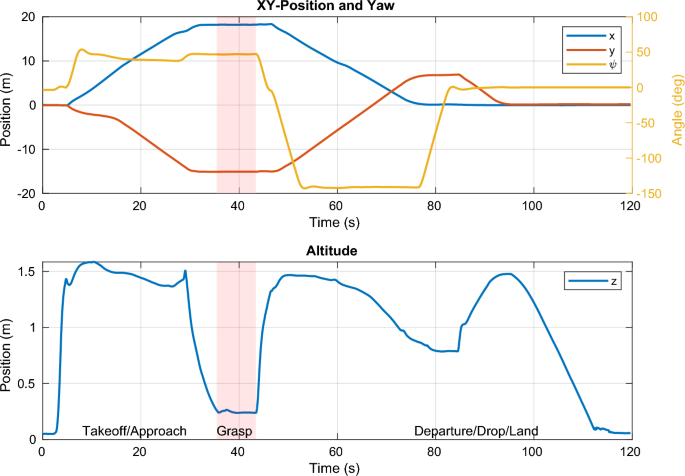

Schematics of autonomous grasping setup with a UG-equipped UAV. (1) The UAV approaches the payload avoiding all obstacles by tracking the MPC-generated trajectory. The payload’s position is roughly known, and then refined as it appears in the FoV of the camera. (2) As the gripper makes contact with the payload, the grasping procedure is started and the UAV switches into force control mode (SMC). (3) Once a reliable grasp is established, the UAV departs with the payload. (4) Upon reaching its destination, the payload is released. ( a ) The membrane is filled with air and a granular material. ( b ) An activation force pushes the membrane against the target, partially englobing the object. ( c ) Air is removed from the membrane. It shrinks and jams the particles, turning itself into a solid-like state. The contact force has to be maintained during this transition to establish a reliable grasp. ( d ) The resulting friction, geometric interlocking, and suction create a firm grasp. An integrated load cell measures the weight of the payload, used as feed-forward in the control architecture. ( e ) Pumping air into the membrane releases the object.

Effective grasping. The grasping mechanism utilizes particle jamming and a soft membrane to establish a firm yet gentle grasp on the target object, as depicted in Fig. 1a–c. The control strategy should regulate the activation force, mitigating the risk of damage during the interaction. Furthermore, it must ensure that contact is maintained during the closing transition of the gripper, a necessary condition for a strong grasp. Moreover, it has to deal with the changing physical properties during the jamming transition of the gripper (from soft to rigid).

Adaptability post-grasping. Following the capture of the payload, the UAV, in its role as an aerial manipulator (AM), must efficiently transport the payload to a designated location. This phase leverages the payload’s measured mass as a feed-forward element in the AM’s control system for rapid adjustment (Fig. 1d). This swift recalibration, essential for maintaining flight stability under increased weight, ensures safe and effective delivery.

The overarching goal is to craft a comprehensive control framework that synergizes with the innovative mechanics of the UG, resulting in a fully automated aerial grasping system. This framework should be capable of navigating the complexities of real-world environments, ensuring the seamless execution of aerial grasping tasks from approach to object retrieval and final deposition. The proposed framework and its integral components, aimed at solving the outlined problems, will be evaluated through numerical simulations and virtual experiments to validate their effectiveness and applicability in practical scenarios. The envisioned automated grasping setup is depicted in Fig. 1 .

UG modeling

This section summarizes crucial insights into the modeling of the UG, laying the foundation for the virtual experiments discussed later in the manuscript. For an in-depth exploration of the UG’s design, fabrication, integration, modeling, and experiments on a UAV, readers are directed to 34 .

Key to the UG’s capabilities is its ability to shift from a soft to a rigid state, see Fig. 1 b–c. This adaptability significantly enhances the robustness of aerial manipulation tasks. Notably, the UG’s ability to adapt to various object geometries negates the need for precise control based on object orientation, thereby drastically simplifying the control complexity.

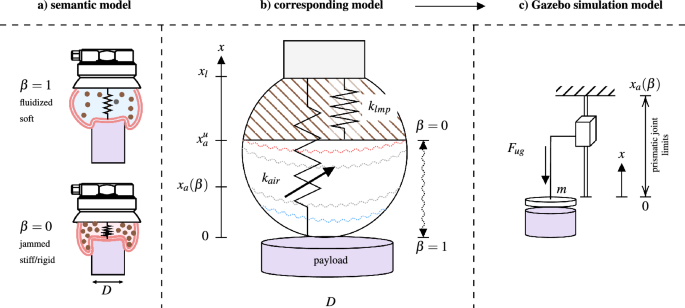

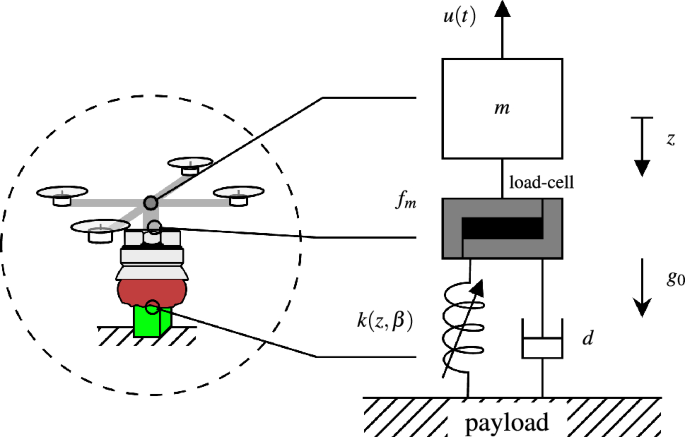

To render the UG’s behavior in robotic simulators, we propose the contact model in Fig. 2. This model combines two non-linear compression springs, \(k_{lmp}\) and \(k_{air}\). The former captures the lumped stiffness of the jammed filler material, while the latter captures the dynamics of the air-filled membrane during contact. The latter lumps together numerous parameters, including the effective contact area and the non-linear elastic behavior of the membrane, much like the air spring described in 48. However, pinpointing a precise model proves challenging and offers limited practical utility in this context.

UG contact model. (a) Semantic model of the UG showing its soft (fluidic) state, and its rigid (closed) state. (b) The corresponding model delineates the membrane into two components: the air-filled elastic part and the system’s remainder (filler material and structural components), represented as compression springs \(k_{air}\) and \(k_{lmp}\) in a parallel configuration. (c) The derived simulated system encompasses a disk (body) with a mass m, coupled to a prismatic joint with finite, state-dependent travel, governed by the combined elastic force \(F_{ug}\) that substitutes the parallel spring arrangement.

We employed non-linear regression analysis to identify the interrelation between the depth of entrance x , the payload diameter D , and the resultant elastic force \(F_{air}\) . Concurrently, the system’s lumped elastic force is denoted as \(F_{lmp}(x)\) . The combined elastic force \(F_{ug}\) is then derived from the equation

where h ( y ) represents the compression-only action of the springs, defined as \(h(y) = 0\) if \(y < 0\) , and \(h(y) = y\) if \(y \ge 0\) .

The free length \(x_a\) , which depends on the normalized free volume \(\beta\) , follows the linear mapping \(x_a = x^u_a \cdot \beta\) . Here, \(x^u_a\) , determined experimentally to be 40.8 mm, denotes the minimal volume that the filler material occupies in the jammed state. \(\beta\) serves as a pivotal parameter in the model, representing the ratio of the current free volume to the maximum free volume within the membrane. It operates as a dynamic entity, delineating the transitions between the gripper’s fluidic and rigid states, thereby facilitating the control and adaptation of the UG to various object geometries. In the s-domain, \(\beta\) is described by the equation

with R ( s ) serving as a unit step, s representing the Laplace variable, and T denoting the system’s time constant, approximated as 4.3 s 34 . Variable \(\beta\) discerns three discrete states, outlined as

highlighting the system’s increased stiffness as \(\beta\) approaches zero. This contact model is pivotal in developing a digital copy of the UG for robotic simulators such as Gazebo .
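The pieces of the contact model that are fully specified in the text can be sketched as below. The compression-only function h(y), the linear mapping for the free length, and the constants 40.8 mm and 4.3 s come from the text. The transfer function for \(\beta\) is not reproduced here, so the first-order lag in `beta_step`, and the convention that closing the gripper drives \(\beta\) from 1 toward 0, are assumptions made for illustration.

```python
import math

T_JAM = 4.3      # time constant T in seconds (from the text)
X_A_U = 40.8e-3  # x_a^u in meters (from the text)

def h(y):
    """Compression-only action: zero for negative input, identity otherwise."""
    return y if y >= 0 else 0.0

def beta_step(t, beta_start, beta_target, T=T_JAM):
    """Assumed first-order lag response of beta to a step in its set point."""
    return beta_target + (beta_start - beta_target) * math.exp(-t / T)

def free_length(beta):
    """Linear mapping x_a = x_a^u * beta."""
    return X_A_U * beta

# Assumed closing transition: beta decays from 1 (fluidic) toward 0 (jammed).
beta_after_T = beta_step(T_JAM, beta_start=1.0, beta_target=0.0)
```

Under this assumption, one time constant after the closing command \(\beta\) has dropped to about 37% of its initial value, and the free length shrinks proportionally.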

UAV modeling

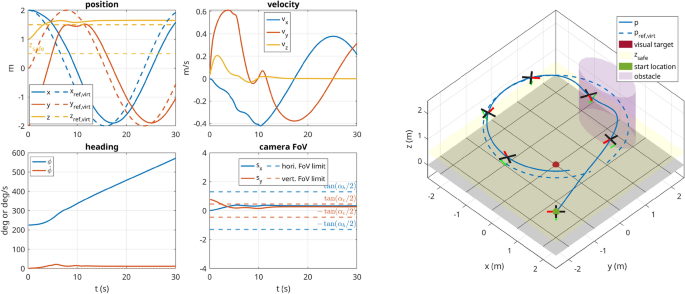

The optimal trajectory of the AM is determined by balancing multiple objectives:

- Energy efficiency: Minimize control effort to ensure the path is energetically cost-effective.

- Visual servoing: Maintain the targeted object within the camera’s field of view for accurate localization.

- Grasping approach: Initially, maintain a distance \(z_{\text{grab}}\) from the floor to prevent collision with the payload and other objects during the approach. Subsequently, when close, descend following a near-vertical path to engage the gripper with the payload.

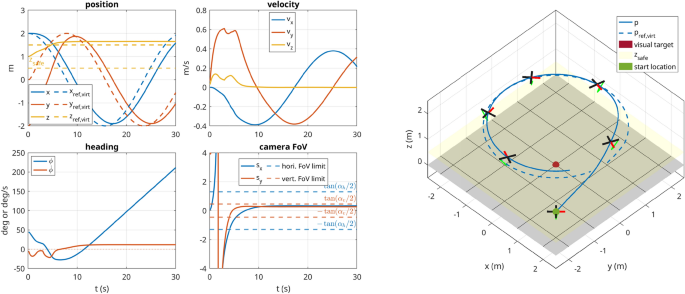

For the problem visualized in Fig. 1 , the following state vector is defined

with \({}^{\texttt{W}}\pmb{p} = \left( x, y, z, \psi \right)\) and \({}^{\texttt{B}}\pmb{v} = \left( v_x, v_y, v_z, v_\psi \right)\), where \({}^{\texttt{W}}\pmb{p}\) is the position, \(\psi\) the yaw angle of the UAV, and \({}^{\texttt{B}}\pmb{v}\) the velocity of the robot. The superscript indicates the frame of reference: \(\texttt{W}\) for world and \(\texttt{B}\) for body. Lastly, \(\pmb{z}\) is defined as the vector of parameters which includes

with \({}^{\texttt{W}}\pmb{p}_{\texttt{ref}} = \left( x_{ref}, y_{ref}, z_{ref}, \psi_{ref}\right)\), \({}^{\texttt{W}}\pmb{p}_{\texttt{vis}} = \left( u_{ref}, v_{ref}, w_{ref}\right)\), and \(\pmb{o}_{\texttt{i}} = \left( o_{x,i}, o_{y,i}, o_{sx,i}, o_{sy,i}\right)\), where \({}^{\texttt{W}}\pmb{p}_{\texttt{ref}}\) is the desired position of the UAV, \({}^{\texttt{W}}\pmb{p}_{\texttt{vis}}\) is the visual target location (point of interest), \(v_0\) and \(v_N\) are the desired velocities at the start and at the end of the horizon, respectively, \(c_{lock}\) is the binary state indicating whether the visual lock has been acquired (i.e., whether a visual target is present), \(z_{safe}\) designates the safety altitude, and \(o_{(\cdot),i}\) represents the xy-centerpoint and the minor and major axes of the i-th (out of \(N_o\)) ellipsoidal obstacle. The separation of the visual target and the desired position, in combination with \(c_{lock}\), gives a great deal of flexibility. By having \({}^{\texttt{W}}\pmb{p}_{\texttt{ref}} \ne {}^{\texttt{W}}\pmb{p}_{\texttt{vis}}\), the AM can be tasked to explore the vicinity of the object of interest while keeping it in the field of view of the sensor. At the same time, the sensor lock can be disabled by setting \(c_{lock}=0\) to prevent the controller from tracking the target in certain cases (e.g., when moving to the drop-off area). For the actual grasping, \({}^{\texttt{W}}\pmb{p}_{\texttt{ref}} \approx {}^{\texttt{W}}\pmb{p}_{\texttt{vis}}\), as the visual target position generally corresponds to the payload position.
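To make the obstacle parameters concrete, the sketch below tests whether an xy position falls inside one obstacle footprint. It assumes that \(o_{sx,i}\) and \(o_{sy,i}\) are semi-axis lengths and that the ellipse is aligned with the world x and y axes; neither detail is stated in the text, and the function name is hypothetical. How the obstacles enter the MPC constraints is not shown here.

```python
def inside_obstacle(x, y, obstacle):
    """Return True if (x, y) lies inside or on the obstacle ellipse.

    obstacle = (o_x, o_y, o_sx, o_sy): xy center and semi-axes
    (assumed axis-aligned, assumed semi-axis lengths).
    """
    o_x, o_y, o_sx, o_sy = obstacle
    return ((x - o_x) / o_sx) ** 2 + ((y - o_y) / o_sy) ** 2 <= 1.0

# Hypothetical obstacle centered at the origin, 2 m by 1 m semi-axes.
obstacle = (0.0, 0.0, 2.0, 1.0)
blocked = inside_obstacle(1.9, 0.0, obstacle)
clear = not inside_obstacle(0.0, 1.1, obstacle)
```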

Regarding the state dynamics, we propose a basic kinematic UAV hover model, which represents a great simplification of the actual UAV dynamics. High-performance applications generally require more faithful models; however, our main concern is to generate a feasible trajectory that the relatively slow-flying UAV can track. This simple model has the advantage of being easily identifiable and relatively lightweight from a computational point of view. The state dynamics are thus defined as follows:

where \({}^{\texttt{W}}\pmb{R}_{\texttt{B}} \in SO(3)\) is the rotation from frame \(\texttt{B}\) to \(\texttt{W}\), \(\odot\) designates the component-wise vector multiplication, and the subscript in brackets indicates the components of the respective vector, e.g., \({}^{\texttt{B}}\pmb{v}_{[xyz]} \in \mathbb{R}^3\) indicates the x, y, z components of \({}^{\texttt{B}}\pmb{v} \in \mathbb{R}^4\).

The gains \(k_i\) and time constants \(\tau_i\) of the system were determined from the simulated AM with the classical step-response tangent method:

The results of the system identification are given in Table 1 .
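The following sketch shows what one integration step of such a kinematic hover model could look like. It is an assumption, not the paper's equation: it supposes that each body-frame velocity component tracks its command as a first-order lag with gain \(k_i\) and time constant \(\tau_i\), that the rotation from body to world reduces to a yaw rotation near hover, and that explicit Euler integration is adequate. The gains and time constants used below are placeholders, not the values of Table 1.

```python
import math

def hover_model_step(p, v, u, k, tau, dt):
    """One explicit-Euler step of an assumed first-order kinematic hover model.

    p = (x, y, z, psi) in the world frame, v = (vx, vy, vz, vpsi) in the body
    frame, u = commanded velocities, k / tau = per-axis gains / time constants.
    """
    x, y, z, psi = p
    vx, vy, vz, vpsi = v
    c, s = math.cos(psi), math.sin(psi)
    # Rotate the body-frame linear velocity into the world frame (yaw only).
    p_dot = (c * vx - s * vy, s * vx + c * vy, vz, vpsi)
    # Each velocity component follows its command as a first-order lag.
    v_dot = tuple((k_i * u_i - v_i) / tau_i
                  for k_i, u_i, v_i, tau_i in zip(k, u, v, tau))
    p_next = tuple(p_i + dt * d for p_i, d in zip(p, p_dot))
    v_next = tuple(v_i + dt * d for v_i, d in zip(v, v_dot))
    return p_next, v_next

# Placeholder parameters for illustration only.
k = (1.0, 1.0, 1.0, 1.0)
tau = (0.5, 0.5, 0.5, 0.5)
p, v = (0.0, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0)
for _ in range(1000):  # 10 s of simulated time at dt = 0.01 s
    p, v = hover_model_step(p, v, (1.0, 0.0, 0.0, 0.0), k, tau, 0.01)
```

With a constant forward command, the forward velocity settles at the gain times the command, which is the behavior a step-response identification would rely on.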

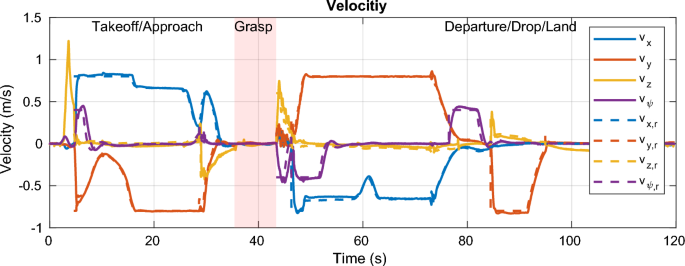

Trajectory optimization using model predictive control

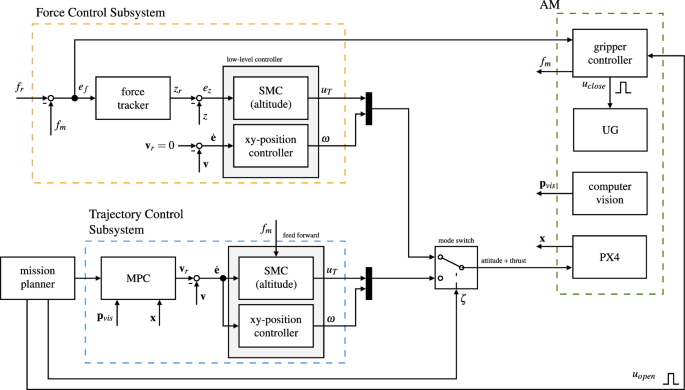

The MPC-based trajectory generation subsystem calculates the optimal velocity commands based on the cost function J developed hereafter. This subsystem is embedded in the AM controller as shown in Fig. 3 .

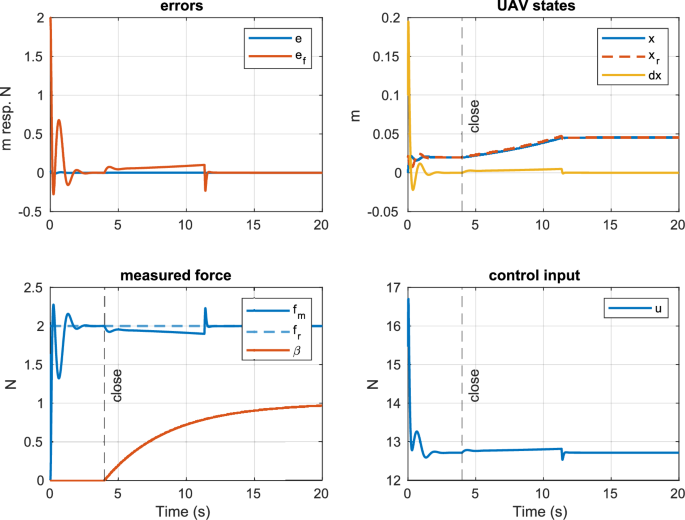

The control architecture consists of a force control subsystem and the MPC trajectory generator orchestrated by the mission planner (a state machine). Depending on the state of the mission, \(\zeta\) switches between the force tracking and the trajectory tracking control modes. Both control modes share a common low-level controller made from the sliding-mode altitude controller and the xy -position controller (cascading PID controller). The force controller commands a cumulative force \(u_T\) to minimize the error \(e_f\) via an intermediate altitude error \(e_z\) . The trajectory controller feeds velocity commands to the low-level controller. Furthermore, it takes the measured weight of the payload as a feed-forward signal to quickly adapt to the changed dynamics. The gripper controller closes the gripper by emitting \(u_{close}\) once a steady state is reached. The mission planner keeps track of all states and executes the mission.

Problem formulation

The discrete-time optimization problem formulation is given by 49 :

where J and E are the stage and terminal costs, respectively. \(H_n\) and \(H_N\) represent general inequality constraints, f is the system dynamics (6), n is the stage number with a total of N stages in the control horizon, and \(\pmb{u}\) are the control inputs.
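For orientation, a generic discrete-time optimal control problem that is consistent with the symbols defined above could be written as follows. This is a sketch of the standard form, not necessarily the exact formulation of problem (8); in particular, the dependence of the costs and constraints on the parameter vector \(\pmb{z}\) and the use of a discretized version of f are assumptions.

```latex
\begin{aligned}
\min_{\pmb{u}_0, \dots, \pmb{u}_{N-1}} \quad
  & E(\pmb{x}_N, \pmb{z}) + \sum_{n=0}^{N-1} J(\pmb{x}_n, \pmb{u}_n, \pmb{z}) \\
\text{s.t.} \quad
  & \pmb{x}_{n+1} = f(\pmb{x}_n, \pmb{u}_n), \quad n = 0, \dots, N-1, \\
  & H_n(\pmb{x}_n, \pmb{u}_n, \pmb{z}) \le 0, \quad n = 0, \dots, N-1, \\
  & H_N(\pmb{x}_N, \pmb{z}) \le 0,
\end{aligned}
```

Here \(\pmb{x}_0\) is the current measured state, so the problem is re-solved from a new initial condition at every control step.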

Within this work, the proximal averaged Newton-type method for optimal control (PANOC) algorithm 49 is used to solve the non-convex optimal control problem (OCP) that is part of the non-linear MPC formulation. The PANOC algorithm solves the fixed-point equations with a fast converging line-search, single direct shooting method. The algorithm, being a first-order method, is particularly well suited for embedded onboard applications with limited memory and compute power and generally outperforms sequential quadratic programming (SQP) methods.

The solution to problem ( 8 ) is only optimal within the fixed-length moving horizon. Globally seen, the output, i.e., the trajectory, can be suboptimal, even with a risk of getting trapped in a local minimum.
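The single-shooting idea mentioned above can be sketched as follows: the states are eliminated by simulating the dynamics forward, so the cost becomes a function of the input sequence only. The toy model (a first-order velocity lag feeding a position integrator), the weights and all names are assumptions made for illustration; this is not the AM model of the source and no PANOC solver is involved.

```python
import numpy as np

def rollout_cost(x0, u_seq, f, stage_cost, terminal_cost):
    """Single shooting: simulate f forward and accumulate the cost,
    so the result depends only on the input sequence u_seq."""
    x = np.asarray(x0, dtype=float)
    total = 0.0
    for u in u_seq:
        total += stage_cost(x, u)
        x = f(x, u)
    return total + terminal_cost(x)

# Hypothetical toy model: x = (position, velocity), u = velocity command.
DT, K, TAU = 0.1, 1.0, 0.5

def f(x, u):
    p, v = x
    return np.array([p + DT * v, v + DT / TAU * (K * u - v)])

P_REF = 1.0
stage = lambda x, u: (x[0] - P_REF) ** 2 + 0.01 * u ** 2
terminal = lambda x: 10.0 * (x[0] - P_REF) ** 2

# Compare two candidate input sequences over a horizon of N = 20 stages.
cost_zero = rollout_cost([0.0, 0.0], np.zeros(20), f, stage, terminal)
cost_push = rollout_cost([0.0, 0.0], np.full(20, 1.0), f, stage, terminal)
```

An optimizer would search over `u_seq` to minimize this value; in a moving-horizon scheme only the first input is applied before the problem is solved again.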

Cost function: perception

The UAV’s camera detects the payload in green and assigns it a position within its frame of reference. The centroid of the payload is projected back into the camera’s image plane, where it is assigned the coordinates \(s_x\) and \(s_y\) .

For a safe and reliable approach, the targeted object should preferably stay in the field of view (FoV) of the AM. Herein, we follow the development in 50 to impose this behavior on the AM. Imposing this as a hard constraint would in many cases lead to an infeasible problem. By softening those constraints, the MPC gets the flexibility to find a solution that is a more favorable compromise between the objectives, e.g., saving energy at the expense of slightly violating the perception constraint.

The violation of a constraint is a binary state: either the constraint is violated (1) or it is not (0). If it is violated, a non-zero cost is associated with it, which incentivizes the optimizer to find a solution that satisfies the constraint. This binary behavior is akin to the Heaviside step function

However, its discontinuous nature makes it unsuitable for numerical optimization. Better candidates are found in the family of logistic functions. Herein we use the sigmoid function instead of the Heaviside step function as it is continuously differentiable over its entire domain. The sigmoid function is defined as

where \(k_s\) defines the steepness of the transition between 0 and \(k_g\) . The parameter \(x_0\) defines the center point of the transition, i.e., \(\sigma \left( x, x_0, k_s, k_g\right) =k_g/2\) for \(x=x_0\) . Based on the sigmoid function, the unit pulse function is defined as

To satisfy the perception constraints, the MPC has to ensure that the centroid of the target object stays within the image plane of the camera as depicted in Fig. 4 . The formulation herein does not force the MPC to keep the target in the center of the image plane and thus avoids unnecessary movements, keeping the AM more stable.
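The smooth replacement for the step function described above can be sketched as follows. The scaled logistic form used here is an assumption: it is chosen because it satisfies the stated properties (a transition from 0 to \(k_g\), steepness \(k_s\), and the value \(k_g/2\) at \(x=x_0\)), and it may differ in detail from the equation of the source.

```python
import math

def heaviside(x, x0=0.0):
    """Discontinuous step: 0 below x0, 1 from x0 onwards."""
    return 1.0 if x >= x0 else 0.0

def sigmoid(x, x0=0.0, k_s=1.0, k_g=1.0):
    """Scaled logistic function rising smoothly from 0 to k_g around x0."""
    z = k_s * (x - x0)
    if z >= 0.0:
        return k_g / (1.0 + math.exp(-z))
    e = math.exp(z)          # stable branch: avoids overflow for large |z|
    return k_g * e / (1.0 + e)

mid_value = sigmoid(0.3, x0=0.3, k_s=80.0, k_g=2.0)   # value at the center
far_above = sigmoid(1.0, x0=0.0, k_s=80.0)            # close to k_g = 1
far_below = sigmoid(-1.0, x0=0.0, k_s=80.0)           # close to 0
```

A larger \(k_s\) brings the curve closer to the step function, but it also concentrates the gradient in a narrow band around \(x_0\).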

The object detection pipeline provides the position of the target object in world coordinates, denoted as \({}^{\texttt{W}}\pmb{p}_{\texttt{vis}}\), from which its position in the local camera frame \(\texttt{C}\) follows as \({}^{\texttt{C}}\pmb{p}_{\texttt{vis}} = \left( {}^{\texttt{W}}\pmb{T}_{\texttt{B}} \, {}^{\texttt{B}}\pmb{T}_{\texttt{C}}\right)^{-1} {}^{\texttt{W}}\pmb{p}_{\texttt{vis}}\), with \(\pmb{T} \in SE(3)\) being the transformation matrix between the frames given in its superscript and subscript. Its coordinates \(\pmb{s}\) in the virtual image plane are then obtained with the help of the following relationship:

Equation ( 12 ) has a singularity at \({{}^{\texttt{C}}\pmb {\MakeLowercase {p}}^{\mathtt {}}_{\texttt{vis}}}_{[z]} = 0\) . In practice, this is not an issue since the distance from the camera to the target can never be zero (the RealSense D435 has a minimal distance of 8 cm). Taking this into account, Eq. ( 12 ) is conditioned to eliminate the singularity at \({{}^{\texttt{C}}\pmb {\MakeLowercase {p}}^{\mathtt {}}_{\texttt{vis}}}_{[z]}=0\) as follows:

where \(s_p = \text {pulse}\left( x={{}^{\texttt{C}}\pmb {\MakeLowercase {p}}^{\mathtt {}}_{\texttt{vis}}}_{[z]}, x_0=0, k_s=80, k_g\right)\) and therefore \(\lim _{{{}^{\texttt{C}}\pmb {\MakeLowercase {p}}^{\mathtt {}}_{\texttt{vis}}}_{[z]} \rightarrow \pmb {\MakeLowercase {0}}} z^* = k_g\) , with \(k_g\) being a very small positive number.
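The frame change and the projection onto the virtual image plane can be sketched as follows. The sketch assumes the usual normalized pinhole projection \(\pmb{s} = (x/z,\, y/z)\) with the camera z-axis as the optical axis; the pulse-based conditioning of the depth is not reproduced, so the helper simply requires a non-zero depth. All function names and numeric values are hypothetical.

```python
import numpy as np

def world_to_camera(p_world, T_WB, T_BC):
    """Express a world-frame point in the camera frame: (T_WB T_BC)^-1 p."""
    p_h = np.append(np.asarray(p_world, dtype=float), 1.0)  # homogeneous
    p_cam = np.linalg.inv(T_WB @ T_BC) @ p_h
    return p_cam[:3]

def project(p_cam):
    """Normalized image-plane coordinates; valid only for non-zero depth."""
    x, y, z = p_cam
    return np.array([x / z, y / z])

# Hypothetical poses: body translated by (1, 2, 3), camera at the body origin.
T_WB = np.eye(4)
T_WB[:3, 3] = [1.0, 2.0, 3.0]
T_BC = np.eye(4)

p_cam = world_to_camera([1.5, 2.0, 5.0], T_WB, T_BC)
s = project(p_cam)
```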

The inequality constraints that keep \(\pmb{s}\) in the FoV of the camera (defined by \(\alpha_h\) and \(\alpha_v\)) are thus \(|\pmb{s}_{[x]}| < \tan\frac{\alpha_h}{2}\) and \(|\pmb{s}_{[y]}| < \tan\frac{\alpha_v}{2}\), or, more conveniently, a single inequality that restricts \(\pmb{s}\) to lie within an ellipse centered at \(\pmb{0}\) and defined by its minor and major axes \(h=\tan \alpha_h\) and \(w=\tan \alpha_v\) such that

By making use of the sigmoid logistic function, ( 14 ) becomes

Eq. ( 15 ) has, however, two problems. First, it represents a double elliptical cone, i.e., the constraint is satisfied even if the target is behind the camera. Second, \(\nabla c_{p,1}\approx 0\) in regions where the constraint is violated, which makes recovering from constraint violations unlikely. The solution to the first problem is to add a constraint that guarantees \({{}^{\texttt{C}}\pmb{p}_{\texttt{vis}}}_{[z]} > 0\) (target in front of the camera), i.e., \(c_{p,z} = \sigma \left( -{{}^{\texttt{C}}\pmb{p}_{\texttt{vis}}}_{[z]}, 0, k_{s2}, k_{g2}\right)\), which extends ( 15 ) to become

Lastly, a quadratic term and a bias term are added to the formulation such that the gradient of non-compliant regions fulfills \({\nabla c_{p}} > 0\) :

The quadratic term includes the condition \(c_{p,z}\) such that the distance of the target from the camera in the positive z-direction is not penalized. The bias term in the form of the pulse function gives the optimizer an extra incentive to turn the UAV towards the target. The constraint function ( 17 ) is included as perception cost function

in ( 8 ), where \(k_P\) is a positive weight and \(c_{lock} \in \left\{ 0,1\right\}\) accounts for the case where no target is detected and the perception cost should consequently be ignored. This also covers cases where the UAV loses sight of the visual target, e.g., due to an obstacle blocking the line of sight to the target.
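The double-cone issue discussed above can be made concrete with a small hard-constraint check. A test based only on the ratios \(x/z\) and \(y/z\) cannot tell a target in front of the camera from one behind it, because the ratios stay the same when the point is mirrored through the camera origin. The FoV angles below are hypothetical, and the functions are hard checks used for illustration, not the softened cost terms of the source.

```python
import math

ALPHA_H = math.radians(80.0)   # hypothetical horizontal FoV
ALPHA_V = math.radians(60.0)   # hypothetical vertical FoV

def ratio_only_test(p_cam, alpha_h=ALPHA_H, alpha_v=ALPHA_V):
    """Checks only the projected coordinates; blind to the sign of z."""
    x, y, z = p_cam
    return (abs(x / z) < math.tan(alpha_h / 2)
            and abs(y / z) < math.tan(alpha_v / 2))

def in_fov(p_cam, alpha_h=ALPHA_H, alpha_v=ALPHA_V):
    """Adds the condition z > 0, i.e., the target is in front of the camera."""
    return p_cam[2] > 0.0 and ratio_only_test(p_cam, alpha_h, alpha_v)

front = (0.1, 0.1, 2.0)
behind = (-0.1, -0.1, -2.0)    # the same point mirrored through the origin

accepted_behind = ratio_only_test(behind)   # wrongly accepted
rejected_behind = not in_fov(behind)        # correctly rejected
```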

Cost function: tracking

During approach and departure, the AM is commanded to reach a given target position \({{}^{\texttt{W}}\pmb {\MakeLowercase {p}}^{\mathtt {}}_{\texttt{ref}}}\) and yaw angle \(\psi _{ref}\) with its current position and orientation given by \({{}^{\texttt{W}}\pmb {\MakeLowercase {p}}^{\mathtt {}}_{\mathtt {}}}\) and \(\psi\) . Herein, a cost function is created that encourages the UAV to approach the commanded location for translation and rotation, starting with the latter.

The yaw angle error \(\psi _{err} = \psi _{ref} - \psi\) is not a useful quantity to feed into the OCP due to the discontinuity at \({0}^\circ\) resp. \({360}^\circ\) . Instead, the orientation is encoded in the two-dimensional direction vector

with the orientation error being

Likewise, the position error is defined as

Lastly, to have some control over the velocity at the start \(v_0\) and the end \(v_N\) of the horizon, the following quantity is defined

where N is the number of stages in the horizon and n is the current stage. The reference velocity varies linearly from \(v_0\) at the beginning of the moving horizon to \(v_N\) at its end, which incentivizes a gradual change of velocity and thus avoids an aggressive braking maneuver when approaching the commanded location.
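A linear velocity profile over the horizon can be sketched as follows. The interpolation \(v_0 + (v_N - v_0)\,n/N\) is an assumption consistent with the description above; the source may index the stages differently (e.g., dividing by \(N-1\)), and the numeric values are hypothetical.

```python
def reference_speed(n, N, v_0, v_N):
    """Speed at stage n, interpolated linearly from v_0 (n = 0) to v_N (n = N)."""
    return v_0 + (v_N - v_0) * n / N

# Hypothetical horizon of N = 10 stages, slowing down from 1.0 m/s to rest.
profile = [reference_speed(n, 10, 1.0, 0.0) for n in range(11)]
```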

The cost function \(J_T\) associated with the position tracking is composed of ( 19 ), ( 22 ) and ( 21 ). To account for the varying objectives of that cost function, \(c_{lock}\) is used to switch between tracking the reference angle and tracking the targeted object via the perception constraint. The cost equation to include in ( 8 ) is therefore

where \(k_{T,i}\) are positive weights.
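The benefit of encoding the yaw angle as a direction vector, as done for the tracking cost, can be illustrated with a short sketch. It assumes the vector \((\cos\psi, \sin\psi)\) and a squared Euclidean distance as error measure; both are assumptions, since the corresponding equations are not reproduced here.

```python
import math

def heading(psi):
    """Two-dimensional direction vector of a yaw angle given in radians."""
    return (math.cos(psi), math.sin(psi))

def heading_error_sq(psi_ref, psi):
    """Squared distance between two heading vectors; continuous across 0/360 deg."""
    a, b = heading(psi_ref), heading(psi)
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

psi_ref, psi = math.radians(1.0), math.radians(359.0)
naive_error = psi_ref - psi                         # about -6.25 rad, misleading
wrapped_error = heading_error_sq(psi_ref, psi)      # small, as it should be
same_gap = heading_error_sq(math.radians(10.0), math.radians(12.0))
```

Both pairs of angles are 2° apart, and the vector-based error is the same for both, whereas the naive difference jumps by a full turn at the wrap-around.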

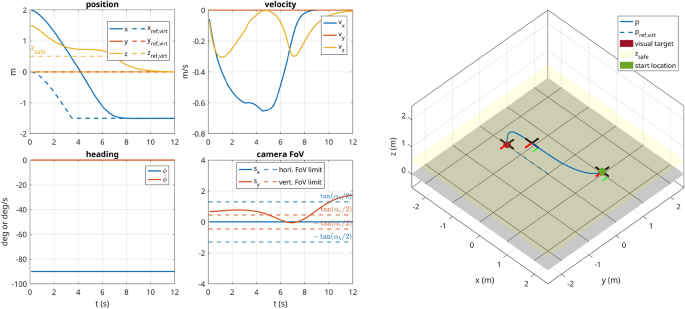

Cost function: grasping

The grasping cost function takes the particularities of the UG into account: it guarantees that the gripper stays at a safe altitude above the ground as long as it is not located above the target, and it only allows the AM to descend while in close vicinity of the target. At the same time, if the UAV is displaced from the target’s location for some reason, e.g., a wind gust, it is forced to climb again. For that reason, two constraint functions are defined. The altitude penalty constraint is defined as

where \(z_{min}\) marks the safe minimal altitude the AM has to keep. However, as this would prevent the AM from descending to the payload, an additional term is needed based on the xy -position relative to the target, i.e.,

where \(k_s\) is chosen as a function of the radius r of the cone through which the UAV is funneled to the target. Herein \(k_s\left( r={0.1} \text{m}\right) =176.27\) was determined from solving the following equations:

The pulse function has the particularity of \(\text {pulse}\left( x, k_s, k_g\right) =0\) at \(x=0\) , resulting in no residual cost at \({{}^{\texttt{W}}\pmb {\MakeLowercase {p}}^{\mathtt {}}_{\texttt{ref}}}_{xy} = {{}^{\texttt{W}}\pmb {\MakeLowercase {p}}^{\mathtt {}}_{\mathtt {}}}_{xy}\) . The total cost associated with the grasping constraints is thus defined as

where \(k_{G,i}\) are tuneable weights and \(k_{G,3} (1-c_5) \left\| {}^{\texttt{B}}\pmb{v} \right\|^2\) penalizes any velocity close to the target.

Cost function: repulsion

In many applications, it is useful to define areas that should be avoided during flight, e.g., pedestrians, lamp posts, walls or cars. Herein, it is assumed that those areas can be approximated with one or multiple ellipsoids. It is assumed that the targets are static, localized, and cannot be overflown (for safety resp. physical reasons), thus resulting in a 2D xy -problem.

On that account, an axis-aligned ellipse is defined, located at the world position \(\left( o_x, o_y\right)\) with its minor and major axes defined by \(\left( o_{sx}, o_{sy}\right)\). A point \(\left( p_x, p_y\right)\) is inside the ellipse if

Reformulating that expression using the sigmoid logistic function yields

The repulsion cost function for a single area of repulsion i is thus

where a quadratic term was added to improve the gradient in the non-compliant region.
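One way to soften the inside-ellipse test with the sigmoid function is sketched below. The level function, the placement of the sigmoid and the steepness value are assumptions made for illustration; the exact expressions of the source, including the quadratic term, are not reproduced.

```python
import math

def sigmoid(x, x0=0.0, k_s=1.0, k_g=1.0):
    """Scaled logistic function (assumed form), evaluated in a stable way."""
    z = k_s * (x - x0)
    if z >= 0.0:
        return k_g / (1.0 + math.exp(-z))
    e = math.exp(z)
    return k_g * e / (1.0 + e)

def ellipse_level(p, o, o_s):
    """Below 1 inside the axis-aligned ellipse, 1 on its boundary, above 1 outside."""
    return ((p[0] - o[0]) / o_s[0]) ** 2 + ((p[1] - o[1]) / o_s[1]) ** 2

def soft_inside(p, o, o_s, k_s=20.0, k_g=1.0):
    """Close to k_g inside the ellipse, close to 0 outside, k_g/2 on the boundary."""
    return sigmoid(1.0 - ellipse_level(p, o, o_s), 0.0, k_s, k_g)

center, axes = (0.0, 0.0), (1.0, 2.0)      # hypothetical obstacle
at_center = soft_inside((0.0, 0.0), center, axes)
on_boundary = soft_inside((1.0, 0.0), center, axes)
far_away = soft_inside((3.0, 0.0), center, axes)
```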

The total repulsion cost of all areas is, therefore

Herein ( 31 ) is included as a soft constraint, rather than as a hard constraint. This has the advantage of being computationally cheaper but may lead to collisions if the repulsion cost term is dominated by other cost functions.

An environment may contain hundreds of obstacles and including each obstacle in ( 5 ) makes solving the OCP computationally very expensive. However, there are generally only a handful of obstacles near the UAV that must be considered. Therefore, by simply feeding the momentarily closest obstacles into the OCP’s parameter vector, a large environment with many obstacles can be considered with \(N_o\) still being reasonably small (here, \(N_o=2\)). Furthermore, the AM’s body size can be accounted for by simply expanding the ellipse of the obstacle by the radius \(r_B\) of the body, i.e., \(\left( \hat{o}_{sx}, \hat{o}_{sy}\right) = \left( o_{sx} + r_B, o_{sy} + r_B\right)\). Finally, an obstacle with a non-ellipsoidal shape can be approximated by placing several ellipses within its contour.
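The obstacle pre-selection and inflation described above can be sketched as follows. Ranking the obstacles by the distance between their centers and the UAV is an assumption, since the text does not state how "closest" is measured; the `Obstacle` type, the function name and all numeric values are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Obstacle:
    o_x: float    # center, world x
    o_y: float    # center, world y
    o_sx: float   # axis along x
    o_sy: float   # axis along y

def closest_inflated(obstacles, p_xy, r_B, N_o=2):
    """Return the N_o obstacles nearest to p_xy, each inflated by the body radius."""
    ranked = sorted(
        obstacles,
        key=lambda o: math.hypot(o.o_x - p_xy[0], o.o_y - p_xy[1]),
    )
    return [
        Obstacle(o.o_x, o.o_y, o.o_sx + r_B, o.o_sy + r_B)
        for o in ranked[:N_o]
    ]

world = [
    Obstacle(10.0, 0.0, 0.5, 1.0),
    Obstacle(1.0, 1.0, 0.5, 1.0),
    Obstacle(-2.0, 0.0, 0.5, 1.0),
    Obstacle(0.0, 30.0, 0.5, 1.0),
]
selected = closest_inflated(world, p_xy=(0.0, 0.0), r_B=0.25)
```

Only the entries of `selected` would be written into the parameter vector of the OCP at each control step, so the problem size stays fixed regardless of how many obstacles the environment contains.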

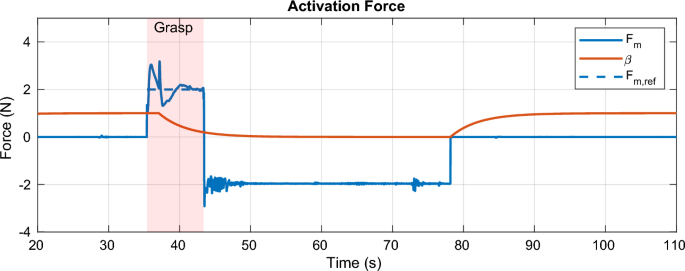

Force control

For optimal operation, the UG requires the activation force to be controlled, i.e., kept constant, over the grasping interval. More precisely, the force should not exceed a certain level as it may cause damage to the gripper (or to the payload), and it must also not drop below a certain threshold as this severely degrades the grasping performance, as discussed in our previous work 34 .

The fundamental problem is thus to control the contact force, as was shown in 51 and 52 for bridge inspection and in 53 in the context of aerial writing. The noticeable difference here is the strong nonlinearity of the elastic element and the shrinkage of the membrane, which consequently changes the stiffness of the system during operation and may also cause the complete loss of contact. The cascading force control approach followed herein is inspired by 54 and 55 . Since the contact force measured by the load-cell and the position of the UAV are linked, the contact force tracking problem can be seen as a position tracking problem where the reference position is a function of the force tracking error. Motivated by this statement, the cascading control architecture as shown in Fig. 3 is developed.
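The idea of turning force tracking into position tracking can be illustrated with a deliberately simplified simulation. Everything in it is an assumption made for the sake of the example: a linear compression-only spring replaces the non-linear UG, the inner position loop is idealized as a first-order lag, the outer loop is a pure integrator, and all gains and names are hypothetical. It is not the controller of the source.

```python
def simulate_force_cascade(F_ref, k_c=500.0, k_i=0.002, tau=0.2,
                           dt=0.01, T=10.0):
    """Outer loop: integrate the force error into a depth reference.
    Inner loop: idealized position tracking modeled as a first-order lag."""
    d, d_ref = 0.0, 0.0                    # penetration depth and reference [m]
    F = 0.0
    for _ in range(int(T / dt)):
        F = k_c * max(0.0, d)              # compression-only linear spring [N]
        d_ref += dt * k_i * (F_ref - F)    # force error moves the reference
        d += dt / tau * (d_ref - d)        # inner loop follows the reference
    return F, d

# Hypothetical set-point of 5 N; the depth should settle near 5/500 = 0.01 m.
F_end, d_end = simulate_force_cascade(F_ref=5.0)
```

With a state-dependent stiffness, as reported for the UG, fixed gains of this kind would no longer give uniform behavior over the grasping interval.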

The physical properties and grasping performance of the UG were rigorously analyzed in 34 with the main conclusion being that the system is highly non-linear and state-dependent. In particular, the stiffness of the elastic element changes from very soft to rigid-like.

The homologous model of the AM, shown in Fig. 5, consists of a mass-spring-damper system in which the stiffness k is non-linear, compression-only and dependent on the gripper’s state \(\beta\) and the depth of entrance z. The unknown damping d contains various effects such as the friction between the filler particles and air resistance. The mass m is the total mass of the AM, with \(u_T\) being the cumulative thrust applied by the aircraft’s propulsion system.

The UG in contact with the payload is modeled as a mass-spring-damper system, where the spring force is non-linear with respect to the penetration depth z and further depends on the UG state \(\beta\) . The contact force is measured by a load-cell between the UG’s membrane and the rest of the AM (represented by m ). The damping d represents the friction of the grains in the filler as well as other effects such as air resistance.

The dynamics of the system are modeled according to the following set of equations