How to Annotate Texts

Use the links below to jump directly to any section of this guide:

Annotation Fundamentals

How to start annotating, how to annotate digital texts, how to annotate a textbook, how to annotate a scholarly article or book, how to annotate literature, how to annotate images, videos, and performances, additional resources for teachers.

Writing in your books can make you smarter. Or, at least (according to education experts), annotation–an umbrella term for underlining, highlighting, circling, and, most importantly, leaving comments in the margins–helps students to remember and comprehend what they read. Annotation is like a conversation between reader and text. Proper annotation allows students to record their own opinions and reactions, which can serve as the inspiration for research questions and theses. So, whether you're reading a novel, poem, news article, or science textbook, taking notes along the way can give you an advantage in preparing for tests or writing essays. This guide contains resources that explain the benefits of annotating texts, provide annotation tools, and suggest approaches for diverse kinds of texts; the last section includes lesson plans and exercises for teachers.

Why annotate? As the resources below explain, annotation allows students to emphasize connections to material covered elsewhere in the text (or in other texts), material covered previously in the course, or material covered in lectures and discussion. In other words, proper annotation is an organizing tool and a time saver. The links in this section will introduce you to the theory, practice, and purpose of annotation.

How to Mark a Book, by Mortimer Adler

This famous, charming essay lays out the case for marking up books, and provides practical suggestions at the end including underlining, highlighting, circling key words, using vertical lines to mark shifts in tone/subject, numbering points in an argument, and keeping track of questions that occur to you as you read.

How Annotation Reshapes Student Thinking (TeacherHUB)

In this article, a high school teacher discusses the importance of annotation and how annotation encourages more effective critical thinking.

The Future of Annotation (Journal of Business and Technical Communication)

This scholarly article summarizes research on the benefits of annotation in the classroom and in business. It also discusses how technology and digital texts might affect the future of annotation.

Annotating to Deepen Understanding (Texas Education Agency)

This website provides another introduction to annotation (designed for 11th graders). It includes a helpful section that teaches students how to annotate reading comprehension passages on tests.

Once you understand what annotation is, you're ready to begin. But what tools do you need? How do you prepare? The resources linked in this section list strategies and techniques you can use to start annotating.

What is Annotating? (Charleston County School District)

This resource gives an overview of annotation styles, including useful shorthands and symbols. This is a good place for a student who has never annotated before to begin.

How to Annotate Text While Reading (YouTube)

This video tutorial (appropriate for grades 6–10) explains the basic ins and outs of annotation and gives examples of the type of information students should be looking for.

Annotation Practices: Reading a Play-text vs. Watching Film (U Calgary)

This blog post, written by a student, talks about how the goals and approaches of annotation might change depending on the type of text or performance being observed.

Annotating Texts with Sticky Notes (Lyndhurst Schools)

Sometimes students are asked to annotate books they don't own or can't write in for other reasons. This resource provides some strategies for using sticky notes instead.

Teaching Students to Close Read...When You Can't Mark the Text (Performing in Education)

Here, a sixth grade teacher demonstrates the strategies she uses for getting her students to annotate with sticky notes. This resource includes a link to the teacher's free Annotation Bookmark (via Teachers Pay Teachers).

Digital texts can present a special challenge when it comes to annotation; emerging research suggests that many students struggle to critically read and retain information from digital texts. However, proper annotation can help address the problem. This section contains links to the most widely used platforms for electronic annotation.

Evernote is one of the two big players in the "digital annotation apps" game. In addition to allowing users to annotate digital documents, the service (for a fee) allows users to group multiple formats (PDF, webpages, scanned hand-written notes) into separate notebooks, create voice recordings, and sync across all sorts of devices.

OneNote is Evernote's main competitor. Reviews suggest that OneNote allows for more freedom for digital note-taking than Evernote, but that it is slightly more awkward to import and annotate a PDF, especially on certain platforms. However, OneNote's free version is slightly more feature-filled, and OneNote allows you to link your notes to time stamps on an audio recording.

Diigo is a basic browser extension that allows a user to annotate webpages. Diigo also offers a Screenshot app that allows for direct saving to Google Drive.

While the creators of Hypothesis like to focus on their app's social dimension, students are more likely to be interested in the private highlighting and annotating functions of this program.

Foxit PDF Reader

Foxit is one of the leading PDF readers. Though the full suite must be purchased, Foxit offers a number of annotation and highlighting tools for free.

Nitro PDF Reader

This is another well-reviewed, free PDF reader that includes annotation and highlighting. Annotation, text editing, and other tools are included in the free version.

GoodReader is a very popular Mac-only app that includes annotation and editing tools for PDFs, Word documents, PowerPoint, and other formats.

Although textbooks have vocabulary lists, summaries, and other features to emphasize important material, annotation can allow students to process information and discover their own connections. This section links to guides and video tutorials that introduce you to textbook annotation.

Annotating Textbooks (Niagara University)

This PDF provides a basic introduction as well as strategies including focusing on main ideas, working by section or chapter, annotating in your own words, and turning section headings into questions.

A Simple Guide to Text Annotation (Catawba College)

The simple, practical strategies laid out in this step-by-step guide will help students learn how to break down chapters in their textbooks using main ideas, definitions, lists, summaries, and potential test questions.

Annotating (Mercer Community College)

This packet, an excerpt from a literature textbook, provides a short exercise and some examples of how to do textbook annotation, including using shorthand and symbols.

Reading Your Healthcare Textbook: Annotation (Saddleback College)

This PowerPoint presentation contains a number of helpful suggestions, especially for students who are new to annotation. It emphasizes limited highlighting, lots of student writing, and using key words to find the most important information in a textbook. Despite the title, it is useful to a student in any discipline.

Annotating a Textbook (Excelsior College OWL)

This video (with included transcript) discusses how to use textbook features like boxes and sidebars to help guide annotation. It's an extremely helpful, detailed discussion of how textbooks are organized.

Because scholarly articles and books have complex arguments and often depend on technical vocabulary, they present particular challenges for an annotating student. The resources in this section help students get to the heart of scholarly texts in order to annotate and, by extension, understand the reading.

Annotating a Text (Hunter College)

This resource is designed for college students and shows how to annotate a scholarly article using highlighting, paraphrase, a descriptive outline, and a two-margin approach. It ends with a sample passage marked up using the strategies provided.

Guide to Annotating the Scholarly Article (ReadWriteThink.org)

This is an effective introduction to annotating scholarly articles across all disciplines. This resource encourages students to break down how the article uses primary and secondary sources and to annotate the types of arguments and persuasive strategies (synthesis, analysis, compare/contrast).

How to Highlight and Annotate Your Research Articles (CHHS Media Center)

This video, developed by a high school media specialist, provides an effective beginner-level introduction to annotating research articles.

How to Read a Scholarly Book (AndrewJacobs.org)

In this essay, a college professor lets readers in on the secrets of scholarly monographs. Though he does not discuss annotation, he explains how to find a scholarly book's thesis, methodology, and often even a brief literature review in the introduction. This is a key place for students to focus when creating annotations.

A 5-step Approach to Reading Scholarly Literature and Taking Notes (Heather Young Leslie)

This resource, written by a professor of anthropology, is an even more comprehensive and detailed guide to reading scholarly literature. Combining the annotation techniques above with the reading strategy here allows students to process scholarly books efficiently.

Annotation is also an important part of close reading works of literature. Annotating helps students recognize symbolism, double meanings, and other literary devices. These resources provide additional guidelines on annotating literature.

AP English Language Annotation Guide (YouTube)

In this ~10 minute video, an AP Language teacher provides tips and suggestions for using annotations to point out rhetorical strategies and other important information.

Annotating Text Lesson (YouTube)

In this video tutorial, an English teacher shows how she uses the white board to guide students through annotation and close reading. This resource uses an in-depth example to model annotation step-by-step.

Close Reading a Text and Avoiding Pitfalls (Purdue OWL)

This resource demonstrates how annotation is a central part of a solid close reading strategy; it also lists common mistakes to avoid in the annotation process.

AP Literature Assignment: Annotating Literature (Mount Notre Dame H.S.)

This brief assignment sheet contains suggestions for what to annotate in a novel, including building connections between parts of the book, among multiple books you are reading/have read, and between the book and your own experience. It also includes samples of quality annotations.

AP Handout: Annotation Guide (Covington Catholic H.S.)

This annotation guide shows how to keep track of symbolism, figurative language, and other devices in a novel using a highlighter, a pencil, and every part of a book (including the front and back covers).

In addition to written resources, it's possible to annotate visual "texts" like theatrical performances, movies, sculptures, and paintings. Taking notes on visual texts allows students to recall details after viewing a resource which, unlike a book, can't be re-read or re-visited (for example, a play that has finished its run, or an art exhibition that is far away). These resources draw attention to the special questions and techniques that students should use when dealing with visual texts.

How to Take Notes on Videos (U of Southern California)

This resource is a good place to start for a student who has never had to take notes on film before. It briefly outlines three general approaches to note-taking on a film.

How to Analyze a Movie, Step-by-Step (San Diego Film Festival)

This detailed guide provides lots of tips for film criticism and analysis. It contains a list of specific questions to ask with respect to plot, character development, direction, musical score, cinematography, special effects, and more.

How to "Read" a Film (UPenn)

This resource provides an academic perspective on the art of annotating and analyzing a film. Like other resources, it provides students a checklist of things to watch out for as they watch the film.

Art Annotation Guide (Gosford Hill School)

This resource focuses on how to annotate a piece of art with respect to its formal elements like line, tone, mood, and composition. It contains a number of helpful questions and relevant examples.

Photography Annotation (Arts at Trinity)

This resource is designed specifically for photography students. Like some of the other resources on this list, it primarily focuses on formal elements, but also shows students how to integrate the specific technical vocabulary of modern photography. This resource also contains a number of helpful sample annotations.

How to Review a Play (U of Wisconsin)

This resource from the University of Wisconsin Writing Center is designed to help students write a review of a play. It contains suggested questions for students to keep in mind as they watch a given production. This resource helps students think about staging, props, script alterations, and many other key elements of a performance.

This section contains links to lesson plans and exercises suitable for high school and college instructors.

Beyond the Yellow Highlighter: Teaching Annotation Skills to Improve Reading Comprehension (English Journal)

In this journal article, a high school teacher talks about her approach to teaching annotation. This article makes a clear distinction between annotation and mere highlighting.

Lesson Plan for Teaching Annotation, Grades 9–12 (readwritethink.org)

This lesson plan, published by the National Council of Teachers of English, contains four complete lessons that help introduce high school students to annotation.

Teaching Theme Using Close Reading (Performing in Education)

This lesson plan was developed by a middle school teacher, and is aligned to Common Core. The teacher presents her strategies and resources in comprehensive fashion.

Analyzing a Speech Using Annotation (UNC-TV/PBS Learning Media)

This complete lesson plan, which includes a guide for the teacher and relevant handouts for students, will prepare students to analyze both the written and presentation components of a speech. This lesson plan is best for students in 6th–10th grade.

Writing to Learn History: Annotation and Mini-Writes (teachinghistory.org)

This teaching guide, developed for high school History classes, provides handouts and suggested exercises that can help students become more comfortable with annotating historical sources.

Writing About Art (The College Board)

This Prezi presentation is useful to any teacher introducing students to the basics of annotating art. The presentation covers annotating for both formal elements and historical/cultural significance.

Film Study Worksheets (TeachWithMovies.org)

This resource contains links to a general film study worksheet, as well as specific worksheets for novel adaptations, historical films, documentaries, and more. These resources are appropriate for advanced middle school students and some high school students.

Annotation Practice Worksheet (La Guardia Community College)

This worksheet has a sample text and instructions for students to annotate it. It is a useful resource for teachers who want to give their students a chance to practice, but don't have the time to select an appropriate piece of text.


The Ultimate Guide to Text Annotation: Techniques, Tools, and Best Practices

Puneet Jindal

Introduction

Welcome to the realm where language meets machine intelligence: text annotation, the catalyst that helps artificial intelligence understand, interpret, and communicate in human language. Having evolved from editorial footnotes into a cornerstone of data science, text annotation now drives Natural Language Processing (NLP) and Computer Vision, reshaping industries across the globe.

Imagine AI models decoding sentiments, recognizing entities, and grasping human nuances in a text. Text annotation is the key to making this possible. Join us on this journey through text annotation, exploring its techniques, its challenges, and the transformative potential it holds for healthcare, finance, government, logistics, and beyond.

In this exploration, witness text annotation's evolution and its pivotal role in fueling AI's understanding of language. Explore how tools such as Labellerr help with text annotation and how they work. Let's unravel the artistry behind text annotation, shaping a future where AI comprehends, adapts, and innovates alongside human communication.

1. What is Text Annotation?

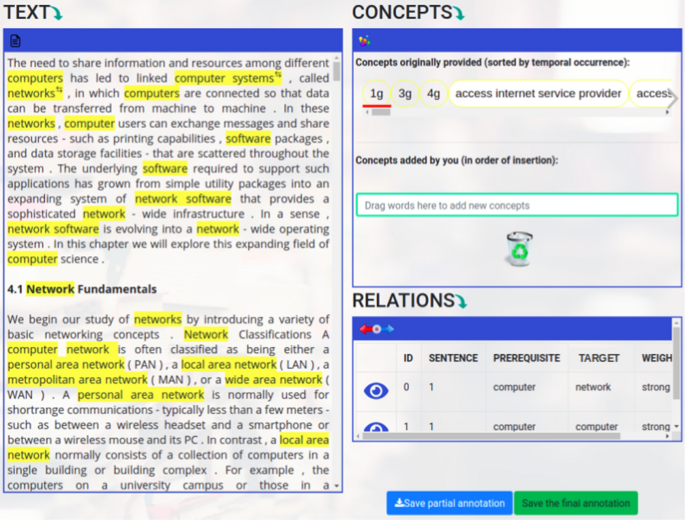

Text annotation is a crucial process that involves adding labels, comments, or metadata to textual data to facilitate machine learning algorithms' understanding and analysis.

This practice, known for its traditional role in editorial reviews by adding comments or footnotes to text drafts, has evolved significantly within the realm of data science, particularly in Natural Language Processing (NLP) and Computer Vision applications.

In the context of machine learning, text annotation takes on a more specific role. It involves systematically labeling pieces of text to create a reference dataset, enabling supervised machine learning algorithms to recognize patterns, learn from labeled data, and make accurate predictions or classifications when faced with new, unseen text.

To elaborate on what it means to annotate text: In data science and NLP, annotating text demands a comprehensive understanding of the problem domain and the dataset. It involves identifying and marking relevant features within the text. This can be akin to labeling images in image classification tasks, but in text, it includes categorizing sentences or segments into predefined classes or topics.

Examples include labeling sentiments in online reviews, distinguishing fake from real news articles, and marking parts of speech and named entities in text.

1.1 Text Annotation Tasks: A Multifaceted Approach to Data Labeling

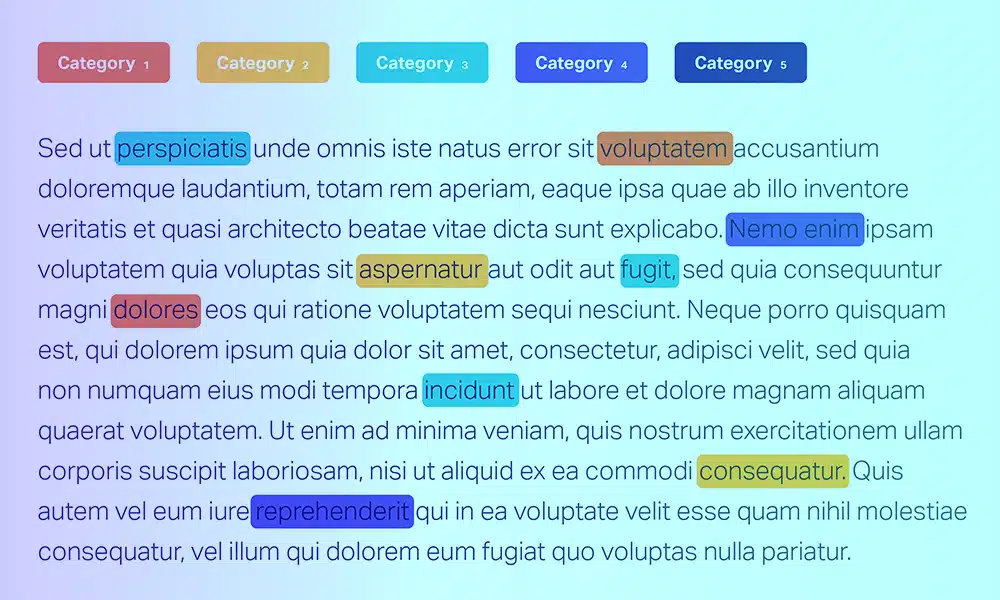

(i) Text Classification: Assigning predefined categories or labels to text segments based on their content, such as sentiment analysis or topic classification.

(ii) Named Entity Recognition (NER): Identifying and labeling specific entities within the text, like names of people, organizations, locations, dates, etc.

(iii) Parts of Speech Tagging: Labeling words in a sentence with their respective grammatical categories, like nouns, verbs, adjectives, etc.

(iv) Summarization: Condensing a lengthy text into a shorter, coherent version while retaining its key information.
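
To make the four tasks above concrete, here is a minimal sketch of how the annotations for a single sentence might be stored. The sentence, the field names, and the label names are all invented for illustration (the part-of-speech tags follow the Universal POS naming style); real annotation tools each define their own export format.

```python
def span_of(text, phrase, label):
    """Locate `phrase` in `text` and return a character-offset span (end exclusive)."""
    start = text.index(phrase)
    return {"start": start, "end": start + len(phrase), "label": label}

# Hypothetical example sentence, not taken from any real dataset.
sentence = "Maria Lopez praised the new phone in Berlin."

# (i) Text classification: one label for the whole segment.
classification = {"text": sentence, "label": "positive"}

# (ii) Named entity recognition: labeled character spans.
entities = [
    span_of(sentence, "Maria Lopez", "PERSON"),
    span_of(sentence, "Berlin", "LOCATION"),
]

# (iii) Part-of-speech tagging: one tag per token.
pos_tags = [
    ("Maria", "PROPN"), ("Lopez", "PROPN"), ("praised", "VERB"),
    ("the", "DET"), ("new", "ADJ"), ("phone", "NOUN"),
    ("in", "ADP"), ("Berlin", "PROPN"), (".", "PUNCT"),
]

# (iv) Summarization: a shorter target text written by the annotator.
summary = {"text": sentence, "summary": "Maria Lopez liked the new phone."}

for entity in entities:
    print(sentence[entity["start"]:entity["end"]], "->", entity["label"])
```

Computing the offsets with `str.index` rather than typing them by hand avoids off-by-one errors, which are a common source of corrupted span annotations.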

1.2 Significant Benefits of Text Annotation

(i) Improved Machine Learning Models: Annotated data provides labeled examples for algorithms to learn from, enhancing their ability to make accurate predictions or classifications when faced with new, unlabeled text.

(ii) Enhanced Performance and Efficiency: Annotations expedite the learning process by offering clear indicators to algorithms, leading to improved performance and faster model convergence.

(iii) Nuance Recognition: Text annotations help algorithms understand contextual nuances, sarcasm, or subtle linguistic cues that might not be immediately apparent, enhancing their ability to interpret text accurately.

(iv) Applications in Various Industries: Text annotation is vital across industries, aiding in tasks like content moderation, sentiment analysis for customer feedback, information extraction for search engines, and much more.

Text annotation is a critical process in modern machine learning, empowering algorithms to comprehend, interpret, and extract valuable insights from textual data, thereby enabling various applications across different sectors.

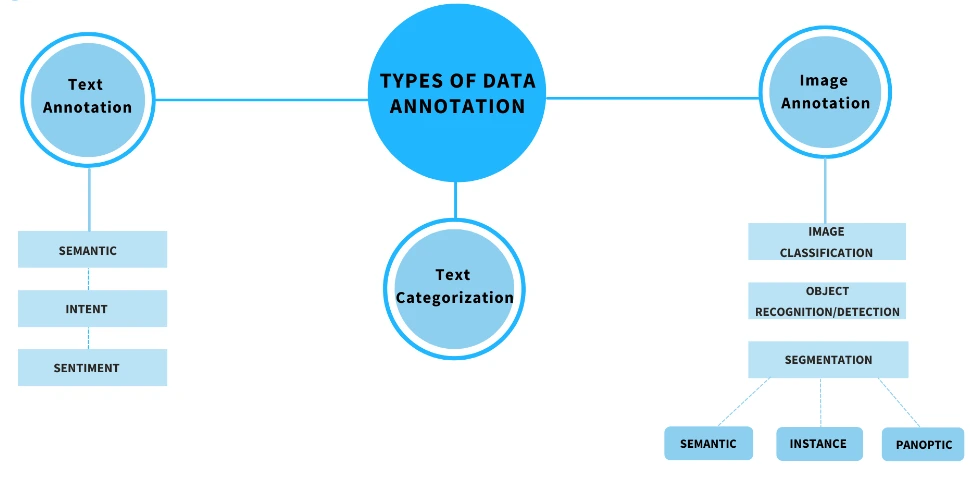

2. Types of Text Annotation

Text annotation, in the realm of data labeling and Natural Language Processing (NLP), encompasses a diverse range of techniques used to label, categorize, and extract meaningful information from textual data. This multifaceted process involves several types of annotations, each serving a distinct purpose in enhancing machine understanding and analysis of text.

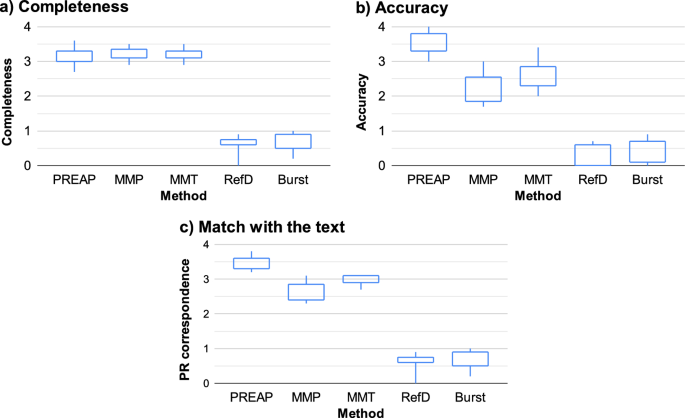

These annotation types include sentiment annotation, intent annotation, entity annotation, text classification, linguistic annotation, named entity recognition (NER), part-of-speech tagging, keyphrase tagging, entity linking, document classification, language identification, and toxicity classification.

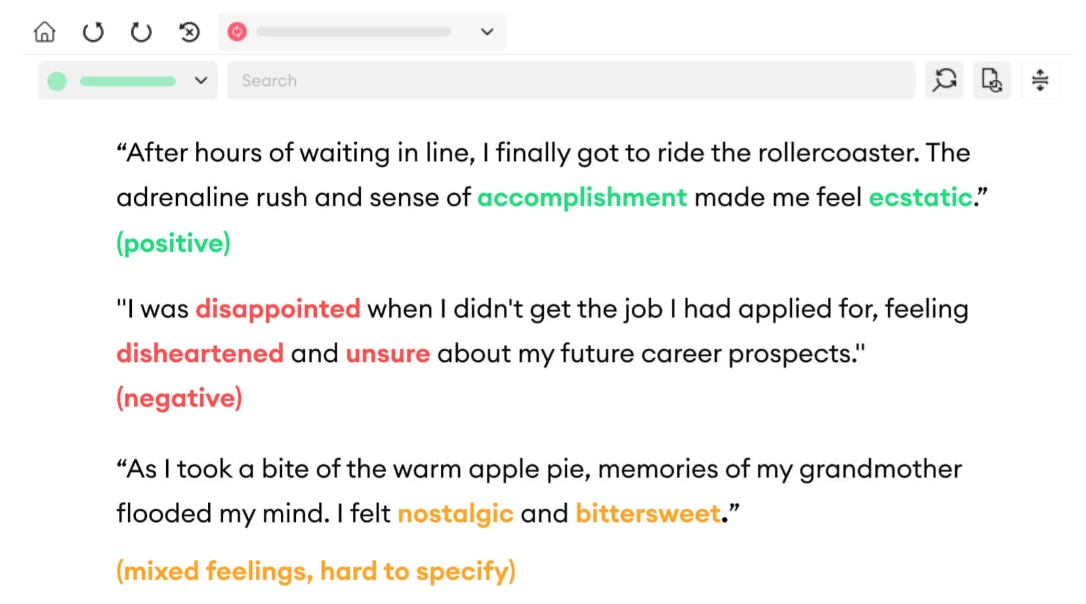

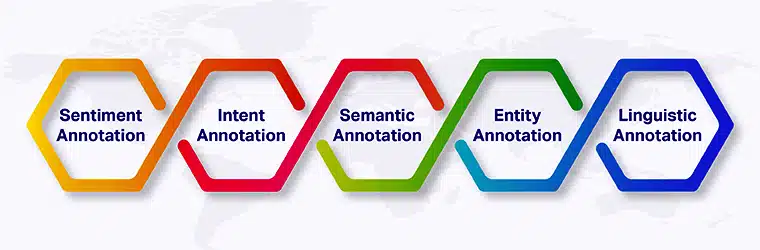

Sentiment Annotation

Sentiment annotation is a technique crucial for understanding emotions conveyed in text. Assigning sentiments like positive, negative, or neutral to sentences aids in sentiment analysis.

This process involves deciphering emotions in customer reviews on e-commerce platforms (e.g., Amazon, Flipkart), enabling businesses to gauge customer satisfaction.

Precise sentiment annotation is vital for training machine learning models that categorize texts into various emotions, facilitating a deeper understanding of user sentiments towards products or services.

Let's consider various instances where sentiment annotation encounters complexities:

(i) Clear Emotions: In the initial examples, emotions are distinctly evident. The first instance exudes happiness and positivity, while the second reflects disappointment and negative feelings. However, in the third case, emotions become intricate. Phrases like "nostalgic" or "bittersweet" evoke mixed sentiments, making it challenging to classify into a single emotion.

(ii) Success versus Failure: Analyzing phrases such as "Yay! Argentina beat France in the World Cup Finale" presents a paradox. Initially appearing positive, this sentence also implies negative emotions for the opposing side, complicating straightforward sentiment classification.

(iii) Sarcasm and Ridicule: Capturing sarcasm involves comprehending nuanced human communication styles and relies on context, tone, and social cues, all of which machines often struggle to interpret.

(iv) Rhetorical Questions: Phrases like "Why do we have to quibble every time?" may seem neutral initially. However, the speaker's tone and delivery convey a sense of frustration and negativity, posing challenges in categorizing the sentiment accurately.

(v) Quoting or Re-tweeting: Sentiment annotation confronts difficulties when dealing with quoted or retweeted content. The sentiment expressed might not align with the opinions of the one sharing the quote, creating discrepancies in sentiment classification.

In essence, sentiment annotation encounters challenges due to the complexity of human emotions, contextual nuances, and the subtleties of language expression, making accurate classification a demanding task for automated systems.
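
One common way to cope with ambiguous cases like those above is to collect labels from several annotators and send disagreements to a human reviewer. The sketch below assumes a simple majority vote with ties flagged for review; this adjudication rule and the `needs_review` label are illustrative assumptions, not a method described in this article.

```python
from collections import Counter

def resolve_sentiment(votes):
    """Return the majority label, or 'needs_review' when the top labels tie."""
    if not votes:
        raise ValueError("at least one vote is required")
    ranked = Counter(votes).most_common()
    # A tie between the two most frequent labels means no clear majority.
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return "needs_review"
    return ranked[0][0]

# Hypothetical votes from three annotators per sentence.
print(resolve_sentiment(["positive", "positive", "neutral"]))
print(resolve_sentiment(["positive", "negative", "neutral"]))
```

A sentence such as the World Cup example would likely land in the review queue, which is usually preferable to forcing it into a single class.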

Intent Annotation

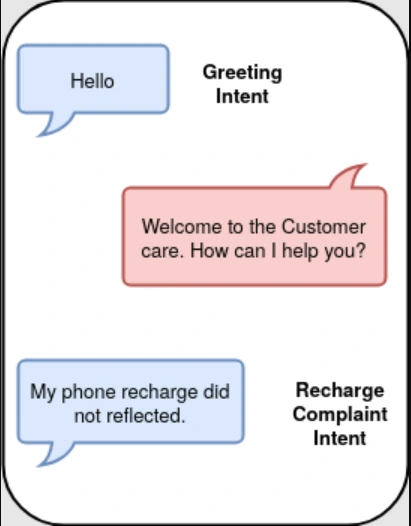

Intent annotation is a crucial aspect in the development of chatbots and virtual assistants, forming the backbone of their functionality. It involves labeling or categorizing user messages or sentences to identify the underlying purpose or intention behind the communication.

This annotation process aims to understand and extract the user's intent, enabling these AI systems to provide contextually relevant and accurate responses. By annotating intents like greetings, complaints, or inquiries, systems can generate appropriate responses.

Key points regarding intent text annotation include:

Purpose Identification: Intent annotation involves categorizing user messages into specific intents such as greetings, inquiries, complaints, feedback, orders, or any other actionable user intents. Each category represents a different user goal or purpose within the conversation.

Training Data Creation: Creating labeled datasets is crucial for training machine learning models to recognize and classify intents accurately. Annotated datasets consist of labeled sentences or phrases paired with their corresponding intended purposes, forming the foundation for model training.

Contextual Understanding: Intent annotation often requires a deep understanding of contextual nuances within language. It's not solely about identifying keywords but comprehending the broader meaning and context of user queries or statements.

Natural Language Understanding (NLU): Intent annotation falls under the realm of natural language processing (NLP) and requires sophisticated algorithms capable of interpreting and categorizing user intents accurately. Machine learning models, such as classifiers or neural networks, are commonly used for this purpose.

Iterative Process: Annotation of intents often involves an iterative process. Initially, a set of intent categories is defined based on common user interactions. As the system encounters new user intents, the annotation process may expand or refine these categories to ensure comprehensive coverage.

Quality Assurance and Validation: It's essential to validate and ensure the quality of labeled data. This may involve multiple annotators labeling the same data independently to assess inter-annotator agreement and enhance annotation consistency.

Adaptation and Evolution: Intent annotation isn't a one-time task. As user behaviors, language use, and interaction patterns evolve, the annotated intents also need periodic review and adaptation to maintain accuracy and relevance.

Enhancing User Experience: Accurate intent annotation is pivotal in enhancing user experience. It enables chatbots and virtual assistants to understand user needs promptly and respond with relevant and helpful information or actions, improving overall user satisfaction.

Industry-Specific Customization: Intent annotation can be industry-specific. For instance, in healthcare, intents may include appointment scheduling, medication queries, or symptom descriptions, while in finance, intents may revolve around account inquiries, transaction history, or support requests.

Continuous Improvement: Feedback loops and analytics derived from user interactions help refine intent annotation. Analyzing user feedback on system responses can drive improvements in intent categorization and response generation.
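
The quality-assurance point above mentions inter-annotator agreement without naming a metric. One widely used choice for two annotators is Cohen's kappa, which corrects raw agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below is a plain-Python version under that assumption; the intent labels are invented sample data.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items given identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over labels.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)

annotator_1 = ["greeting", "complaint", "inquiry", "inquiry", "complaint", "greeting"]
annotator_2 = ["greeting", "complaint", "inquiry", "complaint", "complaint", "greeting"]
print(round(cohens_kappa(annotator_1, annotator_2), 2))  # 0.75
```

Here the annotators agree on 5 of 6 items (p_o = 5/6), chance agreement is 1/3, and kappa comes out at 0.75. Low values are often a sign that the intent categories or the annotation guidelines need refining.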

For instance, Siri or Alexa, trained on annotated data for specific intents, responds accurately to user queries, enhancing user experience. Some examples of annotated intents:

- Greeting Intent: Hello there, how are you?

- Complaint Intent: I am very disappointed with the service I received.

- Inquiry Intent: What are your business hours?

- Confirmation Intent: Yes, I'd like to confirm my appointment for tomorrow at 10 AM.

- Request Intent: Could you please provide me with the menu?

- Gratitude Intent: Thank you so much for your help!

- Feedback Intent: I wanted to give feedback about the recent product purchase.

- Apology Intent: I'm sorry for the inconvenience caused.

- Assistance Intent: Can you assist me with setting up my account?

- Goodbye Intent: Goodbye, have a great day!

These annotations serve as training data for AI models to learn and understand different user intentions, enabling chatbots or virtual assistants to respond accurately and effectively.
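
As a rough illustration, the examples above could be stored as labeled records before being handed to a training pipeline. The sketch assumes a JSON Lines layout with `text` and `intent` fields; both the layout and the field names are assumptions, since annotation tools differ in their export formats.

```python
import json
from collections import Counter

# The ten example utterances listed above, paired with their intent labels.
examples = [
    ("Hello there, how are you?", "greeting"),
    ("I am very disappointed with the service I received.", "complaint"),
    ("What are your business hours?", "inquiry"),
    ("Yes, I'd like to confirm my appointment for tomorrow at 10 AM.", "confirmation"),
    ("Could you please provide me with the menu?", "request"),
    ("Thank you so much for your help!", "gratitude"),
    ("I wanted to give feedback about the recent product purchase.", "feedback"),
    ("I'm sorry for the inconvenience caused.", "apology"),
    ("Can you assist me with setting up my account?", "assistance"),
    ("Goodbye, have a great day!", "goodbye"),
]

records = [{"text": text, "intent": intent} for text, intent in examples]

# One JSON object per line is easy to stream, diff, and append to.
jsonl = "\n".join(json.dumps(record) for record in records)

# A quick label count helps spot missing or under-represented intents.
label_counts = Counter(record["intent"] for record in records)
print(jsonl.splitlines()[0])
print(label_counts.most_common(3))
```

A real training set would need many utterances per intent; a single example per class, as here, only illustrates the record shape.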

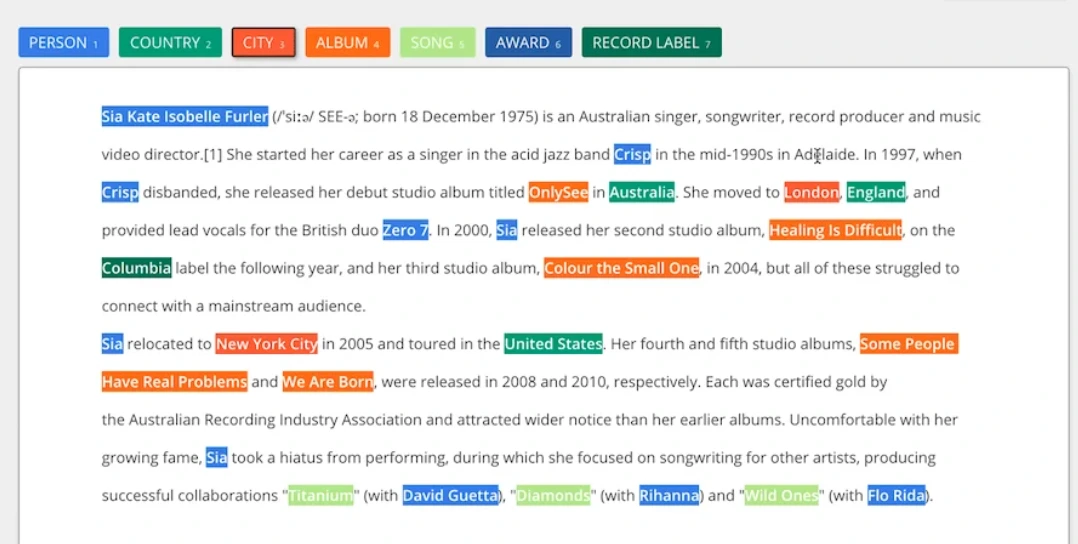

Entity Annotation

Entity annotation focuses on labeling key phrases, named entities, or parts of speech in text. This technique emphasizes crucial details in lengthy texts and aids in training models for entity extraction. Named entity recognition (NER) is a subset of entity annotation, labeling entities like people's names, locations, dates, etc., enabling machines to comprehend text more comprehensively by distinguishing semantic meanings.

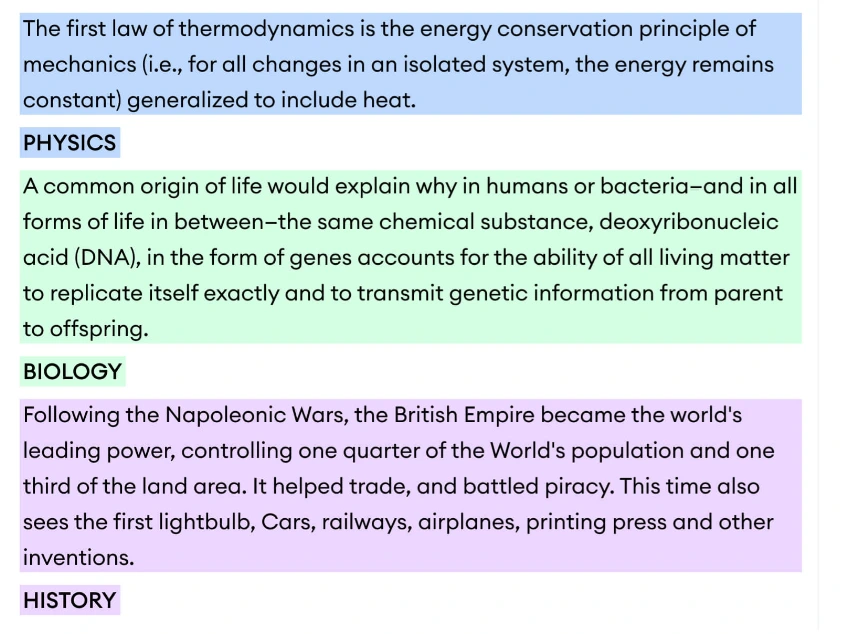

Text Classification

Text classification assigns categories or labels to text segments. This annotation technique is essential for organizing text data into specific classes or topics, such as document classification or sentiment analysis. Categorizing tweets into education, politics, etc., helps organize content and enables better understanding.

Let's look at each of these forms separately.

Document Classification: This involves assigning a single label to a document, aiding in the efficient sorting of vast textual data based on its primary theme or content.

Product Categorization: It's the process of organizing products or services into specific classes or categories. This helps enhance search results in eCommerce platforms, improving SEO strategies and boosting visibility in product ranking pages.

Email Classification: This task involves categorizing emails into either spam or non-spam (ham) categories, typically based on their content, aiding in email filtering and prioritization.

News Article Classification: Categorizing news articles based on their content or topics such as politics, entertainment, sports, technology, etc. This categorization assists in better organizing and presenting news content to readers.

Language Identification: This task involves determining the language used in a given text, which is useful in multilingual contexts or language-specific applications.

Toxicity Classification: Identifying whether a social media comment or post contains toxic content, hate speech, or is non-toxic. This classification helps in content moderation and creating safer online environments.

Each form of text annotation serves a specific purpose, enabling better organization, classification, and understanding of textual data, and contributing to various applications across industries and domains.
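
To show how labeled examples feed a classifier, here is a minimal sketch of the spam/ham email task using a multinomial Naive Bayes model with add-one smoothing. Naive Bayes is chosen here only because it fits in a few lines; the article does not prescribe a particular algorithm, and the four training emails are invented.

```python
import math
from collections import Counter, defaultdict

def train(examples):
    """Count documents per label and words per label from (text, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab

def predict(model, text):
    """Return the label with the highest log-probability under Naive Bayes."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in label_counts.items():
        score = math.log(doc_count / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocab:
                continue  # ignore words never seen during training
            # Add-one (Laplace) smoothing avoids zero probabilities.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical annotated emails.
training_data = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch at noon on monday", "ham"),
]
model = train(training_data)
print(predict(model, "free prize"))    # spam
print(predict(model, "lunch monday"))  # ham
```

The same pattern extends to the other forms listed above (news topics, toxicity, language) by changing the label set and the annotated examples.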

Linguistic Annotation

Linguistic annotation focuses on language-related details in text or speech, including semantics, phonetics, and discourse. It encompasses intonation, stress, pauses, and discourse relations. It helps systems understand linguistic nuances, like coreference resolution linking pronouns to their antecedents, semantic labeling, and annotating stress or tone in speech.

Named Entity Recognition (NER)

NER identifies and labels named entities like people's names, locations, dates, etc., in text. It plays a pivotal role in NLP applications, allowing systems like Google Translate or Siri to understand and process textual data accurately.
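
NER annotations are often converted from character spans into per-token tags before training. The sketch below uses the BIO scheme (B = beginning of an entity, I = inside, O = outside), which is a common convention but an assumption here, as is the whitespace tokenization; the sentence and labels are invented.

```python
def to_bio(text, spans):
    """Convert (start, end, label) character spans (end exclusive) into BIO token tags."""
    tagged = []
    cursor = 0
    for token in text.split():
        start = text.index(token, cursor)
        end = start + len(token)
        cursor = end
        tag = "O"
        for span_start, span_end, label in spans:
            # A token belongs to an entity when it lies fully inside the span.
            if start >= span_start and end <= span_end:
                tag = ("B-" if start == span_start else "I-") + label
                break
        tagged.append((token, tag))
    return tagged

sentence = "Maria Lopez flew to Berlin"
person_start = sentence.index("Maria Lopez")
city_start = sentence.index("Berlin")
spans = [
    (person_start, person_start + len("Maria Lopez"), "PERSON"),
    (city_start, city_start + len("Berlin"), "LOCATION"),
]

for token, tag in to_bio(sentence, spans):
    print(f"{token}\t{tag}")
```

Whitespace splitting keeps the sketch short but leaves punctuation attached to tokens, so a production pipeline would use a proper tokenizer and align spans to its token boundaries.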

Part-of-Speech Tagging

Part-of-speech tagging labels words in a sentence with their grammatical categories (nouns, verbs, adjectives). It assists in parsing sentences and understanding their structure.

Keyphrase Tagging

Keyphrase tagging locates and labels keywords or keyphrases in text, aiding in tasks like summarization or extracting key concepts from large text documents.

Entity Linking

Entity linking maps words in text to entities in a knowledge base, aiding in disambiguating entities' meanings and connecting them to larger datasets for contextual understanding.
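
A toy version of entity linking can be sketched as a lookup against a small knowledge base, with context words used to choose between candidates that share a name. The knowledge base, its `E1`/`E2` identifiers, and the overlap-counting rule are all hypothetical; real systems link against large resources and use far richer disambiguation.

```python
# Hypothetical two-entry knowledge base; IDs and context words are made up.
KNOWLEDGE_BASE = {
    "E1": {
        "name": "Paris (capital of France)",
        "aliases": {"paris"},
        "context": {"france", "seine", "city", "capital"},
    },
    "E2": {
        "name": "Paris (prince of Troy in Greek myth)",
        "aliases": {"paris"},
        "context": {"troy", "helen", "prince", "myth"},
    },
}

def link_entity(mention, sentence):
    """Return the ID of the candidate whose context words best overlap the sentence."""
    words = set(sentence.lower().split())
    candidates = [
        entity_id
        for entity_id, entry in KNOWLEDGE_BASE.items()
        if mention.lower() in entry["aliases"]
    ]
    if not candidates:
        return None  # mention is not in the knowledge base
    return max(candidates, key=lambda entity_id: len(words & KNOWLEDGE_BASE[entity_id]["context"]))

print(link_entity("Paris", "The Seine flows through Paris the capital of France"))  # E1
print(link_entity("Paris", "Paris took Helen to Troy"))                             # E2
```

When no context word matches, `max` simply returns the first candidate, so an annotated dataset of correctly linked mentions is still needed to evaluate or train anything more reliable.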

3. Text Annotation Use Cases

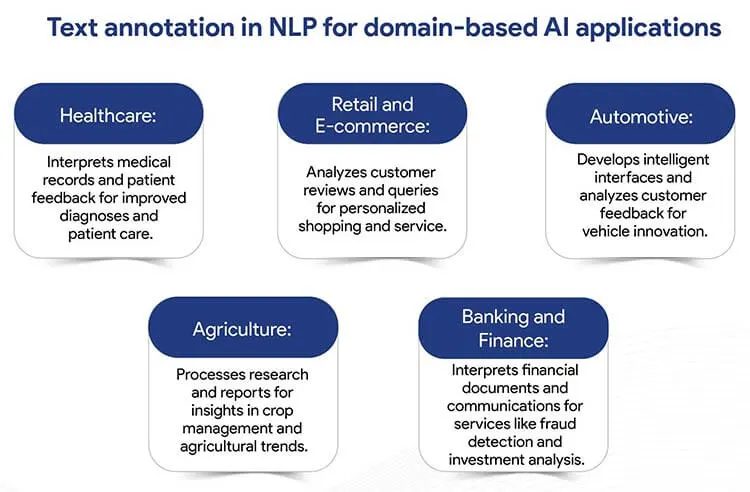

(I) Healthcare

Text annotation significantly transforms healthcare operations by leveraging AI and machine learning techniques to enhance patient care, streamline processes, and improve overall efficiency:

Automatic Data Extraction: Text annotation aids in extracting critical information from clinical trial records, facilitating better access and analysis of medical documents. It expedites research efforts and supports comprehensive data-driven insights.

Patient Record Analysis: Annotated data enables thorough analysis of patient records, leading to improved outcomes and more accurate medical condition detection. It aids healthcare professionals in making informed decisions and providing tailored treatments.

Insurance Claims Processing: Within healthcare insurance, text annotation helps recognize medically insured patients, identify loss amounts, and extract policyholder information. This speeds up claims processing, ensuring faster service delivery to policyholders.

(II) Insurance

Text annotation in the insurance industry revolutionizes various facets of operations, making tasks more efficient and accurate:

Risk Evaluation: By annotating and extracting contextual data from contracts and forms, text annotation supports risk evaluation, enabling insurance companies to make more informed decisions while minimizing potential risks.

Claims Processing: Annotated data assists in recognizing entities like involved parties and loss amounts, significantly expediting the claims processing workflow. It aids in detecting dubious claims, contributing to fraud detection efforts.

Fraud Detection: Through text annotation, insurance firms can monitor and analyze documents and forms more effectively, enhancing their capabilities to detect fraudulent claims and irregularities.

(III) Banking

The banking sector utilizes text annotation to revolutionize operations and ensure better accuracy and customer satisfaction:

Fraud Identification: Text annotation techniques aid in identifying potential fraud and money laundering patterns, allowing banks to take proactive measures and ensure security.

Custom Data Extraction: Annotated text facilitates the extraction of critical information from contracts, improving workflows and ensuring compliance. It enables efficient data extraction for various attributes like loan rates and credit scores, supporting compliance monitoring.

(IV) Government

In government operations, text annotation facilitates various tasks, ensuring better efficiency and compliance:

Regulatory Compliance: Text annotation streamlines financial operations by ensuring regulatory compliance through advanced analytics. It helps maintain compliance standards more effectively.

Document Classification: Through text classification and annotation, different types of legal cases can be categorized, ensuring efficient document management and access to digital documents.

Fraud Detection & Analytics: Text annotation assists in the early detection of fraudulent activities by utilizing linguistic annotation, semantic annotation, tone detection, and entity recognition. It enables analytics on vast amounts of data for insights.

(V) Logistics

Text annotation in logistics plays a pivotal role in handling massive volumes of data and improving customer experiences:

Invoice Annotation: Annotated text assists in extracting crucial details such as amounts, order numbers, and names from invoices. It streamlines billing and invoicing processes.

Customer Feedback Analysis: By utilizing sentiment and entity annotation, logistics companies can analyze customer feedback, ensuring better service improvements and customer satisfaction.

(VI) Media and News

Text annotation's role in the media industry is indispensable for content categorization and credibility:

Content Categorization: Annotation is crucial for categorizing news content into various segments such as sports, education, government, etc., enabling efficient content management and retrieval.

Entity Recognition: Annotating entities like names, locations, and key phrases in news articles aids in information retrieval and fact-checking. It contributes to credibility and accurate reporting.

Fake News Detection: Utilizing text annotation techniques such as NLP annotation and sentiment analysis enables the identification of fake news by analyzing the credibility and sentiment of the content.

These comprehensive applications across sectors showcase how text annotation significantly impacts various industries, making operations more efficient, accurate, and streamlined.

4. Text Annotation Guidelines

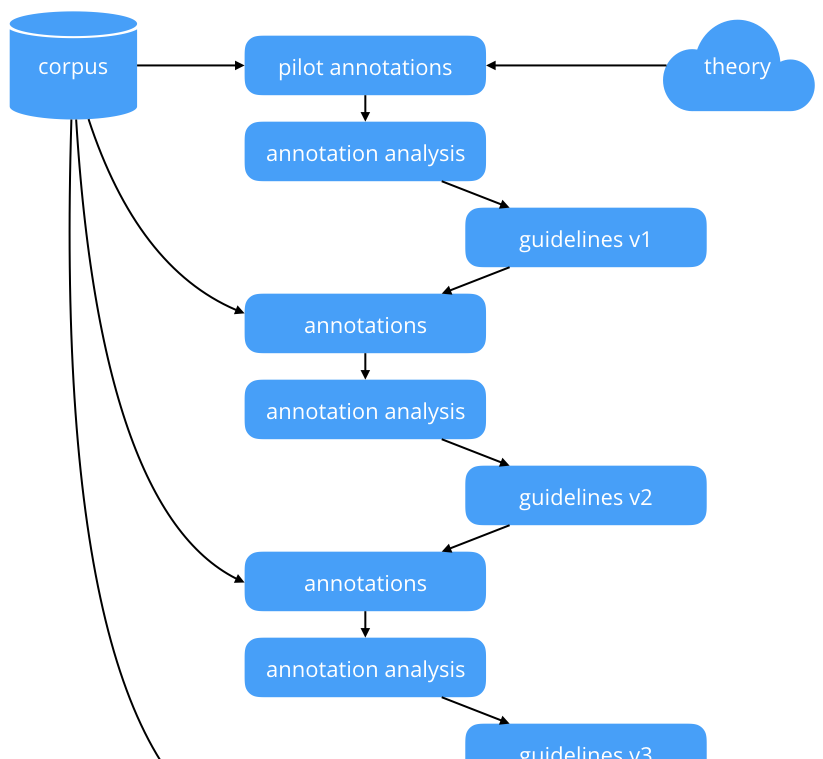

Annotation guidelines serve as a comprehensive set of instructions and rules for annotators when labeling or annotating text data for machine learning tasks. These guidelines are crucial as they define the objectives of the modeling task and the purpose behind the labels assigned to the data. They are crafted by a team familiar with the data and the intended use of the annotations.

Starting with defining the modeling problem and the desired outcomes, annotation guidelines cover various aspects:

(i) Annotation Techniques: Guidelines may start by choosing appropriate annotation methods tailored to the specific problem being addressed.

(ii) Case Definitions: They define common and potentially ambiguous cases that annotators might encounter in the data, along with instructions on how to handle each scenario.

(iii) Handling Ambiguity: Guidelines include examples from the data and strategies to deal with outliers, ambiguous instances, or unusual cases that might arise during annotation.

Text Annotation Workflow

An annotation workflow typically consists of several stages:

(i) Curating Annotation Guidelines: Define the problem, set the expected outcomes, and create comprehensive guidelines that are easy to follow and revisit.

(ii) Selecting a Labeling Tool: Choose appropriate text annotation tools, considering options like Labellerr or other available tools that suit the task's requirements.

(iii) Defining Annotation Process: Create a reproducible workflow that encompasses organizing data sources, utilizing guidelines, employing annotation tools effectively, documenting step-by-step annotation processes, defining formats for saving and exporting annotations, and reviewing each labeled sample.

(iv) Review and Quality Control: Regularly review labeled data to prevent generic label errors, biases, or inconsistencies. Multiple annotators may label the same samples to ensure consistency and reduce interpretational bias. Statistical measures like Cohen's kappa statistic can assess annotator agreement to identify and address discrepancies or biases in annotations.
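The agreement check in step (iv) can be sketched in a few lines. Cohen's kappa compares the observed agreement between two annotators with the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The function name and the sample labels below are illustrative assumptions.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same samples."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set")
    n = len(labels_a)

    # p_o: fraction of samples on which the annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # p_e: chance agreement, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical sentiment labels from two annotators on eight samples.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

In this example the annotators agree on 6 of 8 samples (0.75) while chance agreement is 0.5, so kappa comes out at 0.5. A value of 1 means perfect agreement and a value near 0 means agreement no better than chance.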

Ensuring a streamlined flow of incoming data samples, rigorous review processes, and consistent adherence to annotation guidelines are crucial for generating high-quality labeled datasets for machine learning models. Regular monitoring and quality checks help maintain the reliability and integrity of the annotated data.

5. Text Annotation Tools and Technologies

Text annotation tools play a vital role in preparing data for AI and machine learning, particularly in natural language processing (NLP) applications. These tools fall into two main categories: open-source and commercial offerings. Open-source tools, available at no cost, are customizable and widely used in startups and academic projects for their affordability. Conversely, commercial tools offer advanced functionalities and support, making them suitable for large-scale and enterprise-level projects.

Commercial Text Annotation Tools

(I) Labellerr

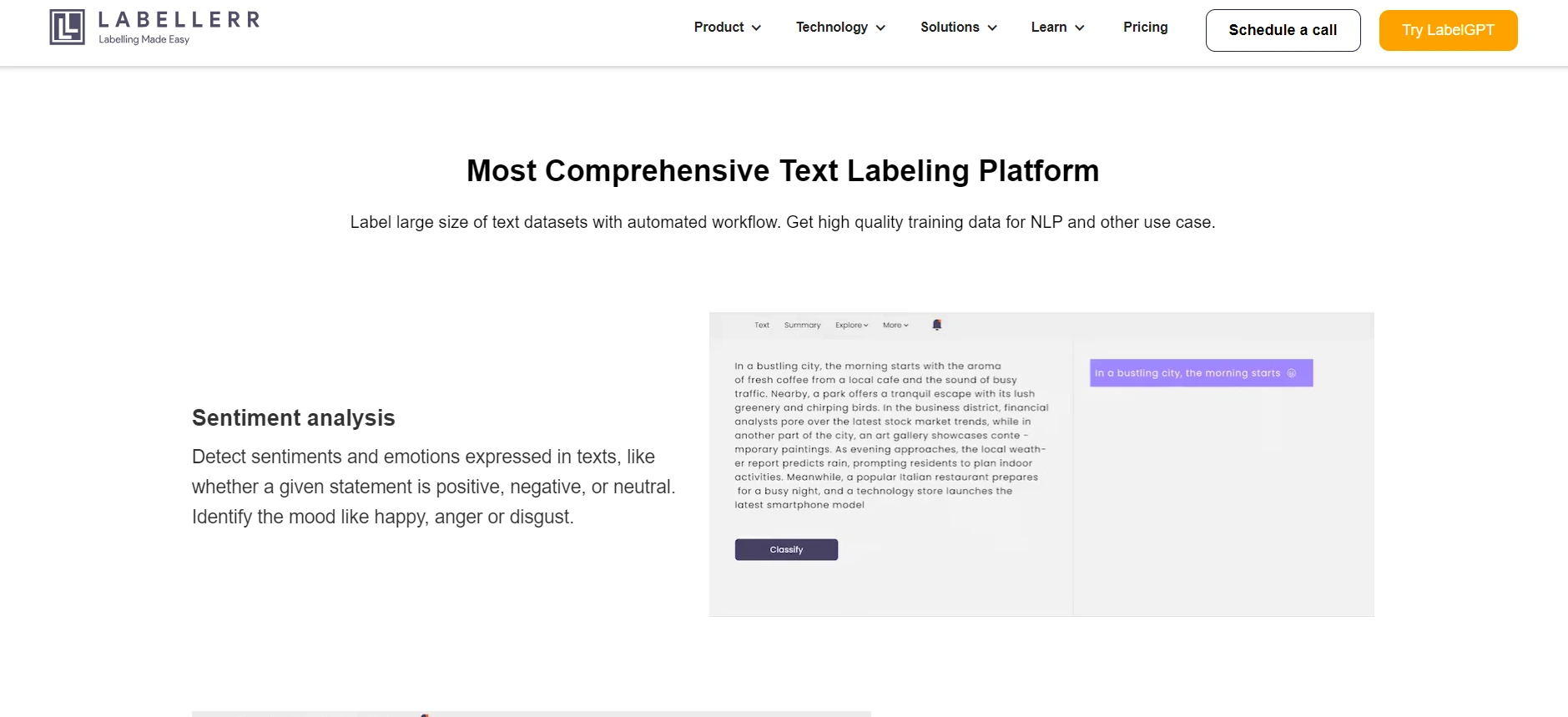

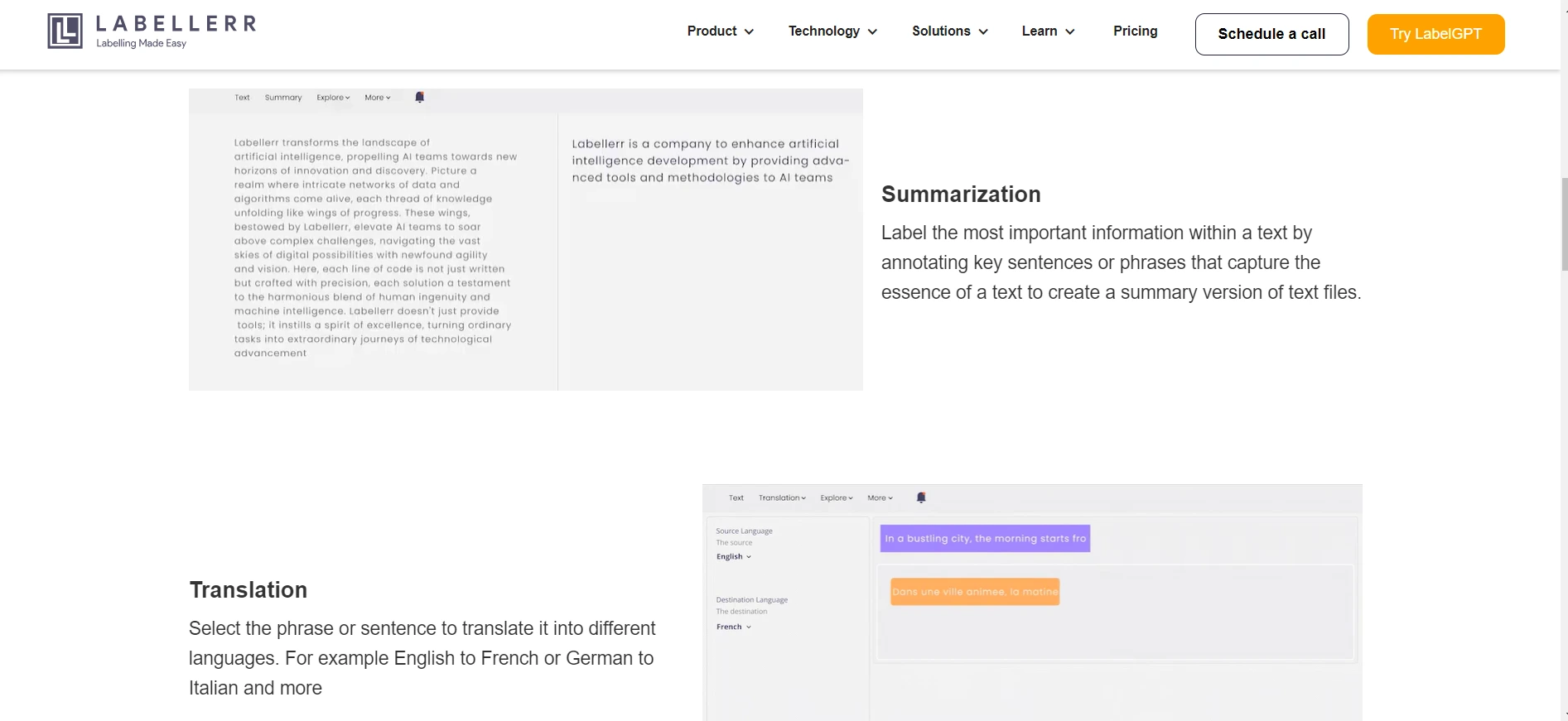

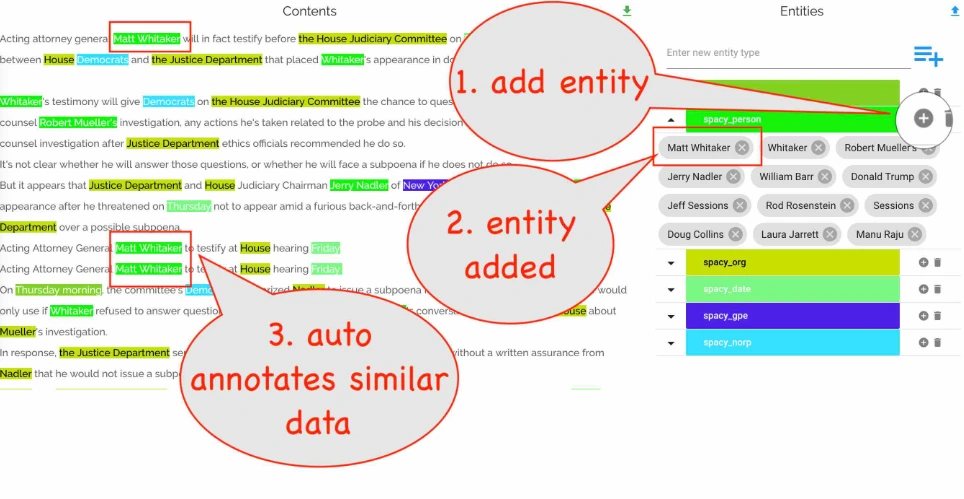

Labellerr is a text annotation tool for producing high-quality, accurate text annotations to train AI models at scale.

It offers the following functionalities and services:

Text Annotation Features:

(i) Sentiment Analysis: Identifies sentiments and emotions in text, categorizing statements as positive, negative, or neutral.

(ii) Summarization: Highlights key sentences or phrases within text to create a summarized version.

(iii) Translation: Translates selected text segments into different languages, such as English to French or German to Italian.

(iv) Named-Entity Recognition: Tags named entities (e.g., ID, Name, Place, Price) in text based on predefined categories.

(v) Text Classification: Classifies text by assigning appropriate classes based on their content.

(vi) Question Answering: Matches questions with their respective answers to train models for generating accurate responses.

Automated Workflows:

(i) Customization: Allows users to create custom automated data workflows, collaborate in real-time, perform QA reviews, and gain complete visibility into AI operations.

(ii) Pipeline Management: Enables the creation and automation of text labeling workflows, multiple user roles, review cycles, inter-annotator agreements, and various annotation stages.

Text Labeling Services:

(i) Provides professional text annotators and linguists focused on ensuring quality and accuracy in annotations.

(ii) Offers fully managed services, allowing users to concentrate on other important aspects while delegating text annotation tasks.

Labellerr emerges as a comprehensive and versatile commercial text annotation tool that streamlines the process of annotating large text datasets for AI model training purposes. It provides a wide array of annotation capabilities and customizable workflows, catering to diverse text annotation requirements.

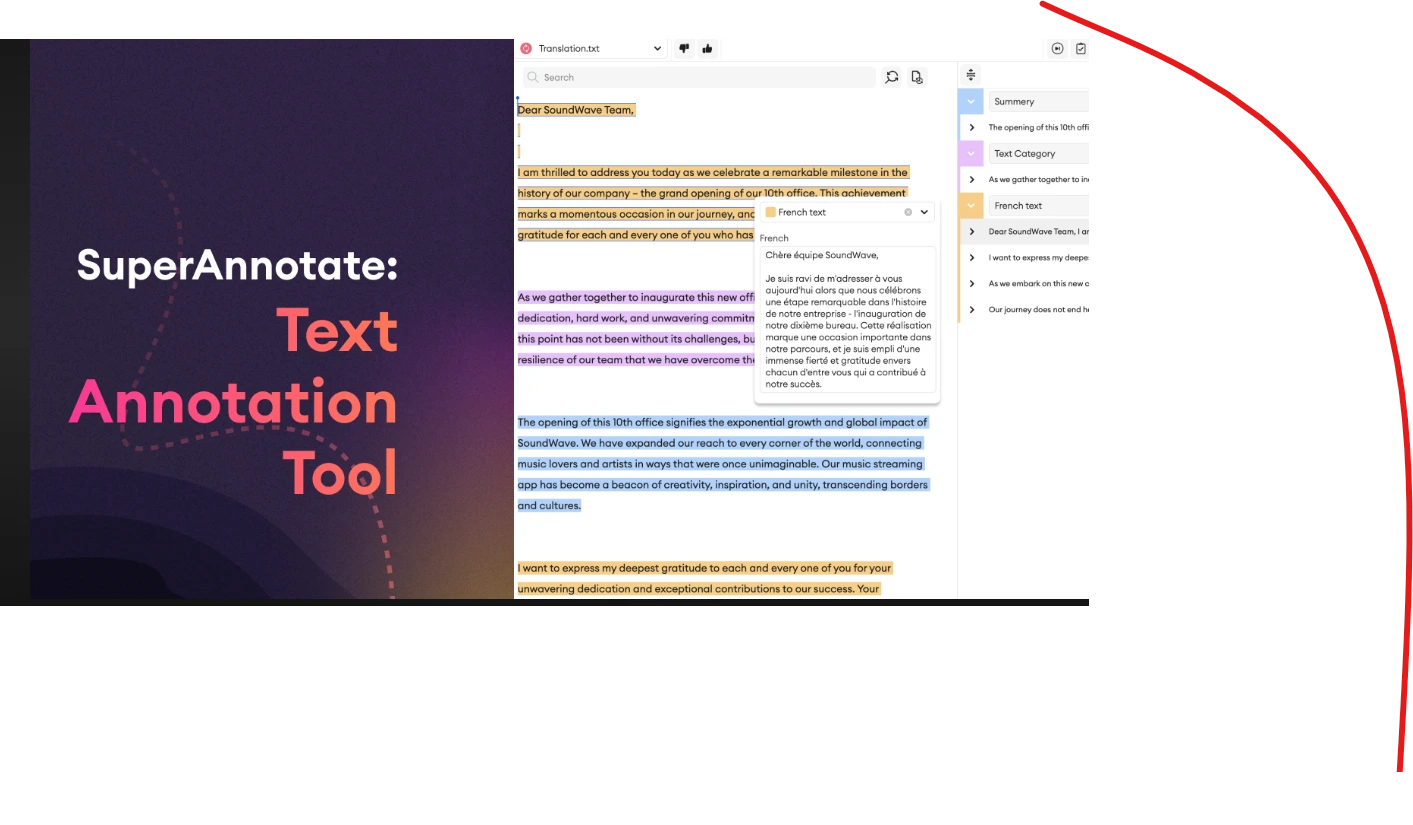

(II) SuperAnnotate

SuperAnnotate is an advanced text annotation tool designed to facilitate the creation of high-quality and accurate annotations essential for training top-performing AI models. This tool offers a wide array of features and functionalities aimed at streamlining text annotation processes for various industries and use cases.

Key Features of SuperAnnotate's Text Annotation Tool:

Cloud Integrations: Supports integration with various cloud storage systems, allowing users to easily add items from their cloud repositories to the SuperAnnotate platform.

Versatile Use Cases: Designed to cover a broad range of text annotation use cases across different industries and scenarios.

Advanced Annotation Tools: Equipped with an array of advanced tools tailored for efficient text annotation.

Functionalities Offered by SuperAnnotate:

Sentiment Analysis: Capable of identifying sentiments expressed in text, determining whether statements are positive, negative, or neutral, and even detecting emotions like happiness or anger.

Summarization: Annotations can focus on key sentences or phrases within text, aiding in the creation of summarized versions.

Translation Assistance: Annotations assist in identifying elements for translation, such as sentences, terms, and specific entities.

Named-Entity Recognition: Detects and classifies named entities within text, sorting them into predefined categories like dates, locations, names of individuals, and more.

Text Classification: Assigns classes to texts based on their content and characteristics.

Question Answering: Enables the pairing of questions with corresponding answers to train models for generating accurate responses.

Efficiency-Boosting Features:

Token Annotation: Splits texts into units using linguistic knowledge, ensuring seamless and accurate annotation.

Classify All: Instantly assigns the same class to every occurrence of a word or phrase in a text, enhancing efficiency.
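The general idea behind a "classify all" action can be sketched with a regular expression that finds every occurrence of a phrase and attaches the same label to each. This is only an illustration of the concept under simple assumptions (whole-word, case-insensitive matching); it is not SuperAnnotate's implementation, and the function name and sample text are hypothetical.

```python
import re


def classify_all(text, phrase, label):
    """Return (start, end, label) for every whole-word occurrence of `phrase`."""
    # \b anchors assume the phrase starts and ends with a word character.
    pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
    return [(m.start(), m.end(), label) for m in pattern.finditer(text)]


# Hypothetical text in which one company name appears three times.
text = "Acme shipped the order. The customer thanked Acme, and acme replied."
annotations = classify_all(text, "Acme", "ORGANIZATION")
```

Each returned tuple points at one occurrence, so a reviewer can still remove individual labels where the blanket assignment is wrong.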

Quality-Focused Elements:

Collaboration System: Involves stakeholders in the quality review process through comments, fostering seamless collaboration and task distribution.

Status Tracking: Provides visibility into the status of items and projects, allowing users to track progress effectively.

Detailed Instructions: Sets a solid foundation for project execution by offering comprehensive project instructions to the team.

(III) V7 Labs

The V7 Text Annotation Tool is a feature within the V7 platform that facilitates the annotation of text data within images and documents. This tool automates the process of detecting and reading text from various types of visual content, including images, photos, documents, and videos.

Key features and steps associated with the V7 Text Annotation Tool include:

Text Scanner Model: V7 has incorporated a public Text Scanner model within its Neural Networks page. This model is designed to automatically detect and read text within images and documents.

Integration into Workflow: Add a model stage to the workflow under the Settings page of your dataset. Select the Text Scanner model from the dropdown list and map the newly created text class. If desired, enable the Auto-Start option to automatically process new images through the model at the beginning of the workflow.

Automatic Text Detection and Reading: Once set up, the V7 Text Annotation Tool will automatically scan and read text from different types of images, including documents, photos, and videos. The tool is extensively pre-trained, enabling it to interpret characters that might be challenging for humans to decipher accurately.

Overall, the V7 Text Annotation Tool streamlines the process of text annotation by leveraging a pre-trained model to automatically detect and read text within visual content, providing an efficient and accurate solution for handling text data in images and documents.

Open Source Text Annotation Tools

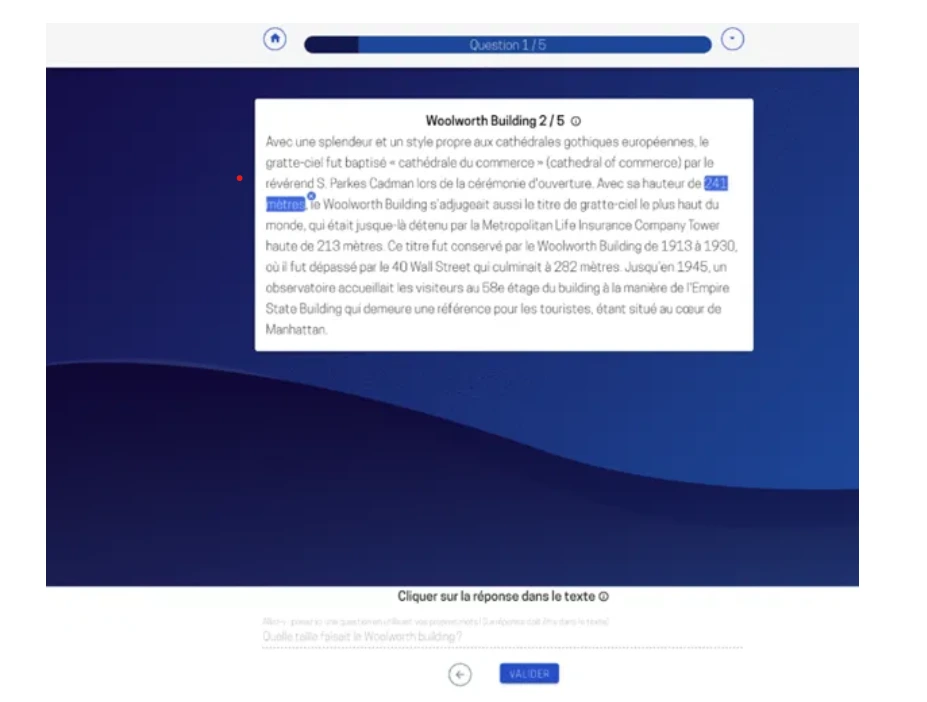

(I) PIAF Platform

- Led by Etalab, this tool aims to create a public Q&A dataset in French.

- Initially designed for question/answer annotation, it allows users to write questions and highlight text segments that answer them.

- Offers an easy installation process and collaborative annotation capabilities.

- Exports annotations in the format of the Stanford SQuAD dataset.

- Limited to question/answer annotation but has potential for adaptation to other use cases like sentiment analysis or named entity recognition.
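For readers unfamiliar with the SQuAD layout mentioned above, the sketch below builds one question/answer record in the SQuAD v1.1 style, where each answer stores its text plus the character offset at which it starts in the context. The helper name, the record ID, and the French sample are assumptions for illustration; PIAF's actual export may include additional fields.

```python
import json


def to_squad_record(title, context, question, answer_text, qa_id):
    """Build one SQuAD-style article entry holding a single question/answer pair."""
    start = context.find(answer_text)
    if start == -1:
        raise ValueError("the answer must be a verbatim span of the context")
    return {
        "title": title,
        "paragraphs": [{
            "context": context,
            "qas": [{
                "id": qa_id,
                "question": question,
                "answers": [{"text": answer_text, "answer_start": start}],
            }],
        }],
    }


# Hypothetical annotation: a question and the highlighted span that answers it.
record = to_squad_record(
    title="Tour Eiffel",
    context="La tour Eiffel se trouve à Paris.",
    question="Où se trouve la tour Eiffel ?",
    answer_text="Paris",
    qa_id="example-0001",
)
dataset = {"version": "1.1", "data": [record]}
exported = json.dumps(dataset, ensure_ascii=False, indent=2)
```

Because the answer is stored as an offset into the context, the highlighted segment must appear verbatim in the passage, which mirrors the highlight-to-answer workflow described above.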

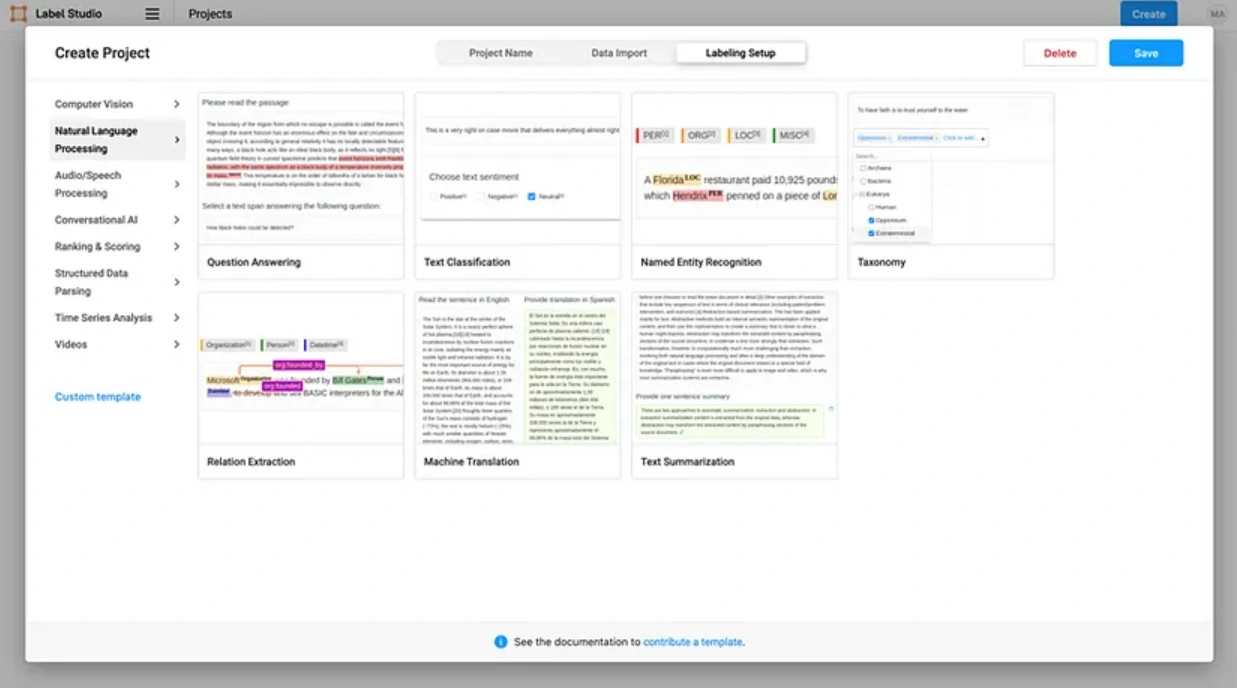

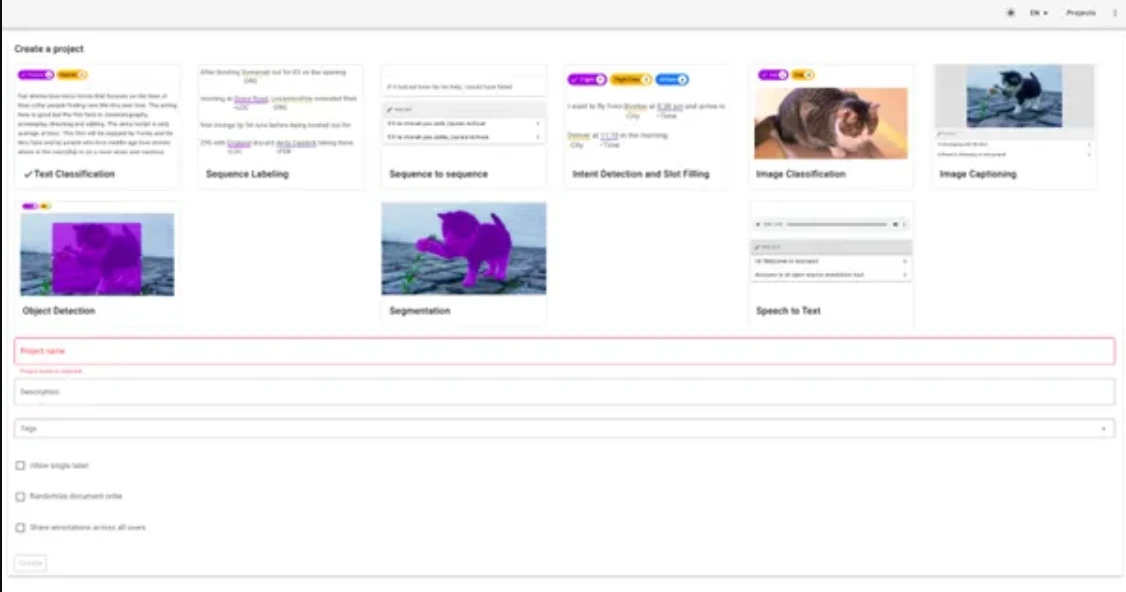

(II) Label Studio

- Free and open-source tool suitable for various tasks like natural language processing, computer vision, and more.

- Highly scalable and configurable labeling interface.

- Provides templates for common tasks (sentiment analysis, named entities, object detection) for easy setup.

- Allows exporting labeled data in multiple formats, compatible with learning algorithms.

- Supports collaborative annotation and can be deployed on servers for simultaneous annotation by multiple collaborators.

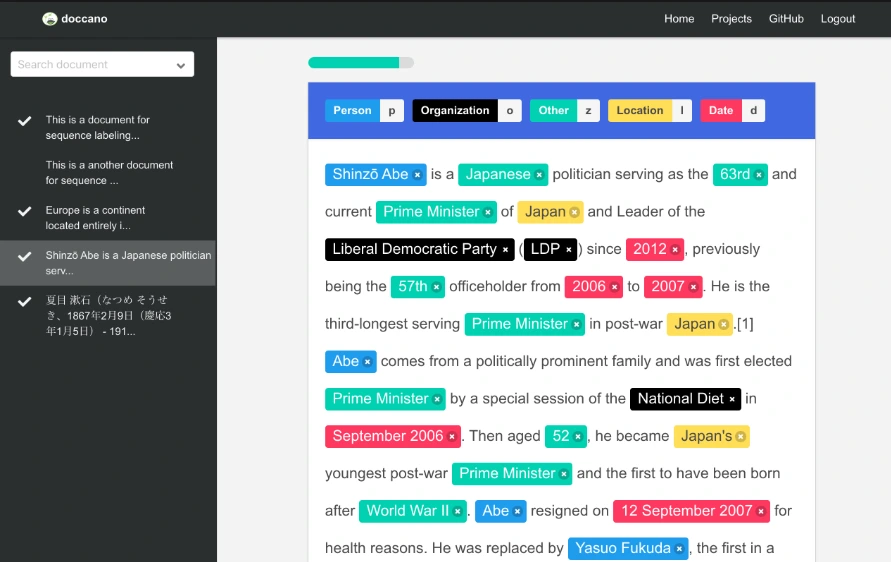

(III) Doccano

- Originally designed for text annotation tasks and recently extended to image classification, object detection, and speech-to-text annotations.

- Offers local installation via pip, supporting SQLite3 or PostgreSQL databases for saving annotations and datasets.

- Docker image available for deployment on various cloud providers.

- Simple user interface, collaborative features, and customizable labeling templates.

- Allows importing datasets in various formats (CSV, JSON, fastText) and exporting annotations accordingly.

These open-source tools provide valuable solutions for annotating text data, with each tool having its unique features and suitability for specific annotation tasks. While PIAF is focused on Q&A datasets in French, Label Studio offers extensive customization, and Doccano supports diverse annotation tasks, expanding beyond text to cover image and speech annotations.

Open-source NLP Service Toolkits

- spaCy: A Python library designed for production-level NLP tasks. While not a standalone annotation tool, it's often used with tools like Prodigy or Doccano for text annotation.

- NLTK (Natural Language Toolkit): A popular Python platform that provides numerous text-processing libraries for various language-related tasks. It can be combined with other tools for text annotation purposes.

- Stanford CoreNLP: A Java-based toolkit capable of performing diverse NLP tasks like part-of-speech tagging, named entity recognition, parsing, and coreference resolution. It's typically used as a backend for annotation tools.

- GATE (General Architecture for Text Engineering): An extensive open-source toolkit equipped with components for text processing, information extraction, and semantic annotation.

- Apache OpenNLP: A machine learning-based toolkit supporting tasks such as tokenization, part-of-speech tagging, entity extraction, and more. It's used alongside other tools for text annotation.

- UIMA (Unstructured Information Management Architecture): An open-source framework facilitating the development of applications for analyzing unstructured information like text, audio, and video. It's used in conjunction with other tools for text annotation.

Commercial NLP Service Platforms

- Amazon Comprehend: A machine learning-powered NLP service offering entity recognition, sentiment analysis, language detection, and other text insights. APIs facilitate easy integration into applications.

- Google Cloud Natural Language API: Provides sentiment analysis, entity analysis, content classification, and other NLP features. Part of Google Cloud's Machine Learning APIs.

- Microsoft Azure Text Analytics: Offers sentiment analysis, key phrase extraction, language detection, and named entity recognition among its text processing capabilities.

- IBM Watson Natural Language Understanding: Utilizes deep learning to extract meaning, sentiment, entities, relations, and more from unstructured text. Available through IBM Cloud with REST APIs and SDKs for integration.

- MeaningCloud: A text analytics platform supporting sentiment analysis, topic extraction, entity recognition, and classification across multiple languages through APIs and SDKs.

- Rosette Text Analytics: Provides entity extraction, sentiment analysis, relationship extraction, and language identification functionalities across various languages. Can be integrated into applications using APIs and SDKs.

6. Challenges in Text Annotation

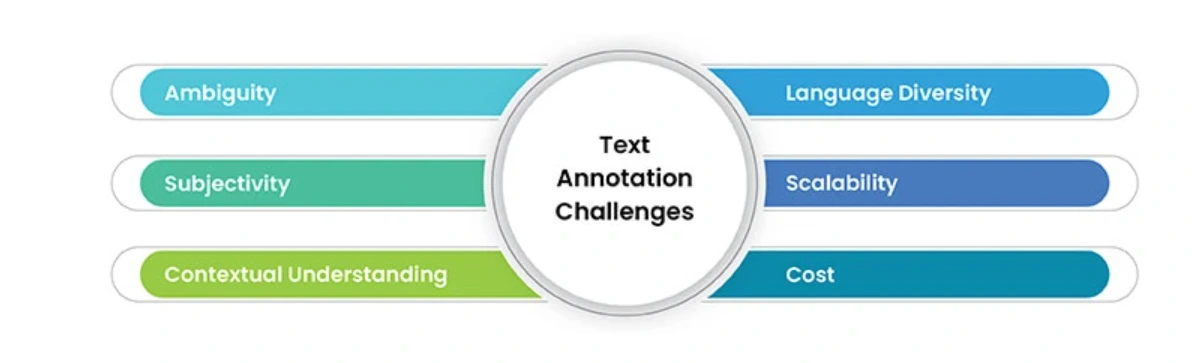

AI and ML companies face numerous hurdles in text annotation processes. These encompass ensuring data quality, efficiently handling large datasets, mitigating annotator biases, safeguarding sensitive information, and scaling operations as data volumes expand. Tackling these issues is crucial to achieving precise model training and robust AI outcomes.

(i) Ambiguity

This occurs when a word, phrase, or sentence holds multiple meanings, leading to inconsistencies in annotations. Resolving such ambiguities is vital for accurate machine learning model training. For instance, the phrase "I saw the man with the telescope" can be interpreted in different ways, impacting annotation accuracy.

(ii) Subjectivity

Annotating subjective language, containing personal opinions or emotions, poses challenges due to differing interpretations among annotators. Labeling sentiment in customer reviews can vary based on annotators' perceptions, resulting in inconsistencies in annotations.

(iii) Contextual Understanding

Accurate annotation relies on understanding the context in which words or phrases are used. Failing to consider context, such as the dual meaning of "bank" referring to a financial institution or a river side, can lead to incorrect annotations and hinder model performance.

(iv) Language Diversity

The need for proficiency in multiple languages poses challenges in annotating diverse datasets. Finding annotators proficient in less common languages or dialects is difficult, which leads to inconsistent annotations and uneven proficiency among annotators.

(v) Scalability

Annotating large volumes of data is time-consuming and resource-intensive. Handling increasing data volumes demands more annotators, posing challenges in efficiently scaling annotation efforts.

(vi) Cost

Hiring and training annotators and investing in annotation tools can be expensive. The significant investment that data labeling requires makes it hard to balance annotation accuracy against the associated costs of AI and machine learning implementation.

7. The Future of Text Annotation

Text annotation, an integral part of data annotation, is likely to be shaped by several trends that align with broader advances in data annotation processes:

(i) Natural Language Processing (NLP) Advancements

With the rapid progress in NLP technologies, text annotation is expected to witness the development of more sophisticated tools that can understand and interpret textual data more accurately. This includes improvements in sentiment analysis, named entity recognition, and other text categorization tasks.

(ii) Contextual Understanding

Future trends in text annotation will likely focus on capturing contextual understanding within language models. This involves annotating text with a deeper understanding of nuances, tone, and context, leading to the creation of more context-aware and accurate language models.

(iii) Multilingual Annotation

As the demand for multilingual AI models grows, text annotation will follow suit. Future trends involve annotating and curating datasets in multiple languages, enabling the training of AI models that can understand and generate content in various languages.

(iv) Fine-grained Annotation for Specific Applications

Industries such as healthcare, legal, finance, and customer service are increasingly utilizing AI-driven solutions. Future trends will involve more fine-grained and specialized text annotation tailored to these specific domains, ensuring accurate and domain-specific language models.

(v) Emphasis on Bias Mitigation

Recognizing and mitigating biases within text data is crucial for fair and ethical AI. Future trends in text annotation will focus on identifying and mitigating biases in textual datasets to ensure AI models are fair and unbiased across various demographics and social contexts.

(vi) Semi-supervised and Active Learning Approaches

To optimize annotation efforts, future trends in text annotation might include the integration of semi-supervised and active learning techniques. These methods intelligently select the most informative samples for annotation, reducing the annotation workload while maintaining model performance.
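One common way to pick "the most informative samples" is uncertainty sampling: send annotators the items the current model is least sure about. The sketch below assumes a binary classifier that outputs a probability for the positive class; the function name, document IDs, and probabilities are made up for illustration.

```python
def select_for_annotation(probabilities, batch_size):
    """Pick the samples whose predicted probability is closest to 0.5.

    `probabilities` maps a sample ID to the model's predicted probability
    of the positive class (assumed to come from a binary classifier).
    """
    ranked = sorted(probabilities, key=lambda sid: abs(probabilities[sid] - 0.5))
    return ranked[:batch_size]


# Hypothetical model scores for five unlabeled documents.
model_scores = {
    "doc1": 0.97,
    "doc2": 0.52,
    "doc3": 0.10,
    "doc4": 0.45,
    "doc5": 0.80,
}
next_batch = select_for_annotation(model_scores, batch_size=2)
```

With these scores, `doc2` and `doc4` are selected first because the model is nearly undecided about them, while confidently scored documents such as `doc1` can wait.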

(vii) Privacy-Centric Annotation Techniques

In alignment with broader data privacy concerns, text annotation will likely adopt techniques that ensure the anonymization and protection of sensitive information within text data, balancing the need for annotation with privacy preservation.
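A simple form of this is redacting obvious identifiers before text reaches annotators. The sketch below replaces email addresses and phone-like numbers with placeholders using regular expressions. The patterns are deliberately rough assumptions for illustration and will miss many real-world formats; they are not a substitute for a dedicated anonymization pipeline.

```python
import re

# Rough, illustrative patterns; real data needs broader coverage and review.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


# Hypothetical sample containing an email address and a phone number.
sample = "Contact jane.doe@example.com or call +1 555-010-9999 about claim 42."
redacted = redact(sample)
```

Short numbers such as the claim number survive because the phone pattern requires at least nine characters, which keeps useful context available to annotators.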

(viii) Enhanced Collaboration and Crowdsourcing Platforms

Similar to other data annotation domains, text annotation will benefit from collaborative and crowdsourced platforms that allow distributed teams to annotate text data efficiently. These platforms will offer improved coordination, quality control mechanisms, and scalability.

(ix) Continual Learning and Adaptation

As language evolves and new linguistic patterns emerge, text annotation will evolve towards continual learning paradigms. This will enable AI models to adapt and learn from ongoing annotations, ensuring they remain relevant and up-to-date.

(x) Explainable AI through Annotation

Text annotation may involve creating datasets that facilitate the development of explainable AI models. Annotations focused on explaining decisions made by AI systems can aid in building transparent and interpretable language models.

These future trends in text annotation are driven by the evolving nature of AI technology, the increasing demands for more accurate and specialized AI models, ethical considerations, and the need for scalable and efficient annotation processes.

The exploration of text annotation highlights its crucial role in AI's language understanding. This journey revealed:

(i) Text annotation is vital for AI to interpret human language nuances across industries like healthcare, finance, and more.

(ii) Challenges in annotation, like dealing with ambiguity and subjectivity, stress the need for ongoing innovation.

(iii) Clear guidelines, sound workflows, and a range of open-source and commercial tools support high-quality annotation.

(iv) The future promises advancements in language processing, bias mitigation, and contextual understanding.

Overall, text annotation is a cornerstone in AI's language comprehension, fostering innovation and laying the groundwork for seamless human-machine communication in the future.

Frequently Asked Questions

1. What is text annotation and why is it important?

Text annotation enriches raw text by labeling entities, sentiments, parts of speech, etc. This labeled data trains AI models for better language understanding. It's crucial for improving accuracy in tasks like sentiment analysis, named entity recognition, and more. Annotation aids in creating domain-specific AI models and standardizing data, facilitating precise human-AI interactions.

2. What are the different types of annotation techniques?

Annotation techniques involve labeling different aspects of text data for training AI models. Types include Entity Annotation (identifying entities), Sentiment Annotation (labeling emotions), Intent Annotation (categorizing purposes), Linguistic Annotation (marking grammar), Relation Extraction, Coreference Resolution, Temporal Annotation, and Speech Recognition Annotation.

These techniques are vital for training models in various natural language processing tasks, aiding accurate comprehension and response generation by AI systems.

3. What is in-text annotation?

In-text annotation involves adding labels directly within the text to highlight attributes like phrases, keywords, or sentences. These labels guide machine learning models. Quality in-text annotations are essential for building accurate models as they provide reliable training data for AI systems to understand and process language more effectively.

Annotating text: The complete guide to close reading

As students, researchers, and self-learners, we understand the power of reading and taking smart notes. But what happens when we combine those together? This is where annotating text comes in.

Annotated text is a written piece that includes additional notes and commentary from the reader. These notes can be about anything from the author's style and tone to the main themes of the work. By providing context and personal reactions, annotations can turn a dry text into a lively conversation.

Creating text annotations during close readings can help you follow the author's argument or thesis and make it easier to find critical points and supporting evidence. Plus, annotating your own texts in your own words helps you to better understand and remember what you read.

This guide will take a closer look at annotating text, discuss why it's useful, and how you can apply a few helpful strategies to develop your annotating system.

What does annotating text mean?

Text annotation refers to adding notes, highlights, or comments to a text. This can be done using a physical copy in textbooks or printable texts. Or you can annotate digitally through an online document or e-reader.

Generally speaking, annotating text allows readers to interact with the content on a deeper level, engaging with the material in a way that goes beyond simply reading it. There are different levels of annotation, but all annotations should aim to do one or more of the following:

- Summarize the key points of the text

- Identify evidence or important examples

- Make connections to other texts or ideas

- Think critically about the author's argument

- Make predictions about what might happen next

When done effectively, annotation can significantly improve your understanding of a text and your ability to remember what you have read.

What are the benefits of annotation?

There are many reasons why someone might wish to annotate a document. It's commonly used as a study strategy and is often taught in English Language Arts (ELA) classes. Students are taught how to annotate texts during close readings to identify key points, evidence, and main ideas.

In addition, this reading strategy is also used by those who are researching for self-learning or professional growth. Annotating texts can help you keep track of what you’ve read and identify the parts most relevant to your needs. Even reading for pleasure can benefit from annotation, as it allows you to keep track of things you might want to remember or add to your personal knowledge management system.

Annotating has many benefits, regardless of your level of expertise. When you annotate, you're actively engaging with the text, which can help you better understand and learn new things. Additionally, annotating can save you time by allowing you to identify the most essential points of a text before starting a close reading or in-depth analysis.

There are few studies directly on annotation, but the body of research is growing. In one 2022 study, specific annotation strategies increased student comprehension, engagement, and academic achievement. Students who annotated read more slowly, which helped them break down texts and visualize key points. This helped students focus, think critically, and discuss complex content.

Annotation can also be helpful because it:

- Allows you to quickly refer back to important points in the text without rereading the entire thing

- Helps you to make connections between different texts and ideas

- Serves as a study aid when preparing for exams or writing essays

- Identifies gaps in your understanding so that you can go back and fill them in

The process of annotating text can make your reading experience more fruitful. Adding comments, questions, and associations directly to the text makes the reading process more active and enjoyable.

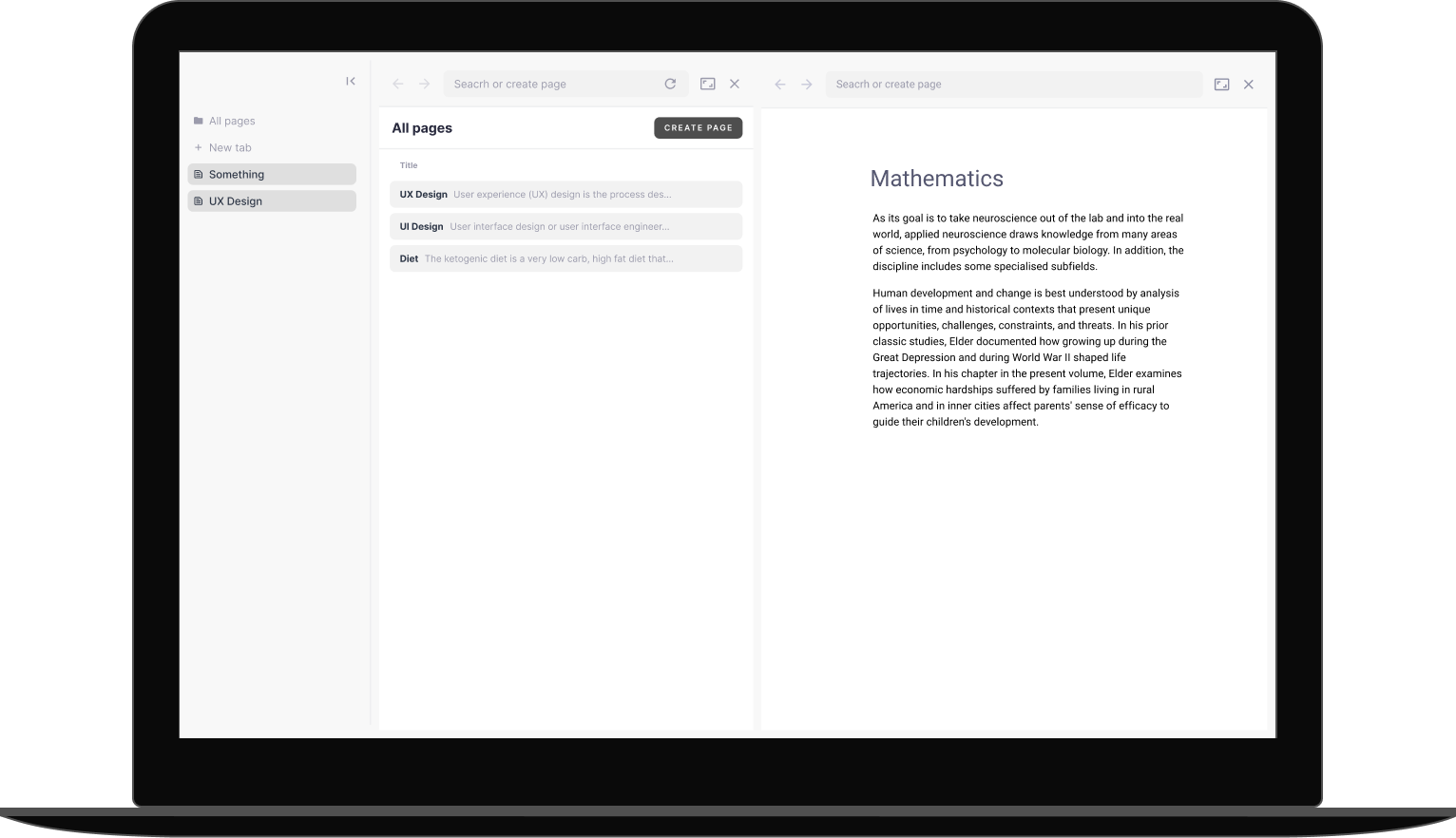

How do you annotate text?

There are many different ways to annotate while reading. The traditional method of annotating uses highlighters, markers, and pens to underline, highlight, and write notes in paper books. Modern methods have now gone digital with apps and software. You can annotate on many note-taking apps, as well as online documents like Google Docs.

While there are documented benefits of handwritten notes, recent research shows that digital methods are effective as well. Among students in an introductory college writing course, those who highlighted more on digital texts showed better reading comprehension than those who highlighted more on paper.

No matter what method you choose, the goal is always to make your reading experience more active, engaging, and productive. To do so, the process can be broken down into three simple steps:

- Do the first read-through without annotating to get a general understanding of the material.

- Reread the text and annotate key points, evidence, and main ideas.

- Review your annotations to deepen your understanding of the text.

Of course, there are different levels of annotation, and you may only need to do some of the three steps. For example, if you're reading for pleasure, you might only annotate key points and passages that strike you as interesting or important. Alternatively, if you're trying to simplify complex information in a detailed text, you might annotate more extensively.

The type of annotation you choose depends on your goals and preferences. The key is to create a plan that works for you and stick with it.

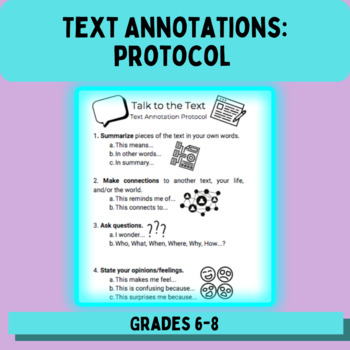

Annotation strategies to try

When annotating text, you can use a variety of strategies. The best method for you will depend on the text itself, your reason for reading, and your personal preferences. Start with one of these common strategies if you don't know where to begin.

- Questioning: As you read, note any questions that come to mind as you engage in critical thinking. These could be questions about the author's argument, the evidence they use, or the implications of their ideas.

- Summarizing: Write a brief summary of the main points after each section or chapter. This is a great way to check your understanding, help you process information, and identify essential information to reference later.

- Paraphrasing: In addition to (or instead of) summaries, try paraphrasing key points in your own words. This will help you better understand the material and make it easier to reference later.

- Connecting: Look for connections between different parts of the text or other ideas as you read. These could be things like similarities, contrasts, or implications. Make a note of these connections so that you can easily reference them later.

- Visualizing: Sometimes, it can be helpful to annotate text visually by drawing pictures or taking visual notes. This can be especially helpful when trying to make connections between different ideas.

- Responding: Another way to annotate is to jot down your thoughts and reactions as you read. This can be a great way to personally engage with the material and identify any areas you need clarification on.

Combining the three-step annotation process with one or more strategies can create a customized, powerful reading experience tailored to your specific needs.


7 tips for effective annotations

Once you've gotten the hang of the annotating process and know which strategies you'd like to use, there are a few general tips you can follow to make the annotation process even more effective.

1. Read with a purpose. Before you start annotating, take a moment to consider what you're hoping to get out of the text. Do you want to gain a general overview? Are you looking for specific information? Once you know what you're looking for, you can tailor your annotations accordingly.

2. Be concise. When annotating text, keep it brief and focus on the most important points. Otherwise, you risk annotating too much, which can feel a bit overwhelming, like having too many tabs open. Limit yourself to just a few annotations per page until you get a feel for what works for you.

3. Use abbreviations and symbols. You can use abbreviations and symbols to save time and space when annotating digitally. If annotating on paper, you can use similar abbreviations or symbols or write in the margins. For example, you might use ampersands, plus signs, or question marks.

4. Highlight or underline key points. Use highlighting or underlining to draw attention to significant passages in the text. This can be especially helpful when reviewing a text for an exam or essay. Try using different colors for each read-through or to signify different meanings.

5. Be specific. Vague annotations aren't very helpful. Make sure your notes are clear and straightforward so you can easily refer to them later. This may mean including specific inferences, key points, or questions in your annotations.

6. Connect ideas. When reading, you'll likely encounter ideas that connect to things you already know. When these connections occur, make a note of them. Use symbols or even sticky notes to connect ideas across pages. Annotating this way can help you see the text in a new light and make connections that you might not have otherwise considered.

7. Write in your own words. When annotating, copying what the author says verbatim can be tempting. However, it's more helpful to write, summarize or paraphrase in your own words. This will force you to engage your information processing system and gain a deeper understanding.

These tips can help you annotate more effectively and get the most out of your reading. However, it’s important to remember that, just like self-learning, there is no one "right" way to annotate. The process is meant to enrich your reading comprehension and deepen your understanding, which is highly individual. Most importantly, your annotating system should be helpful and meaningful for you.

Engage your learning like never before by learning how to annotate text

Learning to effectively annotate text is a powerful tool that can improve your reading, self-learning, and study strategies. Using an annotating system that includes text annotations and note-taking during close reading helps you actively engage with the text, leading to a deeper understanding of the material.

Try out different annotation strategies and find what works best for you. With practice, annotating will become second nature and you'll reap all the benefits this powerful tool offers.



Text Annotation for Natural Language Processing – A Comprehensive Guide

Explore the pivotal role of text annotation in shaping NLP algorithms as we walk you through diverse types of text annotation, annotation tools, case studies, trends, and industry applications. This guide also offers insights into the human-in-the-loop approach to text annotation.

Text annotation is a crucial part of natural language processing (NLP), through which textual data is labeled to identify and classify its components. Essential for training NLP models, text annotation involves tasks like named entity recognition, sentiment analysis, and part-of-speech tagging. By providing context and meaning to raw text, it plays a central role in enhancing the performance and accuracy of NLP applications.
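To make the idea of "labeling components" concrete, here is a minimal sketch of how one annotated sentence might be stored. The class names, the tag names (`ORG`, `LOC`), and the sample sentence are illustrative assumptions, not a format taken from this guide or from any particular annotation tool.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class SpanLabel:
    """One labeled stretch of text, addressed by character offsets."""
    start: int   # offset where the span begins (inclusive)
    end: int     # offset where the span ends (exclusive)
    label: str   # hypothetical tag name such as "ORG" or "LOC"


@dataclass
class AnnotatedText:
    """Raw text plus span-level entity labels and a document-level sentiment label."""
    text: str
    entities: List[SpanLabel]
    sentiment: str

    def entity_strings(self) -> List[Tuple[str, str]]:
        # Recover the surface text of each span from its offsets.
        return [(self.text[s.start:s.end], s.label) for s in self.entities]


def find_span(text: str, phrase: str, label: str) -> SpanLabel:
    """Helper for this example: label the first occurrence of `phrase`."""
    start = text.index(phrase)
    return SpanLabel(start, start + len(phrase), label)


text = "Acme Corp opened a new office in Berlin, and customers love it."
example = AnnotatedText(
    text=text,
    entities=[find_span(text, "Acme Corp", "ORG"), find_span(text, "Berlin", "LOC")],
    sentiment="positive",
)

print(example.entity_strings())  # [('Acme Corp', 'ORG'), ('Berlin', 'LOC')]
```

Storing offsets rather than copied strings keeps each label tied to an exact position in the raw text, which matters when the same word appears more than once.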

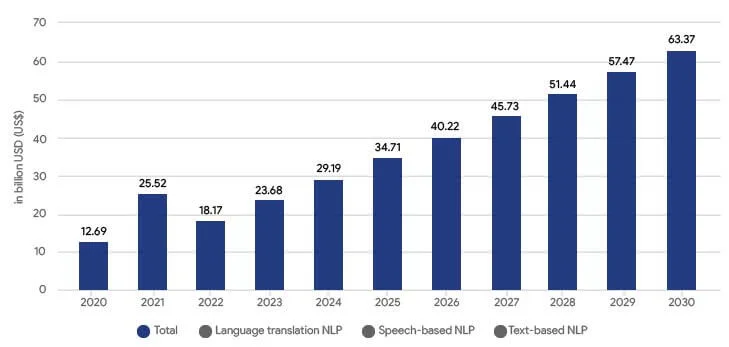

Text annotation is not just a technical requirement, but a foundation for the growing NLP market, which witnessed a turnover of over $12 billion in 2020. According to Statista, the market for NLP is projected to grow at a compound annual growth rate (CAGR) of about 25% from 2021 to 2025.

Recent studies have shown that around two-thirds of NLP systems fail after they are put to use. The primary reason for this failure is their inability to deal with the complex data encountered outside of testing environments, highlighting the importance of high-quality text annotation.

Challenges in annotating text for NLP projects

Popular text annotation techniques, process of annotating text for NLP, how does HITL (Human-in-the-Loop) approach help, how AI companies benefit from text annotation for domain-based AI apps, types of text annotation in NLP and their effective use cases, text annotation tools, the future of text annotation.

Text annotation is a critical step in preparing data for Natural Language Processing (NLP) systems, which rely heavily on accurately labeled datasets. However, it faces many challenges ranging from data volumes and speed to consistency and data security.

- Volume of Data: NLP projects often require large datasets to be effective. Annotators face the daunting task of labeling vast amounts of text, which can be time-consuming and mentally taxing. For instance, a project aimed at understanding customer sentiment might need to process millions of product reviews. This sheer volume can lead to fatigue, affecting the quality of the annotation.

- Speed of Production: In our fast-paced digital world, the speed at which text data is produced and needs to be processed is staggering. Social media platforms generate enormous amounts of data daily. Annotators are under pressure to work quickly, which can sometimes compromise the accuracy and depth of annotation. This need for speed can also lead to burnout among annotators.

- Resource Intensiveness: Text annotation is often a time-consuming and labor-intensive process. It requires a significant amount of human effort, which can be costly and inefficient, especially for large datasets.

- Scalability: As the amount of data increases, scaling the annotation process efficiently while maintaining quality is a major challenge. Automated tools can help, but they often require human validation to ensure accuracy.

- Ambiguity in Language: Natural language is inherently ambiguous and context-dependent. Capturing the correct meaning, especially in cases of idiomatic expressions, sarcasm, or context-specific usage, can be difficult. This ambiguity can lead to challenges in ensuring that the annotations accurately reflect the intended meaning.

- Language and Cultural Diversity: Dealing with multiple languages and cultural contexts increases the complexity of annotation. It’s challenging to ensure that annotators understand the nuances of different languages and cultural references.

- Domain-Specific Knowledge: Certain NLP applications require domain-specific knowledge (such as legal, medical, or technical fields). Finding annotators with the right expertise can be difficult and expensive.

- Annotation Guidelines and Standards: Developing clear, comprehensive annotation guidelines is crucial for consistency. These guidelines must be regularly updated and annotators adequately trained, which adds to the complexity and costs.

- Subjectivity in Interpretation: Different annotators may interpret the same text differently. Achieving consensus or a standardized interpretation can be challenging.

- Adaptation to Evolving Language: Language is dynamic and constantly evolving. Keeping the annotation process and guidelines up to date with new slang, terminologies, and language usage patterns is an ongoing challenge.

- Human Bias: Annotators, being human, bring their own perspectives and biases to the task. This can affect how the text is interpreted and labeled. For example, in sentiment analysis, what one annotator might label as a negative sentiment, another might view as neutral. This subjectivity can lead to inconsistencies in the dataset, which in turn can skew the NLP model’s learning and outputs.

- Consistency: Maintaining consistency in annotation across different annotators and over time is a significant challenge. Different interpretations of guidelines, varying levels of understanding, and even changes in annotators’ perceptions over time can lead to inconsistent labeling. Inconsistent annotations can confuse the NLP models, leading to poor performance.

- Data Security: Annotators often work with sensitive data, which might include personal information. Ensuring the security and privacy of this data is paramount. Data breaches can have serious consequences, not just for the individuals whose data is compromised, but also for the organizations handling the data. Annotators and their employers must adhere to strict data protection protocols, adding another layer of complexity to their work.
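The subjectivity, bias, and consistency challenges in the list above can be put into numbers. One widely used measure, which this guide does not name and which is offered here only as an illustration, is Cohen's kappa: the agreement between two annotators corrected for the agreement expected by chance. The sketch below assumes two annotators labeled the same six reviews; the labels are made up.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators over the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items.")
    n = len(labels_a)

    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Expected agreement: chance that both pick the same label independently,
    # given how often each annotator uses each label.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Made-up sentiment labels from two hypothetical annotators.
annotator_1 = ["neg", "neg", "pos", "neu", "pos", "neg"]
annotator_2 = ["neg", "neu", "pos", "neu", "pos", "neu"]

kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 2))  # 0.54
```

A value near 1 suggests annotators are applying the guidelines the same way, while a value near 0 means their agreement is about what chance alone would produce, which is a signal to revisit the guidelines or training.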


Popular text annotation techniques

In Natural Language Processing (NLP), the method of text annotation plays a pivotal role in shaping the effectiveness of the technology. Understanding the different text annotation techniques is crucial for selecting the most appropriate method for a given project and for addressing the challenges it typically involves. There are three primary annotation techniques: manual, automated, and semi-automated annotation, each with its own attributes and applications.

By leveraging these different annotation techniques, organizations and researchers can tailor their approach to suit the specific needs and constraints of their NLP projects, balancing factors like accuracy, speed, and cost-effectiveness.
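As a rough illustration of how semi-automated annotation can balance speed and accuracy, the sketch below pre-labels texts with a simple automated rule and sends anything uncertain to a human review queue. The keyword lists, the confidence formula, and the `0.8` threshold are all assumptions made for this example; a real project would more likely use a trained model in place of `auto_label`.

```python
from typing import List, Optional, Tuple

# Hypothetical keyword lexicons standing in for an automated labeler.
POSITIVE = {"great", "love", "excellent"}
NEGATIVE = {"broken", "terrible", "refund"}


def auto_label(text: str) -> Tuple[Optional[str], float]:
    """Return (label, confidence) from keyword counts, or (None, 0.0) if nothing matches."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    if pos + neg == 0:
        return None, 0.0
    # On a tie the label is arbitrary, but confidence is 0.5, so it goes to review.
    label = "positive" if pos >= neg else "negative"
    confidence = max(pos, neg) / (pos + neg)
    return label, confidence


def triage(texts: List[str], threshold: float = 0.8):
    """Accept confident automatic labels; queue everything else for a human annotator."""
    accepted, review_queue = [], []
    for text in texts:
        label, confidence = auto_label(text)
        if label is not None and confidence >= threshold:
            accepted.append((text, label))
        else:
            review_queue.append(text)
    return accepted, review_queue


reviews = [
    "Great phone, I love it!",
    "Arrived broken, I want a refund.",
    "Great screen but terrible battery.",
    "It is a phone.",
]
accepted, review_queue = triage(reviews)
print(accepted)      # the two clear-cut reviews, labeled automatically
print(review_queue)  # the mixed and the neutral review, left for a person
```

Raising the threshold shifts more work to human annotators and lowering it shifts more to automation, which is the accuracy, speed, and cost trade-off described above.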


Process of annotating text for NLP

Text annotation in NLP is a systematic process in which raw text data is methodically labeled to identify specific linguistic elements, such as entities, sentiments, and syntactic structures. This process not only aids in the training of NLP models, but also significantly improves their ability to understand and process natural language. The stages in this process, from data collection to building an effective annotation team, are crucial for ensuring high-quality data annotation and, consequently, superior model performance in NLP applications.

This comprehensive table encapsulates the entire process of text annotation for NLP, providing a clear roadmap from the initial stages of data collection to the integration of annotated data with machine learning models.

How does the HITL (Human-in-the-Loop) approach help?

The Human-in-the-Loop (HITL) approach significantly enhances AI-driven data annotation by integrating human expertise into the AI workflow, thereby ensuring greater accuracy and quality. This collaborative technique addresses the limitations of AI, enabling it to navigate complex data more effectively. Key benefits of the HITL approach in text annotation for NLP include:

- Improved Accuracy and Quality: Human experts are better at understanding ambiguous and complex data, allowing them to identify and correct errors that automated systems might overlook. This is particularly beneficial in scenarios involving rare data or languages with limited examples, where machine learning algorithms alone may struggle.