- Review Article

- Open access

- Published: 15 January 2018

Impact of remote patient monitoring on clinical outcomes: an updated meta-analysis of randomized controlled trials

- Benjamin Noah 1 , 2 ,

- Michelle S. Keller 1 , 2 , 3 ,

- Sasan Mosadeghi 4 ,

- Libby Stein 1 , 2 ,

- Sunny Johl 1 , 2 ,

- Sean Delshad 1 , 2 ,

- Vartan C. Tashjian 1 , 2 , 5 ,

- Daniel Lew 1 , 2 , 5 ,

- James T. Kwan 1 , 2 ,

- Alma Jusufagic 1 , 2 , 3 &

- Brennan M. R. Spiegel 1 , 2 , 3 , 5 , 6

npj Digital Medicine volume 1, Article number: 20172 (2018)

- Disease prevention

- Health services

- Weight management

An Author Correction to this article was published on 09 April 2018

This article has been updated

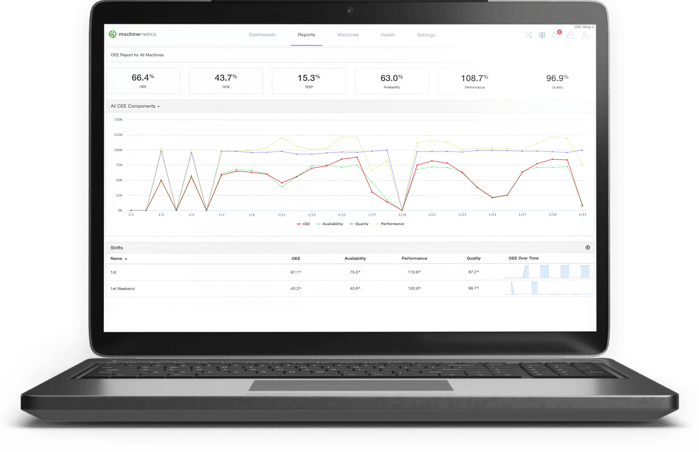

Despite growing interest in remote patient monitoring, limited evidence exists to substantiate claims of its ability to improve outcomes. Our aim was to evaluate randomized controlled trials (RCTs) that assess the effects of using wearable biosensors (e.g. activity trackers) for remote patient monitoring on clinical outcomes. We expanded upon prior reviews by assessing effectiveness across indications and presenting quantitative summary data. We searched for articles from January 2000 to October 2016 in PubMed, reviewed 4,348 titles, selected 777 for abstract review, and 64 for full text review. A total of 27 RCTs from 13 different countries focused on a range of clinical outcomes and were retained for final analysis; of these, we identified 16 high-quality studies. We estimated a difference-in-differences random effects meta-analysis on select outcomes. We weighted the studies by sample size and used 95% confidence intervals (CI) around point estimates. Difference-in-difference point estimation revealed no statistically significant impact of remote patient monitoring on any of six reported clinical outcomes, including body mass index (−0.73; 95% CI: −1.84, 0.38), weight (−1.29; −3.06, 0.48), waist circumference (−2.41; −5.16, 0.34), body fat percentage (0.11; −1.56, 1.34), systolic blood pressure (−2.62; −5.31, 0.06), and diastolic blood pressure (−0.99; −2.73, 0.74). Studies were highly heterogeneous in their design, device type, and outcomes. Interventions based on health behavior models and personalized coaching were most successful. We found substantial gaps in the evidence base that should be considered before implementation of remote patient monitoring in the clinical setting.

Introduction

Wearable biosensors are non-invasive devices used to acquire, transmit, process, store, and retrieve health-related data. 1 Biosensors have been integrated into a variety of platforms, including watches, wristbands, skin patches, shoes, belts, textiles, and smartphones. 2 , 3 Patients have the option to share data obtained by biosensors with their providers or social networks to support clinical treatment decisions and disease self-management. 4

The ability of wearable biosensors to passively capture and track continuous health data gives promise to the field of health informatics, which has recently become an area of interest for its potential to advance precision medicine. 1 The concept of leveraging technological innovations to enhance care delivery has many names in the healthcare lexicon. The terms digital health, mobile health, mHealth, wireless health, Health 2.0, eHealth, quantified self, self-tracking, telehealth, telemedicine, precision medicine, personalized medicine, and connected health are among those that are often used synonymously. 5 A 2005 systematic review uncovered over 50 unique and disparate definitions for the term e-health in the literature. 6 A similar 2007 study found 104 individual definitions for the term telemedicine. 7 For the purpose of this study, we employ the term remote patient monitoring (RPM) and define it as the use of a non-invasive, wearable device that automatically transmits data to a web portal or mobile app for patient self-monitoring and/or health provider assessment and clinical decision-making.

The literature on RPM reveals enthusiasm over its promises to improve patient outcomes, reduce healthcare utilization, decrease costs, provide abundant data for research, and increase physician satisfaction. 2 , 3 , 8 Non-invasive biosensors that allow for RPM offer patients and clinicians real-time data that has the potential to improve the timeliness of care, boost treatment adherence, and drive improved health outcomes. 4 , 9 The passive gathering of data may also permit clinicians to focus their efforts on diagnosing, educating, and treating patients, theoretically improving productivity and efficiency of the care provided. 8 However, despite anecdotal reports of RPM efficacy and growing interest in these new health technologies by researchers, providers, and patients alike, little empirical evidence exists to substantiate claims of its ability to improve clinical outcomes, and our research indicates many patients are not yet interested in or willing to share RPM data with their physicians. 4 A recently published systematic review by Vegesna et al. summarized the state of RPM but provided only a qualitative overview of the literature. 10 In this review, we provide a quantitative analysis of RPM studies to provide clinicians, patients, and health system leaders with a clear view of the effectiveness of RPM on clinical outcomes. Specifically, our study questions were as follows: How effective are RPM devices and associated interventions in changing important clinical outcomes of interest to patients and their clinicians? Which elements of RPM interventions lead to a higher likelihood of success in affecting clinically meaningful outcomes?

We sought to identify randomized controlled trials (RCTs) that assess the effects of using non-invasive, wearable biosensors for RPM on clinical outcomes. Understanding precisely in which contexts biosensors can improve health outcomes is important in guiding research pathways and increasing the effectiveness and quality of care.

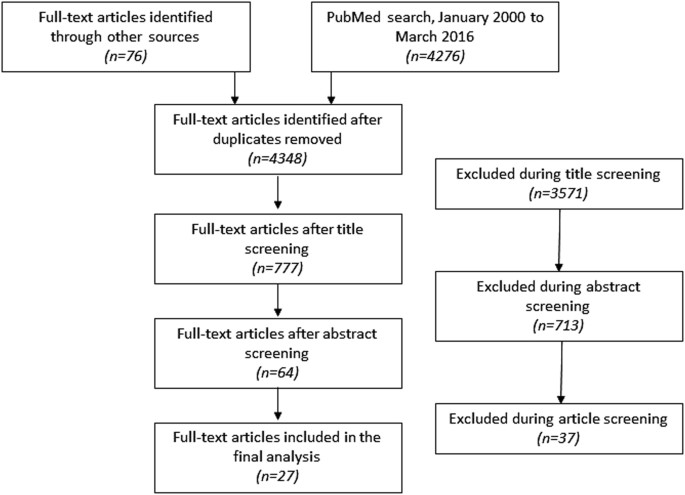

Study selection and data collection

We identified 4348 titles for review (Fig. 1). Of these, we selected 777 for abstract review and 64 for full-text review. A total of 27 studies were retained for final analysis. 11 , 12 , 13 , 14 , 15 , 16 , 17 , 18 , 19 , 20 , 21 , 22 , 23 , 24 , 25 , 26 , 27 , 28 , 29 , 30 , 31 , 32 , 33 , 34 , 35 All studies were RCTs published in peer-reviewed journals.

PRISMA flow diagram of the process used in study selection

Quantitative identifiers

Study details

The 27 studies analyzed had an average study duration of 7.8 months. The study periods ranged from 7 days to 29 months (Table 1). The average sample size was 239 patients and ranged from 40 to 1437 patients. Sixteen studies were determined to be of high quality, with a Jadad score equal to 3. Eleven studies were determined to be of low quality. The mean Jadad score for all 27 studies identified in this review was 2.44 (Table 1). Since it is often not feasible to double-blind interventions with wearable devices, the maximum Jadad score in these trials was 3.

Study outcomes

Eleven studies examined patient populations with cardiovascular disease, including heart failure, arrhythmias, and hypertension. Six studies evaluated patients with pulmonary diseases, including emphysema, asthma, and sleep apnea. Six trials examined overweight or obese patients or tested interventions aimed at increasing physical activity to prevent weight gain. The remaining studies focused on chronic pain, stroke, and Parkinson’s disease (Table 2).

Devices and interventions

RPM devices employed in these studies included blood pressure monitors, ambulatory electrocardiograms, cardiac event recorders, positive airway pressure machines, electronic weight scales, physical activity trackers and accelerometers, spirometers, and pulse oximeters. The control arms of most studies offered education along with standard care but without RPM; however, eight studies used other, similar devices. One study included various types of behavioral economics incentives (either donations to charity or cash incentives) in addition to the biosensors. 36

Twenty-two study interventions contained a feedback loop with a care provider, such as a physician or nurse, who analyzed patient data and communicated back with the patient to modify treatment regimens, improve adherence, or consult. Only five study interventions contained a feedback loop where a care provider was not involved. In those instances, patients logged onto a web portal or mobile app to self-monitor their measurements and view a synthesis of their personal health data.

Qualitative review of high-quality studies

We examined the interventions, theoretical frameworks, and outcomes of the 16 high-quality studies by outcome or disease focus to determine if there were common intervention elements that resulted in greater effects on health and resource outcomes.

Remote patient monitoring for high-acuity patients: chronic obstructive pulmonary disease and heart failure

Five high-quality studies compared RPM with usual care for high-acuity patients with chronic obstructive pulmonary disease (COPD) or heart failure. 11 , 16 , 25 , 31 , 35 In Chau et al., 40 participants with a previous hospitalization and a diagnosis of moderate or severe COPD were randomized to usual care or a telecare device kit that provided patient feedback and was monitored by a community nurse. 11 Although several participants experienced technical problems using the device kit, participants expressed greater engagement in self-management of their COPD overall. Nonetheless, the study found no positive effects in any of the primary outcomes when compared to usual care. As the authors note, the study was underpowered and had a short follow-up period of 2 months. In De San Miguel et al., the intervention was similar: 80 participants received telehealth equipment that monitored vital signs daily and was observed by a telehealth nurse. 25 Patients in the intervention group experienced reductions in hospitalizations, emergency department visits, and length of stay, but none of the reductions were statistically significant when compared to the control group. Even so, the cost savings were $2931 per person, suggesting that a study with more power could potentially see significant cost and utilization savings. Dinesen et al. used a similar study design: 111 participants with COPD were randomized to receive telecare kits or usual care. 31 The study found reductions in hospital admissions and lower costs of admissions in the intervention group, but only the reduction in the mean hospital admission rate was statistically significant. Likewise, Pedone et al. followed 99 participants with COPD randomized to RPM or usual care, and found that the number of exacerbations and exacerbation-related hospitalizations dropped in the intervention group, but neither result was significant. 35 The BEAT-HF trial by Ong et al. followed 1437 participants hospitalized with heart failure who were randomized to RPM or usual care. 16 Centralized nurses actively monitored the RPM data. The researchers found no differences in 180-day all-cause readmissions between the two groups. The four COPD studies demonstrate the promise of RPM for COPD-related hospitalizations and costs; longer follow-up periods and larger sample sizes are needed to determine the full effect of RPM on COPD outcomes. The use of measures such as the Patient Activation Measure 37 in future studies could identify whether factors such as engagement and self-efficacy are important moderators of healthcare outcomes. More evidence is needed to determine whether heart failure is amenable to RPM; these patients may require more intensive follow-up care and may not be the ideal target population for RPM.

Remote patient monitoring for chronic disease: hypertension

Two high-quality studies focused on hypertension. 15 , 23 Kim et al. examined 374 patients randomized to (1) home blood pressure monitoring, (2) remote monitoring using a wireless blood pressure cuff with clinician follow-up, or (3) remote monitoring without clinician follow-up. 23 There were no differences observed in the primary endpoint, sitting systolic blood pressure, in the three groups. However, subjects over 55 years old with remote monitoring (with or without clinician follow-up) experienced significant decreases in the adjusted mean sitting systolic blood pressure when compared to the control group. These results indicate that for a select group of patients, RPM could be effective in hypertension treatment. Logan et al. provided home blood pressure telemonitoring with self-care messages on a smartphone after each reading for patients in the intervention group. 15 Messages were tailored based on care pathways defined by running averages of blood pressure measurements. Physicians were alerted if patients’ blood pressure crossed specific pre-set thresholds and regular feedback was provided to patients and clinicians. Systolic blood pressure decreased in the intervention group; however, self-care smartphone-based support also appeared to worsen depression scores. These studies illustrate that tailored RPM interventions based on care pathways can effectively reduce blood pressure for select groups of patients, but researchers should examine adverse consequences such as depression and other patient-reported outcomes when designing interventions that include continuous monitoring.

Remote patient monitoring for rehabilitation: stroke, Parkinson’s disease, low back pain, and hand function

Four high-quality studies focused on providing feedback regarding various aspects of mobility rehabilitation, including stroke or Parkinson’s disease rehabilitation, low back pain physiotherapy, or hand function physical therapy. 20 , 24 , 27 , 28 Dorsch et al. recruited 135 participants with stroke of any type from 16 rehabilitation centers in 11 countries. 24 All participants wore wireless ankle tri-axial accelerometers while performing conventional rehabilitation exercises; intervention participants received and reviewed augmented feedback with therapists who used the wireless device data, while the control group received standardized verbal feedback from therapists. The researchers found no significant difference in the average daily time spent walking between groups throughout the duration of the trial. The researchers theorized that because participants walked such short amounts of time per day (a mean daily time of eight minutes in the severe group and 12 minutes in the moderate group), there was insufficient time to use the data provided by the wireless devices.

In Ginis et al., 40 participants with Parkinson’s disease undergoing gait training were randomized to home visits from the researcher who provided training on using a smartphone application and ankle-based wireless devices that offered positive and corrective feedback on gait, or an active control, in which they received personalized gait feedback from the same researcher during home visits. 20 Both groups improved on the primary outcomes (single- and dual-task gait speed), but patients using the app and wireless devices improved significantly more on balance and experienced less deterioration over the six-week period.

Kent et al. randomized 112 participants in eight clinics between wearing active wireless motion sensors placed along the spine, and placebo sensors while receiving physical therapy and guideline-based care. 28 Participants received six to eight physical therapy treatment sessions over 10 weeks and were followed for a year. Patients in the intervention group experienced significantly less pain and improved function compared to the control group. Designed as a pilot study, Piga et al. assigned 20 patients with systemic sclerosis or rheumatoid arthritis to use a self-managed hand kinesiotherapy protocol assisted by an RPM device. 27 The device provides both visual and audio feedback on strength-, mobility-, and dexterity-based therapy. The control group received the kinesiotherapy protocol alone. Both groups improved over time, but there was no statistically significant difference in primary outcomes between the two groups. The researchers found, however, that measured adherence to the home-based RPM therapy was very high (90%). These four studies demonstrate mixed results on the use of RPM in rehabilitation but suggest potential insights. First, RPM is most useful in settings where there are clearly defined opportunities to use the data to change clinical care. For example, in the study examining stroke rehabilitation, participants did not walk enough throughout the day to effectively use the feedback. The study examining Parkinson’s disease rehabilitation, however, provided ample opportunities for participants to use the feedback over a six-week period, and participants saw important changes in clinical outcomes. Second, adherence to home-based rehabilitation therapy might be an important process outcome that could be included in future studies. Finally, using placebo sensors such as those used in Kent et al. is an important way to increase the validity and reliability of these studies.

Remote patient monitoring for increasing physical activity: overweightness and obesity

Five studies examined whether RPM could increase physical activity and combined activity monitors with a variety of behavioral interventions, including text messaging, personalized coaching, group-based behavior therapy, or cash- or charity-based incentives. In Wang et al., 67 participants who were overweight or obese were randomly assigned to wear a Fitbit One activity tracker alone or to wear the activity tracker combined with receiving physical activity prompts three times a day via text messages. 32 The researchers found that both groups wearing the Fitbit devices saw a small increase in moderate-to-vigorous physical activity. Participants receiving the automatic text message prompts saw a small additional increase in activity that lasted only one week. Shuger et al. randomized 197 overweight or obese participants between four groups: (1) a control group that received a self-directed weight loss program via a manual, (2) a group that participated in a group-based behavioral weight loss program, (3) a group that received an armband (the SenseWear Armband) that monitored energy balance, daily energy expenditure, and energy intake, and (4) a group that received the armband and the group-based behavioral program. 19 The group receiving the armband and group-based behavioral health intervention was the only one that achieved significant weight loss at nine months compared to the control group. Finkelstein et al. employed a behavioral economics study design, randomizing 800 participants from 13 companies in Singapore to one of four groups: (1) the control group, (2) Fitbit Zip activity tracker alone, (3) Fitbit Zip plus charity incentives, or (4) Fitbit Zip plus cash incentives. 36 At 12 months, the Fitbit-only group and the Fitbit plus charity incentives group outperformed the control group and the Fitbit plus cash incentive group. The group receiving cash incentives saw a reduction in moderate-to-vigorous physical activity when compared to the control group.

Four hundred and seventy-one overweight and obese participants in Jakicic et al. received a low-calorie diet, prescription for physical activity, text message prompts, group counseling sessions, telephone counseling sessions, and access to materials on a website; the enhanced intervention group also received an activity tracker (FIT Core) that displayed data via the device interface or a website. 38 The group that used the activity tracker lost less weight than the non-tracker group. Finally, in Wijsman et al., 235 participants aged 60–70 years without diabetes were randomized to the intervention group or a waitlist control group. 17 Participants in the intervention group received a commercially available physical activity program (Philips DirectLife) based on the stages of change and I-change health behavior change models. The program includes an accelerometer-based activity tracker, a personal website, and a personal e-coach who provides support via email. After 13 weeks, daily physical activity increased significantly, and weight, waist circumference, and fat mass decreased significantly more in the intervention group compared to the control group. The results from these five physical activity studies suggest plausible directions for understanding whether and how activity trackers can motivate behavior change. Cash incentives proved to be less effective than charity incentives, and automated, non-personalized text messages were also largely ineffective. Successful interventions combined RPM with several evidence-based components, including personalized coaching or group-based programs, or were grounded in validated behavior change models.

Data analysis

For the meta-analysis, we created six groups of outcomes that had three or more studies, including: body mass index (BMI), weight, waist circumference, body fat percentage, systolic blood pressure, and diastolic blood pressure. There were no groupings found among the binary variables, so they were not included in the meta-analysis. In total, the meta-analysis included eight of the 27 studies.
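The abstract describes the pooled effects as difference-in-differences estimates. As a minimal sketch of what that means for a single study, the snippet below subtracts the control arm's change from baseline from the intervention arm's change from baseline. The `ArmMeans` class, the function name, and the BMI values are hypothetical illustrations, not data or code from any included trial.

```python
from dataclasses import dataclass


@dataclass
class ArmMeans:
    """Mean outcome for one study arm at baseline and at final follow-up."""
    baseline: float
    final: float

    @property
    def change(self) -> float:
        # Change from baseline within the arm.
        return self.final - self.baseline


def difference_in_differences(intervention: ArmMeans, control: ArmMeans) -> float:
    """Intervention-arm change minus control-arm change."""
    return intervention.change - control.change


# Hypothetical BMI means (kg/m^2), chosen only to illustrate the arithmetic.
rpm_arm = ArmMeans(baseline=31.0, final=29.5)
usual_care_arm = ArmMeans(baseline=30.8, final=30.2)

effect = difference_in_differences(rpm_arm, usual_care_arm)
print(f"Difference-in-differences effect: {effect:.2f}")
```

A negative value here means the outcome fell more in the intervention arm than in the control arm. The sketch covers only the point estimate; the variance of a change score also depends on the within-patient correlation, which is not shown.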

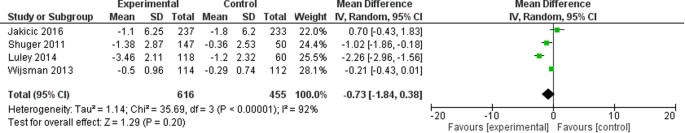

Body mass index (BMI)

Four studies 17 , 19 , 33 , 38 reported baseline and final outcome data for both intervention and control groups for BMI. The total aggregated calculation included 455 control patients and 616 intervention patients (Fig. 2). The meta-analysis yielded a mean difference point estimate of −0.73 (95% confidence interval: [−1.84, 0.38]), indicating no statistically significant difference in BMI change between the experimental and control arms at the 95% confidence level. The I² statistic was 92% (95% confidence interval: [83%, 96%]), illustrating a high degree of heterogeneity.

Point estimates of the mean difference for each study (green squares) and the corresponding 95% Confidence Intervals (horizontal black lines) are shown, with the size of the green square representing the relative weight of the study. The black diamond represents the overall pooled estimate, with the tips of the diamond representing the 95% Confidence Intervals
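To make the pooled estimate and the I² statistic concrete, here is a minimal sketch of random-effects pooling using the DerSimonian-Laird estimator with inverse-variance weights. This is an assumption for illustration: the article states that studies were weighted by sample size and does not name its estimator, so this is not necessarily the authors' exact procedure. The effect sizes and variances are hypothetical.

```python
import math


def random_effects_pool(effects, variances, z_crit=1.96):
    """Pool study effects with the DerSimonian-Laird random-effects model.

    effects   -- per-study mean differences
    variances -- per-study sampling variances of those differences
    """
    k = len(effects)
    # Fixed-effect (inverse-variance) weights and pooled mean.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q measures dispersion of study effects around the fixed mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1

    # Between-study variance tau^2, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each study's variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))

    # I^2: share of total variability attributed to heterogeneity, in percent.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    return {
        "pooled": pooled,
        "ci": (pooled - z_crit * se, pooled + z_crit * se),
        "tau2": tau2,
        "i2": i2,
    }


# Hypothetical mean differences and variances for four studies.
result = random_effects_pool(
    effects=[-2.0, 0.1, -1.5, 0.4],
    variances=[0.04, 0.09, 0.16, 0.05],
)
print(result)
```

With widely scattered study effects like these, the between-study variance dominates, I² is high, and the pooled confidence interval widens enough to include zero, which mirrors the pattern reported for the anthropometric outcomes.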

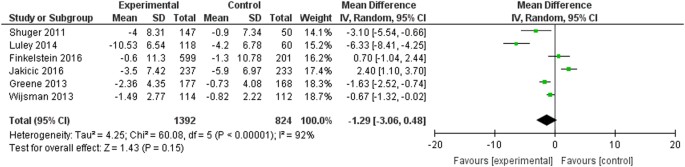

Weight

Six studies 17 , 19 , 30 , 33 , 36 , 38 reported data for both intervention and control groups for weight. The meta-analysis calculation was based on 824 control patients and 1392 intervention patients (Fig. 3). The meta-analysis yielded a mean difference point estimate of −1.29 (95% confidence interval: [−3.06, 0.48]), indicating no statistically significant difference. The I² statistic was 92% (95% confidence interval: [85%, 96%]), illustrating a high degree of heterogeneity.

Point estimates of the mean difference for each study (green squares) and the corresponding 95% Confidence Intervals (horizontal lines) are shown, with the size of the green square representing the relative weight of the study. The black diamond represents the overall pooled estimate, with the tips of the diamond representing the 95% Confidence Intervals
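A reported point estimate and 95% confidence interval are enough to recover an approximate standard error and p-value, which shows why an interval spanning zero is read as non-significant. The sketch below assumes a symmetric, normal-approximation interval (an assumption, since the article does not state how its intervals were constructed) and applies it to the pooled weight result reported above. The function name is hypothetical.

```python
import math


def summarize_reported_ci(estimate, lower, upper, z_crit=1.96):
    """Back-calculate SE, z, and two-sided p from a symmetric normal CI."""
    midpoint = (lower + upper) / 2          # should match the point estimate
    se = (upper - lower) / (2 * z_crit)     # half-width divided by 1.96
    z = estimate / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return {
        "midpoint": midpoint,
        "se": se,
        "z": z,
        "p_value": p_value,
        "includes_zero": lower <= 0 <= upper,
    }


# Pooled weight result as reported in the text: -1.29 (95% CI: -3.06, 0.48).
weight = summarize_reported_ci(estimate=-1.29, lower=-3.06, upper=0.48)
print(weight)
```

The interval midpoint reproduces the reported estimate, and the implied p-value exceeds 0.05, consistent with the statement that the difference is not statistically significant.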

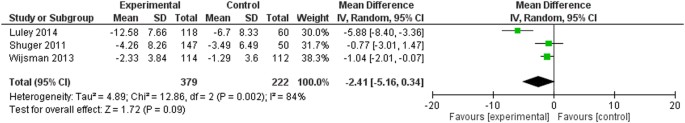

Waist circumference

Three studies 17 , 19 , 33 reported data for both intervention and control groups for waist circumference, with a total of 222 control patients and 379 intervention patients (Fig. 4). The meta-analysis yielded a mean difference point estimate of −2.41 (95% confidence interval: [−5.16, 0.34]), indicating no statistically significant difference. The I² statistic was 84% (95% confidence interval: [51%, 95%]), illustrating a moderate to high degree of heterogeneity.

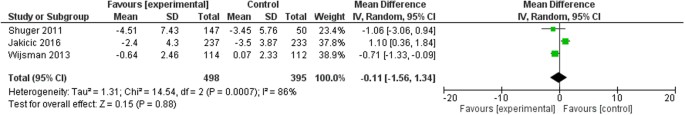

Body fat percentage

Three studies 17 , 19 , 38 reported data for both intervention and control groups for body fat percentage. There were a total of 395 control patients and 498 intervention patients (Fig. 5). The meta-analysis yielded a mean difference point estimate of 0.11 (95% confidence interval: [−1.56, 1.34]), indicating no statistically significant difference. The I² statistic was 86% (95% confidence interval: [59%, 95%]), illustrating a moderate to high degree of heterogeneity.

Point estimates of the mean difference for each study (green squares) and the corresponding 95% confidence intervals (horizontal lines) are shown, with the size of the green square representing the relative weight of the study. The black diamond represents the overall pooled estimate, with the tips of the diamond representing the 95% Confidence Intervals

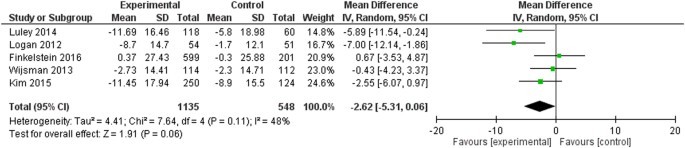

Systolic blood pressure

Five studies 15 , 17 , 19 , 23 , 33 , 36 reported data for both intervention and control groups for systolic blood pressure, with a total of 548 control patients and 1135 intervention patients (Fig. 6). The meta-analysis yielded a mean difference point estimate of −0.99 (95% confidence interval: [−2.73, 0.74]), indicating no statistically significant difference. The I² statistic was 44% (95% confidence interval: [0%, 81%]), illustrating an uncertain degree of heterogeneity, since the interval spans low to high values.

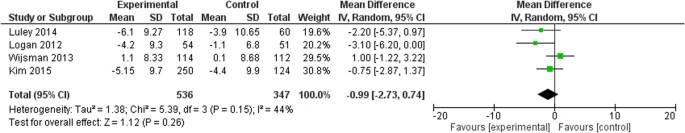

Diastolic blood pressure

Four studies 15 , 17 , 23 , 33 reported data for both intervention and control groups for diastolic blood pressure, with a total of 347 control patients and 536 intervention patients (Fig. 7). The meta-analysis yielded a mean difference point estimate of −0.74 (95% confidence interval: [−2.34, 0.86]), indicating no statistically significant difference. The I² statistic was 28% (95% confidence interval: [0%, 73%]), illustrating an uncertain degree of heterogeneity, since the interval spans low to high values.

Based on our systematic review and examination of high-quality studies on RPM, we found that remote patient monitoring showed early promise in improving outcomes for patients with select conditions, including obstructive pulmonary disease, Parkinson’s disease, hypertension, and low back pain. Interventions aimed at increasing physical activity and weight loss using various activity trackers showed mixed results: cash incentives and automated text messages were ineffective, whereas interventions based on validated health behavior models, care pathways, and tailored coaching were the most successful. However, even within these interventions, certain populations appeared to benefit more from RPM than others. For example, only adults over 55 years of age saw benefits from RPM in one hypertension study. Future studies should be powered to analyze sub-populations to better understand when and for whom RPM is most effective.

For the meta-analyses, we examined six different outcomes (BMI, weight, waist circumference, body fat percentage, systolic blood pressure, and diastolic blood pressure), and found no statistically significant differences between the use of RPM devices and controls with regard to any of these outcomes. However, we were limited by high heterogeneity and scarcity of high-quality studies. The high degree of heterogeneity is likely due to differences in the types of devices used, follow-up periods, and the types of controls in each study. In summary, our results indicate that while some RPM interventions may prove to be promising in changing clinical outcomes in the future, there are still large gaps in the evidence base. Of note, we found that many currently available consumer products have not yet been tested in RCTs with clinically meaningful outcomes. Although some consumer-facing digital health products may be effective for promoting behavior change, there is currently a dearth of evidence that these devices achieve health benefits; more research is needed in this field. Patients, clinicians, and health system leaders should proceed with caution before implementing and using RPM to reliably change clinical outcomes.

Future research should identify and remedy potential barriers to RPM effectiveness on clinical outcomes. For example, factorial design trials should evaluate variants of an RPM intervention in terms of frequency, duration, intensity, and timing. We also found that there are few large-scale clinical trials demonstrating a clinically meaningful impact on patient outcomes. Only one study identified in this review had a sample size of more than 1000 patients; most studies included fewer than 200 patients. Additionally, most studies had relatively short follow-up periods. Given that many of these studies were described as pilot studies, it is clear that the field of RPM is relatively new and evolving. Larger studies with multiple intervention groups will be able to better distinguish which components are most effective and whether behavior change can be sustained over time using RPM.

Future studies would also highly benefit from a mixed-methods approach in which both patients and clinicians are interviewed. Adding a qualitative component would give researchers insight into which RPM elements best engage and motivate patients, nurses, allied health workers, and physicians. Behavior change is complex; understanding how and if specific devices and device-related interventions and incentives motivate health behavior change is an important area that is still not well understood. For example, previous studies have found that most devices result in only short-term changes in behavior and motivation. 39 Activity trackers have been found to change behavior for only approximately three to six months. 40 Studies in this review found that cash incentives performed worse than charity incentives, illustrating that incentivizing individuals is complex and nuanced. Gaining a better understanding of how individuals interface with these health-related technologies will assist in developing evidence-based devices that have the potential to change behavior over longer periods of time.

One of the challenges of this review was the relatively broad survey into the effectiveness of RPM on clinical outcomes. This broad approach allowed us to examine the similarities among interventions targeted at different conditions, but also made it difficult to combine results among studies using different devices and associated interventions. Additional limitations of this study include the use of one primary database, PubMed, to identify articles. However, we examined review articles to identify potential studies that may be listed in other databases. Additionally, the study question focused solely on non-invasive wearable devices and excluded invasive devices such as glucose sensors, on which there have been many studies. The scope of this study included only RCTs with clinically meaningful outcomes. These rigorous search criteria excluded studies without controls or randomization. While non-randomized studies may nonetheless inform the field of RPM, given the risk of selection bias inherent in non-randomized trials, we determined it was optimal to restrict the inclusion criteria to RCTs in this meta-analysis of controlled trials.

An inherent shortcoming of most wearable device studies is difficulty in following double-blind procedures; the intervention arms necessarily include patient engagement or, at minimum, placement of the device on the patient’s body, which can be difficult to blind. Some studies have used devices that were turned off or were non-functional to reduce a potential placebo or Hawthorne effect, 41 but given the data feedback loop integrated into many of these devices, it is extremely difficult to blind the provider receiving the data, which may impact results. Nonetheless, this shortcoming would tend to benefit the active intervention, making it more likely to show a difference in an unblinded study.

For RPM interventions to impact healthcare, they will need to impact outcomes that matter to patients. Examples include patient-reported health-related quality of life (HRQOL), symptom severity, satisfaction with care, resource utilization, hospitalizations, readmissions, and survival. Few data exist on the impact of RPM on these outcome measures. It may strengthen the interventions if they are developed directly in partnership with end-users, i.e. patients themselves. Further research might also emphasize how to personalize RPM interventions, as described by Joseph Kvedar and others. 42 , 43 , 44 This approach seeks to optimize applications and sensors within a biopsychosocial framework. 44 By using validated behavior-based models from the psychological and public health literature that integrate a variety of data, from time of day to step counts, local weather, and levels of depression or anxiety, these tailored applications aim to deliver contextually appropriate messages to patients at the right time and right place. 42 , 43 , 44 , 45 This approach might combine the most successful elements of the effective interventions in this review, including personalized coaching and feedback, in a more cost-effective manner. Additionally, given the pronounced challenges in changing health-related behaviors, incorporating well-researched theoretical frameworks into interventions, such as the Health Belief Model, 46 the Stages of Change Model, 47 or Theory of Reasoned Action/Planned Behavior, 48 may be ultimately more successful than merely improving the technical aspects of RPM.

Study identification

We performed a systematic review of PubMed from January 2000 to October 2016 to identify RCTs that assessed clinical outcomes related to the use of non-invasive wearable biosensors versus a control condition. The subject headings and key words incorporated into the search strategy included:

(“biosensing techniques”[MeSH Terms] OR “Remote sensing technology”[MeSH] OR “remote sensing”[text word] OR “On body sensor”[text word] OR Biosensor*[text word] OR “Wearable device”[text word] OR “Constant health monitoring”[text word] OR “Wireless technology”[text word] OR “wearable sensor”[text word] OR “wearable”[text word] OR “medical sensor”[text word] OR “Body Sensor”[text word] OR “Passive monitor”[text word] OR “wireless monitor”[text word] OR “monitoring device”[text word] OR “wireless sensor”[text word]) AND (hasabstract[text] OR English[lang]) AND (“Clinical Trial”[Publication Type] OR “Randomized Controlled Trial”[Publication Type] OR “randomized”[tiab] OR “placebo”[tiab] OR “therapy”[sh] OR randomly[tiab] OR trial[tiab] OR groups[tiab]) NOT (“animals”[MeSH] NOT “humans”[MeSH]).

After an initial review of our search yield, we added the following subject headings and key words:

(“Remote monitoring”[text word] OR “Remote patient monitoring”[text word] OR “self-monitoring”[text word] OR “self-tracking”[text word] OR “remote tracking”[text word] OR “home monitoring”[text word] OR “wireless monitoring”[text word] OR “online monitoring”[text word] OR “online tracking”[text word] OR “telemonitoring”[text word] OR “ambulatory monitoring”[text word]) AND (“e-health”[text word] OR “m-health”[text word] OR “mobile”[text word] OR “mobile health”[text word] OR “telehealth”[text word] OR “telemedicine”[text word] OR “digital health”[text word] OR “digital medicine”[text word] OR ((“smartphone”[MeSH Terms] OR “smartphone”[All Fields]) AND text[All Fields] AND word[All Fields]) OR “social network”[text word] OR “Web based”[text word] OR “online portal”[text word] OR “internet based”[text word] OR “cell phone”[text word] OR “mobile phone”[text word]).

Additionally, we consulted references from a previous systematic review. 10

Study selection and data extraction

We assessed all titles for relevance and rejected titles if they fulfilled pre-specified exclusion criteria (Table 3). Eight trained investigators independently screened titles in pairs. To ensure high inter-rater reliability, we calculated Fleiss’ kappa, a measure of the degree of consistency between two or more raters, and aimed for a kappa higher than 0.85. 49 For studies identified in the second review process, a second independent review was performed. Differences regarding inclusion and exclusion criteria were resolved through consensus. We followed a similar method to review abstracts for all studies that passed the title screening stage, and included any study that met all of the abstract inclusion criteria (Table 3).
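As an illustration of the agreement statistic named above, the following is a minimal sketch of Fleiss' kappa for a title-screening task. The function name `fleiss_kappa` and the example counts are hypothetical and are not taken from the review; the formula is the standard one, and the sketch assumes every title is rated by the same number of raters.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for a table where counts[i][j] is the number of
    raters who assigned item i to category j."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    n_categories = len(counts[0])

    # Share of all ratings that fall in each category.
    p_j = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    # Observed agreement for each item, then averaged over items.
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_i) / n_items
    # Agreement expected by chance.
    p_e = sum(p * p for p in p_j)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when all ratings share one category")
    return (p_bar - p_e) / (1.0 - p_e)


# Hypothetical screening by one pair of raters; columns are [include, exclude].
example_counts = [[2, 0]] * 4 + [[0, 2]] * 5 + [[1, 1]]
example_kappa = fleiss_kappa(example_counts)
print(round(example_kappa, 3))
```

In this made-up example the pair disagrees on one of ten titles, which would fall short of a 0.85 target and prompt a consensus discussion.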

Data abstraction and data management

Each study was jointly abstracted for data by two reviewers and the results were entered into a standardized abstraction form. For each study, the reviewers extracted data about the targeted disease state, device type, control intervention, clinically relevant outcomes, type of feedback loop, descriptive information of subjects, and study design. For the analysis, we examined only continuous variables.

For continuous variables, we used a difference-in-differences model to assess relative change between the baseline measure and final measure for control and treatment groups. If a study did not provide baseline data, we emailed the respective authors and requested the data. If we did not receive a reply or the authors did not have baseline data, we excluded the study from this analysis.
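The difference-in-differences idea can be sketched in a few lines: the change from baseline in the control arm is subtracted from the change from baseline in the treatment arm. The function name and the numbers below are hypothetical and serve only to show the arithmetic.

```python
def difference_in_differences(
    treat_baseline: float,
    treat_final: float,
    ctrl_baseline: float,
    ctrl_final: float,
) -> float:
    """Change in the treatment arm minus change in the control arm."""
    treat_change = treat_final - treat_baseline
    ctrl_change = ctrl_final - ctrl_baseline
    return treat_change - ctrl_change


# Hypothetical mean body weight in kg at baseline and at final follow-up.
did = difference_in_differences(
    treat_baseline=90.0, treat_final=87.5,
    ctrl_baseline=89.0, ctrl_final=88.2,
)
print(round(did, 2))
```

A negative value here means the treatment arm lost more weight than the control arm over the same period.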

We standardized all studies to provide the change from baseline mean and standard deviation for both the experimental and control arms. If a study reported only standard errors, p-values, or confidence intervals, we converted these to standard deviations (see Appendix). If a study did not provide a standard deviation or any of the three statistics mentioned above, we contacted the primary author, as explained above, and excluded the study from this analysis if they could not provide that information. Many of the identified studies used more than one experimental arm; we followed methods from Cochrane to combine these arms into one larger group (see Appendix). 50 We directionally corrected all signs and adjusted any differences in units of calculation (e.g. lbs vs. kg).
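The Appendix is not reproduced here, so the following is only a sketch of the kind of standardization described, using the formulas given in the Cochrane Handbook as we understand them: recovering a standard deviation from a standard error or a 95% confidence interval (large-sample normal approximation), and merging two arms into one group. All function names are hypothetical, and conversion from p-values is not covered.

```python
import math
from typing import Tuple

KG_PER_LB = 0.45359237  # exact definition of the international pound


def sd_from_se(se: float, n: int) -> float:
    """Standard deviation from the standard error of a single-group mean."""
    return se * math.sqrt(n)


def sd_from_ci95(lower: float, upper: float, n: int) -> float:
    """Standard deviation from a 95% CI around a single-group mean.
    Assumes a normal approximation (CI width = 2 * 1.96 * SE), which is
    less accurate for small samples."""
    return math.sqrt(n) * (upper - lower) / 3.92


def combine_groups(
    n1: int, m1: float, sd1: float, n2: int, m2: float, sd2: float
) -> Tuple[int, float, float]:
    """Merge two arms into one group: returns (n, mean, sd)."""
    n = n1 + n2
    mean = (n1 * m1 + n2 * m2) / n
    variance = (
        (n1 - 1) * sd1 ** 2
        + (n2 - 1) * sd2 ** 2
        + (n1 * n2 / n) * (m1 - m2) ** 2
    ) / (n - 1)
    return n, mean, math.sqrt(variance)


def lbs_to_kg(pounds: float) -> float:
    """Convert a weight outcome reported in pounds to kilograms."""
    return pounds * KG_PER_LB


# Hypothetical example: two intervention arms merged into one group.
print(combine_groups(3, 2.0, 1.0, 4, 5.5, math.sqrt(5 / 3)))
```

The combined standard deviation includes a term for the gap between the two arm means, so it matches what would be obtained by pooling the individual participants.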

Given the heterogeneity of the interventions and outcomes, we grouped the outcome variables into separate groups for analysis (e.g. cholesterol, blood pressure). This process was jointly completed by two reviewers, with any disagreements discussed with a third-party arbiter.

Statistical analyses

We used Review Manager (Review Manager [RevMan] Version 5.3. Copenhagen: The Nordic Cochrane Centre, The Cochrane Collaboration, 2014) to conduct a difference-in-differences random effects analysis. We chose this approach to help control for the many differences among the studies and to limit heterogeneity. We weighted the studies by sample size and used 95% confidence intervals around our point estimates. We also assessed heterogeneity using the I² statistic and calculated the 95% confidence intervals using the standard methods described by Higgins et al. 51 We did not perform tests for funnel plot asymmetry to examine publication bias, given that this type of analysis is not recommended for meta-analyses with fewer than 10 studies. 52
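To make the pooling step concrete, here is a minimal sketch of a random effects meta-analysis with the I² statistic. It uses the DerSimonian-Laird estimator with inverse-variance weights, which is an assumption on our part about how such an analysis is typically configured and not a reproduction of the RevMan run. The function names and example inputs are hypothetical.

```python
import math
from typing import Dict, Sequence


def mean_difference_variance(sd_t: float, n_t: int, sd_c: float, n_c: int) -> float:
    """Variance of the difference between two independent arm means."""
    return sd_t ** 2 / n_t + sd_c ** 2 / n_c


def random_effects_pool(
    effects: Sequence[float], variances: Sequence[float]
) -> Dict[str, float]:
    """DerSimonian-Laird random effects pooling of per-study effects."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")

    # Fixed-effect (inverse-variance) estimate, needed for Cochran's Q.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1

    # Between-study variance, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # Random effects weights add tau2 to each within-study variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))

    # I^2: share of total variation attributed to heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return {
        "pooled": pooled,
        "ci_low": pooled - 1.96 * se,
        "ci_high": pooled + 1.96 * se,
        "tau2": tau2,
        "i2": i2,
    }


# Hypothetical difference-in-differences estimates from three studies.
result = random_effects_pool([-1.2, -0.4, -2.0], [0.30, 0.25, 0.60])
print({key: round(value, 2) for key, value in result.items()})
```

A pooled confidence interval that spans zero would correspond to the "no statistically significant impact" reading used for the outcomes in this review.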

Strength of the body of evidence

We assigned a score for methodological quality by applying the Jadad scale, 53 a commonly used instrument for measuring the quality of randomized controlled trials. The score awards points for appropriate randomization, presence of concealed allocation, adequacy of double blinding, appropriateness of blinding technique, and documentation of withdrawals and dropouts. The score ranges from 0 to 5, where a score of ≥3 denotes “high quality” based on the original validation studies. We measured inter-rater agreement for each step with a κ statistic, and adopted a threshold of ≥0.7 as the definition for acceptable agreement. Disagreements were adjudicated by discussion and consensus between the two primary reviewers and a third-party arbiter.
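The scoring logic can be sketched as follows. The item list follows the commonly described five-point Jadad scale (randomization, double blinding, and withdrawals, with a bonus or penalty for the method used), which is an assumption and may not match exactly how the reviewers operationalized each item. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class JadadAnswers:
    randomized: bool
    # True = method described and appropriate, False = inappropriate,
    # None = method not described.
    randomization_method_appropriate: Optional[bool]
    double_blind: bool
    blinding_method_appropriate: Optional[bool]
    withdrawals_described: bool


def _item_score(mentioned: bool, method_appropriate: Optional[bool]) -> int:
    """One point if mentioned, plus or minus one for the method used."""
    if not mentioned:
        return 0
    if method_appropriate is True:
        return 2
    if method_appropriate is False:
        return 0
    return 1


def jadad_score(answers: JadadAnswers) -> int:
    """Total score between 0 and 5."""
    score = _item_score(answers.randomized, answers.randomization_method_appropriate)
    score += _item_score(answers.double_blind, answers.blinding_method_appropriate)
    score += 1 if answers.withdrawals_described else 0
    return score


def is_high_quality(answers: JadadAnswers) -> bool:
    """Apply the >= 3 threshold used in this review."""
    return jadad_score(answers) >= 3


# Hypothetical unblinded trial with sound randomization and reported dropouts.
unblinded_trial = JadadAnswers(True, True, False, None, True)
print(jadad_score(unblinded_trial), is_high_quality(unblinded_trial))
```

Under this scheme an unblinded wearable-device trial can still reach the high-quality threshold, but only if randomization and dropout reporting are both handled well.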

Data availability

The data used in this study was manually abstracted from the 27 studies identified in the systematic review. The meta-analysis used data from 8 of those 27 studies, which are referenced at the relevant points in the paper.

Change history

09 April 2018

A correction to this article has been published and is linked from the HTML version of this article.

References

1. Andreu-Perez, J., Leff, D. R., Ip, H. M. & Yang, G. Z. From wearable sensors to smart implants—toward pervasive and personalized healthcare. IEEE Trans. Biomed. Eng. 62, 2750–2762 (2015).

2. Ajami, S. & Teimouri, F. Features and application of wearable biosensors in medical care. J. Res. Med. Sci. 20, 1208–1215 (2015).

3. Steinhubl, S. R., Muse, E. D. & Topol, E. J. The emerging field of mobile health. Sci. Transl. Med. 7, 283rv283 (2015).

4. Pevnick, J. M., Fuller, G., Duncan, R. & Spiegel, B. M. R. A large-scale initiative inviting patients to share personal fitness tracker data with their providers: initial results. PLoS ONE 11, e0165908 (2016).

5. Atallah, L., Lo, B. & Yang, G. Z. Can pervasive sensing address current challenges in global healthcare? J. Epidemiol. Glob. Health 2, 1–13 (2012).

6. Banaee, H., Ahmed, M. U. & Loutfi, A. Data mining for wearable sensors in health monitoring systems: a review of recent trends and challenges. Sens. 13, 17472–17500 (2013).

7. Dobkin, B. H. & Dorsch, A. The promise of mHealth: daily activity monitoring and outcome assessments by wearable sensors. Neurorehabil. Neural Repair. 25, 788–798 (2011).

8. Oh, H., Rizo, C., Enkin, M. & Jadad, A. What is eHealth (3): a systematic review of published definitions. J. Med. Internet Res. 7, e1 (2005).

9. Sood, S. et al. What is telemedicine? A collection of 104 peer-reviewed perspectives and theoretical underpinnings. Telemed. J. E. Health 13, 573–590 (2007).

10. Vegesna, A., Tran, M., Angelaccio, M. & Arcona, S. Remote patient monitoring via non-invasive digital technologies: a systematic review. Telemed. J. E. Health 23, 3–17 (2017).

11. Chau, J. P. et al. A feasibility study to investigate the acceptability and potential effectiveness of a telecare service for older people with chronic obstructive pulmonary disease. Int. J. Med. Inform. 81, 674–682 (2012).

12. Bloss, C. S. et al. A prospective randomized trial examining health care utilization in individuals using multiple smartphone-enabled biosensors. PeerJ 4, e1554 (2016).

13. Scalvini, S. et al. Cardiac event recording yields more diagnoses than 24-hour Holter monitoring in patients with palpitations. J. Telemed. Telecare. 11, 14–16 (2005).

14. Ryan, D. et al. Clinical and cost effectiveness of mobile phone supported self monitoring of asthma: multicentre randomised controlled trial. BMJ 344, e1756 (2012).

15. Logan, A. G. et al. Effect of home blood pressure telemonitoring with self-care support on uncontrolled systolic hypertension in diabetics. Hypertension 60, 51–57 (2012).

16. Ong, M. K. et al. Effectiveness of remote patient monitoring after discharge of hospitalized patients with heart failure: the better effectiveness after transition-heart failure (BEAT-HF) randomized clinical trial. JAMA Intern. Med. 176, 310–318 (2016).

17. Wijsman, C. A. et al. Effects of a web-based intervention on physical activity and metabolism in older adults: randomized controlled trial. J. Med. Internet Res. 15, e233 (2013).

18. Pedone, C., Rossi, F. F., Cecere, A., Costanzo, L. & Antonelli Incalzi, R. Efficacy of a physician-led multiparametric telemonitoring system in very old adults with heart failure. J. Am. Geriatr. Soc. 63, 1175–1180 (2015).

19. Shuger, S. L. et al. Electronic feedback in a diet- and physical activity-based lifestyle intervention for weight loss: a randomized controlled trial. Int. J. Behav. Nutr. Phys. Act. 8, 41 (2011).

20. Ginis, P. et al. Feasibility and effects of home-based smartphone-delivered automated feedback training for gait in people with Parkinson’s disease: a pilot randomized controlled trial. Park. Relat. Disord. 22, 28–34 (2016).

21. Lee, Y. H. et al. Impact of home-based exercise training with wireless monitoring on patients with acute coronary syndrome undergoing percutaneous coronary intervention. J. Korean Med. Sci. 28, 564–568 (2013).

22. Tan, B. Y., Ho, K. L., Ching, C. K. & Teo, W. S. Novel electrogram device with web-based service centre for ambulatory ECG monitoring. Singap. Med. J. 51, 565–569 (2010).

23. Kim, Y. N., Shin, D. G., Park, S. & Lee, C. H. Randomized clinical trial to assess the effectiveness of remote patient monitoring and physician care in reducing office blood pressure. Hypertens. Res. 38, 491–497 (2015).

24. Dorsch, A. K., Thomas, S., Xu, X., Kaiser, W. & Dobkin, B. H. SIRRACT: an international randomized clinical trial of activity feedback during inpatient stroke rehabilitation enabled by wireless sensing. Neurorehabil. Neural Repair. 29, 407–415 (2015).

25. De San Miguel, K., Smith, J. & Lewin, G. Telehealth remote monitoring for community-dwelling older adults with chronic obstructive pulmonary disease. Telemed. J. E. Health 19, 652–657 (2013).

26. Woodend, A. K. et al. Telehome monitoring in patients with cardiac disease who are at high risk of readmission. Heart Lung. 37, 36–45 (2008).

27. Piga, M. et al. Telemedicine applied to kinesiotherapy for hand dysfunction in patients with systemic sclerosis and rheumatoid arthritis: recovery of movement and telemonitoring technology. J. Rheumatol. 41, 1324–1333 (2014).

28. Kent, P., Laird, R. & Haines, T. The effect of changing movement and posture using motion-sensor biofeedback, versus guidelines-based care, on the clinical outcomes of people with sub-acute or chronic low back pain-a multicentre, cluster-randomised, placebo-controlled, pilot trial. BMC Musculoskelet. Disord. 16, 131 (2015).

29. Fox, N. et al. The impact of a telemedicine monitoring system on positive airway pressure adherence in patients with obstructive sleep apnea: a randomized controlled trial. Sleep 35, 477–481 (2012).

30. Greene, J., Sacks, R., Piniewski, B., Kil, D. & Hahn, J. S. The impact of an online social network with wireless monitoring devices on physical activity and weight loss. J. Prim. Care Community Health 4, 189–194 (2013).

31. Dinesen, B. et al. Using preventive home monitoring to reduce hospital admission rates and reduce costs: a case study of telehealth among chronic obstructive pulmonary disease patients. J. Telemed. Telecare. 18, 221–225 (2012).

32. Wang, J. B. et al. Wearable sensor/device (Fitbit One) and SMS text-messaging prompts to increase physical activity in overweight and obese adults: a randomized controlled trial. Telemed. J. E. Health 21, 782–792 (2015).

33. Luley, C. et al. Weight loss by telemonitoring of nutrition and physical activity in patients with metabolic syndrome for 1 year. J. Am. Coll. Nutr. 33, 363–374 (2014).

34. Dansky, K. H., Vasey, J. & Bowles, K. Impact of telehealth on clinical outcomes in patients with heart failure. Clin. Nurs. Res. 17, 182–199 (2008).

35. Pedone, C., Chiurco, D., Scarlata, S. & Incalzi, R. A. Efficacy of multiparametric telemonitoring on respiratory outcomes in elderly people with COPD: a randomized controlled trial. BMC Health Serv. Res. 13, 82 (2013).

36. Finkelstein, E. A. et al. Effectiveness of activity trackers with and without incentives to increase physical activity (TRIPPA): a randomised controlled trial. Lancet Diabetes Endocrinol. https://doi.org/10.1016/S2213-8587(16)30284-4 (2016).

37. Hibbard, J. H., Stockard, J., Mahoney, E. R. & Tusler, M. Development of the patient activation measure (PAM): conceptualizing and measuring activation in patients and consumers. Health Serv. Res. 39, 1005–1026 (2004).

38. Jakicic, J. M. et al. Effect of wearable technology combined with a lifestyle intervention on long-term weight loss: the IDEA randomized clinical trial. JAMA 316, 1161–1171 (2016).

39. Klasnja, P., Consolvo, S. & Pratt, W. In Proc. SIGCHI Conference on Human Factors Computing Systems. 3063–3072 (ACM, Vancouver, BC, Canada, 2011).

40. Shih, P. C., Han, K., Poole, E. S., Rosson, M. B. & Carroll, J. M. Use and adoption challenges of wearable activity trackers. In iConf. Proc. (iSchools, Newport Beach, California, 2015).

41. McCambridge, J., Witton, J. & Elbourne, D. R. Systematic review of the Hawthorne effect: new concepts are needed to study research participation effects. J. Clin. Epidemiol. 67, 267–277 (2014).

42. Agboola, S. et al. Pain management in cancer patients using a mobile app: study design of a randomized controlled trial. JMIR Res. Protoc. 3, e76 (2014).

43. Agboola, S. et al. Improving outcomes in cancer patients on oral anti-cancer medications using a novel mobile phone-based intervention: study design of a randomized controlled trial. JMIR Res. Protoc. 3, e79 (2014).

44. Kvedar, J., Coye, M. J. & Everett, W. Connected health: a review of technologies and strategies to improve patient care with telemedicine and telehealth. Health Aff. 33, 194–199 (2014).

45. Agboola, S., Palacholla, R. S., Centi, A., Kvedar, J. & Jethwani, K. A multimodal mHealth intervention (FeatForward) to improve physical activity behavior in patients with high cardiometabolic risk factors: rationale and protocol for a randomized controlled trial. JMIR Res. Protoc. 5 (2016).

46. Rosenstock, I. M. The health belief model and preventive health behavior. Health Educ. Monogr. 2, 354–386 (1974).

47. Prochaska, J. O., DiClemente, C. C. & Norcross, J. C. In search of how people change. Appl. Addict. Behav. Am. Psychol. 47, 1102–1114 (1992).

48. Ajzen, I. The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 50, 179–211 (1991).

49. McHugh, M. L. Interrater reliability: the kappa statistic. Biochem. Med. 22, 276–282 (2012).

50. Higgins, J. P. & Green, S. Cochrane Handbook for Systematic Reviews of Interventions. (The Cochrane Collaboration, 2011).

51. Higgins, J. P. & Thompson, S. G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 21, 1539–1558 (2002).

52. Sterne, J. A. C. et al. Recommendations for examining and interpreting funnel plot asymmetry in meta-analyses of randomised controlled trials. BMJ 343 (2011).

53. Jadad, A. R. et al. Assessing the quality of reports of randomized clinical trials: is blinding necessary? Control. Clin. Trials 17, 1–12 (1996).

Author information

Authors and affiliations

Division of Health Services Research, Cedars-Sinai Medical Center, Los Angeles, CA, USA

Benjamin Noah, Michelle S. Keller, Libby Stein, Sunny Johl, Sean Delshad, Vartan C. Tashjian, Daniel Lew, James T. Kwan, Alma Jusufagic & Brennan M. R. Spiegel

Cedars-Sinai Center for Outcomes Research and Education (CS-CORE), Los Angeles, CA, USA

Department of Health Policy and Management, UCLA Fielding School of Public Health, Los Angeles, CA, USA

Michelle S. Keller, Alma Jusufagic & Brennan M. R. Spiegel

Department of Medicine, University of Arizona, College of Medicine Tucson, Tucson, AZ, USA

Sasan Mosadeghi

Cedars-Sinai Medical Center, Los Angeles, CA, USA

Vartan C. Tashjian, Daniel Lew & Brennan M. R. Spiegel

American Journal of Gastroenterology, Bethesda, USA

Brennan M. R. Spiegel

Contributions

B.N. abstracted the data, ran the analysis, and wrote the results. M.S.K. summarized the high-quality studies in the results section. M.S.K., A.J., and B.M.S. assisted in writing and editing the manuscript. M.S.K., S.M., L.S., S.J., S.D., V.C.T., D.L., and J.T.K. conducted the literature search and systematic review. All authors contributed to and have approved the final manuscript.

Corresponding author

Correspondence to Brennan M. R. Spiegel.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history: The original version of this Article had an incorrect Article number of 2 and an incorrect Publication year of 2017. These errors have now been corrected in the PDF and HTML versions of the Article.

Rights and permissions

Open Access: This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Noah, B., Keller, M.S., Mosadeghi, S. et al. Impact of remote patient monitoring on clinical outcomes: an updated meta-analysis of randomized controlled trials. npj Digital Med 1, 20172 (2018). https://doi.org/10.1038/s41746-017-0002-4

Received: 11 May 2017

Revised: 28 August 2017

Accepted: 31 August 2017

Published: 15 January 2018

DOI: https://doi.org/10.1038/s41746-017-0002-4

Monitoring Employees Makes Them More Likely to Break Rules

- Chase Thiel,

- Julena M. Bonner,

- David Welsh,

- Niharika Garud

Researchers found that when workers know they’re being surveilled, they often feel less responsible for their own conduct.

As remote work becomes the norm, more and more companies have begun tracking employees through desktop monitoring, video surveillance, and other digital tools. These systems are designed to reduce rule-breaking — and yet new research suggests that in some cases, they can seriously backfire. Specifically, the authors found across two studies that monitored employees were substantially more likely to break rules, including engaging in behaviors such as cheating on a test, stealing equipment, and purposely working at a slow pace. They further found that this effect was driven by a shift in employees’ sense of agency and personal responsibility: Monitoring employees led them to subconsciously feel less responsibility for their own conduct, ultimately making them more likely to act in ways that they would otherwise consider immoral. However, when employees feel that they are being treated fairly, the authors found that they are less likely to suffer a drop in agency and are thus less likely to lose their sense of moral responsibility in response to monitoring. As such, the authors suggest that in cases where monitoring is necessary, employers should take steps to enhance perceptions of justice and thus preserve employees’ sense of agency.

In April 2020, global demand for employee monitoring software more than doubled. Online searches for “how to monitor employees working from home” increased by 1,705%, and sales for systems that track workers’ activity via desktop monitoring, keystroke tracking, video surveillance, GPS location tracking, and other digital tools went through the roof. Some of these systems purport to use employee data to improve wellbeing — for example, Microsoft is developing a system that would use smart watches to collect data on employees’ blood pressure and heart rate, producing personalized “anxiety scores” to inform wellness recommendations. But the vast majority of employee monitoring tools are focused on tracking performance, increasing productivity, and deterring rule-breaking.

- Chase Thiel is the Bill Daniels Chair of Business Ethics and an associate professor of management at the University of Wyoming’s College of Business. His research examines causes of organizational misconduct through a behavioral lens, characteristics of moral people, and the role of leaders in the creation and maintenance of ethical workplaces.

- Julena M. Bonner is an associate professor of management in the Marketing and Strategy Department of the Jon M. Huntsman School of Business at Utah State University. She received her PhD in Management from Oklahoma State University. Her research interests include behavioral ethics, ethical leadership, moral emotions, and workplace deviance.

- John Bush is an assistant professor of management in the College of Business at the University of Central Florida. His research focuses on employee ethicality and performance in organizations.

- David Welsh is an associate professor in the Department of Management and Entrepreneurship at Arizona State University’s W.P. Carey School of Business. He holds a Ph.D. in Management from the University of Arizona. His research focuses primarily on issues related to unethical behavior in the workplace.

- Niharika Garud is an associate professor in the Department of Management and Marketing at University of Melbourne’s Faculty of Business & Economics. Her research focuses primarily on understanding management of people, performance, and innovation in organizations.

A case study focuses on a particular unit - a person, a site, a project. It often uses a combination of quantitative and qualitative data.

Case studies can be particularly useful for understanding how different elements fit together and how different elements (implementation, context and other factors) have produced the observed impacts.

There are different types of case studies, which can be used for different purposes in evaluation. The GAO (Government Accountability Office) has described six different types of case study:

1. Illustrative: This is descriptive in character and intended to add realism and in-depth examples to other information about a program or policy. (These are often used to complement quantitative data by providing examples of the overall findings.)

2. Exploratory: This is also descriptive but is aimed at generating hypotheses for later investigation rather than simply providing illustration.

3. Critical instance: This examines a single instance of unique interest, or serves as a critical test of an assertion about a program, problem or strategy.

4. Program implementation: This investigates operations, often at several sites, and often with reference to a set of norms or standards about implementation processes.

5. Program effects: This examines the causal links between the program and observed effects (outputs, outcomes or impacts, depending on the timing of the evaluation) and usually involves multisite, multimethod evaluations.

6. Cumulative: This brings together findings from many case studies to answer evaluative questions.

The following guides are particularly recommended because they distinguish between the research design (case study) and the type of data (qualitative or quantitative), and provide guidance on selecting cases, addressing causal inference, and generalizing from cases.

This guide from the US General Accounting Office outlines good practice in case study evaluation and establishes a set of principles for applying case studies to evaluations.

This paper, authored by Edith D. Balbach for the California Department of Health Services is designed to help evaluators decide whether to use a case study evaluation approach.

This guide, written by Linda G. Morra and Amy C. Friedlander for the World Bank, provides guidance and advice on the use of case studies.

- Broadening the range of designs and methods for impact evaluations

- Case studies in action

- Case study evaluations - US General Accounting Office

- Case study evaluations - World Bank

- Comparative case studies

- Dealing with paradox – Stories and lessons from the first three years of consortium-building

- Designing and facilitating creative conversations & learning activities

- Estudo de caso: a avaliação externa de um programa

- Evaluation tools

- Evaluations that make a difference

- Methods for monitoring and evaluation

- Reflections on innovation, assessment and social change processes: A SPARC case study, India

- Toward a listening bank: A review of best practices and the efficacy of beneficiary assessment

- UNICEF webinar: Comparative case studies

- Using case studies to do program evaluation

'Case study' is referenced in:

- Week 32: Better use of case studies in evaluation

The Monitoring and Evaluation Toolkit

This section asks:

- What is a case study?

- What are the different types of case study?

- What are the advantages and disadvantages of a case study?

- How to use case studies as part of your monitoring and evaluation?

There are many different textbooks and websites explaining the use of case studies, and this section draws heavily on those of Lamar University and the NCBI (worked examples), as well as on the author’s own extensive research experience.

If you are monitoring or evaluating a project, you may already have obtained general information about your target school, village, hospital or farming community. But the information you have is broad and imprecise. It may contain a lot of statistics but may not give you a feel for what is really going on in that village, school, hospital or farming community.

Case studies can provide this depth. They focus on a particular person, patient, village, group within a community or other sub-set of a wider group. They can be used to illustrate wider trends or to show that the case you are examining is broadly similar to other cases or really quite different.

In other words, a case study examines a person, place, event, phenomenon, or other type of subject of analysis in order to extrapolate key themes and results that help predict future trends, illuminate previously hidden issues that can be applied to practice, and/or provide a means for understanding an important research problem with greater clarity.

A case study paper usually examines a single subject of analysis, but it can also be designed as a comparative investigation that shows relationships between two or more subjects. The methods used to study a case can be quantitative, qualitative, or a mixture of the two.

Different types of case study

There are many types of case study. Drawing on the work of Lamar University and the NCBI, some of the best-known types are set out below.

It is best not to worry too much about the nuances that differentiate types of case study. The key is to recognise that the case study is a detailed illustration of how your project or programme has worked or failed to work on an individual, hospital, school, target community or other group or economic sector.

- Explanatory case studies aim to answer ‘how’ or ‘why’ questions where the researcher has little control over the occurrence of events. This type of case study focuses on phenomena within the context of real-life situations. Example: “An investigation into the reasons for the global financial and economic crisis of 2008–2010.”

- Descriptive case studies aim to analyze the sequence of interpersonal events after a certain amount of time has passed. Studies in business research belonging to this category usually describe a culture or sub-culture, and they attempt to discover the key phenomena. Example: “Impact of increasing levels of funding for prosthetic limbs on the employment opportunities of amputees. A case study of the West Point community of Monrovia (Liberia).”

- Exploratory case studies aim to find answers to ‘what’ or ‘who’ questions. Data collection for an exploratory case study is often accompanied by additional data collection methods such as interviews, questionnaires and experiments. Example: “A study into differences of local community governance practices between a town in francophone Cameroon and a similar-sized town in anglophone Cameroon.”

- Critical instance: This examines a single instance of unique interest, or serves as a critical test of an assertion about a programme, problem or strategy. The focus might be on the economic or human cost of a tsunami or volcanic eruption in a particular area.

- Representative: This relates to a case which is typical in nature and representative of other cases that you might examine. An example might be a mother with a part-time job and four children, living in a community where this is the norm.

- Deviant: This refers to a case which is out of line with others. Deviant cases can be particularly interesting and often attract greater attention from analysts. A patient with immunity to a particular virus is worth studying, as that study might provide clues to a possible cure for that virus.

- Prototypical: This involves a case which is ahead of the curve in some way and has the capacity to set a trend. A particular African town or city may have a free bicycle loan scheme, and the experiences of that town might suggest a future path to be followed by other towns and regions.

- Most similar cases: Here you are looking at more than one case, and you have selected two cases which have a preponderance of features in common. You might, for example, be looking at two schools, each of which teaches boys aged 11 to 15 and each of which charges similar fees. They are located in the same country but in different regions, where the local authorities devote different levels of resource to secondary school education. You may have a project in each of these areas and you may wish to explain why your project has been more successful in one than the other.

- Most dissimilar cases: These are cases which are, in most key respects, very different and where you might expect to find different outcomes. You might, for example, select a class of top-ranking pupils and compare it with a class of bottom-ranking pupils. This could help to bring out the factors that contribute to or detract from academic success.

Advantages and Disadvantages of Case Study Method

Advantages

- It helps explain how and why a phenomenon has occurred, thereby going beyond numerical data

- It allows the integration of qualitative and quantitative data collection and analysis methods

- It provides rich (or ‘thick’) detail and is well suited to capturing the complexities of real-life situations and the challenges facing real people

- Case studies (sometimes illustrated with quotations from beneficiaries or stakeholders and with photographs) are often included as boxes in project reports and evaluations, thereby adding a human dimension to an otherwise dry description and data.

- Case studies may offer you an opportunity to gather evidence that challenges prevailing assumptions about a research problem and provide a new set of recommendations applied to practice that have not been tested previously.

Disadvantages

- Case studies may be marked by a lack of rigour (e.g. a study may not be sufficiently in-depth or a single case study may not be sufficient)

- Single case studies may offer very little basis for generalisations of findings and conclusions.

- Case studies often tend to be success stories (so they may involve a degree of bias).

Case Study: Remote Patient Monitoring

Interoperability.

One top-three integrated delivery network (IDN) was facing a challenge: it was unable to manage the growing number of patients with chronic conditions. Past remote patient monitoring (RPM) programs lived outside the EHR in onerous web-based dashboards because integrating data into flowsheets and existing workflows came with exorbitant costs. To meet the various needs of stakeholders, the IDN partnered with Validic to implement a cost-effective approach to RPM.

Monitoring and Evaluation in the Public Sector: A Case Study of the Department of Rural Development and Land Reform in South Africa

Since the publication of the Government-Wide Monitoring and Evaluation Policy Framework (GWM&EPF) by the Presidency in South Africa (SA), several policy documents giving direction and clarifying the context, purpose, vision, and strategies of M&E have been developed. In many instances, broad guidelines stipulate how M&E should be implemented at the institutional level and linked with managerial systems such as planning, budgeting, project management and reporting. This research was undertaken to examine how the “institutionalisation” of M&E supports meaningful project implementation within the public sector in South Africa, with specific reference to the Department of Rural Development and Land Reform (DRD&LR). This paper provides a theoretical and analytical framework on how M&E should be “institutionalised”, emphasising that the IM&E is essential in the public sector, both to improve service delivery and to ensure good governance. It is also argued that M&E has the potential to support meaningful implementation, promote organisational development, enhance organisational learning and support service delivery.

Related Papers

James Malesela

The global context provides an important platform for governments to build and sustain their M&E systems by adopting best practices and lessons. Monitoring and evaluation (M&E) in the South African government has gradually been recognised as a mechanism to enhance good governance. The advent of the framework for government-wide M&E inculcates a culture of reflection and underlines the importance of keeping track of policy, programme or project implementation. M&E forms an indispensable part of the public management and administrative tools accessible to managers for improving the business processes of the institution. M&E therefore provides a significant panacea for the growing pressure on institutions to enhance good governance. The principles of good governance comprise accountability, transparency, rule of law, public participation, responsiveness and effectiveness. These principles correlate precisely with the values governing public administration enshrined in the Constitution of the Republic of South Africa, 1996. They serve as standards and indicators to monitor and measure performance. The relevance of monitoring, evaluation and good governance in Public Administration is inevitable. M&E cuts across the generic administrative and managerial functions of public administration, while good governance demonstrates the outcome of functional M&E.

Roan Neethling, Daniel Meyer

The 1994 democratic rule and Constitution of 1996 shaped the way in which service delivery would be transformed in South Africa. This was done by developing new structures and policies that would ultimately attempt to create equity and fairness in the provision of services within the municipal sphere to all residents. This article analyses the perceptions of business owners regarding the creation of an enabling environment and service delivery within one of the best performing municipalities in Gauteng: the Midvaal Local Municipal area. A total of 50 business owners were interviewed by means of a quantitative questionnaire. Data were statistically analysed by using descriptive data as well as a chi-square cross tabulation. The results revealed that the general perception of service delivery is above acceptable levels. However, in some categories the business owners were less satisfied regarding land use and zoning process and regulations. Overall, the business owners felt that the local government was creating an enabling environment for business to prosper. No significant statistical difference was found regarding perceptions of service delivery and the enabling environment, between small and large businesses in the study area. This type of analysis provides the foundation for improved service delivery and policy development and allows for future comparative analysis research into local government. The research has also placed the relationship between good governance, service delivery and the creation of an enabling environment in the spotlight.

Lebogang L Nawa

The institutionalisation of cultural policy has, to date, become an effective tool for culture-led development in some parts of the world. South Africa is yet to fully embrace this phenomenon in its developmental matrix. While the government has introduced certain strategies, such as the Integrated Development Plan (IDP), to coordinate its post-apartheid development imperatives across all of its spheres, role players such as politicians, town planners and developers continue to pursue their subjective approaches to development, without culture as the mediator. This perpetuates the fragmentation of spatial landscapes and infrastructure networks in these areas along racial and cultural lines. This article suggests that South Africa may benefit from formulating local cultural policies for the revitalisation of decaying cities into new integrated, liveable and vibrant residential, business and sporting environs.