Comparative case studies

This guide, written by Delwyn Goodrick for UNICEF, focuses on the use of comparative case studies in impact evaluation.

The paper gives a brief discussion of their use and then outlines when it is appropriate to use them. It then provides step by step guidance on their use for an impact evaluation.

"A case study is an in-depth examination, often undertaken over time, of a single case – such as a policy, programme, intervention site, implementation process or participant. Comparative case studies cover two or more cases in a way that produces more generalizable knowledge about causal questions – how and why particular programmes or policies work or fail to work.

Comparative case studies are undertaken over time and emphasize comparison within and across contexts. Comparative case studies may be selected when it is not feasible to undertake an experimental design and/or when there is a need to understand and explain how features within the context influence the success of programme or policy initiatives. This information is valuable in tailoring interventions to support the achievement of intended outcomes."

- Comparative case studies: a brief description

- When is it appropriate to use this method?

- How to conduct comparative case studies

- Ethical issues and practical limitations

- Which other methods work well with this one?

- Presentation of results and analysis

- Example of good practices

- Examples of challenges

Goodrick, D. (2014). Comparative Case Studies. UNICEF. Retrieved from: http://devinfolive.info/impact_evaluation/img/downloads/Comparative_Case_Studies_ENG.pdf

What does a non-experimental evaluation look like? How can we evaluate interventions implemented across multiple contexts, where constructing a control group is not feasible?

This is part of a series

- UNICEF Impact Evaluation series

- Overview of impact evaluation

- Overview: Strategies for causal attribution

- Overview: Data collection and analysis methods in impact evaluation

- Theory of change

- Evaluative criteria

- Evaluative reasoning

- Participatory approaches

- Randomized controlled trials (RCTs)

- Randomized controlled trials (RCTs) video guide

- Quasi-experimental design and methods

- Developing and selecting measures of child well-being

- Interviewing

- UNICEF webinar: Overview of impact evaluation

- UNICEF webinar: Overview of data collection and analysis methods in Impact Evaluation

- UNICEF webinar: Theory of change

- UNICEF webinar: Overview: strategies for causal inference

- UNICEF webinar: Participatory approaches in impact evaluation

- UNICEF webinar: Randomized controlled trials

- UNICEF webinar: Comparative case studies

- UNICEF webinar: Quasi-experimental design and methods

'Comparative case studies' is referenced in:

- Developing a research agenda for impact evaluation

- Impact evaluation

© 2022 BetterEvaluation. All rights reserved.

Comparative Case Study

A comparative case study (CCS) is defined as ‘the systematic comparison of two or more data points (“cases”) obtained through use of the case study method’ (Kaarbo and Beasley 1999, p. 372). A case may be a participant, an intervention site, a programme or a policy. Case studies have a long history in the social sciences, yet for a long time, they were treated with scepticism (Harrison et al. 2017). The advent of grounded theory in the 1960s led to a revival in the use of case-based approaches. From the early 1980s, the increase in case study research in the field of political sciences led to the integration of formal, statistical and narrative methods, as well as the use of empirical case selection and causal inference (George and Bennett 2005), which contributed to its methodological advancement. Now, as Harrison and colleagues (2017) note, CCS:

“Has grown in sophistication and is viewed as a valid form of inquiry to explore a broad scope of complex issues, particularly when human behavior and social interactions are central to understanding topics of interest.”

It is claimed that CCS can be applied to detect causal attribution and contribution when the use of a comparison or control group is not feasible (or not preferred). Comparing cases enables evaluators to tackle causal inference through assessing regularity (patterns) and/or by excluding other plausible explanations. In practical terms, CCS involves proposing, analysing and synthesising patterns (similarities and differences) across cases that share common objectives.

What is involved?

Goodrick (2014) outlines the steps to be taken in undertaking CCS.

Key evaluation questions and the purpose of the evaluation: The evaluator should explicitly articulate the adequacy and purpose of using CCS (guided by the evaluation questions) and define the primary interests. Formulating key evaluation questions allows the selection of appropriate cases to be used in the analysis.

Propositions based on the Theory of Change: Theories and hypotheses that are to be explored should be derived from the Theory of Change (or, alternatively, from previous research around the initiative, existing policy or programme documentation).

Case selection: Advocates for CCS approaches claim an important distinction between case-oriented small n studies and (most typically large n) statistical/variable-focused approaches in terms of the process of selecting cases: in case-based methods, selection is iterative and cannot rely on convenience and accessibility. ‘Initial’ cases should be identified in advance, but case selection may continue as evidence is gathered. Various case-selection criteria can be identified depending on the analytic purpose (Vogt et al., 2011). These may include:

- Very similar cases

- Very different cases

- Typical or representative cases

- Extreme or unusual cases

- Deviant or unexpected cases

- Influential or emblematic cases

Identify how evidence will be collected, analysed and synthesised: CCS often applies mixed methods.

Test alternative explanations for outcomes: Following the identification of patterns and relationships, the evaluator may wish to test the established propositions in a follow-up exploratory phase. Approaches applied here may involve triangulation, selecting contradicting cases or using an analytical approach such as Qualitative Comparative Analysis (QCA).

Useful resources

A webinar shared by Better Evaluation with an overview of using CCS for evaluation.

A short overview describing how to apply CCS for evaluation:

Goodrick, D. (2014). Comparative Case Studies, Methodological Briefs: Impact Evaluation 9, UNICEF Office of Research, Florence.

An extensively used book that provides a comprehensive critical examination of case-based methods:

Byrne, D. and Ragin, C. C. (2009). The Sage handbook of case-based methods. Sage Publications.

This page deals with the set of methods used in comparative case study analysis, which focuses on comparing a small or medium number of cases and qualitative data. Structured case study comparisons are a way to leverage theoretical lessons from particular cases and elicit general insights from a population of phenomena that share certain characteristics. The content on this page discusses variable-oriented analysis (guided by frameworks), formal concept analysis and qualitative comparative analysis.

The Chapter summary video gives a brief introduction and summary of this group of methods, what SES problems/questions they are useful for, and key resources needed to conduct the methods. The methods video/s introduce specific methods, including their origin and broad purpose, what SES problems/questions the specific method is useful for, examples of the method in use and key resources needed. The Case Studies demonstrate the method in action in more detail, including an introduction to the context and issue, how the method was used, the outcomes of the process and the challenges of implementing the method. The labs/activities give an example of a teaching activity relating to this group of methods, including the objectives of the activity, resources needed, steps to follow and outcomes/evaluation options.

More details can be found in Chapter 20 of the Routledge Handbook of Research Methods for Social-Ecological Systems.

Chapter summary:

Method Summaries

Case studies, comparative case study analysis: comparison of 6 fishing producer organizations.

Dudouet, B. (2023)

Lab teaching/ activity

Tips and tricks.

- Basurto, X., S. Gelcich, and E. Ostrom. 2013. ‘The Social-Ecological System Framework as a Knowledge Classificatory System for Benthic Small-Scale Fisheries.’ Global Environmental Change 23(6): 1366–1380.

- Binder, C., J. Hinkel, P.W.G. Bots, and C. Pahl-Wostl. 2013. ‘Comparison of Frameworks for Analyzing Social-Ecological Systems.’ Ecology and Society 18(4): 26.

- Ragin, C. 2000. Fuzzy-Set Social Science. Chicago: University of Chicago Press.

- Schneider, C.Q., and C. Wagemann. 2012. Set-theoretic Methods for the Social Sciences: A Guide to Qualitative Comparative Analysis. Cambridge: Cambridge University Press.

- Villamayor-Tomas, S., C. Oberlack, G. Epstein, S. Partelow, M. Roggero, E. Kellner, M. Tschopp, and M. Cox. 2020. ‘Using Case Study Data to Understand SES Interactions: A Model-centered Meta-analysis of SES Framework Applications.’ Current Opinion in Environmental Sustainability.

Transl Behav Med. 2014 Jun; 4(2).

Using qualitative comparative analysis to understand and quantify translation and implementation

Heather Kane

RTI International, 3040 Cornwallis Road, Research Triangle Park, P.O. Box 12194, Durham, NC 27709 USA

Megan A Lewis

Pamela A Williams, Leila C Kahwati

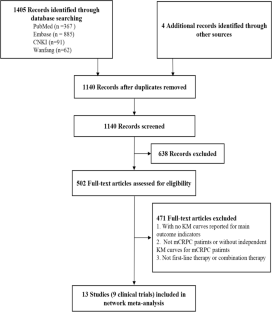

Understanding the factors that facilitate implementation of behavioral medicine programs into practice can advance translational science. Often, translation or implementation studies use case study methods with small sample sizes. Methodological approaches that systematize findings from these types of studies are needed to improve rigor and advance the field. Qualitative comparative analysis (QCA) is a method and analytical approach that can advance implementation science. QCA offers an approach for rigorously conducting translational and implementation research limited by a small number of cases. We describe the methodological and analytic approach for using QCA and provide examples of its use in the health and health services literature. QCA brings together qualitative or quantitative data derived from cases to identify necessary and sufficient conditions for an outcome. QCA offers advantages for researchers interested in analyzing complex programs and for practitioners interested in developing programs that achieve successful health outcomes.

INTRODUCTION

In this paper, we describe the methodological features and advantages of using qualitative comparative analysis (QCA). QCA is sometimes called a “mixed method.” It refers to both a specific research approach and an analytic technique that is distinct from and offers several advantages over traditional qualitative and quantitative methods [ 1 – 4 ]. It can be used to (1) analyze small to medium numbers of cases (e.g., 10 to 50) when traditional statistical methods are not possible, (2) examine complex combinations of explanatory factors associated with translation or implementation “success,” and (3) combine qualitative and quantitative data using a unified and systematic analytic approach.

This method may be especially pertinent for behavioral medicine given the growing interest in implementation science [ 5 ]. Translating behavioral medicine research and interventions into useful practice and policy requires an understanding of the implementation context. Understanding the context under which interventions work and how different ways of implementing an intervention lead to successful outcomes are required for “T3” (i.e., dissemination and implementation of evidence-based interventions) and “T4” translations (i.e., policy development to encourage evidence-based intervention use among various stakeholders) [ 6 , 7 ].

Case studies are a common way to assess different program implementation approaches and to examine complex systems (e.g., health care delivery systems, interventions in community settings) [ 8 ]. However, multiple case studies often have small, naturally limited samples or populations; small samples and populations lack adequate power to support conventional, statistical analyses. Case studies also may use mixed-method approaches, but typically when researchers collect quantitative and qualitative data in tandem, they rarely integrate both types of data systematically in the analysis. QCA offers solutions for the challenges posed by case studies and provides a useful analytic tool for translating research into policy recommendations. Using QCA methods could aid behavioral medicine researchers who seek to translate research from randomized controlled trials into practice settings to understand implementation. In this paper, we describe the conceptual basis of QCA, its application in the health and health services literature, and its features and limitations.

CONCEPTUAL BASIS OF QCA

QCA has its foundations in historical, comparative social science. Researchers in this field developed QCA because probabilistic methods failed to capture the complexity of social phenomena and required large sample sizes [1]. Recently, this method has made inroads into health research and evaluation [9–13] because of several useful features: (1) it models equifinality, which is the ability to identify more than one causal pathway to an outcome (or absence of the outcome); (2) it identifies conjunctural causation, which means that single conditions may not display their effects on their own, but only in conjunction with other conditions; and (3) it implies asymmetrical relationships between causal conditions and outcomes, which means that causal pathways for achieving the outcome differ from causal pathways for failing to achieve the outcome.

QCA is a case-oriented approach that examines relationships between conditions (similar to explanatory variables in regression models) and an outcome using set theory, a branch of mathematics or of symbolic logic that deals with the nature and relations of sets. A set-theoretic approach to modeling causality differs from probabilistic methods, which examine the independent, additive influence of variables on an outcome. Regression models, based on underlying assumptions about sampling and distribution of the data, ask “what factor, holding all other factors constant at each factor’s average, will increase (or decrease) the likelihood of an outcome.” QCA, an approach based on the examination of set, subset, and superset relationships, asks “what conditions, alone or in combination with other conditions, are necessary or sufficient to produce an outcome.” For additional QCA definitions, see Ragin [4].

Necessary conditions are those that exhibit a superset relationship with the outcome set and are conditions or combinations of conditions that must be present for an outcome to occur. In assessing necessity, a researcher “identifies conditions shared by cases with the same outcome” [4] (p. 20). Figure 1 shows a hypothetical example. In this figure, condition X is a necessary condition for an effective intervention because all cases with the outcome present are also members of the set of cases with condition X; however, condition X is not sufficient for an effective intervention because it is possible to be a member of the set of cases with condition X, but not be a member of the outcome set [14].

Fig. 1. Necessary and sufficient conditions and set-theoretic relationships

Sufficient conditions exhibit subset relationships with an outcome set and demonstrate that “the cause in question produces the outcome in question” [ 3 ] (p. 92). Figure 1 shows the multiple and different combinations of conditions that produce the hypothetical outcome, “effective intervention,” (1) by having condition A present, (2) by having condition D present, or (3) by having the combination of conditions B and C present. None of these conditions is necessary and any one of these conditions or combinations of conditions is sufficient for the outcome of an effective intervention.
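The subset and superset relationships described above can be sketched with ordinary Python sets. This is a minimal illustration only: the case identifiers and set memberships are hypothetical and do not come from Figure 1, and the function names `is_necessary` and `is_sufficient` are introduced here for the example.

```python
# Hypothetical case IDs; none of these come from the article.
outcome = {"c1", "c2", "c3", "c4", "c5"}        # cases with an effective intervention
cond_x = {"c1", "c2", "c3", "c4", "c5", "c6"}   # cases where condition X is present
cond_a = {"c1", "c2"}                           # cases where condition A is present
cond_b = {"c3", "c4", "c6"}                     # cases where condition B is present
cond_c = {"c3", "c4", "c5"}                     # cases where condition C is present


def is_necessary(condition: set, outcome: set) -> bool:
    """Necessary: every case with the outcome also has the condition (superset)."""
    return outcome <= condition


def is_sufficient(condition: set, outcome: set) -> bool:
    """Sufficient: every case with the condition also has the outcome (subset)."""
    return condition <= outcome


print(is_necessary(cond_x, outcome), is_sufficient(cond_x, outcome))  # True False
print(is_necessary(cond_a, outcome), is_sufficient(cond_a, outcome))  # False True
# B alone is not sufficient, but the combination "B AND C" (set intersection) is.
print(is_sufficient(cond_b, outcome), is_sufficient(cond_b & cond_c, outcome))  # False True
```

In this toy data, X is necessary but not sufficient, A is sufficient but not necessary, and B only becomes sufficient in conjunction with C.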

QCA AS AN APPROACH AND AS AN ANALYTIC TECHNIQUE

The term “QCA” is sometimes used to refer to the comparative research approach but also refers to the “analytic moment” during which Boolean algebra and set theory logic are applied to truth tables constructed from data derived from included cases. Figure 2 characterizes this distinction. Although this figure depicts steps as sequential, like many research endeavors, these steps are somewhat iterative, with respecification and reanalysis occurring along the way to final findings. We describe each of the essential steps of QCA as an approach and analytic technique and provide examples of how it has been used in health-related research.

Fig. 2. QCA as an approach and as an analytic technique

Operationalizing the research question

Like other types of studies, the first step involves identifying the research question(s) and developing a conceptual model. This step guides the study as a whole and also informs case, condition (c.f., variable), and outcome selection. As mentioned above, QCA frames research questions differently than traditional quantitative or qualitative methods. Research questions appropriate for a QCA approach would seek to identify the necessary and sufficient conditions required to achieve the outcome. Thus, formulating a QCA research question emphasizes what program components or features—individually or in combination—need to be in place for a program or intervention to have a chance at being effective (i.e., necessary conditions) and what program components or features—individually or in combination—would produce the outcome (i.e., sufficient conditions). For example, a set theoretic hypothesis would be as follows: If a program is supported by strong organizational capacity and a comprehensive planning process, then the program will be successful. A hypothesis better addressed by probabilistic methods would be as follows: Organizational capacity, holding all other factors constant, increases the likelihood that a program will be successful.

For example, Longest and Thoits [ 15 ] drew on an extant stress process model to assess whether the pathways leading to psychological distress differed for women and men. Using QCA was appropriate for their study because the stress process model “suggests that particular patterns of predictors experienced in tandem may have unique relationships with health outcomes” (p. 4, italics added). They theorized that predictors would exhibit effects in combination because some aspects of the stress process model would buffer the risk of distress (e.g., social support) while others simultaneously would increase the risk (e.g., negative life events).

Identify cases

The number of cases in a QCA analysis may be determined by the population (e.g., 10 intervention sites, 30 grantees). When particular cases can be chosen from a larger population, Berg-Schlosser and De Meur [16] offer other strategies and best practices for choosing cases. Unless the number of cases is fixed by an existing population (e.g., 30 programs or grantees), the outcome of interest and existing theory drive case selection, unlike variable-oriented research [3, 4], in which numbers are driven by statistical power considerations and depend on variation in the dependent variable. For use in causal inference, both cases that exhibit and cases that do not exhibit the outcome should be included [16]. If a researcher is interested in developing typologies or concept formation, he or she may wish to examine similar cases that exhibit differences on the outcome or to explore cases that exhibit the same outcome [14, 16].

For example, Kahwati et al. [ 9 ] examined the structure, policies, and processes that might lead to an effective clinical weight management program in a large national integrated health care system, as measured by mean weight loss among patients treated at the facility. To examine pathways that lead to both better and poorer facility-level weight loss, 11 facilities from among those with the largest weight loss outcomes and 11 facilities from among those with the smallest were included. By choosing cases based on specific outcomes, Kahwati et al. could identify multiple patterns of success (or failure) that explain the outcome rather than the variability associated with the outcome.

Identify conditions and outcome sets

Selecting conditions relies on the research question, conceptual model, and number of cases, similar to other research methods. Conditions (or “sets” or “condition sets”) refer to the explanatory factors in a model; they are similar to variables. Because QCA research questions assess necessary and sufficient conditions, a researcher should consider which conditions in the conceptual model would theoretically produce the outcome individually or in combination. This helps to focus the analysis and limit the number of conditions. Ideally, for a case study design with a small (e.g., 10–15) or intermediate (e.g., 16–100) number of cases, one should aim for fewer than five conditions because in QCA a researcher assesses all possible configurations of conditions. Adding conditions to the model increases the possible number of combinations exponentially (i.e., 2^k, where k = the number of conditions). For three conditions, eight possible combinations of the selected conditions exist as follows: the presence of A, B, C together, the lack of A with B and C present, the lack of A and lack of B with C present, and so forth. Having too many conditions will likely mean that no cases fall into a particular configuration, and that configuration cannot be assessed by empirical examples. When one or more configurations are not represented by the cases, this is known as limited diversity, and QCA experts suggest multiple strategies for managing such situations [4, 14].
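To make the growth in configurations concrete, the following sketch enumerates every presence/absence combination for a set of crisp conditions. The condition names and the helper `configurations` are placeholders chosen for illustration.

```python
from itertools import product


def configurations(names):
    """Return every presence (1) / absence (0) combination of the named conditions."""
    return [dict(zip(names, values)) for values in product((1, 0), repeat=len(names))]


configs = configurations(["A", "B", "C"])
print(len(configs))  # 8 combinations for three conditions
for row in configs:
    print(row)

# The number of possible rows doubles with each added condition.
for k in range(1, 7):
    print(k, 2 ** k)
```

With five conditions there are already 32 possible configurations, which helps explain why a study with 10 to 15 cases will leave many of them empty.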

For example, Ford et al. [10] studied health departments’ implementation of core public health functions and organizational factors (e.g., resource availability, adaptability) and how those conditions lead to superior and inferior population health changes. They examined three core public health functions (i.e., assessment of environmental and population public health needs, capacity for policy development, and authority over assurance of healthcare operations) and operationalized them by using composite measures of varied health indicators compiled in a UnitedHealth Group report. In this examination of 41 state health departments, the authors found that all three core public health functions were necessary for population health improvement. The absence of any of the core public health functions was sufficient for poorer population health outcomes; thus, only the health departments with the ability to perform all three core functions had improved outcomes. Additionally, these three core functions in combination with either resource availability or adaptability were sufficient combinations (i.e., causal pathways) for improved population health outcomes.

Calibrate condition and outcome sets

Calibration refers to “adjusting (measures) so that they match or conform to dependably known standards” and is a common way of standardizing data in the physical sciences [ 4 ] (p. 72). Calibration requires the researcher to make sense of variation in the data and apply expert knowledge about what aspects of the variation are meaningful. Because calibration depends on defining conditions based on those “dependably known standards,” QCA relies on expert substantive knowledge, theory, or criteria external to the data themselves [ 14 ]. This may require researchers to collaborate closely with program implementers.

In QCA, one can use “crisp” set or “fuzzy” set calibration. Crisp sets, which are similar to dichotomous categorical variables in regression, establish decision rules defining a case as fully in the set (i.e., condition) or fully out of the set; fuzzy sets establish degrees of membership in a set. Fuzzy sets “differentiate between different levels of belonging anchored by two extreme membership scores at 1 and 0” [14] (p. 28). They can be continuous (0, 0.1, 0.2, ...) or have qualitatively defined anchor points (e.g., 0 is fully out of the set; 0.33 is more out than in the set; 0.66 is more in than out of the set; 1 is fully in the set). A researcher selects fuzzy sets and the corresponding resolution (i.e., continuous, four cutoff points, six cutoff points) based on theory and meaningful differences between cases and must be able to provide a verbal description for each cutoff point [14]. If, for example, a researcher cannot distinguish between 0.7 and 0.8 membership in a set, then a more continuous scoring of cases would not be useful; rather, a four-point cutoff may better characterize the data. Although crisp and fuzzy sets are more commonly used, new multivariate forms of QCA are emerging as are variants that incorporate elements of time [14, 17, 18].

Fuzzy sets have the advantage of maintaining more detail for data with continuous values. However, this strength also makes interpretation more difficult. When an observation is coded with fuzzy sets, a particular observation has some degree of membership in the set “condition A” and in the set “condition NOT A.” Thus, when doing analyses to identify sufficient conditions, a researcher must make a judgment call on what benchmark constitutes a recommendation threshold for policy or programmatic action.
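The article does not give formulas for fuzzy sets, so the sketch below rests on two assumptions drawn from common practice in the QCA literature: membership in “NOT A” is taken as one minus membership in A, and the consistency of “A is sufficient for the outcome” is computed as the sum of min(x, y) over cases divided by the sum of x. The membership scores and the 0.8 benchmark are hypothetical.

```python
def negate(membership):
    """Membership in NOT A, assumed here to be one minus membership in A."""
    return 1.0 - membership


def sufficiency_consistency(x, y):
    """Assumed measure: sum of min(x_i, y_i) divided by sum of x_i."""
    return sum(min(xi, yi) for xi, yi in zip(x, y)) / sum(x)


# Hypothetical fuzzy membership scores for three cases.
condition_a = [0.8, 0.6, 0.3]
outcome = [0.9, 0.5, 0.4]

print([round(negate(m), 2) for m in condition_a])  # [0.2, 0.4, 0.7]

score = sufficiency_consistency(condition_a, outcome)
threshold = 0.8  # hypothetical benchmark; choosing it is the researcher's judgment call
print(round(score, 3), score >= threshold)  # 0.941 True
```

The comparison against `threshold` is where the judgment call described above enters: a different benchmark could change whether condition A is reported as sufficient.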

In creating decision rules for calibration, a researcher can use a variety of techniques to identify cutoff points or anchors. For qualitative conditions, a researcher can define decision rules by drawing from the literature and knowledge of the intervention context. For conditions with numeric values, a researcher can also employ statistical approaches. Ideally, when using statistical approaches, a researcher should establish thresholds using substantive knowledge about set membership (thus, translating variation into meaningful categories). Although measures of central tendency (e.g., cases with a value above the median are considered fully in the set) can be used to set cutoff points, some experts consider the sole use of this method to be flawed because case classification is determined by a case’s relative value in regard to other cases as opposed to its absolute value in reference to an external referent [ 14 ].

For example, in their study of the National Cancer Institute’s Community Clinical Oncology Program (NCI CCOP), Weiner et al. [19] had numeric data on their five study measures. They transformed their study measures by using their knowledge of the CCOP and by asking NCI officials to identify three values: full membership in a set, a point of maximum ambiguity, and nonmembership in the set. For their outcome set, high accrual in clinical trials, they established an accrual of 100 enrolled patients as fully in the set of high accrual, 70 as a point of ambiguity (neither in nor out of the set), and 50 and below as fully out of the set because “CCOPs must maintain a minimum of 50 patients to maintain CCOP funding” (p. 288). By using QCA and operationalizing condition sets in this way, they were able to answer what condition sets produce high accrual, not what factors predict more accrual. The advantage is that by using this approach and analytic technique, they were able to identify sets of factors that are linked with a very specific outcome of interest.
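A three-anchor calibration of this kind can be sketched as follows. The anchors (50, 70, 100) are the ones quoted above; the article does not say which transformation was applied between anchors, so the piecewise-linear interpolation and the function name `calibrate` are assumptions made purely for illustration.

```python
def calibrate(value, full_out, crossover, full_in):
    """Map a raw value to a fuzzy membership score in [0, 1].

    Assumed piecewise-linear interpolation between three anchors:
    full_out -> 0.0, crossover -> 0.5, full_in -> 1.0.
    """
    if value <= full_out:
        return 0.0
    if value >= full_in:
        return 1.0
    if value < crossover:
        return 0.5 * (value - full_out) / (crossover - full_out)
    return 0.5 + 0.5 * (value - crossover) / (full_in - crossover)


# Hypothetical accrual figures scored against the anchors 50 / 70 / 100.
for accrual in (40, 50, 60, 70, 85, 100, 120):
    print(accrual, calibrate(accrual, full_out=50, crossover=70, full_in=100))
```

Because the anchors come from external knowledge rather than from the sample, a site's score does not change when other sites are added to or removed from the study.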

Obtain primary or secondary data

Data sources vary based on the study, availability of the data, and feasibility of data collection. Data can be qualitative or quantitative, a feature useful for mixed-methods studies, and systematically integrating these different types of data is a major strength of this approach. Qualitative data include program documents and descriptions, key informant interviews, and archival data (e.g., program documents, records, policies); quantitative data include surveys, surveillance or registry data, and electronic health records.

For instance, Schensul et al. [ 20 ] relied on in-depth interviews for their analysis; Chuang et al. [ 21 ] and Longest and Thoits [ 15 ] drew on survey data for theirs. Kahwati et al. [ 9 ] used a mixed-method approach combining data from key informant interviews, program documents, and electronic health records. Any type of data can be used to inform the calibration of conditions.

Assign set membership scores

Assigning set membership scores involves applying the decision rules that were established during the calibration phase. To accomplish this, the research team should then use the extracted data for each case, apply the decision rule for the condition, and discuss discrepancies in the data sources. In their study of factors that influence health care policy development in Florida, Harkreader and Imershein [ 22 ] coded contextual factors that supported state involvement in the health care market. Drawing on a review of archival data and using crisp set coding, they assigned a value of 1 for the presence of a contextual factor (e.g., presence of federal financial incentives promoting policy, unified health care provider policy position in opposition to state policy, state agency supporting policy position) and 0 for the absence of a contextual factor.

Construct truth table

After completing the coding, researchers create a “truth table” for analysis. A truth table lists all of the possible configurations of conditions, the number of cases that fall into each configuration, and the “consistency” of the cases. Consistency quantifies the extent to which cases that share similar conditions exhibit the same outcome; in crisp sets, the consistency value is the proportion of cases that exhibit the outcome. Fuzzy sets require a different calculation to establish consistency, which is described at length in other sources [1–4, 14]. Table 1 displays a hypothetical truth table for three conditions using crisp sets.

Table 1. Sample of a hypothetical truth table for crisp sets (table body not available; 1 = fully in the set, 0 = fully out of the set)
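As a rough sketch of how a crisp-set truth table can be assembled, the code below groups cases by configuration and reports, for each row, the number of cases and the proportion showing the outcome. The six cases are invented for illustration and are not the values from the article's table; `truth_table` is a hypothetical helper.

```python
from collections import defaultdict
from itertools import product

# Hypothetical crisp-set data: one tuple (A, B, C, outcome) per case.
cases = [
    (1, 1, 0, 1),
    (1, 1, 0, 1),
    (1, 0, 1, 1),
    (1, 0, 1, 0),
    (0, 1, 1, 0),
    (0, 0, 0, 0),
]


def truth_table(cases, n_conditions):
    """Return {configuration: (n_cases, consistency)} for every possible row.

    Consistency is the share of cases in the row that show the outcome;
    rows without any cases get a consistency of None.
    """
    counts = defaultdict(lambda: [0, 0])  # configuration -> [n_cases, n_with_outcome]
    for *config, outcome in cases:
        counts[tuple(config)][0] += 1
        counts[tuple(config)][1] += outcome
    table = {}
    for config in product((1, 0), repeat=n_conditions):
        n, hits = counts.get(config, (0, 0))
        table[config] = (n, hits / n if n else None)
    return table


table = truth_table(cases, n_conditions=3)
for config, (n, consistency) in table.items():
    print(config, n, consistency)
```

In this toy data the row (1, 0, 1) has a consistency of 0.5, meaning its two cases disagree on the outcome, while four of the eight rows contain no cases at all.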

QCA AS AN ANALYTIC TECHNIQUE

The research steps to this point fall into QCA as an approach to understanding social and health phenomena. Analysis of the truth table is the sine qua non of QCA as an analytic technique. In this section, we provide an overview of the analysis process, but analytic techniques and emerging forms of analysis are described in multiple texts [ 3 , 4 , 14 , 17 ]. The use of computer software to conduct truth table analysis is recommended and several software options are available including Stata, fsQCA, Tosmana, and R.

A truth table analysis first involves the researcher assessing which (if any) conditions are individually necessary or sufficient for achieving the outcome, and then second, examining whether any configurations of conditions are necessary or sufficient. In instances where contradictions in outcomes from the same configuration pattern occur (i.e., one case from a configuration has the outcome; one does not), the researcher should also consider whether the model is properly specified and conditions are calibrated accurately. Thus, this stage of the analysis may reveal the need to review how conditions are defined and whether the definition should be recalibrated. Similar to qualitative and quantitative research approaches, analysis is iterative.
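For crisp sets, the first step can be sketched as two subset checks: a condition is necessary if every case with the outcome also has the condition, and sufficient if every case with the condition also has the outcome. The data and the function names below are hypothetical, and dedicated QCA software applies more nuanced thresholds than this all-or-nothing test.

```python
# Hypothetical crisp-set cases with two conditions (A, B) and an outcome (Y).
cases = [
    {"A": 1, "B": 1, "Y": 1},
    {"A": 1, "B": 0, "Y": 1},
    {"A": 1, "B": 0, "Y": 0},
    {"A": 0, "B": 0, "Y": 0},
]

def is_necessary(cases, condition, outcome="Y"):
    """True if every case showing the outcome also shows the condition."""
    return all(c[condition] == 1 for c in cases if c[outcome] == 1)

def is_sufficient(cases, condition, outcome="Y"):
    """True if every case showing the condition also shows the outcome."""
    return all(c[outcome] == 1 for c in cases if c[condition] == 1)

for name in ("A", "B"):
    print(name, "necessary:", is_necessary(cases, name),
          "sufficient:", is_sufficient(cases, name))
```

In this toy data set, A is necessary but not sufficient, while B is sufficient but not necessary, which shows why the two properties have to be assessed separately.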

Additionally, the researcher examines the truth table to assess whether all logically possible configurations have empiric cases. As described above, when configurations lack cases, the problem of limited diversity occurs. Configurations without representative cases are known as logical remainders, and the researcher must consider how to deal with those. The analysis of logical remainders depends on the particular theory guiding the research and the research priorities. How a researcher manages the logical remainders has implications for the final solution, but none of the solutions based on the truth table will contradict the empirical evidence [ 14 ]. To generate the most conservative solution term, a researcher makes no assumptions about truth table rows with no cases (or very few cases in larger N studies) and excludes them from the logical minimization process. Alternately, a researcher can choose to include (or exclude) rows with no cases from analysis, which would generate a solution that is a superset of the conservative solution. Choosing inclusion criteria for logical remainders also depends on theory and what may be empirically possible. For example, in studying governments, it would be unlikely to have a case that is a democracy (“condition A”), but has a dictator (“condition B”). In that circumstance, the researcher may choose to exclude that theoretically implausible row from the logical minimization process.
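Logical remainders can be listed mechanically by enumerating every possible configuration and removing those observed in the data. The sketch below assumes three crisp conditions and three hypothetical observed configurations; the plausibility rule mirrors the democracy and dictator example, with A and B as the two incompatible conditions.

```python
from itertools import product

conditions = ["A", "B", "C"]
# Hypothetical configurations that have at least one empirical case.
observed = {(1, 0, 0), (1, 0, 1), (0, 1, 1)}

# All 2**k logically possible configurations for k crisp conditions.
all_configurations = set(product([0, 1], repeat=len(conditions)))
logical_remainders = sorted(all_configurations - observed)

def is_plausible(configuration):
    """Theory-based rule (assumed): A and B cannot both be present."""
    a, b, _ = configuration
    return not (a == 1 and b == 1)

plausible_remainders = [c for c in logical_remainders if is_plausible(c)]
print(logical_remainders)
print(plausible_remainders)
```

Only the plausible remainders would be candidates for inclusion in the minimization; the conservative solution would exclude all five remainders.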

Third, once all the solutions have been identified, the researcher mathematically reduces the solution [ 1 , 14 ]. For example, if the list of solutions contains two configurations that are identical except that A is absent in one and present in the other, then A can be dropped from those two solutions. Finally, the researcher computes two parameters of fit: coverage and consistency. Coverage determines the empirical relevance of a solution and quantifies the variation in causal pathways to an outcome [ 14 ]. The higher the coverage of a causal pathway, the more common the solution is and the more of the outcome the pathway accounts for. However, maximum coverage may be less critical in implementation research because understanding all of the pathways to success may be as helpful as understanding the most common pathway. Consistency assesses whether the causal pathway produces the outcome regularly (“the degree to which the empirical data are in line with a postulated subset relation,” p. 324 [ 14 ]); a high consistency value (e.g., 1.00 or 100 %) would indicate that all cases in a causal pathway produced the outcome. A low consistency value would suggest that a particular pathway was not successful in producing the outcome on a regular basis, and thus, for translational purposes, should not be recommended for policy or practice changes. A causal pathway with high consistency and coverage values indicates a result useful for providing guidance; a pathway with high consistency but lower coverage also has value in showing a causal pathway that successfully produced the outcome, but did so less frequently.
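The reduction rule and the two parameters of fit can be sketched for crisp sets as follows. This is a single pairwise merge, not a full minimization algorithm, and the cases are hypothetical; a dropped condition is marked with "-".

```python
# Hypothetical crisp-set data: one tuple (A, B, C, outcome) per case.
cases = [
    (1, 1, 0, 1),
    (0, 1, 0, 1),
    (0, 1, 0, 1),
    (1, 1, 1, 0),
    (1, 0, 1, 1),
]

def merge(config_1, config_2):
    """Merge two configurations that differ in exactly one condition, else None."""
    diffs = [i for i, (a, b) in enumerate(zip(config_1, config_2)) if a != b]
    if len(diffs) != 1:
        return None
    merged = list(config_1)
    merged[diffs[0]] = "-"  # this condition is irrelevant to the pathway
    return tuple(merged)

def matches(pathway, config):
    """A case matches when it agrees with every condition the pathway retains."""
    return all(p == "-" or p == c for p, c in zip(pathway, config))

def fit(pathway, cases):
    """Return (consistency, coverage); assumes at least one matching case."""
    in_path = [outcome for *config, outcome in cases if matches(pathway, config)]
    n_outcome = sum(outcome for *_, outcome in cases)
    consistency = sum(in_path) / len(in_path)  # share of pathway cases with outcome
    coverage = sum(in_path) / n_outcome        # share of outcome cases on pathway
    return consistency, coverage

pathway = merge((1, 1, 0), (0, 1, 0))
consistency, coverage = fit(pathway, cases)
print(pathway, consistency, coverage)
```

Here the merged pathway has perfect consistency but covers only three of the four cases with the outcome, so at least one other pathway to the outcome remains.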

For example, Kahwati et al. [ 9 ] examined their truth table and analyzed the data for single conditions and combinations of conditions that were necessary for higher or lower facility-level patient weight loss outcomes. The truth table analysis revealed two necessary conditions and four sufficient combinations of conditions. Because of significant challenges with logical remainders, they used a bottom-up approach to assess whether combinations of conditions yielded the outcome. This entailed pairing conditions to ensure parsimony and maximize coverage. With a smaller number of conditions, a researcher could hypothetically find that more cases share similar characteristics and could assess whether those cases exhibit the same outcome of interest.

At the completion of the truth table analysis, Kahwati et al. [ 9 ] used the qualitative data from site interviews to provide rich examples that illustrated the QCA solutions and explained what those solutions meant in clinical practice for weight management. For example, having an involved champion (usually a physician), in combination with low facility accountability, was sufficient for program success (i.e., better facility-level weight loss outcomes). In reviewing the qualitative data, Kahwati et al. [ 9 ] discovered that involved champions integrate program activities into their clinical routines and discuss issues as they arise with other program staff. Because involved champions and other program staff communicated informally on a regular basis, formal accountability structures were less of a priority.

ADVANTAGES AND LIMITATIONS OF QCA

Because translational (and other health-related) researchers may be interested in which intervention features—alone or in combination—achieve distinct outcomes (e.g., achievement of program outcomes, reduction in health disparities), QCA is well suited for translational research. To assess combinations of variables in regression, a researcher relies on interaction effects, which, although useful, become difficult to interpret when three, four, or more variables are combined. Furthermore, in regression and other variable-oriented approaches, independent variables are held constant at the average across the study population to isolate the independent effect of that variable, but this masks how factors may interact with each other in ways that impact the ultimate outcomes. In translational research, context matters and QCA treats each case holistically, allowing each case to keep its own values for each condition.

Multiple case studies or studies with the organization as the unit of analysis often involve a small or intermediate number of cases. This hinders the use of standard statistical analyses; researchers are less likely to find statistical significance with small sample sizes. However, QCA draws on analyses of set relations to support small-N studies and to identify the conditions or combinations of conditions that are necessary or sufficient for an outcome of interest and may yield results when probabilistic methods cannot.

Finally, QCA is based on an asymmetric concept of causation, which means that the absence of a sufficient condition associated with an outcome does not necessarily describe the causal pathway to the absence of the outcome [ 14 ]. These characteristics can be helpful for translational researchers who are trying to study or implement complex interventions, where more than one way to implement a program might be effective and where studying both effective and ineffective implementation practices can yield useful information.

QCA has several limitations that researchers should consider before choosing it as a potential methodological approach. With small- and intermediate-N studies, QCA must be theory-driven and circumscribed by priority questions. That is, a researcher ideally should not use a “kitchen sink” approach to test every conceivable condition or combination of conditions because the number of combinations increases exponentially with the addition of another condition. With a small number of cases and too many conditions, the sample would not have enough cases to provide examples of all the possible configurations of conditions (i.e., limited diversity), or the analysis would be constrained to describing the characteristics of the cases, which would have less value than determining whether some conditions or some combination of conditions led to actual program success. However, if the number of conditions cannot be reduced, alternate QCA techniques, such as a bottom-up approach to QCA or two-step QCA, can be used [ 14 ].
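The arithmetic behind limited diversity is simple enough to sketch. With k crisp conditions there are 2^k possible configurations, so a study with N cases leaves at least 2^k - N configurations unobserved whenever that number is positive. The function name and the example figures below are illustrative.

```python
def min_logical_remainders(n_conditions, n_cases):
    """Lower bound on unobserved configurations for crisp conditions.

    Each case fills at most one truth table row, so at least
    2**n_conditions - n_cases rows must remain empty.
    """
    return max(0, 2 ** n_conditions - n_cases)

n_cases = 20  # hypothetical small-N study
for k in range(3, 9):
    print(k, 2 ** k, min_logical_remainders(k, n_cases))
```

With 20 cases, moving from four to six conditions raises the guaranteed number of empty rows from 0 to 44, which is why conditions should be chosen on theoretical grounds.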

Another limitation is that the programs or clinical interventions involved in a cross-site analysis may have unique features that make them seem not comparable. Cases must share some degree of comparability to use QCA [ 16 ]. Researchers can manage this challenge by taking a broader view of the program(s) and comparing them on broader characteristics or concepts, such as high/low organizational capacity, established partnerships, and program planning, if these would provide meaningful conclusions. Taking this approach will require careful definition of each of these concepts within the context of a particular initiative. Definitions may also need to be revised as the data are gathered and calibration begins.

Finally, as mentioned above, crisp set calibration dichotomizes conditions of interest; this form of calibration means that in some cases, the finer grained differences and precision in a condition may be lost [ 3 ]. Crisp set calibration provides more easily interpretable and actionable results and is appropriate if researchers are primarily interested in the presence or absence of a particular program feature or organizational characteristic to understand translation or implementation.

QCA offers an additional methodological approach for researchers to conduct rigorous comparative analyses while drawing on the rich, detailed data collected as part of a case study. However, as Rihoux, Benoit, and Ragin [ 17 ] note, QCA is not a miracle method, nor a panacea for all studies that use case study methods. Furthermore, it may not always be the most suitable approach for certain types of translational and implementation research. We outlined the multiple steps needed to conduct a comprehensive QCA. QCA is a good approach for the examination of causal complexity and equifinality, and it could be helpful to behavioral medicine researchers who seek to translate evidence-based interventions into real-world settings. In reality, multiple program models can lead to success, and this method accommodates a more complex and varied understanding of these patterns and factors.

Implications

Practice : Identifying multiple successful intervention models (equifinality) can aid in selecting a practice model relevant to a context, and can facilitate implementation.

Policy : QCA can be used to develop actionable policy information for decision makers that accommodates contextual factors.

Research : Researchers can use QCA to understand causal complexity in translational or implementation research and to assess the relationships between policies, interventions, or procedures and successful outcomes.

What is Comparative Analysis and How to Conduct It? (+ Examples)

Appinio Research · 30.10.2023 · 36min read

Have you ever faced a complex decision, wondering how to make the best choice among multiple options? In a world filled with data and possibilities, the art of comparative analysis holds the key to unlocking clarity amidst the chaos.

In this guide, we'll demystify the power of comparative analysis, revealing its practical applications, methodologies, and best practices. Whether you're a business leader, researcher, or simply someone seeking to make more informed decisions, join us as we explore the intricacies of comparative analysis and equip you with the tools to chart your course with confidence.

What is Comparative Analysis?

Comparative analysis is a systematic approach used to evaluate and compare two or more entities, variables, or options to identify similarities, differences, and patterns. It involves assessing the strengths, weaknesses, opportunities, and threats associated with each entity or option to make informed decisions.

The primary purpose of comparative analysis is to provide a structured framework for decision-making by:

- Facilitating Informed Choices: Comparative analysis equips decision-makers with data-driven insights, enabling them to make well-informed choices among multiple options.

- Identifying Trends and Patterns: It helps identify recurring trends, patterns, and relationships among entities or variables, shedding light on underlying factors influencing outcomes.

- Supporting Problem Solving: Comparative analysis aids in solving complex problems by systematically breaking them down into manageable components and evaluating potential solutions.

- Enhancing Transparency: By comparing multiple options, comparative analysis promotes transparency in decision-making processes, allowing stakeholders to understand the rationale behind choices.

- Mitigating Risks: It helps assess the risks associated with each option, allowing organizations to develop risk mitigation strategies and make risk-aware decisions.

- Optimizing Resource Allocation: Comparative analysis assists in allocating resources efficiently by identifying areas where resources can be optimized for maximum impact.

- Driving Continuous Improvement: By comparing current performance with historical data or benchmarks, organizations can identify improvement areas and implement growth strategies.

Importance of Comparative Analysis in Decision-Making

- Data-Driven Decision-Making: Comparative analysis relies on empirical data and objective evaluation, reducing the influence of biases and subjective judgments in decision-making. It ensures decisions are based on facts and evidence.

- Objective Assessment: It provides an objective and structured framework for evaluating options, allowing decision-makers to focus on key criteria and avoid making decisions solely based on intuition or preferences.

- Risk Assessment: Comparative analysis helps assess and quantify risks associated with different options. This risk awareness enables organizations to make proactive risk management decisions.

- Prioritization: By ranking options based on predefined criteria, comparative analysis enables decision-makers to prioritize actions or investments, directing resources to areas with the most significant impact.

- Strategic Planning: It is integral to strategic planning, helping organizations align their decisions with overarching goals and objectives. Comparative analysis ensures decisions are consistent with long-term strategies.

- Resource Allocation: Organizations often have limited resources. Comparative analysis assists in allocating these resources effectively, ensuring they are directed toward initiatives with the highest potential returns.

- Continuous Improvement: Comparative analysis supports a culture of continuous improvement by identifying areas for enhancement and guiding iterative decision-making processes.

- Stakeholder Communication: It enhances transparency in decision-making, making it easier to communicate decisions to stakeholders. Stakeholders can better understand the rationale behind choices when supported by comparative analysis.

- Competitive Advantage: In business and competitive environments, comparative analysis can provide a competitive edge by identifying opportunities to outperform competitors or address weaknesses.

- Informed Innovation: When evaluating new products, technologies, or strategies, comparative analysis guides the selection of the most promising options, reducing the risk of investing in unsuccessful ventures.

In summary, comparative analysis is a valuable tool that empowers decision-makers across various domains to make informed, data-driven choices, manage risks, allocate resources effectively, and drive continuous improvement. Its structured approach enhances decision quality and transparency, contributing to the success and competitiveness of organizations and research endeavors.

How to Prepare for Comparative Analysis?

1. Define Objectives and Scope

Before you begin your comparative analysis, clearly defining your objectives and the scope of your analysis is essential. This step lays the foundation for the entire process. Here's how to approach it:

- Identify Your Goals: Start by asking yourself what you aim to achieve with your comparative analysis. Are you trying to choose between two products for your business? Are you evaluating potential investment opportunities? Knowing your objectives will help you stay focused throughout the analysis.

- Define Scope: Determine the boundaries of your comparison. What will you include, and what will you exclude? For example, if you're analyzing market entry strategies for a new product, specify whether you're looking at a specific geographic region or a particular target audience.

- Stakeholder Alignment: Ensure that all stakeholders involved in the analysis understand and agree on the objectives and scope. This alignment will prevent misunderstandings and ensure the analysis meets everyone's expectations.

2. Gather Relevant Data and Information

The quality of your comparative analysis heavily depends on the data and information you gather. Here's how to approach this crucial step:

- Data Sources: Identify where you'll obtain the necessary data. Will you rely on primary sources, such as surveys and interviews, to collect original data? Or will you use secondary sources, like published research and industry reports, to access existing data? Consider the advantages and disadvantages of each source.

- Data Collection Plan: Develop a plan for collecting data. This should include details about the methods you'll use, the timeline for data collection, and who will be responsible for gathering the data.

- Data Relevance: Ensure that the data you collect is directly relevant to your objectives. Irrelevant or extraneous data can lead to confusion and distract from the core analysis.

3. Select Appropriate Criteria for Comparison

Choosing the right criteria for comparison is critical to a successful comparative analysis. Here's how to go about it:

- Relevance to Objectives: Your chosen criteria should align closely with your analysis objectives. For example, if you're comparing job candidates, your criteria might include skills, experience, and cultural fit.

- Measurability: Consider whether you can quantify the criteria. Measurable criteria are easier to analyze. If you're comparing marketing campaigns, you might measure criteria like click-through rates, conversion rates, and return on investment.

- Weighting Criteria: Not all criteria are equally important. You'll need to assign weights to each criterion based on its relative importance. Weighting helps ensure that the most critical factors have a more significant impact on the final decision.

4. Establish a Clear Framework

Once you have your objectives, data, and criteria in place, it's time to establish a clear framework for your comparative analysis. This framework will guide your process and ensure consistency. Here's how to do it:

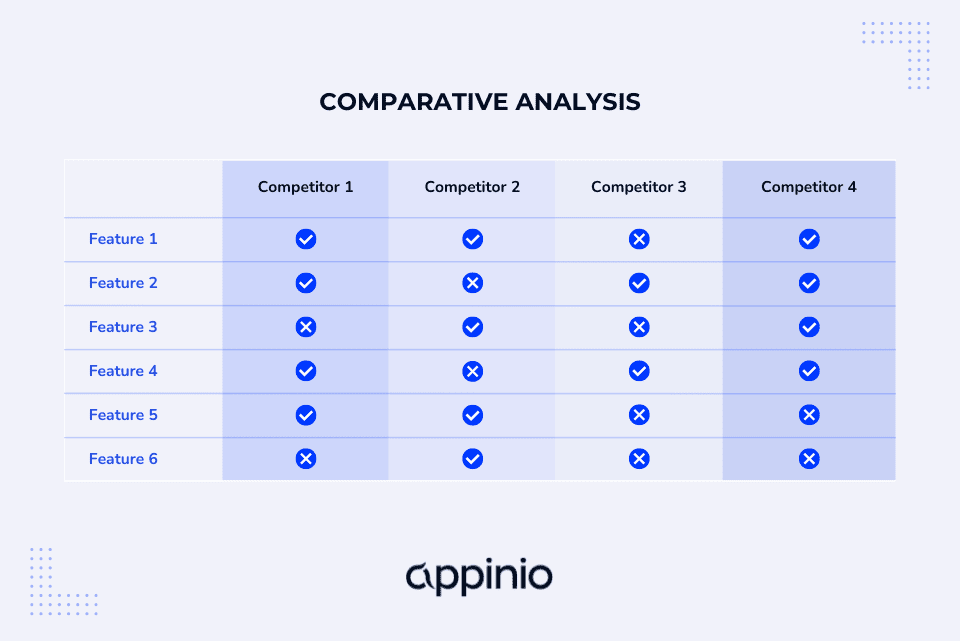

- Comparative Matrix: Consider using a comparative matrix or spreadsheet to organize your data. Each row in the matrix represents an option or entity you're comparing, and each column corresponds to a criterion. This visual representation makes it easy to compare and contrast data.

- Timeline: Determine the time frame for your analysis. Is it a one-time comparison, or will you conduct ongoing analyses? Having a defined timeline helps you manage the analysis process efficiently.

- Define Metrics: Specify the metrics or scoring system you'll use to evaluate each criterion. For example, if you're comparing potential office locations, you might use a scoring system from 1 to 5 for factors like cost, accessibility, and amenities.
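To make the framework concrete, here's a minimal sketch of a comparative matrix with weighted 1-to-5 scores, using the office location example. The locations, scores, and weights are made up for illustration, and the weights are assumed to sum to 1.

```python
# Hypothetical weights per criterion (assumed to sum to 1).
weights = {"cost": 0.5, "accessibility": 0.3, "amenities": 0.2}

# Comparative matrix: one row per option, one column per criterion (1-5 scale).
scores = {
    "Location A": {"cost": 4, "accessibility": 3, "amenities": 5},
    "Location B": {"cost": 2, "accessibility": 5, "amenities": 4},
}

def weighted_score(option_scores, weights):
    """Sum of each criterion score multiplied by its weight."""
    return sum(weights[criterion] * option_scores[criterion] for criterion in weights)

ranking = sorted(scores, key=lambda option: weighted_score(scores[option], weights),
                 reverse=True)
for option in ranking:
    print(option, round(weighted_score(scores[option], weights), 2))
```

Changing the weights can flip the ranking, so it's worth agreeing on them with stakeholders before anyone sees the scores.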

With your objectives, data, criteria, and framework established, you're ready to move on to the next phase of comparative analysis: data collection and organization.

Comparative Analysis Data Collection

Data collection and organization are critical steps in the comparative analysis process. We'll explore how to gather and structure the data you need for a successful analysis.

1. Utilize Primary Data Sources

Primary data sources involve gathering original data directly from the source. This approach offers unique advantages, allowing you to tailor your data collection to your specific research needs.

Some popular primary data sources include:

- Surveys and Questionnaires: Design surveys or questionnaires and distribute them to collect specific information from individuals or groups. This method is ideal for obtaining firsthand insights, such as customer preferences or employee feedback.

- Interviews: Conduct structured interviews with relevant stakeholders or experts. Interviews provide an opportunity to delve deeper into subjects and gather qualitative data, making them valuable for in-depth analysis.

- Observations: Directly observe and record data from real-world events or settings. Observational data can be instrumental in fields like anthropology, ethnography, and environmental studies.

- Experiments: In controlled environments, experiments allow you to manipulate variables and measure their effects. This method is common in scientific research and product testing.

When using primary data sources, consider factors like sample size, survey design, and data collection methods to ensure the reliability and validity of your data.

2. Harness Secondary Data Sources

Secondary data sources involve using existing data collected by others. These sources can provide a wealth of information and save time and resources compared to primary data collection.

Here are common types of secondary data sources:

- Public Records: Government publications, census data, and official reports offer valuable information on demographics, economic trends, and public policies. They are often free and readily accessible.

- Academic Journals: Scholarly articles provide in-depth research findings across various disciplines. They are helpful for accessing peer-reviewed studies and staying current with academic discourse.

- Industry Reports: Industry-specific reports and market research publications offer insights into market trends, consumer behavior, and competitive landscapes. They are essential for businesses making strategic decisions.

- Online Databases: Online platforms like Statista, PubMed, and Google Scholar provide a vast repository of data and research articles. They offer search capabilities and access to a wide range of data sets.

When using secondary data sources, critically assess the credibility, relevance, and timeliness of the data. Ensure that it aligns with your research objectives.

3. Ensure and Validate Data Quality

Data quality is paramount in comparative analysis. Poor-quality data can lead to inaccurate conclusions and flawed decision-making. Here's how to ensure data validation and reliability:

- Cross-Verification: Whenever possible, cross-verify data from multiple sources. Consistency among different sources enhances the reliability of the data.

- Sample Size: Ensure that your sample is large enough to support meaningful analysis. A small sample may not accurately represent the population.

- Data Integrity: Check for data integrity issues, such as missing values, outliers, or duplicate entries. Address these issues before analysis to maintain data quality.

- Data Source Reliability: Assess the reliability and credibility of the data sources themselves. Consider factors like the reputation of the institution or organization providing the data.
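The integrity checks above can be sketched in a few lines. This example assumes records stored as dictionaries with hypothetical `id` and `revenue` fields, and it flags outliers with a median absolute deviation rule, which is only one of several reasonable choices; the cutoff `k=3.0` is an assumption.

```python
from statistics import median

# Hypothetical records with one missing value, one duplicate, and one outlier.
records = [
    {"id": 1, "revenue": 100.0},
    {"id": 2, "revenue": None},
    {"id": 3, "revenue": 110.0},
    {"id": 3, "revenue": 110.0},
    {"id": 4, "revenue": 95.0},
    {"id": 5, "revenue": 1000.0},
]

def find_missing(records, field):
    """Return the ids of records where the field has no value."""
    return [r["id"] for r in records if r[field] is None]

def find_duplicates(records):
    """Return the ids of records that repeat an earlier (id, revenue) pair."""
    seen, duplicates = set(), []
    for r in records:
        key = (r["id"], r["revenue"])
        if key in seen:
            duplicates.append(r["id"])
        seen.add(key)
    return duplicates

def find_outliers(values, k=3.0):
    """Flag values more than k median absolute deviations from the median."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    return [v for v in values if abs(v - center) > k * mad]

values = [r["revenue"] for r in records if r["revenue"] is not None]
print(find_missing(records, "revenue"))
print(find_duplicates(records))
print(find_outliers(values))
```

Flagged values are candidates for review, not automatic deletions; an extreme value may be a data entry error or a real and important case.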

4. Organize Data Effectively

Structuring your data for comparison is a critical step in the analysis process. Organized data makes it easier to draw insights and make informed decisions. Here's how to structure data effectively:

- Data Cleaning: Before analysis, clean your data to remove inconsistencies, errors, and irrelevant information. Data cleaning may involve data transformation, imputation of missing values, and removing outliers.

- Normalization: Standardize data to ensure fair comparisons. Normalization adjusts data to a standard scale, making it possible to compare variables with different units or ranges.

- Variable Labeling: Clearly label variables and data points for easy identification. Proper labeling enhances the transparency and understandability of your analysis.

- Data Organization: Organize data into a format that suits your analysis methods. For quantitative analysis, this might mean creating a matrix, while qualitative analysis may involve categorizing data into themes.
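As one example of normalization, here's a minimal min-max scaling sketch that maps each variable onto a 0-to-1 range. The price and rating figures are invented, and min-max scaling is just one option (z-scores are another).

```python
def min_max_normalize(values):
    """Rescale values to the range 0-1; constant inputs map to 0.0."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        return [0.0 for _ in values]  # avoid dividing by zero
    return [(v - lowest) / (highest - lowest) for v in values]

# Hypothetical variables measured in different units.
monthly_rent = [1000, 1500, 2000]   # currency units
satisfaction = [3, 4, 5]            # 1-5 rating

print(min_max_normalize(monthly_rent))
print(min_max_normalize(satisfaction))
```

After scaling, both variables sit on the same range, so they can be combined or plotted side by side without the larger unit dominating.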

By paying careful attention to data collection, validation, and organization, you'll set the stage for a robust and insightful comparative analysis. Next, we'll explore various methodologies you can employ in your analysis, ranging from qualitative approaches to quantitative methods and examples.

Comparative Analysis Methods

When it comes to comparative analysis, various methodologies are available, each suited to different research goals and data types. In this section, we'll explore five prominent methodologies in detail.

Qualitative Comparative Analysis (QCA)

Qualitative Comparative Analysis (QCA) is a methodology often used when dealing with complex, non-linear relationships among variables. It seeks to identify patterns and configurations among factors that lead to specific outcomes.

- Case-by-Case Analysis: QCA involves evaluating individual cases (e.g., organizations, regions, or events) rather than analyzing aggregate data. Each case's unique characteristics are considered.

- Boolean Logic: QCA employs Boolean algebra to analyze data. Variables are categorized as either present or absent, allowing for the examination of different combinations and logical relationships.

- Necessary and Sufficient Conditions: QCA aims to identify necessary and sufficient conditions for a specific outcome to occur. It helps answer questions like, "What conditions are necessary for a successful product launch?"

- Fuzzy Set Theory: In some cases, QCA may use fuzzy set theory to account for degrees of membership in a category, allowing for more nuanced analysis.
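For fuzzy sets, where each case has a membership score between 0 and 1, a commonly used measure of how consistently a condition X is sufficient for an outcome Y is the sum of min(x, y) across cases divided by the sum of x; dividing by the sum of y instead gives coverage. The sketch below applies those formulas to made-up membership scores.

```python
def fuzzy_consistency(x, y):
    """Consistency of 'X is sufficient for Y': sum(min(x, y)) / sum(x)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(x)

def fuzzy_coverage(x, y):
    """Coverage of Y by X: sum(min(x, y)) / sum(y)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(y)

# Hypothetical membership scores for four cases.
condition = [0.8, 0.6, 0.2, 0.4]
outcome = [1.0, 0.6, 0.4, 0.2]

print(round(fuzzy_consistency(condition, outcome), 3))
print(round(fuzzy_coverage(condition, outcome), 3))
```

When every case's membership in the condition is at or below its membership in the outcome, consistency reaches 1; the last case here breaks that pattern and pulls the value down.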

QCA is particularly useful in fields such as sociology, political science, and organizational studies, where understanding complex interactions is essential.

Quantitative Comparative Analysis

Quantitative Comparative Analysis involves the use of numerical data and statistical techniques to compare and analyze variables. It's suitable for situations where data is quantitative, and relationships can be expressed numerically.

- Statistical Tools: Quantitative comparative analysis relies on statistical methods like regression analysis, correlation, and hypothesis testing. These tools help identify relationships, dependencies, and trends within datasets.

- Data Measurement: Ensure that variables are measured consistently using appropriate scales (e.g., ordinal, interval, ratio) for meaningful analysis. Variables may include numerical values like revenue, customer satisfaction scores, or product performance metrics.

- Data Visualization: Create visual representations of data using charts, graphs, and plots. Visualization aids in understanding complex relationships and presenting findings effectively.

- Statistical Significance: Assess the statistical significance of relationships. A statistically significant result is one that would be unlikely to arise from chance alone, which gives you more confidence that an observed difference or relationship is real.
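To show what a significance check can look like in practice, here's a small sketch of an exact permutation test comparing the means of two groups. The data are invented, and this test is just one of many options; it enumerates every possible regrouping, so it only suits small samples.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(group_a, group_b):
    """Two-sided exact permutation test on the difference in means."""
    pooled = group_a + group_b
    observed = abs(mean(group_a) - mean(group_b))
    as_extreme = total = 0
    # Try every way of assigning len(group_a) pooled values to group A.
    for indices in combinations(range(len(pooled)), len(group_a)):
        chosen = set(indices)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point ties.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            as_extreme += 1
    return as_extreme / total

# Hypothetical conversion counts for two marketing campaigns.
campaign_a = [12, 14, 15, 16]
campaign_b = [8, 9, 10, 11]

p_value = permutation_p_value(campaign_a, campaign_b)
print(round(p_value, 4))
```

The p-value is the share of regroupings that produce a difference at least as large as the one observed; a small value suggests the gap between campaigns is unlikely to be a fluke of how the data happened to be split.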

Quantitative comparative analysis is commonly applied in economics, social sciences, and market research to draw empirical conclusions from numerical data.

Case Studies

Case studies involve in-depth examinations of specific instances or cases to gain insights into real-world scenarios. Comparative case studies allow researchers to compare and contrast multiple cases to identify patterns, differences, and lessons.

- Narrative Analysis: Case studies often involve narrative analysis, where researchers construct detailed narratives of each case, including context, events, and outcomes.

- Contextual Understanding: In comparative case studies, it's crucial to consider the context within which each case operates. Understanding the context helps interpret findings accurately.

- Cross-Case Analysis: Researchers conduct cross-case analysis to identify commonalities and differences across cases. This process can lead to the discovery of factors that influence outcomes.

- Triangulation: To enhance the validity of findings, researchers may use multiple data sources and methods to triangulate information and ensure reliability.

Case studies are prevalent in fields like psychology, business, and sociology, where deep insights into specific situations are valuable.

SWOT Analysis

SWOT Analysis is a strategic tool used to assess the Strengths, Weaknesses, Opportunities, and Threats associated with a particular entity or situation. While it's commonly used in business, it can be adapted for various comparative analyses.

- Internal and External Factors: SWOT Analysis examines both internal factors (Strengths and Weaknesses), such as organizational capabilities, and external factors (Opportunities and Threats), such as market conditions and competition.

- Strategic Planning: The insights from SWOT Analysis inform strategic decision-making. By identifying strengths and opportunities, organizations can leverage their advantages. Likewise, addressing weaknesses and threats helps mitigate risks.

- Visual Representation: SWOT Analysis is often presented as a matrix or a 2x2 grid, making it visually accessible and easy to communicate to stakeholders.

- Continuous Monitoring: SWOT Analysis is not a one-time exercise. Organizations use it periodically to adapt to changing circumstances and make informed decisions.

SWOT Analysis is versatile and can be applied in business, healthcare, education, and any context where a structured assessment of factors is needed.

Benchmarking

Benchmarking involves comparing an entity's performance, processes, or practices to those of industry leaders or best-in-class organizations. It's a powerful tool for continuous improvement and competitive analysis.

- Identify Performance Gaps: Benchmarking helps identify areas where an entity lags behind its peers or industry standards. These performance gaps highlight opportunities for improvement.

- Data Collection: Gather data on key performance metrics from both internal and external sources. This data collection phase is crucial for meaningful comparisons.

- Comparative Analysis: Compare your organization's performance data with that of benchmark organizations. This analysis can reveal where you excel and where adjustments are needed.

- Continuous Improvement: Benchmarking is a dynamic process that encourages continuous improvement. Organizations use benchmarking findings to set performance goals and refine their strategies.
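Identifying performance gaps can be as simple as subtracting benchmark values from your own, as long as you account for metrics where lower is better. The metric names and figures in this sketch are hypothetical.

```python
# Hypothetical performance data for your organization and a benchmark peer.
own = {"on_time_delivery": 0.91, "defect_rate": 0.04, "satisfaction": 4.6}
benchmark = {"on_time_delivery": 0.97, "defect_rate": 0.02, "satisfaction": 4.5}
higher_is_better = {"on_time_delivery": True, "defect_rate": False, "satisfaction": True}

def performance_gaps(own, benchmark, higher_is_better):
    """Return signed gaps per metric; a negative gap means lagging the benchmark."""
    gaps = {}
    for metric, target in benchmark.items():
        difference = own[metric] - target
        gaps[metric] = difference if higher_is_better[metric] else -difference
    return gaps

gaps = performance_gaps(own, benchmark, higher_is_better)
lagging = [metric for metric, gap in gaps.items() if gap < 0]
print(gaps)
print(lagging)
```

Because the metrics use different units, the gaps show direction rather than comparable magnitude; normalize them first if you want to rank which gap matters most.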

Benchmarking is widely used in business, manufacturing, healthcare, and customer service to drive excellence and competitiveness.

Each of these methodologies brings a unique perspective to comparative analysis, allowing you to choose the one that best aligns with your research objectives and the nature of your data. The choice between qualitative and quantitative methods, or a combination of both, depends on the complexity of the analysis and the questions you seek to answer.

How to Conduct Comparative Analysis?

Once you've prepared your data and chosen an appropriate methodology, it's time to dive into the process of conducting a comparative analysis. We will guide you through the essential steps to extract meaningful insights from your data.

1. Identify Key Variables and Metrics

Identifying key variables and metrics is the first crucial step in conducting a comparative analysis. These are the factors or indicators you'll use to assess and compare your options.

- Relevance to Objectives: Ensure the chosen variables and metrics align closely with your analysis objectives. When comparing marketing strategies, relevant metrics might include customer acquisition cost, conversion rate, and retention.

- Quantitative vs. Qualitative: Decide whether your analysis will focus on quantitative data (numbers) or qualitative data (descriptive information). In some cases, a combination of both may be appropriate.

- Data Availability: Consider the availability of data. Ensure you can access reliable and up-to-date data for all selected variables and metrics.

- KPIs: Key Performance Indicators (KPIs) are often used as the primary metrics in comparative analysis. These are metrics that directly relate to your goals and objectives.
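To make the marketing example above concrete, the sketch below computes customer acquisition cost, conversion rate, and retention for two campaigns. The `Campaign` class, its field names, the 90-day retention window, and all figures are assumptions made for illustration; your own definitions of these metrics may differ.

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    """Hypothetical record of one marketing strategy's raw results."""
    name: str
    spend: float             # total marketing spend
    visitors: int            # people reached who could have converted
    new_customers: int       # visitors who became customers
    retained_after_90d: int  # new customers still active after 90 days

    @property
    def acquisition_cost(self) -> float:
        return self.spend / self.new_customers

    @property
    def conversion_rate(self) -> float:
        return self.new_customers / self.visitors

    @property
    def retention_rate(self) -> float:
        return self.retained_after_90d / self.new_customers


campaigns = [
    Campaign("Email", 5000.0, 20000, 250, 200),
    Campaign("Paid search", 12000.0, 30000, 400, 260),
]

for c in campaigns:
    print(
        f"{c.name:12s} CAC={c.acquisition_cost:7.2f} "
        f"conversion={c.conversion_rate:6.2%} retention={c.retention_rate:5.1%}"
    )

cheapest = min(campaigns, key=lambda c: c.acquisition_cost)
```

Deriving every metric from the same raw counts keeps the definitions identical across the options being compared.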

2. Visualize Data for Clarity

Data visualization techniques play a vital role in making complex information more accessible and understandable. Effective data visualization allows you to convey insights and patterns to stakeholders. Consider the following approaches:

- Charts and Graphs: Use various types of charts, such as bar charts, line graphs, and pie charts, to represent data. For example, a line graph can illustrate trends over time, while a bar chart can compare values across categories.

- Heatmaps: Heatmaps are particularly useful for visualizing large datasets and identifying patterns through color-coding. They can reveal correlations, concentrations, and outliers.

- Scatter Plots: Scatter plots help visualize relationships between two variables. They are especially useful for identifying trends, clusters, or outliers.

- Dashboards: Create interactive dashboards that allow users to explore data and customize views. Dashboards are valuable for ongoing analysis and reporting.

- Infographics: For presentations and reports, consider using infographics to summarize key findings in a visually engaging format.

Effective data visualization not only enhances understanding but also aids in decision-making by providing clear insights at a glance.

3. Establish Clear Comparative Frameworks

A well-structured comparative framework provides a systematic approach to your analysis. It ensures consistency and enables you to make meaningful comparisons. Here's how to create one:

- Comparison Matrices: Consider using matrices or spreadsheets to organize your data. Each row represents an option or entity, and each column corresponds to a variable or metric. This matrix format allows for side-by-side comparisons.

- Decision Trees: In complex decision-making scenarios, decision trees help map out possible outcomes based on different criteria and variables. They visualize the decision-making process.

- Scenario Analysis: Explore different scenarios by altering variables or criteria to understand how changes impact outcomes. Scenario analysis is valuable for risk assessment and planning.

- Checklists: Develop checklists or scoring sheets to systematically evaluate each option against predefined criteria. Checklists ensure that no essential factors are overlooked.

A well-structured comparative framework simplifies the analysis process, making it easier to draw meaningful conclusions and make informed decisions.
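A comparison matrix of the kind described above can be represented directly in code. The following is a minimal sketch with made-up vendors and criteria: each row is an option, each column a criterion, and a small helper reports the best option per column. The set `lower_is_better` is an assumption introduced here to record the direction of each criterion.

```python
# Columns of the matrix (hypothetical criteria).
criteria = ["price_usd", "delivery_days", "quality_rating"]
# Criteria where a smaller value is preferable.
lower_is_better = {"price_usd", "delivery_days"}

# Rows of the matrix: one list of values per option, in column order.
matrix = {
    "Vendor A": [1200, 14, 4.2],
    "Vendor B": [1500, 7, 4.6],
    "Vendor C": [1100, 21, 3.9],
}


def best_per_criterion(matrix, criteria, lower_is_better):
    """Return {criterion: best option}, respecting each criterion's direction."""
    best = {}
    for col, name in enumerate(criteria):
        pick = min if name in lower_is_better else max
        best[name] = pick(matrix, key=lambda option: matrix[option][col])
    return best


# Print the matrix for a side-by-side view.
print(f"{'option':10s}" + "".join(f"{c:>16s}" for c in criteria))
for option, values in matrix.items():
    print(f"{option:10s}" + "".join(f"{v:>16}" for v in values))

best = best_per_criterion(matrix, criteria, lower_is_better)
print(best)
```

In this example no single vendor wins every column, which is the usual situation and the reason the scoring and weighting steps that follow are needed.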

4. Evaluate and Score Criteria

Evaluating and scoring criteria is a critical step in comparative analysis, as it quantifies the performance of each option against the chosen criteria.

- Scoring System: Define a scoring system that assigns values to each criterion for every option. Common scoring systems include numerical scales, percentage scores, or qualitative ratings (e.g., high, medium, low).

- Consistency: Ensure consistency in scoring by defining clear guidelines for each score. Provide examples or descriptions to help evaluators understand what each score represents.

- Data Collection: Collect data or information relevant to each criterion for all options. This may involve quantitative data (e.g., sales figures) or qualitative data (e.g., customer feedback).

- Aggregation: Aggregate the scores for each option to obtain an overall evaluation. This can be done by summing the individual criterion scores or applying weighted averages.

- Normalization: If your criteria use different measurement scales or units, normalize the scores (for example, by rescaling each criterion to a common 0-to-1 range) before aggregating them, so that no criterion dominates simply because of its units.
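As one possible way to carry out the normalization and aggregation steps, the sketch below applies min-max rescaling to each criterion and then sums the normalized scores per option. Min-max rescaling is only one of several normalization choices, and the vendors, criteria, and values are hypothetical.

```python
def min_max_normalize(values, lower_is_better=False):
    """Rescale values to the 0-1 range, where 1 is always the best score."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All options tie on this criterion, so give each the same score.
        return [1.0 for _ in values]
    scaled = [(v - lo) / (hi - lo) for v in values]
    return [1.0 - s for s in scaled] if lower_is_better else scaled


options = ["Vendor A", "Vendor B", "Vendor C"]

# Raw data per criterion, listed in the same order as `options`.
criteria = {
    "price_usd": {"values": [1200, 1500, 1100], "lower_is_better": True},
    "delivery_days": {"values": [14, 7, 21], "lower_is_better": True},
    "quality_rating": {"values": [4.2, 4.6, 3.9], "lower_is_better": False},
}

normalized = {
    name: min_max_normalize(spec["values"], spec["lower_is_better"])
    for name, spec in criteria.items()
}

# Unweighted aggregation: sum the normalized scores of each option.
totals = {
    option: sum(normalized[name][i] for name in criteria)
    for i, option in enumerate(options)
}

for option, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{option}: {total:.3f}")
```

Min-max rescaling is sensitive to extreme values, because a single very high or very low figure stretches the range and compresses the differences among the remaining options.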

5. Assign Importance to Criteria

Not all criteria are equally important in a comparative analysis. Weighting criteria allows you to reflect their relative significance in the final decision-making process.

- Relative Importance: Assess the importance of each criterion in achieving your objectives. Criteria directly aligned with your goals may receive higher weights.

- Weighting Methods: Choose a weighting method that suits your analysis. Common methods include expert judgment, analytic hierarchy process (AHP), or data-driven approaches based on historical performance.

- Impact Analysis: Consider how changes in the weights assigned to criteria would affect the final outcome. This sensitivity analysis helps you understand the robustness of your decisions.

- Stakeholder Input: Involve relevant stakeholders or decision-makers in the weighting process. Their input can provide valuable insights and ensure alignment with organizational goals.

- Transparency: Clearly document the rationale behind the assigned weights to maintain transparency in your analysis.

By weighting criteria, you ensure that the most critical factors have a more significant influence on the final evaluation, aligning the analysis more closely with your objectives and priorities.
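The weighting and sensitivity ideas above can be illustrated with a short sketch. It assumes the scores have already been normalized to a 0-1 range; the options, scores, and weights are hypothetical, and `reweight` is a helper introduced here that changes one weight and rescales the others proportionally so that the weights still sum to 1.

```python
# Hypothetical normalized scores (0 = worst, 1 = best) per option and criterion.
scores = {
    "Option A": {"cost": 0.75, "speed": 0.50, "quality": 0.40},
    "Option B": {"cost": 0.00, "speed": 1.00, "quality": 1.00},
    "Option C": {"cost": 1.00, "speed": 0.00, "quality": 0.00},
}
weights = {"cost": 0.5, "speed": 0.2, "quality": 0.3}


def weighted_total(option_scores, weights):
    """Weighted average of one option's scores."""
    total_weight = sum(weights.values())
    return sum(option_scores[c] * w for c, w in weights.items()) / total_weight


def rank(scores, weights):
    """Options ordered from highest to lowest weighted total."""
    return sorted(
        scores, key=lambda o: weighted_total(scores[o], weights), reverse=True
    )


def reweight(weights, criterion, new_weight):
    """Set one weight and rescale the others so the total stays 1."""
    others = {c: w for c, w in weights.items() if c != criterion}
    scale = (1.0 - new_weight) / sum(others.values())
    adjusted = {c: w * scale for c, w in others.items()}
    adjusted[criterion] = new_weight
    return adjusted


print("Baseline ranking:", rank(scores, weights))

# Sensitivity analysis: vary the weight on cost and record the top option.
tops = []
for w in [0.2, 0.35, 0.5, 0.65, 0.8]:
    top = rank(scores, reweight(weights, "cost", w))[0]
    tops.append(top)
    print(f"cost weight {w:.2f} -> top option: {top}")
```

In this made-up example the preferred option changes as the cost weight moves, which signals that the decision is not robust to the weighting and that the rationale for the chosen weights deserves careful documentation.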

With these steps in place, you're well-prepared to conduct a comprehensive comparative analysis. The next phase involves interpreting your findings, drawing conclusions, and making informed decisions based on the insights you've gained.

Comparative Analysis Interpretation

Interpreting the results of your comparative analysis is the phase that turns data into actionable insights. The following points cover the main aspects of interpretation and how to make sense of your findings.

- Contextual Understanding: Before diving into the data, consider the broader context of your analysis. Understand the industry trends, market conditions, and any external factors that may have influenced your results.

- Drawing Conclusions: Summarize your findings clearly and concisely. Identify trends, patterns, and significant differences among the options or variables you've compared.

- Quantitative vs. Qualitative Analysis: Depending on the nature of your data and analysis, you may need to balance both quantitative and qualitative interpretations. Qualitative insights can provide context and nuance to quantitative findings.

- Comparative Visualization: Visual aids such as charts, graphs, and tables can help convey your conclusions effectively. Choose visual representations that align with the nature of your data and the key points you want to emphasize.

- Outliers and Anomalies: Identify and explain any outliers or anomalies in your data. Understanding these exceptions can provide valuable insights into unusual cases or factors affecting your analysis.

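- Cross-Validation: Validate your conclusions by comparing them with external benchmarks, industry standards, or expert opinions. Here the term means checking results against independent sources, not the statistical resampling technique of the same name. This check helps ensure the reliability of your findings.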

- Implications for Decision-Making: Discuss how your analysis informs decision-making. Clearly articulate the practical implications of your findings and their relevance to your initial objectives.

- Actionable Insights: Emphasize actionable insights that can guide future strategies, policies, or actions. Make recommendations based on your analysis, highlighting the steps needed to capitalize on strengths or address weaknesses.

- Continuous Improvement: Encourage a culture of continuous improvement by using your analysis as a feedback mechanism. Suggest ways to monitor and adapt strategies over time based on evolving circumstances.
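For the outliers point above, one common rule of thumb flags values that fall more than 1.5 interquartile ranges outside the middle half of the data. The sketch below applies that rule using Python's standard `statistics` module. The regions and conversion rates are hypothetical, and the 1.5 multiplier is a convention rather than a requirement.

```python
import statistics


def iqr_outliers(observations, k=1.5):
    """Return the observations lying more than k * IQR outside the quartiles."""
    values = sorted(observations.values())
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return {name: v for name, v in observations.items() if v < low or v > high}


# Hypothetical conversion rates (%) for the same campaign in eight regions.
conversion_pct = {
    "Region A": 2.1,
    "Region B": 2.4,
    "Region C": 2.2,
    "Region D": 2.6,
    "Region E": 2.3,
    "Region F": 2.5,
    "Region G": 6.8,
    "Region H": 2.2,
}

outliers = iqr_outliers(conversion_pct)
print(outliers)
```

A flagged value is a prompt for investigation, not an automatic exclusion: it may reflect a data error, or it may point to a real factor worth explaining in the analysis.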

Comparative Analysis Applications

Comparative analysis is a versatile methodology that finds application in various fields and scenarios. Let's explore some of the most common and impactful applications.

Business Decision-Making

Comparative analysis is widely employed in business to inform strategic decisions and drive success. Key applications include:

Market Research and Competitive Analysis

- Objective: To assess market opportunities and evaluate competitors.

- Methods: Analyzing market trends, customer preferences, competitor strengths and weaknesses, and market share.

- Outcome: Informed product development, pricing strategies, and market entry decisions.

Product Comparison and Benchmarking

- Objective: To compare the performance and features of products or services.

- Methods: Evaluating product specifications, customer reviews, and pricing.

- Outcome: Identifying strengths and weaknesses, improving product quality, and setting competitive pricing.

Financial Analysis

- Objective: To evaluate financial performance and make investment decisions.

- Methods: Comparing financial statements, ratios, and performance indicators of companies.

- Outcome: Informed investment choices, risk assessment, and portfolio management.

Healthcare and Medical Research

In the healthcare and medical research fields, comparative analysis is instrumental in understanding diseases, treatment options, and healthcare systems.

Clinical Trials and Drug Development

- Objective: To compare the effectiveness of different treatments or drugs.

- Methods: Analyzing clinical trial data, patient outcomes, and side effects.

- Outcome: Informed decisions about drug approvals, treatment protocols, and patient care.

Health Outcomes Research

- Objective: To assess the impact of healthcare interventions.

- Methods: Comparing patient health outcomes before and after treatment or between different treatment approaches.

- Outcome: Improved healthcare guidelines, cost-effectiveness analysis, and patient care plans.

Healthcare Systems Evaluation

- Objective: To assess the performance of healthcare systems.

- Methods: Comparing healthcare delivery models, patient satisfaction, and healthcare costs.

- Outcome: Informed healthcare policy decisions, resource allocation, and system improvements.

Social Sciences and Policy Analysis

Comparative analysis is a fundamental tool in social sciences and policy analysis, aiding in understanding complex societal issues.

Educational Research

- Objective: To compare educational systems and practices.

- Methods: Analyzing student performance, curriculum effectiveness, and teaching methods.

- Outcome: Informed educational policies, curriculum development, and school improvement strategies.

Political Science

- Objective: To study political systems, elections, and governance.

- Methods: Comparing election outcomes, policy impacts, and government structures.

- Outcome: Insights into political behavior, policy effectiveness, and governance reforms.

Social Welfare and Poverty Analysis

- Objective: To evaluate the impact of social programs and policies.

- Methods: Comparing the well-being of individuals or communities with and without access to social assistance.

- Outcome: Informed policymaking, poverty reduction strategies, and social program improvements.

Environmental Science and Sustainability

Comparative analysis plays a pivotal role in understanding environmental issues and promoting sustainability.

Environmental Impact Assessment

- Objective: To assess the environmental consequences of projects or policies.

- Methods: Comparing ecological data, resource use, and pollution levels.

- Outcome: Informed environmental mitigation strategies, sustainable development plans, and regulatory decisions.

Climate Change Analysis

- Objective: To study climate patterns and their impacts.

- Methods: Comparing historical climate data, temperature trends, and greenhouse gas emissions.

- Outcome: Insights into climate change causes, adaptation strategies, and policy recommendations.

Ecosystem Health Assessment

- Objective: To evaluate the health and resilience of ecosystems.

- Methods: Comparing biodiversity, habitat conditions, and ecosystem services.

- Outcome: Conservation efforts, restoration plans, and ecological sustainability measures.

Technology and Innovation

Comparative analysis is crucial in the fast-paced world of technology and innovation.

Product Development and Innovation

- Objective: To assess the competitiveness and innovation potential of products or technologies.

- Methods: Comparing research and development investments, technology features, and market demand.

- Outcome: Informed innovation strategies, product roadmaps, and patent decisions.

User Experience and Usability Testing

- Objective: To evaluate the user-friendliness of software applications or digital products.

- Methods: Comparing user feedback, usability metrics, and user interface designs.

- Outcome: Improved user experiences, interface redesigns, and product enhancements.

Technology Adoption and Market Entry

- Objective: To analyze market readiness and risks for new technologies.

- Methods: Comparing market conditions, regulatory landscapes, and potential barriers.

- Outcome: Informed market entry strategies, risk assessments, and investment decisions.

These diverse applications of comparative analysis highlight its flexibility and importance in decision-making across various domains. Whether in business, healthcare, social sciences, environmental studies, or technology, comparative analysis empowers researchers and decision-makers to make informed choices and drive positive outcomes.

Comparative Analysis Best Practices