AlphaGeometry: An Olympiad-level AI system for geometry

Trieu Trinh and Thang Luong


Our AI system surpasses the state-of-the-art approach for geometry problems, advancing AI reasoning in mathematics

Reflecting the Olympic spirit of ancient Greece, the International Mathematical Olympiad is a modern-day arena for the world's brightest high-school mathematicians. The competition not only showcases young talent, but has emerged as a testing ground for advanced AI systems in math and reasoning.

In a paper published today in Nature, we introduce AlphaGeometry, an AI system that solves complex geometry problems at a level approaching a human Olympiad gold medalist, a breakthrough in AI performance. In a benchmarking test of 30 Olympiad geometry problems, AlphaGeometry solved 25 within the standard Olympiad time limit. For comparison, the previous state-of-the-art system solved 10 of these geometry problems, and the average human gold medalist solved 25.9 problems.

In our benchmarking set of 30 Olympiad geometry problems (IMO-AG-30), compiled from the Olympiads from 2000 to 2022, AlphaGeometry solved 25 problems under competition time limits. This is approaching the average score of human gold medalists on these same problems. The previous state-of-the-art approach, known as “Wu’s method”, solved 10.

AI systems often struggle with complex problems in geometry and mathematics due to a lack of reasoning skills and training data. AlphaGeometry combines the predictive power of a neural language model with a rule-bound deduction engine, and the two work in tandem to find solutions. And by developing a method to generate a vast pool of synthetic training data (100 million unique examples), we can train AlphaGeometry without any human demonstrations, sidestepping the data bottleneck.

With AlphaGeometry, we demonstrate AI’s growing ability to reason logically, and to discover and verify new knowledge. Solving Olympiad-level geometry problems is an important milestone in developing deep mathematical reasoning on the path towards more advanced and general AI systems. We are open-sourcing the AlphaGeometry code and model, and hope that together with other tools and approaches in synthetic data generation and training, it helps open up new possibilities across mathematics, science, and AI.

It makes perfect sense to me now that researchers in AI are trying their hands on the IMO geometry problems first because finding solutions for them works a little bit like chess in the sense that we have a rather small number of sensible moves at every step. But I still find it stunning that they could make it work. It's an impressive achievement.

Ngô Bảo Châu, Fields Medalist and IMO gold medalist

AlphaGeometry adopts a neuro-symbolic approach

AlphaGeometry is a neuro-symbolic system made up of a neural language model and a symbolic deduction engine, which work together to find proofs for complex geometry theorems. Akin to the idea of “thinking, fast and slow”, one system provides fast, “intuitive” ideas, and the other more deliberate, rational decision-making.

Because language models excel at identifying general patterns and relationships in data, they can quickly predict potentially useful constructs, but they often lack the ability to reason rigorously or explain their decisions. Symbolic deduction engines, on the other hand, are based on formal logic and use clear rules to arrive at conclusions. They are rational and explainable, but they can be “slow” and inflexible, especially when dealing with large, complex problems on their own.

AlphaGeometry’s language model guides its symbolic deduction engine towards likely solutions to geometry problems. Olympiad geometry problems are based on diagrams that need new geometric constructs to be added before they can be solved, such as points, lines or circles. AlphaGeometry’s language model predicts which new constructs would be most useful to add, from an infinite number of possibilities. These clues help fill in the gaps and allow the symbolic engine to make further deductions about the diagram and close in on the solution.

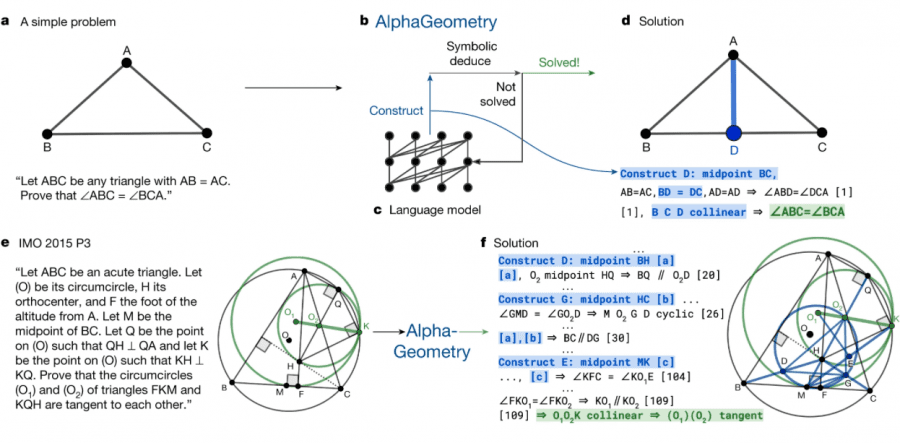

AlphaGeometry solving a simple problem: Given the problem diagram and its theorem premises (left), AlphaGeometry (middle) first uses its symbolic engine to deduce new statements about the diagram until the solution is found or new statements are exhausted. If no solution is found, AlphaGeometry’s language model adds one potentially useful construct (blue), opening new paths of deduction for the symbolic engine. This loop continues until a solution is found (right). In this example, just one construct is required.
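The loop in this caption can be illustrated with a small toy. This is a hypothetical sketch, not AlphaGeometry's code: the string-valued facts, the two hand-written rules and `toy_proposer` (a stand-in for the language model that simply returns candidates from a fixed list) are all assumptions. It shows only the control flow: deduce until nothing new appears, check for the goal, and otherwise add one construct and try again.

```python
from typing import Callable, FrozenSet, List, Optional, Set, Tuple

# A toy rule: if every premise fact is known, the conclusion fact follows.
Rule = Tuple[FrozenSet[str], str]
# Hypothetical stand-in for the language model: given known facts and the goal, suggest one construct.
Proposer = Callable[[Set[str], str], Optional[str]]


def deduce_closure(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Forward-chain over the rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def solve(premises: Set[str], goal: str, rules: List[Rule],
          propose: Proposer, max_constructs: int = 3) -> Optional[List[str]]:
    """Alternate symbolic deduction with proposed constructs.

    Returns the constructs that were added, or None if the goal was not reached.
    """
    facts = set(premises)
    added: List[str] = []
    while True:
        facts = deduce_closure(facts, rules)
        if goal in facts:
            return added
        if len(added) >= max_constructs:  # budget exhausted
            return None
        construct = propose(facts, goal)
        if construct is None:  # the proposer has nothing left to suggest
            return None
        added.append(construct)
        facts.add(construct)


def toy_proposer(facts: Set[str], goal: str) -> Optional[str]:
    """Hypothetical proposer: return the first candidate construct not yet present."""
    for candidate in ["M is the midpoint of BC"]:
        if candidate not in facts:
            return candidate
    return None


# Isosceles triangle ABC with AB = AC; goal: the base angles are equal.
RULES: List[Rule] = [
    (frozenset({"AB = AC", "M is the midpoint of BC"}), "triangles ABM and ACM are congruent"),
    (frozenset({"triangles ABM and ACM are congruent"}), "angle ABC = angle ACB"),
]

result = solve({"AB = AC"}, "angle ABC = angle ACB", RULES, toy_proposer)
print(result)  # ['M is the midpoint of BC']
```

As in the caption, deduction alone stalls on the premises, one added construct (the midpoint M) unlocks the rest, and the loop stops once the goal is derived. A real run would be bounded by time rather than by a construct count.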

AlphaGeometry solving an Olympiad problem: Problem 3 of the 2015 International Mathematical Olympiad (left) and a condensed version of AlphaGeometry’s solution (right). The blue elements are added constructs. AlphaGeometry’s solution has 109 logical steps.


Generating 100 million synthetic data examples

Geometry relies on understanding of space, distance, shape, and relative positions, and is fundamental to art, architecture, engineering and many other fields. Humans can learn geometry using a pen and paper, examining diagrams and using existing knowledge to uncover new, more sophisticated geometric properties and relationships. Our synthetic data generation approach emulates this knowledge-building process at scale, allowing us to train AlphaGeometry from scratch, without any human demonstrations.

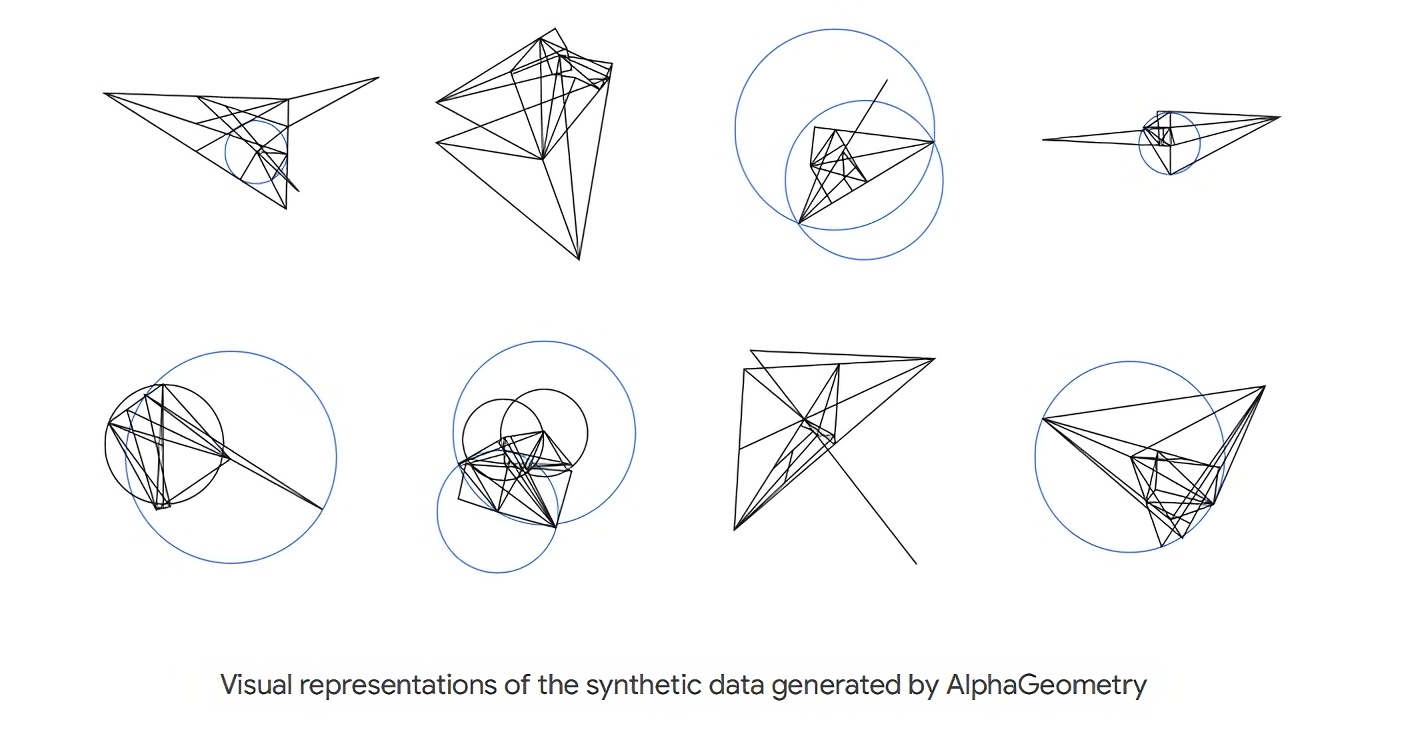

Using highly parallelized computing, the system started by generating one billion random diagrams of geometric objects and exhaustively derived all the relationships between the points and lines in each diagram. AlphaGeometry found all the proofs contained in each diagram, then worked backwards to find out what additional constructs, if any, were needed to arrive at those proofs. We call this process “symbolic deduction and traceback”.

Visual representations of the synthetic data generated by AlphaGeometry
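The description of “symbolic deduction and traceback” above is high level, so the toy below is only one way to read it, under stated assumptions: facts are strings, the deduction engine is assumed to record which premises each derived fact came from (the hypothetical `parents` map), and a premise counts as an auxiliary construct when it mentions a point that does not appear in the conclusion. Traceback keeps only the premises a conclusion actually needs, so an unused fact such as `D lies on AB` drops out.

```python
from typing import Dict, List, Set, Tuple


def traceback(conclusion: str, parents: Dict[str, List[str]]) -> Set[str]:
    """Walk the deduction record backwards and collect the root premises used."""
    roots: Set[str] = set()
    stack = [conclusion]
    seen: Set[str] = set()
    while stack:
        fact = stack.pop()
        if fact in seen:
            continue
        seen.add(fact)
        if fact in parents:
            stack.extend(parents[fact])  # derived fact: follow its premises
        else:
            roots.add(fact)  # never derived, so it was part of the random diagram
    return roots


def split_auxiliary(roots: Set[str], points_of: Dict[str, Set[str]],
                    conclusion_points: Set[str]) -> Tuple[Set[str], Set[str]]:
    """Treat premises that mention points absent from the conclusion as auxiliary constructs."""
    core: Set[str] = set()
    auxiliary: Set[str] = set()
    for premise in roots:
        if points_of[premise] <= conclusion_points:
            core.add(premise)
        else:
            auxiliary.add(premise)
    return core, auxiliary


# Hypothetical deduction record for one toy random diagram: derived fact -> premises it came from.
parents = {
    "triangles ABM and ACM are congruent": ["AB = AC", "M is the midpoint of BC"],
    "angle ABC = angle ACB": ["triangles ABM and ACM are congruent"],
}
points_of = {
    "AB = AC": {"A", "B", "C"},
    "M is the midpoint of BC": {"M", "B", "C"},
    "D lies on AB": {"A", "B", "D"},  # present in the diagram but never used
}

roots = traceback("angle ABC = angle ACB", parents)
core, auxiliary = split_auxiliary(roots, points_of, {"A", "B", "C"})
print(sorted(core), sorted(auxiliary))  # ['AB = AC'] ['M is the midpoint of BC']
```

The result is one synthetic training example: the problem is `AB = AC` implies `angle ABC = angle ACB`, and the construct a model would learn to suggest is the midpoint M.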

That huge data pool was filtered to exclude similar examples, resulting in a final training dataset of 100 million unique examples of varying difficulty, of which nine million featured added constructs. With so many examples of how these constructs led to proofs, AlphaGeometry’s language model is able to make good suggestions for new constructs when presented with Olympiad geometry problems.
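The filtering step can be pictured as deduplication over a canonical form of each example. The text does not say how “similar” examples were detected, so this sketch makes the simplest assumption: two examples are duplicates when they share the same premises, conclusion and auxiliary constructs, ignoring the order in which premises are listed. The `Example` type and `dedupe` function are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence, Tuple


@dataclass(frozen=True)
class Example:
    premises: FrozenSet[str]   # order-insensitive, so reordered copies collapse
    conclusion: str
    auxiliary: FrozenSet[str]  # constructs the language model should learn to propose


def dedupe(raw: Sequence[Tuple[List[str], str, List[str]]]) -> List[Example]:
    """Keep the first occurrence of each canonical example, preserving input order."""
    seen = set()
    unique: List[Example] = []
    for premises, conclusion, auxiliary in raw:
        example = Example(frozenset(premises), conclusion, frozenset(auxiliary))
        if example not in seen:
            seen.add(example)
            unique.append(example)
    return unique


raw = [
    (["AB = AC", "D lies on AB"], "angle ABC = angle ACB", ["M is the midpoint of BC"]),
    (["D lies on AB", "AB = AC"], "angle ABC = angle ACB", ["M is the midpoint of BC"]),  # reordered copy
    (["AB = AC"], "angle ABC = angle ACB", []),
]
unique = dedupe(raw)
with_constructs = sum(1 for ex in unique if ex.auxiliary)
print(len(unique), with_constructs)  # 2 1
```

Applied to the full pool, the same count gives the share of examples with added constructs: nine million out of 100 million, or 9%.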

Pioneering mathematical reasoning with AI

The solution to every Olympiad problem provided by AlphaGeometry was checked and verified by computer. We also compared its results with previous AI methods, and with human performance at the Olympiad. In addition, Evan Chen, a math coach and former Olympiad gold-medalist, evaluated a selection of AlphaGeometry’s solutions for us. Chen said: “AlphaGeometry's output is impressive because it's both verifiable and clean. Past AI solutions to proof-based competition problems have sometimes been hit-or-miss (outputs are only correct sometimes and need human checks). AlphaGeometry doesn't have this weakness: its solutions have machine-verifiable structure. Yet despite this, its output is still human-readable. One could have imagined a computer program that solved geometry problems by brute-force coordinate systems: think pages and pages of tedious algebra calculation. AlphaGeometry is not that. It uses classical geometry rules with angles and similar triangles just as students do.”
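Chen's point about “machine-verifiable structure” can be illustrated with a tiny proof checker: every step names a rule and the earlier facts it uses, and the checker accepts the proof only if each step's premises are already established and the named rule really yields the claimed conclusion. This is a hypothetical sketch with two toy equality rules (`eq_trans`, `eq_symm`), not the verifier that was used to check AlphaGeometry's solutions.

```python
from typing import Callable, Dict, Iterable, Optional, Sequence, Tuple

# One proof step: (claimed conclusion, rule name, premises the step relies on).
Step = Tuple[str, str, Tuple[str, ...]]
RuleFn = Callable[[Sequence[str]], Optional[str]]


def _sides(fact: str) -> Optional[Tuple[str, str]]:
    """Split 'x = y' into ('x', 'y'); return None for anything else."""
    parts = fact.split(" = ")
    return (parts[0], parts[1]) if len(parts) == 2 else None


def eq_trans(premises: Sequence[str]) -> Optional[str]:
    """From 'x = y' and 'y = z', conclude 'x = z'."""
    if len(premises) != 2:
        return None
    first, second = _sides(premises[0]), _sides(premises[1])
    if first is None or second is None or first[1] != second[0]:
        return None
    return f"{first[0]} = {second[1]}"


def eq_symm(premises: Sequence[str]) -> Optional[str]:
    """From 'x = y', conclude 'y = x'."""
    if len(premises) != 1:
        return None
    sides = _sides(premises[0])
    return None if sides is None else f"{sides[1]} = {sides[0]}"


RULES: Dict[str, RuleFn] = {"eq_trans": eq_trans, "eq_symm": eq_symm}


def check_proof(hypotheses: Iterable[str], steps: Sequence[Step], goal: str,
                rules: Dict[str, RuleFn] = RULES) -> bool:
    """Accept only if every step uses known facts and a rule that yields its claim."""
    known = set(hypotheses)
    for conclusion, rule_name, used in steps:
        rule = rules.get(rule_name)
        if rule is None or any(p not in known for p in used):
            return False
        if rule(used) != conclusion:
            return False
        known.add(conclusion)
    return goal in known


hyps = ["angle ABC = angle AMN", "angle AMN = angle ACB"]
proof = [
    ("angle ABC = angle ACB", "eq_trans", ("angle ABC = angle AMN", "angle AMN = angle ACB")),
    ("angle ACB = angle ABC", "eq_symm", ("angle ABC = angle ACB",)),
]
print(check_proof(hyps, proof, "angle ACB = angle ABC"))  # True
bad = [("angle ABC = angle ACB", "eq_symm", ("angle ABC = angle AMN",))]
print(check_proof(hyps, bad, "angle ABC = angle ACB"))  # False
```

Because each step is checked mechanically against its cited premises, a single unjustified step is enough to reject the whole proof, which is what makes this kind of output trustworthy without a human re-deriving it.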


As each Olympiad features six problems, only two of which are typically focused on geometry, AlphaGeometry can only be applied to one-third of the problems at a given Olympiad. Nevertheless, its geometry capability alone makes it the first AI model in the world capable of passing the bronze medal threshold of the IMO in 2000 and 2015.

In geometry, our system approaches the standard of an IMO gold-medalist, but we have our eye on an even bigger prize: advancing reasoning for next-generation AI systems. Given the wider potential of training AI systems from scratch with large-scale synthetic data, this approach could shape how the AI systems of the future discover new knowledge, in math and beyond.

AlphaGeometry builds on Google DeepMind and Google Research’s work to pioneer mathematical reasoning with AI, from exploring the beauty of pure mathematics to solving mathematical and scientific problems with language models. And most recently, we introduced FunSearch, which made the first discoveries in open problems in mathematical sciences using large language models.

Our long-term goal remains to build AI systems that can generalize across mathematical fields, developing the sophisticated problem-solving and reasoning that general AI systems will depend on, all the while extending the frontiers of human knowledge.

A.I.’s Latest Challenge: the Math Olympics

Watch out, nerdy high schoolers, AlphaGeometry is coming for your mathematical lunch.




By Siobhan Roberts

Reported from Stanford, Calif.

Published Jan. 17, 2024; updated Jan. 22, 2024

For four years, the computer scientist Trieu Trinh has been consumed with something of a meta-math problem: how to build an A.I. model that solves geometry problems from the International Mathematical Olympiad, the annual competition for the world’s most mathematically attuned high-school students.

Last week Dr. Trinh successfully defended his doctoral dissertation on this topic at New York University; this week, he described the result of his labors in the journal Nature. Named AlphaGeometry, the system solves Olympiad geometry problems at nearly the level of a human gold medalist.

While developing the project, Dr. Trinh pitched it to two research scientists at Google, and they brought him on as a resident from 2021 to 2023. AlphaGeometry joins Google DeepMind’s fleet of A.I. systems, which have become known for tackling grand challenges. Perhaps most famously, AlphaZero, a deep-learning algorithm, conquered chess in 2017. Math is a harder problem, as the number of possible paths toward a solution is sometimes infinite; chess is always finite.

“I kept running into dead ends, going down the wrong path,” said Dr. Trinh, the lead author and driving force of the project.

The paper’s co-authors are Dr. Trinh’s doctoral adviser, He He, at New York University; Yuhuai Wu, known as Tony, a co-founder of xAI (formerly at Google) who in 2019 had independently started exploring a similar idea; Thang Luong, the principal investigator, and Quoc Le, both from Google DeepMind.

Dr. Trinh’s perseverance paid off. “We’re not making incremental improvement,” he said. “We’re making a big jump, a big breakthrough in terms of the result.”

“Just don’t overhype it,” he said.

The big jump

Dr. Trinh presented the AlphaGeometry system with a test set of 30 Olympiad geometry problems drawn from 2000 to 2022. The system solved 25; historically, over that same period, the average human gold medalist solved 25.9. Dr. Trinh also gave the problems to a system developed in the 1970s that was known to be the strongest geometry theorem prover; it solved 10.

Over the last few years, Google DeepMind has pursued a number of projects investigating the application of A.I. to mathematics. And more broadly in this research realm, Olympiad math problems have been adopted as a benchmark; OpenAI and Meta AI have achieved some results. For extra motivation, there’s the I.M.O. Grand Challenge, and a new challenge announced in November, the Artificial Intelligence Mathematical Olympiad Prize, with a $5 million pot going to the first A.I. that wins Olympiad gold.

The AlphaGeometry paper opens with the contention that proving Olympiad theorems “represents a notable milestone in human-level automated reasoning.” Michael Barany, a historian of mathematics and science at the University of Edinburgh, said he wondered whether that was a meaningful mathematical milestone. “What the I.M.O. is testing is very different from what creative mathematics looks like for the vast majority of mathematicians,” he said.

Terence Tao, a mathematician at the University of California, Los Angeles, and the youngest-ever Olympiad gold medalist, when he was 12, said he thought that AlphaGeometry was “nice work” and had achieved “surprisingly strong results.” Fine-tuning an A.I. system to solve Olympiad problems might not improve its deep-research skills, he said, but in this case the journey may prove more valuable than the destination.

As Dr. Trinh sees it, mathematical reasoning is just one type of reasoning, but it holds the advantage of being easily verified. “Math is the language of truth,” he said. “If you want to build an A.I., it’s important to build a truth-seeking, reliable A.I. that you can trust,” especially for “safety critical applications.”

Proof of concept

AlphaGeometry is a “neuro-symbolic” system. It pairs a neural net language model (good at artificial intuition, like ChatGPT but smaller) with a symbolic engine (good at artificial reasoning, like a logical calculator, of sorts).

And it is custom-made for geometry. “Euclidean geometry is a nice test bed for automatic reasoning, since it constitutes a self-contained domain with fixed rules,” said Heather Macbeth, a geometer at Fordham University and an expert in computer-verified reasoning. (As a teenager, Dr. Macbeth won two I.M.O. medals.) AlphaGeometry “seems to constitute good progress,” she said.

The system has two especially novel features. First, the neural net is trained only on algorithmically generated data — a whopping 100 million geometric proofs — using no human examples. The use of synthetic data made from scratch overcame an obstacle in automated theorem-proving: the dearth of human-proof training data translated into a machine-readable language. “To be honest, initially I had some doubts about how this would succeed,” Dr. He said.

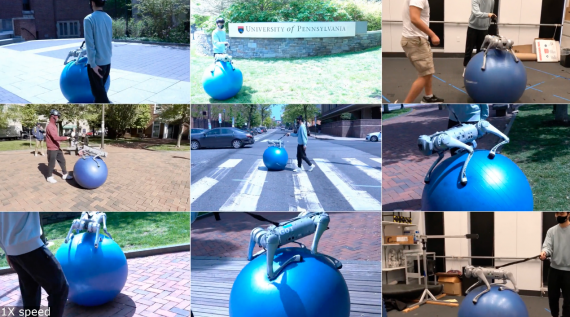

Second, once AlphaGeometry was set loose on a problem, the symbolic engine started solving; if it got stuck, the neural net suggested ways to augment the proof argument. The loop continued until a solution materialized, or until time ran out (four and a half hours). In math lingo, this augmentation process is called “auxiliary construction.” Add a line, bisect an angle, draw a circle: this is how mathematicians, student or elite, tinker and try to gain purchase on a problem. In this system, the neural net learned to do auxiliary construction, and in a humanlike way. Dr. Trinh likened it to wrapping a rubber band around a stubborn jar lid to help the hand get a better grip.

“It’s a very interesting proof of concept,” said Christian Szegedy, a co-founder at xAI who was formerly at Google. But it “leaves a lot of questions open,” he said, and is not “easily generalizable to other domains and other areas of math.”

Dr. Trinh said he would attempt to generalize the system across mathematical fields and beyond. He said he wanted to step back and consider “the common underlying principle” of all types of reasoning.

Stanislas Dehaene, a cognitive neuroscientist at the Collège de France who has a research interest in foundational geometric knowledge, said he was impressed with AlphaGeometry’s performance. But he observed that “it does not ‘see’ anything about the problems that it solves”; rather, it only takes in logical and numerical encodings of pictures. (Drawings in the paper are for the benefit of the human reader.) “There is absolutely no spatial perception of the circles, lines and triangles that the system learns to manipulate,” Dr. Dehaene said. The researchers agreed that a visual component might be valuable; Dr. Luong said it could be added, perhaps within the year, using Google’s Gemini, a “multimodal” system that ingests both text and images.

Soulful solutions

In early December, Dr. Luong visited his old high school in Ho Chi Minh City, Vietnam, and showed AlphaGeometry to his former teacher and I.M.O. coach, Le Ba Khanh Trinh. Dr. Lê was the top gold medalist at the 1979 Olympiad and won a special prize for his elegant geometry solution. Dr. Lê parsed one of AlphaGeometry’s proofs and found it remarkable yet unsatisfying, Dr. Luong recalled: “He found it mechanical, and said it lacks the soul, the beauty of a solution that he seeks.”

Dr. Trinh had previously asked Evan Chen, a mathematics doctoral student at M.I.T. — and an I.M.O. coach and Olympiad gold medalist — to check some of AlphaGeometry’s work. It was correct, Mr. Chen said, and he added that he was intrigued by how the system had found the solutions.

“I would like to know how the machine is coming up with this,” he said. “But, I mean, for that matter, I would like to know how humans come up with solutions, too.”


DeepMind AI solves hard geometry problems from mathematics olympiad

AlphaGeometry scores almost as well as the best students on geometry questions from the International Mathematical Olympiad

By Alex Wilkins

17 January 2024

Geometrical problems involve proving facts about angles or lines in complicated shapes


An AI from Google DeepMind can solve some International Mathematical Olympiad (IMO) questions on geometry almost as well as the best human contestants.


“The results of AlphaGeometry are stunning and breathtaking,” says Gregor Dolinar, the IMO president. “It seems that AI will win the IMO gold medal much sooner than was thought even a few months ago.”

The IMO, aimed at secondary school students, is one of the most difficult maths competitions in the world. Answering questions correctly requires mathematical creativity that AI systems have long struggled with. GPT-4, for instance, which has shown remarkable reasoning ability in other domains, scores 0 per cent on IMO geometry questions, while even specialised AIs struggle to answer as well as average contestants.

This is partly down to the difficulty of the problems, but it is also because of a lack of training data. The competition has been run annually since 1959, and each edition consists of just six questions. Some of the most successful AI systems, however, require millions or billions of data points. Geometry problems, which make up one or two of the six questions and involve proving facts about angles or lines in complicated shapes, are particularly difficult to translate to a computer-friendly format.

Thang Luong at Google DeepMind and his colleagues have bypassed this problem by creating a tool that can generate hundreds of millions of machine-readable geometrical proofs. When they trained an AI called AlphaGeometry using this data and tested it on 30 IMO geometry questions, it answered 25 of them correctly, compared with an estimated score of 25.9 for an IMO gold medallist based on their scores in the contest.


“Our [current] AI systems are still struggling with the ability to do things like deep reasoning, where we need to plan ahead for many, many steps and also see the big picture, which is why mathematics is such an important benchmark and test set for us on our quest to artificial general intelligence,” Luong told a press conference.

AlphaGeometry consists of two parts, which Luong compares to different thinking systems in the brain: a fast, intuitive system and a slower, more analytical one. The first, intuitive part is a language model, similar to the technology behind ChatGPT, called GPT-f. It has been trained on the millions of generated proofs and suggests which theorems and arguments to try next for a problem. Once it suggests a next step, a slower but more careful “symbolic reasoning” engine uses logical and mathematical rules to fully construct the argument that GPT-f has suggested. The two systems then work in tandem, switching between one another until a problem has been solved.

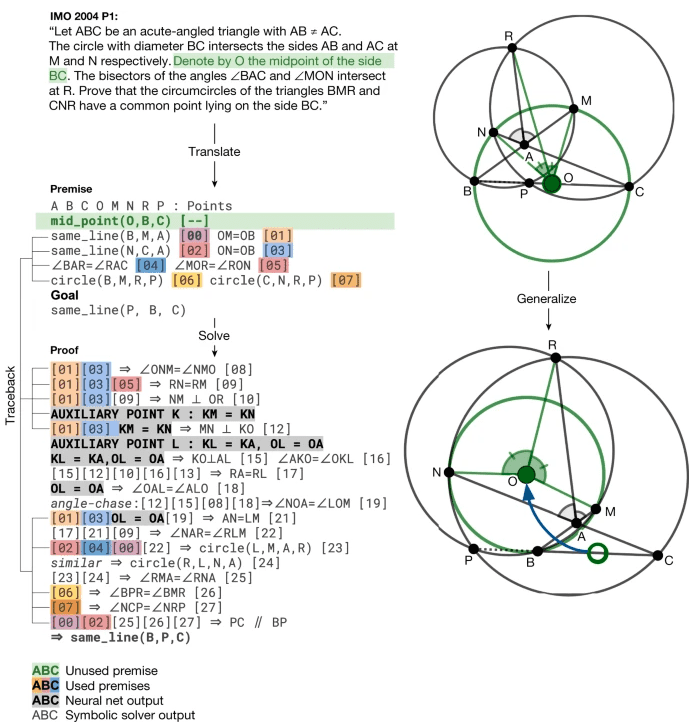

While this method is remarkably successful at solving IMO geometry problems, the answers it constructs tend to be longer and less “beautiful” than human proofs, says Luong. However, it can also spot things that humans miss. For example, it discovered a better and more general solution to a question from the 2004 IMO than was listed in the official answers.


Solving IMO geometry problems in this way is impressive, says Yang-Hui He at the London Institute for Mathematical Sciences, but the system is inherently limited in the mathematics it can use because IMO problems should be solvable using theorems taught below undergraduate level. Expanding the amount of mathematical knowledge AlphaGeometry has access to might improve the system or even help it make new mathematical discoveries, he says.

It would also be interesting to see how AlphaGeometry copes with not knowing what it needs to prove, as mathematical insight can often come from exploring theorems with no set proof, says He. “If you don’t know what your endpoint is, can you find within the set of all [mathematical] paths whether there is a theorem that is actually interesting and new?”

Last year, algorithmic trading company XTX Markets announced a $10 million prize fund for AI maths models, with a $5 million grand prize for the first publicly shared AI model that can win an IMO gold medal, as well as smaller progress prizes for key milestones.

“Solving an IMO geometry problem is one of the planned progress prizes supported by the $10 million AIMO challenge fund,” says Alex Gerko at XTX Markets. “It’s exciting to see progress towards this goal, even before we have announced all the details of this progress prize, which would include making the model and data openly available, as well as solving an actual geometry problem during a live IMO contest.”

DeepMind declined to say whether it plans to enter AlphaGeometry in a live IMO contest or whether it is expanding the system to solve other IMO problems not based on geometry. However, DeepMind has previously entered public competitions for protein folding prediction to test its AlphaFold system.

Journal reference:

Nature DOI: 10.1038/s41586-023-06747-5


Nature Podcast, 17 January 2024

This AI just figured out geometry — is this a step towards artificial reasoning?


In this episode:

0:55 The AI that deduces solutions to complex maths problems

Researchers at Google DeepMind have developed an AI that can solve International Mathematical Olympiad-level geometry problems, something previous AIs have struggled with. They provided the system with a huge number of random mathematical theorems and proofs, which it used to approximate general rules of geometry. The AI then applied these rules to solve the Olympiad problems and show its workings for humans to check. The researchers hope their system shows that it is possible for AIs to ‘learn’ basic principles from large amounts of data and use them to tackle complex logical challenges, which could prove useful in fields outside mathematics.

Research article: Trinh et al.

09:46 Research Highlights

A stiff and squishy ‘hydrospongel’ — part sponge, part hydrogel — that could find use in soft robotics, and how the spread of rice paddies in sub-Saharan Africa helps to drive up atmospheric methane levels.

Research Highlight: Stiff gel as squishable as a sponge takes its cue from cartilage

Research Highlight: A bounty of rice comes at a price: soaring methane emissions

12:26 The food-web effects of mass predator die-offs

Mass mortality events, sometimes called mass die-offs, can result in huge numbers of a single species perishing in a short period of time. But there’s not a huge amount known about the effects that events like these might be having on wider ecosystems. Now, a team of researchers have built a model ecosystem to observe the impact of mass die-offs on the delicate balance of populations within it.

Research article: Tye et al.

20:53 Briefing Chat

An update on efforts to remove the stuck screws on OSIRIS-REx’s sample container, the ancient, fossilized skin that was preserved in petroleum, and a radical suggestion to save the Caribbean’s coral reefs.

OSIRIS-REx Mission Blog: NASA’s OSIRIS-REx Team Clears Hurdle to Access Remaining Bennu Sample

Nature News: This is the oldest fossilized reptile skin ever found — it pre-dates the dinosaurs

Nature News: Can foreign coral save a dying reef? Radical idea sparks debate


Shamini Bundell

Welcome back to the Nature Podcast, this week: an AI that’s figured out geometry…

Benjamin Thompson

…and how mass predator die-offs might affect ecosystems. I’m Benjamin Thompson.

Shamini Bundell

And I’m Shamini Bundell.

<Music>

First up on the show, reporter Nick Petrić Howe has been learning about an AI that can logically deduce solutions to complex mathematical problems. Here’s Nick.

Nick Petrić Howe

You might think that computers are pretty good at maths. When I have a mathematical conundrum for instance I will often reach for a calculator or a trusty spreadsheet. But fundamentally a lot of maths is about logic — deducing what makes sense from the information you have. Meaning that a lot of mathematical problems are really just complicated puzzles. In fact, there is a competition known as the Mathematical Olympiad where high school students are given challenges, where they need to figure out solutions to such puzzles. They’ll be given a series of statements like “x + y = this” and also “5 to the power y equals that” etc and then they’ll have to use these to figure out the solution to a puzzle, like “what could x be?” Challenges like this are difficult though for computers and AIs, as Thang Luong, deep learning researcher and former Mathematical Olympiad competitor, explains.

Thang Luong

Let's say I give you two baskets, each with 10 balls: how many balls do I have in total? Machines can actually solve these pretty well. But a Mathematical Olympiad problem involves very deep reasoning, so the model has to think for many, many, many steps before it can arrive at a solution. That's actually what makes it so interesting.

Nick Petrić Howe

In fact, tackling these Mathematical Olympiad problems has been of interest to AI researchers for decades, and it’s been a tough nut to crack. The best AIs still fare worse than the average International Mathematical Olympiad competitor. The thing that makes these Olympiad challenges so, well… challenging is that there is essentially an infinite number of ways you could try to tackle a problem. Where do you even start? Arguably, you have to reason: logically draw conclusions from what is given to you. In other words, if this is true, then this should also be true. This is something that we normally input into our machines; they don’t normally work it out for themselves from scratch.

Thang Luong

So that would require, you know, a lot of thinking ahead, and sometimes creativity as well. So the machine needs to be creative. It needs to think for a long time.

Nick Petrić Howe

Now, the way that many AIs solve challenging problems is by scouring through a lot of data and ‘learning’ from it certain rules about how one thing applies to another. This is how large language models, like ChatGPT, are built, for example. But for would-be Mathematical Olympiad AIs, there’s a snag here.

Thang Luong

Large language models like ChatGPT can read the entire internet; you know, they understand all kinds of knowledge from Wikipedia, from Reddit. But for Mathematical Olympiad problems, the data to teach the machine is so limited. There isn’t much material on the internet; there are a few forums, but that’s not enough data to really teach the machine.

Nick Petrić Howe

But despite this lack of training data, Thang and a team of researchers are demonstrating an AI known as AlphaGeometry in Nature this week. And unlike previous attempts, their system can reach the top levels of human performance on geometry problems from the Maths Olympiad. But for the AI to solve these problems, the team had to solve one of their own: what to do about the lack of data? Well, they made it themselves.

Thang Luong

We leveraged a large amount of computation at Google to synthesise a large amount of training data for the machine to learn from scratch. So, in total, we were able to generate 100 million theorems and proofs so that the machine can learn all of these by itself. And then it can learn to generalise to new problems.

Nick Petrić Howe

This huge volume of random mathematical theorems and proofs (statements and the logical arguments that back them up) was given to AlphaGeometry, which used them to work out general rules of geometry. With that in place, it was ready to take on the Mathematical Olympiad. AlphaGeometry was fed the Olympiad problems, which it worked on using a two-part system: a neural network and a symbolic system, two different kinds of AI that each operate at a different pace. They worked together to solve the problems.

Thang Luong

So the neural network, you can think of it like system one, because a neural network can think very fast. It can be creative; it can suggest interesting lines and points to help unlock a solution. And then we have a symbolic system, which is system two: it’s reliable, but it’s slow.

The symbolic system was the one trying to use logic to solve the puzzle. It did the heavy lifting, applying its built-in rules of geometry step by step to the problem. And you might think that system would be enough, but actually you need the creative system one as well, because the slow symbolic system often gets stuck and needs a bit of creative juice to get its cogs turning. For example, if the symbolic system is working on a problem involving triangles and gets stuck, the creative system-one neural network can suggest, maybe, splitting the triangle in two and thinking about those two ‘new’ triangles instead. The problem would be the same, but, if you’ll pardon the phrase, the system would be able to think about it in a different way. The two systems go back and forth like this until the problem is solved.

Together they create a proof, essentially showing how the problem was solved, which humans can then check. And this is exactly what happened. The system was given a series of Maths Olympiad problems, figured out ways to solve them and wrote out its solutions. Thang and the team then sent the AI’s solutions to pupils of the Maths Olympiad and the US coach, who were able to verify them. Overall, Thang was pleased with how AlphaGeometry would theoretically have performed if it had been entered in an International Mathematical Olympiad.
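The back-and-forth between the two systems can be sketched as a loop. This is a minimal illustration, not DeepMind’s code. The facts, the two rules and the candidate list are hypothetical and hand-written for one textbook problem: in an isosceles triangle ABC with AB = AC, prove that the base angles are equal. A fixed, ordered candidate list stands in for the neural network, and adding the midpoint M of BC plays the role of ‘splitting the triangle in two’.

```python
from typing import Callable, FrozenSet, List, Optional, Set, Tuple

Fact = str
Rule = Tuple[FrozenSet[Fact], Fact]        # (premises, conclusion)
Construct = Tuple[str, FrozenSet[Fact]]    # (description, facts true by construction)
Proposer = Callable[[Set[Fact], Fact, List[str]], Optional[Construct]]


def deduce(facts: Set[Fact], rules: List[Rule]) -> Set[Fact]:
    """'System two': apply the rules repeatedly until no new fact appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def solve(premises: Set[Fact], goal: Fact, rules: List[Rule],
          propose: Proposer, max_constructs: int = 3) -> Optional[List[str]]:
    """Alternate deduction with proposed constructs; return the constructs used."""
    facts = deduce(premises, rules)
    used: List[str] = []
    while goal not in facts:
        if len(used) >= max_constructs:
            return None                       # give up: too many constructs tried
        # Deduction is stuck, so ask 'system one' for a new object to add.
        construct = propose(facts, goal, used)
        if construct is None:
            return None                       # no more ideas to try
        name, new_facts = construct
        used.append(name)
        facts = deduce(facts | new_facts, rules)
    return used


def make_proposer(candidates: List[Construct]) -> Proposer:
    """Hypothetical stand-in for the language model: untried candidates in a fixed order."""
    def propose(facts: Set[Fact], goal: Fact, used: List[str]) -> Optional[Construct]:
        for name, new_facts in candidates:
            if name not in used:
                return name, new_facts
        return None
    return propose


# Hypothetical toy problem: AB = AC, prove angle ABC = angle ACB.
PREMISES = {"AB=AC"}
GOAL = "angle ABC = angle ACB"
RULES: List[Rule] = [
    (frozenset({"AB=AC", "BM=CM", "AM=AM"}), "triangle ABM congruent to ACM"),  # SSS
    (frozenset({"triangle ABM congruent to ACM"}), GOAL),  # corresponding angles are equal
]
CANDIDATES: List[Construct] = [
    ("circle through A, B, C", frozenset({"circumcircle ABC"})),  # an unhelpful idea
    ("M = midpoint of BC", frozenset({"BM=CM", "AM=AM"})),       # AM becomes a shared side
]

if __name__ == "__main__":
    print(solve(PREMISES, GOAL, RULES, make_proposer(CANDIDATES)))
    # prints ['circle through A, B, C', 'M = midpoint of BC']
```

The loop first tries the circle. That produces no new deductions, so it moves on to the midpoint, after which the congruence rule and then the angle rule fire. A fixed list is the simplest possible stand-in. In the real system, the suggestions come from a trained model.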

We were very happy that for the years 2000 and 2015, AlphaGeometry was able to solve all the geometry problems in those years. So, you can think of it like, for the first time, an AI achieved the bronze medal.

The AI would only win bronze because it focuses solely on geometry, whereas the actual competition involves other kinds of maths as well. But if you look at geometry problems alone, AlphaGeometry was almost at gold-medallist level, solving 25 out of 30 problems, where gold medallists solve 25.9 on average. However, in years other than 2000 and 2015 the AI wasn’t able to solve all the geometry problems, which could perhaps be down to certain geometry theorems not being part of the synthetic data that the AI was trained on. Another issue was that the solutions AlphaGeometry came up with were very long, which may be down to much the same reason. Effectively, the AI only knew the very basic rules of geometry, whereas humans are able to use other theorems, and even other mathematical notations, to shorten their explanations. So in the future Thang would like to make the solutions a bit more… elegant.

The solution from AlphaGeometry for the year 2015 is a long list of steps: it’s 109 steps. This is something we haven’t optimised for, actually. So far we have only optimised to get a solution, but in the future we might want to optimise for some beauty, you know, because with 109 steps, it will be a lot of work for people to actually read the proof.

Beautiful or not though, Thang believes that AlphaGeometry shows that it’s possible to build AIs which can ‘learn’ basic principles from large amounts of data and apply them to other situations. For some researchers, this could be a step towards building AIs that could ‘reason’ and potentially come up with solutions even in fields outside mathematics.

It really shows that we can actually build AI that learns from scratch. And then in the future, hopefully, AI can discover new knowledge in other domains.

That was Thang Luong, from Google DeepMind. He’s based in the US. For more on that story, check out the show notes for a link to the paper.

Coming up, how researchers built their own ecosystem to find out what happens when a large number of predators die off in a short period. Right now though, it’s time for the Research Highlights, with Dan Fox.

A new material dubbed a ‘hydrospongel’ could find uses in soft robotics by mimicking living tissue. Tissue such as cartilage can withstand heavy loads and hold large quantities of water, which is helpful in biological systems. But this combination is a challenge for synthetic materials. Hydrogels, for instance, are good at holding lots of water, but they tend to deform irreversibly when squashed. Sponges are resilient to heavy loads, springing back after being deformed, but their lack of stiffness means they are too soft for many uses, and they drain their liquid contents too readily. Now, researchers have designed a new material made from a network of Kevlar polymer fibres enriched with nitrogen atoms. The interwoven polymers, similar to those used in bulletproof vests, made the gel-like substance stiff, while creating nanoscale pores that trapped water and allowed it to diffuse. Under high compression, the spaghetti-like network released water and got squashed before springing back. The material held more than 5,000 times its own weight in water and was 22 times stiffer than comparable water-rich gels. The authors say it could be used in drug delivery, or to build scaffolds for tissue engineering. You can soak up the rest of that research in Nature Materials.

The level of methane in the atmosphere is rising, and scientists have attributed much of that rise to emissions from tropical Africa. Now new research puts a large share of the continent’s increased emissions down to rice production. Like cattle and natural wetlands, flooded rice paddies can host micro-organisms that emit methane. Between 2008 and 2018, rice production in sub-Saharan Africa doubled, so a team of researchers recalculated emissions to take this into account. The new figures suggest that rice growing in Africa accounted for nearly a third of the increase in the continent’s methane emissions between 2006 and 2017, and for 7% of the global increase in the same period. With aims for rice production in the region to double again between 2019 and 2030, multinational goals of reducing methane emissions by 30% will require deep reductions elsewhere to compensate. You can read that research in full in Nature Climate Change.

<music>

Ecosystems are all about balance. For example, take a super simplified, three-stage food web: plants, herbivores, carnivores. The nutrients in the system feed the plants, which feed the herbivores, which feed the carnivores. And so the quantity of nutrients impacts the rest of the system from the bottom up. At the same time, the carnivores eat the herbivores and keep their numbers in check, which reduces the number of plants eaten and allows more plants to grow, so the system is also regulated from the top down. Top-down and bottom-up effects like these exist in a constant, shifting, complex balance, but what happens when that balance is interrupted? Mass mortality events, sometimes called mass die-offs, can result in huge numbers of a single species dying in a short period of time. Events like these have been seen in a variety of different animals: fish, birds, antelope, the list goes on. And the numbers of animals that perish can be staggering; in some cases estimates range into the millions or even billions. But there’s not a huge amount known about the effects that events like these might be having on wider ecosystems. Well, that is something that Adam Siepielski from the University of Arkansas and his colleagues have been studying. I gave Adam a call.

Adam Siepielski

I mean, what we wanted to do was, in part, test some of the theory that we had been developing, and we really wanted to know: could you take these really wonderful classic ideas in community ecology, top-down effects, where predators have an important role in affecting primary producers like plants or algae in ecosystems, and bottom-up effects, where nutrients are the important factor regulating primary producers and ecosystems, and could we combine them? And then use those combinations to make a prediction about how a system would actually respond when predators die, decompose, release their nutrients and generate that bottom-up effect.

So obviously, if you want to test this hypothesis and work out what's happening during a mass die-off, you have to study an ecosystem, right? But you can't go into the wild and cause a mass die-off, of course. So what you've actually done is build your own freshwater ecosystems, a series of artificial ecosystems, to test what a predator mass die-off might do. How do you go about making these ecosystems, and what does one look like?

So, we did take this classic approach in ecology, and generally these little, they're called mesocosms, they're sort of like a smaller version of a complex lake ecosystem. What do they look like? If you ever go out to, like, a farm or something and see a big barrel of water that cattle are drinking from, that's what those things are. There's nothing special about them: we fill them up with water and then we just sort of start seeding them. A lot of the basic things of the food web naturally kind of come in, like some of the bacteria will get in there. But, you know, we put some leaf litter in there to start to decompose, and that releases nutrients that allow algae, the phytoplankton at the base of this food web, to start to grow in the system. We went out to a local lake and we collected zooplankton, the things that eat all the phytoplankton, so the algae and the diatoms, that sort of thing. And then we eventually stocked them with fish: bluegill, which are a really common game fish, and really one of the most important predator species of zooplankton in the part of the lake that we were looking at. So basically, we just established, like, three different trophic levels that naturally occur in lakes.

And so you had these artificial ecosystems then, and you treated them in different ways. Some of them you just left as they were, some of them, you removed the fish manually. And some of them you used electricity, in part, to cause a mass die-off of the fish. What did you see? What was the difference between the ecosystems? How do they compare?

Yeah, so after we, you know, induced this mass mortality event, we compared a number of different features of the remaining food-web levels that were present. And if you remove the fish, one of the things that happens is that the zooplankton become more abundant, because the predators that ate them are now gone. And when they become more abundant, the phytoplankton, the things that the zooplankton eat, start to go down. But one of the unique facets, though, was that in a mass mortality event, when the fish are dying and then decomposing, the zooplankton aren't able to simply consume all of the phytoplankton and cause the system to sort of collapse. What happens is that because those fish die, decompose and release those nutrients, that causes a kind of fertilisation effect, which allows the primary producers, the phytoplankton, to stay abundant, even though there's this increase in the zooplankton herbivores in the system. What that actually looks like is that it becomes very similar to the control system, where everything is being, like, nicely regulated. And so it looks a little bit more like an intact ecosystem.

And how does this fit then with ideas of what might happen?

I mean, so we had developed some mathematical theory where we had tried to make predictions for what would happen, and those predictions actually generated a few possibilities. One thing that we had surmised could happen, and that does sometimes happen during a mortality event, is that the death of those predators could have almost a toxifying-like effect: they could decompose and cloud the water so much that the primary producers become a little bit light-limited and can't even begin to proliferate. But what we found was that that doesn't really seem to happen. Those predators do go to the bottom, they decompose, they release those nutrients, like nitrogen and phosphorus, and that causes those producers to increase. So it matched very well. Like, I remember when we first got the data, we were like, holy cow, that looks exactly like what we thought it was going to look like. And that was really reassuring for, you know, community ecologists, to be able to say that we've got this beautiful, messy, complex ecosystem that has all these things going on, and we can simplify it into this little body of a couple of differential equations and make these projections for how these things should look. But then when you actually get the empirical data from, you know, experiments in these mesocosms, it was amazing. I was like, this is so cool. It's like we were able to, you know, kind of predict these things.
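The ‘couple of differential equations’ idea can be illustrated with a toy model that has pools of nutrients, phytoplankton, zooplankton and fish. This is not the model from Siepielski’s paper. The five compartments, the mass-action terms, every rate constant and the starting values below are assumptions chosen only to show the mechanism. Fish that die in a tank become a detritus pool that decays back into nutrients, while fish that are removed take their nutrients with them. The toy system is closed, so in the die-off run the total amount of material stays constant.

```python
from dataclasses import astuple, dataclass, replace
from typing import Dict, Tuple


@dataclass
class State:
    N: float  # dissolved nutrients
    P: float  # phytoplankton (primary producers)
    Z: float  # zooplankton (herbivores)
    F: float  # fish (predators)
    D: float  # dead fish still in the water (detritus)

    def total(self) -> float:
        return sum(astuple(self))


# Illustrative rate constants (assumed, not fitted to any data).
RATES: Dict[str, float] = dict(u=0.5, g=0.4, e=0.3, f=0.2, e2=0.2,
                               mP=0.05, mZ=0.05, mF=0.02, r=0.1)


def derivatives(s: State, k: Dict[str, float] = RATES) -> Tuple[float, ...]:
    uptake = k["u"] * s.N * s.P      # bottom-up: nutrients feed producers
    grazing = k["g"] * s.P * s.Z     # herbivores eat producers
    predation = k["f"] * s.Z * s.F   # top-down: fish eat herbivores
    decay = k["r"] * s.D             # carcasses release nutrients
    dN = (decay + k["mP"] * s.P + k["mZ"] * s.Z + k["mF"] * s.F
          + (1 - k["e"]) * grazing + (1 - k["e2"]) * predation - uptake)
    dP = uptake - grazing - k["mP"] * s.P
    dZ = k["e"] * grazing - predation - k["mZ"] * s.Z
    dF = k["e2"] * predation - k["mF"] * s.F
    dD = -decay
    return dN, dP, dZ, dF, dD  # sums to zero: material only moves between pools


def simulate(event: str, t_event: float = 50.0, t_end: float = 150.0,
             dt: float = 0.01) -> State:
    """Forward-Euler integration; event is 'none', 'die-off' or 'removal'."""
    s = State(N=2.0, P=1.0, Z=0.5, F=0.3, D=0.0)
    event_step = round(t_event / dt)
    for step in range(round(t_end / dt)):
        if step == event_step and event == "die-off":
            s = replace(s, D=s.D + s.F, F=0.0)  # fish die and stay in the tank
        elif step == event_step and event == "removal":
            s = replace(s, F=0.0)               # fish taken out, nutrients leave too
        s = State(*(x + dt * dx for x, dx in zip(astuple(s), derivatives(s))))
    return s


if __name__ == "__main__":
    for event in ("none", "die-off", "removal"):
        s = simulate(event)
        print(f"{event:8s} P={s.P:.3f} Z={s.Z:.3f} total={s.total():.3f}")
```

Comparing the phytoplankton values across the three runs mirrors the comparison the mesocosm experiment made empirically. With other, equally arbitrary parameters the toy can behave differently, so its numbers carry no biological meaning on their own.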

I mean, what does this result mean, Adam? Because naively one can make the argument that a mass die-off event of predators isn't that much of an impact to an ecosystem, because as you've shown, the ecosystem will continue. But presumably, it's much more complicated than that.

Oh, yeah. I mean, the experiment that we were able to do wasn't in a natural lake, or anything like that, which is, you know, inherently much more complicated. But I think this is important, because it should give us some reassurance about our understanding of how nature is working. Because, again, like we're able to take decades old work and combine it to make reasonably good predictions about how an ecosystem might respond to an event like a mass mortality event or some sort of ecological catastrophe.

And in previous work, you've suggested that mass die-offs are happening more frequently. How does this work fit into that, do you think? In terms of our knowledge of what's causing these things or how they can be prevented, for example.

These predator die-offs are happening because of extreme climatic events, disease outbreaks, human disturbances, that sort of thing. So I don't know that the paper can really tell us much about preventing those, as much as it can tell us about the sort of signal of one of these events having happened. And I think it also informs us that, you know, maybe reporting these events is an important thing to do. They are still, I think, relatively rare events. So even though, you know, the data does suggest that they may be increasing in frequency, or we may be observing them more often, I think that we can only continue to understand them better, what their causes are and what their effects are in ecological systems, if we can continue to get data and monitor these sorts of events as they actually happen in nature. And I think citizens who are going out to lakes and observe, you know, a large number of animals dying, reporting that to their local authority would be really, really valuable. Just to document that, hey, you know, this did happen.

That was Adam Siepielski from the University of Arkansas in the US. To read his paper about the research, which is out this week in Nature, head over to the show notes for a link.

And finally on the show, it's time for the Briefing Chat, where we discuss a couple of articles that have been highlighted in the Nature Briefing. So Ben, tell us what you've been reading this week.

Well, actually, I've got a quick update on a story we covered last week on the podcast, and listeners might remember me and Noah and Flora talking about NASA's problems opening OSIRIS-REx’s containment canister, right. And they were trying to get to the samples of the asteroid Bennu that were inside. Now there were two screws that they couldn't figure out how to open. And we came up with some, frankly, terrible ideas for how they could have gone about that. And Noah asked Flora, like, what's the timeframe for this? And she said, I don't know quite what's going to happen. And the answer is, it happened the day after the podcast came out–

–oh, great–

–and NASA have released these two screws and announced that they've got them undone.

Do you think it's a coincidence that you guys put forward some suggestions, and then the very next day, they actually managed to solve it?

I don't, Shamini, let’s be honest. But back in the real world, of course–

–okay, so NASA had to design some special tools to fit into the sealed box that the container is currently in. This is quite a small thing. And they've made essentially a very fancy screwdriver, which is not really like any screwdriver that I've seen before.

Yeah, what does it look like, the fancy screwdriver?

It's got a lot of right angles, Shamini, I'll say that. But check out the show notes for a link where listeners can have a look at it. And I will say that all this info has come from NASA's OSIRIS-REx blog. But we're not quite there yet. Okay, they managed to loosen these two screws, but there are a few more stages yet until they can actually get into the canister proper and look at the rocks and the dust inside. And I think what's going to happen is they're gonna open it up, then take a load of super high-res photos to see what they've got, and then start kind of weighing it out and parcelling it out. Some of the previously collected material has already gone out to researchers, and presumably this will too, eventually.

I presume it's gonna take more than a week, this time for us to have another update on this story while they all analyse the samples.

I'm hoping that tomorrow–

–tomorrow, yes!–

–yeah, and then next week, we can talk about that too. But it is kind of exciting, and hats off to them for doing it. And it's hoped, of course, that these samples will tell us a huge amount about the origins of the solar system and how, you know, the things we see around us came to be, because some of these samples may date back four and a half billion years, estimates suggest. But I've got another story today as well, and I'm gonna keep going, which is also pretty old. Not quite that old, I have to say, only 289 million years old.

Is that all? Yeah, pretty recent, yeah.

I mean, a snip really, let's be honest. And it's a story that I read about in Nature, and it's about a few shreds of fossilised skin that had been discovered and described in a paper in Current Biology.

Ooh, so whose skin is it from 200 and whatever it was million years ago?

Well, that's a great question. And it seems to have come from a lizard-like animal known as Captorhinus aguti. These skin fragments are only a few millimetres across, but they are the oldest skin ever found from a group of animals collectively known as amniotes. Okay, this includes reptiles and birds and mammals like me and you, basically all terrestrial vertebrates except amphibians. And so yeah, quite a big find. And this one is the oldest by quite some distance.

And we've definitely talked about dinosaur skin before, which is always exciting thinking about sort of what dinosaurs looked like and stuff, but this is even older than that?

Oh, millions of years older than that, before the rise of dinosaurs at all. And what's interesting is, in many cases, I think what researchers look at is the imprint of skin on rock, right? So we have the kind of pressing, but this is something different. This is actual skin, 3D fossilised skin, and it's very rare to find soft tissues, okay, because usually they decompose, which is why we only ever find bones. But this is kind of the culmination of a bunch of different factors that led to this discovery. So this skin was found in a cave in Oklahoma, and this cave has got lots of conditions suitable for preserving soft tissues, right. And one of them in particular is that during the fossilisation process, there was oil seeping in from the walls of this cave, right? And there's a brilliant line in the article over at nature.com, and it's essentially that this skin was pickled in petroleum. It's almost jet black, but it's completely infused with hydrocarbons, and that's one of the reasons that it's in such good nick. And I will say it looks a bit like crocodile skin, right, it's got those bumps on it as well. But because it's so well preserved, the researchers could actually look at the layers within it, which is kind of amazing, right? You can see the dermis, and you can see the epidermis and these different kind of bits that are making it up.

And does this being so ancient tell us something about the evolution of these kinds of soft tissues that we don't usually get to see?

Interesting one. I don't know if it gives any definitive answers. But as I said, finding soft tissue is so rare. And the development of skin was kind of a big evolutionary step for animals moving from living in water to living on land, because of course the skin is an amazing barrier that keeps the outside out and the inside in. And so knowing more about when this happened is, I think, such an interesting one; it's such a key step in the evolution of amniotes. And hopefully there'll be more findings like this, so we can maybe get things even further back in the evolutionary timeline, because much of what we know about the Tree of Life comes from studying bones, because that's all that's ever preserved.

Yeah, it sounds like it was quite a unique situation with a very oily cave, so it's going to take a lot more searching to find more of this rarely preserved soft tissue. So that's very cool. I do like these fossil stories. I'm going to bring us back to the modern day, and to the future. I've got a climate-change-related story, and it's quite an unusual one. It's an article in Nature, but it's not based on a paper; it's based on a presentation that a researcher gave at the Society for Integrative and Comparative Biology annual meeting at the beginning of January. And he's basically got quite a radical proposal to deal with the massive problem of corals in the Caribbean dying off, really, really suffering and struggling with climate change and other issues.

Of course, a serious problem with the oceans warming up. And I think we've covered on the show before, there's a bunch of different ways that people have been looking to maybe overcome or reverse this issue, you know, growing coral in a lab and transplanting it, dropping big blocks of concrete down for coral to grow on that sort of thing, right? I mean, presumably, if this is a radical solution, this is maybe not those.

Yeah, in a way more radical than those. But yeah, exactly as you've said, there's all these things that people have been trying, and people are suggesting, because it's a really urgent problem. The corals in this area have been dying off for decades; loads of them are bleached. The coral reefs themselves are really important because they protect against coastal erosion. There's obviously all the young fish and the ecosystem around them. And once you've got the bleaching effects (for example, there was a massive heatwave last summer that had a really, really bad impact), each time these kinds of things happen you've got the remaining bleached bits of the reefs that are more likely to erode or collapse. And then it's harder to do things like, as you said, transplant new baby corals from the lab and try and plant them there. And, by the way, the article notes that planting all these young native corals hasn't really worked. It's not great, hence the more radical solution being proposed, or at least introduced into the discussion. The question is, what if, instead of growing these native coral species, trying to transplant them and helping them grow, we just give up on the native species and bring in species from other reefs that are inherently hardier, tougher and might thrive in the Caribbean? So, for example, corals from the Indo-Pacific.

I mean, that’s an interesting one, because we had a long read podcast a few weeks ago, about assisted migration, which was moving endangered species away from habitats that are under threat because of climate change, to new habitats, and kind of controversial, right? Kind of considered to be a last resort–

–absolutely–

–and there were concerns about, you know, the threat that the introduced species might bring diseases or upset an ecosystem. And as we heard earlier in the podcast, these things are very finely balanced. I mean, this is kind of the opposite of that, right? It's taking a healthy species to an endangered habitat.

Yeah, and you’re absolutely right, this is both controversial and a last resort. One environmentalist quoted in this article describes it as “unpopular and painful”. Basically, this goes against all of the principles that environmentalists and conservationists usually try to maintain, which is to protect native species and not just bring in other species from outside. The guy who proposed this, his name is Mikhail Matz, I hope I'm pronouncing that right, and there's a quote from him that says “it's an 11th-hour solution. And it's now 11.45”. He wouldn't be suggesting this, I think is the implication there, unless we were in such a dire situation. And dire situations are predicted into the future as well: there are predictions that this coming summer the heatwaves in the Caribbean could be just as bad as, or worse than, they were last year.

And so as you say this was described in a talk, this isn't in a peer-reviewed journal. Is this purely theoretical? Or is this based on some experiments? What are we talking about?

Yeah, this is theoretical at this stage. And at this point, it's so against all the principles that you'd have a really hard time even trying to push anything like this through. The intention here, I think, is to actually start this conversation and say, look, we're not in a good state, we have to talk about this. And there are suggestions for experiments that could be done. You’ve mentioned some of the obvious downsides earlier, like there could be diseases that you've introduced from one reef halfway across the world into another one. So would growing the little transplanted corals in a lab help to reduce the disease risk? Are there areas where you could trial introducing these hardier corals from elsewhere, in places where, if they were to take over and spread, they wouldn't be able to spread out to other regions? And, you know, it absolutely might not work. The Caribbean corals are struggling with the heat and with the pollution there. And also, there are obviously diseases specific to Caribbean corals. So who knows what happens when you bring in these other corals? Will they even survive? But the tone from a lot of people quoted in this article is just one of: we have to try something. One biologist, talking about the things that have been tried so far and that haven't worked, says we either do something else, or we lose the corals.

Hmm, an interesting one, and I'm sure a lot of people have got a lot of opinions on it. So I'm sure we will follow that one closely over the next months, and potentially years. But let's leave it there for this week's Briefing Chat, Shamini. And listeners, if you want to know more about the stories we discussed, or how to get more like them delivered direct to your inbox by signing up to the Nature Briefing, look out for links in the show notes.

And that's all for this episode, then. If you want to get in touch with us, then you can. We’re on X, @NaturePodcast, or you can send us an email to [email protected]. I'm Shamini Bundell.

And I'm Benjamin Thompson. See you next time.

doi: https://doi.org/10.1038/d41586-024-00145-1


DeepMind’s latest AI can solve geometry problems

DeepMind, the Google AI R&D lab, believes that the key to more capable AI systems might lie in uncovering new ways to solve challenging geometry problems.

To that end, DeepMind today unveiled AlphaGeometry, a system that the lab claims can solve nearly as many geometry problems as the average International Mathematical Olympiad gold medalist. AlphaGeometry, the code for which was open-sourced this morning, solves 25 of 30 Olympiad geometry problems within the standard time limit, beating the previous state-of-the-art system’s 10.

“Solving Olympiad-level geometry problems is an important milestone in developing deep mathematical reasoning on the path toward more advanced and general AI systems,” Trieu Trinh and Thang Luong, Google AI research scientists, wrote in a blog post published this morning. “[We] hope that … AlphaGeometry helps open up new possibilities across mathematics, science and AI.”

Why the focus on geometry? DeepMind asserts that proving mathematical theorems, or logically explaining why a theorem (e.g. the Pythagorean theorem) is true, requires both reasoning and the ability to choose from a range of possible steps toward a solution. If DeepMind is right, this problem-solving approach could turn out to be useful in general-purpose AI systems someday.

“Demonstrating that a particular conjecture is true or false stretches the abilities of even the most advanced AI systems today,” read DeepMind press materials shared with TechCrunch. “Toward that goal, being able to prove mathematical theorems … is an important milestone as it showcases the mastery of logical reasoning and the ability to discover new knowledge.”

But training an AI system to solve geometry problems poses unique challenges.

Owing to the complexities of translating proofs into a format machines can understand, there’s a dearth of usable geometry training data. And many of today’s cutting-edge generative AI models, while exceptional at identifying patterns and relationships in data, lack the ability to reason logically through theorems.

DeepMind’s solution was twofold.

In designing AlphaGeometry, the lab paired a “neural language” model — a model architecturally along the lines of ChatGPT — with a “symbolic deduction engine,” an engine that leverages rules (e.g. mathematical rules) to infer solutions to problems. Symbolic engines can be inflexible and slow, especially when dealing with large or complicated datasets. But DeepMind mitigated these issues by having the neural model “guide” the deduction engine through possible answers to given geometry problems.

In lieu of human-generated training data, DeepMind created its own synthetic data, generating 100 million “synthetic theorems” and proofs of varying complexity. The lab then trained AlphaGeometry from scratch on the synthetic data and evaluated it on Olympiad geometry problems.
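The article does not spell out how the synthetic theorems were produced. One way to picture the general idea: start from randomly sampled premises, let a symbolic engine deduce everything it can, then trace each derived fact back to the premises it actually needed, yielding a (premises, conclusion, proof) example with no human involved. The Python sketch below is a toy under loudly stated assumptions: facts are tuples over hypothetical named lines, five rules about parallel (`para`) and perpendicular (`perp`) lines stand in for a real geometry rule set, and `deduce_closure`, `traceback` and `make_examples` are illustrative names, not AlphaGeometry's code.

```python
import itertools
import random


def apply_rules(facts):
    """Yield (new_fact, parent_facts) pairs derivable in one step from `facts`.

    Hypothetical toy rules over lines, standing in for a real geometry rule set:
      para(a,b) -> para(b,a)            perp(a,b) -> perp(b,a)
      para(a,b), para(b,c) -> para(a,c)
      perp(a,b), para(b,c) -> perp(a,c)
      perp(a,b), perp(b,c) -> para(a,c)
    """
    for f in facts:
        yield (f[0], f[2], f[1]), (f,)  # symmetry
    for f, g in itertools.permutations(facts, 2):
        if f[2] != g[1] or f[1] == g[2]:
            continue  # rules need a shared middle line and two distinct end lines
        a, c = f[1], g[2]
        if f[0] == "para" and g[0] == "para":
            yield ("para", a, c), (f, g)
        elif f[0] == "perp" and g[0] == "para":
            yield ("perp", a, c), (f, g)
        elif f[0] == "perp" and g[0] == "perp":
            yield ("para", a, c), (f, g)


def deduce_closure(premises):
    """Forward-chain to a fixed point, recording how each fact was first derived."""
    parents = {p: () for p in premises}  # premises have no parents
    while True:
        new = {}
        for fact, why in apply_rules(list(parents)):
            if fact not in parents and fact not in new:
                new[fact] = why
        if not new:
            return parents
        parents.update(new)


def traceback(fact, parents):
    """Return (premises used, proof steps in dependency order) for a derived fact."""
    used, steps, seen = set(), [], set()

    def visit(f):
        if f in seen:
            return
        seen.add(f)
        if not parents[f]:
            used.add(f)
            return
        for p in parents[f]:
            visit(p)
        steps.append((parents[f], f))

    visit(fact)
    return used, steps


def make_examples(lines, n_premises, seed=0):
    """Sample random premises and turn every derived fact into a training example."""
    rng = random.Random(seed)
    premises = set()
    while len(premises) < n_premises:
        a, b = rng.sample(lines, 2)
        premises.add((rng.choice(["para", "perp"]), a, b))
    parents = deduce_closure(premises)
    examples = []
    for fact in parents:
        if fact in premises:
            continue
        used, steps = traceback(fact, parents)
        mentioned = {line for p in used for line in p[1:]}
        examples.append({
            "premises": sorted(used),
            "conclusion": fact,
            "proof": steps,
            # Lines the proof needs but the conclusion never mentions.
            "auxiliary": sorted(mentioned - set(fact[1:])),
        })
    return examples
```

For instance, `make_examples(["l1", "l2", "l3", "l4"], n_premises=3, seed=1)` returns one example per derived fact. The `auxiliary` field is only a loose analogy: objects a proof relies on but its conclusion never mentions are the kind of thing a “construct” would have to supply.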

Olympiad geometry problems are based on diagrams that need “constructs,” such as points, lines or circles, to be added before they can be solved. Applied to these problems, AlphaGeometry’s neural model predicts which constructs might be useful to add, and AlphaGeometry’s symbolic engine uses those predictions to make deductions about the diagrams and identify likely solutions.

“With so many examples of how these constructs led to proofs, AlphaGeometry’s language model is able to make good suggestions for new constructs when presented with Olympiad geometry problems,” Trinh and Luong write. “One system provides fast, ‘intuitive’ ideas, and the other more deliberate, rational decision-making.”
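The division of labor described above can be sketched as a loop: run the symbolic engine until it stops producing new facts; if the goal has not been reached, ask the proposer for one construct, add it and deduce again. In this hedged Python sketch the language model is replaced by a hypothetical `toy_proposer` that tries a hard-coded list of constructs in order, the rules are hand-written for the classic isosceles-triangle proof (construct the midpoint M of BC, then use SSS congruence), and the search is greedy, one suggestion at a time. The article does not describe AlphaGeometry's actual search strategy, so none of these names or choices should be read as its implementation.

```python
# Hand-written toy rules: (set of required facts, derived fact). A real system
# would use a general geometry rule set; these encode just one classic proof.
RULES = [
    ({"let M be the midpoint of BC"}, "BM = CM"),
    ({"let O be the circumcenter of ABC"}, "OA = OB = OC"),
    # SSS congruence: AB = AC, BM = CM, and side AM is shared by both triangles.
    ({"AB = AC", "BM = CM"}, "triangles ABM and ACM are congruent"),
    ({"triangles ABM and ACM are congruent"}, "angle ABC = angle ACB"),
]
GOAL = "angle ABC = angle ACB"


def deduce(facts, rules):
    """Symbolic step: forward-chain over the rules until no new fact appears."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            if head not in facts and body <= facts:
                facts.add(head)
                changed = True
    return facts


def toy_proposer(facts, goal):
    """Hypothetical stand-in for the language model.

    A trained model would rank candidate constructs given the facts and goal;
    this one ignores the goal and returns the first construct from a fixed
    list that is not already among the facts.
    """
    candidates = ["let O be the circumcenter of ABC", "let M be the midpoint of BC"]
    for construct in candidates:
        if construct not in facts:
            return construct
    return None


def solve(premises, goal, rules, propose, max_constructs=4):
    """Alternate deduction and proposals; return the constructs used, or None."""
    facts = deduce(premises, rules)
    constructs = []
    while goal not in facts:
        if len(constructs) == max_constructs:
            return None  # give up: search budget exhausted
        construct = propose(facts, goal)
        if construct is None:
            return None  # proposer has nothing left to suggest
        constructs.append(construct)
        facts = deduce(facts | {construct}, rules)
    return constructs


print(solve({"AB = AC"}, GOAL, RULES, toy_proposer))
```

Here the first suggestion, the circumcenter O, yields a new fact but no proof, so the loop asks again and the midpoint M completes it: `solve({"AB = AC"}, GOAL, RULES, toy_proposer)` returns both constructs in the order they were tried.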

The results of AlphaGeometry’s problem solving, which were published in a study in the journal Nature this week, are likely to fuel the long-running debate over whether AI systems should be built on symbol manipulation — that is, manipulating symbols that represent knowledge using rules — or the ostensibly more brain-like neural networks.

Proponents of the neural network approach argue that intelligent behavior — from speech recognition to image generation — can emerge from nothing more than massive amounts of data and compute. As opposed to symbolic systems, which solve tasks by defining sets of symbol-manipulating rules dedicated to particular jobs (like editing a line in word processor software), neural networks try to solve tasks through statistical approximation and learning from examples.

Neural networks are the cornerstone of powerful AI systems like OpenAI’s DALL-E 3 and GPT-4. But they’re not the end-all, be-all, supporters of symbolic AI argue; symbolic AI might be better positioned to efficiently encode the world’s knowledge, reason its way through complex scenarios and “explain” how it arrived at an answer.

As a hybrid symbolic-neural network system akin to DeepMind’s AlphaFold 2 and AlphaGo, AlphaGeometry perhaps demonstrates that combining the two approaches, symbol manipulation and neural networks, is the best path forward in the search for generalizable AI. Perhaps.

“Our long-term goal remains to build AI systems that can generalize across mathematical fields, developing the sophisticated problem-solving and reasoning that general AI systems will depend on, all the while extending the frontiers of human knowledge,” Trinh and Luong write. “This approach could shape how the AI systems of the future discover new knowledge, in math and beyond.”


DeepMind AI solves hard geometry problems from mathematics olympiad

By Alex Wilkins

An AI from Google DeepMind can solve some International Mathematical Olympiad (IMO) questions on geometry almost as well as the best human contestants.


"The results of AlphaGeometry are stunning and breathtaking," says Gregor Dolinar, the IMO president. "It seems that AI will win the IMO gold medal much sooner than was thought even a few months ago."

The IMO, aimed at secondary school students, is one of the most difficult maths competitions in the world. Answering questions correctly requires mathematical creativity that AI systems have long struggled with. GPT-4, for instance, which has shown remarkable reasoning ability in other domains, scores 0 per cent on IMO geometry questions, while even specialised AIs struggle to answer as well as average contestants.

This is partly down to the difficulty of the problems, but it is also because of a lack of training data. The competition has been run annually since 1959, and each edition consists of just six questions. Some of the most successful AI systems, however, require millions or billions of data points. Geometry problems, which make up two of the six questions and involve proving facts about angles or lines in complicated shapes, are especially difficult to translate into a computer-friendly format.

Thang Luong at Google DeepMind and his colleagues have bypassed this problem by creating a tool that can generate hundreds of millions of machine-readable geometrical proofs. When they trained an AI called AlphaGeometry using this data and tested it on 30 IMO geometry questions, it answered 25 of them correctly, compared with 25.9 for the average IMO gold medallist.

“Our [current] AI systems are still struggling with the ability to do things like deep reasoning, where we need to plan ahead for many, many steps and also see the big picture, which is why mathematics is such an important benchmark and test set for us on our quest to artificial general intelligence,” Luong told a press conference.

AlphaGeometry consists of two parts, which Luong compares to different thinking systems in the brain: a fast, intuitive system and a slower, more analytical one. The first, intuitive part is a language model, similar to the technology behind ChatGPT. It has been trained on the millions of generated proofs and suggests which theorems and arguments to try next for a problem. Once it suggests a next step, a slower but more careful “symbolic reasoning” engine uses logical and mathematical rules to fully construct the argument that the language model has suggested. The two systems then work in tandem, switching between one another until a problem has been solved.

While this method is remarkably successful at solving IMO geometry problems, the answers it constructs tend to be longer and less “beautiful” than human proofs, says Luong. However, it can also spot things that humans miss. For example, it discovered a better and more general solution to a question from the 2004 IMO than was listed in the official answers.
