How to Do a Systematic Review: A Best Practice Guide for Conducting and Reporting Narrative Reviews, Meta-Analyses, and Meta-Syntheses

Affiliations.

- 1 Behavioural Science Centre, Stirling Management School, University of Stirling, Stirling FK9 4LA, United Kingdom.

- 2 Department of Psychological and Behavioural Science, London School of Economics and Political Science, London WC2A 2AE, United Kingdom.

- 3 Department of Statistics, Northwestern University, Evanston, Illinois 60208, USA.

- PMID: 30089228

- DOI: 10.1146/annurev-psych-010418-102803

Systematic reviews are characterized by a methodical and replicable methodology and presentation. They involve a comprehensive search to locate all relevant published and unpublished work on a subject; a systematic integration of search results; and a critique of the extent, nature, and quality of evidence in relation to a particular research question. The best reviews synthesize studies to draw broad theoretical conclusions about what a literature means, linking theory to evidence and evidence to theory. This guide describes how to plan, conduct, organize, and present a systematic review of quantitative (meta-analysis) or qualitative (narrative review, meta-synthesis) information. We outline core standards and principles and describe commonly encountered problems. Although this guide targets psychological scientists, its high level of abstraction makes it potentially relevant to any subject area or discipline. We argue that systematic reviews are a key methodology for clarifying whether and how research findings replicate and for explaining possible inconsistencies, and we call for researchers to conduct systematic reviews to help elucidate whether there is a replication crisis.

Keywords: evidence; guide; meta-analysis; meta-synthesis; narrative; systematic review; theory.


- Methodology

- Open access

- Published: 11 October 2016

Reviewing the research methods literature: principles and strategies illustrated by a systematic overview of sampling in qualitative research

- Stephen J. Gentles 1,4,

- Cathy Charles 1,

- David B. Nicholas 2,

- Jenny Ploeg 3 &

- K. Ann McKibbon 1

Systematic Reviews volume 5, Article number: 172 (2016)


Overviews of methods are potentially useful means to increase clarity and enhance collective understanding of specific methods topics that may be characterized by ambiguity, inconsistency, or a lack of comprehensiveness. This type of review represents a distinct literature synthesis method, although to date, its methodology remains relatively undeveloped despite several aspects that demand unique review procedures. The purpose of this paper is to initiate discussion about what a rigorous systematic approach to reviews of methods, referred to here as systematic methods overviews, might look like by providing tentative suggestions for approaching specific challenges likely to be encountered. The guidance offered here was derived from experience conducting a systematic methods overview on the topic of sampling in qualitative research.

The guidance is organized into several principles that highlight specific objectives for this type of review given the common challenges that must be overcome to achieve them. Optional strategies for achieving each principle are also proposed, along with discussion of how they were successfully implemented in the overview on sampling. We describe seven paired principles and strategies that address the following aspects: delimiting the initial set of publications to consider, searching beyond standard bibliographic databases, searching without the availability of relevant metadata, selecting publications on purposeful conceptual grounds, defining concepts and other information to abstract iteratively, accounting for inconsistent terminology used to describe specific methods topics, and generating rigorous verifiable analytic interpretations. Since a broad aim in systematic methods overviews is to describe and interpret the relevant literature in qualitative terms, we suggest that iterative decision making at various stages of the review process, and a rigorous qualitative approach to analysis are necessary features of this review type.

Conclusions

We believe that the principles and strategies provided here will be useful to anyone choosing to undertake a systematic methods overview. This paper represents an initial effort to promote high quality critical evaluations of the literature regarding problematic methods topics, which have the potential to promote clearer, shared understandings, and accelerate advances in research methods. Further work is warranted to develop more definitive guidance.


While reviews of methods are not new, they represent a distinct review type whose methodology remains relatively under-addressed in the literature despite the clear implications for unique review procedures. One of few examples to describe it is a chapter containing reflections of two contributing authors in a book of 21 reviews on methodological topics compiled for the British National Health Service, Health Technology Assessment Program [ 1 ]. Notable is their observation of how the differences between the methods reviews and conventional quantitative systematic reviews, specifically attributable to their varying content and purpose, have implications for defining what qualifies as systematic. While the authors describe general aspects of “systematicity” (including rigorous application of a methodical search, abstraction, and analysis), they also describe a high degree of variation within the category of methods reviews itself and so offer little in the way of concrete guidance. In this paper, we present tentative concrete guidance, in the form of a preliminary set of proposed principles and optional strategies, for a rigorous systematic approach to reviewing and evaluating the literature on quantitative or qualitative methods topics. For purposes of this article, we have used the term systematic methods overview to emphasize the notion of a systematic approach to such reviews.

The conventional focus of rigorous literature reviews (i.e., review types for which systematic methods have been codified, including the various approaches to quantitative systematic reviews [ 2 – 4 ], and the numerous forms of qualitative and mixed methods literature synthesis [ 5 – 10 ]) is to synthesize empirical research findings from multiple studies. By contrast, the focus of overviews of methods, including the systematic approach we advocate, is to synthesize guidance on methods topics. The literature consulted for such reviews may include the methods literature, methods-relevant sections of empirical research reports, or both. Thus, this paper adds to previous work published in this journal—namely, recent preliminary guidance for conducting reviews of theory [ 11 ]—that has extended the application of systematic review methods to novel review types that are concerned with subject matter other than empirical research findings.

Published examples of methods overviews illustrate the varying objectives they can have. One objective is to establish methodological standards for appraisal purposes. For example, reviews of existing quality appraisal standards have been used to propose universal standards for appraising the quality of primary qualitative research [ 12 ] or evaluating qualitative research reports [ 13 ]. A second objective is to survey the methods-relevant sections of empirical research reports to establish current practices in methods use and reporting, which Moher and colleagues [ 14 ] recommend as a means for establishing the needs to be addressed in reporting guidelines (see, for example [ 15 , 16 ]). A third objective for a methods review is to offer clarity and enhance collective understanding regarding a specific methods topic that may be characterized by ambiguity, inconsistency, or a lack of comprehensiveness within the available methods literature. An example of this is an overview whose objective was to review the inconsistent definitions of intention-to-treat analysis (the methodologically preferred approach to analyze randomized controlled trial data) that have been offered in the methods literature and propose a solution for improving conceptual clarity [ 17 ]. Such reviews are warranted because students and researchers who must learn or apply research methods typically lack the time to systematically search, retrieve, review, and compare the available literature to develop a thorough and critical sense of the varied approaches regarding certain controversial or ambiguous methods topics.

While systematic methods overviews, as a review type, include both reviews of the methods literature and reviews of methods-relevant sections from empirical study reports, the guidance provided here is primarily applicable to reviews of the methods literature since it was derived from the experience of conducting such a review [ 18 ], described below. To our knowledge, there are no well-developed proposals on how to rigorously conduct such reviews. Such guidance would have the potential to improve the thoroughness and credibility of critical evaluations of the methods literature, which could increase their utility as a tool for generating understandings that advance research methods, both qualitative and quantitative. Our aim in this paper is thus to initiate discussion about what might constitute a rigorous approach to systematic methods overviews. While we hope to promote rigor in the conduct of systematic methods overviews wherever possible, we do not wish to suggest that all methods overviews need be conducted to the same standard. Rather, we believe that the level of rigor may need to be tailored pragmatically to the specific review objectives, which may not always justify the resource requirements of an intensive review process.

The example systematic methods overview on sampling in qualitative research

The principles and strategies we propose in this paper are derived from experience conducting a systematic methods overview on the topic of sampling in qualitative research [ 18 ]. The main objective of that methods overview was to bring clarity and deeper understanding of the prominent concepts related to sampling in qualitative research (purposeful sampling strategies, saturation, etc.). Specifically, we interpreted the available guidance, commenting on areas lacking clarity, consistency, or comprehensiveness (without proposing any recommendations on how to do sampling). This was achieved by a comparative and critical analysis of publications representing the most influential (i.e., highly cited) guidance across several methodological traditions in qualitative research.

The specific methods and procedures for the overview on sampling [ 18 ] from which our proposals are derived were developed both after soliciting initial input from local experts in qualitative research and an expert health librarian (KAM) and through ongoing careful deliberation throughout the review process. To summarize, in that review, we employed a transparent and rigorous approach to search the methods literature, selected publications for inclusion according to a purposeful and iterative process, abstracted textual data using structured abstraction forms, and analyzed (synthesized) the data using a systematic multi-step approach featuring abstraction of text, summary of information in matrices, and analytic comparisons.
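As a rough illustration of what a structured abstraction form and a summary matrix might look like in practice, the following Python sketch stores each abstracted passage as a record and arranges the records in a topic-by-tradition matrix for comparison. The field names, the `build_matrix` helper, and the example records are all hypothetical and are not taken from the overview on sampling.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AbstractionRecord:
    """One abstracted passage; all field names are hypothetical."""
    publication: str  # short label for the source publication
    tradition: str    # e.g. "grounded theory"
    topic: str        # e.g. "saturation"
    excerpt: str      # text abstracted from the publication


def build_matrix(records):
    """Arrange excerpts in a topic-by-tradition matrix for comparison."""
    matrix = defaultdict(lambda: defaultdict(list))
    for rec in records:
        matrix[rec.topic][rec.tradition].append((rec.publication, rec.excerpt))
    # Convert to plain dicts so that looking up a missing cell raises
    # KeyError instead of silently creating an empty entry.
    return {topic: dict(cols) for topic, cols in matrix.items()}


# Placeholder records, invented for illustration only.
records = [
    AbstractionRecord("Author A (book)", "grounded theory", "saturation",
                      "placeholder excerpt 1"),
    AbstractionRecord("Author B (book)", "case study", "saturation",
                      "placeholder excerpt 2"),
    AbstractionRecord("Author A (book)", "grounded theory",
                      "theoretical sampling", "placeholder excerpt 3"),
]
matrix = build_matrix(records)
for topic, by_tradition in matrix.items():
    print(topic, "is covered in:", sorted(by_tradition))
```

Keeping one row per topic and one column per tradition makes gaps visible: a topic with no entry for a tradition is simply missing from that row.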

For this article, we reflected on both the problems and challenges encountered at different stages of the review and our means for selecting justifiable procedures to deal with them. Several principles were then derived by considering the generic nature of these problems, while the generalizable aspects of the procedures used to address them formed the basis of optional strategies. Further details of the specific methods and procedures used in the overview on qualitative sampling are provided below to illustrate both the types of objectives and challenges that reviewers will likely need to consider and our approach to implementing each of the principles and strategies.

Organization of the guidance into principles and strategies

For the purposes of this article, principles are general statements outlining what we propose are important aims or considerations within a particular review process, given the unique objectives or challenges to be overcome with this type of review. These statements follow the general format, “considering the objective or challenge of X, we propose Y to be an important aim or consideration.” Strategies are optional and flexible approaches for implementing the previous principle outlined. Thus, generic challenges give rise to principles, which in turn give rise to strategies.

We organize the principles and strategies below into three sections corresponding to processes characteristic of most systematic literature synthesis approaches: literature identification and selection; data abstraction from the publications selected for inclusion; and analysis, including critical appraisal and synthesis of the abstracted data. Within each section, we also describe the specific methodological decisions and procedures used in the overview on sampling in qualitative research [ 18 ] to illustrate how the principles and strategies for each review process were applied and implemented in a specific case. We expect this guidance and accompanying illustrations will be useful for anyone considering engaging in a methods overview, particularly those who may be familiar with conventional systematic review methods but may not yet appreciate some of the challenges specific to reviewing the methods literature.

Results and discussion

Literature identification and selection

The identification and selection process includes search and retrieval of publications and the development and application of inclusion and exclusion criteria to select the publications that will be abstracted and analyzed in the final review. Literature identification and selection for overviews of the methods literature is challenging and potentially more resource-intensive than for most reviews of empirical research. This is true for several reasons that we describe below, alongside discussion of the potential solutions. Additionally, we suggest in this section how the selection procedures can be chosen to match the specific analytic approach used in methods overviews.

Delimiting a manageable set of publications

One aspect of methods overviews that can make identification and selection challenging is the fact that the universe of literature containing potentially relevant information regarding most methods-related topics is expansive and often unmanageably so. Reviewers are faced with two large categories of literature: the methods literature, where the possible publication types include journal articles, books, and book chapters; and the methods-relevant sections of empirical study reports, where the possible publication types include journal articles, monographs, books, theses, and conference proceedings. In our systematic overview of sampling in qualitative research, exhaustively searching (including retrieval and first-pass screening) all publication types across both categories of literature for information on a single methods-related topic was too burdensome to be feasible. The following proposed principle follows from the need to delimit a manageable set of literature for the review.

Principle #1:

Considering the broad universe of potentially relevant literature, we propose that an important objective early in the identification and selection stage is to delimit a manageable set of methods-relevant publications in accordance with the objectives of the methods overview.

Strategy #1:

To limit the set of methods-relevant publications that must be managed in the selection process, reviewers have the option to initially review only the methods literature, and exclude the methods-relevant sections of empirical study reports, provided this aligns with the review’s particular objectives.

We propose that reviewers are justified in choosing to select only the methods literature when the objective is to map out the range of recognized concepts relevant to a methods topic, to summarize the most authoritative or influential definitions or meanings for methods-related concepts, or to demonstrate a problematic lack of clarity regarding a widely established methods-related concept and potentially make recommendations for a preferred approach to the methods topic in question. For example, in the case of the methods overview on sampling [ 18 ], the primary aim was to define areas lacking in clarity for multiple widely established sampling-related topics. In the review on intention-to-treat in the context of missing outcome data [ 17 ], the authors identified a lack of clarity based on multiple inconsistent definitions in the literature and went on to recommend separating the issue of how to handle missing outcome data from the issue of whether an intention-to-treat analysis can be claimed.

In contrast to strategy #1, it may be appropriate to select the methods-relevant sections of empirical study reports when the objective is to illustrate how a methods concept is operationalized in research practice or reported by authors. For example, one could review all the publications in 2 years’ worth of issues of five high-impact field-related journals to answer questions about how researchers describe implementing a particular method or approach, or to quantify how consistently they define or report using it. Such reviews are often used to highlight gaps in the reporting practices regarding specific methods, which may be used to justify items to address in reporting guidelines (for example, [ 14 – 16 ]).

It is worth recognizing that other authors have advocated broader positions regarding the scope of literature to be considered in a review, expanding on our perspective. Suri [ 10 ] (who, like us, emphasizes how different sampling strategies are suitable for different literature synthesis objectives) has, for example, described a two-stage literature sampling procedure (pp. 96–97). First, reviewers use an initial approach to conduct a broad overview of the field; for reviews of methods topics, this would entail an initial review of the research methods literature. This is followed by a second, more focused stage in which practical examples are purposefully selected; for methods reviews, this would involve sampling the empirical literature to illustrate key themes and variations. While this approach is seductive in its capacity to generate more in-depth and interpretive analytic findings, some reviewers may consider it too resource-intensive to include the second step no matter how selective the purposeful sampling. In the overview on sampling, where we stopped after the first stage [ 18 ], we discussed our selective focus on the methods literature as a limitation that left opportunities for further analysis of the literature. We explicitly recommended, for example, that theoretical sampling was a topic for which a future review of the methods sections of empirical reports was justified to answer specific questions identified in the primary review.

Ultimately, reviewers must make pragmatic decisions that balance resource considerations, combined with informed predictions about the depth and complexity of literature available on their topic, with the stated objectives of their review. The remaining principles and strategies apply primarily to overviews that include the methods literature, although some aspects may be relevant to reviews that include empirical study reports.

Searching beyond standard bibliographic databases

An important reality affecting identification and selection in overviews of the methods literature is the increased likelihood for relevant publications to be located in sources other than journal articles (which is usually not the case for overviews of empirical research, where journal articles generally represent the primary publication type). In the overview on sampling [ 18 ], out of 41 full-text publications retrieved and reviewed, only 4 were journal articles, while 37 were books or book chapters. Since many books and book chapters did not exist electronically, their full text had to be physically retrieved in hardcopy, while 11 publications were retrievable only through interlibrary loan or purchase request. The tasks associated with such retrieval are substantially more time-consuming than electronic retrieval. Since a substantial proportion of methods-related guidance may be located in publication types that are less comprehensively indexed in standard bibliographic databases, identification and retrieval thus become complicated processes.
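To make the retrieval burden concrete, the counts reported above can be converted to proportions. The short Python sketch below does only that arithmetic; it assumes that the 11 publications obtained through interlibrary loan or purchase request are counted among the 41 retrieved, which the text implies but does not state outright.

```python
retrieved = 41          # full-text publications retrieved and reviewed
journal_articles = 4
books_or_chapters = 37
loan_or_purchase = 11   # assumed to be a subset of the 41 retrieved

# The two publication types should account for every retrieved item.
assert journal_articles + books_or_chapters == retrieved


def share(part, whole):
    """Percentage of the whole, rounded to one decimal place."""
    return round(100 * part / whole, 1)


print("journal articles:", share(journal_articles, retrieved), "%")
print("books or chapters:", share(books_or_chapters, retrieved), "%")
print("loan or purchase:", share(loan_or_purchase, retrieved), "%")
```

Under that assumption, roughly one in ten retrieved publications was a journal article, and about a quarter could be obtained only through interlibrary loan or purchase request.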

Principle #2:

Considering that important sources of methods guidance can be located in non-journal publication types (e.g., books, book chapters) that tend to be poorly indexed in standard bibliographic databases, it is important to consider alternative search methods for identifying relevant publications to be further screened for inclusion.

Strategy #2:

To identify books, book chapters, and other non-journal publication types not thoroughly indexed in standard bibliographic databases, reviewers may choose to consult one or more of the following less standard sources: Google Scholar, publisher web sites, or expert opinion.

In the case of the overview on sampling in qualitative research [ 18 ], Google Scholar had two advantages over other standard bibliographic databases: it indexes and returns records of books and book chapters likely to contain guidance on qualitative research methods topics; and it has been validated as providing higher citation counts than ISI Web of Science (a producer of numerous bibliographic databases accessible through institutional subscription) for several non-biomedical disciplines including the social sciences where qualitative research methods are prominently used [ 19 – 21 ]. While we identified numerous useful publications by consulting experts, the author publication lists generated through Google Scholar searches were uniquely useful to identify more recent editions of methods books identified by experts.

Searching without relevant metadata

Determining what publications to select for inclusion in the overview on sampling [ 18 ] could only rarely be accomplished by reviewing the publication’s metadata. This was because for the many books and other non-journal type publications we identified as possibly relevant, the potential content of interest would be located in only a subsection of the publication. In this common scenario for reviews of the methods literature (as opposed to methods overviews that include empirical study reports), reviewers will often be unable to employ standard title, abstract, and keyword database searching or screening as a means for selecting publications.

Principle #3:

Considering that the presence of information about the topic of interest may not be indicated in the metadata for books and similar publication types, it is important to consider other means of identifying potentially useful publications for further screening.

Strategy #3:

One approach to identifying potentially useful books and similar publication types is to consider what classes of such publications (e.g., all methods manuals for a certain research approach) are likely to contain relevant content, then identify, retrieve, and review the full text of corresponding publications to determine whether they contain information on the topic of interest.

In the example of the overview on sampling in qualitative research [ 18 ], the topic of interest (sampling) was one of numerous topics covered in the general qualitative research methods manuals. Consequently, examples from this class of publications first had to be identified for retrieval according to non-keyword-dependent criteria. Thus, all methods manuals within the three research traditions reviewed (grounded theory, phenomenology, and case study) that might contain discussion of sampling were sought through Google Scholar and expert opinion, their full text obtained, and hand-searched for relevant content to determine eligibility. We used tables of contents and index sections of books to aid this hand searching.
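Where a table of contents is available in electronic form, part of this hand searching could in principle be assisted by a simple screen of chapter titles. The Python sketch below is a hypothetical aid of that kind, not the procedure used in the overview on sampling, which relied on manual inspection. The search terms and the table of contents are invented for illustration.

```python
import re

# Hypothetical search terms; a real review would derive these from its topic.
TERMS = ("sampl", "saturation", "case selection")
PATTERN = re.compile("|".join(re.escape(t) for t in TERMS), re.IGNORECASE)


def flag_entries(toc_entries):
    """Return (page, title) pairs whose title mentions a search term."""
    return [(page, title) for page, title in toc_entries
            if PATTERN.search(title)]


# Invented table of contents for an imaginary methods manual.
toc = [
    (1, "Introduction to qualitative inquiry"),
    (45, "Theoretical sampling and saturation"),
    (88, "Coding and memo writing"),
    (130, "Selecting cases: purposeful sampling"),
]
flagged = flag_entries(toc)
for page, title in flagged:
    print(page, title)
```

A screen like this can only point to chapters worth reading. Relevant discussion may sit under titles that mention none of the terms, so flagged entries supplement full-text review rather than replace it.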

Purposefully selecting literature on conceptual grounds

A final consideration in methods overviews relates to the type of analysis used to generate the review findings. Unlike quantitative systematic reviews where reviewers aim for accurate or unbiased quantitative estimates—something that requires identifying and selecting the literature exhaustively to obtain all relevant data available (i.e., a complete sample)—in methods overviews, reviewers must describe and interpret the relevant literature in qualitative terms to achieve review objectives. In other words, the aim in methods overviews is to seek coverage of the qualitative concepts relevant to the methods topic at hand. For example, in the overview of sampling in qualitative research [ 18 ], achieving review objectives entailed providing conceptual coverage of eight sampling-related topics that emerged as key domains. The following principle recognizes that literature sampling should therefore support generating qualitative conceptual data as the input to analysis.

Principle #4:

Since the analytic findings of a systematic methods overview are generated through qualitative description and interpretation of the literature on a specified topic, selection of the literature should be guided by a purposeful strategy designed to achieve adequate conceptual coverage (i.e., representing an appropriate degree of variation in relevant ideas) of the topic according to objectives of the review.

Strategy #4:

One strategy for choosing the purposeful approach to use in selecting the literature according to the review objectives is to consider whether those objectives imply exploring concepts either at a broad overview level, in which case combining maximum variation selection with a strategy that limits yield (e.g., critical case, politically important, or sampling for influence—described below) may be appropriate; or in depth, in which case purposeful approaches aimed at revealing innovative cases will likely be necessary.

In the methods overview on sampling, the implied scope was broad since we set out to review publications on sampling across three divergent qualitative research traditions—grounded theory, phenomenology, and case study—to facilitate making informative conceptual comparisons. Such an approach would be analogous to maximum variation sampling.

At the same time, the purpose of that review was to critically interrogate the clarity, consistency, and comprehensiveness of literature from these traditions that was “most likely to have widely influenced students’ and researchers’ ideas about sampling” (p. 1774) [ 18 ]. In other words, we explicitly set out to review and critique the most established and influential (and therefore dominant) literature, since this represents a common basis of knowledge among students and researchers seeking understanding or practical guidance on sampling in qualitative research. To achieve this objective, we purposefully sampled publications according to the criterion of influence, which we operationalized as how often an author or publication has been referenced in print or informal discourse. This second sampling approach also limited the literature we needed to consider within our broad scope review to a manageable amount.
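One way to picture how seeking variation across traditions can be combined with a yield-limiting criterion such as influence is the following Python sketch. It groups candidate publications by tradition and keeps only the most-cited ones in each group. The cap per tradition, the use of citation count as the sole measure of influence, and the candidate records are all simplifying assumptions made for illustration.

```python
from collections import defaultdict


def select_by_influence(candidates, per_tradition):
    """Keep the most-cited candidates within each tradition.

    Grouping by tradition preserves variation across traditions, and
    the cap per group limits the yield to a manageable number.
    """
    groups = defaultdict(list)
    for pub in candidates:
        groups[pub["tradition"]].append(pub)
    selected = {}
    for tradition, pubs in groups.items():
        ranked = sorted(pubs, key=lambda p: p["cites"], reverse=True)
        selected[tradition] = [p["label"] for p in ranked[:per_tradition]]
    return selected


# Invented candidates; labels and citation counts are placeholders.
candidates = [
    {"label": "GT-1", "tradition": "grounded theory", "cites": 900},
    {"label": "GT-2", "tradition": "grounded theory", "cites": 150},
    {"label": "GT-3", "tradition": "grounded theory", "cites": 400},
    {"label": "PH-1", "tradition": "phenomenology", "cites": 300},
    {"label": "CS-1", "tradition": "case study", "cites": 700},
    {"label": "CS-2", "tradition": "case study", "cites": 50},
]
selection = select_by_influence(candidates, per_tradition=2)
for tradition, labels in selection.items():
    print(tradition, labels)
```

Selecting within each tradition, rather than across the pooled list, prevents a heavily cited tradition from crowding out the others.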

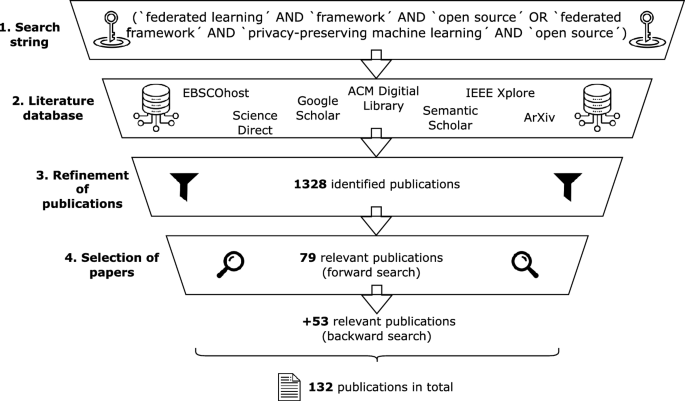

To operationalize this strategy of sampling for influence, we sought to identify both the most influential authors within a qualitative research tradition (all of whose citations were subsequently screened) and the most influential publications on the topic of interest by non-influential authors. This involved a flexible approach that combined multiple indicators of influence to avoid the dilemma that any single indicator might provide inadequate coverage. These indicators included bibliometric data (h-index for author influence [ 22 ]; number of citations for publication influence), expert opinion, and cross-references in the literature (i.e., snowball sampling). As a final selection criterion, a publication was included only if it made an original contribution in terms of novel guidance regarding sampling or a related concept; thus, purely secondary sources were excluded. Publish or Perish software (Anne-Wil Harzing; available at http://www.harzing.com/resources/publish-or-perish ) was used to generate bibliometric data via the Google Scholar database. Figure 1 illustrates how identification and selection in the methods overview on sampling was a multi-faceted and iterative process. The authors selected as influential and the publications selected for inclusion or exclusion are listed in Additional file 1 (Matrices 1, 2a, 2b).
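For readers unfamiliar with the h-index used here as an indicator of author influence, the following is a minimal sketch of how it can be computed from a list of per-publication citation counts, following Hirsch's definition [ 22 ]: the largest h such that h publications each have at least h citations. The function name and the example counts are hypothetical and for illustration only; in the sampling overview the bibliometric data were generated with Publish or Perish, not with code like this.

```python
def h_index(citation_counts):
    """Return the largest h such that h publications have at least h citations each."""
    # Rank publications from most cited to least cited.
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank   # the top `rank` publications all have >= rank citations
        else:
            break      # counts only decrease from here, so h cannot grow further
    return h


# Hypothetical citation counts for one author (not real data).
example_counts = [25, 8, 5, 3, 3, 1]
print(h_index(example_counts))
```

Because a single indicator of this kind may give inadequate coverage, a value like this would be only one input alongside expert opinion and cross-references.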

Fig. 1 Literature identification and selection process used in the methods overview on sampling [ 18 ]

In summary, the strategies of seeking maximum variation and sampling for influence were employed in the sampling overview to meet the specific review objectives described. Reviewers will need to consider the full range of purposeful literature sampling approaches at their disposal in deciding what best matches the specific aims of their own reviews. Suri [ 10 ] has recently retooled Patton’s well-known typology of purposeful sampling strategies (originally intended for primary research) for application to literature synthesis, providing a useful resource in this respect.

Data abstraction

The purpose of data abstraction in rigorous literature reviews is to locate and record all data relevant to the topic of interest from the full text of included publications, making them available for subsequent analysis. Conventionally, a data abstraction form—consisting of numerous distinct conceptually defined fields to which corresponding information from the source publication is recorded—is developed and employed. There are several challenges, however, to the processes of developing the abstraction form and abstracting the data itself when conducting methods overviews, which we address here. Some of these problems and their solutions may be familiar to those who have conducted qualitative literature syntheses, which are similarly conceptual.

Iteratively defining conceptual information to abstract

In the overview on sampling [ 18 ], while we surveyed multiple sources beforehand to develop a list of concepts relevant for abstraction (e.g., purposeful sampling strategies, saturation, sample size), there was no way for us to anticipate some concepts prior to encountering them in the review process. Indeed, in many cases, reviewers are unable to determine the complete set of methods-related concepts that will be the focus of the final review a priori without having systematically reviewed the publications to be included. Thus, defining what information to abstract beforehand may not be feasible.

Principle #5:

Considering the potential impracticality of defining a complete set of relevant methods-related concepts from a body of literature one has not yet systematically read, selecting and defining fields for data abstraction must often be undertaken iteratively. Thus, concepts to be abstracted can be expected to grow and change as data abstraction proceeds.

Strategy #5:

Reviewers can develop an initial form or set of concepts for abstraction purposes according to standard methods (e.g., incorporating expert feedback, pilot testing) and remain attentive to the need to iteratively revise it as concepts are added or modified during the review. Reviewers should document revisions and return to re-abstract data from previously abstracted publications as the new data requirements are determined.

In the sampling overview [ 18 ], we developed and maintained the abstraction form in Microsoft Word. We derived the initial set of abstraction fields from our own knowledge of relevant sampling-related concepts, consultation with local experts, and reviewing a pilot sample of publications. Since the publications in this review included a large proportion of books, the abstraction process often began by flagging the broad sections within a publication containing topic-relevant information for detailed review to identify text to abstract. When reviewing flagged text, the reviewer occasionally encountered an unanticipated concept significant enough to warrant being added as a new field to the abstraction form. For example, a field was added to capture how authors described the timing of sampling decisions, whether before (a priori) or after (ongoing) starting data collection, or whether this was unclear. In these cases, we systematically documented the modification to the form and returned to previously abstracted publications to abstract any information that might be relevant to the new field.
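The revise-and-re-abstract cycle described above can be sketched in code. This is a hypothetical illustration, not the authors' tooling (their form was kept in Microsoft Word): the class `AbstractionForm`, its method names, and the placeholder publication labels are all assumptions introduced here. The sketch shows the two bookkeeping duties named in strategy #5: documenting each revision to the form, and identifying previously abstracted publications that must be revisited when a field is added.

```python
from dataclasses import dataclass, field


@dataclass
class AbstractionForm:
    """Hypothetical abstraction form whose fields can grow during the review."""
    fields: list = field(default_factory=list)
    records: dict = field(default_factory=dict)       # publication -> {field name: abstracted text}
    revision_log: list = field(default_factory=list)  # (field name, reason) for each revision

    def abstract(self, publication, field_name, text):
        """Record abstracted text for one field of one publication."""
        if field_name not in self.fields:
            raise KeyError(f"unknown field: {field_name}")
        self.records.setdefault(publication, {})[field_name] = text

    def add_field(self, name, reason):
        """Add a field, document the revision, and return publications to revisit."""
        if name in self.fields:
            return []
        self.fields.append(name)
        self.revision_log.append((name, reason))
        return list(self.records)  # everything abstracted so far needs a second pass

    def pending(self):
        """Return (publication, field) pairs that have not yet been abstracted."""
        return [(pub, f) for pub, data in self.records.items()
                for f in self.fields if f not in data]


form = AbstractionForm(fields=["saturation", "sample size"])
form.abstract("Publication A", "saturation", "(quoted passage)")
form.abstract("Publication A", "sample size", "(quoted passage)")
to_revisit = form.add_field("timing of sampling decisions",
                            "unanticipated concept found in flagged text")
print(to_revisit)      # publications abstracted before the field existed
print(form.pending())  # outstanding re-abstraction work
```

Keeping the revision log separate from the records is one possible design; it lets a reviewer report every change to the form without inspecting individual publications.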

The logic of this strategy is analogous to the logic used in a form of research synthesis called best fit framework synthesis (BFFS) [ 23 – 25 ]. In that method, reviewers initially code evidence using an a priori framework they have selected. When evidence cannot be accommodated by the selected framework, reviewers then develop new themes or concepts from which they construct a new expanded framework. Both the strategy proposed and the BFFS approach to research synthesis are notable for their rigorous and transparent means to adapt a final set of concepts to the content under review.

Accounting for inconsistent terminology

An important complication affecting the abstraction process in methods overviews is that the language used by authors to describe methods-related concepts can easily vary across publications. For example, authors from different qualitative research traditions often use different terms for similar methods-related concepts. Furthermore, as we found in the sampling overview [ 18 ], there may be cases where no identifiable term, phrase, or label for a methods-related concept is used at all, and a description of it is given instead. This can make searching the text for relevant concepts based on keywords unreliable.

Principle #6:

Since accepted terms may not be used consistently to refer to methods concepts, it is necessary to rely on the definitions for concepts, rather than keywords, to identify relevant information in the publication to abstract.

Strategy #6:

An effective means to systematically identify relevant information is to develop and iteratively adjust written definitions for key concepts (corresponding to abstraction fields) that are consistent with and as inclusive of as much of the literature reviewed as possible. Reviewers then seek information that matches these definitions (rather than keywords) when scanning a publication for relevant data to abstract.

In the abstraction process for the sampling overview [ 18 ], we noted several concepts of interest to the review for which abstraction by keyword was particularly problematic due to inconsistent terminology across publications: sampling, purposeful sampling, sampling strategy, and saturation (for examples, see Additional file 1, Matrices 3a, 3b, 4). We iteratively developed definitions for these concepts by abstracting text from publications that either provided an explicit definition or from which an implicit definition could be derived, which was recorded in fields dedicated to the concept’s definition. Using a method of constant comparison, we used text from definition fields to inform and modify a centrally maintained definition of the corresponding concept to optimize its fit and inclusiveness with the literature reviewed. Table 1 shows, as an example, the final definition constructed in this way for one of the central concepts of the review, qualitative sampling.

We applied iteratively developed definitions when making decisions about what specific text to abstract for an existing field, which allowed us to abstract concept-relevant data even if no recognized keyword was used. For example, this was the case for the sampling-related concept, saturation , where the relevant text available for abstraction in one publication [ 26 ]—“to continue to collect data until nothing new was being observed or recorded, no matter how long that takes”—was not accompanied by any term or label whatsoever.

This comparative analytic strategy (and our approach to analysis more broadly as described in strategy #7, below) is analogous to the process of reciprocal translation —a technique first introduced for meta-ethnography by Noblit and Hare [ 27 ] that has since been recognized as a common element in a variety of qualitative metasynthesis approaches [ 28 ]. Reciprocal translation, taken broadly, involves making sense of a study’s findings in terms of the findings of the other studies included in the review. In practice, it has been operationalized in different ways. Melendez-Torres and colleagues developed a typology from their review of the metasynthesis literature, describing four overlapping categories of specific operations undertaken in reciprocal translation: visual representation, key paper integration, data reduction and thematic extraction, and line-by-line coding [ 28 ]. The approaches suggested in both strategies #6 and #7, with their emphasis on constant comparison, appear to fall within the line-by-line coding category.

Generating credible and verifiable analytic interpretations

The analysis in a systematic methods overview must support its more general objective, which we suggested above is often to offer clarity and enhance collective understanding regarding a chosen methods topic. In our experience, this involves describing and interpreting the relevant literature in qualitative terms. Furthermore, any interpretative analysis required may entail reaching different levels of abstraction, depending on the more specific objectives of the review. For example, in the overview on sampling [ 18 ], we aimed to produce a comparative analysis of how multiple sampling-related topics were treated differently within and among different qualitative research traditions. To promote credibility of the review, however, not only should one seek a qualitative analytic approach that facilitates reaching varying levels of abstraction but that approach must also ensure that abstract interpretations are supported and justified by the source data and not solely the product of the analyst’s speculative thinking.

Principle #7:

Considering the qualitative nature of the analysis required in systematic methods overviews, it is important to select an analytic method whose interpretations can be verified as being consistent with the literature selected, regardless of the level of abstraction reached.

Strategy #7:

We suggest employing the constant comparative method of analysis [ 29 ] because it supports developing and verifying analytic links to the source data throughout progressively interpretive or abstract levels. In applying this method, we advise rigorously documenting how supportive quotes or references to the original texts are carried forward in the successive steps of analysis to allow for easy verification.

The analytic approach used in the methods overview on sampling [ 18 ] comprised four explicit steps, progressing in level of abstraction—data abstraction, matrices, narrative summaries, and final analytic conclusions (Fig. 2 ). While we have positioned data abstraction as the second stage of the generic review process (prior to Analysis), above, we also considered it as an initial step of analysis in the sampling overview for several reasons. First, it involved a process of constant comparisons and iterative decision-making about the fields to add or define during development and modification of the abstraction form, through which we established the range of concepts to be addressed in the review. At the same time, abstraction involved continuous analytic decisions about what textual quotes (ranging in size from short phrases to numerous paragraphs) to record in the fields thus created. This constant comparative process was analogous to open coding in which textual data from publications was compared to conceptual fields (equivalent to codes) or to other instances of data previously abstracted when constructing definitions to optimize their fit with the overall literature as described in strategy #6. Finally, in the data abstraction step, we also recorded our first interpretive thoughts in dedicated fields, providing initial material for the more abstract analytic steps.

Fig. 2 Summary of progressive steps of analysis used in the methods overview on sampling [ 18 ]

In the second step of the analysis, we constructed topic-specific matrices, or tables, by copying relevant quotes from abstraction forms into the appropriate cells of matrices (for the complete set of analytic matrices developed in the sampling review, see Additional file 1, Matrices 3 to 10). Each matrix ranged from one to five pages; row headings, nested three-deep, identified the methodological tradition, author, and publication, respectively; and column headings identified the concepts, which corresponded to abstraction fields. Matrices thus allowed us to make further comparisons across methodological traditions, and between authors within a tradition. In the third step of analysis, we recorded our comparative observations as narrative summaries, in which we used illustrative quotes more sparingly. In the final step, we developed analytic conclusions based on the narrative summaries about the sampling-related concepts within each methodological tradition for which clarity, consistency, or comprehensiveness of the available guidance appeared to be lacking. Higher levels of analysis thus built logically from the lower levels, enabling us to easily verify analytic conclusions by tracing the support for claims back to the original text of the publications reviewed.
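The matrix layout described above (rows nested by tradition, author, and publication; columns corresponding to concepts) can be sketched as a simple data structure. This is an illustrative assumption about one way to represent such a matrix, not the format of the authors' Additional file 1; the function name `build_matrix`, the record keys, and all placeholder values are hypothetical.

```python
from collections import defaultdict


def build_matrix(records):
    """Group abstracted quotes into rows keyed by (tradition, author, publication).

    Each record is a dict with the keys 'tradition', 'author', 'publication',
    'concept', and 'quote'. Columns are the concepts (abstraction fields).
    """
    matrix = defaultdict(dict)
    for r in records:
        row = (r["tradition"], r["author"], r["publication"])
        # A cell may hold several quotes, so each cell is a list.
        matrix[row].setdefault(r["concept"], []).append(r["quote"])
    return dict(matrix)


# Placeholder records (not real abstracted data).
records = [
    {"tradition": "grounded theory", "author": "Author A",
     "publication": "Publication 1", "concept": "saturation",
     "quote": "(quote 1)"},
    {"tradition": "grounded theory", "author": "Author A",
     "publication": "Publication 1", "concept": "sample size",
     "quote": "(quote 2)"},
    {"tradition": "phenomenology", "author": "Author B",
     "publication": "Publication 2", "concept": "saturation",
     "quote": "(quote 3)"},
]

matrix = build_matrix(records)
# Compare one concept across traditions by reading down a single column.
for row in sorted(matrix):
    print(row, matrix[row].get("saturation", []))
```

Keeping the original quote in every cell is what allows a later analytic claim to be traced back to its source text.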

Integrative versus interpretive methods overviews

The analytic product of systematic methods overviews is comparable to qualitative evidence syntheses, since both involve describing and interpreting the relevant literature in qualitative terms. Most qualitative synthesis approaches strive to produce new conceptual understandings that vary in level of interpretation. Dixon-Woods and colleagues [ 30 ] elaborate on a useful distinction, originating from Noblit and Hare [ 27 ], between integrative and interpretive reviews. Integrative reviews focus on summarizing available primary data and involve using largely secure and well defined concepts to do so; definitions are used from an early stage to specify categories for abstraction (or coding) of data, which in turn supports their aggregation; they do not seek as their primary focus to develop or specify new concepts, although they may achieve some theoretical or interpretive functions. For interpretive reviews, meanwhile, the main focus is to develop new concepts and theories that integrate them, with the implication that the concepts developed become fully defined towards the end of the analysis. These two forms are not completely distinct, and “every integrative synthesis will include elements of interpretation, and every interpretive synthesis will include elements of aggregation of data” [ 30 ].

The example methods overview on sampling [ 18 ] could be classified as predominantly integrative because its primary goal was to aggregate influential authors’ ideas on sampling-related concepts; there were also, however, elements of interpretive synthesis since it aimed to develop new ideas about where clarity in guidance on certain sampling-related topics is lacking, and definitions for some concepts were flexible and not fixed until late in the review. We suggest that most systematic methods overviews will be classifiable as predominantly integrative (aggregative). Nevertheless, more highly interpretive methods overviews are also quite possible—for example, when the review objective is to provide a highly critical analysis for the purpose of generating new methodological guidance. In such cases, reviewers may need to sample more deeply (see strategy #4), specifically by selecting empirical research reports (i.e., to go beyond dominant or influential ideas in the methods literature) that are likely to feature innovations or instructive lessons in employing a given method.

In this paper, we have outlined tentative guidance in the form of seven principles and strategies on how to conduct systematic methods overviews, a review type in which methods-relevant literature is systematically analyzed with the aim of offering clarity and enhancing collective understanding regarding a specific methods topic. Our proposals include strategies for delimiting the set of publications to consider, searching beyond standard bibliographic databases, searching without the availability of relevant metadata, selecting publications on purposeful conceptual grounds, defining concepts and other information to abstract iteratively, accounting for inconsistent terminology, and generating credible and verifiable analytic interpretations. We hope the suggestions proposed will be useful to others undertaking reviews on methods topics in future.

As far as we are aware, this is the first published source of concrete guidance for conducting this type of review. It is important to note that our primary objective was to initiate methodological discussion by stimulating reflection on what rigorous methods for this type of review should look like, leaving the development of more complete guidance to future work. While derived from the experience of reviewing a single qualitative methods topic, we believe the principles and strategies provided are generalizable to overviews of both qualitative and quantitative methods topics alike. However, it is expected that additional challenges and insights for conducting such reviews have yet to be defined. Thus, we propose that next steps for developing more definitive guidance should involve an attempt to collect and integrate other reviewers’ perspectives and experiences in conducting systematic methods overviews on a broad range of qualitative and quantitative methods topics. Formalized guidance and standards would improve the quality of future methods overviews, something we believe has important implications for advancing qualitative and quantitative methodology. When undertaken to a high standard, rigorous critical evaluations of the available methods guidance have significant potential to make implicit controversies explicit, and improve the clarity and precision of our understandings of problematic qualitative or quantitative methods issues.

A review process central to most types of rigorous reviews of empirical studies, which we did not explicitly address in a separate review step above, is quality appraisal. The reason we have not treated this as a separate step stems from the different objectives of the primary publications included in overviews of the methods literature (i.e., providing methodological guidance) compared to the primary publications included in the other established review types (i.e., reporting findings from single empirical studies). This is not to say that appraising quality of the methods literature is not an important concern for systematic methods overviews. Rather, appraisal is much more integral to (and difficult to separate from) the analysis step, in which we advocate appraising clarity, consistency, and comprehensiveness, the quality appraisal criteria that we suggest are appropriate for the methods literature. As a second important difference regarding appraisal, we currently advocate appraising the aforementioned aspects at the level of the literature in aggregate rather than at the level of individual publications. One reason for this is that methods guidance from individual publications generally builds on previous literature, and thus we feel that ahistorical judgments about comprehensiveness of single publications lack relevance and utility. Additionally, while some methods authors may express themselves less clearly than others, their guidance can nonetheless be highly influential and useful, and should therefore not be downgraded or ignored based on considerations of clarity, which raises questions about the alternative uses that quality appraisals of individual publications might have.
Finally, legitimate variability in the perspectives that methods authors wish to emphasize, and the levels of generality at which they write about methods, makes critiquing individual publications based on the criterion of clarity a complex and potentially problematic endeavor that is beyond the scope of this paper to address. By appraising the current state of the literature at a holistic level, reviewers stand to identify important gaps in understanding that represent valuable opportunities for further methodological development.

To summarize, the principles and strategies provided here may be useful to those seeking to undertake their own systematic methods overview. Additional work is needed, however, to establish guidance that is comprehensive by comparing the experiences from conducting a variety of methods overviews on a range of methods topics. Efforts that further advance standards for systematic methods overviews have the potential to promote high-quality critical evaluations that produce conceptually clear and unified understandings of problematic methods topics, thereby accelerating the advance of research methodology.

Hutton JL, Ashcroft R. What does “systematic” mean for reviews of methods? In: Black N, Brazier J, Fitzpatrick R, Reeves B, editors. Health services research methods: a guide to best practice. London: BMJ Publishing Group; 1998. p. 249–54.


Higgins JPT, Green S, editors. Cochrane handbook for systematic reviews of interventions. Version 5.1.0. The Cochrane Collaboration; 2011.

Centre for Reviews and Dissemination: Systematic reviews: CRD’s guidance for undertaking reviews in health care . York: Centre for Reviews and Dissemination; 2009.

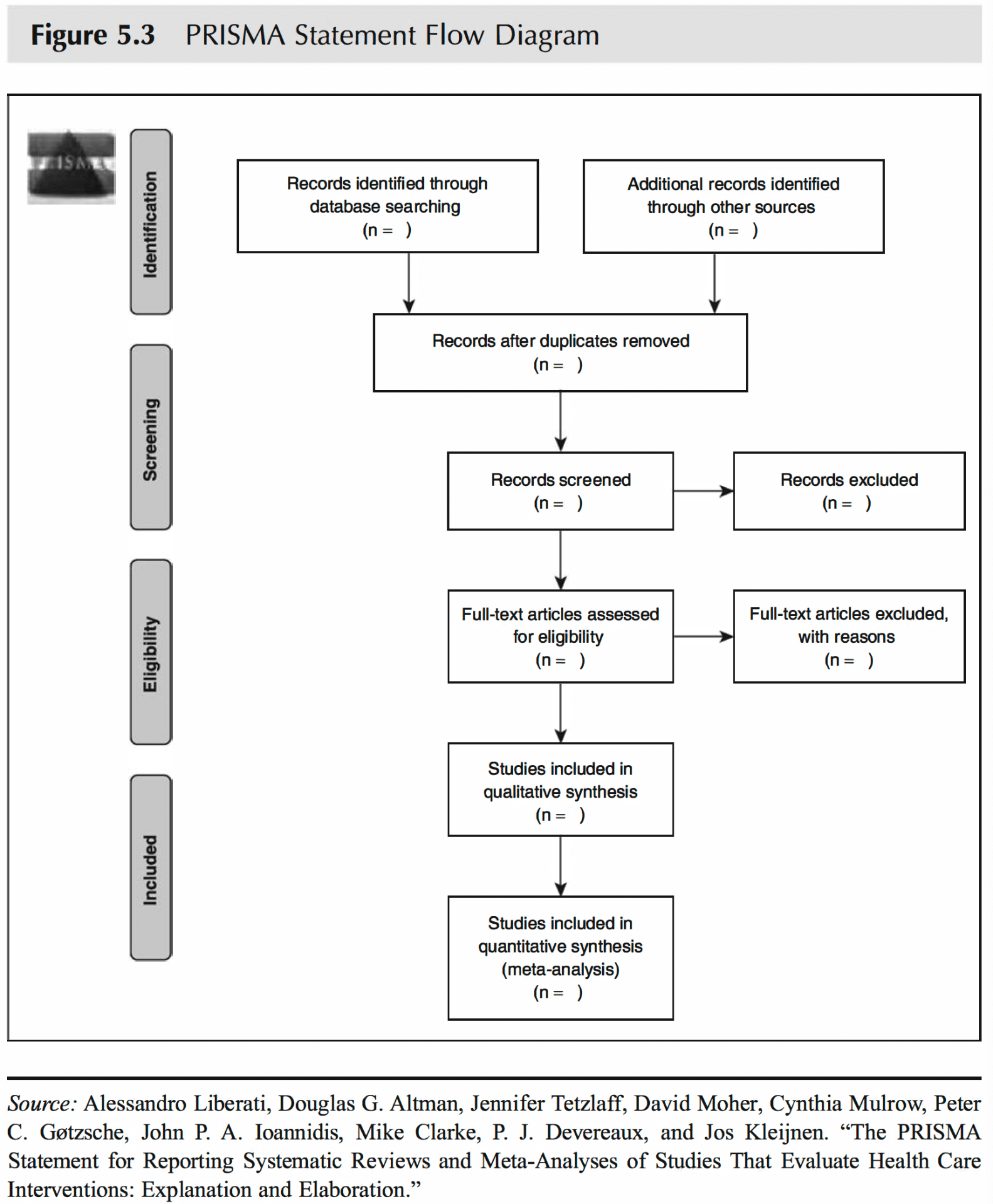

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JPA, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700.

Barnett-Page E, Thomas J. Methods for the synthesis of qualitative research: a critical review. BMC Med Res Methodol. 2009;9(1):59.


Kastner M, Tricco AC, Soobiah C, Lillie E, Perrier L, Horsley T, Welch V, Cogo E, Antony J, Straus SE. What is the most appropriate knowledge synthesis method to conduct a review? Protocol for a scoping review. BMC Med Res Methodol. 2012;12(1):1–1.


Booth A, Noyes J, Flemming K, Gerhardus A. Guidance on choosing qualitative evidence synthesis methods for use in health technology assessments of complex interventions. In: Integrate-HTA. 2016.

Booth A, Sutton A, Papaioannou D. Systematic approaches to successful literature review. 2nd ed. London: Sage; 2016.

Hannes K, Lockwood C. Synthesizing qualitative research: choosing the right approach. Chichester: Wiley-Blackwell; 2012.

Suri H. Towards methodologically inclusive research syntheses: expanding possibilities. New York: Routledge; 2014.

Campbell M, Egan M, Lorenc T, Bond L, Popham F, Fenton C, Benzeval M. Considering methodological options for reviews of theory: illustrated by a review of theories linking income and health. Syst Rev. 2014;3(1):1–11.

Cohen DJ, Crabtree BF. Evaluative criteria for qualitative research in health care: controversies and recommendations. Ann Fam Med. 2008;6(4):331–9.

Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349–57.


Moher D, Schulz KF, Simera I, Altman DG. Guidance for developers of health research reporting guidelines. PLoS Med. 2010;7(2):e1000217.

Moher D, Tetzlaff J, Tricco AC, Sampson M, Altman DG. Epidemiology and reporting characteristics of systematic reviews. PLoS Med. 2007;4(3):e78.

Chan AW, Altman DG. Epidemiology and reporting of randomised trials published in PubMed journals. Lancet. 2005;365(9465):1159–62.

Alshurafa M, Briel M, Akl EA, Haines T, Moayyedi P, Gentles SJ, Rios L, Tran C, Bhatnagar N, Lamontagne F, et al. Inconsistent definitions for intention-to-treat in relation to missing outcome data: systematic review of the methods literature. PLoS One. 2012;7(11):e49163.


Gentles SJ, Charles C, Ploeg J, McKibbon KA. Sampling in qualitative research: insights from an overview of the methods literature. Qual Rep. 2015;20(11):1772–89.

Harzing A-W, Alakangas S. Google Scholar, Scopus and the Web of Science: a longitudinal and cross-disciplinary comparison. Scientometrics. 2016;106(2):787–804.

Harzing A-WK, van der Wal R. Google Scholar as a new source for citation analysis. Ethics Sci Environ Polit. 2008;8(1):61–73.

Kousha K, Thelwall M. Google Scholar citations and Google Web/URL citations: a multi‐discipline exploratory analysis. J Assoc Inf Sci Technol. 2007;58(7):1055–65.

Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci U S A. 2005;102(46):16569–72.

Booth A, Carroll C. How to build up the actionable knowledge base: the role of ‘best fit’ framework synthesis for studies of improvement in healthcare. BMJ Quality Safety. 2015;24(11):700–8.

Carroll C, Booth A, Leaviss J, Rick J. “Best fit” framework synthesis: refining the method. BMC Med Res Methodol. 2013;13(1):37.

Carroll C, Booth A, Cooper K. A worked example of “best fit” framework synthesis: a systematic review of views concerning the taking of some potential chemopreventive agents. BMC Med Res Methodol. 2011;11(1):29.

Cohen MZ, Kahn DL, Steeves DL. Hermeneutic phenomenological research: a practical guide for nurse researchers. Thousand Oaks: Sage; 2000.

Noblit GW, Hare RD. Meta-ethnography: synthesizing qualitative studies. Newbury Park: Sage; 1988.


Melendez-Torres GJ, Grant S, Bonell C. A systematic review and critical appraisal of qualitative metasynthetic practice in public health to develop a taxonomy of operations of reciprocal translation. Res Synthesis Methods. 2015;6(4):357–71.


Glaser BG, Strauss A. The discovery of grounded theory. Chicago: Aldine; 1967.

Dixon-Woods M, Agarwal S, Young B, Jones D, Sutton A. Integrative approaches to qualitative and quantitative evidence. In: UK National Health Service. 2004. p. 1–44.


Acknowledgements

Not applicable.

There was no funding for this work.

Availability of data and materials

The systematic methods overview used as a worked example in this article (Gentles SJ, Charles C, Ploeg J, McKibbon KA: Sampling in qualitative research: insights from an overview of the methods literature. The Qual Rep 2015, 20(11):1772-1789) is available from http://nsuworks.nova.edu/tqr/vol20/iss11/5 .

Authors’ contributions

SJG wrote the first draft of this article, with CC contributing to drafting. All authors contributed to revising the manuscript. All authors except CC (deceased) approved the final draft. SJG, CC, KAM, and JP were involved in developing methods for the systematic methods overview on sampling.

Authors’ information

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Ethics approval and consent to participate

Author information

Authors and affiliations

Department of Clinical Epidemiology and Biostatistics, McMaster University, Hamilton, Ontario, Canada

Stephen J. Gentles, Cathy Charles & K. Ann McKibbon

Faculty of Social Work, University of Calgary, Alberta, Canada

David B. Nicholas

School of Nursing, McMaster University, Hamilton, Ontario, Canada

Jenny Ploeg

CanChild Centre for Childhood Disability Research, McMaster University, 1400 Main Street West, IAHS 408, Hamilton, ON, L8S 1C7, Canada

Stephen J. Gentles


Corresponding author

Correspondence to Stephen J. Gentles .

Additional information

Cathy Charles is deceased

Additional file

Additional file 1: Submitted: Analysis_matrices. (DOC 330 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.


About this article

Cite this article

Gentles, S.J., Charles, C., Nicholas, D.B. et al. Reviewing the research methods literature: principles and strategies illustrated by a systematic overview of sampling in qualitative research. Syst Rev 5, 172 (2016). https://doi.org/10.1186/s13643-016-0343-0


Received: 06 June 2016

Accepted: 14 September 2016

Published: 11 October 2016

DOI: https://doi.org/10.1186/s13643-016-0343-0


- Systematic review

- Literature selection

- Research methods

- Research methodology

- Overview of methods

- Systematic methods overview

- Review methods

Systematic Reviews

ISSN: 2046-4053


Archer Library

Quantitative research: literature review


Exploring the literature review

Literature review model: 6 steps.

Adapted from The Literature Review , Machi & McEvoy (2009, p. 13).


1. Select a Topic

"All research begins with curiosity" (Machi & McEvoy, 2009, p. 14)

Selection of a topic, and fully defined research interest and question, is supervised (and approved) by your professor. Tips for crafting your topic include:

- Be specific. Take time to define your interest.

- Topic Focus. Fully describe and sufficiently narrow the focus for research.

- Academic Discipline. Learn more about your area of research & refine the scope.

- Avoid Bias. Be aware of bias that you (as a researcher) may have.

- Document your research. Use Google Docs to track your research process.

- Research apps. Consider using Evernote or Zotero to track your research.

Consider Purpose

What will your topic and research address?

In The Literature Review: A Step-by-Step Guide for Students , Ridley (2008, pp. 16-17) explains that literature reviews serve several purposes, including the following:

- Historical background for the research;

- Overview of current field provided by "contemporary debates, issues, and questions;"

- Theories and concepts related to your research;

- Introduce "relevant terminology" - or academic language - being used in the field;

- Connect to existing research - does your work "extend or challenge [this] or address a gap;"

- Provide "supporting evidence for a practical problem or issue" that your research addresses.

★ Schedule a research appointment

At this point in your literature review, take time to meet with a librarian. Why? Understanding the subject terminology used in databases can be challenging. Archer Librarians can help you structure a search, preparing you for step two. How? Contact a librarian directly or use the online form to schedule an appointment. Details are provided in the adjacent Schedule an Appointment box.

2. Search the Literature

Collect & Select Data: Preview, select, and organize

Archer Library is your go-to resource for this step in your literature review process. The literature search will include books and ebooks, scholarly and practitioner journals, theses and dissertations, and indexes. You may also choose to include web sites, blogs, open access resources, and newspapers. This library guide provides access to resources needed to complete a literature review.

Books & eBooks: Archer Library & OhioLINK


Databases: Scholarly & Practitioner Journals

Review the Library Databases tab on this library guide; it provides links to recommended databases for Education & Psychology, Business, and General & Social Sciences.

To expand your journal search, use the Databases A to Z list , a complete listing of available AU Library and OhioLINK databases. Search the list by subject, type, or name, or use the search box for a general title search. The A to Z list also includes open access resources and select internet sites.

Databases: Theses & Dissertations

Review the Library Databases tab on this guide; it includes Theses & Dissertation resources. AU Library also holds theses and dissertations written by AU students in print; search the library catalog for these titles.

Did you know? If you are looking for particular chapters within a dissertation that is not fully available online, it is possible to submit an ILL article request . Do this instead of requesting the entire dissertation.

Newspapers: Databases & Internet

Consider current literature in your academic field. AU Library's database collection includes The Chronicle of Higher Education and The Wall Street Journal . The Internet Resources tab in this guide provides links to newspapers and online journals such as Inside Higher Ed , COABE Journal , and Education Week .

The Chronicle of Higher Education has the nation’s largest newsroom dedicated to covering colleges and universities. It is a source of news, information, and jobs for college and university faculty members and administrators.

The Chronicle features complete contents of the latest print issue; daily news and advice columns; current job listings; archive of previously published content; discussion forums; and career-building tools such as online CV management and salary databases. Dates covered: 1970-present.

Search Strategies & Boolean Operators

There are three basic Boolean operators: AND, OR, and NOT.

Used with your search terms, Boolean operators will either expand or limit results. What purpose do they serve? They help to define the relationship between your search terms. For example, using the operator AND will combine the terms, narrowing the search to items that contain all of them. When searching some databases, and Google, the operator AND may be implied.

Overview of Boolean terms

| AND | OR | NOT |
| --- | --- | --- |
| Search results will contain all of the terms. | Search results will contain any of the search terms. | Search results exclude the specified search term. |
| Search for adult learning AND online education; you will find items that contain both terms. | Search for adult learning OR online education; you will find items that contain either term. | Search for adult learning NOT online education; you will find items that contain adult learning but not online education. |
| AND connects terms, limits the search, and will reduce the number of results returned. | OR redefines connection of the terms, expands the search, and increases the number of results returned. | NOT excludes results from the search term and reduces the number of results. |
| Example search: adult learning AND online education | Example search: adult learning OR online education | Example search: adult learning NOT online education |

About the example: Boolean searches were conducted on November 4, 2019; result numbers may vary at a later date. No additional database limiters were set to further narrow search returns.
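The effect of the three operators can be sketched with Python sets. This is only an illustration: the four records and the helper `matching_ids` are invented for the example and do not come from any database, and real databases match terms in more sophisticated ways than a plain substring test.

```python
# Toy illustration of Boolean operators using Python sets.
# The records are invented for this sketch; they are not database results.
records = {
    1: "adult learning in community colleges",
    2: "online education for adult learning programs",
    3: "online education platforms in secondary schools",
    4: "adult learning and workplace training",
}


def matching_ids(phrase):
    """Return the IDs of records whose text contains the phrase."""
    return {rid for rid, text in records.items() if phrase in text}


adult = matching_ids("adult learning")
online = matching_ids("online education")

and_results = adult & online   # AND: both phrases must appear (narrows)
or_results = adult | online    # OR: either phrase may appear (expands)
not_results = adult - online   # NOT: first phrase without the second (narrows)

print("AND:", sorted(and_results))  # AND: [2]
print("OR: ", sorted(or_results))   # OR:  [1, 2, 3, 4]
print("NOT:", sorted(not_results))  # NOT: [1, 4]
```

In this toy data, AND returns the fewest records and OR the most, which mirrors the narrowing and expanding behavior described in the overview.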

Database Search Limiters

Database strategies for targeted search results.

Most databases include limiters, or additional parameters, that you may use to strategically focus search results. EBSCO databases, such as Education Research Complete & Academic Search Complete, provide options to:

- Limit results to full text;

- Limit results to scholarly journals and to items with references available;

- Limit results by source type, such as journals, magazines, conference papers, reviews, and newspapers;

- Limit results by publication date.

Keep in mind that these tools are defined as limiters for a reason; adding them to a search will limit the number of results returned. This can be a double-edged sword. How?

- If limiting results to full-text only, you may miss an important piece of research that could change the direction of your research. Interlibrary loan is available to students, free of charge. Request articles that are not available in full-text; they will be sent to you via email.

- If narrowing publication date, you may eliminate significant historical - or recent - research conducted on your topic.

- Limiting resource type to a specific type of material may cause bias in the research results.

Use limiters with care. When starting a search, consider opting out of limiters until the initial literature screening is complete. The second or third time through your research may be the ideal time to focus on specific time periods or material (scholarly vs newspaper).
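The trade-off described above can be sketched in Python as a series of filters over a result list. Everything here is hypothetical: the `Record` fields, the sample records, and the `apply_limiters` function are invented for illustration and do not reflect how EBSCO or any other database implements its limiters.

```python
from dataclasses import dataclass


@dataclass
class Record:
    title: str
    year: int
    source_type: str
    full_text: bool


# Invented sample records, for illustration only.
records = [
    Record("Adult learners online", 2018, "journal", True),
    Record("Foundations of adult education", 1985, "journal", False),
    Record("Campus news on e-learning", 2019, "newspaper", True),
    Record("Online pedagogy review", 2012, "journal", False),
]


def apply_limiters(items, full_text_only=False, min_year=None, source_type=None):
    """Return only the records that pass every limiter that is switched on."""
    results = list(items)
    if full_text_only:
        results = [r for r in results if r.full_text]
    if min_year is not None:
        results = [r for r in results if r.year >= min_year]
    if source_type is not None:
        results = [r for r in results if r.source_type == source_type]
    return results


unlimited = apply_limiters(records)
limited = apply_limiters(
    records, full_text_only=True, min_year=2010, source_type="journal"
)
excluded = [r.title for r in records if r not in limited]

print(len(unlimited), len(limited))  # 4 1
print(excluded)  # the three titles the limiters removed
```

Listing what the limiters removed, as `excluded` does here, is one way to check whether a historical study or a record without full text was dropped before deciding to keep the limiters switched on.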

★ Truncating Search Terms

Expanding your search term at the root.

Truncating is often referred to as 'wildcard' searching. Databases may have their own specific wildcard symbols; however, the most commonly used are the asterisk (*) and the question mark (?). When used within your search, they will expand the returned results.

Asterisk (*) Wildcard

Using the asterisk wildcard will return varied spellings of the truncated word. In the following example, the search term education was truncated after the letter "t."

| Original Search | Truncated Search |
| --- | --- |
| adult education | adult educat* |
| Results included | educate, education, educator, educators'/educators, educating, & educational |
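Asterisk truncation can be approximated with a regular expression, as in the sketch below. The function `truncation_to_regex` and the word list are hypothetical, and the sketch assumes that `*` stands for zero or more letters; each database defines its own wildcard rules, so consult its help pages for the exact behavior.

```python
import re


def truncation_to_regex(term):
    """Convert a truncated term such as 'educat*' into a compiled regex.

    Assumption for this sketch: '*' stands for zero or more letters.
    """
    parts = [re.escape(part) for part in term.split("*")]
    pattern = r"\b" + r"[a-z]*".join(parts) + r"\b"
    return re.compile(pattern, re.IGNORECASE)


# Invented word list: six variants of the root plus two non-matches.
words = [
    "educate", "education", "educator", "educators",
    "educating", "educational", "edit", "reeducate",
]

pattern = truncation_to_regex("educat*")
matches = [w for w in words if pattern.fullmatch(w)]

print(matches)
# ['educate', 'education', 'educator', 'educators', 'educating', 'educational']
```

The last two words in the list are not returned because they do not begin with the root "educat", which is why the position of the truncation symbol matters.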

Explore these database help pages for additional information on crafting search terms.

- EBSCO Connect: Basic Searching with EBSCO

- EBSCO Connect: Searching with Boolean Operators

- EBSCO Connect: Searching with Wildcards and Truncation Symbols

- ProQuest Help: Search Tips

- ERIC: How does ERIC search work?

★ EBSCO Databases & Google Drive

Tips for saving research directly to Google Drive.

Researching in an EBSCO database?

It is possible to save articles (PDF and HTML) and abstracts in EBSCOhost databases directly to Google Drive. Select the Google Drive icon and authenticate using a Google account; an EBSCO folder will then be created in your account. This is a great option for managing your research. If you are documenting your research in a Google Doc, consider linking your notes to the articles saved in Drive.


EBSCOHost Databases & Google Drive: Managing your Research

This video features an overview of how to use Google Drive with EBSCO databases to help manage your research. It presents information for connecting an active Google account to EBSCO and steps needed to provide permission for EBSCO to manage a folder in Drive.


Defining Literature Review

What is a literature review?

A definition from the Online Dictionary for Library and Information Science .

A literature review is "a comprehensive survey of the works published in a particular field of study or line of research, usually over a specific period of time, in the form of an in-depth, critical bibliographic essay or annotated list in which attention is drawn to the most significant works" (Reitz, 2014).

A systematic review is "a literature review focused on a specific research question, which uses explicit methods to minimize bias in the identification, appraisal, selection, and synthesis of all the high-quality evidence pertinent to the question" (Reitz, 2014).


About this page

EBSCO Connect [Discovery and Search]. (2022). Searching with boolean operators. Retrieved May 3, 2022, from https://connect.ebsco.com/s/?language=en_US

EBSCO Connect [Discovery and Search]. (2022). Searching with wildcards and truncation symbols. Retrieved May 3, 2022, from https://connect.ebsco.com/s/?language=en_US

Machi, L.A. & McEvoy, B.T. (2009). The literature review . Thousand Oaks, CA: Corwin Press.

Reitz, J.M. (2014). Online dictionary for library and information science. ABC-CLIO, Libraries Unlimited . Retrieved from https://www.abc-clio.com/ODLIS/odlis_A.aspx

Ridley, D. (2008). The literature review: A step-by-step guide for students . Thousand Oaks, CA: Sage Publications, Inc.

Archer Librarians

Schedule an appointment.

Contact a librarian directly (email), or submit a request form. If you have worked with someone before, you can request them on the form.

- ★ Archer Library Help • Online Request Form

- Carrie Halquist • Reference & Instruction

- Jessica Byers • Reference & Curation

- Don Reams • Corrections Education & Reference

- Diane Schrecker • Education & Head of the IRC

- Tanaya Silcox • Technical Services & Business

- Sarah Thomas • Acquisitions & ATS Librarian


- Last Updated: Jun 27, 2024 11:14 AM

- URL: https://libguides.ashland.edu/quantitative

Archer Library • Ashland University © Copyright 2023. An Equal Opportunity/Equal Access Institution.


The Ohio State University


Literature Review

What exactly is a literature review?

- Critical Exploration and Synthesis: It involves a thorough and critical examination of existing research, going beyond simple summaries to synthesize information.

- Reorganizing Key Information: Involves structuring and categorizing the main ideas and findings from various sources.

- Offering Fresh Interpretations: Provides new perspectives or insights into the research topic.

- Merging New and Established Insights: Integrates both recent findings and well-established knowledge in the field.

- Analyzing Intellectual Trajectories: Examines the evolution and debates within a specific field over time.

- Contextualizing Current Research: Places recent research within the broader academic landscape, showing its relevance and relation to existing knowledge.

- Detailed Overview of Sources: Gives a comprehensive summary of relevant books, articles, and other scholarly materials.

- Highlighting Significance: Emphasizes the importance of various research works to the specific topic of study.

How do Literature Reviews Differ from Academic Research Papers?

- Focus on Existing Arguments: Literature reviews summarize and synthesize existing research, unlike research papers that present new arguments.

- Secondary vs. Primary Research: Literature reviews are based on secondary sources, while research papers often include primary research.

- Foundational Element vs. Main Content: In research papers, literature reviews are usually a part of the background, not the main focus.

- Lack of Original Contributions: Literature reviews do not introduce new theories or findings, which is a key component of research papers.

Purpose of Literature Reviews

- Drawing from Diverse Fields: Literature reviews incorporate findings from various fields like health, education, psychology, business, and more.

- Prioritizing High-Quality Studies: They emphasize original, high-quality research for accuracy and objectivity.

- Serving as Comprehensive Guides: Offer quick, in-depth insights for understanding a subject thoroughly.

- Foundational Steps in Research: Act as a crucial first step in conducting new research by summarizing existing knowledge.

- Providing Current Knowledge for Professionals: Keep professionals updated with the latest findings in their fields.

- Demonstrating Academic Expertise: In academia, they showcase the writer’s deep understanding and contribute to the background of research papers.

- Essential for Scholarly Research: A deep understanding of literature is vital for conducting and contextualizing scholarly research.

A Literature Review is Not About:

- Merely Summarizing Sources: It’s not just a compilation of summaries of various research works.

- Ignoring Contradictions: It does not overlook conflicting evidence or viewpoints in the literature.

- Being Unstructured: It’s not a random collection of information without a clear organizing principle.