Systematic Review | Definition, Example & Guide

Published on June 15, 2022 by Shaun Turney. Revised on November 20, 2023.

A systematic review is a type of review that uses repeatable methods to find, select, and synthesize all available evidence. It answers a clearly formulated research question and explicitly states the methods used to arrive at the answer.

Example: In the systematic review used as the running example in this guide, Boyle and colleagues answered the question “What is the effectiveness of probiotics in reducing eczema symptoms and improving quality of life in patients with eczema?”

In this context, a probiotic is a health product that contains live microorganisms and is taken by mouth. Eczema is a common skin condition that causes red, itchy skin.

What is a systematic review?

A review is an overview of the research that’s already been completed on a topic.

What makes a systematic review different from other types of reviews is that the research methods are designed to reduce bias. The methods are repeatable, and the approach is formal and systematic:

- Formulate a research question

- Develop a protocol

- Search for all relevant studies

- Apply the selection criteria

- Extract the data

- Synthesize the data

- Write and publish a report

Although multiple sets of guidelines exist, the Cochrane Handbook for Systematic Reviews is among the most widely used. It provides detailed guidelines on how to complete each step of the systematic review process.

Systematic reviews are most commonly used in medical and public health research, but they can also be found in other disciplines.

Systematic reviews typically answer their research question by synthesizing all available evidence and evaluating the quality of the evidence. Synthesizing means bringing together different information to tell a single, cohesive story. The synthesis can be narrative (qualitative), quantitative, or both.

Here's why students love Scribbr's proofreading services

Discover proofreading & editing

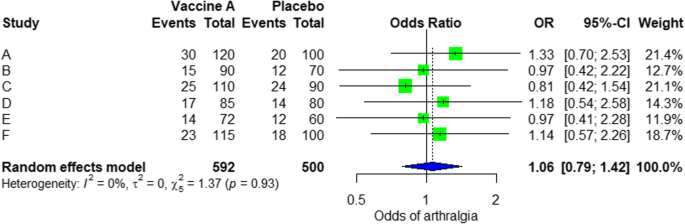

Systematic review vs. meta-analysis

Systematic reviews often quantitatively synthesize the evidence using a meta-analysis. A meta-analysis is a statistical analysis, not a type of review.

A meta-analysis is a technique to synthesize results from multiple studies. It’s a statistical analysis that combines the results of two or more studies, usually to estimate an effect size.
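To make "combining the results of two or more studies" more concrete, here is a minimal sketch of one common way to pool effect sizes: a fixed-effect, inverse-variance weighted average. The choice of a fixed-effect model, the function name `pool_fixed_effect`, and the numbers are assumptions made for illustration; they are not taken from any review discussed in this guide.

```python
import math

def pool_fixed_effect(effects, std_errors):
    """Inverse-variance weighted mean of study effect sizes (fixed-effect model)."""
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("need one standard error per effect, and at least one study")
    # More precise studies (smaller standard error) receive larger weights
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    # Approximate 95% confidence interval under a normal approximation
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci

# Hypothetical effect sizes (e.g. mean differences) and standard errors
pooled, se, ci = pool_fixed_effect([-0.5, -0.1, -0.3], [0.1, 0.2, 0.2])
print(round(pooled, 3), round(se, 3), ci)
```

In practice, a random-effects model is often preferred when the studies differ in populations or methods, and dedicated statistical software is normally used rather than hand-written code.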

Systematic review vs. literature review

A literature review is a type of review that uses a less systematic and formal approach than a systematic review. Typically, an expert in a topic will qualitatively summarize and evaluate previous work, without using a formal, explicit method.

Although literature reviews are often less time-consuming and can be insightful or helpful, they have a higher risk of bias and are less transparent than systematic reviews.

Systematic review vs. scoping review

Similar to a systematic review, a scoping review is a type of review that tries to minimize bias by using transparent and repeatable methods.

However, a scoping review isn’t a type of systematic review. The most important difference is the goal: rather than answering a specific question, a scoping review explores a topic. The researcher tries to identify the main concepts, theories, and evidence, as well as gaps in the current research.

Sometimes scoping reviews are an exploratory preparation step for a systematic review, and sometimes they are a standalone project.

When to conduct a systematic review

A systematic review is a good choice of review if you want to answer a question about the effectiveness of an intervention, such as a medical treatment.

To conduct a systematic review, you’ll need the following:

- A precise question, usually about the effectiveness of an intervention. The question needs to be about a topic that’s previously been studied by multiple researchers. If there’s no previous research, there’s nothing to review.

- If you’re doing a systematic review on your own (e.g., for a research paper or thesis), you should take appropriate measures to ensure the validity and reliability of your research.

- Access to databases and journal archives. Often, your educational institution provides you with access.

- Time. A professional systematic review is a time-consuming process: it will take the lead author about six months of full-time work. If you’re a student, you should narrow the scope of your systematic review and stick to a tight schedule.

- Bibliographic, word-processing, spreadsheet, and statistical software. For example, you could use EndNote, Microsoft Word, Excel, and SPSS.

Pros and cons of systematic reviews

Systematic reviews have many pros.

- They minimize research bias by considering all available evidence and evaluating each study for bias.

- Their methods are transparent, so they can be scrutinized by others.

- They’re thorough: they summarize all available evidence.

- They can be replicated and updated by others.

Systematic reviews also have a few cons.

- They’re time-consuming.

- They’re narrow in scope: they only answer the precise research question.

Step-by-step example of a systematic review

The 7 steps for conducting a systematic review are explained with an example.

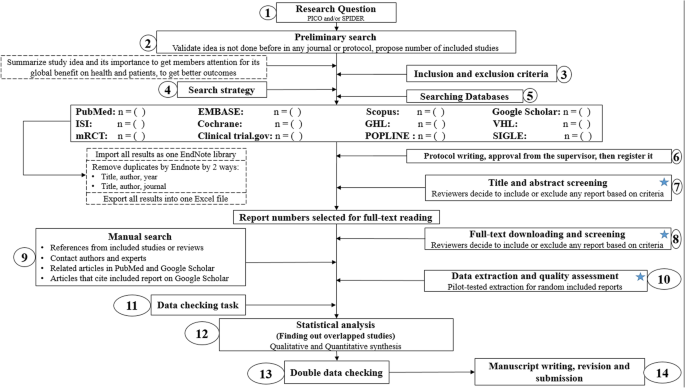

Step 1: Formulate a research question

Formulating the research question is probably the most important step of a systematic review. A clear research question will:

- Allow you to more effectively communicate your research to other researchers and practitioners

- Guide your decisions as you plan and conduct your systematic review

A good research question for a systematic review has four components, which you can remember with the acronym PICO:

- Population(s) or problem(s)

- Intervention(s)

- Comparison(s)

- Outcome(s)

You can rearrange these four components to write your research question:

- What is the effectiveness of I versus C for O in P?

Sometimes, you may want to include a fifth component, the type of study design. In this case, the acronym is PICOT.

- Type of study design(s)

Example: The research question of Boyle and colleagues had the following components:

- The population of patients with eczema

- The intervention of probiotics

- In comparison to no treatment, placebo, or non-probiotic treatment

- The outcome of changes in participant-, parent-, and doctor-rated symptoms of eczema and quality of life

- Randomized controlled trials, a type of study design

Their research question was:

- What is the effectiveness of probiotics versus no treatment, a placebo, or a non-probiotic treatment for reducing eczema symptoms and improving quality of life in patients with eczema?
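The "I versus C for O in P" template can be treated as a simple fill-in-the-blanks pattern. The sketch below is only an illustration: the `PICO` class and its field names are hypothetical, and the wording of each component is copied from the example question above.

```python
from dataclasses import dataclass

@dataclass
class PICO:
    population: str
    intervention: str
    comparison: str
    outcome: str

    def question(self) -> str:
        # Fills the template "What is the effectiveness of I versus C for O in P?"
        return (
            f"What is the effectiveness of {self.intervention} versus "
            f"{self.comparison} for {self.outcome} in {self.population}?"
        )

eczema = PICO(
    population="patients with eczema",
    intervention="probiotics",
    comparison="no treatment, a placebo, or a non-probiotic treatment",
    outcome="reducing eczema symptoms and improving quality of life",
)
print(eczema.question())
```

Writing the components down separately like this makes it easier to reuse them later, for example when deriving selection criteria or search terms.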

Step 2: Develop a protocol

A protocol is a document that contains your research plan for the systematic review. This is an important step because having a plan allows you to work more efficiently and reduces bias.

Your protocol should include the following components:

- Background information: Provide the context of the research question, including why it’s important.

- Research objective(s): Rephrase your research question as an objective.

- Selection criteria: State how you’ll decide which studies to include or exclude from your review.

- Search strategy: Discuss your plan for finding studies.

- Analysis: Explain what information you’ll collect from the studies and how you’ll synthesize the data.

If you’re a professional seeking to publish your review, it’s a good idea to bring together an advisory committee. This is a group of about six people who have experience in the topic you’re researching. They can help you make decisions about your protocol.

It’s highly recommended to register your protocol. Registering your protocol means submitting it to a database such as PROSPERO or ClinicalTrials.gov.

Step 3: Search for all relevant studies

Searching for relevant studies is the most time-consuming step of a systematic review.

To reduce bias, it’s important to search for relevant studies very thoroughly. Your strategy will depend on your field and your research question, but sources generally fall into these four categories:

- Databases: Search multiple databases of peer-reviewed literature, such as PubMed or Scopus. Think carefully about how to phrase your search terms and include multiple synonyms of each word. Use Boolean operators if relevant.

- Handsearching: In addition to searching the primary sources using databases, you’ll also need to search manually. One strategy is to scan relevant journals or conference proceedings. Another strategy is to scan the reference lists of relevant studies.

- Gray literature: Gray literature includes documents produced by governments, universities, and other institutions that aren’t published by traditional publishers. Graduate student theses are an important type of gray literature, which you can search using the Networked Digital Library of Theses and Dissertations (NDLTD). In medicine, clinical trial registries are another important type of gray literature.

- Experts: Contact experts in the field to ask if they have unpublished studies that should be included in your review.
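The advice on synonyms and Boolean operators for database searches can be sketched as follows: synonyms for one concept are joined with OR, and the concepts are then joined with AND. The function `build_query` and the synonym lists are hypothetical illustrations, not a real search strategy, and each database has its own syntax for field tags, truncation, and phrase searching that this sketch ignores.

```python
def build_query(concepts):
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    groups = []
    for synonyms in concepts:
        # Quote multi-word phrases so they are searched as a unit
        terms = [f'"{t}"' if " " in t else t for t in synonyms]
        groups.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(groups)

query = build_query([
    ["probiotic", "probiotics", "lactobacillus"],  # intervention synonyms
    ["eczema", "atopic dermatitis"],               # population synonyms
])
print(query)
# (probiotic OR probiotics OR lactobacillus) AND (eczema OR "atopic dermatitis")
```

Keeping the synonym lists in one place also makes it easier to report the exact search strategy later.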

At this stage of your review, you won’t read the articles yet. Simply save any potentially relevant citations using bibliographic software, such as Scribbr’s APA or MLA Generator.

Example: Boyle and colleagues searched the following sources:

- Databases: EMBASE, PsycINFO, AMED, LILACS, and ISI Web of Science

- Handsearch: Conference proceedings and reference lists of articles

- Gray literature: The Cochrane Library, the metaRegister of Controlled Trials, and the Ongoing Skin Trials Register

- Experts: Authors of unpublished registered trials, pharmaceutical companies, and manufacturers of probiotics

Step 4: Apply the selection criteria

Applying the selection criteria is a three-person job. Two of you will independently read the studies and decide which to include in your review based on the selection criteria you established in your protocol. The third person’s job is to break any ties.

To increase inter-rater reliability, ensure that everyone thoroughly understands the selection criteria before you begin.

If you’re writing a systematic review as a student for an assignment, you might not have a team. In this case, you’ll have to apply the selection criteria on your own; you can mention this as a limitation in your paper’s discussion.
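For teams, the three-person arrangement described above (two independent decisions, with a third person breaking ties) can be sketched as a small function. The function name, the study IDs, and the dictionary-based data layout are assumptions made for illustration.

```python
def resolve_screening(reviewer_a, reviewer_b, tie_breaker):
    """Combine two independent include/exclude decisions per study.

    Each argument maps a study ID to True (include) or False (exclude).
    The tie_breaker mapping is consulted only where the two reviewers disagree.
    """
    final = {}
    for study_id, decision_a in reviewer_a.items():
        decision_b = reviewer_b[study_id]
        if decision_a == decision_b:
            final[study_id] = decision_a
        else:
            final[study_id] = tie_breaker[study_id]
    return final

a = {"s1": True, "s2": False, "s3": True}
b = {"s1": True, "s2": True, "s3": False}
third = {"s2": False, "s3": True}  # only the disputed studies need a third opinion
print(resolve_screening(a, b, third))
# {'s1': True, 's2': False, 's3': True}
```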

You should apply the selection criteria in two phases:

- Based on the titles and abstracts: Decide whether each article potentially meets the selection criteria based on the information provided in the abstracts.

- Based on the full texts: Download the articles that weren’t excluded during the first phase. If an article isn’t available online or through your library, you may need to contact the authors to ask for a copy. Read the articles and decide which articles meet the selection criteria.

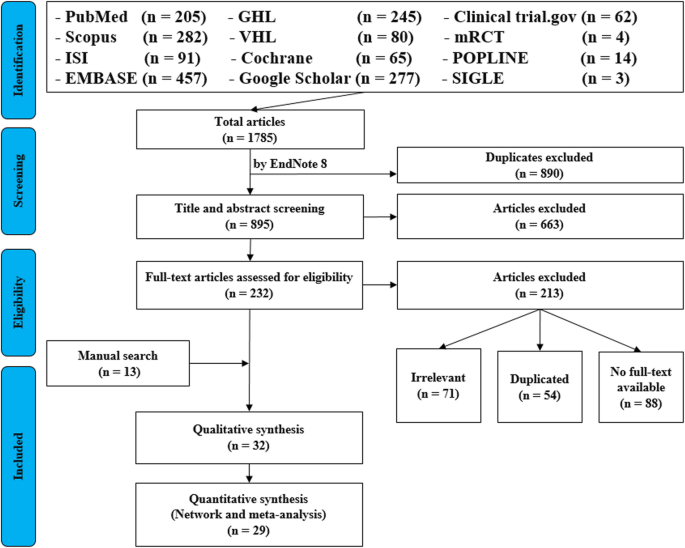

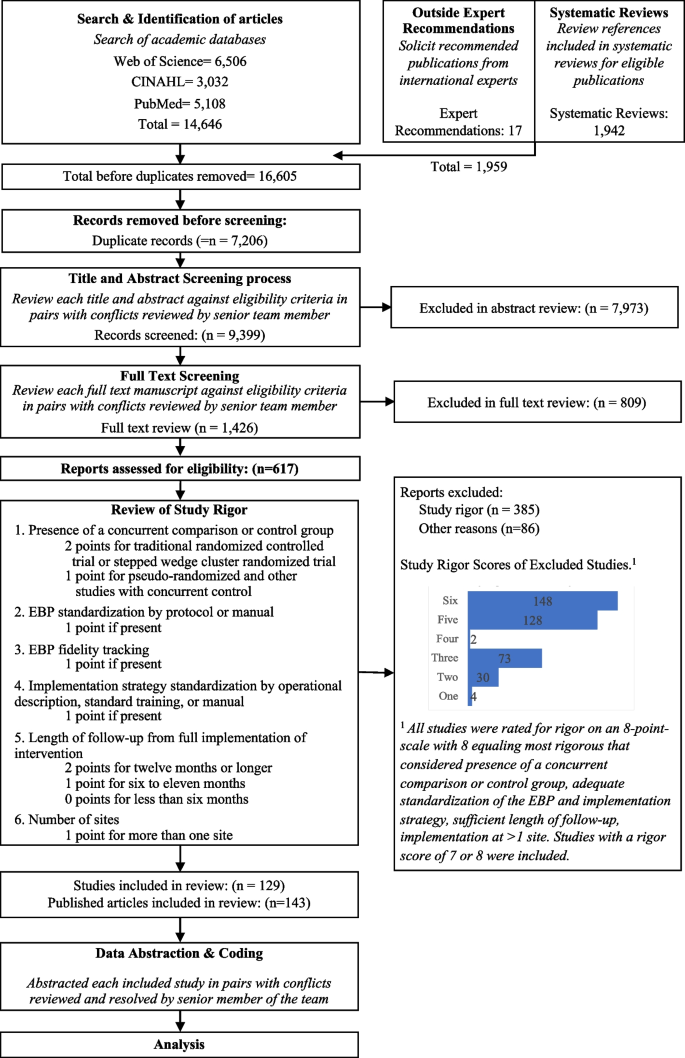

It’s very important to keep a meticulous record of why you included or excluded each article. When the selection process is complete, you can summarize what you did using a PRISMA flow diagram.
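One way to keep such a record is a simple log with one row per decision, which can later be tallied into the counts a flow diagram needs. The sketch below is a hypothetical layout; the phase names, reasons, and study IDs are invented for illustration.

```python
from collections import Counter

# Hypothetical screening log: (study ID, phase, decision, reason for exclusion)
log = [
    ("s1", "title/abstract", "exclude", "not about eczema"),
    ("s2", "title/abstract", "include", ""),
    ("s3", "title/abstract", "include", ""),
    ("s2", "full text", "exclude", "not a randomized trial"),
    ("s3", "full text", "include", ""),
]

def flow_counts(log):
    """Count screened, included, and excluded records for each phase."""
    counts = {}
    for _, phase, decision, reason in log:
        phase_counts = counts.setdefault(
            phase, {"screened": 0, "included": 0, "excluded": Counter()}
        )
        phase_counts["screened"] += 1
        if decision == "include":
            phase_counts["included"] += 1
        else:
            phase_counts["excluded"][reason] += 1  # tally exclusions by reason
    return counts

counts = flow_counts(log)
for phase, c in counts.items():
    print(phase, c["screened"], c["included"], dict(c["excluded"]))
```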

Example: After the first screening phase, Boyle and colleagues found the full texts for each of the remaining studies. Boyle and Tang read through the articles to decide if any more studies needed to be excluded based on the selection criteria.

When Boyle and Tang disagreed about whether a study should be excluded, they discussed it with Varigos until the three researchers came to an agreement.

Step 5: Extract the data

Extracting the data means collecting information from the selected studies in a systematic way. There are two types of information you need to collect from each study:

- Information about the study’s methods and results. The exact information will depend on your research question, but it might include the year, study design, sample size, context, research findings, and conclusions. If any data are missing, you’ll need to contact the study’s authors.

- Your judgment of the quality of the evidence, including risk of bias.

You should collect this information using forms. You can find sample forms in The Registry of Methods and Tools for Evidence-Informed Decision Making and the Grading of Recommendations, Assessment, Development and Evaluations Working Group.

Extracting the data is also a three-person job. Two people should do this step independently, and the third person will resolve any disagreements.
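If you keep your extraction form electronically, each study can be represented as one structured record. The sketch below is a hypothetical form, not one of the sample forms mentioned above; the field names simply mirror the items listed in this step, and the example values are invented.

```python
from dataclasses import dataclass, fields
from typing import Optional

@dataclass
class ExtractionRecord:
    study_id: str
    year: Optional[int] = None
    study_design: Optional[str] = None
    sample_size: Optional[int] = None
    context: Optional[str] = None
    findings: Optional[str] = None
    conclusions: Optional[str] = None
    risk_of_bias: Optional[str] = None  # e.g. "low", "high", "unclear"

    def missing_fields(self):
        """Names of fields still empty, i.e. data to request from the authors."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

record = ExtractionRecord(study_id="s3", year=2005, study_design="RCT", sample_size=56)
print(record.missing_fields())
# ['context', 'findings', 'conclusions', 'risk_of_bias']
```

Two reviewers could each fill in their own record for the same study, so that the third person can compare them field by field.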

Example: Boyle and colleagues also collected data about possible sources of bias, such as how the study participants were randomized into the control and treatment groups.

Step 6: Synthesize the data

Synthesizing the data means bringing together the information you collected into a single, cohesive story. There are two main approaches to synthesizing the data:

- Narrative (qualitative): Summarize the information in words. You’ll need to discuss the studies and assess their overall quality.

- Quantitative: Use statistical methods to summarize and compare data from different studies. The most common quantitative approach is a meta-analysis, which allows you to combine results from multiple studies into a summary result.

Generally, you should use both approaches together whenever possible. If you don’t have enough data, or the data from different studies aren’t comparable, then you can take just a narrative approach. However, you should justify why a quantitative approach wasn’t possible.

Boyle and colleagues also divided the studies into subgroups, such as studies about babies, children, and adults, and analyzed the effect sizes within each group.
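A subgroup analysis of this kind can be sketched by pooling effect sizes separately within each group. The example below assumes inverse-variance weighting and uses invented numbers; it does not reproduce the method or the data of Boyle and colleagues.

```python
from collections import defaultdict

def pooled_by_subgroup(studies):
    """Inverse-variance pooled effect per subgroup.

    `studies` is a list of (subgroup, effect, standard_error) tuples.
    """
    sums = defaultdict(lambda: [0.0, 0.0])  # subgroup -> [sum(w * effect), sum(w)]
    for subgroup, effect, se in studies:
        w = 1.0 / se ** 2  # weight each study by its precision
        sums[subgroup][0] += w * effect
        sums[subgroup][1] += w
    return {g: weighted / total for g, (weighted, total) in sums.items()}

# Hypothetical effect sizes and standard errors, not taken from the review
studies = [
    ("children", -0.2, 0.5),
    ("children", -0.6, 0.5),
    ("adults", 0.1, 0.25),
]
by_group = pooled_by_subgroup(studies)
print(by_group)
```

Subgroups with only one or two studies give imprecise estimates, so differences between groups should be interpreted with caution.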

Step 7: Write and publish a report

The purpose of writing a systematic review article is to share the answer to your research question and explain how you arrived at this answer.

Your article should include the following sections:

- Abstract: A summary of the review

- Introduction: Including the rationale and objectives

- Methods: Including the selection criteria, search method, data extraction method, and synthesis method

- Results: Including results of the search and selection process, study characteristics, risk of bias in the studies, and synthesis results

- Discussion: Including interpretation of the results and limitations of the review

- Conclusion: The answer to your research question and implications for practice, policy, or research

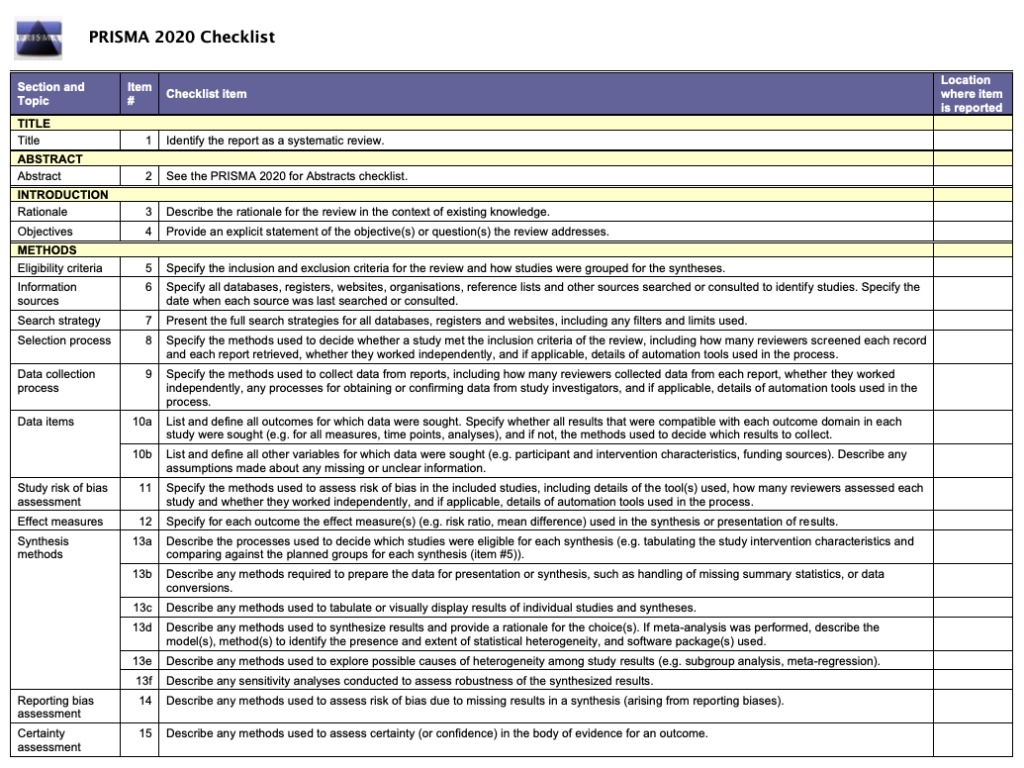

To verify that your report includes everything it needs, you can use the PRISMA checklist.

Once your report is written, you can publish it in a systematic review database, such as the Cochrane Database of Systematic Reviews, and/or in a peer-reviewed journal.

In their report, Boyle and colleagues concluded that probiotics cannot be recommended for reducing eczema symptoms or improving quality of life in patients with eczema.

Note: Generative AI tools like ChatGPT can be useful at various stages of the writing and research process and can help you to write your systematic review. However, we strongly advise against trying to pass AI-generated text off as your own work.

Frequently asked questions about systematic reviews

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a thesis, dissertation, or research paper, in order to situate your work in relation to existing knowledge.

A literature review is a survey of credible sources on a topic, often used in dissertations, theses, and research papers. Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts, with an introduction, a main body, and a conclusion.

An annotated bibliography is a list of source references that has a short description (called an annotation) for each of the sources. It is often assigned as part of the research process for a paper.

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

Turney, S. (2023, November 20). Systematic Review | Definition, Example & Guide. Scribbr. Retrieved July 5, 2024, from https://www.scribbr.com/methodology/systematic-review/

How to write the methods section of a systematic review

Covidence breaks down how to write a methods section

The methods section of your systematic review describes what you did, how you did it, and why. Readers need this information to interpret the results and conclusions of the review. Often, a lot of information needs to be distilled into just a few paragraphs. This can be a challenging task, but good preparation and the right tools will help you to set off in the right direction 🗺️🧭.

Systematic reviews are so-called because they are conducted in a way that is rigorous and replicable. So it’s important that these methods are reported in a way that is thorough, clear, and easy to navigate for the reader – whether that’s a patient, a healthcare worker, or a researcher.

Like most things in a systematic review, the methods should be planned upfront and ideally described in detail in a project plan or protocol. Reviews of healthcare interventions follow the PRISMA guidelines for the minimum set of items to report in the methods section. But what else should be included? It’s a good idea to consider what readers will want to know about the review methods and whether the journal you’re planning to submit the work to has expectations on the reporting of methods. Finding out in advance will help you to plan what to include.

Describe what happened

While the research plan sets out what you intend to do, the methods section is a write-up of what actually happened. It’s not a simple case of rewriting the plan in the past tense – you will also need to discuss and justify deviations from the plan and describe the handling of issues that were unforeseen at the time the plan was written. For this reason, it is useful to make detailed notes before, during, and after the review is completed. Relying on memory alone risks losing valuable information, and trawling through emails when the deadline is looming can be frustrating and time-consuming!

Keep it brief

The methods section should be succinct but include all the noteworthy information. This can be a difficult balance to achieve. A useful strategy is to aim for a brief description that signposts the reader to a separate section or sections of supporting information. This could include datasets, a flowchart to show what happened to the excluded studies, a collection of search strategies, and tables containing detailed information about the studies. This separation keeps the review short and simple while enabling the reader to drill down to the detail as needed. And if the methods follow a well-known or standard process, it might suffice to say so and give a reference, rather than describe the process at length.

Follow a structure

A clear structure provides focus. Use of descriptive headings keeps the writing on track and helps the reader get to key information quickly. What should the structure of the methods section look like? As always, a lot depends on the type of review but it will certainly contain information relating to the following areas:

- Selection criteria ⭕

- Search 🕵🏾‍♀️

- Data collection and analysis 👩‍💻

- Study quality and risk of bias ⚖️

Let’s look at each of these in turn.

1. Selection criteria ⭕

The criteria for including and excluding studies are listed here. This includes detail about the types of studies, the types of participants, the types of interventions and the types of outcomes and how they were measured.

2. Search 🕵🏾‍♀️

Comprehensive reporting of the search is important because this means it can be evaluated and replicated. The search strategies are included in the review, along with details of the databases searched. It’s also important to list any restrictions on the search (for example, language), describe how resources other than electronic databases were searched (for example, non-indexed journals), and give the date that the searches were run. The PRISMA-S extension provides guidance on reporting literature searches.

Systematic reviewer pro-tip:

Copy and paste the search strategy to avoid introducing typos

3. Data collection and analysis 👩‍💻

This section describes:

- how studies were selected for inclusion in the review

- how study data were extracted from the study reports

- how study data were combined for analysis and synthesis

To describe how studies were selected for inclusion, review teams outline the screening process. Covidence uses reviewers’ decision data to automatically populate a PRISMA flow diagram for this purpose. Covidence can also calculate Cohen’s kappa to enable review teams to report the level of agreement among individual reviewers during screening.
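For readers who want to see what Cohen’s kappa measures, here is a minimal sketch of the standard two-rater formula, which compares observed agreement with the agreement expected by chance. It is not Covidence’s implementation; the function name and the example decisions are assumptions made for illustration.

```python
def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must rate the same, non-empty set of items")
    n = len(ratings_a)
    # Proportion of items on which the two raters agree
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    labels = set(ratings_a) | set(ratings_b)
    # Agreement expected by chance, from each rater's label frequencies
    expected = sum(
        (ratings_a.count(label) / n) * (ratings_b.count(label) / n)
        for label in labels
    )
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical screening decisions from two reviewers on six records
a = ["include", "include", "exclude", "exclude", "include", "exclude"]
b = ["include", "exclude", "exclude", "exclude", "include", "exclude"]
kappa = cohens_kappa(a, b)
print(round(kappa, 3))
```

A kappa of 1 means perfect agreement, while a value near 0 means agreement no better than chance.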

To describe how study data were extracted from the study reports, reviewers outline the form that was used, any pilot-testing that was done, and the items that were extracted from the included studies. An important piece of information to include here is the process used to resolve conflict among the reviewers. Covidence’s data extraction tool saves reviewers’ comments and notes in the system as they work. This keeps the information in one place for easy retrieval ⚡.

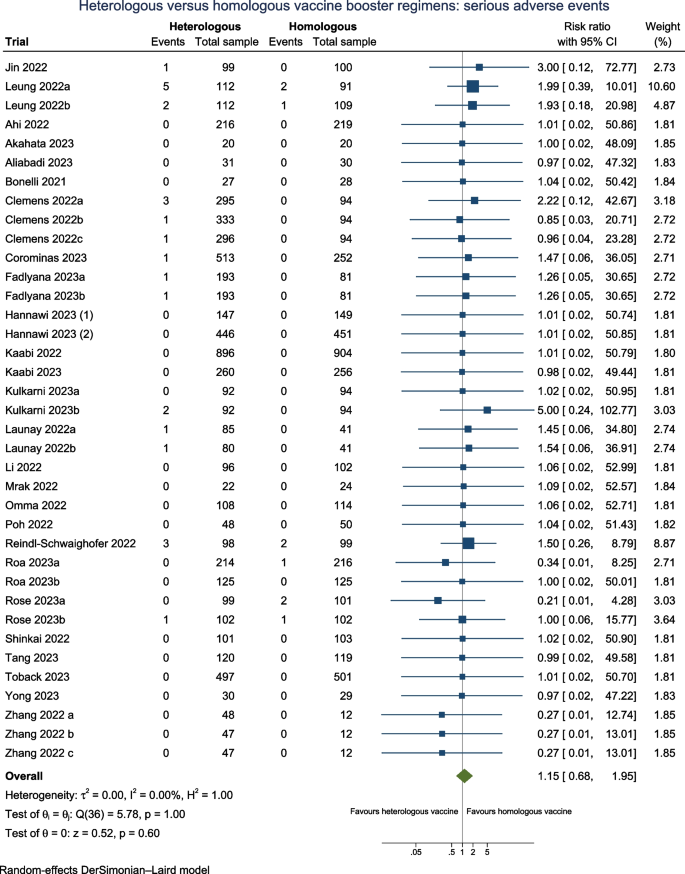

To describe how study data were combined for analysis and synthesis, reviewers outline the type of synthesis (narrative or quantitative, for example), the methods for grouping data, the challenges that came up, and how these were dealt with. If the review includes a meta-analysis, it will detail how this was performed and how the treatment effects were measured.

4. Study quality and risk of bias ⚖️

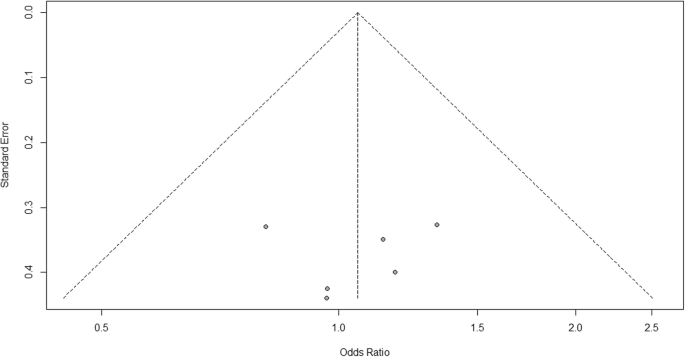

Because the results of systematic reviews can be affected by many types of bias, reviewers make every effort to minimise it and to show the reader that the methods they used were appropriate. This section describes the methods used to assess study quality and an assessment of the risk of bias across a range of domains.

Steps to assess the risk of bias in studies include looking at how study participants were assigned to treatment groups and whether patients and/or study assessors were blinded to the treatment given. Reviewers also report their assessment of the risk of bias due to missing outcome data, whether that is due to participant drop-out or non-reporting of the outcomes by the study authors.

Covidence’s default template for assessing study quality is Cochrane’s risk of bias tool but it is also possible to start from scratch and build a tool with a set of custom domains if you prefer.

Careful planning, clear writing, and a structured approach are key to a good methods section. A methodologist will be able to refer review teams to examples of good methods reporting in the literature. Covidence helps reviewers to screen references, extract data and complete risk of bias tables quickly and efficiently.

Laura Mellor. Portsmouth, UK

Systematic Review | Definition, Examples & Guide

Published on 15 June 2022 by Shaun Turney . Revised on 17 October 2022.

A systematic review is a type of review that uses repeatable methods to find, select, and synthesise all available evidence. It answers a clearly formulated research question and explicitly states the methods used to arrive at the answer.

They answered the question ‘What is the effectiveness of probiotics in reducing eczema symptoms and improving quality of life in patients with eczema?’

In this context, a probiotic is a health product that contains live microorganisms and is taken by mouth. Eczema is a common skin condition that causes red, itchy skin.

Table of contents

What is a systematic review, systematic review vs meta-analysis, systematic review vs literature review, systematic review vs scoping review, when to conduct a systematic review, pros and cons of systematic reviews, step-by-step example of a systematic review, frequently asked questions about systematic reviews.

A review is an overview of the research that’s already been completed on a topic.

What makes a systematic review different from other types of reviews is that the research methods are designed to reduce research bias . The methods are repeatable , and the approach is formal and systematic:

- Formulate a research question

- Develop a protocol

- Search for all relevant studies

- Apply the selection criteria

- Extract the data

- Synthesise the data

- Write and publish a report

Although multiple sets of guidelines exist, the Cochrane Handbook for Systematic Reviews is among the most widely used. It provides detailed guidelines on how to complete each step of the systematic review process.

Systematic reviews are most commonly used in medical and public health research, but they can also be found in other disciplines.

Systematic reviews typically answer their research question by synthesising all available evidence and evaluating the quality of the evidence. Synthesising means bringing together different information to tell a single, cohesive story. The synthesis can be narrative ( qualitative ), quantitative , or both.

Prevent plagiarism, run a free check.

Systematic reviews often quantitatively synthesise the evidence using a meta-analysis . A meta-analysis is a statistical analysis, not a type of review.

A meta-analysis is a technique to synthesise results from multiple studies. It’s a statistical analysis that combines the results of two or more studies, usually to estimate an effect size .

A literature review is a type of review that uses a less systematic and formal approach than a systematic review. Typically, an expert in a topic will qualitatively summarise and evaluate previous work, without using a formal, explicit method.

Although literature reviews are often less time-consuming and can be insightful or helpful, they have a higher risk of bias and are less transparent than systematic reviews.

Similar to a systematic review, a scoping review is a type of review that tries to minimise bias by using transparent and repeatable methods.

However, a scoping review isn’t a type of systematic review. The most important difference is the goal: rather than answering a specific question, a scoping review explores a topic. The researcher tries to identify the main concepts, theories, and evidence, as well as gaps in the current research.

Sometimes scoping reviews are an exploratory preparation step for a systematic review, and sometimes they are a standalone project.

A systematic review is a good choice of review if you want to answer a question about the effectiveness of an intervention , such as a medical treatment.

To conduct a systematic review, you’ll need the following:

- A precise question , usually about the effectiveness of an intervention. The question needs to be about a topic that’s previously been studied by multiple researchers. If there’s no previous research, there’s nothing to review.

- If you’re doing a systematic review on your own (e.g., for a research paper or thesis), you should take appropriate measures to ensure the validity and reliability of your research.

- Access to databases and journal archives. Often, your educational institution provides you with access.

- Time. A professional systematic review is a time-consuming process: it will take the lead author about six months of full-time work. If you’re a student, you should narrow the scope of your systematic review and stick to a tight schedule.

- Bibliographic, word-processing, spreadsheet, and statistical software . For example, you could use EndNote, Microsoft Word, Excel, and SPSS.

A systematic review has many pros .

- They minimise research b ias by considering all available evidence and evaluating each study for bias.

- Their methods are transparent , so they can be scrutinised by others.

- They’re thorough : they summarise all available evidence.

- They can be replicated and updated by others.

Systematic reviews also have a few cons .

- They’re time-consuming .

- They’re narrow in scope : they only answer the precise research question.

The 7 steps for conducting a systematic review are explained with an example.

Step 1: Formulate a research question

Formulating the research question is probably the most important step of a systematic review. A clear research question will:

- Allow you to more effectively communicate your research to other researchers and practitioners

- Guide your decisions as you plan and conduct your systematic review

A good research question for a systematic review has four components, which you can remember with the acronym PICO:

- Population(s) or problem(s)

- Intervention(s)

- Comparison(s)

- Outcome(s)

You can rearrange these four components to write your research question:

- What is the effectiveness of I versus C for O in P?

Sometimes, you may want to include a fifth component, the type of study design. In this case, the acronym is PICOT.

- Type of study design(s)

- The population of patients with eczema

- The intervention of probiotics

- In comparison to no treatment, placebo, or non-probiotic treatment

- The outcome of changes in participant-, parent-, and doctor-rated symptoms of eczema and quality of life

- Randomised controlled trials, a type of study design

Their research question was:

- What is the effectiveness of probiotics versus no treatment, a placebo, or a non-probiotic treatment for reducing eczema symptoms and improving quality of life in patients with eczema?
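If you keep your PICO components in a structured form, you can generate the research question from the template above and reuse the same components later in your protocol. The following is a minimal sketch in Python; the class and field names are illustrative assumptions, not part of any standard tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PicoQuestion:
    """Components of a PICO(T) research question (names are illustrative)."""
    population: str
    intervention: str
    comparison: str
    outcome: str
    study_design: Optional[str] = None  # the optional "T" in PICOT

    def as_question(self) -> str:
        # Template: "What is the effectiveness of I versus C for O in P?"
        return (
            f"What is the effectiveness of {self.intervention} versus "
            f"{self.comparison} for {self.outcome} in {self.population}?"
        )


eczema = PicoQuestion(
    population="patients with eczema",
    intervention="probiotics",
    comparison="no treatment, a placebo, or a non-probiotic treatment",
    outcome="reducing eczema symptoms and improving quality of life",
    study_design="randomised controlled trials",
)
print(eczema.as_question())
```

With the components from the example, the printed question matches the research question quoted above.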

Step 2: Develop a protocol

A protocol is a document that contains your research plan for the systematic review. This is an important step because having a plan allows you to work more efficiently and reduces bias.

Your protocol should include the following components:

- Background information : Provide the context of the research question, including why it’s important.

- Research objective(s) : Rephrase your research question as an objective.

- Selection criteria: State how you’ll decide which studies to include or exclude from your review.

- Search strategy: Discuss your plan for finding studies.

- Analysis: Explain what information you’ll collect from the studies and how you’ll synthesise the data.

If you’re a professional seeking to publish your review, it’s a good idea to bring together an advisory committee. This is a group of about six people who have experience in the topic you’re researching. They can help you make decisions about your protocol.

It’s highly recommended to register your protocol. Registering your protocol means submitting it to a database such as PROSPERO or ClinicalTrials.gov.

Step 3: Search for all relevant studies

Searching for relevant studies is the most time-consuming step of a systematic review.

To reduce bias, it’s important to search for relevant studies very thoroughly. Your strategy will depend on your field and your research question, but sources generally fall into these four categories:

- Databases: Search multiple databases of peer-reviewed literature, such as PubMed or Scopus. Think carefully about how to phrase your search terms and include multiple synonyms of each word. Use Boolean operators if relevant.

- Handsearching: In addition to searching the primary sources using databases, you’ll also need to search manually. One strategy is to scan relevant journals or conference proceedings. Another strategy is to scan the reference lists of relevant studies.

- Grey literature: Grey literature includes documents produced by governments, universities, and other institutions that aren’t published by traditional publishers. Graduate student theses are an important type of grey literature, which you can search using the Networked Digital Library of Theses and Dissertations (NDLTD). In medicine, clinical trial registries are another important type of grey literature.

- Experts: Contact experts in the field to ask if they have unpublished studies that should be included in your review.
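The advice on databases above (multiple synonyms per word, combined with Boolean operators) can be sketched as a small helper that joins synonyms with OR and concepts with AND. This is only a sketch: the search terms are hypothetical examples rather than a validated search strategy, and each database has its own syntax for phrases, truncation, and field tags, so check the database's help pages before running a query.

```python
def build_boolean_query(concepts):
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    groups = []
    for synonyms in concepts:
        # Quote multi-word phrases so they are searched as a unit.
        terms = [f'"{term}"' if " " in term else term for term in synonyms]
        groups.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(groups)


# Hypothetical synonym lists, one list per concept in the research question.
concepts = [
    ["eczema", "atopic dermatitis"],
    ["probiotic", "probiotics", "lactobacillus"],
]
query = build_boolean_query(concepts)
print(query)
# (eczema OR "atopic dermatitis") AND (probiotic OR probiotics OR lactobacillus)
```

Keeping the synonym lists in one place also makes it easier to report your exact search strategy later.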

At this stage of your review, you won’t read the articles yet. Simply save any potentially relevant citations using bibliographic software, such as Scribbr’s APA or MLA Generator.

- Databases: EMBASE, PsycINFO, AMED, LILACS, and ISI Web of Science

- Handsearch: Conference proceedings and reference lists of articles

- Grey literature: The Cochrane Library, the metaRegister of Controlled Trials, and the Ongoing Skin Trials Register

- Experts: Authors of unpublished registered trials, pharmaceutical companies, and manufacturers of probiotics

Step 4: Apply the selection criteria

Applying the selection criteria is a three-person job. Two of you will independently read the studies and decide which to include in your review based on the selection criteria you established in your protocol. The third person’s job is to break any ties.

To increase inter-rater reliability, ensure that everyone thoroughly understands the selection criteria before you begin.
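If you want to put a number on inter-rater reliability, one common statistic is Cohen's kappa, which compares the observed agreement between two raters with the agreement expected by chance. This guide doesn't prescribe a particular statistic, so treat the following as one possible sketch; the decisions below are made-up example data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must judge the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's label frequencies.
    expected = sum(
        (counts_a[label] / n) * (counts_b[label] / n)
        for label in set(counts_a) | set(counts_b)
    )
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical screening decisions for six studies.
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "include", "include", "exclude", "exclude"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 2))
```

Here the reviewers agree on five of six studies, chance agreement is 0.5, and kappa comes out at about 0.67. Disagreements still go to the third person, as described above.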

If you’re writing a systematic review as a student for an assignment, you might not have a team. In this case, you’ll have to apply the selection criteria on your own; you can mention this as a limitation in your paper’s discussion.

You should apply the selection criteria in two phases:

- Based on the titles and abstracts: Decide whether each article potentially meets the selection criteria based on the information provided in the abstracts.

- Based on the full texts: Download the articles that weren’t excluded during the first phase. If an article isn’t available online or through your library, you may need to contact the authors to ask for a copy. Read the articles and decide which articles meet the selection criteria.

It’s very important to keep a meticulous record of why you included or excluded each article. When the selection process is complete, you can summarise what you did using a PRISMA flow diagram.
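One way to keep that record is a simple log with one row per decision, which you can then tally to get the numbers a flow diagram needs. The sketch below assumes a hypothetical log format (record ID, phase, decision, reason); the records and reasons are invented for illustration and are not taken from any real review.

```python
from collections import Counter

# Each entry: (record_id, phase, decision, reason). All values are hypothetical.
decisions = [
    ("r1", "title_abstract", "exclude", "not about eczema"),
    ("r2", "title_abstract", "include", ""),
    ("r3", "title_abstract", "include", ""),
    ("r4", "title_abstract", "exclude", "not a randomised trial"),
    ("r2", "full_text", "include", ""),
    ("r3", "full_text", "exclude", "no probiotic intervention"),
]


def summarise(decisions):
    """Count records screened and excluded per phase, with exclusion reasons."""
    summary = {}
    for _, phase, decision, reason in decisions:
        stats = summary.setdefault(
            phase, {"screened": 0, "excluded": 0, "reasons": Counter()}
        )
        stats["screened"] += 1
        if decision == "exclude":
            stats["excluded"] += 1
            stats["reasons"][reason] += 1
    return summary


summary = summarise(decisions)
for phase, stats in summary.items():
    print(phase, stats["screened"], "screened,", stats["excluded"], "excluded")
```

A spreadsheet works just as well; what matters is that every exclusion has a recorded reason.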

Next, Boyle and colleagues found the full texts for each of the remaining studies. Boyle and Tang read through the articles to decide if any more studies needed to be excluded based on the selection criteria.

When Boyle and Tang disagreed about whether a study should be excluded, they discussed it with Varigos until the three researchers came to an agreement.

Step 5: Extract the data

Extracting the data means collecting information from the selected studies in a systematic way. There are two types of information you need to collect from each study:

- Information about the study’s methods and results. The exact information will depend on your research question, but it might include the year, study design, sample size, context, research findings, and conclusions. If any data are missing, you’ll need to contact the study’s authors.

- Your judgement of the quality of the evidence, including risk of bias.

You should collect this information using forms. You can find sample forms in The Registry of Methods and Tools for Evidence-Informed Decision Making and the Grading of Recommendations, Assessment, Development and Evaluations Working Group.

Extracting the data is also a three-person job. Two people should do this step independently, and the third person will resolve any disagreements.
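A structured form makes it easy to compare the two independent extractions and see exactly which fields the third person needs to resolve. The following sketch assumes a hypothetical form with five fields; a real form would have fields chosen to fit your research question.

```python
from dataclasses import dataclass, asdict


@dataclass
class ExtractionRecord:
    """One reviewer's extraction for one study (fields are illustrative)."""
    study_id: str
    year: int
    study_design: str
    sample_size: int
    risk_of_bias: str  # for example "low", "unclear", or "high"


def find_disagreements(first, second):
    """Return the fields on which two extractions of the same study differ."""
    a, b = asdict(first), asdict(second)
    return {field: (a[field], b[field]) for field in a if a[field] != b[field]}


# Hypothetical extractions of the same study by two reviewers.
extractor_1 = ExtractionRecord("study-01", 2005, "randomised controlled trial", 120, "low")
extractor_2 = ExtractionRecord("study-01", 2005, "randomised controlled trial", 102, "unclear")
disagreements = find_disagreements(extractor_1, extractor_2)
print(disagreements)
# {'sample_size': (120, 102), 'risk_of_bias': ('low', 'unclear')}
```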

They also collected data about possible sources of bias, such as how the study participants were randomised into the control and treatment groups.

Step 6: Synthesise the data

Synthesising the data means bringing together the information you collected into a single, cohesive story. There are two main approaches to synthesising the data:

- Narrative (qualitative): Summarise the information in words. You’ll need to discuss the studies and assess their overall quality.

- Quantitative: Use statistical methods to summarise and compare data from different studies. The most common quantitative approach is a meta-analysis, which allows you to combine results from multiple studies into a summary result.

Generally, you should use both approaches together whenever possible. If you don’t have enough data, or the data from different studies aren’t comparable, then you can take just a narrative approach. However, you should justify why a quantitative approach wasn’t possible.
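To give a feel for how a meta-analysis combines results into a summary result, here is a minimal sketch of a fixed-effect, inverse-variance pooled estimate: each study's effect is weighted by one over its squared standard error. The choice of a fixed-effect model is an assumption made for simplicity (a random-effects model is often preferred when studies differ from one another), and the effect sizes below are invented. For a real review, use established statistical software.

```python
import math


def fixed_effect_pool(effects, standard_errors):
    """Inverse-variance weighted mean of study effects and its standard error."""
    if len(effects) != len(standard_errors) or not effects:
        raise ValueError("Need one standard error per effect estimate")
    # More precise studies (smaller standard errors) get larger weights.
    weights = [1 / se**2 for se in standard_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return pooled, pooled_se


# Hypothetical standardised mean differences and standard errors for three studies.
effects = [0.10, 0.30, 0.20]
standard_errors = [0.10, 0.20, 0.10]
pooled, pooled_se = fixed_effect_pool(effects, standard_errors)
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"pooled effect {pooled:.3f} (95% CI {low:.3f} to {high:.3f})")
```

The two more precise studies dominate the result, which is why the pooled effect sits closer to their estimates than to the least precise one.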

Boyle and colleagues also divided the studies into subgroups, such as studies about babies, children, and adults, and analysed the effect sizes within each group.

Step 7: Write and publish a report

The purpose of writing a systematic review article is to share the answer to your research question and explain how you arrived at this answer.

Your article should include the following sections:

- Abstract: A summary of the review

- Introduction: Including the rationale and objectives

- Methods: Including the selection criteria, search method, data extraction method, and synthesis method

- Results: Including results of the search and selection process, study characteristics, risk of bias in the studies, and synthesis results

- Discussion: Including interpretation of the results and limitations of the review

- Conclusion: The answer to your research question and implications for practice, policy, or research

To verify that your report includes everything it needs, you can use the PRISMA checklist.

Once your report is written, you can publish it in a systematic review database, such as the Cochrane Database of Systematic Reviews, and/or in a peer-reviewed journal.

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a dissertation, thesis, research paper, or proposal.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarise yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.


Turney, S. (2022, October 17). Systematic Review | Definition, Examples & Guide. Scribbr. Retrieved 5 July 2024, from https://www.scribbr.co.uk/research-methods/systematic-reviews/



Annual Review of Psychology

Volume 70, 2019

Review Article

How to Do a Systematic Review: A Best Practice Guide for Conducting and Reporting Narrative Reviews, Meta-Analyses, and Meta-Syntheses

- Andy P. Siddaway¹, Alex M. Wood², and Larry V. Hedges³

- Affiliations: ¹ Behavioural Science Centre, Stirling Management School, University of Stirling, Stirling FK9 4LA, United Kingdom; ² Department of Psychological and Behavioural Science, London School of Economics and Political Science, London WC2A 2AE, United Kingdom; ³ Department of Statistics, Northwestern University, Evanston, Illinois 60208, USA

- Vol. 70:747-770 (Volume publication date January 2019) https://doi.org/10.1146/annurev-psych-010418-102803

- First published as a Review in Advance on August 08, 2018

- Copyright © 2019 by Annual Reviews. All rights reserved

Systematic reviews are characterized by a methodical and replicable methodology and presentation. They involve a comprehensive search to locate all relevant published and unpublished work on a subject; a systematic integration of search results; and a critique of the extent, nature, and quality of evidence in relation to a particular research question. The best reviews synthesize studies to draw broad theoretical conclusions about what a literature means, linking theory to evidence and evidence to theory. This guide describes how to plan, conduct, organize, and present a systematic review of quantitative (meta-analysis) or qualitative (narrative review, meta-synthesis) information. We outline core standards and principles and describe commonly encountered problems. Although this guide targets psychological scientists, its high level of abstraction makes it potentially relevant to any subject area or discipline. We argue that systematic reviews are a key methodology for clarifying whether and how research findings replicate and for explaining possible inconsistencies, and we call for researchers to conduct systematic reviews to help elucidate whether there is a replication crisis.



- Article Type: Review Article


Systematic Review

Steps of a Systematic Review

Forms and templates


- PICO Template

- Inclusion/Exclusion Criteria

- Database Search Log

- Review Matrix

- Cochrane Tool for Assessing Risk of Bias in Included Studies

- PRISMA Flow Diagram: Record the numbers of retrieved references and included/excluded studies. You can use the Create Flow Diagram tool to automate the process.

- PRISMA Checklist: Checklist of items to include when reporting a systematic review or meta-analysis

PRISMA 2020 and PRISMA-S: Common Questions on Tracking Records and the Flow Diagram

- PROSPERO Template

- Manuscript Template

- Steps of SR (text)

- Steps of SR (visual)

- Steps of SR (PIECES)

Additional guidance:

- A 26-minute resource on how to conduct and write a systematic review, from RMIT University

- https://doi.org/10.3102/0034654319854352

- Methods guide for effectiveness and comparative effectiveness reviews (2017)

- Finding what works in health care: Standards for systematic reviews (2011)

- Systematic reviews: CRD’s guidance for undertaking reviews in health care (2008)

1. Identify your research question. Formulate a clear, well-defined research question of appropriate scope. Define your terminology. Find existing reviews on your topic to inform the development of your research question, identify gaps, and confirm that you are not duplicating the efforts of previous reviews. Consider using a question framework to define the scope of your question, and record your search terms under each concept.

It is a good idea to register your protocol in a publicly accessible way. This helps prevent other people from completing a review on your topic. Similarly, before you start a systematic review, check the different registries to confirm that nobody else has already registered a protocol on the same topic. Options for registering or sharing a protocol include:

- A registry for systematic reviews of health care and clinical interventions

- A registry for systematic reviews of the effects of social interventions

- CAMARADES (Collaborative Approach to Meta-Analysis and Review of Animal Data from Experimental Studies)

- A platform where the protocol is published immediately and subjected to open peer review. When two reviewers approve it, the paper is sent to Medline, Embase and other databases for indexing.

- A site where you can upload a protocol for your scoping review

- A registry for systematic reviews of healthcare practices to assist in the improvement of healthcare outcomes globally

- OSF: Registering a protocol on OSF creates a frozen, time-stamped record of the protocol, thus ensuring a level of transparency and accountability for the research. There are no limits to the types of protocols that can be hosted on OSF.

- PROSPERO: International prospective register of systematic reviews. This is the primary database for registering systematic review protocols and searching for published protocols. PROSPERO accepts protocols from all disciplines (e.g., psychology, nutrition) with the stipulation that they must include health-related outcomes.

- A UK-based, fee-based service similar to PROSPERO, with a quick turnaround time

- A service where you can submit a pre-print, or a protocol for a scoping review

- A repository where you can share your search strategy and research protocol, with no limit on the format, size, access restrictions or license

2. Clearly state the criteria you will use to determine whether or not a study will be included in your review. Consider study populations, study design, intervention types, comparison groups, and measured outcomes. Use database-supplied limits such as language, dates, humans, female/male, age groups, and publication/study types (randomized controlled trials, etc.).

3. Run your searches in the databases relevant to your topic. Ask for help designing comprehensive search strategies across a variety of databases. Approach the grey literature methodically and purposefully. Collect all of the retrieved records from each search in one place prior to screening.

4. Start with a title/abstract screening to remove studies that are clearly not related to your topic. Use your inclusion/exclusion criteria to screen the full text of studies. It is highly recommended that two independent reviewers screen all studies, resolving areas of disagreement by consensus. You can export your EndNote results into screening software.

5. Use a data extraction tool, or systematic review software, to extract all relevant data from each included study. It is recommended that you pilot your data extraction tool to determine whether other fields should be included or existing fields clarified.

6. Risk of bias (quality) assessment: Use a risk of bias tool to assess the potential biases of studies with regard to study design and other factors. You can adapt existing tools to best meet the needs of your review, depending on the types of studies included.

7. Clearly present your findings, including detailed methodology (such as search strategies used, selection criteria, etc.) such that your review can be easily updated in the future with new research findings. Perform a meta-analysis, if the studies allow. Provide recommendations for practice and policy-making if sufficient, high-quality evidence exists, or future directions for research to fill existing gaps in knowledge or to strengthen the body of evidence. For more information, see https://doi.org/10.2450/2012.0247-12. Some resources offer terms and phrases for writing your manuscript, and others offer automated spelling, grammar and rephrasing corrections using artificial intelligence (AI), with free and subscription plans available.

8. Find the best journal to publish your work. Identifying the best journal to submit your research to can be a difficult process. To help you choose where to submit, insert your title and abstract into one of the journal-matching tools listed in the guide.

Adapted from A Guide to Conducting Systematic Reviews: Steps in a Systematic Review by Cornell University Library

This diagram illustrates in a visual way and in plain language what review authors actually do in the process of undertaking a systematic review.

This diagram illustrates what is actually in a published systematic review and gives examples from the relevant parts of a systematic review housed online on The Cochrane Library. It will help you to read or navigate a systematic review.

Source: Cochrane Consumers and Communications (infographics are free to use and licensed under Creative Commons)

Check the following visual resources titled "What Are Systematic Reviews?"

- Video with closed captions available

- Animated Storyboard

The methods of the systematic review are generally decided before conducting it.

Source: Foster, M. (2018). Systematic reviews service: Introduction to systematic reviews. Retrieved September 18, 2018.


- Last Updated: May 8, 2024 1:44 PM

- URL: https://lib.guides.umd.edu/SR


Systematic Reviews: Home

Created by health science librarians.

Resources for performing systematic reviews:

- Step 1: Complete Pre-Review Tasks

- Step 2: Develop a Protocol

- Step 3: Conduct Literature Searches

- Step 4: Manage Citations

- Step 5: Screen Citations

- Step 6: Assess Quality of Included Studies

- Step 7: Extract Data from Included Studies

- Step 8: Write the Review

What is a Systematic Review?

A systematic review is a literature review that gathers all of the available evidence matching pre-specified eligibility criteria to answer a specific research question. It uses explicit, systematic methods, documented in a protocol, to minimize bias, provide reliable findings, and inform decision-making.¹

There are many types of literature reviews.

Before beginning a systematic review, consider whether it is the best type of review for your question, goals, and resources. The table below compares a few different types of reviews to help you decide which is best for you.

| Systematic Review | Scoping Review | Systematized Review |
|---|---|---|
| Conducted for Publication | Conducted for Publication | Conducted for Assignment, Thesis, or (Possibly) Publication |
| Protocol Required | Protocol Required | No Protocol Required |
| Focused Research Question | Broad Research Question | Either |
| Focused Inclusion & Exclusion Criteria | Broad Inclusion & Exclusion Criteria | Either |
| Requires Large Team | Requires Small Team | Usually 1-2 People |

- Scoping Review Guide: For more information about scoping reviews, refer to the UNC HSL Scoping Review Guide.

- UNC HSL's Simplified, Step-by-Step Process Map: A PDF file of the HSL's Systematic Review Process Map.

- Text-Only: UNC HSL's Systematic Reviews - A Simplified, Step-by-Step Process: A text-only PDF file of HSL's Systematic Review Process Map.

The average systematic review takes 1,168 hours to complete.¹ A librarian can help you speed up the process.

Systematic reviews follow established guidelines and best practices to produce high-quality research. Librarians can be involved at one of two levels. In Tier 1, your research team consults with the librarian as needed; the librarian answers questions and recommends tools to use. In Tier 2, the librarian is an active member of your research team and a co-author on your review. Roles and expectations of librarians vary with the level of involvement desired. Examples of these differences are outlined in the table below.

| Tasks | Tier 1: Consultative | Tier 2: Research Partner / Co-author |
|---|---|---|
| Guidance on process and steps | Yes | Yes |
| Background searching for past and upcoming reviews | Yes | Yes |
| Development and/or refinement of review topic | Yes | Yes |
| Assistance with refinement of PICO (population, intervention(s), comparator(s), outcome(s)) and key questions | Yes | Yes |
| Guidance on study types to include | Yes | Yes |
| Guidance on protocol registration | Yes | Yes |
| Identification of databases for searches | Yes | Yes |
| Instruction in search techniques and methods | Yes | Yes |
| Training in citation management software use for managing and sharing results | Yes | Yes |
| Development and execution of searches | No | Yes |
| Downloading search results to citation management software and removing duplicates | No | Yes |
| Documentation of search strategies | No | Yes |
| Management of search results | No | Yes |
| Guidance on methods | Yes | Yes |
| Guidance on data extraction and management techniques and software | Yes | Yes |
| Suggestions of journals to target for publication | Yes | Yes |
| Drafting of literature search description in "Methods" section | No | Yes |
| Creation of PRISMA diagram | No | Yes |
| Drafting of literature search appendix | No | Yes |
| Review of other manuscript sections and final draft | No | Yes |
| Librarian contributions warrant co-authorship | No | Yes |
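One task in the table, removing duplicates from downloaded search results, can be sketched in a few lines. This is a minimal illustration, not how any particular citation manager works: the record fields (`title`, `doi`, `source`) and the matching rule (DOI first, normalized title as a fallback) are assumptions, and the sample records are invented placeholders.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation and whitespace so near-identical exports match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records: list) -> list:
    """Keep the first record seen for each DOI, or for each normalized title when no DOI exists."""
    seen = set()
    unique = []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        key = ("doi", doi) if doi else ("title", normalize_title(rec["title"]))
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Invented placeholder records, as if exported from two databases.
records = [
    {"title": "Example trial of intervention X", "doi": "10.1000/example.1", "source": "Database A"},
    {"title": "Example Trial of Intervention X.", "doi": "10.1000/EXAMPLE.1", "source": "Database B"},
    {"title": "A study without a DOI", "doi": None, "source": "Database A"},
    {"title": "A Study Without a DOI", "doi": "", "source": "Database B"},
]
unique = deduplicate(records)
```

A known limitation of this simple rule: a record exported with a DOI will not match the same article exported without one, so manual checking is still needed.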

- Request a systematic or scoping review consultation


Researchers conduct systematic reviews in a variety of disciplines. If your focus is on a topic outside of the health sciences, you may want to also consult the resources below to learn how systematic reviews may vary in your field. You can also contact a librarian for your discipline with questions.

- EPPI-Centre methods for conducting systematic reviews: The EPPI-Centre develops methods and tools for conducting systematic reviews, including reviews for education, public and social policy.

Environmental Topics

- Collaboration for Environmental Evidence (CEE): CEE seeks to promote and deliver evidence syntheses on issues of greatest concern to environmental policy and practice as a public service.

Social Sciences

- Siddaway AP, Wood AM, Hedges LV. How to Do a Systematic Review: A Best Practice Guide for Conducting and Reporting Narrative Reviews, Meta-Analyses, and Meta-Syntheses. Annu Rev Psychol. 2019 Jan 4;70:747-770. doi: 10.1146/annurev-psych-010418-102803. A resource for psychology systematic reviews, which also covers qualitative meta-syntheses or meta-ethnographies

- The Campbell Collaboration

Social Work

Software engineering

- Guidelines for Performing Systematic Literature Reviews in Software Engineering: The objective of this report is to propose comprehensive guidelines for systematic literature reviews appropriate for software engineering researchers, including PhD students.

Sport, Exercise, & Nutrition

- Application of systematic review methodology to the field of nutrition, by Tufts Evidence-based Practice Center (2009)

- Systematic Reviews and Meta-Analysis — Open & Free (Open Learning Initiative) The course follows guidelines and standards developed by the Campbell Collaboration, based on empirical evidence about how to produce the most comprehensive and accurate reviews of research

- Systematic Reviews, by David Gough, Sandy Oliver & James Thomas (2020)

Updating reviews

- Updating systematic reviews, by University of Ottawa Evidence-based Practice Center (2007)


- Last Updated: May 16, 2024 3:24 PM

- URL: https://guides.lib.unc.edu/systematic-reviews


Methodology of a systematic review

Affiliations.

- 1 Hospital Universitario La Paz, Madrid, España.

- 2 Hospital Universitario Fundación Alcorcón, Madrid, España.

- 3 Instituto Valenciano de Oncología, Valencia, España.

- 4 Hospital Universitario de Cabueñes, Gijón, Asturias, España.

- 5 Hospital Universitario Ramón y Cajal, Madrid, España.

- 6 Hospital Universitario Gregorio Marañón, Madrid, España.

- 7 Hospital Universitario de Canarias, Tenerife, España.

- 8 Hospital Clínic, Barcelona, España; EAU Guidelines Office Board Member.

- PMID: 29731270

- DOI: 10.1016/j.acuro.2018.01.010

Context: Evidence-based medicine aims to apply the best available scientific information to clinical practice. Understanding and interpreting the scientific evidence requires understanding the levels of evidence, where systematic reviews and meta-analyses of clinical trials sit at the top of the levels-of-evidence pyramid.

Acquisition of evidence: The review process should be well developed and planned to reduce biases and eliminate irrelevant and low-quality studies. The steps for implementing a systematic review include (i) correctly formulating the clinical question to answer (PICO), (ii) developing a protocol (inclusion and exclusion criteria), (iii) performing a detailed and broad literature search and (iv) screening the abstracts of the studies identified in the search and subsequently of the selected complete texts (PRISMA).
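Steps (i) and (iii) above connect naturally: the concepts in a PICO question usually become the blocks of a Boolean search. The sketch below shows one simple way to assemble such a string. The helper names and the search terms are hypothetical placeholders, and real database syntax (field tags, truncation, controlled vocabulary) varies by platform, so treat the output as a starting draft only.

```python
def or_block(terms: list) -> str:
    """Join the synonyms for one concept with OR, quoting multi-word phrases."""
    quoted = [f'"{term}"' if " " in term else term for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_search(pico: dict) -> str:
    """AND together the concept blocks, skipping any PICO element left empty."""
    return " AND ".join(or_block(terms) for terms in pico.values() if terms)


# Placeholder terms for illustration; a real search uses terms agreed in the protocol.
pico = {
    "population": ["eczema", "atopic dermatitis"],
    "intervention": ["probiotics", "lactobacillus"],
    "comparator": [],  # often left out of the search to keep it broad
    "outcome": ["quality of life"],
}
query = build_search(pico)
print(query)
# (eczema OR "atopic dermatitis") AND (probiotics OR lactobacillus) AND ("quality of life")
```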

Synthesis of the evidence: Once the studies have been selected, we need to (v) extract the necessary data into a form designed in the protocol to summarise the included studies, (vi) assess the biases of each study, identifying the quality of the available evidence, and (vii) develop tables and text that synthesise the evidence.
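A data extraction form of the kind described in step (v) can be modeled as a fixed record type, so every included study is summarised with the same fields. The sketch below is one possible shape under stated assumptions: the field names, the three-level risk-of-bias scale, and the sample studies are all hypothetical, not taken from the article.

```python
import csv
import io
from collections import Counter
from dataclasses import asdict, dataclass, fields


@dataclass
class ExtractionRecord:
    # Hypothetical form fields; a real form is defined in the review protocol.
    study_id: str
    design: str
    sample_size: int
    outcome: str
    risk_of_bias: str  # assumed scale: "low", "some concerns", or "high"


def to_csv(records: list) -> str:
    """Render the extracted records as a CSV summary table."""
    buffer = io.StringIO()
    names = [f.name for f in fields(ExtractionRecord)]
    writer = csv.DictWriter(buffer, fieldnames=names, lineterminator="\n")
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


def count_by_risk(records: list) -> dict:
    """Count how many included studies fall into each risk-of-bias category."""
    return dict(Counter(record.risk_of_bias for record in records))


# Invented sample studies for illustration.
studies = [
    ExtractionRecord("Study A", "randomized controlled trial", 120, "symptom score", "low"),
    ExtractionRecord("Study B", "randomized controlled trial", 45, "symptom score", "high"),
    ExtractionRecord("Study C", "cohort study", 300, "quality of life", "low"),
]
summary_table = to_csv(studies)
risk_counts = count_by_risk(studies)
```

Fixing the fields in advance mirrors the article's point that the form is designed in the protocol, before extraction begins.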

Conclusions: A systematic review is a critical and reproducible summary of the results of the available publications on a particular topic or clinical question. To improve scientific writing, the article presents the methodology for conducting a systematic review in a structured manner.

Keywords: Meta-analysis; Methodology; Systematic review.

Copyright © 2018 AEU. Published by Elsevier España, S.L.U. All rights reserved.


