What Does a Data Annotator Do?

In the era of artificial intelligence and machine learning, data annotation has emerged as a critical process.

This article delves into the role of a data annotator, an often-underestimated professional who aids in training AI systems by labeling and categorizing data.

We explore the skills required, the importance of this role in the AI domain, its practical applications, and discuss potential challenges and solutions within the field of data annotation.

Understanding the Role of a Data Annotator

The essence of a data annotator’s role lies in the meticulous processing and labeling of data, which serves as the bedrock for developing and refining machine learning models. As a critical player in the data pipeline, a data annotator is entrusted with the task of creating annotations that provide context and meaning to raw data.

The annotation process is an intricate one, requiring precision and attention to detail. Data annotators are expected to produce high-quality annotated data that can be used to train machine learning algorithms. The accuracy of annotation is paramount, as any inaccuracies can compromise the validity of the machine learning model.

Annotation analysts work closely with data annotators, overseeing the annotation methods used and ensuring that the highest standards are maintained. They scrutinize the quality of the annotations, ensuring that they are comprehensive, relevant, and accurate.

The Process of Data Annotation Explained

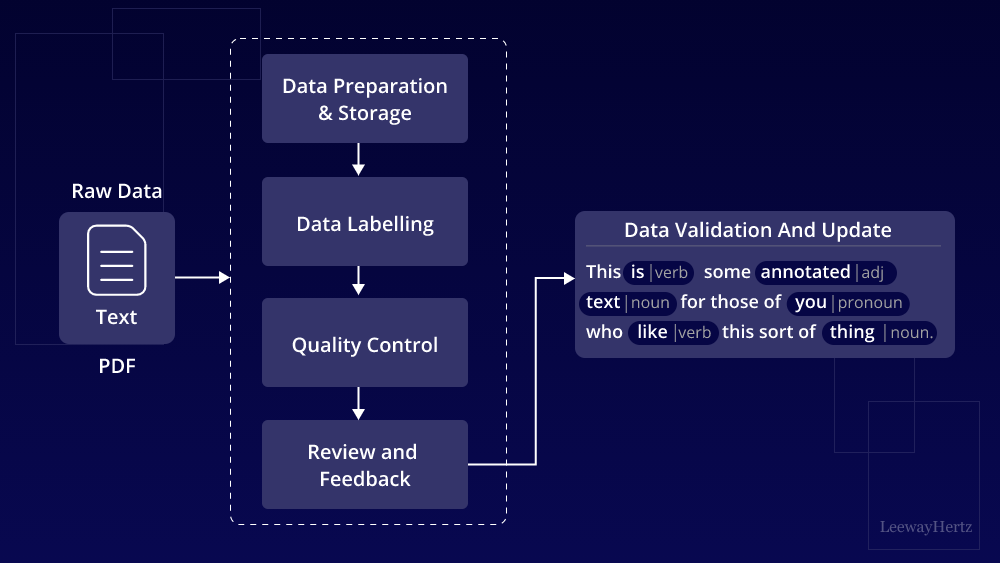

Data annotation, a complex and multifaceted process, involves the application of labels to raw data and, at the same time, requires a deep understanding of the subject matter to ensure accuracy and relevance.

Annotators typically work in annotation software and label data according to pre-established annotation guidelines. These guidelines define how each kind of data should be handled so that labels stay consistent across annotators and projects. Annotators then apply labels within this framework, transforming the data from an unstructured mass into an organized set of information.

This labeling of data is crucial in the development of machine learning models and artificial intelligence algorithms, which rely on annotated data to learn and predict future outcomes.

Human-handled data annotation is often preferred over automated methods. This is because human data annotators possess the ability to understand context, nuances, and complex instances better, leading to more accurate and relevant annotations.

The entire process, therefore, while intricate and demanding, plays a crucial role in driving the advancement of technology.

Skills Required to Become a Data Annotator

Acquiring proficiency as a data annotator demands a blend of technical knowledge and soft skills, both of which contribute to the meticulous and nuanced task of data annotation.

To help machines recognize patterns, a data annotator needs a solid grasp of semantic annotation, which means marking data with metadata that conveys its meaning. Intent annotation is one example: labeling what a user is trying to achieve with a piece of text helps machines understand the context and meaning behind the data.

To become a proficient data annotator, the following skills are crucial:

- A strong understanding of language models: This allows annotators to interpret and annotate data accurately, helping machines comprehend text, speech, or other data forms.

- Proficiency in semantic segmentation: This skill involves dividing data into segments, each carrying a specific meaning.

- Familiarity with a crowdsourcing platform: This is essential as many data annotation tasks are performed on these platforms.

- Strong attention to detail: This is pivotal to ensure high-quality, error-free annotations.

The Importance of Data Annotation in AI and Machine Learning

In the realm of artificial intelligence and machine learning, both of which heavily rely on data, the role of precise and comprehensive data annotation cannot be overstated. Data annotation serves as the cornerstone of these disciplines, forming the foundation upon which advanced algorithms and predictive models are built.

The significance of data annotation is best demonstrated when considering its application in various sectors. For instance, in the development of self-driving cars, data annotation teams meticulously label and categorize countless images and sensor readings, teaching the AI how to interpret and respond to different scenarios on the road.

Similarly, in the realm of finance, data annotation is fundamental to understanding complex market trends and patterns. Here, finance data annotation is utilized to create advanced models capable of predicting stock market movements and financial trends.

In social media analytics, sentiment annotation is employed to understand human emotions and online behaviors, allowing businesses to tailor their strategies accordingly. The same level of precision is required in industrial data annotation, where properly annotated data can significantly improve efficiency and productivity in manufacturing processes.

Everyday Applications of Data Annotation

While many may not realize it, virtually every aspect of our digital lives is influenced by the work of data annotators. These behind-the-scenes professionals play a crucial role in shaping the digital environment around us.

The work of data annotators is widely applied in various everyday applications. Here are a few examples:

- Social Media: Data annotation is used to create algorithms for personalized content suggestion, enabling platforms like Facebook and Instagram to recommend posts and advertisements based on your preferences.

- Online Shopping: It helps in product recommendation systems, making your online shopping experience more personalized by suggesting items that align with your past purchases.

- Healthcare: In the healthcare sector, annotated data assists in diagnosing diseases from medical images, improving patient care.

- Autonomous Vehicles: Data annotators help train autonomous driving systems to recognize and respond to different road signs, pedestrians, and other vehicles, enhancing safety on the roads.

Through these applications, and many more, data annotation significantly influences our digital experiences. It shapes how we interact with technology on a daily basis, and continues to do so as technology evolves.

A deeper understanding of this process helps us appreciate the often-overlooked work of data annotators.

Potential Challenges and Solutions in Data Annotation

Data annotation, despite its critical role in shaping our digital world, presents a unique set of challenges, and understanding these obstacles is key to developing effective solutions.

One of the primary hurdles is maintaining the accuracy and consistency of annotations, which can be compromised by human error or differing interpretations among data annotators. A potential solution is to implement strict guidelines and run regular quality checks so that annotations stay consistent across annotators.
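One possible form of such a quality check is to have two annotators label the same items and measure how often they agree beyond what chance would produce. The sketch below uses Cohen's kappa for this; the choice of metric, the function name `cohen_kappa`, and the sample labels are assumptions made for illustration, not something the article prescribes.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")
    n = len(labels_a)
    # Fraction of items on which the two annotators gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Made-up sentiment labels from two hypothetical annotators.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg"]
kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 3))
```

A value near 1 suggests the guidelines are being read the same way by both annotators, while a value near 0 suggests agreement is no better than chance and the guidelines may need revisiting.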

Another challenge is data privacy, especially when dealing with sensitive information. Annotators often need access to personal data, which could lead to privacy breaches if not handled correctly. One solution is to anonymize data before it is annotated, thereby protecting individual identities.
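As a rough sketch of what anonymizing text before annotation could look like, the snippet below replaces email addresses and phone-like numbers with placeholder tokens. The regular expressions and the `redact` function are simplified assumptions for illustration; real anonymization would need much broader coverage (names, addresses, IDs) and careful review.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs more care.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholder tokens."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text


# Made-up sample text.
sample = "Contact Jane at jane.doe@example.com or +1 (555) 010-9999 after 5pm."
redacted = redact(sample)
print(redacted)
```

Note that the name in the sample is left untouched: pattern matching alone does not catch every kind of personal data, which is why it is treated here as a first step only.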

Furthermore, scalability can be a difficulty as machine learning models often require vast amounts of annotated data. Manual annotation can be time-consuming and costly. To combat this, companies can employ automated annotation tools. However, these tools are not perfect, so a human-in-the-loop approach is often preferred.
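One common way to combine automated tools with human review is to accept machine labels only when the model is confident and to send everything else to a human annotator. The sketch below assumes the tool reports a confidence score per item; the function name, the 0.9 threshold, and the sample data are hypothetical.

```python
def route_predictions(predictions, threshold=0.9):
    """Split model predictions into auto-accepted labels and a human review queue.

    `predictions` is a list of (item_id, label, confidence) tuples.
    """
    auto_accepted = []
    needs_review = []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_accepted.append((item_id, label))
        else:
            # Low confidence: a human annotator should label this item.
            needs_review.append(item_id)
    return auto_accepted, needs_review


# Made-up output of a hypothetical automated annotation tool.
model_output = [
    ("doc-1", "invoice", 0.98),
    ("doc-2", "receipt", 0.61),
    ("doc-3", "invoice", 0.93),
    ("doc-4", "contract", 0.42),
]
accepted, review_queue = route_predictions(model_output, threshold=0.9)
print(accepted)
print(review_queue)
```

The threshold controls the trade-off: raising it sends more items to humans and costs more, while lowering it lets more unchecked machine labels through.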

Lastly, language and cultural nuances can also pose a challenge in data annotation. This is particularly apparent in Natural Language Processing projects. A potential solution is to engage native speakers or cultural experts in the annotation process. Doing so can help to mitigate misinterpretations and biases.

Bringing the Future Closer to Us

The role of a data annotator is becoming more and more pronounced in the realm of artificial intelligence and machine learning. Their job of adding metadata to data sets requires precision and analytical skills, and has widespread applications in our digital era.

Therefore, the significance of data annotation and annotators will continue to grow as we advance in technology.

The role of a data annotator in machine learning

The two synonymous terms “data annotator” and “data labeler” seem to be everywhere these days. But who is a data annotator? Many know that annotators are somehow connected to the fields of Artificial Intelligence (AI) and Machine Learning (ML), and they probably have important roles to play in the data labeling market. But not everyone fully understands what data labelers actually do. If you want to find out once and for all whether data annotation is a good job, especially if you’re considering a data labeling career, read on!

Data annotation is the process of labeling elements of data (images, videos, text, or any other format) by adding contextual information which ML models can learn from. It helps ML models understand what exactly is important about each piece of data.

To fully grasp and appreciate everything data labelers do and what data annotation skills they need, we need to start with the basics by explaining data annotation and data usage in the field of machine learning. So, let’s begin with something broad to give us appropriate context and then dive into more narrow processes and definitions.

Data comes in many different forms – from images and videos to text and audio files – but in almost all cases, this data has to be processed to render itself usable. What it means is that this data has to be organized and made “clear” to whomever is using it, or as we say, it has to be “labeled”.

If, for example, we have a dataset full of geometric shapes (data points), to prepare this dataset for further use, we need to make sure that every circle is labeled as “circle,” every square as “square,” every triangle as “triangle,” and so on. This turns a random collection of items into a systematically organized dataset that can be used in a real-life project, for instance as training data for a machine learning algorithm. The opposite is “raw” data, which is essentially a mass of disorganized information. And this is where the data annotator role comes in: these people turn “raw data” into “labeled data”.
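As a minimal sketch, the shapes example might look like this in code. The file names, the `LabeledItem` type, and the label values are hypothetical and chosen only for illustration.

```python
from collections import Counter
from dataclasses import dataclass

# Raw data: items with no information about what they contain.
# (File names are made up for this illustration.)
raw_data = ["img_001.png", "img_002.png", "img_003.png", "img_004.png"]


@dataclass(frozen=True)
class LabeledItem:
    """One data point paired with the label an annotator assigned to it."""
    item: str
    label: str


# Labels a human annotator might assign after looking at each image.
annotator_labels = ["circle", "square", "triangle", "circle"]

labeled_data = [
    LabeledItem(item, label) for item, label in zip(raw_data, annotator_labels)
]

# Once labeled, the dataset can be summarized, filtered, or used for training.
label_counts = Counter(entry.label for entry in labeled_data)
print(label_counts)
```

The raw list says nothing about its contents, whereas the labeled list can immediately answer questions such as how many circles the dataset contains.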

This processing and organization of raw unstructured data – “data labeling” or “data annotation” – is even more important in business. When your business relies on data in any way (which is becoming more and more common today), you simply cannot afford for your data to be messy, or else your business will likely run into serious troubles or fail altogether.

Labeled data can assist many different companies, both big and small, whether these companies rely on ML technologies, or have nothing to do with AI. For instance, a real-estate developer or a hotel executive may need to make an expansion decision about building a new facility. But before investing, they need to perform an in-depth analysis in order to understand what types of accommodation get booked, how quickly, during which months, and so on. All of that implies highly organized and “labeled” data (whether it’s called that or not) that can be visualized and used in decision-making.

A training algorithm (also referred to as machine learning algorithm or ML model) is basically clever code written by software engineers that tells an AI solution how to use the data it encounters. The process of training machine learning models involves several stages that we won’t go into right now.

But the main point is this: each and every machine learning model requires adequately labeled data at multiple points in its life cycle. And normally not just some high-quality training data – lots of it! Such ground truth data is used to train an ML model initially, as well as to monitor that it continues to produce accurate results over time.

Today, AI products are no longer the stuff of fiction or even something niche and unique. Most people use AI products on a regular basis, perhaps without even realizing that they’re dealing with an ML-backed solution. Probably one of the best examples is when we use Google Translate or a similar web service.

Think ML models, think data annotations, think training and test data. Feel like asking Siri or Alexa something? It’s the same deal again with virtual assistants: training algorithms, labeled data. Driving somewhere and having an online map service lay out and narrate a route for you? Yes, you guessed it!

Some other examples of disruptive AI technologies include self-driving vehicles, online shopping and product cataloging (e-commerce), cyber security, moderating reviews on social media, financial trading, legal assistance, interpretation of medical results, nautical and space navigation, gaming, and even programming, among many others. Regardless of what industry an AI solution is made for or what domain it falls under (for instance, Computer Vision, which deals with visual imagery, or Natural Language Processing/NLP, which deals with text and speech), all of them imply continuous data annotation at almost every turn. And, of course, that means having people at hand who can carry out human-powered data annotation.

Data annotation can be carried out in a number of ways by utilizing different “approaches”:

- Data can be labeled by human annotators.

- It can be labeled synthetically (using machine intelligence).

- Or it can be labeled in a “hybrid” manner (having both human and machine features).

As of right now, human-handled data annotation remains the most sought-after approach, because it tends to deliver the highest quality datasets. ML processes that involve human-handled data annotation are often referred to as being or having “human-in-the-loop pipelines.”

When it comes to the data annotation process, methodologies of acquiring manually annotated training data differ. One of them is to label the data “internally,” that is, to use an “in-house” team. In this scenario, as usual, the company has to write code and build an ML model at the core of their AI product. But then it also has to prepare training datasets for this machine learning model, often from scratch. While there are advantages to this setup (mainly having full control over every step), the main downside is that this track is normally extremely costly and time-consuming. The reason is that you have to do everything yourself, including training your staff, finding the right data annotation software, learning quality control techniques, and so on.

The alternative is to have your data labeled “externally,” which is known as “outsourcing.” Creators of AI products may outsource to individuals or whole companies to carry out their data annotation for them, which may involve different levels of supervision and project management. In this case, the tasks of annotating data are tackled by specialized groups of human annotators with relevant experience who often work within their chosen paradigm (for example, transcribing speech or working with image annotation).

In a way, outsourcing is a bit like having your own external in-house team that you hire temporarily, except that this team already comes with its own set of data annotation tools. While appealing to some, this method can also be very expensive for AI product makers. What’s more, data quality can often fluctuate wildly from project to project and team to team; after all, the whole data annotation process is handled by a third party. And when you spend so much, you want to be sure you’re getting your money’s worth.

There’s also a type of large-scale outsourcing known as “crowdsourcing” or “crowd-assisted labeling,” which is what we do at Toloka. The logic here is simple: rather than relying on fixed teams of data labelers with fixed skill sets (who are often based in one place), crowdsourcing relies on a large and diverse network of data annotators from all over the globe.

In contrast to other data labeling methodologies, annotators from the “global crowd” choose what exactly they’re going to do and when exactly they wish to contribute. Another big difference between crowdsourcing and all other approaches, both internal and external, is that “crowd contributors” (or “Tolokers” as we call them) do not have to be experts or even have any experience at all. This is possible because:

- A short, task-oriented training course takes place before each data labeling project; only those who perform test tasks at a satisfactory level are allowed to proceed to actual project tasks.

- Crowdsourcing utilizes advanced “aggregation techniques,” which means that it’s not so much about individual efforts of crowd contributors, but rather about the “accumulated effort” of everyone on the data annotation project.

To understand this better, think of it as painting a giant canvas. While in-house or outsourced teams gradually paint a complete picture, relying on their knowledge and tenacity, crowd contributors instead paint a tiny brush stroke each. In fact, the same brush stroke in terms of its position on the canvas is painted by several contributors. This is the reason why an individual mistake isn’t detrimental to the final result. A “data annotation analyst” (a special type of ML engineer) then does the following:

- They take each contributor’s input and discard any “noisy” (i.e., low-quality) responses.

- They aggregate the results by putting all of the overlapping brush strokes together (to get the best version of each brush stroke).

- They then merge the different brush strokes together to get a complete image.

Voila – here’s our ready canvas!
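The filtering and aggregation steps above can be sketched with a simple majority vote. This is only an illustration: the article does not say which aggregation technique is actually used, and the `aggregate` function, the notion of a "trusted" contributor set, and the sample responses are assumptions.

```python
from collections import Counter, defaultdict


def aggregate(responses, trusted_contributors):
    """Majority vote per task, ignoring contributors not in the trusted set.

    `responses` is a list of (task_id, contributor_id, label) tuples.
    Ties are broken in favor of the label that was submitted first.
    """
    votes = defaultdict(list)
    for task_id, contributor_id, label in responses:
        # Step 1: discard "noisy" responses from untrusted contributors.
        if contributor_id in trusted_contributors:
            votes[task_id].append(label)
    # Step 2: keep the most frequent label among overlapping responses.
    # Step 3: the resulting dict is the merged, complete set of labels.
    return {
        task_id: Counter(labels).most_common(1)[0][0]
        for task_id, labels in votes.items()
    }


# Made-up responses: each task is labeled by several contributors.
responses = [
    ("t1", "a", "cat"), ("t1", "b", "cat"), ("t1", "c", "dog"),
    ("t2", "a", "dog"), ("t2", "b", "dog"), ("t2", "d", "cat"),
    ("t1", "d", "dog"),
]
trusted = {"a", "b", "c"}  # contributor "d" was flagged as low quality
final_labels = aggregate(responses, trusted)
print(final_labels)
```

Because every task receives several overlapping answers, a single wrong answer (such as contributor "c" on task "t1") is outvoted rather than ending up in the final dataset.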

This methodology serves those who need annotated data very well, but it also makes data annotation a lot less tedious for human annotators. Probably the best thing about being a data annotator for a crowdsourcing platform like Toloka is that you can work any time you want, from any location you desire – it’s completely up to you. You can also work in any language, so speaking your native tongue is more than enough. If you speak English together with another language (native or non-native), that’s even better – you’ll be able to participate in more labeling projects.

Another great thing is that all you need is internet access and a device such as a smartphone, a tablet, or a laptop/desktop computer. Nothing else is required, and no prior experience is needed, because, as we've explained already, task-specific training is provided before every labeling project. Certainly, if you have expertise in some field, this will only help you, and you may even be asked to evaluate other contributors’ submissions based on your performance. What you produce may also be treated as a “golden” set (or “honeypot” as we say at Toloka), which is a high-quality standard that the others will be judged against.
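To make the idea of a "golden" set concrete, here is a small sketch that scores a contributor against tasks with known answers. The function name, task IDs, and labels are hypothetical; how Toloka actually uses such scores is not described here.

```python
def golden_set_accuracy(submissions, golden_answers):
    """Share of golden tasks a contributor answered correctly.

    `submissions` and `golden_answers` both map task_id -> label.
    Returns None when the contributor has not answered any golden task.
    """
    graded = [
        (task_id, label)
        for task_id, label in submissions.items()
        if task_id in golden_answers
    ]
    if not graded:
        return None
    correct = sum(golden_answers[task_id] == label for task_id, label in graded)
    return correct / len(graded)


# Made-up golden answers and one contributor's submissions.
golden = {"g1": "yes", "g2": "no", "g3": "yes", "g4": "no"}
submitted = {"g1": "yes", "g2": "no", "g3": "no", "t17": "yes", "g4": "no"}
accuracy = golden_set_accuracy(submitted, golden)
print(accuracy)
```

Ordinary tasks such as "t17" are ignored in the score because their correct answer is unknown; only the golden tasks mixed in among them are graded.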

All annotation tasks at Toloka are relatively small, because ML engineers decompose large labeling projects into more manageable segments. As a result, no matter how difficult the actual request to label data made by our client, as a crowd contributor, you’ll only ever have to deal with micro tasks. The main thing is following your instructions to the word. You have to be careful and diligent when you label the data. The tasks are normally quite easy, but to do them well, one needs to remain focused throughout the entire labeling process and avoid distractions.
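Decomposing a large project into micro tasks can be as simple as splitting the items into small batches. The sketch below assumes a fixed batch size; the function name and file names are made up for illustration.

```python
def split_into_micro_tasks(items, task_size):
    """Split a list of items into consecutive chunks of at most `task_size`."""
    if task_size < 1:
        raise ValueError("task_size must be at least 1")
    return [items[i:i + task_size] for i in range(0, len(items), task_size)]


# Seven hypothetical images split into micro tasks of three images each.
images = [f"image_{n:03d}.jpg" for n in range(1, 8)]
micro_tasks = split_into_micro_tasks(images, task_size=3)
for task in micro_tasks:
    print(task)
```

Each contributor then only sees one small batch at a time, regardless of how large the original request was.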

There are many different labeling tasks for crowd contributors to choose from, but they all fall into these two categories:

- Online tasks (you complete everything on your device without traveling anywhere in person)

- Offline tasks, also known as “field” or “feet-on-street” tasks (you travel to target locations to complete labeling assignments).

When you choose to participate in a field task, you’re asked to go to a specific location in your area (normally your town or your neighborhood) to complete a short on-site assignment. This assignment could involve taking photos of all bus stops in the area, monuments, or coffee shops. It can also be something more elaborate like following a specific route within a shopping mall to determine how long it takes or counting and marking benches in a park. The results of these tasks are used to improve web mapping services, as well as brick-and-mortar retail (i.e., physical stores).

Online assignments have a variety of applications, some of which we mentioned earlier, and they may include text, audio, video, or image annotation. Each ML application contains several common task formats that our clients (or “requesters” as we say at Toloka) often ask for.

Text annotation

Text annotation tasks usually require annotators to extract specific information from natural language data. Such labeled data is used for training NLP (natural language processing) models. NLP models are used in search engines, voice assistants, automated translators, parsing of text documents, and so on.

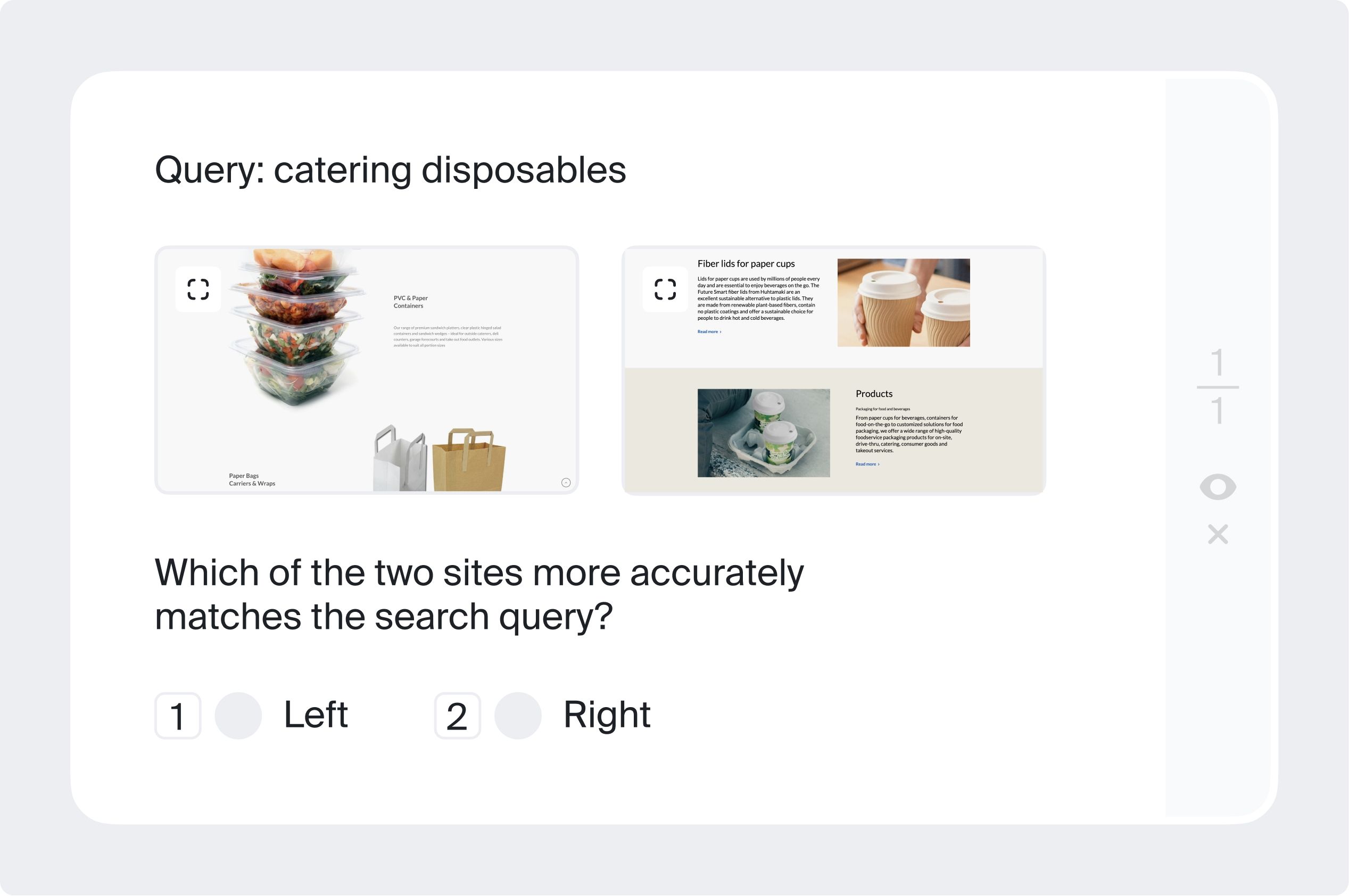

Text classification

In such tasks (also called text categorization), you may need to answer whether the text you see matches the topic provided, for example, whether a search query matches the search engine results; such data helps improve search relevance. The task can be a simple yes/no question, or you may need to assign the text a specific category, for example, by deciding whether the text contains a question or a purchase intent (this is also called intent annotation).

Text generation

In this type of text annotation, you may need to come up with your best description of an image/video/audio or a series of them (normally in 2-3 sentences).

Side-by-side comparison

You may need to compare two texts provided next to each other and decide which one is more informative or sounds better in your native tongue.

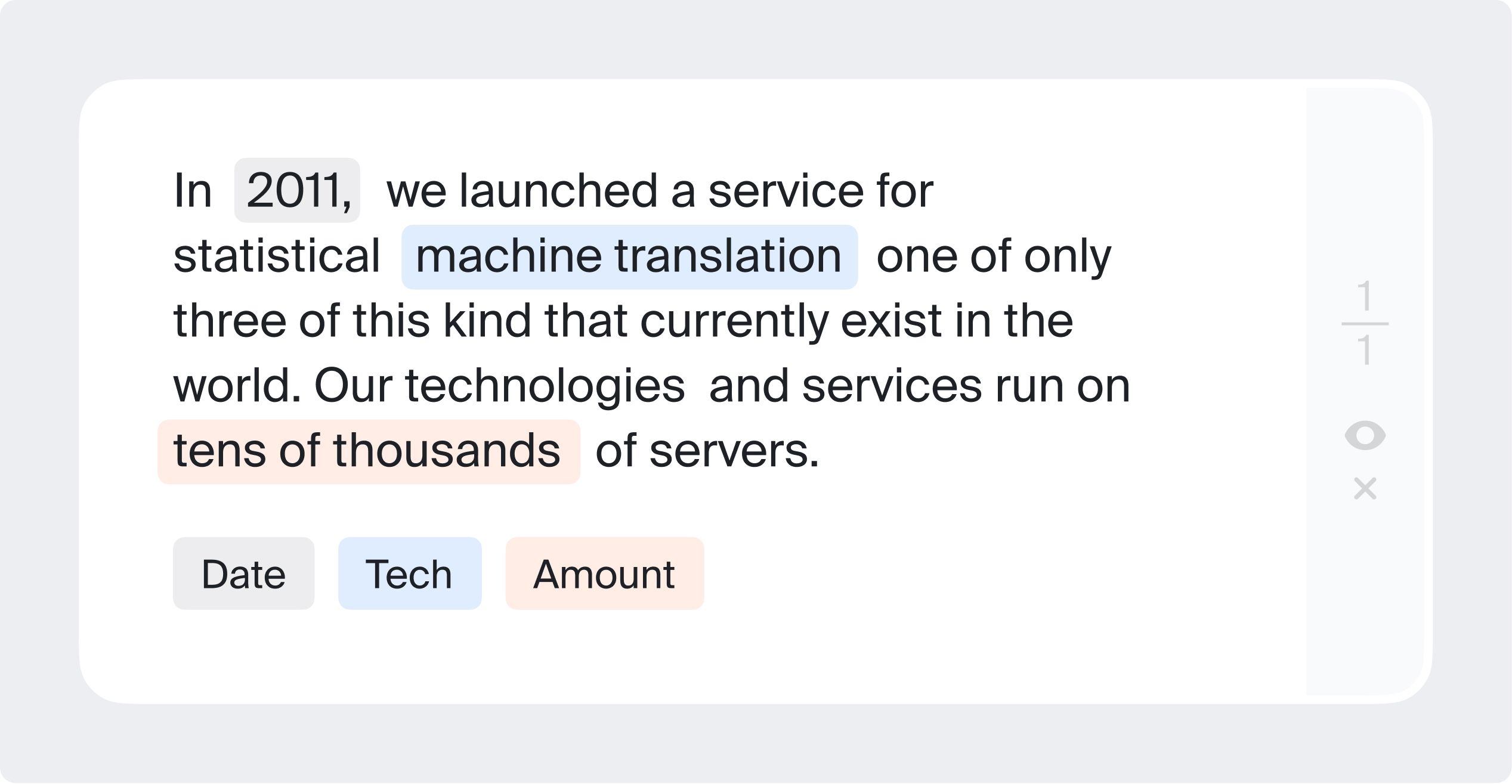

Named entity recognition

You may need to identify parts of text, classify proper nouns, or label any other entities. This type of text entity annotation is also called semantic annotation or semantic segmentation.
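Entity annotations are often stored as character spans plus a label. The sketch below shows one such representation; the `EntitySpan` type, the `make_span` helper, and the label names "ORG" and "LOC" are assumptions for illustration and not a format taken from any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpan:
    """A labeled stretch of text, given as character offsets."""
    start: int  # index of the first character of the entity
    end: int    # index one past the last character of the entity
    label: str


def make_span(text, phrase, label):
    """Build a span for the first occurrence of `phrase` in `text`."""
    start = text.index(phrase)
    return EntitySpan(start, start + len(phrase), label)


text = "Toloka is based in Amsterdam."
spans = [
    make_span(text, "Toloka", "ORG"),
    make_span(text, "Amsterdam", "LOC"),
]

# Recover the annotated phrases from their offsets.
extracted = [(text[span.start:span.end], span.label) for span in spans]
print(extracted)
```

Storing offsets rather than copies of the words keeps each annotation tied to an exact position, which matters when the same word appears more than once in a text.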

Sentiment Annotation

This is an annotation task which requires the annotator to determine the sentiment of a text. Such datasets are used in sentiment analysis, for example, to monitor customer feedback, or in content moderation. ML algorithms have to rely on human-labeled datasets to provide reliable sentiment analysis, especially in such a complicated area as human emotions.

Image annotation

Training data produced by performing image annotation is usually used to train various computer vision models. Such models are used, for example, in self-driving cars or in face recognition technologies. Image annotation tasks include working with images: identifying objects, bounding box annotation, deciding whether an image fits a specified topic, and so on.

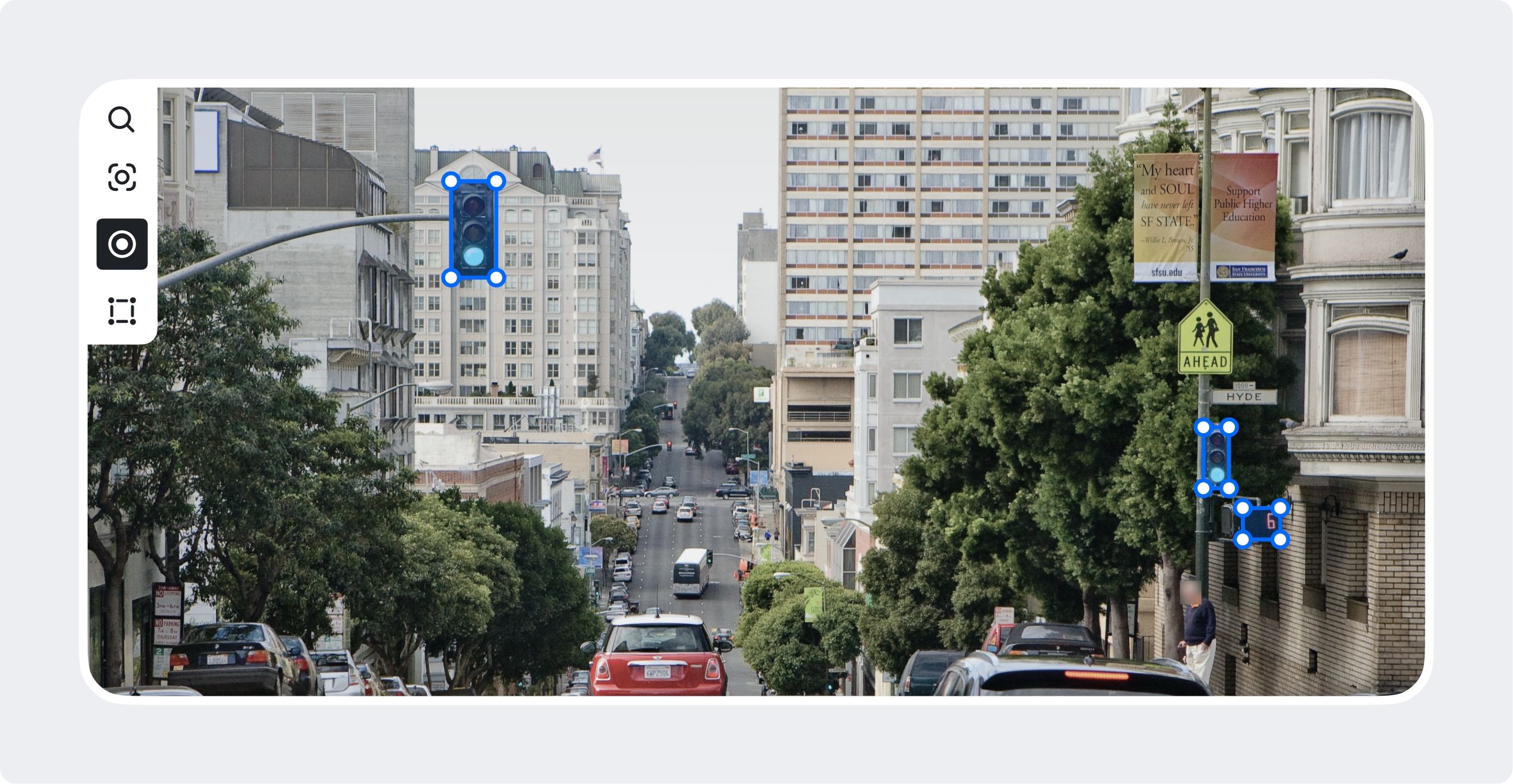

Object recognition and detection

You may be asked to select and/or draw the edges (bounding boxes) of certain items within an image, such as street signs or human faces. A computer vision model needs an image with a distinct object marked by labelers, so that it can provide accurate results.
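A bounding box is typically recorded as the coordinates of a rectangle plus a label. The sketch below shows one possible representation and, as an added assumption, uses intersection over union (IoU) to compare how closely two annotators' boxes for the same object match. The class name, field names, and coordinates are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """An axis-aligned rectangle in pixel coordinates with an object label."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self):
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a, b):
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    overlap_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    overlap_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if overlap_w <= 0 or overlap_h <= 0:
        return 0.0
    intersection = overlap_w * overlap_h
    union = a.area() + b.area() - intersection
    return intersection / union


# Two hypothetical annotators drew a box around the same street sign.
box_1 = BoundingBox("street_sign", 0, 0, 10, 10)
box_2 = BoundingBox("street_sign", 5, 0, 15, 10)
overlap = iou(box_1, box_2)
print(round(overlap, 3))
```

An IoU of 1 means the boxes coincide exactly and 0 means they do not overlap at all, so a low value can flag a box that needs a second look.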

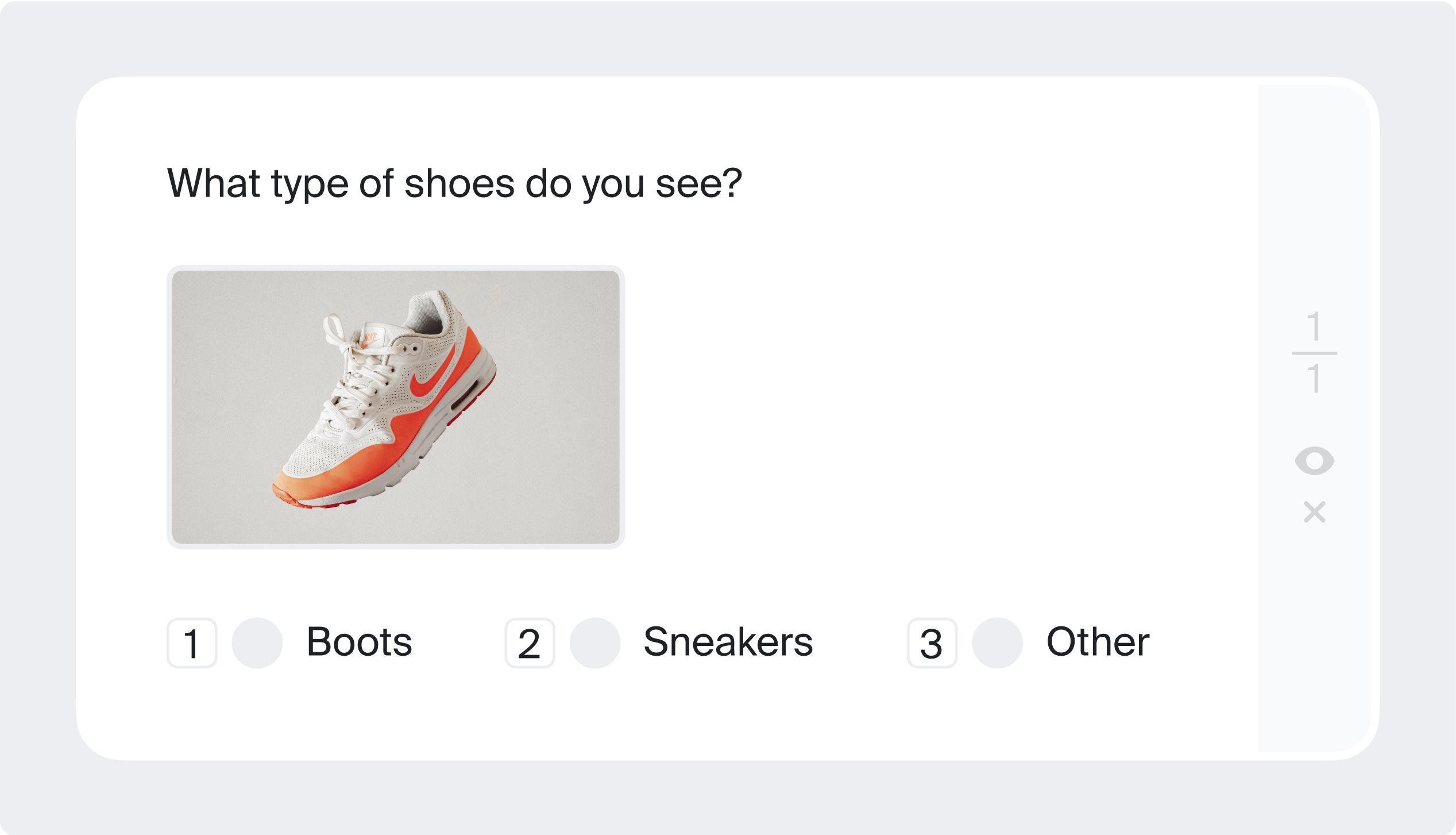

Image classification

You may be asked whether what you see in an image contains something specific, such as an animal, an item of clothing, or a kitchen appliance.

Side-by-side

You may be given two images and asked which one you think looks better, either in your own view or based on a particular characteristic outlined in the task. Later, these annotated images can be used to improve recommender systems in online shops.

Audio annotation

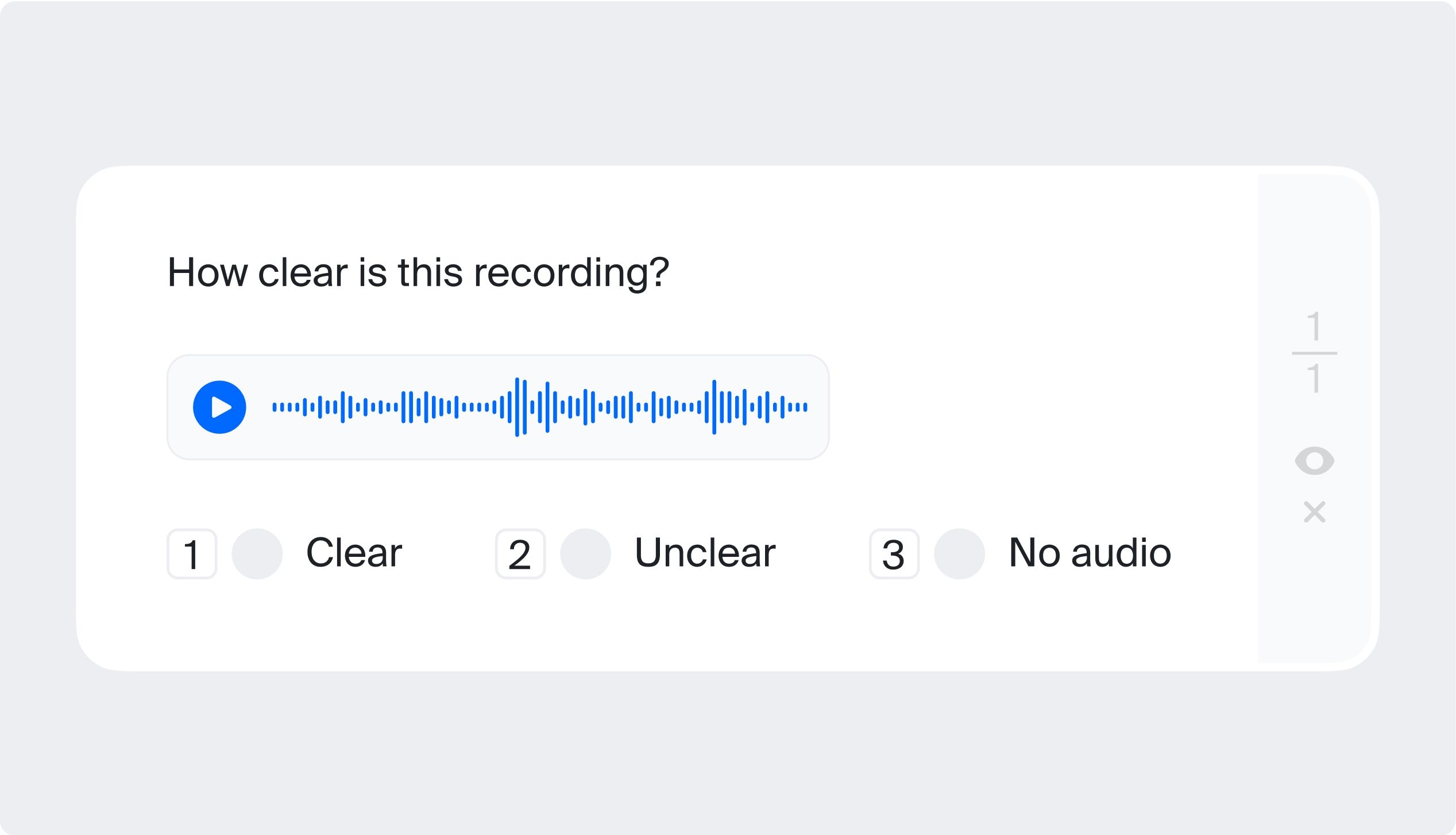

Audio classification

In this audio annotation task, you may need to listen to an audio recording and answer whether it contains a particular feature, such as a mood, a certain topic, or a reference to some event.

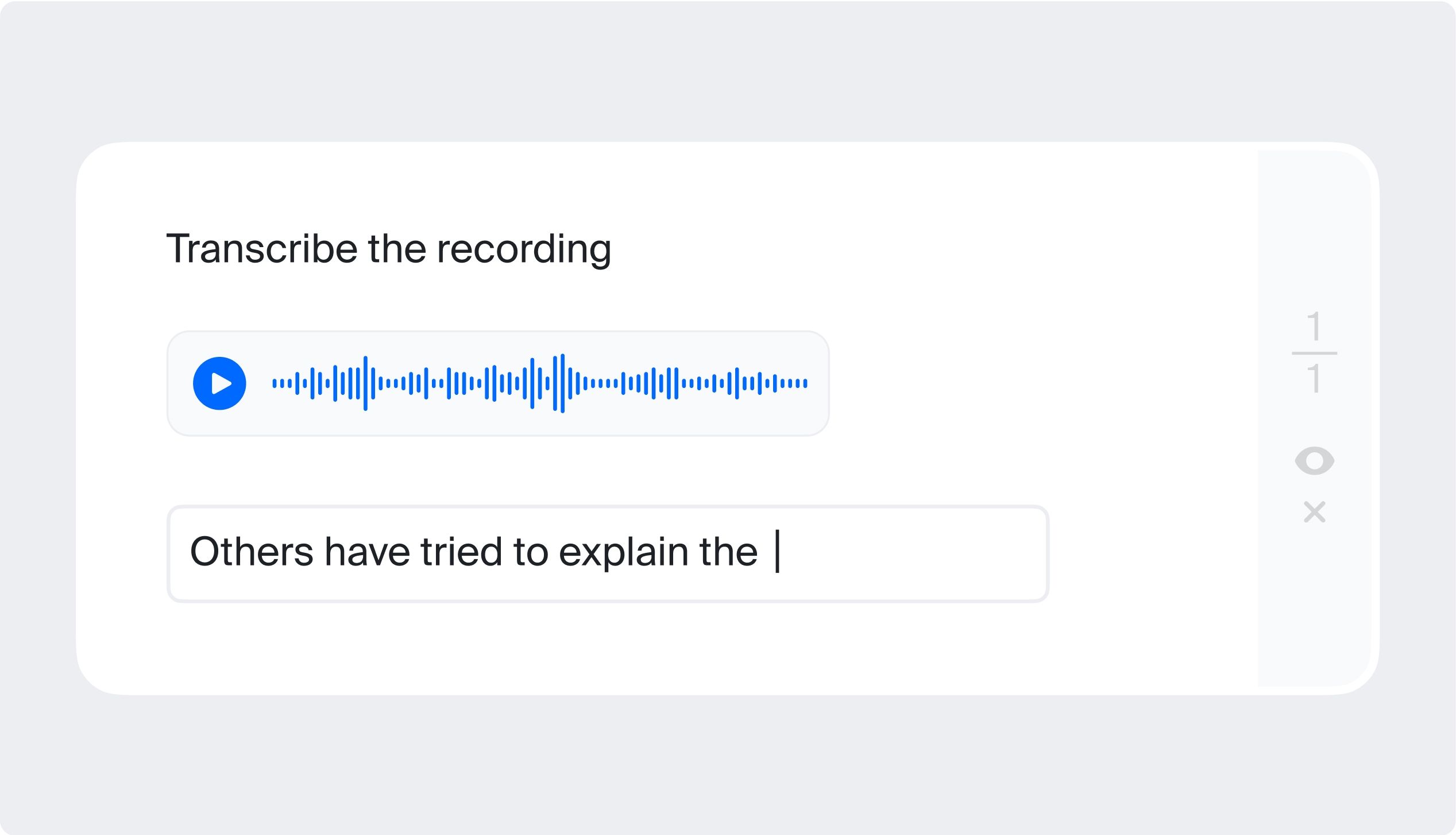

Audio transcription

You may need to listen to some audio data and write or “transcribe” what you hear. Such labeled data can be used, for example, in speech recognition technologies.

Video annotation

Image and video annotation tasks quite often overlap. It's common to divide videos into single frames and annotate specific data in these frames.
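As a small sketch of how frames might be picked for annotation, the function below selects frame indices at a fixed time interval. The function name and the sampling interval are assumptions; actually decoding the video into images is out of scope here and would need a video library.

```python
def frames_to_annotate(total_frames, fps, every_n_seconds):
    """Indices of frames sampled at a fixed time interval from a video."""
    if fps <= 0 or every_n_seconds <= 0:
        raise ValueError("fps and every_n_seconds must be positive")
    # Number of frames between two sampled frames (at least one).
    step = max(1, round(fps * every_n_seconds))
    return list(range(0, total_frames, step))


# A hypothetical 10-second clip at 30 frames per second, sampled every 2 seconds.
frame_indices = frames_to_annotate(total_frames=300, fps=30, every_n_seconds=2)
print(frame_indices)
```

Sampling frames this way keeps the annotation workload manageable, since neighboring frames in a video are usually almost identical.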

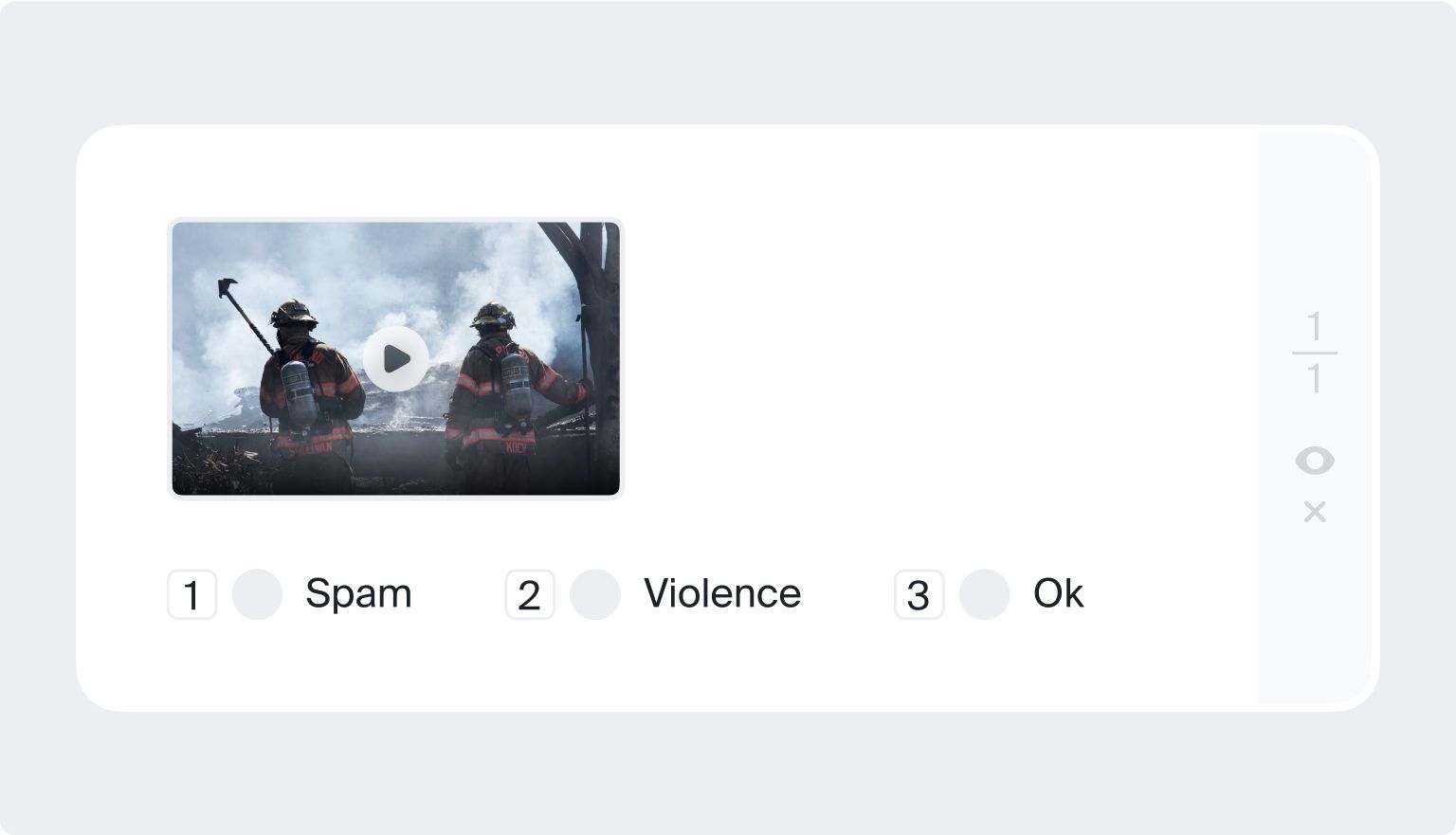

Video classification

You may have to watch a video and decide whether it belongs to a certain category, such as “content for children,” “advertising materials,” “sporting event,” or “mature content with drug references or nudity”.

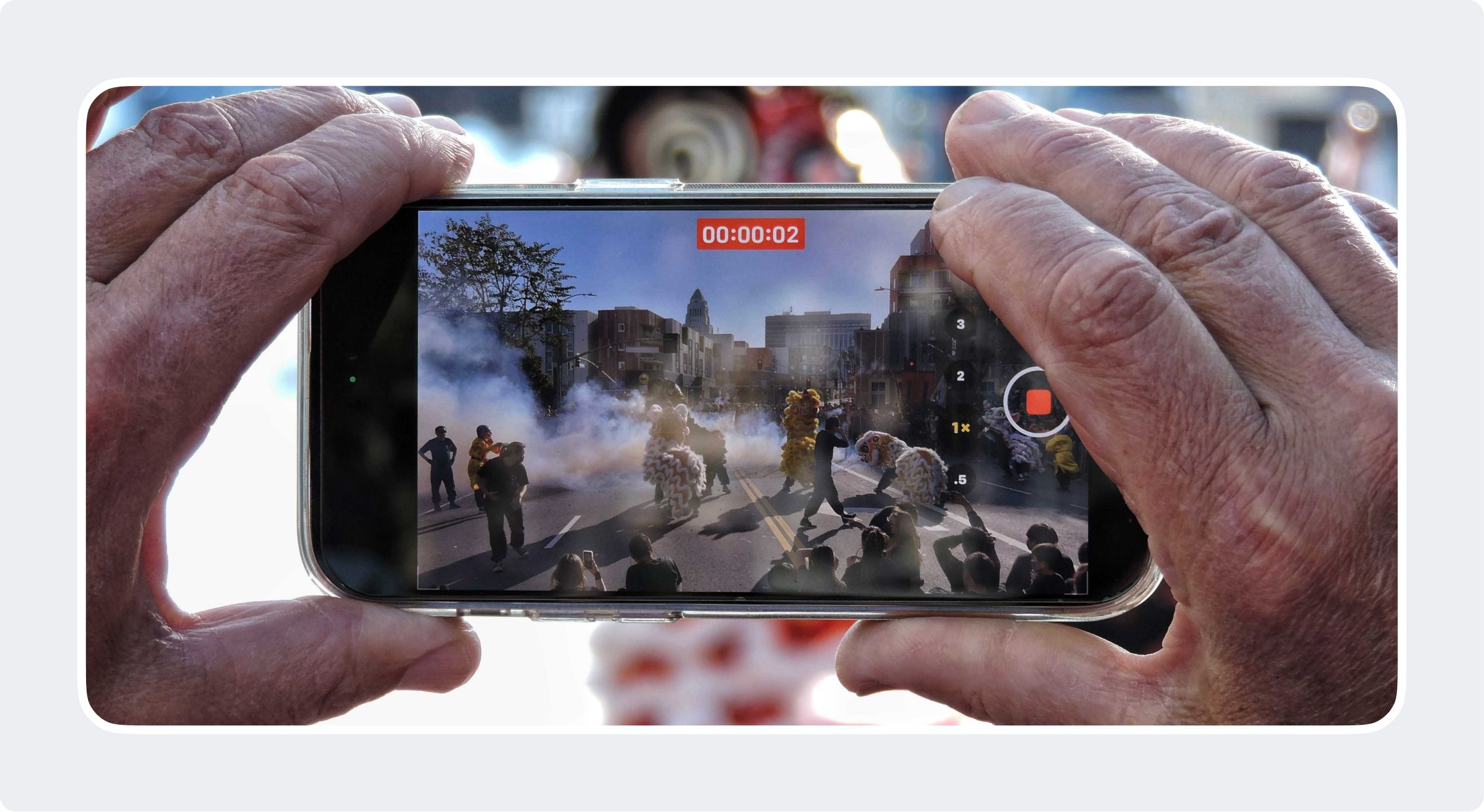

Video collection

This is not exactly a video annotation task, but rather a data collection one. You may be asked to produce your own short videos in various formats containing specified features, such as hand gestures, items of clothing, facial expressions, etc. Video data produced by annotators is also often used to improve computer vision models.

When we explained how crowdsourcing works using our example of a painted canvas, we mentioned “data annotation analysts” (who are sometimes also data scientists). Without these analysts, none of it is possible. These ML engineers specialize in processing and analyzing labeled data, and they play a vital role in any AI product creation. In the context of human-handled labeling, it’s precisely data annotation analysts who “manage” human labelers by providing them with specific tasks. They also supervise data annotation processes and, together with other colleagues, feed all of the data they receive into training models.

It’s up to data annotation analysts to find the most suitable data annotators to carry out specific labeling tasks and also set quality control mechanisms in place to ensure adequate quality. Crucially, data annotation analysts should be able to clearly explain everything to their data annotators. This is an important aspect of their job, as any confusion or misinterpretation at any point in the annotation process will lead to improperly labeled data and a low-quality AI product.

At Toloka, data annotation analysts are known as Crowd Solutions Architects (CSAs). They differ from other data annotation analysts in that they specialize in crowdsourced data and human-in-the-loop pipelines involving global crowd contributors.

As you can see, labeling data has an essential role to play in both AI-based products and modern business in general. Without high-quality annotated data, an ML algorithm cannot run and AI solutions cannot function. As our planet becomes ever more digitized, traditional businesses are beginning to show their need for annotated data, too.

With that in mind, human annotators – people who annotate data – are in high demand all over the world. What’s more, crowdsourced data annotators are at the forefront of the global AI movement with the support they provide. If you feel like becoming a Toloker by joining our global crowd of data annotators, follow this link to sign up and find out more. As a crowd contributor at Toloka, you’ll be able to complete micro tasks online and offline whenever it suits you best.

Toloka is a European company based in Amsterdam, the Netherlands that provides data for Generative AI development. Toloka empowers businesses to build high quality, safe, and responsible AI. We are the trusted data partner for all stages of AI development from training to evaluation. Toloka has over a decade of experience supporting clients with its unique methodology and optimal combination of machine learning technology and human expertise, offering the highest quality and scalability in the market.

Data Annotation in 2024: Why it matters & Top 8 Best Practices

Annotated data is an integral part of various machine learning, artificial intelligence (AI) and GenAI applications. It is also one of the most time-consuming and labor-intensive parts of AI/ML projects. Data annotation is one of the top limitations of AI implementation for organizations. Whether you work with an AI data service, or perform annotation in-house, you need to get this process right.

Tech leaders and developers need to focus on improving data annotation for their data-hungry digital solutions. To remedy that, we recommend an in-depth understanding of data annotation.

Our research covers the following:

What is data annotation?

- Why does it matter?

- What are its techniques/types?

- What are some key challenges of annotating data?

- What are some best practices for data annotation?

Data annotation is the process of labeling data with relevant tags to make it easier for computers to understand and interpret. This data can be in the form of images, text, audio, or video, and data annotators need to label it as accurately as possible. Data annotation can be done manually by a human or automatically using advanced machine learning algorithms and tools. Learn more about automated data annotation.

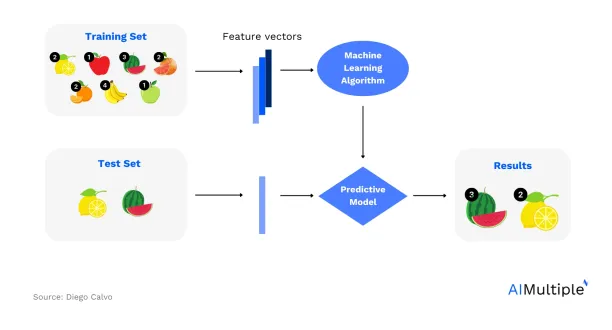

For supervised machine learning, labeled datasets are crucial because ML models need to understand input patterns to process them and produce accurate results. Supervised ML models (see figure 1) train and learn from correctly annotated data and solve problems such as:

- Classification: Assigning test data into specific categories. For instance, predicting whether a patient has a disease and assigning their health data to “disease” or “no disease” categories is a classification problem.

- Regression: Establishing a relationship between dependent and independent variables. Estimating the relationship between the budget for advertising and the sales of a product is an example of a regression problem.
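The two problem types can be illustrated with a tiny, self-contained sketch. It uses a nearest-neighbor rule for classification and an ordinary least squares line for regression; both methods, the function names, and all numbers are made up for illustration and are not taken from the article.

```python
def nearest_neighbor_label(labeled_points, query):
    """Classification: return the label of the closest labeled example."""
    closest = min(labeled_points, key=lambda point: abs(point[0] - query))
    return closest[1]


def fit_line(xs, ys):
    """Regression: ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Classification: made-up body temperatures annotated with a category.
annotated_patients = [
    (36.6, "no disease"), (36.9, "no disease"),
    (38.5, "disease"), (39.2, "disease"),
]
prediction = nearest_neighbor_label(annotated_patients, 38.9)

# Regression: made-up advertising budgets and the sales they produced.
ad_budget = [1.0, 2.0, 3.0, 4.0]
sales = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(ad_budget, sales)

print(prediction)
print(slope, intercept)
```

In both cases the model only works because each input comes with a known answer: a category for classification, a number for regression. Producing those answers is the annotator's job.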

Figure 1: Supervised Learning Example 1

For example, training machine learning models for self-driving cars involves annotated video data. Individual objects in videos are annotated, which allows machines to predict the movements of objects.

Other terms to describe data annotation include data labeling, data tagging, data classification, or machine learning training data generation.

Why does data annotation matter?

Annotated data is the lifeblood of supervised learning models since the performance and accuracy of such models depend on the quality and quantity of annotated data. Machines cannot see images and videos as we do. Data annotation makes the different data types machine-readable. Annotated data matters because:

- Machine learning models have a wide variety of critical applications (e.g., healthcare) where erroneous AI/ML models can be dangerous

- Finding high-quality annotated data is one of the primary challenges of building accurate machine-learning models

Here is a data-driven list of the top data annotation services on the market.

Gathering data is a prerequisite for annotation. To help you obtain the right datasets, here is some research:

- Top data crowdsourcing platforms on the market

- Guide to AI data collection.

- Data-driven list of data collection/harvesting services.

What are the different types of data annotation?

Different data annotation techniques can be used depending on the machine learning application. Some of the most common types are:

1. Reinforcement learning from human feedback (RLHF)

Reinforcement learning from human feedback (RLHF) was described in 2017. 2 It grew significantly in popularity in 2022 after the success of large language models (LLMs) like ChatGPT, which leveraged the technique. These are the two main types of RLHF:

- Humans generating suitable responses to train LLMs

- Humans annotating (i.e. selecting) better responses among multiple LLM responses.

Human labor is expensive, so AI companies are also leveraging reinforcement learning from AI feedback (RLAIF) to scale their annotations cost-effectively in cases where AI models are confident about their feedback. 3
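The second kind of human feedback, choosing the better of several responses, is often stored as pairwise comparisons. The sketch below shows one possible record layout; the `PreferencePair` class, the `ranking_to_pairs` helper, and the example prompt are hypothetical and not taken from any particular RLHF toolkit.

```python
from dataclasses import dataclass
from itertools import combinations

@dataclass(frozen=True)
class PreferencePair:
    """One human judgment: for this prompt, `chosen` was preferred over `rejected`."""
    prompt: str
    chosen: str
    rejected: str

def ranking_to_pairs(prompt, ranked_responses):
    """Turn an annotator's best-to-worst ranking into pairwise comparisons."""
    # combinations() keeps input order, so the first element of each pair ranks higher.
    return [PreferencePair(prompt, better, worse)
            for better, worse in combinations(ranked_responses, 2)]

pairs = ranking_to_pairs(
    "Explain data annotation in one sentence.",
    ["Response B", "Response A", "Response C"],  # annotator's ranking, best first
)
```

A ranking of three responses yields three comparisons, so a single annotator pass can produce several training signals.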

2. Text annotation

Text annotation trains machines to better understand text. For example, chatbots can identify users’ requests from the keywords taught to the machine and offer solutions. If annotations are inaccurate, the machine is unlikely to provide a useful solution, so better text annotations lead to a better customer experience. During text annotation, specific keywords, sentences, etc., are assigned to data points. Comprehensive text annotations are crucial for accurate machine training. Some types of text annotation are:

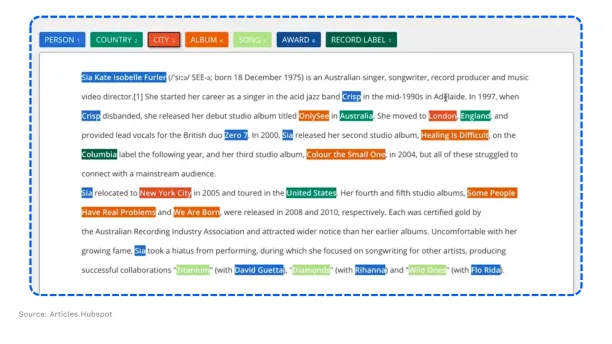

2.1. Semantic annotation

Semantic annotation (see figure 2) is the process of tagging text documents. By tagging documents with relevant concepts, semantic annotation makes unstructured content easier to find. Computers can interpret and read the relationship between a specific part of metadata and a resource described by semantic annotation.

Figure 2: Semantic Annotation Example 4

2.2. Intent annotation

Intent annotation analyzes the needs behind texts and categorizes them, for example as requests or approvals. The sentence “I want to chat with David”, for instance, indicates a request.

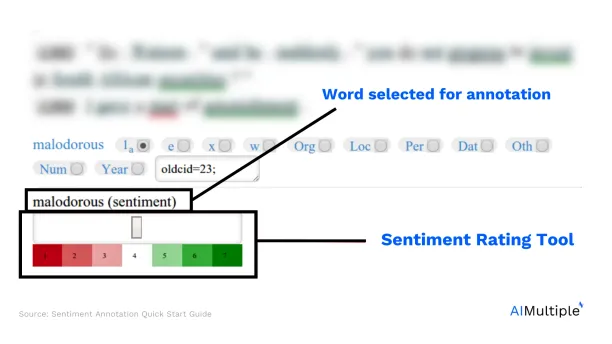

2.3. Sentiment annotation

Sentiment annotation (see Figure 3) tags the emotions within the text and helps machines recognize human emotions through words. Machine learning models are trained with sentiment annotation data to find the true emotions within the text. For example, by reading the comments left by customers about the products, ML models understand the attitude and emotion behind the text and then make the relevant labeling such as positive, negative, or neutral.

Figure 3: Sentiment Annotation Example 5
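As a rough sketch of what intent and sentiment annotations can look like as data, the record below attaches both labels to a piece of text. The `TextAnnotation` class, the example sentences other than the first, and the "complaint" and "feedback" intent categories are assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class TextAnnotation:
    text: str
    intent: str      # e.g. "request" or "approval"
    sentiment: str   # "positive", "negative", or "neutral"

annotations = [
    TextAnnotation("I want to chat with David", "request", "neutral"),
    TextAnnotation("Yes, go ahead with the order", "approval", "positive"),
    TextAnnotation("The product broke after two days", "complaint", "negative"),
    TextAnnotation("Great service, thank you!", "feedback", "positive"),
]

def label_distribution(items, field):
    """Count how often each label value occurs for the given annotation field."""
    return Counter(getattr(item, field) for item in items)

sentiment_counts = label_distribution(annotations, "sentiment")
intent_counts = label_distribution(annotations, "intent")
```

Checking the label distribution like this is a quick way to spot a training set that is heavily skewed toward one sentiment or intent.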

3. Text categorization

Text categorization assigns categories to sentences or whole paragraphs in a document according to their subject. This helps users easily find the information they are looking for on a website.

4. Image annotation

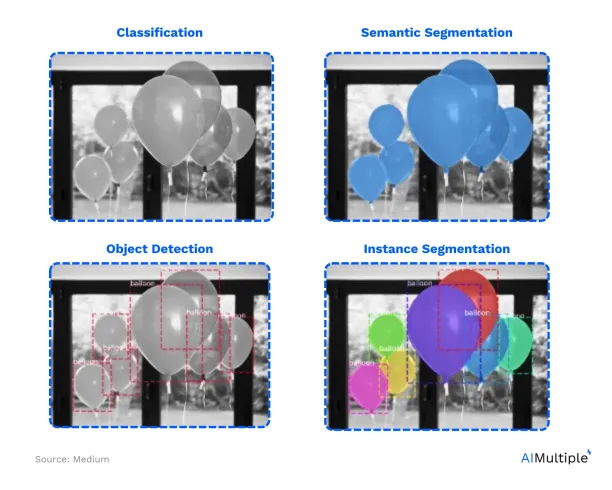

Image annotation is the process of labeling images (see figure 4) to train an AI or ML model. For example, a model trained on tagged digital images can reach a human-like level of comprehension and interpret the images it sees. With data annotation, objects in any image are labeled. Depending on the use case, the number of labels on the image may increase. The fundamental types of image annotation are:

4.1. Image classification

In image classification, a machine is first trained with annotated images and then determines what a new image represents based on those predefined annotations.

4.2. Object recognition/detection

Object recognition/detection is a more advanced version of image classification. It identifies the number and exact positions of entities in the image. While a label is assigned to the entire image in image classification, object recognition labels entities separately. For example, with image classification, the image is labeled as day or night. Object recognition individually tags various entities in an image, such as a bicycle, tree, or table.
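The difference between the two label types can be sketched as plain data. The file name, the pixel coordinates, and the `(x_min, y_min, x_max, y_max)` box layout below are assumptions chosen for illustration; real tools each define their own format.

```python
# Image classification: one label for the whole image.
classification_label = {"image": "street_001.jpg", "label": "day"}

# Object detection: one entry per entity, each with its own label and position.
# Boxes use (x_min, y_min, x_max, y_max) in pixels; this layout is an assumption.
detection_labels = {
    "image": "street_001.jpg",
    "objects": [
        {"label": "bicycle", "box": (40, 120, 180, 260)},
        {"label": "tree", "box": (300, 10, 420, 280)},
        {"label": "bicycle", "box": (200, 130, 330, 270)},
    ],
}

def count_objects(record):
    """Count how many entities of each class were annotated in one image."""
    counts = {}
    for obj in record["objects"]:
        counts[obj["label"]] = counts.get(obj["label"], 0) + 1
    return counts

object_counts = count_objects(detection_labels)
```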

4.3. Segmentation

Segmentation is a more advanced form of image annotation. In order to analyze the image more easily, it divides the image into multiple segments, and these parts are called image objects. There are three types of image segmentation:

- Semantic segmentation: Labels similar objects in the image with the same class, without distinguishing individual objects from one another.

- Instance segmentation: Labels each entity in the image separately, capturing properties such as the position and number of entities.

- Panoptic segmentation: Combines semantic and instance segmentation.

Figure 4: Image annotation example 6

5. Video annotation

Video annotation is the process of teaching computers to recognize objects from videos. Image and video annotation are data annotation methods used to train computer vision (CV) systems; computer vision is a subfield of artificial intelligence (AI).


6. Audio annotation

Audio annotation is a type of data annotation that involves classifying components in audio data. Like all other types of annotation (such as image and text annotation), audio annotation requires manual labeling and specialized software. Solutions based on natural language processing (NLP) rely on audio annotation, and as their market grows (projected to grow 14 times between 2017 and 2025), the demand and importance of quality audio annotation will grow as well.

Audio annotation can be done through software that allows data annotators to label audio data with relevant words or phrases. For example, they may be asked to label a sound of a person coughing as “cough.”
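One common way to represent such labels is as time-stamped segments. The sketch below assumes a hypothetical `AudioSegment` record with start and end times in seconds; the segment values are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class AudioSegment:
    start_s: float   # segment start, in seconds from the beginning of the clip
    end_s: float     # segment end, in seconds
    label: str       # annotator-assigned tag, e.g. "cough"

segments = [
    AudioSegment(0.0, 1.5, "speech"),
    AudioSegment(1.5, 2.0, "cough"),
    AudioSegment(2.0, 4.5, "speech"),
]

def seconds_per_label(items):
    """Sum the annotated duration for each label, rejecting malformed segments."""
    totals = {}
    for seg in items:
        if seg.end_s <= seg.start_s:
            raise ValueError(f"segment has non-positive duration: {seg}")
        totals[seg.label] = totals.get(seg.label, 0.0) + (seg.end_s - seg.start_s)
    return totals

totals = seconds_per_label(segments)
```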

Audio annotation can be:

- In-house, completed by the company’s own employees.

- Outsourced, i.e., done by a third-party company.

- Crowdsourced. Crowdsourced data annotation involves using a large network of data annotators to label data through an online platform.

Learn more about audio annotation.

7. Industry-specific data annotation

Each industry uses data annotation differently. Some industries use one type of annotation, and others use a combination to annotate their data. This section highlights some of the industry-specific types of data annotation.

- Medical data annotation: Medical data annotation is used to annotate data such as medical images (e.g., MRI scans), EMRs, and clinical notes. This type of data annotation helps develop computer vision-enabled systems for disease diagnosis and automated medical data analysis.

- Retail data annotation: Retail data annotation is used to annotate retail data such as product images, customer data, and sentiment data. This type of annotation helps create and train accurate AI/ML models for tasks such as determining customer sentiment and making product recommendations.

- Finance data annotation: Finance data annotation is used to annotate data such as financial documents and transactional data. This type of annotation helps develop AI/ML systems, such as systems that detect fraud and compliance issues.

- Automotive data annotation: This industry-specific annotation is used to annotate data from autonomous vehicles, such as data from cameras and lidar sensors. This annotation type helps develop models that can detect objects in the environment and other data points for autonomous vehicle systems.

- Industrial data annotation: Industrial data annotation is used to annotate data from industrial applications, such as manufacturing images, maintenance data, safety data, quality control, etc. This type of data annotation helps create models that can detect anomalies in production processes and ensure worker safety.

What is the difference between data annotation and data labeling?

Data annotation and data labeling mean the same thing. You will come across articles that try to explain them in different ways and make up a difference. For example, some sources claim that data labeling is a subset of data annotation where data elements are assigned labels according to predefined rules or criteria. However, based on our discussions with vendors in this space and with data annotation users, we do not see major differences between these concepts.

What are the main challenges of data annotation?

- Cost of annotating data: Data annotation can be done either manually or automatically. However, manually annotating data requires a lot of effort, and you also need to maintain the quality of the data.

- Accuracy of annotation: Human errors can lead to poor data quality, and these have a direct impact on the predictions of AI/ML models. A Gartner study highlights that poor data quality costs companies 15% of their revenue.

What are the best practices for data annotation?

- Start with the correct data structure: Focus on creating data labels that are specific enough to be useful but still general enough to capture all possible variations in data sets.

- Prepare detailed and easy-to-read instructions: Develop data annotation guidelines and best practices to ensure data consistency and accuracy across different data annotators.

- Optimize the amount of annotation work: Annotation is costly, so cheaper alternatives should be examined. You can work with a data collection service that offers pre-labeled datasets.

- Collect data if necessary: If you don’t annotate enough data for machine learning models, their quality can suffer. You can work with data collection companies to collect more data.

- Leverage outsourcing or crowdsourcing if data annotation requirements become too large and time-consuming for internal resources.

- Support humans with machines: Use a combination of machine learning algorithms (data annotation software) with a human-in-the-loop approach to help humans focus on the hardest cases and increase the diversity of the training data set. Labeling data that the machine learning model can correctly process has limited value.

- Regularly test your data annotations for quality assurance purposes.

- Have multiple data annotators review each other’s work for accuracy and consistency in labeling datasets.

- Stay compliant: Carefully consider privacy and ethical issues when annotating sensitive data sets, such as images containing people or health records. Lack of compliance with local rules can damage your company’s reputation.
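The human-in-the-loop practice above can be sketched as a simple routing rule: labels the model is confident about are accepted automatically, and the rest go to human annotators. The `route_for_review` helper, the 0.9 threshold, and the example predictions are hypothetical; a real threshold would need to be tuned against measured label quality.

```python
def route_for_review(predictions, threshold=0.9):
    """Split model pre-labels into auto-accepted labels and items needing a human.

    `predictions` is a list of (item_id, predicted_label, confidence) tuples.
    """
    auto_accepted, needs_human = [], []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_accepted.append((item_id, label))
        else:
            # Low-confidence items are the hard cases where human effort pays off.
            needs_human.append(item_id)
    return auto_accepted, needs_human

model_predictions = [
    ("img_1", "cat", 0.98),
    ("img_2", "dog", 0.55),
    ("img_3", "cat", 0.91),
    ("img_4", "dog", 0.72),
]
accepted, review_queue = route_for_review(model_predictions)
```

Auto-accepted labels should still be spot-checked as part of regular quality assurance, since a confident model can be confidently wrong.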

By following these data annotation best practices, you can ensure that your data sets are accurately labeled, accessible to data scientists, and ready to fuel your data-hungry projects.

You can also check our video annotation tools list to choose the fit that best suits your annotation needs.


External links

- 1. Diego Calvo. (2019). Supervised learning. Diego Calvo. Accessed: 29/September/2023.

- 2. Christiano P.; Leike J.; Brown T.B.; Martic M.; Legg S.; Amodei D. (2017). “Deep reinforcement learning from human preferences”.

- 3. Bai Y.; et al. (2022). “Constitutional AI: Harmlessness from AI Feedback”. Retrieved January 1, 2024.

- 4. Articles Hubspot. (2019). What Is Text Annotation in Machine Learning, Examples and How it’s Done? Accessed: 29/September/2023.

- 5. Sentiment Annotation – Quick Start Guide. Accessed: 29/September/2023.

- 6. Ashely John. (2020). Why Data & Data Annotation Make or Break AI. Medium. Accessed: 29/September/2023.


- Last updated January 7, 2024


Data annotation career: Scope, opportunities and salaries

- Published on February 18, 2022

- by Kartik Wali

The demand for data annotation specialists has gone up with the rise in language models, training techniques, AI tools, etc. Data annotation, a critical step in supervised learning, is the process of labelling data to teach AI and ML models to recognise specific data types and produce relevant output. Data annotation has applications in diverse sectors, ranging from chatbots, finance and medicine to government and space programs.

The market for AI and ML data labelling has seen rapid growth of late. According to market research firm Cognilytica, the data labelling market will grow from USD 1.5 billion in 2019 to USD 3.5 billion in 2024.

Types of data labelling

A model is as good as the data it’s fed. Hence, it is imperative to ensure data quality of the highest grade with accurate labelling to optimise AI/ML models.

Let us delve into the types of data annotations:

- Visual data annotation

Visual data annotation analysts facilitate the training of AI/ML models by labelling images, identifying key points or pixels with precise tags in a format the system understands. Analysts draw bounding boxes around a specific section of an image or frame to mark a particular trait or object and label it.

According to koombea.com, the key skills required for visual data annotation include:

- Analytical mathematics

- In-depth knowledge of ML libraries

- Programming languages like Python, Java, C++, etc.

- Image analysis algorithms

- Visual Database Management

- Understanding of dataflow programming

- Knowledge of tools like OpenCV, Keras, etc.

- Audio data annotation

Audio data labelling has applications in natural language processing (NLP), transcription and conversational commerce. Virtual assistants like Alexa and Siri respond to verbal stimuli in real-time: Their underpinning models are trained on large labelled datasets of vocal commands to generate apt responses. Startups like Shaip are providing auditory data annotation services to tech giants like Amazon Web Services, Microsoft and Google.

The skills required for this field are:

- Spectrogram analysis

- Programming Languages like Python, Java, C++, etc.

- Auditory Database Management

- Knowledge of tools like Audacity, Adobe Audition, Cubase, Studio One, etc.

- Text data annotation

A major part of communications worldwide, be it business, art, politics or leisure, relies on the written word. However, AI systems have trouble parsing unstructured text data. Training AI systems with the right datasets to interpret written language enables machines to classify text in images, videos, PDFs and files, as well as the context within the words. One of the important applications of text data annotation is in chatbots and virtual assistants.

The key skills required for this field are:

- Knowledge in computational linguistics

- Experience in machine learning

- Database management

- Knowledge of tools like GATE, Apache UIMA, AGTK, NLTK, etc.

Emerging field with high salaries

The AI and data analytics industry is booming in India, and as a result the demand for data engineers, data analysts, data labellers and data scientists is exploding. Data annotation specialists should be adept in various skillsets, ranging from machine learning to knowledge of tools specific to the type of annotation. The job demands long periods of focus, attention to detail, and the ability to handle different aspects of the machine training process.

Freshers in the field of data annotation can expect packages ranging from INR 1.1 lakhs to INR 3 lakhs per annum.

According to a survey by Glassdoor, multinational corporations like Siemens, Apple, Google, etc., offer packages of up to INR 7-8 lakhs per annum based on the skills and experience of the individual.

Labelled data of high quality is the primary requirement for the smooth operation of any AI model. Hence, implementing a secure and cost-effective method of data labelling is now of paramount importance.

The emerging names in the business of data labelling services are:

- Acclivis Technologies: Founded in 2009, this Pune-based company provides high-end services in machine vision, deep learning, artificial intelligence & IoT. The job profiles the company is currently looking for include ML engineer, image processing engineer, etc.

- Zuru.ai: The AI-powered data labelling company, founded in 2019, offers high-quality training datasets at scale.

- Cogito Tech: Founded in 2011 by Rohan Agarwal, this UP-based company offers data labelling services through its platform-agnostic strategy across sectors such as healthcare, automotive, agriculture, defence, etc.

- IMerit: Founded in 2011, this company extends end-to-end, high-quality data labelling across NLP, computer vision and various content services. The job profiles the company is currently seeking include ML engineer, ITES executive, etc. IMerit’s control centre is in West Bengal.

- Wisepl: Founded in 2020 and based out of Kerala, this company applies different labelling techniques like Semantic Segmentation, KeyPoint Annotation, Polygon Annotation, Cuboid, Polylines Annotation, etc. Professionals interested in the field of data annotation can apply on Wisepl’s website.

With international conglomerates outsourcing AI-based services, India has become one of the leading names in the data labelling market globally.


Open Computer Vision Library

Data Annotation – A Beginner’s Guide

Farooq Alvi, February 21, 2024. Category: AI Careers. Tags: data annotation 2024, Data Annotation tools, what is Data Annotation

At the heart of computer vision’s effectiveness is data annotation, a crucial process that involves labeling visual data to train machine learning models accurately. This foundational step ensures that computer vision systems can perform tasks with the precision and insight required in our increasingly automated world.

Data Annotation: The Backbone of Computer Vision Models

Data annotation serves as the cornerstone in the development of computer vision models, playing a critical role in their ability to accurately interpret and respond to the visual world. This process involves labeling or tagging visual data (such as images and videos, and also text) with descriptive or identifying information. By meticulously annotating data, we provide these models with the essential context needed to recognize patterns, objects, and scenarios.

This foundational step is like teaching a child to identify objects by pointing them out and naming them: annotated data teaches computer vision models to understand what they ‘see’ in the data they process. Whether it’s identifying a pedestrian in a self-driving car’s path or detecting tumors in medical imaging, data annotation enables models to learn the vast visual cues present in our environment.

Understanding Data Annotation

The Essence of Data Annotation

In computer vision, data annotation is the process of identifying and labeling the content of images, videos, or other visual media to make the data understandable and usable by computer vision models. This meticulous process involves attaching meaningful information to the visual data, such as tags, labels, or coordinates, which describe the objects or features present within the data. Essentially, data annotation translates the complexity of the visual world into a language that machines can interpret, forming the foundation upon which these models learn and improve.

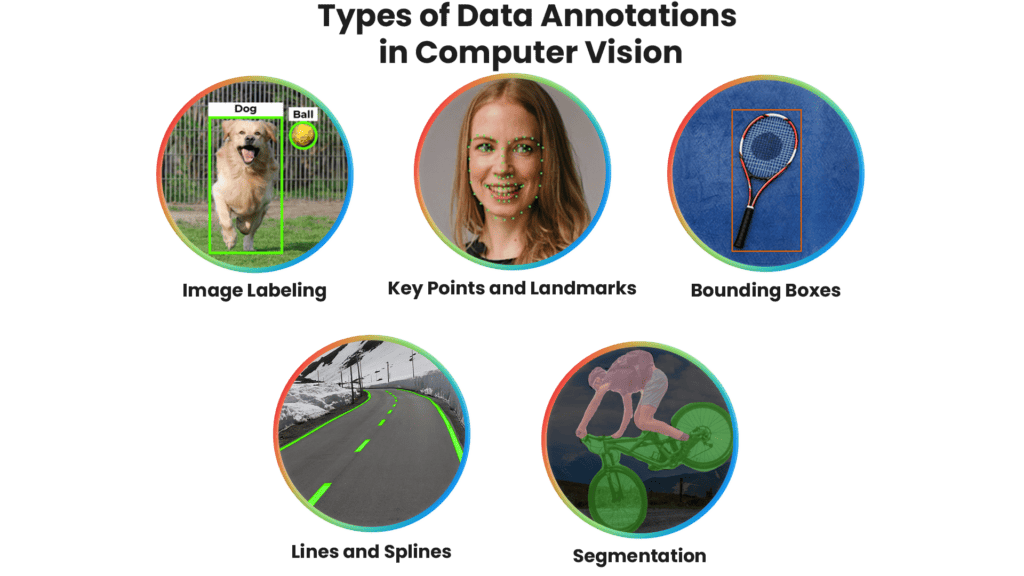

Types of Data Annotations in Computer Vision

The process of data annotation can take various forms, each suited to different requirements and outcomes in the field of computer vision. Here are some of the most common types:

Image Labeling

Image labeling involves assigning a tag or label to an entire image to describe its overall content. This method is often used for categorization tasks, where the model learns to classify images based on the labels provided.

Bounding Boxes

Bounding boxes are rectangular labels that are drawn around objects within an image to specify their location and boundaries. This type of annotation is crucial for object detection models, enabling them to recognize and pinpoint objects in varied contexts.
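A standard way to compare a bounding box against a reference box, for example a model prediction against a human annotation, is intersection over union (IoU). The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)` in pixels, which is one common layout but not the only one; the coordinates are invented.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region; zero if the boxes do not overlap.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0

annotated_box = (10, 10, 50, 50)   # hypothetical human annotation
model_box = (30, 30, 70, 70)       # hypothetical model prediction
overlap = iou(annotated_box, model_box)
```

An IoU of 1.0 means the boxes coincide exactly and 0.0 means they do not overlap at all; the same measure can be used to compare two annotators' boxes for the same object.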

Segmentation

Segmentation takes data annotation a step further by dividing an image into segments or pixels that belong to different objects or classes. There are two main types:

Semantic Segmentation: Labels every pixel in the image with a class of the object it belongs to, without distinguishing between individual objects of the same class.

Instance Segmentation: Similar to semantic segmentation but differentiates between individual objects of the same class, making it more detailed and complex.
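The distinction is easiest to see on a tiny mask. In this sketch a 3x5 image contains two cars; the class id `1` for "car" and the instance ids are hypothetical values chosen for illustration.

```python
# Semantic mask: every pixel holds a class id (0 = background, 1 = "car").
semantic_mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 1],
    [0, 0, 0, 0, 1],
]

# Instance mask: every pixel holds an object id (0 = background, 1 and 2 = two cars).
instance_mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
]

def pixel_counts(mask):
    """Count how many pixels carry each id in a mask."""
    counts = {}
    for row in mask:
        for value in row:
            counts[value] = counts.get(value, 0) + 1
    return counts

semantic_counts = pixel_counts(semantic_mask)   # both cars share class id 1
instance_counts = pixel_counts(instance_mask)   # each car has its own id
```

The semantic mask can say how many pixels are "car" but not how many cars there are; the instance mask answers both questions.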

Key Points and Landmarks

This annotation type involves marking specific points or landmarks on objects within an image. It’s particularly useful for applications requiring precise measurements or recognition of specific object features, such as facial recognition or pose estimation.
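Keypoint annotations are typically stored as named coordinates. The sketch below uses hypothetical landmark names, pixel positions, and image size to show two simple operations on them: measuring the distance between two landmarks and rescaling coordinates so they do not depend on image resolution.

```python
import math

# Hypothetical facial landmarks as (x, y) pixel coordinates in a 300x200 image.
face_keypoints = {
    "left_eye": (120, 80),
    "right_eye": (180, 80),
    "nose_tip": (150, 120),
}

def distance(points, a, b):
    """Euclidean distance in pixels between two named keypoints."""
    (x1, y1), (x2, y2) = points[a], points[b]
    return math.hypot(x2 - x1, y2 - y1)

def normalize(points, width, height):
    """Rescale keypoints to the 0..1 range so they are independent of image size."""
    return {name: (x / width, y / height) for name, (x, y) in points.items()}

eye_distance = distance(face_keypoints, "left_eye", "right_eye")
relative = normalize(face_keypoints, width=300, height=200)
```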

Lines and Splines

Lines and splines are used for annotating objects with clear shapes or paths, such as roads, boundaries, or even the edges of objects. This type of annotation is essential for models that need to understand object shapes or navigate environments.

Why Data Annotation Matters in Computer Vision

Ensuring Quality and Accuracy in Data Annotation

Accurate annotations train models to understand subtle differences between objects, recognize objects in different contexts, and make reliable predictions or decisions based on visual inputs. Inaccuracies or inconsistencies in data annotation can lead to misinterpretations by the model, reducing its effectiveness and reliability in real-world applications.

The Cornerstone of Model Training

For computer vision models, data annotation is the foundation upon which learning is built. Annotated data teaches these models to recognize and understand various patterns, shapes, and objects by providing them with examples to learn from. The quality of this teaching material directly influences the model’s performance: accurate annotations lead to more precise and reliable models, while poor annotations can hamper a model’s ability to make correct identifications or predictions.

Impact on Model Performance and Reliability

The performance and reliability of computer vision models are directly tied to the quality of the annotated data they are trained on. Models trained on well-annotated datasets are better equipped to handle the nuances and variability of real-world visual data, leading to higher accuracy and reliability in their output. This is crucial in applications such as medical diagnosis, autonomous driving, and surveillance.

Accelerating Innovation and Application

Quality data annotation also plays a vital role in driving innovation within the field of computer vision. By providing models with accurately annotated datasets, researchers and developers can push the boundaries of what computer vision can achieve, exploring new applications and improving existing technologies. Accurate data annotation enables the development of more sophisticated and capable models, fostering advancements in AI and machine learning that can transform industries and improve lives.

Challenges in Data Annotation

The process of data annotation, while crucial, comes with its set of challenges that can impact the efficiency, accuracy, and overall success of computer vision models. Understanding these challenges is essential for anyone involved in developing AI and machine learning technologies.

Scale and Complexity

One of the significant challenges in data annotation is managing the scale and complexity of the datasets required to train robust computer vision models. As the demand for sophisticated and versatile AI systems grows, so does the need for extensive, well-annotated datasets that cover a wide range of scenarios and variations. Annotating these large datasets is not only time-consuming but also requires a high level of precision to ensure the quality of the data. Additionally, the complexity of certain images, where objects may be occluded, partially visible, or presented in challenging lighting conditions, adds another layer of difficulty to the annotation process.

Subjectivity and Consistency

Data annotation often involves a degree of subjectivity, especially in tasks requiring the identification of nuanced or abstract features within an image. Different annotators may have varying interpretations of the same image, leading to inconsistencies in the data. These inconsistencies can affect the training of computer vision models, as they rely on consistent data to learn how to accurately recognize and interpret visual information. Ensuring consistency across large volumes of data, therefore, becomes a critical challenge, necessitating clear guidelines and quality control measures to maintain annotation accuracy.
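One widely used quality control measure for consistency between two annotators is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The text above does not name a specific metric, so treat this as one possible choice; the label lists are invented for illustration.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own label rates.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)

annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "dog"]
annotator_2 = ["cat", "cat", "dog", "cat", "cat", "dog", "dog", "dog"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

Here the annotators agree on 6 of 8 items (0.75) while chance agreement is 0.5, giving a kappa of 0.5. Values near 1 indicate strong agreement, and values near 0 indicate agreement no better than chance, which usually signals that the guidelines need tightening.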

Balancing Cost and Quality

The process of data annotation also presents a significant cost challenge, particularly when high levels of accuracy are required. Manual annotation, while offering the potential for high-quality data, is labor-intensive and costly. On the other hand, automated annotation tools can reduce costs and increase the speed of annotation but may not always achieve the same level of accuracy and detail as manual methods. Finding the right balance between cost and quality is a constant challenge for organizations and researchers in the field of computer vision. Investing in advanced annotation tools and techniques, or a combination of manual and automated processes, can help reduce these challenges, but requires careful consideration and planning to ensure the effectiveness of the resulting models.

Tools and Technologies in Data Annotation

A variety of tools and technologies support data annotation, ranging from simple manual annotation software to sophisticated platforms offering semi-automated and fully automated annotation capabilities.

Manual Annotation Tools

Manual annotation tools are software applications that allow human annotators to label data by hand. These tools provide interfaces for tasks such as drawing bounding boxes, segmenting images, and labeling objects within images. Examples include:

LabelImg: An open-source graphical image annotation tool that supports labeling objects in images with bounding boxes.

VGG Image Annotator (VIA): A simple, standalone tool designed for image annotation, supporting a variety of annotation types, including points, rectangles, circles, and polygons.

LabelMe: An online annotation tool that offers a web interface for image labeling, popular for tasks requiring detailed annotations, such as segmentation.
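Bounding boxes drawn in tools like these are typically stored in one of a few coordinate conventions, and converting between them is a routine step. The sketch below assumes a box given as pixel corners (`xmin, ymin, xmax, ymax`, the style used by Pascal VOC files) and converts it to the normalized center format used by YOLO-style label files; the function name and sample values are hypothetical.

```python
def voc_to_yolo(box, image_width, image_height):
    """Convert a pixel-corner box to normalized (x_center, y_center, w, h)."""
    xmin, ymin, xmax, ymax = box
    # Center coordinates, scaled to the 0..1 range by the image size.
    x_center = (xmin + xmax) / 2 / image_width
    y_center = (ymin + ymax) / 2 / image_height
    # Box size, also scaled to the 0..1 range.
    width = (xmax - xmin) / image_width
    height = (ymax - ymin) / image_height
    return x_center, y_center, width, height

# Hypothetical box on a 640x480 image.
yolo_box = voc_to_yolo((100, 200, 300, 400), image_width=640, image_height=480)
print(yolo_box)
```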

Semi-automated Annotation Tools

CVAT (Computer Vision Annotation Tool): An open-source tool that offers automated annotation capabilities using pre-trained models to assist in the annotation process.

MakeSense.ai: A free online tool that provides semi-automated annotation features, streamlining the process for various types of data annotation.

Automated Annotation Tools

Fully automated annotation tools aim to eliminate the need for human intervention by using advanced AI models to generate annotations. While these tools can greatly accelerate the annotation process, their effectiveness is often dependent on the complexity of the task and the quality of the pre-existing data.

Examples include proprietary systems developed by AI research labs and companies, which are often tailored to specific use cases or datasets.

The Emergence of Advanced Annotation Platforms

Several commercial platforms have emerged that provide additional functionalities such as project management, quality control workflows, and integration with machine learning pipelines. Examples include:

Amazon Mechanical Turk (MTurk): While not specifically designed for data annotation, MTurk is widely used for crowdsourcing annotation tasks, offering access to a large pool of human annotators.

Scale AI: Provides a data annotation platform that combines human workforces with AI to annotate data for various AI applications.

Labelbox: A data labeling platform that offers tools for creating and managing annotations at scale, supporting both manual and semi-automated annotation workflows.
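When several crowd workers label the same item, their answers need to be merged into a single label as part of quality control. A simple and widely used baseline is majority voting, sketched below. This is an illustration under stated assumptions, not how any of the platforms above implement aggregation; the function name and the `min_agreement` parameter are hypothetical.

```python
from collections import Counter

def majority_vote(votes, min_agreement=0.5):
    """Return (label, agreement), or (None, agreement) if no clear majority."""
    if not votes:
        raise ValueError("votes must not be empty")
    counts = Counter(votes)
    label, count = counts.most_common(1)[0]
    agreement = count / len(votes)
    # Require strictly more than min_agreement of workers to agree.
    if agreement > min_agreement:
        return label, agreement
    return None, agreement

# Hypothetical labels from three workers for one image.
label, agreement = majority_vote(["cat", "cat", "dog"])
print(label, round(agreement, 2))
```

Items that come back as `None` can be routed to an expert reviewer or sent out for additional votes.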


Getting Started with Data Annotation

Here are some tips and recommendations to get you started:

Educate Yourself Through Online Tutorials

Several online platforms offer courses specifically designed to teach the fundamentals of computer vision and data annotation. These tutorials often start with the basics, making them ideal for beginners.

Recommended tutorials:

CVAT – Nearly Everything You Need To Know

The Best Way to Annotate Images for Object Detection

Practice on Annotation Platforms

Hands-on experience is invaluable. Several platforms allow you to practice data annotation and even contribute to real-world projects:

LabelMe: A great tool for beginners to practice image annotation, offering a wide range of images and projects.

Zooniverse: A platform for citizen science projects, including those requiring image annotation. Participating in these projects can provide practical experience and contribute to scientific research.

MakeSense.ai: Offers a user-friendly interface for practicing different types of data annotation, with no setup required.

Label Studio: An open-source data labeling tool for labeling, annotating, and exploring many different data types.

Participate in Competitions and Open-Source Projects

Engaging with the community through competitions and open-source projects can accelerate your learning and provide valuable experience:

Kaggle: Known for its machine learning competitions, Kaggle also hosts datasets that require annotation. Participating in competitions or working on these datasets can offer hands-on experience with real-world data.

GitHub: Search for open-source computer vision projects that are looking for contributors. Contributing to these projects can provide practical experience and help you understand the challenges and solutions in data annotation.

CVPR and ICCV Challenges: These conferences often host challenges that involve data annotation and model training. Participating can offer insights into the latest research and methodologies in computer vision.


Data annotation is a critical yet underappreciated element in developing computer vision technologies. Through this article, we’ve explored the foundational role of data annotation, its various forms, its challenges, and the tools and techniques available to overcome these hurdles.

By understanding and contributing to this field, beginners can not only enhance their own skills but also play a part in shaping the future of technology.

What is Data Annotation?

Building an AI or ML model that acts like a human requires large volumes of training data. For a model to make decisions and take action, it must be trained to understand specific information. Data annotation is the categorization and labeling of data for AI applications. Training data must be properly categorized and annotated for a specific use case. With high-quality, human-powered data annotation, companies can build and improve AI implementations. The result is an enhanced customer experience solution such as product recommendations, relevant search engine results, computer vision, speech recognition, chatbots, and more. There are several primary types of data: text, audio, image, and video.

Text Annotation

The most commonly used data type is text: according to the 2020 State of AI and Machine Learning report, 70% of companies rely on text. Text annotations include a wide range of annotations like sentiment, intent, and query.

Sentiment Annotation

Sentiment analysis assesses attitudes, emotions, and opinions, making it important to have the right training data. To obtain that data, human annotators are often leveraged as they can evaluate sentiment and moderate content on all web platforms, including social media and eCommerce sites, with the ability to tag and report on keywords that are profane, sensitive, or neologistic, for example.

Intent Annotation

As people converse more with human-machine interfaces, machines must be able to understand both natural language and user intent. Multi-intent data collection and categorization can differentiate intent into key categories including request, command, booking, recommendation, and confirmation.

Semantic Annotation

Semantic annotation both improves product listings and ensures customers can find the products they’re looking for. This helps turn browsers into buyers. By tagging the various components within product titles and search queries, semantic annotation services help train your algorithm to recognize those individual parts and improve overall search relevance.

Named Entity Annotation

Named Entity Recognition (NER) systems require a large amount of manually annotated training data. Organizations like Appen apply named entity annotation capabilities across a wide range of use cases, such as helping eCommerce clients identify and tag a range of key descriptors, or aiding social media companies in tagging entities such as people, places, companies, organizations, and titles to assist with better-targeted advertising content.
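To make the idea of manually annotated NER training data concrete, the sketch below converts span-level entity annotations into per-token BIO tags, a common format for training sequence labeling models. It assumes whitespace tokenization and spans given as token indices with an exclusive end; the function name, the sample sentence, and the `PER`/`LOC` labels are illustrative assumptions rather than any vendor's schema.

```python
def spans_to_bio(tokens, spans):
    """Turn (start, end, label) token spans into one BIO tag per token."""
    tags = ["O"] * len(tokens)  # "O" marks tokens outside any entity
    for start, end, label in spans:  # end index is exclusive
        tags[start] = f"B-{label}"  # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"  # remaining tokens of the entity
    return tags

# Hypothetical sentence with two annotated entities.
tokens = "Ada Lovelace visited London".split()
spans = [(0, 2, "PER"), (3, 4, "LOC")]
tags = spans_to_bio(tokens, spans)
for token, tag in zip(tokens, tags):
    print(token, tag)
```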

Real World Use Case: Improving Search Quality for Microsoft Bing in Multiple Markets

Microsoft's Bing search engine required large-scale datasets to continuously improve the quality of its search results – and the results needed to be culturally relevant for the global markets they served. We delivered results that surpassed expectations. Beyond delivering project and program management, we provided the ability to grow rapidly in new markets with high-quality data sets. (Read the full case study here)

Audio Annotation

Audio annotation is the transcription and time-stamping of speech data, including the transcription of specific pronunciation and intonation, along with the identification of language, dialect, and speaker demographics. Every use case is different, and some require a very specific approach: for example, the tagging of aggressive speech indicators and non-speech sounds like glass breaking for use in security and emergency hotline technology applications.
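As a rough illustration of what time-stamped audio annotations can look like, the sketch below models each annotated segment as a small record and runs a basic sanity check on the timestamps. The `Segment` fields, the tag names, and the overlap rule (one speaker cannot have two overlapping segments) are all assumptions made for this example, not a standard format.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    start: float          # seconds from the beginning of the recording
    end: float            # seconds from the beginning of the recording
    speaker: str
    text: str
    tags: tuple = ()      # e.g. ("non_speech",) for sounds like glass breaking

def find_problems(segments):
    """Flag non-positive durations and overlapping segments per speaker."""
    problems = []
    last_end = {}  # latest end time seen so far for each speaker
    for seg in sorted(segments, key=lambda s: s.start):
        if seg.end <= seg.start:
            problems.append(f"segment at {seg.start}s has non-positive duration")
        prev = last_end.get(seg.speaker)
        if prev is not None and seg.start < prev:
            problems.append(f"{seg.speaker} overlaps at {seg.start}s")
        last_end[seg.speaker] = max(seg.end, prev or 0.0)
    return problems

# Hypothetical annotations for a short call recording.
segments = [
    Segment(0.0, 2.5, "caller", "Hello, I need help."),
    Segment(2.5, 5.0, "agent", "What is your location?"),
    Segment(4.0, 4.6, "caller", "[glass breaking]", tags=("non_speech",)),
    Segment(4.5, 6.0, "caller", "Please hurry."),
]
problems = find_problems(segments)
print(problems)
```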

Real World Use Case: Dialpad’s transcription models leverage our platform for audio transcription and categorization

Dialpad improves conversations with data. They collect telephonic audio, transcribe those dialogs with in-house speech recognition models, and use natural language processing algorithms to comprehend every conversation. They use this universe of one-on-one conversation to identify what each rep–and the company at large–is doing well and what they aren’t, all with the goal of making every call a success. Dialpad had worked with a competitor of Appen for six months but was having trouble reaching the accuracy threshold its models needed. After the switch to Appen, it took just a couple of weeks for the change to bear fruit and to create the transcription and NLP training data Dialpad needed to make their models a success. (Read the full case study here)

Image Annotation

Image annotation is vital for a wide range of applications, including computer vision, robotic vision, facial recognition, and solutions that rely on machine learning to interpret images. To train these solutions, metadata must be assigned to the images in the form of identifiers, captions, or keywords. From computer vision systems used by self-driving vehicles and machines that pick and sort produce, to healthcare applications that auto-identify medical conditions, there are many use cases that require high volumes of annotated images. Image annotation increases precision and accuracy by effectively training these systems.

Real World Use Case: Adobe Stock Leverages Massive Asset Profile to Make Customers Happy

One of Adobe’s flagship offerings is Adobe Stock, a curated collection of high-quality stock imagery. The library itself is staggeringly large: there are over 200 million assets (including more than 15 million videos, 35 million vectors, 12 million editorial assets, and 140 million photos, illustrations, templates, and 3D assets). Every one of those assets needs to be discoverable. Appen provided highly accurate training data to create a model that could surface these subtle attributes in both their library of over a hundred million images, as well as the hundreds of thousands of new images that are uploaded every day. That training data powers models that help Adobe serve their most valuable images to their massive customer base. Instead of scrolling through pages of similar images, users can find the most useful ones quickly, freeing them up to start creating powerful marketing materials. (Read the full case study here)

Video Annotation

Human-annotated data is the key to successful machine learning. Humans are simply better than computers at managing subjectivity, understanding intent, and coping with ambiguity. For example, when determining whether a search engine result is relevant, input from many people is needed for consensus. When training a computer vision or pattern recognition solution, humans are needed to identify and annotate specific data, such as outlining all the pixels containing trees or traffic signs in an image. Using this structured data, machines can learn to recognize these relationships in testing and production.

Real World Use Case: HERE Technologies Creates Data to Fine-Tune Maps Faster Than Ever

With a goal of creating three-dimensional maps that are accurate down to a few centimeters, HERE has remained an innovator in the space since the mid-’80s, giving hundreds of businesses and organizations detailed, precise and actionable location data and insights. HERE has an ambitious goal of annotating tens of thousands of kilometers of driven roads for the ground truth data that powers their sign-detection models. Parsing videos into images for that goal, however, is simply untenable. Our Machine Learning assisted Video Object Tracking solution presented a perfect solution to this lofty ambition. That’s because it combines human intelligence with machine learning to drastically increase the speed of video annotation. (Read the full case study here)
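The case study does not describe how the tracking solution works internally. As a much simpler stand-in that shows why machine assistance speeds up video annotation, the sketch below uses linear interpolation between two human-annotated keyframes, so that an annotator only draws a box every few frames and the frames in between are filled in automatically. The function name, the box format (`xmin, ymin, xmax, ymax` in pixels), and the sample values are assumptions for illustration.

```python
def interpolate_boxes(key_a, key_b):
    """Linearly interpolate boxes for every frame between two keyframes.

    Each keyframe is (frame_index, (xmin, ymin, xmax, ymax)).
    Returns a dict mapping frame index to an interpolated box.
    """
    frame_a, box_a = key_a
    frame_b, box_b = key_b
    if frame_b <= frame_a:
        raise ValueError("second keyframe must come after the first")
    result = {}
    for frame in range(frame_a, frame_b + 1):
        # t runs from 0.0 at the first keyframe to 1.0 at the second.
        t = (frame - frame_a) / (frame_b - frame_a)
        result[frame] = tuple(a + t * (b - a) for a, b in zip(box_a, box_b))
    return result

# Hypothetical keyframes: a sign annotated by hand at frames 10 and 14.
boxes = interpolate_boxes((10, (100, 100, 200, 200)), (14, (140, 120, 240, 220)))
print(boxes[12])
```

In practice an annotator would review the interpolated frames and add a new keyframe wherever the object's motion is not close to linear.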

What Appen Can Do For You