Top 6 Use Cases of AWS Glue

AWS Glue is one of the most popular ETL (extract, transform, and load) tools. It falls under the data processing and operations category.

We have previously discussed the differences between AWS Glue and other tools, as well as the limitations of AWS Glue and how to overcome them.

This time, we look at some of the top use cases of AWS Glue to help you understand how you can use it in your organization.

Top 6 AWS Glue Use Cases

How Deloitte transformed application deployment process with AWS Glue

Deloitte needs no formal introduction. It is one of the biggest professional and financial service providers across the globe.

Deloitte wanted a set of solutions that could transform its application deployment process.

This was required to record and process real-world data. The company chose AWS Service Catalog to meet these needs.

AWS Glue was part of this service catalog and was essential for advanced ETL functionality. Its serverless architecture played a crucial part in speeding up data capture and processing.

In tangible terms, this helped Deloitte deploy the full-featured miner environment within 45 minutes.

How Siemens optimized its cybersecurity environment with AWS Glue

Siemens, one of the most recognized global brands, needs no introduction. It specializes in the technology sector, specifically in industrial manufacturing.

Siemens is a German multinational company headquartered in Munich, Germany.

It wanted to counter cyber threats with a smart system that could prepare the data, analyze it, and make predictions with machine learning.

The company approached Amazon Web Services for a solution. Experts at AWS advised the company to use Amazon SageMaker, AWS Glue, and AWS Lambda.

AWS Glue played a crucial part in the process. It extracted the data and helped the data scientists categorize it easily.

It also helped them make decisions by converting the hashed data into readable data.

As a result, Siemens could go well beyond the published benchmarks for threat analysis, system performance, and more.

What Burt Corporation did to create a data intelligence and analytics platform with AWS Glue

Burt Corporation is a startup data company that specializes in data products for the new online media landscape. It has offices in New York, Berlin, and Gothenburg (Sweden).

Many big online media publishers use Burt's data intelligence and analytics platform to understand and optimize their online marketing strategies.

To meet these requirements, a platform needs effective data capture, processing, analysis, and decision-making capabilities.

Hence, the company decided to use AWS Glue, Amazon Redshift, and Amazon Athena.

Burt transformed its data analytics and intelligence platform by integrating Amazon Athena and AWS Glue.

As a result, Burt could deliver a solution that fulfilled its clients' requirements.

How AWS Glue helped Cigna streamline its operational processes

Cigna is an American healthcare services organization based in Connecticut. It needed a reporting and analytics solution that could transform its existing processes.

It also required a solution that could streamline its procurement and contract management processes.

The company approached KPI Cloud Analytics, which developed a solution to meet these needs. The key part of that solution was AWS Glue.

AWS Glue was the bridge between Amazon S3 and Amazon Redshift. It helped the company collect, process, and transform the data so that Redshift could perform further operations.

As a result, Cigna could extend the range of its data collection and processing sources. It could also optimize and streamline its workflows, which helped overhaul its operational processes.

How Claranet improved its incidence data reading capabilities with AWS Glue

Claranet is a hosting, network, and managed application services provider. It is headquartered in London and operates in European countries and Brazil.

It wanted a solution that could read millions of records and use them in Elasticsearch. The company decided to build this on AWS and zeroed in on AWS Glue and AWS Lambda.

Until then, the company had been using an on-premises architecture for ETL operations and was facing speed and performance issues.

With AWS Glue, the company replaced the existing environment and began storing and loading data on a serverless architecture.

This allowed the company to migrate the load from the on-premises solution to the cloud.

The move proved fruitful: it helped improve system efficiency, increase speed, and enhance the overall structure.

How AWS Glue helped Finaccel improve the speed and reduce costs

Finaccel is a technology company that specializes in financial services products.

These products serve financial services companies in Southeast Asia. Finaccel is headquartered in Jakarta, Indonesia.

It wanted to expand and transform its day-to-day ETL jobs. The company had tried many other solutions but could not find one that fit its needs.

Ultimately, it decided to use AWS Glue, and this paid off: the team could easily load the data, process it, and transform it for further processing in Redshift.

It also allowed the company to speed up the process and save costs.

The result was not only faster and more effective, but also a lot cheaper than the alternatives the company had tried.

For a startup like Finaccel, the cost savings were a crucial factor.

Key Takeaways:

As the examples above show, AWS Glue can transform your organization's data collection, processing, and analysis.

AWS Glue Best Practices: Building an Operationally Efficient Data Pipeline

Publication date: August 26, 2022

Data integration is a critical element in building a data lake and a data warehouse. Data integration enables data from different sources to be cleaned, harmonized, transformed, and finally loaded. In the process of building a data warehouse, most of the development efforts are required for building a data integration pipeline. Data integration is one of the most critical elements in data analytics ecosystems. An efficient and well-designed data integration pipeline is critical for making the data available and trusted amongst the analytics consumers.

This whitepaper shows you some of the considerations and best practices for building and efficiently operating your data pipeline with AWS Glue.

Are you Well-Architected?

The AWS Well-Architected Framework helps you understand the pros and cons of the decisions you make when building systems in the cloud. The six pillars of the Framework allow you to learn architectural best practices for designing and operating reliable, secure, efficient, cost-effective, and sustainable systems. Using the AWS Well-Architected Tool, available at no charge in the AWS Management Console, you can review your workloads against these best practices by answering a set of questions for each pillar.

For more expert guidance and best practices for your cloud architecture (reference architecture deployments, diagrams, and whitepapers), refer to the AWS Architecture Center.

Introduction

Data volumes and complexities are increasing at an unprecedented rate, exploding from terabytes to petabytes or even exabytes of data. Traditional on-premises based approaches for bundling a data pipeline do not work well with a cloud-based strategy, and most of the time, do not provide the elasticity and cost effectiveness of cloud native approaches.

AWS hears from customers that they want to extract more value from their data, but struggle to capture, store, and analyze all the data generated by today’s modern and digital businesses. Data is growing exponentially, coming from new sources. It is increasingly diverse, and needs to be securely accessed and analyzed by any number of applications and people.

With changing data and business needs, the focus on building a high performing, cost effective, and low maintenance data pipeline is paramount. Introduced in 2017, AWS Glue is a fully managed, serverless data integration service that allows customers to scale based on their workload, with no infrastructure to manage.

The next section discusses common best practices for building and efficiently operating your data pipeline with AWS Glue. This document is intended for advanced users, data engineers and architects.

To get the most out of this whitepaper, it’s helpful to be familiar with AWS Glue, AWS Glue DataBrew, Amazon Simple Storage Service (Amazon S3), AWS Lambda, and AWS Step Functions.

Refer to AWS Glue Best Practices: Building a Secure and Reliable Data Pipeline for best practices around security and reliability for your data pipelines with AWS Glue.

Refer to AWS Glue Best Practices: Building a Performant and Cost Optimized Data Pipeline for best practices around performance efficiency and cost optimization for your data pipelines with AWS Glue.

5 AWS Glue Use Cases and Examples That Showcase Its Power

Did you know over 5140 businesses worldwide started using AWS Glue as a big data tool in 2023?

With the rapid growth of data in the industry, businesses often deal with several challenges when handling complex processes such as data integration and analytics. This increases the demand for big data processing tools such as AWS Glue. AWS Glue is a serverless platform that makes acquiring, managing, and integrating data for analytics, machine learning, and application development easier. It streamlines all data integration processes so that you can effectively and instantly utilize your integrated data. ETL tools, such as AWS Glue, efficiently improve your data quality, which BI tools can leverage for various purposes, including analyzing customer trends, maximizing operational efficiency, boosting user experience, enhancing business performance, and more.

So, without further ado, let’s explore some of the most efficient AWS Glue examples and use cases to help you understand what AWS Glue is and how it helps businesses transform their data processing workflows and glean valuable insights into operations.

Top 5 AWS Glue Examples and Use Cases

Below are five significant AWS Glue ETL use cases that apply across organizations for various purposes:

Data Cataloging

Businesses use AWS Glue Data Catalog to eliminate the barriers between cross-functional data production teams and business-focused consumer teams and create business intelligence (BI) reports and dashboards. Domain experts can easily add data descriptions using the Data Catalog, and data analysts can easily access this metadata using BI tools. The Data Catalog essentially contains the most basic details about the data stored in several data sources, such as Amazon Simple Storage Service (Amazon S3), Amazon Relational Database Service (Amazon RDS), and Amazon Redshift, among others. Using AWS Glue for data cataloging helps businesses easily manage and organize their data for easy access while maintaining data accuracy and compliance.
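
As a rough illustration of how an analyst script might read this metadata, the sketch below summarizes a table definition in the shape returned by boto3's `glue.get_table` call. The database, table, bucket, and column names are hypothetical, and the sample response is hand-written rather than fetched from AWS.

```python
def summarize_table(response):
    """Return (name, location, [(column, type, comment), ...]) from a get_table-style response."""
    table = response["Table"]
    storage = table.get("StorageDescriptor", {})
    # Partition keys are listed separately from regular columns in the Data Catalog.
    all_columns = storage.get("Columns", []) + table.get("PartitionKeys", [])
    columns = [
        (col["Name"], col.get("Type", "unknown"), col.get("Comment", ""))
        for col in all_columns
    ]
    return table["Name"], storage.get("Location", ""), columns


# Hand-written sample in the shape of a glue.get_table response (hypothetical names).
sample_response = {
    "Table": {
        "Name": "orders",
        "StorageDescriptor": {
            "Location": "s3://example-bucket/orders/",
            "Columns": [
                {"Name": "order_id", "Type": "bigint", "Comment": "Primary key"},
                {"Name": "amount", "Type": "double"},
            ],
        },
        "PartitionKeys": [{"Name": "order_date", "Type": "string"}],
    }
}

name, location, columns = summarize_table(sample_response)
for column_name, column_type, comment in columns:
    print(f"{name}.{column_name}: {column_type} {comment}".rstrip())

# With AWS credentials configured, the same function could be fed a live response:
#   import boto3
#   response = boto3.client("glue").get_table(DatabaseName="sales_db", Name="orders")
```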

Data Lake Ingestion

By identifying the form and structure of your data, AWS Glue significantly decreases the time and effort required to generate business insights from an Amazon S3 data lake. AWS Glue automatically crawls your Amazon S3 data, determines data formats, and suggests schemas for use with other AWS analytics services. Hundreds of tables are often extracted from source databases to the data lake raw layer as part of a business project. A separate AWS Glue job for each source table is preferable to streamline operations, state management, and error handling. This approach is ideal for a limited number of tables.
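
The crawler and job-per-table pattern can be sketched as request payloads for boto3's `glue.create_crawler` and `glue.create_job` calls. This is a minimal sketch: the role ARN, bucket paths, table names, and the `--source_table` argument are assumptions made for illustration, and nothing here contacts AWS.

```python
def crawler_request(name, role_arn, database, s3_path):
    """Keyword arguments for glue.create_crawler with a single S3 target."""
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
    }


def job_requests(tables, role_arn, script_location):
    """One glue.create_job request per source table, sharing one parameterized script."""
    return [
        {
            "Name": f"ingest-{table}",
            "Role": role_arn,
            "Command": {
                "Name": "glueetl",
                "ScriptLocation": script_location,
                "PythonVersion": "3",
            },
            # Hypothetical argument: the script would read it to pick the table to load.
            "DefaultArguments": {"--source_table": table},
        }
        for table in tables
    ]


# Hypothetical values for illustration only.
ROLE_ARN = "arn:aws:iam::123456789012:role/ExampleGlueRole"
crawler = crawler_request("raw-layer-crawler", ROLE_ARN, "raw_db", "s3://example-bucket/raw/")
jobs = job_requests(["customers", "orders", "payments"], ROLE_ARN, "s3://example-bucket/scripts/ingest.py")

for job in jobs:
    print(job["Name"], job["DefaultArguments"])

# With credentials configured, each payload could be passed to boto3, for example:
#   glue = boto3.client("glue"); glue.create_crawler(**crawler); glue.create_job(**jobs[0])
```

Keeping one job per table means a failure or rerun affects only that table, at the cost of more job definitions to manage.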

Data Preparation

For advanced data transformation and operational purposes, some businesses have use cases where self-service data preparation must be integrated with a traditional corporate data pipeline. Using AWS Step Functions, they can integrate the typical AWS Glue ETL workflow with data preparation done by data analysts and scientists. Businesses prepare and clean the latest data using DataBrew, then use AWS Glue ETL jobs, orchestrated through Step Functions, for complex transformation. With more than 250 pre-built transformations, AWS Glue DataBrew can reduce data preparation time by around 80% compared to standard data preparation methods.
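
One way to picture this orchestration is a two-step Step Functions state machine that runs a DataBrew job and then a Glue job. The sketch below builds such a definition as a Python dictionary in Amazon States Language form. The job names are hypothetical, and the definition is only printed, not deployed.

```python
import json


def build_state_machine(databrew_job, glue_job):
    """Amazon States Language definition: DataBrew preparation, then a Glue ETL job."""
    return {
        "Comment": "DataBrew preparation followed by a Glue ETL job",
        "StartAt": "PrepareWithDataBrew",
        "States": {
            "PrepareWithDataBrew": {
                "Type": "Task",
                # The .sync suffix makes Step Functions wait for the job to finish.
                "Resource": "arn:aws:states:::databrew:startJobRun.sync",
                "Parameters": {"Name": databrew_job},
                "Next": "TransformWithGlue",
            },
            "TransformWithGlue": {
                "Type": "Task",
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job},
                "End": True,
            },
        },
    }


# Hypothetical job names for illustration.
definition = build_state_machine("clean-latest-sales", "transform-sales")
print(json.dumps(definition, indent=2))
```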

Data Processing

Companies must often integrate several datasets with various data ingestion patterns as part of their data processing requirements. Some of these datasets are incremental, acquired at regular intervals, and integrated with the full datasets to provide output, while others are ingested once in full, received occasionally, and always used in their entirety. Companies use AWS Glue to create the extract, transform, and load (ETL) pipelines to fulfill this requirement. When a job is rerun at a scheduled interval, Glue's job bookmarks feature lets it process only incremental data. A job bookmark comprises the states of different job elements, including sources, transformations, and targets. AWS Glue persists this state information from each job run, which limits the reprocessing of old data.
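
The idea behind bookmarks can be shown with a small conceptual sketch. This is not Glue's implementation: it simply keeps the highest timestamp seen so far and skips anything at or below it on the next run. File names and timestamps are made up.

```python
def run_incremental(available_files, bookmark):
    """Process only files newer than the bookmark; return (processed, new_bookmark).

    available_files maps file name -> last-modified timestamp.
    bookmark is the highest timestamp seen by the previous run, or None on the first run.
    """
    new_files = sorted(
        name
        for name, modified in available_files.items()
        if bookmark is None or modified > bookmark
    )
    latest = max(available_files.values(), default=bookmark)
    new_bookmark = latest if bookmark is None else max(bookmark, latest)
    return new_files, new_bookmark


# First run: no bookmark yet, so every file is processed.
first_processed, bookmark = run_incremental({"a.csv": 1, "b.csv": 2}, None)

# Second run: only the file that arrived after the stored bookmark is processed.
second_processed, bookmark = run_incremental({"a.csv": 1, "b.csv": 2, "c.csv": 3}, bookmark)

print(first_processed, second_processed, bookmark)

# In an actual Glue job, bookmarks are enabled with the job argument
#   --job-bookmark-option job-bookmark-enable
# and each source in the script is given a transformation_ctx so its state can be tracked.
```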

Data Archiving

Enterprises must determine how to manage the exponential growth of data in a way that is both efficient and cost-effective. Business leaders seek ways to manage the data and techniques to extract insights from the data so their businesses can achieve results that can be put into action. Organizations can use CloudArchive Direct to archive the essential data to Amazon S3 and AWS analytics services like AWS Glue, Amazon Athena, etc., to analyze and deliver insights on their data. These platforms give businesses the tools they need to quickly access their dark data and use it to generate valuable business results.

Now that you know how to leverage AWS Glue for various scenarios, it's time for you to use it in real-world projects. Working on projects is the best way to understand the real-world AWS Glue use cases and unleash its full potential. Getting your hands dirty on various data engineering projects, from data ingestion and cleansing to data warehousing and analytics, will help you better understand the benefits of using AWS Glue.

Check out ProjectPro repository, which offers over 250 industry-level Big Data and Data Science projects that leverage AWS Glue and many other modern tools and technologies.

About the Author

Daivi is a highly skilled Technical Content Analyst with over a year of experience at ProjectPro. She is passionate about exploring various technology domains and enjoys staying up-to-date with industry trends and developments. Daivi is known for her excellent research skills and ability to distill

AWS Glue: Overview, Features, Architecture, Use Cases & Pricing

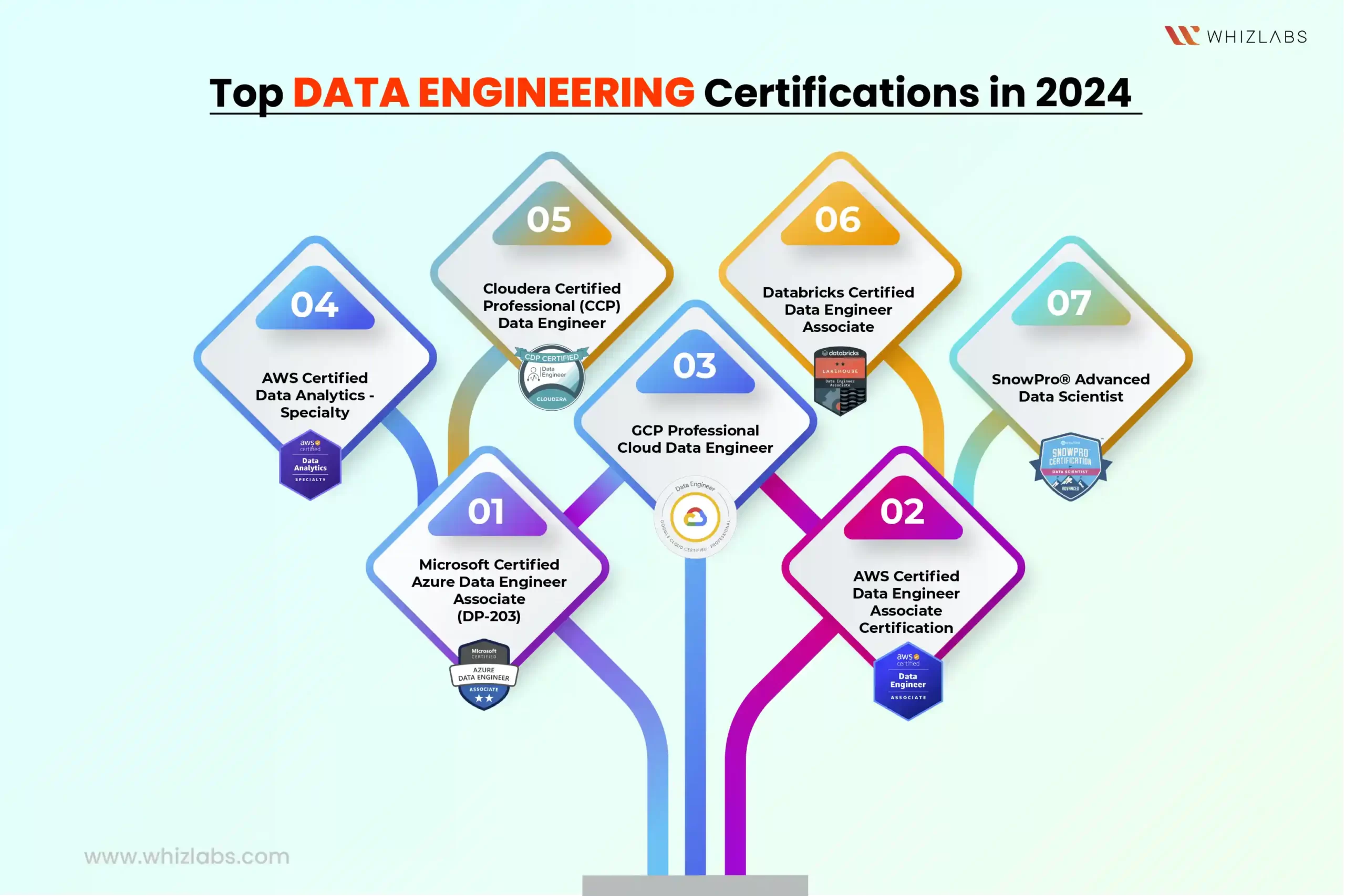

March 26, 2024 by Ayush Jain

AWS Glue is a fully managed ETL (extract, transform, and load) service that makes categorising your data, cleaning it, enriching it, and reliably moving it between various data stores and data streams simple and cost-effective.

What is AWS Glue?

AWS Glue is a serverless data integration service that makes finding, preparing, and combining data for analytics, machine learning, and application development simple. AWS Glue delivers all of the capabilities required for data integration, allowing you to begin analysing and putting your data to use in minutes rather than months.

Data integration is the procedure of preparing and merging data for analytics, machine learning, and application development. It entails a variety of tasks, including data discovery and extraction from a variety of sources, data enrichment, cleansing, normalisation, and merging, and data loading and organisation in databases, data warehouses, and data lakes. Different types of users and products are frequently used to complete these tasks.

AWS Glue offers both visual and code-based interfaces to help with data integration. Users can rapidly search and retrieve data using the AWS Glue Data Catalog. Data engineers and ETL (extract, transform, and load) developers may graphically create, run, and monitor ETL workflows with a few clicks in AWS Glue Studio.

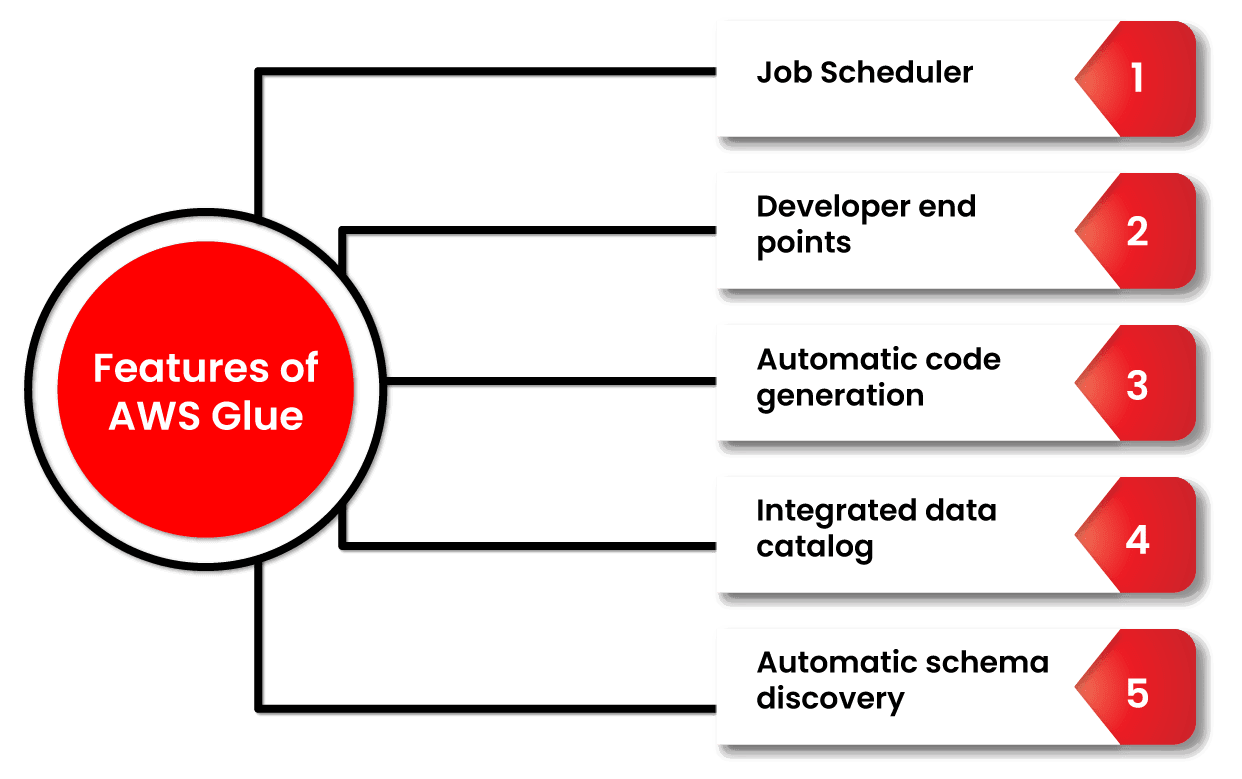

Features of AWS Glue

- Automatic schema discovery. Developers can use Glue to automate crawlers that collect schema-related data and store it in a data catalogue that can later be used to manage jobs.

- Job scheduler. Glue jobs can be scheduled and called on a flexible basis, using event-based triggers or on-demand. Several jobs can be initiated at the same time, and users can specify job dependencies.

- Developer endpoints. Developers can use them to debug Glue as well as develop custom readers, writers, and transformations, which can subsequently be imported into custom libraries.

- Automatic code generation. The ETL process automatically generates code, and all that is necessary is a location/path for the data to be saved. The code is written in Scala or Python.

- Integrated data catalog. Acts as a single metadata store for data from disparate sources in the AWS pipeline. There is one Data Catalog per AWS account in each Region.
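
To make the job scheduler feature more concrete, the sketch below builds two request payloads for boto3's `glue.create_trigger`: a time-based trigger, and a conditional trigger that expresses a job dependency. The trigger names, job names, and schedule are hypothetical, and the payloads are only constructed, not sent.

```python
def scheduled_trigger(name, job_name, cron_expression):
    """Trigger that starts a job on a cron schedule (AWS six-field cron syntax)."""
    return {
        "Name": name,
        "Type": "SCHEDULED",
        "Schedule": f"cron({cron_expression})",
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


def dependency_trigger(name, upstream_job, downstream_job):
    """Trigger that starts downstream_job only after upstream_job succeeds."""
    return {
        "Name": name,
        "Type": "CONDITIONAL",
        "Predicate": {
            "Conditions": [
                {
                    "LogicalOperator": "EQUALS",
                    "JobName": upstream_job,
                    "State": "SUCCEEDED",
                }
            ]
        },
        "Actions": [{"JobName": downstream_job}],
        "StartOnCreation": True,
    }


# Hypothetical names; 0 2 * * ? * means 02:00 UTC every day.
nightly = scheduled_trigger("nightly-ingest", "ingest-orders", "0 2 * * ? *")
chained = dependency_trigger("after-ingest", "ingest-orders", "aggregate-orders")

print(nightly["Schedule"])
print(chained["Predicate"]["Conditions"][0])

# With credentials configured: boto3.client("glue").create_trigger(**nightly)
```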

AWS Glue Components

- AWS Glue Data Catalog: The Glue Data Catalog is where persistent metadata is saved. It holds table definitions, job definitions, and other control information needed to manage your Glue environment. AWS provides a single Glue Data Catalog for each account in each Region.

- Classifier: A classifier determines the schema of your data. AWS Glue has classifiers for common file types such as CSV, JSON, Avro, and XML, as well as for common relational database management systems.

- Connection: The AWS Glue Connection object is a Data Catalog object that contains the properties required to connect to a particular data store.

- Crawler: A crawler can scan multiple data stores in a single run. It uses a prioritised list of classifiers to determine the schema for your data and then creates metadata tables in the Glue Data Catalog.

- Database: A database is a set of associated Data Catalog table definitions organised into a logical group.

- Data Store: A data store is a repository where your data is stored persistently. Two examples are relational databases and Amazon S3 buckets.

- Data Source: A data source is a data store that is used as input to a transformation process.

- Data Target: A data target is a data store to which a job writes the transformed data.

- Transform: A transform is the logic in the code that is used to change the format of your data.

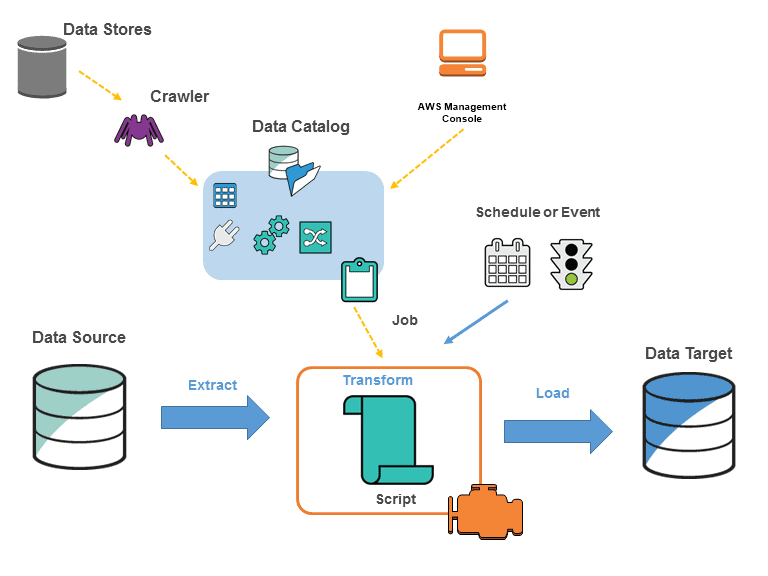

AWS Glue Architecture

You define jobs in AWS Glue to extract, transform, and load (ETL) data from a data source to a data target. The steps are as follows:

- To begin, you must pick which data source you will use.

- If you’re using a data store source, you’ll need to create a crawler to populate the AWS Glue Data Catalog with metadata table definitions.

- When you point your crawler at a data store, it adds metadata to the Data Catalog.

- Alternatively, if you’re using streaming sources, you’ll need to explicitly define Data Catalog tables and data stream properties.

- Once the data has been catalogued, it is immediately searchable, queryable, and ready for ETL.

- AWS Glue can then generate a script to transform the data. You can also provide the script yourself using the Glue console or API. (The script runs in an Apache Spark environment in AWS Glue.)

- After you’ve created the script, you can run the job on demand or schedule it to start when a trigger fires. The trigger can be a time-based schedule or an event.

- While the job is running, the script extracts data from the data source, transforms it, and loads it into the data target, as seen in the above graphic. This is how an ETL (extract, transform, load) job completes in AWS Glue.

AWS Glue Use Cases

- Use an Amazon S3 data lake to run queries: Without relocating your data, you may utilise AWS Glue to make it available for analytics.

- Analyze your data warehouse’s log data: To transform, flatten, and enrich data from source to target, write ETL scripts.

- Create an event-driven ETL pipeline: You can perform an ETL job as soon as new data is available in Amazon S3 by launching AWS Glue ETL jobs with an AWS Lambda function.

- A unique view of your data from many sources: You can instantly search and discover all of your datasets using AWS Glue Data Catalog, and save all essential metadata in one central repository.
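
The event-driven pipeline mentioned above can be sketched as the body of a Lambda function that starts a Glue job for each new S3 object. The job name, the `--input_path` argument, and the bucket are hypothetical. A small fake client stands in for `boto3.client("glue")` so the sketch runs without AWS access; only the `start_job_run` call mirrors the boto3 API.

```python
from urllib.parse import unquote_plus


def start_glue_job_for_new_objects(event, glue_client, job_name):
    """Start one Glue job run per S3 object in the event; return the run IDs."""
    run_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        response = glue_client.start_job_run(
            JobName=job_name,
            Arguments={"--input_path": f"s3://{bucket}/{key}"},
        )
        run_ids.append(response["JobRunId"])
    return run_ids


class FakeGlueClient:
    """Stand-in for boto3.client("glue") so the sketch runs locally."""

    def __init__(self):
        self.calls = []

    def start_job_run(self, JobName, Arguments):
        self.calls.append((JobName, Arguments))
        return {"JobRunId": f"jr_{len(self.calls)}"}


sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"}, "object": {"key": "raw/orders.csv"}}}
    ]
}
fake_client = FakeGlueClient()
run_ids = start_glue_job_for_new_objects(sample_event, fake_client, "ingest-orders")
print(run_ids)

# Inside Lambda, the handler could look like:
#   def lambda_handler(event, context):
#       return start_glue_job_for_new_objects(event, boto3.client("glue"), "ingest-orders")
```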

Advantages of AWS Glue

- Glue is a serverless data integration solution that eliminates the requirement for infrastructure creation and management.

- It provides easy-to-use tools for creating and tracking job activities that are triggered by schedules and events , as well as on-demand.

- It’s a budget friendly option. You only have to pay for the resources you use during the job execution process.

- Glue can generate ETL pipeline code in Scala or Python based on your data sources and destinations.

- Multiple organisations within the corporation can utilise AWS Glue to collaborate on various data integration initiatives. The amount of time it takes to analyse the data is reduced as a result of this.

Monitoring

- Using AWS CloudTrail, you can record the actions taken by a user, role, or AWS service.

- When an event matches a rule, you can use Amazon CloudWatch Events with AWS Glue to automate the response.

- You can monitor, store, and access log files from many sources with Amazon CloudWatch Logs.

- A tag can be assigned to a crawler, job, trigger, development endpoint, or machine learning transform.

- Using the Apache Spark web UI, you can monitor and troubleshoot ETL operations and Spark applications.

- If you enable continuous logging, you can view real-time logs on the Amazon CloudWatch dashboard.

AWS Glue Pricing

- For ETL jobs, you are charged an hourly rate based on the number of DPUs (data processing units) used to run the job.

- For crawlers, you are charged an hourly rate based on the number of DPUs used to run the crawler.

- For Data Catalog storage, you are charged per month if you store more than a million objects.

- For Data Catalog requests, you are charged per month if you exceed a million requests in a month.
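
The DPU-hour arithmetic can be worked through with a short sketch. The rates below are placeholder assumptions, not quoted prices; check the current AWS Glue pricing page for your Region. The sketch also ignores billing granularity and minimum durations.

```python
def job_cost(dpus, runtime_minutes, price_per_dpu_hour):
    """Cost of one job or crawler run: DPUs x hours x hourly rate per DPU."""
    dpu_hours = dpus * runtime_minutes / 60
    return round(dpu_hours * price_per_dpu_hour, 4)


def catalog_storage_cost(objects_stored, price_per_100k_objects, free_objects=1_000_000):
    """Monthly Data Catalog storage cost; only objects above the free allowance are billed."""
    billable = max(0, objects_stored - free_objects)
    return round(billable / 100_000 * price_per_100k_objects, 4)


# Placeholder rates for illustration only.
ASSUMED_DPU_HOUR_RATE = 0.44
ASSUMED_RATE_PER_100K_OBJECTS = 1.00

# A 10-DPU job running for 15 minutes uses 2.5 DPU-hours.
example_job_cost = job_cost(10, 15, ASSUMED_DPU_HOUR_RATE)

# 1.5 million catalog objects leaves 500,000 objects above the free first million.
example_storage_cost = catalog_storage_cost(1_500_000, ASSUMED_RATE_PER_100K_OBJECTS)

print(example_job_cost, example_storage_cost)
```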

A Complete Guide to AWS Glue

AWS Glue is a fully managed, pay-as-you-go, extract-transform-load (ETL) service that makes it easy for data engineers, data analysts and developers to efficiently process, convert, and analyze vast amounts of complex datasets from numerous sources. In this guide we will cover the basics of AWS Glue, its components and features, as well as tips & tricks for making the most out of it.

What is AWS Glue?

AWS Glue is an Extract-Transform-Load (ETL) service from Amazon Web Services that enables organizations to effectively analyze and transform datasets. AWS Glue uses several components, including crawlers, data pipelines, and triggers, to perform ETL tasks. It extracts data from various sources and stores it in a secure data warehouse so that it can be queried, analyzed and transformed into meaningful information quickly and easily.

Components of AWS Glue

AWS Glue consists of a number of components that work together to provide an efficient and reliable ETL service. These components include the following: crawlers, data pipelines, triggers and a data catalog. Crawlers are used to discover data sources and extract their schema so that it can be stored as metadata in the data catalog. Data pipelines then move the extracted raw data from its source format into formats optimized for querying and analysis. Finally, triggers enable automated execution of ETL tasks whenever specific conditions are met.

Features of AWS Glue

AWS Glue comes with a variety of features that make it an ideal choice for data integration. This includes cloud-native compatibility, code generation (Python or Scala scripts that run on Apache Spark), rich direct connectivity to popular data sources, automation options and much more. Additionally, AWS Glue makes it easy to focus on managing your data integration tasks without the need for manual coding or configuration of traditional ETL tools. This results in greater efficiency and faster time-to-value.

Benefits and Limitations of AWS Glue

One of the biggest benefits AWS Glue provides is a unified interface and easy deployment of data pipelines. With its variety of features and options, it can handle most of your data integration needs while offering an efficient way to connect all your cloud-native applications. AWS Glue also automates much of the tedious work involved in managing your ETL processes, allowing you to focus on refining your data for better analytics and insights. However, it does have some limitations; it’s not as comprehensive as traditional ETL tools or other big data processing solutions, so it may not be suitable for more complex tasks.

Scalability

AWS Glue can automatically scale up or down depending on the size of your data processing needs.

Cost-effectiveness

You only pay for the data processing resources you use, which can help reduce costs.

Automation

AWS Glue automates many of the data transformation and processing tasks, saving time and effort.

Integration

AWS Glue can integrate with other AWS services like Amazon S3, Amazon Redshift, and Amazon RDS.

Customizability

AWS Glue is highly customizable and can be configured to meet specific data processing and transformation needs.

Serverless

AWS Glue is a serverless service, which means you don’t need to manage any infrastructure, allowing you to focus on your data processing tasks.

Bonus: The Best Practices for Using AWS Glue

When using AWS Glue for data integration, it’s important to follow certain best practices.

Consider the following when creating and managing data pipelines:

- Leverage existing schemas when possible.

- Use version control and log files to maintain history.

- Ensure good test coverage of your data transformations with automated unit tests.

- Take advantage of AWS CloudFormation templates for easy replacement of resources.

- Incorporate monitoring tools like Amazon CloudWatch and Datadog for tracking resource usage and job performance.

Following these practices can help ensure that your ETL processes are as efficient and effective as possible.
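
As one illustration of the unit-testing practice, the sketch below keeps a transformation as a plain Python function so it can be tested without a Glue or Spark runtime. The `normalize_record` function and its fields are hypothetical, not part of any Glue API.

```python
import unittest


def normalize_record(record):
    """Trim and lowercase the email, and convert the amount to a float."""
    return {
        "email": record["email"].strip().lower(),
        "amount": float(record["amount"]),
    }


class NormalizeRecordTest(unittest.TestCase):
    def test_cleans_email_and_amount(self):
        cleaned = normalize_record({"email": "  Ana@Example.COM ", "amount": "12.50"})
        self.assertEqual(cleaned, {"email": "ana@example.com", "amount": 12.5})

    def test_rejects_non_numeric_amount(self):
        with self.assertRaises(ValueError):
            normalize_record({"email": "a@b.c", "amount": "n/a"})


# Run the tests explicitly so the sketch also works when pasted into a notebook.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeRecordTest)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

Keeping record-level logic in functions like this lets the ETL script stay thin, so most of the behavior is covered by fast local tests.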

Real-world Case Studies: How Organizations Solved Data Integration Challenges with AWS ETL Tools

Introduction

In today's data-driven world, organizations are constantly faced with the challenge of integrating vast amounts of data from various sources. Data integration plays a crucial role in enabling businesses to make informed decisions and gain valuable insights. However, achieving seamless data integration can be a complex and time-consuming process. That's where AWS ETL tools come into play. With their cost-effective, scalable, and streamlined solutions, these tools have proven to be game-changers for organizations facing data integration challenges. In this blog post, we will explore real-world case studies that demonstrate how organizations have successfully solved their data integration challenges using AWS ETL tools. So, let's dive in and discover the power of these tools in overcoming data integration hurdles.

Overview of AWS ETL Tools

Introduction to AWS ETL tools.

In today's data-driven world, organizations are faced with the challenge of integrating and analyzing vast amounts of data from various sources. This is where Extract, Transform, Load (ETL) tools come into play. ETL tools are essential for data integration: they extract data from multiple sources, transform it into a consistent format, and load it into a target system or database.

Amazon Web Services (AWS) offers a range of powerful ETL tools that help organizations tackle their data integration challenges effectively. These tools provide scalable and cost-effective solutions for managing and processing large volumes of data. Let's take a closer look at some of the key AWS ETL tools.

AWS Glue: Simplifying Data Integration

AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it easy to prepare and load data for analytics. It provides a serverless environment for running ETL jobs on large data sets stored in Amazon S3 or in other data stores such as relational databases. With AWS Glue, you can discover, catalog, and transform your data quickly and easily.

One of the key features of AWS Glue is its ability to automatically generate ETL code based on your source and target schemas. This eliminates the need for manual coding and reduces development time significantly. Additionally, AWS Glue supports various data formats such as CSV, JSON, Parquet, and more, making it compatible with different types of data sources.

AWS Data Pipeline: Orchestrating Data Workflows

AWS Data Pipeline is another powerful tool offered by AWS for orchestrating and automating the movement and transformation of data between different services. It allows you to define complex workflows using a visual interface or JSON templates.

With AWS Data Pipeline, you can schedule regular data transfers between various AWS services like Amazon S3, Amazon RDS, Amazon Redshift, etc., without writing any custom code. It also provides built-in fault tolerance and error handling, ensuring the reliability of your data workflows.

AWS Database Migration Service: Seamlessly Migrating Data

Migrating data from legacy systems to the cloud can be a complex and time-consuming process. AWS Database Migration Service simplifies this task by providing a fully managed service for migrating databases to AWS quickly and securely.

Whether you are migrating from on-premises databases or other cloud platforms, AWS Database Migration Service supports a wide range of source and target databases, including Oracle, MySQL, PostgreSQL, Amazon Aurora, and more. It handles the initial data load and the ongoing replication of changes, and it can be paired with the AWS Schema Conversion Tool when the source and target engines differ, which helps minimize downtime during the migration process.

Tapdata: A Modern Data Integration Solution

While AWS ETL tools offer robust capabilities for data integration, there are also third-party solutions that complement these tools and provide additional features. One such solution is Tapdata.

Tapdata is a modern data integration platform that offers real-time data capture and synchronization capabilities. It allows organizations to capture data from various sources in real-time and keep it synchronized across different systems. This ensures that businesses have access to up-to-date information for their analytics and decision-making processes.

One of the key advantages of Tapdata is its flexible and adaptive schema. It can handle structured, semi-structured, and unstructured data efficiently, making it suitable for diverse use cases. Additionally, Tapdata offers a low code/no code pipeline development and transformation environment, enabling users to build complex data pipelines without extensive coding knowledge.

Tapdata is trusted by industry leaders across various sectors such as e-commerce, finance, healthcare, and more. It offers a free-forever tier for users to get started with basic data integration needs. By combining the power of Tapdata with AWS ETL tools like Glue or Data Pipeline, organizations can enhance their data integration capabilities significantly.

Common Data Integration Challenges

Overview of data integration challenges.

Data integration is a critical process for organizations that need to combine and unify data from various sources to gain valuable insights and make informed business decisions. However, this process comes with its own set of challenges that organizations must overcome to ensure successful data integration. In this section, we will discuss some common data integration challenges faced by organizations and explore potential solutions.

One of the most prevalent challenges in data integration is dealing with data silos. Data silos occur when different departments or systems within an organization store their data in separate repositories, making it difficult to access and integrate the information effectively. This can lead to fragmented insights and hinder the ability to make accurate decisions based on a holistic view of the data.

To overcome this challenge, organizations can implement a centralized data storage solution that consolidates all relevant data into a single repository. AWS offers various tools like AWS Glue and AWS Data Pipeline that enable seamless extraction, transformation, and loading (ETL) processes to bring together disparate datasets from different sources. By breaking down these silos and creating a unified view of the data, organizations can enhance collaboration across departments and improve decision-making capabilities.

Disparate Data Formats

Another common challenge in data integration is dealing with disparate data formats. Different systems often use different formats for storing and representing data, making it challenging to merge them seamlessly. For example, one system may use CSV files while another uses JSON or XML files.

AWS provides powerful ETL tools like AWS Glue that support multiple file formats and provide built-in connectors for popular data stores such as Amazon Redshift, Amazon RDS, and Amazon S3. These tools can automatically detect the schema of different datasets and transform them into a consistent format for easy integration. Additionally, AWS Glue supports custom transformations using Python or Scala code, allowing organizations to handle complex data format conversions efficiently.
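To make the idea of a consistent format concrete, here is a minimal sketch in plain Python using only the standard library. It is not AWS Glue code: the two sample exports, the field names, and the target schema are all hypothetical and chosen only for illustration.

```python
import csv
import io
import json

# Hypothetical sample inputs: the same kind of record exported by two systems.
CSV_EXPORT = "customer_id,full_name,signup_date\n101,Ada Lovelace,2023-01-15\n"
JSON_EXPORT = '[{"id": 102, "name": "Alan Turing", "signupDate": "2023-02-01"}]'


def from_csv(text):
    """Read CSV rows and map them onto the shared schema."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {
            "customer_id": int(row["customer_id"]),
            "name": row["full_name"],
            "signup_date": row["signup_date"],
        }


def from_json(text):
    """Read JSON objects and map them onto the shared schema."""
    for obj in json.loads(text):
        yield {
            "customer_id": int(obj["id"]),
            "name": obj["name"],
            "signup_date": obj["signupDate"],
        }


def unify(csv_text, json_text):
    """Return all records in one consistent format, sorted by customer_id."""
    records = list(from_csv(csv_text)) + list(from_json(json_text))
    return sorted(records, key=lambda r: r["customer_id"])


unified = unify(CSV_EXPORT, JSON_EXPORT)
print(json.dumps(unified, indent=2))
```

Each source gets its own small adapter, and everything downstream only ever sees the shared schema. A managed ETL service applies the same principle at a much larger scale.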

Complex Data Transformation Requirements

Organizations often face complex data transformation requirements when integrating data from multiple sources. Data may need to be cleansed, standardized, or enriched before it can be effectively integrated and analyzed. This process can involve tasks such as deduplication, data validation, and data enrichment.

AWS Glue provides a visual interface for creating ETL jobs that simplify the process of transforming and preparing data for integration. It offers a wide range of built-in transformations and functions that organizations can leverage to clean, validate, and enrich their data. Additionally, AWS Glue supports serverless execution, allowing organizations to scale their data integration processes based on demand without worrying about infrastructure management.
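The cleansing tasks mentioned above (standardization, validation, deduplication, and enrichment) can be sketched in a few lines of plain Python. This is an illustration of the steps themselves, not of AWS Glue's built-in transforms; the record layout, the email pattern, and the country lookup table are assumptions made for the example.

```python
import re

# Deliberately simple pattern, good enough for a sketch.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical lookup table used for enrichment.
COUNTRY_NAMES = {"US": "United States", "DE": "Germany"}


def standardize(record):
    """Trim whitespace and normalize case so equal values compare equal."""
    return {
        "email": record["email"].strip().lower(),
        "country": record["country"].strip().upper(),
    }


def is_valid(record):
    """Validation: keep only records with a plausible email address."""
    return bool(EMAIL_RE.match(record["email"]))


def enrich(record):
    """Enrichment: add a readable country name when the code is known."""
    name = COUNTRY_NAMES.get(record["country"], "Unknown")
    return {**record, "country_name": name}


def clean(records):
    """Standardize, validate, deduplicate by email, then enrich."""
    seen = set()
    result = []
    for record in map(standardize, records):
        if not is_valid(record) or record["email"] in seen:
            continue  # drop invalid rows and duplicates
        seen.add(record["email"])
        result.append(enrich(record))
    return result


raw = [
    {"email": " Ada@Example.com ", "country": "us"},
    {"email": "ada@example.com", "country": "US"},  # duplicate once standardized
    {"email": "not-an-email", "country": "DE"},     # fails validation
    {"email": "kurt@example.org", "country": "de"},
]
cleaned = clean(raw)
print(cleaned)
```

Standardizing before deduplicating matters here: the first two rows only turn out to be duplicates once case and whitespace are normalized.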

Data Security and Compliance

Data security and compliance are critical considerations in any data integration project. Organizations must ensure that sensitive information is protected throughout the integration process and comply with relevant regulations such as GDPR or HIPAA.

AWS provides robust security features and compliance certifications to address these concerns. AWS Glue supports encryption at rest and in transit to protect sensitive data during storage and transfer. Additionally, AWS services like AWS Identity and Access Management (IAM) enable organizations to manage user access control effectively.
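As an illustration of access control with IAM, the sketch below builds a least-privilege policy document that lets a user start and inspect one specific Glue job. The region, account ID, and job name are hypothetical placeholders, and the policy is a starting point under those assumptions rather than a reviewed security configuration.

```python
import json

# Hypothetical identifiers, for illustration only.
REGION = "us-east-1"
ACCOUNT_ID = "123456789012"
JOB_NAME = "example-etl-job"

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "RunOneGlueJob",
            "Effect": "Allow",
            # Only the two actions needed to start a run and check its status.
            "Action": ["glue:StartJobRun", "glue:GetJobRun"],
            # Scope the permission to a single job instead of "*".
            "Resource": f"arn:aws:glue:{REGION}:{ACCOUNT_ID}:job/{JOB_NAME}",
        }
    ],
}

policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Scoping `Resource` to a single job ARN, rather than a wildcard, is what keeps a policy like this narrow.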

Case Study 1: How Company X Leveraged AWS Glue for Data Integration

Overview of Company X's data integration challenge.

Company X, a leading global organization in the retail industry, faced significant challenges when it came to data integration. With operations spread across multiple regions and numerous systems generating vast amounts of data, they struggled to consolidate and harmonize information from various sources. This resulted in data silos, inconsistencies, and poor data quality.

The primary goal for Company X was to streamline their data integration processes and improve the overall quality of their data. They needed a solution that could automate the transformation of raw data into a usable format while ensuring accuracy and reliability.

Solution: Leveraging AWS Glue

To address their data integration challenges, Company X turned to AWS Glue, a fully managed extract, transform, and load (ETL) service offered by Amazon Web Services (AWS). AWS Glue provided them with a scalable and cost-effective solution for automating their data transformation processes.

By leveraging AWS Glue's powerful capabilities, Company X was able to build an end-to-end ETL pipeline that extracted data from various sources, transformed it according to predefined business rules, and loaded it into a centralized data warehouse. The service offered pre-built connectors for popular databases and file formats, making it easy for Company X to integrate their diverse range of systems.

One key advantage of using AWS Glue was its ability to automatically discover the schema of the source data. This eliminated the need for manual intervention in defining the structure of each dataset. Additionally, AWS Glue provided visual tools for creating and managing ETL jobs, simplifying the development process for Company X's technical team.
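To give a feel for what automatic schema discovery involves, here is a deliberately simplified sketch that infers column types from sample records. It is not how AWS Glue implements discovery internally; the type names and the widening rules are assumptions chosen for the example.

```python
def infer_type(value):
    """Map a Python value to a simple column type name."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    return "string"


def infer_schema(records):
    """Infer one type per field, widening when samples disagree."""
    schema = {}
    for record in records:
        for field, value in record.items():
            if value is None:
                continue  # missing values carry no type information
            observed = infer_type(value)
            previous = schema.get(field)
            if previous is None or previous == observed:
                schema[field] = observed
            elif {previous, observed} == {"bigint", "double"}:
                schema[field] = "double"  # integers widen to doubles
            else:
                schema[field] = "string"  # fall back to the most general type
    return schema


# Hypothetical sample records from a source system.
samples = [
    {"order_id": 1, "total": 10, "note": None},
    {"order_id": 2, "total": 12.5, "note": "gift"},
    {"order_id": "A3", "total": 7.0},
]
schema = infer_schema(samples)
print(schema)
```

The widening rule is the interesting part: when samples disagree, the inferred type falls back to one that can represent every observed value.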

Another significant benefit of using AWS Glue was its serverless architecture. This meant that Company X did not have to worry about provisioning or managing infrastructure resources. The service scaled resources up or down based on demand, maintaining performance without additional operational effort.

Results and Benefits

The implementation of AWS Glue brought about several positive outcomes and benefits for Company X. Firstly, the automation of data transformation processes significantly improved efficiency. What used to take days or even weeks to complete manually now happened in a matter of hours. This allowed Company X to make faster and more informed business decisions based on up-to-date data.

Furthermore, by eliminating manual intervention, AWS Glue reduced the risk of human errors and ensured data accuracy. The predefined business rules applied during the transformation process standardized the data across different sources, resulting in improved data quality.

In terms of cost savings, AWS Glue proved to be highly cost-effective for Company X. The pay-as-you-go pricing model meant that they only paid for the resources they consumed during ETL jobs. Compared to building and maintaining a custom ETL solution or using traditional ETL tools, AWS Glue offered significant cost advantages.

Additionally, AWS Glue's scalability allowed Company X to handle increasing volumes of data without any performance degradation. As their business grew and new systems were added, AWS Glue seamlessly accommodated the additional workload without requiring any manual intervention or infrastructure upgrades.

In summary, by leveraging AWS Glue for their data integration needs, Company X successfully overcame their challenges related to consolidating and improving the quality of their data. The automation provided by AWS Glue not only enhanced efficiency but also ensured accuracy and reliability. With significant cost savings and scalability benefits, AWS Glue emerged as an ideal solution for Company X's data integration requirements.

Overall, this case study demonstrates how organizations can leverage AWS ETL tools like AWS Glue to solve complex data integration challenges effectively. By adopting such solutions, businesses can streamline their processes, improve data quality, and drive better decision-making capabilities while optimizing costs and ensuring scalability.

Case Study 2: Overcoming Data Silos with AWS Data Pipeline

Overview of data silos challenge.

Data silos are a common challenge faced by organizations when it comes to data integration. These silos occur when data is stored in separate systems or databases that are not easily accessible or compatible with each other. This can lead to inefficiencies, duplication of efforts, and limited visibility into the organization's data.

For example, Company Y was struggling with data silos as their customer information was scattered across multiple systems and databases. The marketing team had their own CRM system, while the sales team used a different database to store customer details. This fragmentation made it difficult for the organization to have a holistic view of their customers and hindered effective decision-making.

To overcome this challenge, Company Y needed a solution that could seamlessly move and transform data across different systems and databases, breaking down the barriers created by data silos.

Solution: Utilizing AWS Data Pipeline

Company Y turned to AWS Data Pipeline to address their data integration challenges. AWS Data Pipeline is a web service that allows organizations to orchestrate the movement and transformation of data between different AWS services and on-premises data sources.

By leveraging AWS Data Pipeline, Company Y was able to create workflows that automated the movement of customer data from various sources into a centralized repository. This allowed them to consolidate their customer information and eliminate the need for manual intervention in transferring data between systems.

One key advantage of using AWS Data Pipeline was its scalability and flexibility. As Company Y's business requirements evolved, they were able to easily modify their workflows within AWS Data Pipeline without disrupting existing processes. This adaptability ensured that they could keep up with changing demands and continue improving their data integration capabilities.

The implementation of AWS Data Pipeline brought about several positive outcomes for Company Y:

Improved Data Accessibility: With all customer information consolidated in one central repository, employees across different departments had easy access to accurate and up-to-date customer data. This enhanced data accessibility enabled better collaboration and decision-making within the organization.

Reduced Data Duplication: Prior to implementing AWS Data Pipeline, Company Y had multiple instances of customer data stored in different systems. This duplication not only wasted storage space but also increased the risk of inconsistencies and errors. By centralizing their data using AWS Data Pipeline, Company Y was able to eliminate data duplication and ensure a single source of truth for customer information.

Enhanced Data Integration Across Systems: AWS Data Pipeline facilitated seamless integration between various systems and databases within Company Y's infrastructure. This allowed them to break down the barriers created by data silos and establish a unified view of their customers. As a result, they were able to gain valuable insights into customer behavior, preferences, and trends, enabling more targeted marketing campaigns and personalized customer experiences.
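The single source of truth described above comes down to merging records that refer to the same customer. The sketch below shows one possible merge rule in plain Python: match on a case-insensitive email, prefer the most recently updated values, and fill gaps from the older record. The two source lists, the field names, and the rule itself are assumptions for illustration, not details from Company Y's implementation.

```python
from datetime import date

# Hypothetical extracts from the marketing CRM and the sales database.
crm = [
    {"email": "ada@example.com", "name": "Ada Lovelace", "phone": None,
     "updated": date(2023, 3, 1)},
]
sales = [
    {"email": "ADA@example.com", "name": "A. Lovelace", "phone": "555-0100",
     "updated": date(2023, 1, 10)},
    {"email": "alan@example.com", "name": "Alan Turing", "phone": None,
     "updated": date(2023, 2, 2)},
]


def consolidate(*sources):
    """Merge records by email; newer values win, older values fill gaps."""
    merged = {}
    for source in sources:
        for record in source:
            key = record["email"].lower()
            current = merged.get(key)
            if current is None:
                merged[key] = {**record, "email": key}
                continue
            if record["updated"] > current["updated"]:
                newer, older = record, current
            else:
                newer, older = current, record
            combined = {
                field: newer[field] if newer[field] is not None else older[field]
                for field in newer
            }
            combined["email"] = key
            merged[key] = combined
    return merged


customers = consolidate(crm, sales)
print(customers["ada@example.com"])
```

Here the CRM record is newer, so its name is kept, while the phone number that only the sales database had is carried over.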

Case Study 3: Migrating Legacy Data with AWS Database Migration Service

Overview of legacy data migration challenge.

Migrating legacy data from on-premises databases to the cloud can be a complex and challenging task for organizations. In this case study, the organization needed to migrate large volumes of legacy data to the AWS cloud. They had accumulated a vast amount of data over the years, stored in various on-premises databases. With a growing need for scalability, improved accessibility, and reduced maintenance costs, they decided to migrate that legacy data to AWS.

The main challenge they encountered was the sheer volume of data that needed to be migrated. It was crucial for them to ensure minimal downtime during the migration process and maintain data integrity throughout. They needed a reliable solution that could handle the migration efficiently while minimizing any potential disruptions to their operations.

Solution: Leveraging AWS Database Migration Service

To address this challenge, the organization turned to AWS Database Migration Service (DMS). AWS DMS is a fully managed service that enables seamless and secure migration of databases to AWS with minimal downtime. It supports both homogeneous and heterogeneous migrations, making it an ideal choice for organizations with diverse database environments.

The organization leveraged AWS DMS to migrate their legacy data from on-premises databases to the AWS cloud. They took advantage of features such as continuous replication of ongoing changes, along with AWS schema conversion tooling for moving between different database engines. This allowed them to migrate their data efficiently while keeping the source and target schemas compatible.
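An AWS DMS replication task is told which tables to migrate through a JSON table-mapping document made up of selection rules. The sketch below builds such a document in Python. The schema and table names are hypothetical, and the output is meant to show the general shape of the rules rather than a mapping taken from this case study.

```python
import json


def selection_rule(rule_id, schema, table="%"):
    """Build one selection rule; '%' is the wildcard for all tables."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": f"include-{schema}-{rule_id}",
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": "include",
    }


# Hypothetical source schemas: every table in SALES, one table in HR.
table_mappings = {
    "rules": [
        selection_rule(1, "SALES"),
        selection_rule(2, "HR", "EMPLOYEES"),
    ]
}

table_mappings_json = json.dumps(table_mappings, indent=2)
print(table_mappings_json)
```

Generating the rules from a small helper keeps rule IDs and names unique, which becomes useful once a migration covers dozens of schemas.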

One key advantage of using AWS DMS was its seamless integration with existing AWS infrastructure. The organization already had an established AWS environment, including Amazon S3 for storage and Amazon Redshift for analytics. With AWS DMS, they were able to easily integrate their migrated data into these existing services without any major disruptions or additional configuration.

The implementation of AWS DMS brought about several positive outcomes and benefits for the organization. Firstly, it significantly improved data accessibility. By migrating their legacy data to the AWS cloud, the organization was able to centralize and consolidate their data in a scalable and easily accessible environment. This allowed their teams to access and analyze the data more efficiently, leading to better decision-making processes.

Additionally, the migration to AWS cloud resulted in reduced maintenance costs for the organization. With on-premises databases, they had to invest significant resources in hardware maintenance, software updates, and security measures. By migrating to AWS, they were able to offload these responsibilities to AWS's managed services, reducing their overall maintenance costs.

Furthermore, the scalability of the AWS platform allowed the organization to handle future growth more easily. As their data continued to expand, they could scale up storage capacity and computing power without investing in additional on-premises infrastructure.

Best Practices for Data Integration with AWS ETL Tools

Data governance and quality assurance.

Data governance and quality assurance are crucial aspects of data integration with AWS ETL tools. Implementing effective data governance policies and ensuring data quality assurance can significantly enhance the success of data integration projects. Here are some practical tips and best practices for organizations looking to leverage AWS ETL tools for data integration:

Establish clear data governance policies: Define clear guidelines and processes for managing data across the organization. This includes defining roles and responsibilities, establishing data ownership, and implementing data access controls.

Ensure data accuracy and consistency: Perform regular checks to ensure the accuracy and consistency of the integrated data. This can be achieved by implementing automated validation processes, conducting periodic audits, and resolving any identified issues promptly.

Implement metadata management: Metadata provides valuable information about the integrated datasets, such as their source, structure, and transformations applied. Implementing a robust metadata management system helps in understanding the lineage of the integrated data and facilitates easier troubleshooting.

Maintain data lineage: Establish mechanisms to track the origin of each piece of integrated data throughout its lifecycle. This helps in maintaining transparency, ensuring compliance with regulations, and facilitating traceability during troubleshooting or auditing processes.

Enforce security measures: Implement appropriate security measures to protect sensitive or confidential information during the integration process. This includes encrypting data at rest and in transit, implementing access controls based on user roles, and regularly monitoring access logs for any suspicious activities.

Perform regular backups: Regularly back up integrated datasets to prevent loss of critical information due to hardware failures or accidental deletions. Implement automated backup processes that store backups in secure locations with proper version control.

Scalability Considerations

Scalability is a key consideration when designing data integration processes with AWS ETL tools. By leveraging serverless architectures and auto-scaling capabilities offered by AWS services, organizations can ensure that their integration workflows can handle increasing data volumes and processing demands. Here are some important scalability considerations for data integration with AWS ETL tools:

Utilize serverless architectures: AWS offers serverless services like AWS Lambda, which allow organizations to run code without provisioning or managing servers. By leveraging serverless architectures, organizations can automatically scale their integration workflows based on the incoming data volume, ensuring efficient utilization of resources.

Leverage auto-scaling capabilities: AWS provides auto-scaling capabilities for various services, such as Amazon EC2 and Amazon Redshift. These capabilities automatically adjust the capacity of resources based on workload fluctuations. By configuring auto-scaling policies, organizations can ensure that their integration processes can handle peak loads without manual intervention.

Optimize data transfer: When integrating large volumes of data, it is essential to optimize the transfer process to minimize latency and maximize throughput. Utilize AWS services like Amazon S3 Transfer Acceleration or AWS Direct Connect to improve data transfer speeds and reduce network latency.

Design fault-tolerant workflows: Plan for potential failures by designing fault-tolerant workflows that can handle errors gracefully and resume processing from the point of failure. Utilize features like AWS Step Functions or Amazon Simple Queue Service (SQS) to build resilient workflows that can recover from failures automatically.

Monitor performance and resource utilization: Regularly monitor the performance of your integration workflows and track resource utilization metrics using Amazon CloudWatch or third-party monitoring tools. This helps in identifying bottlenecks, optimizing resource allocation, and ensuring efficient scaling based on actual usage patterns.

Consider multi-region deployments: For high availability and disaster recovery purposes, consider deploying your integration workflows across multiple AWS regions. This ensures that even if one region experiences an outage, the integration processes can continue seamlessly in another region.
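The fault-tolerance point above, handling errors gracefully and resuming from the point of failure, can be sketched without any AWS service at all. The example below combines retries with exponential backoff and a simple checkpoint. The batch names, the `flaky_load` handler, and the in-memory checkpoint dictionary are hypothetical; a real workflow would persist the checkpoint somewhere durable.

```python
import time


def run_with_retries(step, max_attempts=3, base_delay=0.01):
    """Call step(); on failure wait base_delay * 2**(attempt - 1) and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception:
            if attempt == max_attempts:
                raise  # give up after the final attempt
            time.sleep(base_delay * 2 ** (attempt - 1))


def process_batches(batches, handler, checkpoint):
    """Process batches in order, starting where the checkpoint left off."""
    start = checkpoint.get("next_index", 0)
    for index in range(start, len(batches)):
        run_with_retries(lambda: handler(batches[index]))
        checkpoint["next_index"] = index + 1  # record progress after success
    return checkpoint


# Hypothetical handler that fails once on the second batch.
attempts = {}
loaded = []


def flaky_load(batch):
    attempts[batch] = attempts.get(batch, 0) + 1
    if batch == "batch-2" and attempts[batch] == 1:
        raise RuntimeError("transient failure")
    loaded.append(batch)


checkpoint = process_batches(["batch-1", "batch-2", "batch-3"], flaky_load, {})
print(loaded, checkpoint)
```

If the process is restarted with a saved checkpoint such as `{"next_index": 2}`, only the remaining batch is processed, which is the behavior a managed orchestrator would be expected to provide.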

By following these best practices for data governance, quality assurance, and scalability considerations when using AWS ETL tools, organizations can ensure successful and efficient data integration processes. These practices not only enhance the reliability and accuracy of integrated data but also enable organizations to scale their integration workflows as their data volumes and processing demands grow.

Cost Optimization Strategies

Resource allocation and optimization.

When it comes to using AWS ETL tools, cost optimization is a crucial aspect that organizations need to consider. By implementing effective strategies, businesses can ensure that they are making the most out of their resources while minimizing unnecessary expenses. In this section, we will discuss some cost optimization strategies when using AWS ETL tools, including resource allocation and leveraging cost-effective storage options.

Optimizing Resource Allocation

One of the key factors in cost optimization is optimizing resource allocation. AWS provides various ETL tools that allow organizations to scale their resources based on their specific needs. By carefully analyzing the data integration requirements and workload patterns, businesses can allocate resources efficiently, avoiding overprovisioning or underutilization.

To optimize resource allocation, it is essential to monitor the performance of ETL jobs regularly. AWS offers monitoring and logging services like Amazon CloudWatch, which reports metrics such as resource utilization and job execution times, and AWS CloudTrail, which records API activity for auditing. By analyzing these metrics, organizations can identify any bottlenecks or areas for improvement in their data integration processes.

Another approach to optimizing resource allocation is by utilizing serverless architectures offered by AWS ETL tools like AWS Glue. With serverless computing, businesses only pay for the actual compute time used during job execution, eliminating the need for provisioning and managing dedicated servers. This not only reduces costs but also improves scalability and agility.
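Pay-per-use pricing is easy to reason about with a little arithmetic. The sketch below estimates the cost of a job as capacity units multiplied by hours and a rate. The rate of 0.44 per DPU-hour is an assumed example value, so check current AWS pricing for your region, and the calculation ignores any minimum billing duration.

```python
def glue_job_cost(dpus, runtime_minutes, rate_per_dpu_hour):
    """Estimate cost as DPUs x hours x rate, rounded to cents."""
    dpu_hours = dpus * runtime_minutes / 60
    return round(dpu_hours * rate_per_dpu_hour, 2)


ASSUMED_RATE = 0.44  # assumed example rate per DPU-hour, not a quoted price

# 10 DPUs for 30 minutes: 10 * 0.5 h = 5 DPU-hours.
oversized = glue_job_cost(10, 30, ASSUMED_RATE)

# 4 DPUs for 60 minutes: 4 * 1 h = 4 DPU-hours.
right_sized = glue_job_cost(4, 60, ASSUMED_RATE)

print(oversized, right_sized)
```

Under these assumed numbers, the smaller configuration finishes later but costs less, which is the kind of trade-off worth checking against real job metrics before changing capacity.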

Leveraging Cost-Effective Storage Options

In addition to optimizing resource allocation, leveraging cost-effective storage options can significantly impact overall costs when using AWS ETL tools. AWS provides various storage services with different pricing models that cater to different data integration requirements.

For example, Amazon S3 (Simple Storage Service) offers highly scalable object storage at a low cost per gigabyte. It allows organizations to store large volumes of data generated during ETL processes without worrying about capacity limitations or high storage costs. Additionally, S3 provides features like lifecycle policies and S3 Intelligent-Tiering, which automatically move data to more cost-effective storage classes based on age or access patterns.

Another cost-effective storage option is Amazon Redshift. It is a fully managed data warehousing service that provides high-performance analytics at a lower cost compared to traditional on-premises solutions. By leveraging Redshift for storing and analyzing integrated data, organizations can achieve significant cost savings while benefiting from its scalability and performance capabilities.

Best Practices for Cost Optimization

To further optimize costs when using AWS ETL tools, it is essential to follow some best practices:

Right-sizing resources: Analyze the workload requirements and choose the appropriate instance types and sizes to avoid overprovisioning or underutilization.

Implementing data compression: Compressing data before storing it in AWS services like S3 or Redshift can significantly reduce storage costs.

Data lifecycle management: Define proper data retention policies and use features like lifecycle policies in S3 to automatically move infrequently accessed data to cheaper storage classes.

Monitoring and optimization: Continuously monitor resource utilization, job execution times, and overall system performance to identify areas for optimization.

By following these best practices, organizations can ensure that they are effectively managing their costs while maintaining optimal performance in their data integration processes.
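The data lifecycle practice can be made concrete with a sample S3 lifecycle configuration. The dictionary below follows the general shape S3 expects for lifecycle rules, while the rule ID, the prefix, and the day thresholds are hypothetical values chosen for illustration. The small helper only simulates which storage class an object of a given age would be in under that rule.

```python
import json

# Hypothetical rule: tier down ETL staging data, then expire it after a year.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-etl-staging",
            "Filter": {"Prefix": "etl/staging/"},
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 365},
        }
    ]
}


def storage_class_at(age_days, rule):
    """Simulate the storage class of an object of the given age (None = expired)."""
    if age_days >= rule["Expiration"]["Days"]:
        return None
    current = "STANDARD"
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


rule = lifecycle_configuration["Rules"][0]
print(json.dumps(lifecycle_configuration, indent=2))
print([storage_class_at(days, rule) for days in (10, 45, 120, 400)])
```

Walking a few sample ages through the rule is a cheap way to confirm that a retention policy does what was intended before applying it to a bucket.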

Future Trends and Innovations in Data Integration with AWS

Emerging trends in data integration.

As technology continues to advance at a rapid pace, the field of data integration is also evolving. AWS is at the forefront of these innovations, offering cutting-edge solutions that enable organizations to seamlessly integrate and analyze their data. In this section, we will explore some of the emerging trends and innovations in data integration with AWS.

Integration of Machine Learning Capabilities

One of the most exciting trends in data integration is the integration of machine learning capabilities. Machine learning algorithms have the ability to analyze large volumes of data and identify patterns and insights that humans may not be able to detect. With AWS, organizations can leverage machine learning tools such as Amazon SageMaker to build, train, and deploy machine learning models for data integration purposes.

By incorporating machine learning into their data integration processes, organizations can automate repetitive tasks, improve accuracy, and gain valuable insights from their data. For example, machine learning algorithms can be used to automatically categorize and tag incoming data, making it easier to organize and analyze.

Real-Time Data Streaming

Another trend in data integration is the increasing demand for real-time data streaming. Traditional batch processing methods are no longer sufficient for organizations that require up-to-the-minute insights from their data. AWS offers services such as Amazon Kinesis Data Streams and Amazon Managed Streaming for Apache Kafka (MSK) that enable real-time streaming of large volumes of data.

Real-time streaming allows organizations to process and analyze incoming data as it arrives, enabling them to make timely decisions based on the most current information available. This is particularly valuable in industries such as finance, e-commerce, and IoT where real-time insights can drive business growth and competitive advantage.
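The difference from batch processing is easiest to see in code. The sketch below consumes events one at a time and emits a total per key each time a fixed-size (tumbling) time window closes, rather than waiting for the whole dataset. It is a plain Python generator, not Kinesis or Kafka client code, and the event tuples are hypothetical.

```python
from collections import defaultdict


def tumbling_window_totals(events, window_seconds):
    """Yield (window_start, totals) as each window closes.

    events: iterable of (timestamp_seconds, key, amount), ordered by timestamp.
    """
    current_start = None
    totals = defaultdict(float)
    for timestamp, key, amount in events:
        window_start = timestamp - timestamp % window_seconds
        if current_start is not None and window_start != current_start:
            yield current_start, dict(totals)  # previous window is complete
            totals = defaultdict(float)
        current_start = window_start
        totals[key] += amount
    if current_start is not None:
        yield current_start, dict(totals)  # flush the final window


# Hypothetical order events: (seconds since start, region, order value).
events = [
    (0, "eu", 10.0),
    (20, "us", 5.0),
    (59, "eu", 2.5),
    (61, "us", 7.0),
    (130, "eu", 1.0),
]
results = list(tumbling_window_totals(events, window_seconds=60))
print(results)
```

Because results are emitted as soon as a window closes, downstream consumers see figures that are at most one window old.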

Use of Data Lakes

Data lakes have emerged as a popular approach for storing and analyzing large volumes of structured and unstructured data. A data lake is a centralized repository that allows organizations to store all types of raw or processed data in its native format. AWS provides a comprehensive suite of services for building and managing data lakes, including Amazon S3, AWS Glue, and Amazon Athena.

By leveraging data lakes, organizations can break down data silos and enable cross-functional teams to access and analyze data from various sources. Data lakes also support advanced analytics techniques such as machine learning and artificial intelligence, allowing organizations to derive valuable insights from their data.

In conclusion, the real-world case studies highlighted in this blog post demonstrate the effectiveness of AWS ETL tools in solving data integration challenges. These tools offer a cost-effective and scalable solution for organizations looking to streamline their data integration processes.

One key takeaway from these case studies is the cost-effectiveness of AWS ETL tools. By leveraging cloud-based resources, organizations can avoid the high upfront costs associated with traditional on-premises solutions. This allows them to allocate their budget more efficiently and invest in other areas of their business.

Additionally, the scalability of AWS ETL tools is a significant advantage. As organizations grow and their data integration needs increase, these tools can easily accommodate the expanding workload. With AWS's elastic infrastructure, organizations can scale up or down as needed, ensuring optimal performance and efficiency.

Furthermore, AWS ETL tools provide a streamlined approach to data integration. The intuitive user interface and pre-built connectors simplify the process, reducing the time and effort required to integrate disparate data sources. This allows organizations to quickly gain insights from their data and make informed decisions.

In light of these benefits, we encourage readers to explore AWS ETL tools for their own data integration needs. By leveraging these tools, organizations can achieve cost savings, scalability, and a streamlined data integration process. Take advantage of AWS's comprehensive suite of ETL tools and unlock the full potential of your data today.


© Copyright 2024 Tapdata - All Rights Reserved


On-premise to Cloud Data Replication with AWS Glue

A Global Research and Advisory Company intended to modernize its on-premises ‘Enterprise Data Quality’ application by migrating it to the AWS cloud. Bitwise spearheaded the initiative and replicated the on-premises Oracle data to PostgreSQL on AWS.

Client Challenges and Requirements

- Replicate data from on-premises systems to the AWS cloud

- Retire the materialized-view approach to data replication and create a process that is cloud-native, scalable, secure, and open to modification

- Build a metadata-driven, scalable data replication system using AWS services

- Trigger based on source system availability (online/offline)

- Orchestrate data replication processes

Bitwise Solution

- Developed a metadata-driven, AWS-based data replication process that is re-runnable, fault-tolerant, and triggered by a REST API linked to the existing scheduler

- The API triggers the Step Functions state machine, which orchestrates all other services

- Lambda: Monitors database health (system up/down status), reads the metadata table, and returns the staging table name and metadata query to the Step Functions state machine

- Map: Receives the details of all the tables in a particular workflow and creates one virtual instance per table (‘n’ instances for ‘n’ tables)

- Each virtual instance contains a Glue job and a Lambda. The instances run in parallel, so all the tables in the workflow are replicated into staging at the same time

- Glue: Connections are created for the on-premises and cloud databases, and data is unloaded into the staging table in PostgreSQL using truncate and load

- Lambda: Loads the data from the staging table to the target table in PostgreSQL
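The orchestration described in the list above can be sketched as an Amazon States Language definition built in Python. This is only an illustrative outline, not Bitwise's actual implementation: the state names, the `tables`, `staging_table`, and `metadata_query` fields, the Glue job name, and the Lambda ARNs are all hypothetical placeholders.

```python
import json


def build_replication_state_machine(health_fn_arn, glue_job_name, load_fn_arn):
    """Return a Step Functions definition (as a dict) for per-table replication.

    All names and input/output field names here are assumptions made for
    illustration; the case study does not publish its real definition.
    """
    # Steps run once per table inside the Map state.
    per_table_steps = {
        "StartAt": "UnloadToStaging",
        "States": {
            # Glue job: truncate and load the staging table from the source.
            "UnloadToStaging": {
                "Type": "Task",
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {
                    "JobName": glue_job_name,
                    "Arguments": {
                        "--staging_table.$": "$.staging_table",
                        "--metadata_query.$": "$.metadata_query",
                    },
                },
                "ResultPath": "$.glue_result",
                "Next": "LoadToTarget",
            },
            # Lambda: move data from the staging table to the target table.
            "LoadToTarget": {
                "Type": "Task",
                "Resource": load_fn_arn,
                "End": True,
            },
        },
    }
    return {
        "Comment": "Metadata-driven replication sketch (hypothetical)",
        "StartAt": "CheckSourceAndReadMetadata",
        "States": {
            # Lambda: check source health and read the metadata table.
            "CheckSourceAndReadMetadata": {
                "Type": "Task",
                "Resource": health_fn_arn,
                "ResultPath": "$.metadata",
                "Next": "ReplicateTables",
            },
            # Map: one parallel iteration per table in the workflow.
            "ReplicateTables": {
                "Type": "Map",
                "ItemsPath": "$.metadata.tables",
                "ItemProcessor": {
                    "ProcessorConfig": {"Mode": "INLINE"},
                    **per_table_steps,
                },
                "End": True,
            },
        },
    }


definition = build_replication_state_machine(
    health_fn_arn="arn:aws:lambda:us-east-1:123456789012:function:check-source",
    glue_job_name="replicate-table",
    load_fn_arn="arn:aws:lambda:us-east-1:123456789012:function:load-target",
)
definition_json = json.dumps(definition, indent=2)
```

The resulting JSON string could then be supplied as the state machine definition when creating it. Keeping the per-table steps inside the Map state is what lets a single workflow handle any number of tables listed in the metadata.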

Tools & Technologies We Used

PostgreSQL (AWS RDS), AWS Step Functions

Key Results

A cloud native application that is reliable, scalable and secure

Metadata-driven process that is re-runnable, fault-tolerant, and open to modification

Fully operational and unit-tested code delivered with ~40% overall time efficiency


All rights reserved @ bitwise 2024.


Case Study: Rivian - Enterprise Data Analytics on AWS Redshift and Tableau

Leading Edge Data Analytics platform on AWS Cloud to address the key information needs and analytical insights for multiple business groups

About Rivian Automotive

Rivian is an American electric vehicle automaker and automotive technology company founded in 2009. Rivian is building an electric sport utility vehicle and a pickup truck on a skateboard platform that can support future vehicles or be adopted by other companies.

Tags: Case Study , Tableau , Amazon Redshift , AWS Glue , Balachandra Sridhara , AWS Cloud , AWS Lambda , Python pipelines , Step Functions , CloudWatch , Kinesis Stream , Salesforce REST API , Airflow

Case Study: Global Manufacturer - DevOps for Data Engineering

DevOps for Data Engineering

About Our Client

KPI’s client is a global manufacturer and distributor of scientific instrumentation, reagents and consumables, and software and services to healthcare, life science, and other laboratories in academia, government, and industry with annual revenues of $32 billion and a global team of more than 75,000 colleagues.

Tags: Case Study , AWS S3 , AWS Glue , DevOps , Databricks , GitHub , Jenkins , Lambda , Terraform , Splunk , Datadog

Case Study: Cigna - KPI Cloud Analytics for AWS

KPI leverages AWS Redshift and Tableau to optimize spend for large health insurance company

Tags: Case Study , KPI Cloud Analytics , Tableau , Amazon Redshift , Financial reporting , Cigna , KPI Cloud Analytics for AWS , AWS S3 , SAP Ariba , AWS Cloud Watch , AWS Glue


© 2022 KPI Partners


Top Data Ingestion Tools for 2024

Organisations can only extract value from their data once it has been captured, so data ingestion is of high importance. Data ingestion tools handle this step by transferring data from its origin to storage and/or processing environments. As enterprises produce more diverse data, choosing the right ingestion tool becomes even more important.

This guide covers the top data ingestion tools for 2024, detailing their features, components, and fit for different applications to help organisations make the right choice for their data architecture.

Table of Contents

- Apache NiFi

- Apache Kafka

- Google Cloud Dataflow

- Microsoft Azure Data Factory

- StreamSets Data Collector

- Talend Data Integration

- Informatica Intelligent Cloud Services

- Matillion ETL

- Snowflake Data Cloud

- MongoDB Atlas Data Lake

- Azure Synapse Analytics

- IBM DataStage

Apache NiFi is an open-source data flow framework for automating data transfer between heterogeneous systems. It is designed to move data between sources and destinations in real time for analysis. NiFi offers an interactive GUI for modelling data flows, and it provides data lineage, scalability, and security features. Supported sources include relational databases, flat files, text files, Syslog messages, Oracle AQ, MSMQ, TIBCO, XML, and more.

A typical Apache NiFi deployment scenario is real-time data ingestion combined with storage in a data lake. For instance, a retail firm may use NiFi to gather information from different data sources, including POS terminals, online purchases, and inventory control systems. NiFi can process this data in real time and transform it into the required format before moving it to a data lake for further processing. This keeps the company up to date on sales performance, inventory, customer behaviour, and sales trends, which improves decision-making.
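The "transform to the required format" step in the retail scenario can be illustrated with a small plain-Python sketch. NiFi itself is configured through its GUI rather than written this way, so this is only an analogy for what such a flow does; the record layouts and field names (`sku`, `qty`, `ts`, `item_id`, `ordered_at`) are hypothetical.

```python
from datetime import datetime, timezone


def normalise_pos(record):
    """Map a hypothetical POS record onto a common sales schema."""
    return {
        "source": "pos",
        "sku": record["sku"],
        "quantity": int(record["qty"]),
        # POS terminals in this sketch report Unix timestamps.
        "sold_at": datetime.fromtimestamp(record["ts"], tz=timezone.utc).isoformat(),
    }


def normalise_online(record):
    """Map a hypothetical online-order record onto the same schema."""
    return {
        "source": "online",
        "sku": record["item_id"],
        "quantity": int(record["quantity"]),
        # Online orders in this sketch already carry ISO 8601 timestamps.
        "sold_at": record["ordered_at"],
    }


# Route each incoming record to the converter for its source.
NORMALISERS = {"pos": normalise_pos, "online": normalise_online}


def to_common_format(source, record):
    return NORMALISERS[source](record)


incoming = [
    ("pos", {"sku": "A1", "qty": "2", "ts": 1704067200}),
    ("online", {"item_id": "A1", "quantity": 1, "ordered_at": "2024-01-01T09:30:00+00:00"}),
]
unified = [to_common_format(source, record) for source, record in incoming]
```

Once every source is mapped onto one schema, downstream consumers of the data lake only need to understand a single record shape.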

Case Study:

In this case, a healthcare organisation used Apache NiFi to improve its handling of patient information originating from various sources such as EHRs, lab results, and wearable devices. The organisation faced a lack of integrated data structures and growing data fragmentation, with patient data arriving in different formats. With NiFi, it built data flows that standardised and enriched the data so that it was usable for the organisation's work. This gave clinicians better access to data, which supported better-informed decisions and improved patient care. In addition, NiFi's data provenance made it easier to trace data lineage, helping the organisation meet the lineage requirements set by law, HIPAA in this case.

Apache Kafka is an open-source distributed messaging system used to build real-time applications and data pipelines. It is particularly suited to processing high-volume event streams that arrive in real time, with low latency, in large-scale systems. Kafka's basic building blocks are producers and consumers, which write and read messages of any type; durable, fault-tolerant storage of those messages; and the ability to process messages as they arrive. Because it is built on a distributed commit log, it provides high durability and is extremely scalable.
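The commit-log idea behind this design can be shown with a toy, in-memory sketch. It does not use Kafka or any Kafka client library, and the class and method names are invented for illustration: producers append to an ordered log, and each consumer tracks its own read offset.

```python
from dataclasses import dataclass, field


@dataclass
class CommitLog:
    """Append-only list of messages; a message's index is its offset."""
    records: list = field(default_factory=list)

    def append(self, message):
        """Producer side: add a message and return its offset."""
        self.records.append(message)
        return len(self.records) - 1

    def read(self, offset, max_records=10):
        """Return up to max_records messages starting at offset."""
        return self.records[offset:offset + max_records]


@dataclass
class Consumer:
    """Consumer side: remembers how far into the log it has read."""
    log: CommitLog
    offset: int = 0

    def poll(self, max_records=10):
        batch = self.log.read(self.offset, max_records)
        self.offset += len(batch)
        return batch


log = CommitLog()
for event in ["order-created", "order-paid", "order-shipped"]:
    log.append(event)

analytics = Consumer(log)
billing = Consumer(log)

first_batch = analytics.poll(max_records=2)   # reads offsets 0 and 1
second_batch = analytics.poll(max_records=2)  # reads offset 2
billing_batch = billing.poll()                # reads all three independently
```

Because messages are never removed when read, several consumers can process the same stream at their own pace, which is the property the commit-log design provides. A real Kafka deployment adds partitioning, replication, and persistence on top of this basic model.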