To read this content please select one of the options below:

Please note you do not have access to teaching notes, sample size for qualitative research.

Qualitative Market Research

ISSN : 1352-2752

Article publication date: 12 September 2016

Qualitative researchers have been criticised for not justifying sample size decisions in their research. This short paper addresses the issue of which sample sizes are appropriate and valid within different approaches to qualitative research.

Design/methodology/approach

The sparse literature on sample sizes in qualitative research is reviewed and discussed. This examination is informed by the personal experience of the author in terms of assessing, as an editor, reviewer comments as they relate to sample size in qualitative research. Also, the discussion is informed by the author’s own experience of undertaking commercial and academic qualitative research over the last 31 years.

In qualitative research, the determination of sample size is contextual and partially dependent upon the scientific paradigm under which investigation is taking place. For example, qualitative research which is oriented towards positivism, will require larger samples than in-depth qualitative research does, so that a representative picture of the whole population under review can be gained. Nonetheless, the paper also concludes that sample sizes involving one single case can be highly informative and meaningful as demonstrated in examples from management and medical research. Unique examples of research using a single sample or case but involving new areas or findings that are potentially highly relevant, can be worthy of publication. Theoretical saturation can also be useful as a guide in designing qualitative research, with practical research illustrating that samples of 12 may be cases where data saturation occurs among a relatively homogeneous population.

Practical implications

Sample sizes as low as one can be justified. Researchers and reviewers may find the discussion in this paper to be a useful guide to determining and critiquing sample size in qualitative research.

Originality/value

Sample size in qualitative research is always mentioned by reviewers of qualitative papers but discussion tends to be simplistic and relatively uninformed. The current paper draws attention to how sample sizes, at both ends of the size continuum, can be justified by researchers. This will also aid reviewers in their making of comments about the appropriateness of sample sizes in qualitative research.

- Qualitative research

- Qualitative methodology

- Case studies

- Sample size

Boddy, C.R. (2016), "Sample size for qualitative research", Qualitative Market Research , Vol. 19 No. 4, pp. 426-432. https://doi.org/10.1108/QMR-06-2016-0053

Emerald Group Publishing Limited

Copyright © 2016, Emerald Group Publishing Limited

Related articles

We’re listening — tell us what you think, something didn’t work….

Report bugs here

All feedback is valuable

Please share your general feedback

Join us on our journey

Platform update page.

Visit emeraldpublishing.com/platformupdate to discover the latest news and updates

Questions & More Information

Answers to the most commonly asked questions here

Academia.edu no longer supports Internet Explorer.

To browse Academia.edu and the wider internet faster and more securely, please take a few seconds to upgrade your browser .

Enter the email address you signed up with and we'll email you a reset link.

- We're Hiring!

- Help Center

Adequacy of sample size in a qualitative case study and the dilemma of data saturation: A narrative review

2021, World Journal of Advanced Research and Reviews

The consensus of many researchers on data saturation is that data saturation is a key driver for determining the adequacy of sample size in a qualitative case study. Despite these global consensuses, some researchers described data saturation as complex because the decision to stop data collection is solely dictated by the judgment and experience of researchers. Other researchers claimed that guidelines for determining non-probability sample sizes, used as an indication of data saturation are virtually non-existent, problematic, or controversial. Others claimed that data saturation hitched to sample size is practically weak, because data are never truly saturated, as there are always new data to be discovered. This narrative study highlights the dilemma of data saturation and strategies to adequately determine sample size in a qualitative case study. A narrative review of prior research that focused on the vast works of literature that revealed significant information on data saturation and strategies to adequately determine sample size was adopted. Peer-reviewed articles within the last five years from electronic databases, using some keywords such as "qualitative case study", "sample size in a qualitative case study", "data saturation", etc., were also extracted. Results show that data saturation is very helpful especially at the conceptual stage, but its concept and standard is elusive, because it lacks practical guidance for estimating sample size for a robust research prior to data collection. Findings from this study may encourage researcher on better guidelines for determining non-probability sample sizes.

Related Papers

Dr. Lawrence Ness

Failure to reach data saturation has an impact on the quality of the research conducted and hampers content validity. The aim of a study should include what determines when data saturation is achieved, for a small study will reach saturation more rapidly than a larger study. Data saturation is reached when there is enough information to replicate the study when the ability to obtain additional new information has been attained, and when further coding is no longer feasible. The following article critiques two qualitative studies for data saturation: Wolcott (2004) and Landau and Drori (2008). Failure to reach data saturation has a negative impact on the validity on one’s research. The intended audience is novice student researchers. Keywords: Data Saturation, Triangulation, Interviews, Personal Lens, Bias.

Qualitative Research

Nikki Kiyimba

Journal of Advocacy, Research and Education

Jacob Owusu Sarfo , Timothy Debrah , Paul Obeng , Senam Jubey

Qualitative research is currently growing in acceptance, especially within the health research scope. Notwithstanding this positive trend, issues about the adequacy of sample size have been a contention among qualitative and quantitative-based researchers. Our paper seeks to address some of the issues facing popular qualitative designs in human research with this backdrop. Our article explains the five key qualitative designs (case study, narrative inquiry, ethnography, phenomenology, and grounded theory). Based on the existing studies, we reported their respective sample size ranges that supported their data adequacy points. Our paper posits that sample size concerns for qualitative designs revolve around their extensiveness and appropriateness. Therefore, qualitative researchers' judgement for data adequacy for a particular method should not only rely on data saturation or a rule-of-thumb. Instead, they should also be guided by their research goals, sampling approach, and research participants. Furthermore, we recommend that qualitative researchers always verify the quality of data saturation by conducting additional interviews and be more open in reporting their selected methodologies.

Shalini Rawla

Choosing a suitable sample size in qualitative research is an area of conceptual debate and practical uncertainty. Sample size principles, guidelines and tools have been developed to enable researchers to justify the acceptability of their sample size. Nevertheless, research shows that sample size sufficiency reporting is often poor, if not absent, across a range of disciplinary fields. The issue of sample size is accepted as an important marker of the quality of qualitative research. The purpose of this paper is to delineate a standardized framework for qual studies to arrive at a sample size strategy that is transparent and logical about its sample size sufficiency.

Benjamin Saunders , Jackie Waterfield

Benjamin Saunders , Julius Sim , Jackie Waterfield

Saturation has attained widespread acceptance as a methodological principle in qualitative research. It is commonly taken to indicate that, on the basis of the data that have been collected or analysed hitherto, further data collection and/or analysis are unnecessary. However, there appears to be uncertainty as to how saturation should be conceptualized, and inconsistencies in its use. In this paper, we look to clarify the nature, purposes and uses of saturation, and in doing so add to theoretical debate on the role of saturation across different methodologies. We identify four distinct approaches to saturation, which differ in terms of the extent to which an inductive or a deductive logic is adopted, and the relative emphasis on data collection, data analysis, and theorizing. We explore the purposes saturation might serve in relation to these different approaches, and the implications for how and when saturation will be sought. In examining these issues, we highlight the uncertain logic underlying saturation— as essentially a predictive statement about the unobserved based on the observed, a judgement that, we argue, results in equivocation, and may in part explain the confusion surrounding its use. We conclude that saturation should be operationalized in a way that is consistent with the research question(s), and the theoretical position and analytic framework adopted, but also that there should be some limit to its scope, so as not to risk saturation losing its coherence and potency if its conceptualization and uses are stretched too widely.

Psychology and …

Liz Glidewell

International Journal of Social Research Methodology

Norman Blaikie

RELATED PAPERS

Jesus Boticario

Francesco Da Riva Grechi

Edutic - Scientific Journal of Informatics Education

Puji Rahayu Ningsih

Olalekan Akinbo

Alberto Giacometti

Antonio Galves

Naoko Shimazu

Esmeralda Rubi Ochoa

Jurnal Perikanan Pantura (JPP)

Rahmad Jumadi

Inovbiz: Jurnal Inovasi Bisnis

Afvan AQUINO, SE., MM

MANAGEMENT CONTROL

Mara Del Baldo

Courtney Shelley

Gabriella Pusztai

Neraca : Jurnal Akuntansi Terapan

Graefe's Archive for Clinical and Experimental Ophthalmology

Maurizio Parodi

British journal of hospital medicine (London, England : 2005)

hugues henri

Alfred Goldberg

Journal Of Cardiology And Cardiovascular Research

Journal of Cardiology and Cardiovascular Research

The journal of maternal-fetal & neonatal medicine : the official journal of the European Association of Perinatal Medicine, the Federation of Asia and Oceania Perinatal Societies, the International Society of Perinatal Obstetricians

Francis Kidangan

Proceedings of the 8th World Congress on New Technologies

Mario Pezzi Filho

RELATED TOPICS

- We're Hiring!

- Help Center

- Find new research papers in:

- Health Sciences

- Earth Sciences

- Cognitive Science

- Mathematics

- Computer Science

- Academia ©2024

Root out friction in every digital experience, super-charge conversion rates, and optimize digital self-service

Uncover insights from any interaction, deliver AI-powered agent coaching, and reduce cost to serve

Increase revenue and loyalty with real-time insights and recommendations delivered to teams on the ground

Know how your people feel and empower managers to improve employee engagement, productivity, and retention

Take action in the moments that matter most along the employee journey and drive bottom line growth

Whatever they’re are saying, wherever they’re saying it, know exactly what’s going on with your people

Get faster, richer insights with qual and quant tools that make powerful market research available to everyone

Run concept tests, pricing studies, prototyping + more with fast, powerful studies designed by UX research experts

Track your brand performance 24/7 and act quickly to respond to opportunities and challenges in your market

Explore the platform powering Experience Management

- Free Account

- For Digital

- For Customer Care

- For Human Resources

- For Researchers

- Financial Services

- All Industries

Popular Use Cases

- Customer Experience

- Employee Experience

- Net Promoter Score

- Voice of Customer

- Customer Success Hub

- Product Documentation

- Training & Certification

- XM Institute

- Popular Resources

- Customer Stories

- Artificial Intelligence

- Market Research

- Partnerships

- Marketplace

The annual gathering of the experience leaders at the world’s iconic brands building breakthrough business results, live in Salt Lake City.

- English/AU & NZ

- Español/Europa

- Español/América Latina

- Português Brasileiro

- REQUEST DEMO

- Experience Management

- How To Determine Sample Size

Try Qualtrics for free

How to determine sample size.

12 min read Sample size can make or break your research project. Here’s how to master the delicate art of choosing the right sample size.

Author: Will Webster

Sample size is the beating heart of any research project. It’s the invisible force that gives life to your data, making your findings robust, reliable and believable.

Sample size is what determines if you see a broad view or a focus on minute details; the art and science of correctly determining it involves a careful balancing act. Finding an appropriate sample size demands a clear understanding of the level of detail you wish to see in your data and the constraints you might encounter along the way.

Remember, whether you’re studying a small group or an entire population, your findings are only ever as good as the sample you choose.

Free eBook: Empower your market research efforts today

Let’s delve into the world of sampling and uncover the best practices for determining sample size for your research.

“How much sample do we need?” is one of the most commonly-asked questions and stumbling points in the early stages of research design . Finding the right answer to it requires first understanding and answering two other questions:

How important is statistical significance to you and your stakeholders?

What are your real-world constraints.

At the heart of this question is the goal to confidently differentiate between groups, by describing meaningful differences as statistically significant. Statistical significance isn’t a difficult concept, but it needs to be considered within the unique context of your research and your measures.

First, you should consider when you deem a difference to be meaningful in your area of research. While the standards for statistical significance are universal, the standards for “meaningful difference” are highly contextual.

For example, a 10% difference between groups might not be enough to merit a change in a marketing campaign for a breakfast cereal, but a 10% difference in efficacy of breast cancer treatments might quite literally be the difference between life and death for hundreds of patients. The exact same magnitude of difference has very little meaning in one context, but has extraordinary meaning in another. You ultimately need to determine the level of precision that will help you make your decision.

Within sampling, the lowest amount of magnification – or smallest sample size – could make the most sense, given the level of precision needed, as well as timeline and budgetary constraints.

If you’re able to detect statistical significance at a difference of 10%, and 10% is a meaningful difference, there is no need for a larger sample size, or higher magnification. However, if the study will only be useful if a significant difference is detected for smaller differences – say, a difference of 5% — the sample size must be larger to accommodate this needed precision. Similarly, if 5% is enough, and 3% is unnecessary, there is no need for a larger statistically significant sample size.

You should also consider how much you expect your responses to vary. When there isn’t a lot of variability in response, it takes a lot more sample to be confident that there are statistically significant differences between groups.

For instance, it will take a lot more sample to find statistically significant differences between groups if you are asking, “What month do you think Christmas is in?” than if you are asking, “How many miles are there between the Earth and the moon?”. In the former, nearly everybody is going to give the exact same answer, while the latter will give a lot of variation in responses. Simply put, when your variables do not have a lot of variance, larger sample sizes make sense.

Statistical significance

The likelihood that the results of a study or experiment did not occur randomly or by chance, but are meaningful and indicate a genuine effect or relationship between variables.

Magnitude of difference

The size or extent of the difference between two or more groups or variables, providing a measure of the effect size or practical significance of the results.

Actionable insights

Valuable findings or conclusions drawn from data analysis that can be directly applied or implemented in decision-making processes or strategies to achieve a particular goal or outcome.

It’s crucial to understand the differences between the concepts of “statistical significance”, “magnitude of difference” and “actionable insights” – and how they can influence each other:

- Even if there is a statistically significant difference, it doesn’t mean the magnitude of the difference is large: with a large enough sample, a 3% difference could be statistically significant

- Even if the magnitude of the difference is large, it doesn’t guarantee that this difference is statistically significant: with a small enough sample, an 18% difference might not be statistically significant

- Even if there is a large, statistically significant difference, it doesn’t mean there is a story, or that there are actionable insights

There is no way to guarantee statistically significant differences at the outset of a study – and that is a good thing.

Even with a sample size of a million, there simply may not be any differences – at least, any that could be described as statistically significant. And there are times when a lack of significance is positive.

Imagine if your main competitor ran a multi-million dollar ad campaign in a major city and a huge pre-post study to detect campaign effects, only to discover that there were no statistically significant differences in brand awareness . This may be terrible news for your competitor, but it would be great news for you.

With Stats iQ™ you can analyze your research results and conduct significance testing

As you determine your sample size, you should consider the real-world constraints to your research.

Factors revolving around timings, budget and target population are among the most common constraints, impacting virtually every study. But by understanding and acknowledging them, you can definitely navigate the practical constraints of your research when pulling together your sample.

Timeline constraints

Gathering a larger sample size naturally requires more time. This is particularly true for elusive audiences, those hard-to-reach groups that require special effort to engage. Your timeline could become an obstacle if it is particularly tight, causing you to rethink your sample size to meet your deadline.

Budgetary constraints

Every sample, whether large or small, inexpensive or costly, signifies a portion of your budget. Samples could be like an open market; some are inexpensive, others are pricey, but all have a price tag attached to them.

Population constraints

Sometimes the individuals or groups you’re interested in are difficult to reach; other times, they’re a part of an extremely small population. These factors can limit your sample size even further.

What’s a good sample size?

A good sample size really depends on the context and goals of the research. In general, a good sample size is one that accurately represents the population and allows for reliable statistical analysis.

Larger sample sizes are typically better because they reduce the likelihood of sampling errors and provide a more accurate representation of the population. However, larger sample sizes often increase the impact of practical considerations, like time, budget and the availability of your audience. Ultimately, you should be aiming for a sample size that provides a balance between statistical validity and practical feasibility.

4 tips for choosing the right sample size

Choosing the right sample size is an intricate balancing act, but following these four tips can take away a lot of the complexity.

1) Start with your goal

The foundation of your research is a clearly defined goal. You need to determine what you’re trying to understand or discover, and use your goal to guide your research methods – including your sample size.

If your aim is to get a broad overview of a topic, a larger, more diverse sample may be appropriate. However, if your goal is to explore a niche aspect of your subject, a smaller, more targeted sample might serve you better. You should always align your sample size with the objectives of your research.

2) Know that you can’t predict everything

Research is a journey into the unknown. While you may have hypotheses and predictions, it’s important to remember that you can’t foresee every outcome – and this uncertainty should be considered when choosing your sample size.

A larger sample size can help to mitigate some of the risks of unpredictability, providing a more diverse range of data and potentially more accurate results. However, you shouldn’t let the fear of the unknown push you into choosing an impractically large sample size.

3) Plan for a sample that meets your needs and considers your real-life constraints

Every research project operates within certain boundaries – commonly budget, timeline and the nature of the sample itself. When deciding on your sample size, these factors need to be taken into consideration.

Be realistic about what you can achieve with your available resources and time, and always tailor your sample size to fit your constraints – not the other way around.

4) Use best practice guidelines to calculate sample size

There are many established guidelines and formulas that can help you in determining the right sample size.

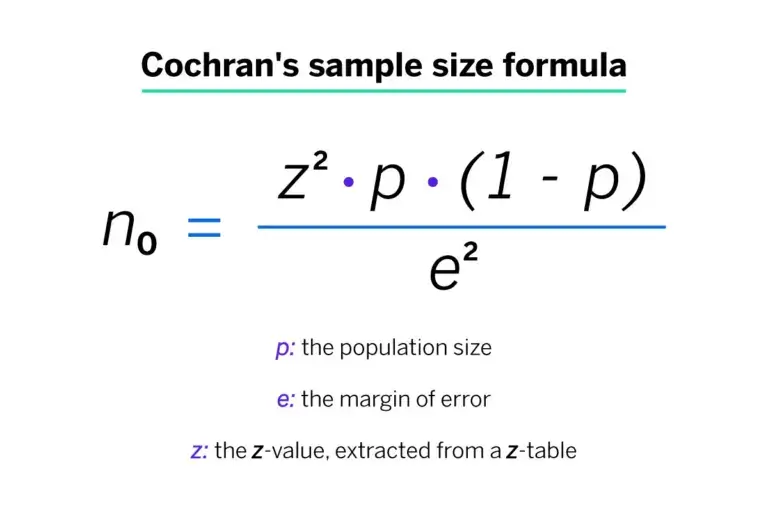

The easiest way to define your sample size is using a sample size calculator , or you can use a manual sample size calculation if you want to test your math skills. Cochran’s formula is perhaps the most well known equation for calculating sample size, and widely used when the population is large or unknown.

Beyond the formula, it’s vital to consider the confidence interval, which plays a significant role in determining the appropriate sample size – especially when working with a random sample – and the sample proportion. This represents the expected ratio of the target population that has the characteristic or response you’re interested in, and therefore has a big impact on your correct sample size.

If your population is small, or its variance is unknown, there are steps you can still take to determine the right sample size. Common approaches here include conducting a small pilot study to gain initial estimates of the population variance, and taking a conservative approach by assuming a larger variance to ensure a more representative sample size.

Empower your market research

Conducting meaningful research and extracting actionable intelligence are priceless skills in today’s ultra competitive business landscape. It’s never been more crucial to stay ahead of the curve by leveraging the power of market research to identify opportunities, mitigate risks and make informed decisions.

Equip yourself with the tools for success with our essential eBook, “The ultimate guide to conducting market research” .

With this front-to-back guide, you’ll discover the latest strategies and best practices that are defining effective market research. Learn about practical insights and real-world applications that are demonstrating the value of research in driving business growth and innovation.

Related resources

Selection bias 11 min read, systematic random sampling 15 min read, convenience sampling 18 min read, probability sampling 8 min read, non-probability sampling 17 min read, stratified random sampling 12 min read, simple random sampling 9 min read, request demo.

Ready to learn more about Qualtrics?

- Skip to main content

- Skip to primary sidebar

- Skip to footer

- QuestionPro

- Solutions Industries Gaming Automotive Sports and events Education Government Travel & Hospitality Financial Services Healthcare Cannabis Technology Use Case NPS+ Communities Audience Contactless surveys Mobile LivePolls Member Experience GDPR Positive People Science 360 Feedback Surveys

- Resources Blog eBooks Survey Templates Case Studies Training Help center

Home Audience

Sample Size Determination: Definition, Formula, and Example

Are you ready to survey your research target? Research surveys help you gain insights from your target audience. The data you collect gives you insights to meet customer needs, leading to increased sales and customer loyalty. Sample size calculation and determination are imperative to the researcher to determine the right number of respondents, keeping in mind the research study’s quality.

So, how should you do the sample size determination? How do you know who should get your survey? How do you decide on the number of the target audience?

Sending out too many surveys can be expensive without giving you a definitive advantage over a smaller sample. But if you send out too few, you won’t have enough data to draw accurate conclusions.

Knowing how to calculate and determine the appropriate sample size accurately can give you an edge over your competitors. Let’s take a look at what a good sample includes. Also, let’s look at the sample size calculation formula so you can determine the perfect sample size for your next survey.

What is Sample Size?

‘Sample size’ is a market research term used for defining the number of individuals included in conducting research. Researchers choose their sample based on demographics, such as age, gender questions , or physical location. It can be vague or specific.

For example, you may want to know what people within the 18-25 age range think of your product. Or, you may only require your sample to live in the United States, giving you a wide population range. The total number of individuals in a particular sample is the sample size.

What is sample size determination?

Sample size determination is the process of choosing the right number of observations or people from a larger group to use in a sample. The goal of figuring out the sample size is to ensure that the sample is big enough to give statistically valid results and accurate estimates of population parameters but small enough to be manageable and cost-effective.

In many research studies, getting information from every member of the population of interest is not possible or useful. Instead, researchers choose a sample of people or events that is representative of the whole to study. How accurate and precise the results are can depend a lot on the size of the sample.

Choosing the statistically significant sample size depends on a number of things, such as the size of the population, how precise you want your estimates to be, how confident you want to be in the results, how different the population is likely to be, and how much money and time you have for the study. Statistics are often used to figure out how big a sample should be for a certain type of study and research question.

Figuring out the sample size is important in ensuring that research findings and conclusions are valid and reliable.

Why do you need to determine the sample size?

Let’s say you are a market researcher in the US and want to send out a survey or questionnaire . The survey aims to understand your audience’s feelings toward a new cell phone you are about to launch. You want to know what people in the US think about the new product to predict the phone’s success or failure before launch.

Hypothetically, you choose the population of New York, which is 8.49 million. You use a sample size determination formula to select a sample of 500 individuals that fit into the consumer panel requirement. You can use the responses to help you determine how your audience will react to the new product.

However, determining a sample size requires more than just throwing your survey at as many people as possible. If your estimated sample sizes are too big, it could waste resources, time, and money. A sample size that’s too small doesn’t allow you to gain maximum insights, leading to inconclusive results.

LEARN ABOUT: Survey Sample Sizes

What are the terms used around the sample size?

Before we jump into sample size determination, let’s take a look at the terms you should know:

1. Population size:

Population size is how many people fit your demographic. For example, you want to get information on doctors residing in North America. Your population size is the total number of doctors in North America.

Don’t worry! Your population size doesn’t always have to be that big. Smaller population sizes can still give you accurate results as long as you know who you’re trying to represent.

2. Confidence level:

The confidence level tells you how sure you can be that your data is accurate. It is expressed as a percentage and aligned to the confidence interval. For example, if your confidence level is 90%, your results will most likely be 90% accurate.

3. The margin of error (confidence interval):

There’s no way to be 100% accurate when it comes to surveys. Confidence intervals tell you how far off from the population means you’re willing to allow your data to fall.

A margin of error describes how close you can reasonably expect a survey result to fall relative to the real population value. Remember, if you need help with this information, use our margin of error calculator .

4. Standard deviation:

Standard deviation is the measure of the dispersion of a data set from its mean. It measures the absolute variability of a distribution. The higher the dispersion or variability, the greater the standard deviation and the greater the magnitude of the deviation.

For example, you have already sent out your survey. How much variance do you expect in your responses? That variation in response is the standard deviation.

Sample size calculation formula – sample size determination

With all the necessary terms defined, it’s time to learn how to determine sample size using a sample calculation formula.

Your confidence level corresponds to a Z-score. This is a constant value needed for this equation. Here are the z-scores for the most common confidence levels:

90% – Z Score = 1.645

95% – Z Score = 1.96

99% – Z Score = 2.576

If you choose a different confidence level, various online tools can help you find your score.

Necessary Sample Size = (Z-score)2 * StdDev*(1-StdDev) / (margin of error)2

Here is an example of how the math works, assuming you chose a 90% confidence level, .6 standard deviation, and a margin of error (confidence interval) of +/- 4%.

((1.64)2 x .6(.6)) / (.04)2

( 2.68x .0.36) / .0016

.9648 / .0016

603 respondents are needed, and that becomes your sample size.

Free Sample Size Calculator

How is a sample size determined?

Determining the right sample size for your survey is one of the most common questions researchers ask when they begin a market research study. Luckily, sample size determination isn’t as hard to calculate as you might remember from an old high school statistics class.

Before calculating your sample size, ensure you have these things in place:

Goals and objectives:

What do you hope to do with the survey? Are you planning on projecting the results onto a whole demographic or population? Do you want to see what a specific group thinks? Are you trying to make a big decision or just setting a direction?

Calculating sample size is critical if you’re projecting your survey results on a larger population. You’ll want to make sure that it’s balanced and reflects the community as a whole. The sample size isn’t as critical if you’re trying to get a feel for preferences.

For example, you’re surveying homeowners across the US on the cost of cooling their homes in the summer. A homeowner in the South probably spends much more money cooling their home in the humid heat than someone in Denver, where the climate is dry and cool.

For the most accurate results, you’ll need to get responses from people in all US areas and environments. If you only collect responses from one extreme, such as the warm South, your results will be skewed.

Precision level:

How close do you want the survey results to mimic the true value if everyone responded? Again, if this survey determines how you’re going to spend millions of dollars, then your sample size determination should be exact.

The more accurate you need to be, the larger the sample you want to have, and the more your sample will have to represent the overall population. If your population is small, say, 200 people, you may want to survey the entire population rather than cut it down with a sample.

Confidence level:

Think of confidence from the perspective of risk. How much risk are you willing to take on? This is where your Confidence Interval numbers become important. How confident do you want to be — 98% confident, 95% confident?

Understand that the confidence percentage you choose greatly impacts the number of completions you’ll need for accuracy. This can increase the survey’s length and how many responses you need, which means increased costs for your survey.

Knowing the actual numbers and amounts behind percentages can help make more sense of your correct sample size needs vs. survey costs.

For example, you want to be 99% confident. After using the sample size determination formula, you find you need to collect an additional 1000 respondents.

This, in turn, means you’ll be paying for samples or keeping your survey running for an extra week or two. You have to determine if the increased accuracy is more important than the cost.

Population variability:

What variability exists in your population? In other words, how similar or different is the population?

If you are surveying consumers on a broad topic, you may have lots of variations. You’ll need a larger sample size to get the most accurate picture of the population.

However, if you’re surveying a population with similar characteristics, your variability will be less, and you can sample fewer people. More variability equals more samples, and less variability equals fewer samples. If you’re not sure, you can start with 50% variability.

Response rate:

You want everyone to respond to your survey. Unfortunately, every survey comes with targeted respondents who either never open the study or drop out halfway. Your response rate will depend on your population’s engagement with your product, service organization, or brand.

The higher the response rate, the higher your population’s engagement level. Your base sample size is the number of responses you must get for a successful survey.

Consider your audience:

Besides the variability within your population, you need to ensure your sample doesn’t include people who won’t benefit from the results. One of the biggest mistakes you can make in sample size determination is forgetting to consider your actual audience.

For example, you don’t want to send a survey asking about the quality of local apartment amenities to a group of homeowners.

Select your respondents

Focus on your survey’s objectives:

You may start with general demographics and characteristics, but can you narrow those characteristics down even more? Narrowing down your audience makes getting a more accurate result from a small sample size easier.

For example, you want to know how people will react to new automobile technology. Your current population includes anyone who owns a car in a particular market.

However, you know your target audience is people who drive cars that are less than five years old. You can remove anyone with an older vehicle from your sample because they’re unlikely to purchase your product.

Once you know what you hope to gain from your survey and what variables exist within your population, you can decide how to calculate sample size. Using the formula for determining sample size is a great starting point to get accurate results.

After calculating the sample size, you’ll want to find reliable customer survey software to help you accurately collect survey responses and turn them into analyzed reports.

LEARN MORE: Population vs Sample

In sample size determination, statistical analysis plan needs careful consideration of the level of significance, effect size, and sample size.

Researchers must reconcile statistical significance with practical and ethical factors like practicality and cost. A well-designed study with a sufficient sample size can improve the odds of obtaining statistically significant results.

To meet the goal of your survey, you may have to try a few methods to increase the response rate, such as:

- Increase the list of people who receive the survey.

- To reach a wider audience, use multiple distribution channels, such as SMS, website, and email surveys.

- Send reminders to survey participants to complete the survey.

- Offer incentives for completing the survey, such as an entry into a prize drawing or a discount on the respondent’s next order.

- Consider your survey structure and find ways to simplify your questions. The less work someone has to do to complete the survey, the more likely they will finish it.

- Longer surveys tend to have lower response rates due to the length of time it takes to complete the survey. In this case, you can reduce the number of questions in your survey to increase responses.

QuestionPro’s sample size calculator makes it easy to find the right sample size for your research based on your desired level of confidence, your margin of error, and the size of the population.

FREE TRIAL LEARN MORE

Frequently Asked Questions (FAQ)

The four ways to determine sample size are: 1. Power analysis 2. Convenience sampling, 3. Random sampling , 4. Stratified sampling

The three factors that determine sample size are: 1. Effect size, 2. Level of significance 3. Power

Using statistical techniques like power analysis, the minimal detectable effect size, or the sample size formula while taking into account the study’s goals and practical limitations is the best way to calculate the sample size.

The sample size is important because it affects how precise and accurate the results of a study are and how well researchers can spot real effects or relationships between variables.

The sample size is the number of observations or study participants chosen to be representative of a larger group

MORE LIKE THIS

Data Information vs Insight: Essential differences

May 14, 2024

Pricing Analytics Software: Optimize Your Pricing Strategy

May 13, 2024

Relationship Marketing: What It Is, Examples & Top 7 Benefits

May 8, 2024

The Best Email Survey Tool to Boost Your Feedback Game

May 7, 2024

Other categories

- Academic Research

- Artificial Intelligence

- Assessments

- Brand Awareness

- Case Studies

- Communities

- Consumer Insights

- Customer effort score

- Customer Engagement

- Customer Experience

- Customer Loyalty

- Customer Research

- Customer Satisfaction

- Employee Benefits

- Employee Engagement

- Employee Retention

- Friday Five

- General Data Protection Regulation

- Insights Hub

- Life@QuestionPro

- Market Research

- Mobile diaries

- Mobile Surveys

- New Features

- Online Communities

- Question Types

- Questionnaire

- QuestionPro Products

- Release Notes

- Research Tools and Apps

- Revenue at Risk

- Survey Templates

- Training Tips

- Uncategorized

- Video Learning Series

- What’s Coming Up

- Workforce Intelligence

Sample size estimation for case-crossover studies

Affiliation.

- 1 Office of Biostatistics, Division of Biometrics VII, Center for Drug Evaluation and Research, Food and Drug Administration, Silver Spring, Maryland.

- PMID: 30397907

- DOI: 10.1002/sim.8030

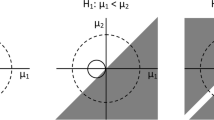

Case-crossover study designs are observational studies used to assess postmarket safety of medical products (eg, vaccines or drugs). As a case-crossover study is self-controlled, its advantages include better control for confounding because the design controls for any time-invariant measured and unmeasured confounding and potentially greater feasibility as only data from those experiencing an event (or cases) are required. However, self-matching also introduces correlation between case and control periods within a subject or matched unit. To estimate sample size in a case-crossover study, investigators currently use Dupont's formula (Biometrics 1988; 43:1157-1168), which was originally developed for a matched case-control study. This formula is relevant as it takes into account correlation in exposure between controls and cases, which are expected to be high in self-controlled studies. However, in our study, we show that Dupont's formula and other currently used methods to determine sample size for case-crossover studies may be inadequate. Specifically, these formulas tend to underestimate the true required sample size, determined through simulations, for a range of values in the parameter space. We present mathematical derivations to explain where some currently used methods fail and propose two new sample size estimation methods that provide a more accurate estimate of the true required sample size.

Keywords: case-crossover; correlation in exposure; matched case-control; sample size formula.

Published 2018. This article is a U.S. Government work and is in the public domain in the USA.

Publication types

- Research Support, U.S. Gov't, Non-P.H.S.

- Case-Control Studies

- Cross-Over Studies*

- Models, Statistical

- Observational Studies as Topic / methods

- Proportional Hazards Models

- Sample Size*

Sample size calculations for case-control studies

This R package can be used to calculate the required samples size for unconditional multivariate analyses of unmatched case-control studies. The sample sizes are for a scalar exposure effect, such as binary, ordinal or continuous exposures. The sample sizes can also be computed for scalar interaction effects. The analyses account for the effects of potential confounder variables that are also included in the multivariate logistic model.

- License Agreement

- samplesizelogisticcasecontrol (Link to CRAN)

- Gail MH, Haneuse S. Power and sample size for multivariate logistic modeling of unmatched case-control studies . Stat Methods Med Res 2017 Jan 1:962280217737157. doi: 10.1177/0962280217737157.

A new sample-size planning approach for person-specific VAR(1) studies: Predictive accuracy analysis

- Original Manuscript

- Published: 08 May 2024

Cite this article

- Jordan Revol ORCID: orcid.org/0000-0001-5511-3617 1 ,

- Ginette Lafit ORCID: orcid.org/0000-0002-8227-128X 2 &

- Eva Ceulemans ORCID: orcid.org/0000-0002-7611-4683 1

45 Accesses

8 Altmetric

Explore all metrics

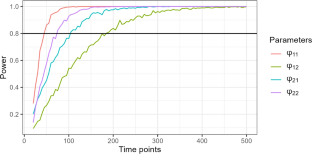

Researchers increasingly study short-term dynamic processes that evolve within single individuals using N = 1 studies. The processes of interest are typically captured by fitting a VAR(1) model to the resulting data. A crucial question is how to perform sample-size planning and thus decide on the number of measurement occasions that are needed. The most popular approach is to perform a power analysis, which focuses on detecting the effects of interest. We argue that performing sample-size planning based on out-of-sample predictive accuracy yields additional important information regarding potential overfitting of the model. Predictive accuracy quantifies how well the estimated VAR(1) model will allow predicting unseen data from the same individual. We propose a new simulation-based sample-size planning method called predictive accuracy analysis (PAA), and an associated Shiny app. This approach makes use of a novel predictive accuracy metric that accounts for the multivariate nature of the prediction problem. We showcase how the values of the different VAR(1) model parameters impact power and predictive accuracy-based sample-size recommendations using simulated data sets and real data applications. The range of recommended sample sizes is smaller for predictive accuracy analysis than for power analysis.

This is a preview of subscription content, log in via an institution to check access.

Access this article

Price includes VAT (Russian Federation)

Instant access to the full article PDF.

Rent this article via DeepDyve

Institutional subscriptions

Similar content being viewed by others

Estimating power in (generalized) linear mixed models: An open introduction and tutorial in R

Sample size planning for complex study designs: A tutorial for the mlpwr package

Bayesian updating: increasing sample size during the course of a study

We used the following packages: DataFrames (version 1.6.1), DataTables (version 0.1.0), DataAPI (version 1.1.0), CSV (version 0.10.12) to handle the data; LinearAlgebra (version 0.5.1), GLM (version 1.9.0), HypothesisTests (version 0.11.0), StatsBase (version 0.34.2) to estimate the model and extract the estimated parameters; Distributions (version 0.25.107) and Distances (version 0.10.11) to handle statistical distributions.

When generating (V)AR(1) time series, we have to use starting values, that is, the variable scores at the first time point. To remove the influence of these starting values, we removed the first 1000 time points (known as the burn-in phase).

Adolf, J. K., Voelkle, M. C., Brose, A., & Schmiedek, F. (2017). Capturing context-related change in emotional dynamics via fixed moderated time series analysis. Multivariate Behavioral Research, 52 (4), 499–531.

Ariens, S., Ceulemans, E., & Adolf, J. K. (2020). Time series analysis of intensive longitudinal data in psychosomatic research: A methodological overview. Journal of Psycho-somatic Research, 137 , 110191.

Article Google Scholar

Babyak, M. A. (2004). What you see may not be what you get: A brief, nontechnical introduction to overfitting in regression-type models. Psychosomatic Medicine, 66 (3), 411–421.

Bezanson, J., Karpinski, S., Shah, V., & Edelman, A. (2012). Julia: A fast dynamic language for technical computing.

Borsboom, D., & Cramer, A. O. (2013). Network analysis: An integrative approach to the structure of psychopathology. Annual Review of Clinical Psychology, 9 (1), 91–121.

Bulteel, K., Mestdagh, M., Tuerlinckx, F., & Ceulemans, E. (2018). VAR(1) based models do not always outpredict AR(1) models in typical psychological applications. Psychological Methods, 23 , 740–756.

Article PubMed Google Scholar

Bulteel, K., Tuerlinckx, F., Brose, A., & Ceulemans, E. (2018). Improved insight into and prediction of network dynamics by combining VAR and dimension reduction. Multivariate Behavioral Research, 53 (6), 853–875.

Button, K. S., Ioannidis, J. P. A., Mokrysz, C., Nosek, B. A., Flint, J., Robinson, E. S. J., & Munafó, M. R. (2013). Power failure: Why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience, 14 (5), 365–376.

Chang, W., Cheng, J., Allaire, JJ., Sievert, C., Schloerke, B., Xie, Y., Allen, J., McPherson, J., Dipert, A., & Borges, B. (2023). Shiny: Web application framework for R.

Cohen, J. (1992). Statistical power analysis. Current Directions in Psychological Science, 1 (3), 98–101.

De Haan-Rietdijk, S., Voelkle, M. C., Keijsers, L., & Hamaker, E. L. (2017). Discretevs. continuous-time modeling of unequally spaced experience sampling method data. Frontiers in Psychology, 8 , 1849.

Dejonckheere, E., Kalokerinos, E. K., Bastian, B., & Kuppens, P. (2019). Poor emotion regulation ability mediates the link between depressive symptoms and affective bipolarity. Cognition and Emotion, 33 (5), 1076–1083.

Dejonckheere, E., Mestdagh, M., Houben, M., Rutten, I., Sels, L., Kuppens, P., & Tuerlinckx, F. (2019). Complex affect dynamics add limited information to the prediction of psychological well-being. Nature Human Behaviour, 3 (5), 478–491.

Epskamp, S., van Borkulo, C. D., van der Veen, D. C., Servaas, M. N., Isvoranu, A.-M., Riese, H., & Cramer, A. O. J. (2018). Personalized network modeling in psychopathology: The importance of contemporaneous and temporal connections. Clinical Psycho-logical Science, 6 (3), 416–427.

Fisher, A. J., Reeves, J. W., Lawyer, G., Medaglia, J. D., & Rubel, J. A. (2017). Exploring the idiographic dynamics of mood and anxiety via network analysis. Journal of Abnormal Psychology, 126 (8), 1044–1056.

Green, P., & MacLeod, C. J. (2016). SIMR : An R package for power analysis of generalized linear mixed models by simulation. Methods in Ecology and Evolution, 7 (4), 493–498.

Hamaker, E. L., Asparouhov, T., Brose, A., Schmiedek, F., & Muthén, B. (2018). At the frontiers of modeling intensive longitudinal data: Dynamic structural equation models for the affective measurements from the COGITO study. Multivariate Behavioral Research, 53 (6), 820–841.

Hamaker, E. L., Ceulemans, E., Grasman, R. P. P. P., & Tuerlinckx, F. (2015). Modeling affect dynamics: State of the art and future challenges. Emotion Review, 7 (4), 316–322.

Hamaker, E. L., & Wichers, M. (2017). No time like the present: Discovering the hidden dynamics in intensive longitudinal data. Current Directions in Psychological Science, 26 (1), 10–15.

Hamaker, E. L., Zhang, Z., & Van Der Maas, H. L. J. (2009). Using threshold autoregressive models to study dyadic interactions. Psychometrika, 74 (4), 727.

Hastie, T., Tibshirani, R., & Friedman, J. (2013). The Elements of Statistical Learning: Data Mining, Inference, and Prediction . New York, NY: Springer.

Google Scholar

Heininga, V. E., Dejonckheere, E., Houben, M., Obbels, J., Sienaert, P., Leroy, B., van Roy, J., & Kuppens, P. (2019). The dynamical signature of anhedonia in major depressive disorder: Positive emotion dynamics, reactivity, and recovery. BMC Psychiatry, 19 (1), 59.

Article PubMed PubMed Central Google Scholar

Jongerling, J., Laurenceau, J.-P., & Hamaker, E. L. (2015). A multilevel AR(1) model: Allowing for inter-individual differences in trait-scores, inertia, and innovation variance. Multivariate Behavioral Research, 50 (3), 334–349.

Kirtley, O. J. (2022). Advancing credibility in longitudinal research by implementing open science practices: Opportunities, practical examples, and challenges. Infant and Child Development, 31 (1).

Krone, T., Albers, C. J., Kuppens, P., & Timmerman, M. E. (2018). A multivariate statistical model for emotion dynamics. Emotion, 18 , 739–754.

Kuppens, P. (2015). It’s about time: A special section on affect dynamics. Emotion Review, 7 (4), 297–300.

Kuppens, P., Allen, N. B., & Sheeber, L. B. (2010). Emotional inertia and psychological maladjustment. Psychological Science, 21 (7), 984–991.

Kuppens, P., Champagne, D., & Tuerlinckx, F. (2012). The dynamic interplay between appraisal and core affect in daily life. Frontiers in Psychology, 3 .

Kuppens, P., & Verduyn, P. (2017). Emotion dynamics. Current Opinion in Psychology, 17 , 22–26.

Lafit, G., Adolf, J. K., Dejonckheere, E., Myin-Germeys, I., Viechtbauer, W., & Ceulemans, E. (2021). Selection of the number of participants in intensive longitudinal studies: A user-friendly shiny app and tutorial for performing power analysis in multilevel regression models that account for temporal dependencies. Advances in Methods and Practices in Psychological Science, 4 (1), 251524592097873.

Lafit, G., Meers, K., & Ceulemans, E. (2022). A systematic study into the factors that affect the predictive accuracy of multilevel VAR(1) models. Psychometrika, 87 (2), 432–476.

Lafit, G., Revol, J., Cloos, L., Kuppens, P., & Ceulemans, E. (2023). The effect of different operationalizations of affect and preprocessing choices on power-based sample size recommendations in intensive longitudinal research .

Lafit, G., Sels, L., Adolf, J. K., Loeys, T., & Ceulemans, E. (2022b). PowerLAPIM: An application to conduct power analysis for linear and quadratic longitudinal actor–partner interdependence models in intensive longitudinal dyadic designs. Journal of Social and Personal Relationships , page 02654075221080128.

Lakens, D. (2022). Sample size justification. Collabra. Psychology, 8 (1), 33267.

Lane, S. P., & Hennes, E. P. (2018). Power struggles: Estimating sample size for multilevel relationships research. Journal of Social and Personal Relationships, 35 (1), 7–31.

Larson, R. & Csikszentmihalyi, M. (2014). The Experience Sampling Method, pages 21–34. Springer Netherlands, Dordrecht.

Liu, S. & Zhou, D. J. (2023). Using cross-validation methods to select time series models: Promises and pitfalls. British Journal of Mathematical and Statistical Psychology , page bmsp.12330.

Loossens, T., Dejonckheere, E., Tuerlinckx, F., & Verdonck, S. (2021). Informing VAR(1) with qualitative dynamical features improves predictive accuracy. Psychological Methods, 26 (6), 635–659.

Lütkepohl, H. (2005). New Introduction to Multiple Time Series Analysis . Berlin Heidelberg: Springer.

Mansueto, A. C., Wiers, R. W., van Weert, J. C. M., Schouten, B. C., & Epskamp, S. (2022). Investigating the feasibility of idiographic network models. Psychological Methods .

Marriott, F. H. C., & Pope, J. A. (1954). Bias in the estimation of autocorrelations. Biometrika, 41 (3/4), 390.

Munafó, M. R., Nosek, B. A., Bishop, D. V. M., Button, K. S., Chambers, C. D., Percie du Sert, N., Simonsohn, U., Wagenmakers, E.-J., Ware, J. J., & Ioannidis, J. P. A. (2017). A manifesto for reproducible science. Nature Human Behaviour, 1 (1), 0021.

Myin-Germeys, I., & Kuppens, P. (Eds.). (2021). The Open Handbook of Experience Sampling Methodology: A Step-by-Step Guide to Designing, Conducting, and Analyzing ESM Studies . Leuven: Center for Research on Experience Sampling and Ambulatory Methods.

Pe, M. L., Brose, A., Gotlib, I. H., & Kuppens, P. (2016). Affective updating ability and stressful events interact to prospectively predict increases in depressive symptoms over time. Emotion, 16 (1), 73–82.

Pe, M. L., Kircanski, K., Thompson, R. J., Bringmann, L. F., Tuerlinckx, F., Mestdagh, M., Mata, J., Jaeggi, S. M., Buschkuehl, M., Jonides, J., Kuppens, P., & Gotlib, I. H. (2015). Emotion-network density in major depressive disorder. Clinical Psychological Science, 3 (2), 292–300.

Phillips, P. C. B. (1995). Fully modified least squares and vector autoregression. Econo-metrica, 63 (5), 1023.

Provenzano, J., Fossati, P., Dejonckheere, E., Verduyn, P., & Kuppens, P. (2021). In exibly sustained negative affect and rumination independently link default mode network efficiency to subclinical depressive symptoms. Journal of Affective Disorders, 293 , 347–354.

Rosseel, Y. (2012). lavaan: An R package for structural equation modeling. Journal of Statistical Software, 48 (2), 1–36.

Schuurman, N. K., & Hamaker, E. L. (2019). Measurement error and person-specific reliability in multilevel autoregressive modeling. Psychological Methods, 24 (1), 70–91.

Sels, L., Ceulemans, E., & Kuppens, P. (2017). Partner-expected affect: How you feel now is predicted by how your partner thought you felt before. Emotion, 17 (7), 1066–1077.

Tong, H., & Lim, K. S. (1980). Threshold autoregression, limit cycles and cyclical data. Journal of the Royal Statistical Society: Series B (Methodological), 42 (3), 245–268.

Trafimow, D. (2022). Generalizing across auxiliary, statistical, and inferential assumptions. Journal for the Theory of Social Behaviour, 52 (1), 37–48.

Trull, T. J., & Ebner-Priemer, U. W. (2020). Ambulatory assessment in psychopathology research: A review of recommended reporting guidelines and current practices. Journal of Abnormal Psychology, 129 (1), 56–63.

Vanhasbroeck, N., Ariens, S., Tuerlinckx, F., & Loossens, T. (2021). Computational Models for Affect Dynamics. In C. E. Waugh & P. Kuppens (Eds.), Affect Dynamics (pp. 213–260). Cham: Springer International Publishing.

Chapter Google Scholar

Vanhasbroeck, N., Loossens, T., Anarat, N., Ariens, S., Vanpaemel, W., Moors, A., & Tuerlinckx, F. (2022). Stimulus-driven affective change: Evaluating computational models of affect dynamics in conjunction with input. Affective Science, 3 (3), 559–576.

Yarkoni, T., & Westfall, J. (2017). Choosing prediction over explanation in psychology: Lessons from machine learning. Perspectives on Psychological Science, 12 (6), 1100–1122.

Zhang, Y., Revol, J., Lafit, G., Ernst, A., Razum, J., Ceulemans, E., & Bringmann, L. (2023). Sample size optimization for person-specific temporal networks using power analysis and predictive accuracy analysis. Manuscript in preparation.

Download references

The research presented in this article was supported by research grants from the Fund for Scientific Research-Flanders (FWO; Project No. G0C9821N) and from the Research Council of KU Leuven (C14/23/062; iBOF/21/090) awarded to E. Ceulemans.

Author information

Authors and affiliations.

Research Group of Quantitative Psychology and Individual Differences, KU Leuven, Leuven, Belgium

Jordan Revol & Eva Ceulemans

Methodology of Educational Sciences Research Group, KU Leuven, Leuven, Belgium

Ginette Lafit

You can also search for this author in PubMed Google Scholar

Contributions

The authors made the following contributions. Jordan Revol: Conceptualization, Formal Analysis, Methodology, Visualization, Software, Writing - Original Draft Preparation, Review & Editing; Ginette Lafit: Conceptualization, Methodology, Supervision, Writing - Original Draft Preparation, Review & Editing. Eva Ceulemans: Conceptualization, Methodology, Funding acquisition, Supervision, Writing - Original Draft Preparation, Review & Editing.

Corresponding author

Correspondence to Jordan Revol .

Ethics declarations

Conflict of interest.

The authors declare that there are no conflicts of interest with respect to the authorship or the publication of this article.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

Reprints and permissions

About this article

Revol, J., Lafit, G. & Ceulemans, E. A new sample-size planning approach for person-specific VAR(1) studies: Predictive accuracy analysis. Behav Res (2024). https://doi.org/10.3758/s13428-024-02413-4

Download citation

Accepted : 28 March 2024

Published : 08 May 2024

DOI : https://doi.org/10.3758/s13428-024-02413-4

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Predictive accuracy

- Sample-size planning method

- Power analysis

- Monte Carlo simulation

- Intensive longitudinal designs

- Autoregressive models

- Find a journal

- Publish with us

- Track your research

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- BMC Med Res Methodol

Sample size requirements for case-control study designs

Michael d edwardes.

1 Division of Clinical Epidemiology, Royal Victoria Hospital, Montreal, Quebec, Canada

Associated Data

Published formulas for case-control designs provide sample sizes required to determine that a given disease-exposure odds ratio is significantly different from one, adjusting for a potential confounder and possible interaction.

The formulas are extended from one control per case to F controls per case and adjusted for a potential multi-category confounder in unmatched or matched designs. Interactive FORTRAN programs are described which compute the formulas. The effect of potential disease-exposure-confounder interaction may be explored.

Conclusions

Software is now available for computing adjusted sample sizes for case-control designs.

Breslow and Day [ 1 ] and Smith and Day [ 2 ] provide asymptotic formulas for the computation of case-control sample sizes required for odds ratios, unadjusted or adjusted for a confounder [ 1 ] and for stratified matched designs [ 2 ]. The notation we use is their notation. Their formulas are extended here to include more than one control per case. The formulas for stratified matched were deduced from applying the approach of Breslow and Day [ 1 ] (pages 305–6) to Table 7 of [ 2 ]. Modification of the formulas for specified interactions [ 1 , 3 ] is also shown. These formulas are based on the logarithm of the odds ratio, for which the normal approximation is more accurate than for the exposure difference, so these formulas are more accurate than the exposure difference formula that is given in the majority of general methods references [ 4 , 5 ].

Two conversational FORTRAN programs, DAYSMITH and DESIGN, compute the formulas. They were submitted to STATLIB for non-commercial distribution a few years ago, and are obtained with an e-mail message such as "send design.exe from general" to http://[email protected] . The programs produce a table of numbers of cases and controls required for a variety of specifications of Type I and Type II error, adjusted for the confounder, unadjusted, and adjusted for stratified matching, with the strata being the levels of the confounder. The two programs have different input requirements. Program DAYSMITH asks for exactly the items required for the Smith and Day formulas. Program DESIGN accepts alternative input that is converted in the program to the items required for the same formulas. The formulas used are shown in Appendix 1.

The input to program DAYSMITH

The sample sizes computed are for the detection of a given disease-exposure odds ratio, that is, the sample sizes at which a certain statistical test will reject the null hypothesis that the odds ratio is one. The input items are as follows:

R E = the odds ratio to be detected (typically a minimum value),

S = 1 or 2 for one-sided or two-sided type I error,

F = the number of controls per case,

P = the control population exposure probability, and

I = an indicator to request interaction adjustment.

Roughly speaking, interaction in statistics corresponds to effect modification in epidemiology. By not selecting an interaction adjustment, we effectively assume that the disease-exposure odds ratio does not differ across confounder levels. Interaction is discussed further below.

The number of confounder levels, denoted K is asked for next. If K = 1, unadjusted sample sizes only are computed, and no other input is required. Program DESIGN is identical to this point. For most applications, no confounder adjustment is required and so the program returns unadjusted sample sizes and is finished after a 1 is entered for K. The unadjusted formula [ 1 ] is more accurate than the usual unadjusted formulas [ 4 , 5 ], and may therefore produce different sample sizes than those.

If K > 1, one of the levels of the confounder is taken to be a reference level, and is referred to as level one. The order of the levels is otherwise immaterial. The input required next is three numbers for each of the K –1 remaining levels, p 1 i , p 2 i , and R Ci , i = 2,..., K , which are

p 1 i = Pr ( C i | E ) = among the exposed population, the proportion at level i of the confounder,

R Ci = the disease-confounder odds ratio (with confounder level i versus level 1).

For the reference level, we set R c 1 = 1 for the formulas that follow. We compute

Input for program DESIGN

Whereas DAYSMITH asks for the same input as requested in the original references [ 1 - 3 ], we found that alternative input made more sense for our initial applications [ 6 , 7 ], so a second program was written. The input for DESIGN is the same as for DAYSMITH up to the point after which the number of levels of the confounder, K , is asked for.

Again, one of the levels of the confounder is taken to be a reference level, and is referred to as level one. The input that is required next is one number for the reference level, r i , and then three (four when interaction is included) numbers for each of the K –1 remaining levels, r i , p i , and R Ci , i = 2,..., K , which are

r i = Pr ( E | C i ) = the probability of exposure at level i of the confounder,

p i = Pr ( C i ) = the probability of being in level i of the confounder, and

R Ci = the odds ratio of disease and confounder level i (versus level 1).

For the reference level, we again set R Ci = 1.

From Bayes Theorem, we compute

p 1i =r i p i /P and

p 2i =(1 – r i )p i /(1 – P).

We have one more input item than is actually required, and that is used for a check, where we can use the fact that

What we actually do is check the sum

The sum Δ is supposed to be equal to one. If it is not one, then we re-define and report

unless they are negative. An alternative used in earlier versions was to compute

and replace

for j = 1,2 and i = 1,..., K. This is equivalent to replacing

i = 1,..., K , which is how the program used to report the change.

An example, adjusting for a confounder

The following example is one of several computations performed for a published research protocol for a study of the association of oral contraceptive (OC) use with cardiovascular risks, controlling for age group [ 6 ]. A related protocol [ 7 ] has smoking as a confounder.

The numbers entered for P , r i , p i , and R Ci , i = 2,..., K, are all taken from the Saskatchewan government medical database, which includes the entire population from which a case-control sample is to be taken. In many applications, such numbers are not available from a reliable source. In that case, one may try sets of alternative minimum and maximum numbers for a range of results. The maximum sample sizes obtained from such sensitivity analyses would be the conservative recommendation.

Both programs first request R E to I. For R E , the outcome of interest is hospitalisation due to certain cardiovascular risks. The exposure is a specific OC with 10% of the market share [ 7 ]. Since overall OC prevalence is 30%, then P = .03 for that specific OC. Using > to denote the cursor for computer entry, we type:

>2 2 3 .03 0

for R E , S, F, P and I, respectively, then press enter. We then receive the message:

Type the number of confounder levels, and <enter>. Type 1 if no confounder.

We enter 5 levels and press enter.

Now type in the population exposure probability for the reference level of the confounding variable.

This will be put at level 1, so it is Pr(E|C1)

The confounder levels are five age groups, and level 1 corresponds to the youngest age group 15–21, for which we enter the prevalence for a specific OC with 10% of the market share. We type .055 and press enter.

> .055

The reply is:

Now type in, for each of the other 4 level(s) of the confounding variable, Pr(E|Ci), Pr(Ci), and Rc(i), separated by at least one blank or <enter>, where Pr(E|Ci) = in the population at level i of the confounder, the proportion exposed, Pr(Ci) = the probability of being at level i, and Rc(i) = odds ratio of disease and confounder level i (versus level 1).

The following numbers are entered for age groups 22–26, 27–31, 22–39 and 40+:

> .038 .24 2

> .021 .2 8

> .008 .18 8

> .004 .15 28.5

Note that Rc(5) = R C 5 = 28.5, a very high value. That is to be expected if all older women are included. (For the final protocol [ 6 ], a cut-off was made at age 45.) When enter is pressed, we receive some confirmation of the input, and a message that the result is written to file design.out. That is, as currently written, the sample sizes and other output are not automatically shown on the screen, but are saved in "design.out" to be viewed directly there. Appendix 2 (Second attached file, app2.txt, a text file) shows the output from the preceding session, which includes a correction of the input values.

Looking at Appendix 2, we see unadjusted sample sizes, those adjusted for age in an unmatched study, and a third set of sample sizes for a matched case-control study. For our example [ 6 ], both unmatched and matched designs are considered. With the low value of P and the high value R C 5 , we see that a large difference in sample sizes required for either design may result. In most applications, however, the differences are not so dramatic.

Adjusting for a matching confounder

Epidemiological literature usually gives formulas for matching which are based on the strong assumption that all sources of extraneous variation among a case and its controls are accounted for [ 1 , 8 , 9 ]. A third program DESIGNM was written to compute such a formula (from [ 1 ], p.294), but DESIGNM does not adjust for a confounding variable, and that strong assumption of implicit matching is rarely justified in case-control studies, so this program was not made freely available. Software which compute sample sizes for conditional logistic regression, such as EGRET SIZ[ 10 ], are alternatives to DESIGNM, which is based on Miettinen's test of the Mantel-Haenszel odds ratio for matched case-control designs. The adjustment in DAYSMITH and DESIGN is for stratified matching [ 2 , 11 , 12 ], where matching is by confounders. This presumes that the eventual analysis will be unconditional [ 2 ] and will account for the stratification. Consequently, it is not required that F controls be linked with each case, only that the total number of controls be F times the total number of cases.

Interaction

The literature [ 1 , 3 , 13 , 15 ] discusses stratified analysis interaction adjustment only for confounders with K = 2. It is easy, however, to modify the formulas for multi-level interaction. Every occurrence of R E in the formulas (Appendix 1) is replaced by R E R Ij , where R Ij is the interaction factor corresponding to the j th level, j = 2,..., K. (For ∑', put R Ij inside the first sum.) We set R I 1 = 1.

For two confounder levels, R I 2 , which is R I in Smith and Day's notation [ 3 ], is the multiplicative factor by which the odds ratio for those exposed and in level 2 of the confounder is different from the odds ratio when there is confounder-exposure-disease interaction. For R Ij , contrast is between level j and the reference level (level one).

This adjustment was made available for sensitivity analysis; specifically, to explore how much the sample size result could change if the confounder were in fact an effect modifier. Nevertheless, the adjusted formulas have been used to determine sample size in the presence of gene-environment interaction [ 13 ].

The competitors to these programs are regression-based sample size programs, such as those in EGRET SIZ [ 10 ], which compute sample sizes required for unconditional logistic regression. The package nQuery [ 14 ] has an unconditional logistic regression option, but is not set up for case-control designs. These may be useful for continuous exposures, and make sense when the final analysis is intended to be such a regression, rather than a stratified analysis, such as a Mantel-Haenszel test, which our programs correspond to. We are unaware of any generally available competitor for stratified analysis.

In a series of papers on sample-size estimation to detect gene-environment interaction, which is a controversial role for sample-size formulas, comparisons have been made between regression based approaches and the stratified analysis approach [ 13 , 15 ]. One solution is even to consider a case-only design [ 16 ]. EGRET SIZ provides no guidance for interaction adjustment, but it probably could be used for that purpose.

When there is more than one confounder, we define one super-confounder, where each category corresponds to a sub-category. For example, if age, with 5 categories, and smoking, with 2 categories, are both confounders, then we define one super-confounder with 10 = 5 × 2 categories. The estimates of r i , p i , and R Ci , i = 2, ...,10, then all have to take age and smoking into account jointly. As the number of confounders and the size of K increases, regression-based sample size programs become more advantageous, since information is not required for every sub-category.

The current programs yield results for 80% and 90% power, but versions are available for alternative powers, from 60% to 95%. A new version may print to the screen, if users want that option, and ask whether sample sizes for a specific power and Type I error are required.

The programs described are for two levels of disease (case vs. control) and of exposure. For several levels of exposure or disease, measures are available which correspond to odds ratios, risk ratios and risk differences [ 17 ], and it is not difficult to compute sample size formulas for these. If there is some demand, software to do those calculations may be created.

The Breslow-Day-Smith formulas which we extend utilize the classical method, based on testing. A more modern approach is that based on a confidence interval for the odds ratio [ 18 ], which may eventually become a program option. A Bayesian approach seems most suited for the sample size problem, although some issues need to be resolved [ 19 ]. Although not yet written, a Bayesian solution will soon be formulated for case-control designs.

Competing interests

none declared

Pre-publication history

The pre-publication history for this paper can be accessed here:

http://www.biomedcentral.com/1471-2288/1/11/prepub

Supplementary Material

Appendix 1 - Shows the formulas utilized by DESIGN and DAYSMITH. Appendix 2 - Shows output from the DESIGN session described in the main text.

Acknowledgement

The author is supported by an Équipe grant from the FRSQ (Fonds de la recherche en santé du Québec). I appreciate the input of Eric Johnson, Sholom Wacholder and Jesse Berlin.

- Breslow NE, Day NE. Statistical Methods in Cancer Research, Vol 2: The Design and Analysis of Cohort Studies, IARC Scientific Publications No 82, International Agency of Research on Cancer, Lyon, France, 1987. pp. 305–306. [ PubMed ]

- Smith PG, Day NE. Matching and confounding in the design and analysis of epidemiological case-control studies. Perspectives in Medical Statistics, JF Bithell, R Coppi, eds London: Academic Press, 1987. pp. 39–64.

- Smith PG, Day NE. The design of case-control studies: the influence of confounding and interaction effects. International Journal of Epidemiology, 1984; 13(3) :356–365. [ PubMed ] [ Google Scholar ]

- Fleiss JL. Statistical Methods for Rates and Proportions, 2nd Edition, Wiley: New York, 1981.

- Schlesselman JJ. Case-Control Studies: design, conduct, analysis, Oxford University Press: New York, 1982.

- Suissa S, Hemmelgarn B, Spitzer WO, Brophy J, Collet JP, Côté R, Downey W, Edouard L, LeClerc J, Paltiel O. The Saskatchewan oral contraceptive cohort study of oral contraceptive use and cardiovascular risks. Pharmacoepidemiology and Drug Safety, 1993; 2 :33–49. [ Google Scholar ]

- Spitzer WO, Thorogood M, Heinemann L. Tri-national case-control study of oral contraceptives and health. Pharmacoepidemiology and Drug Safety, 1993; 2 :21–31. [ PubMed ] [ Google Scholar ]

- Parker RA, Bregman DJ. Sample size for individually matched case-control studies. Biometrics, 1986; 42 :919–926. [ PubMed ] [ Google Scholar ]

- Ejigou A. Power and sample size for matched case-control studies. Biometrics, 1996; 52 :925–933. [ Google Scholar ]

- EGRET. Cytel Software Corporation: Cambridge, MA, 1997. http://www.cytel.com (SIZ is a separate module).