AI Literature Review Generator

Generate high-quality literature reviews fast with AI.

- Academic Research: Create a literature review for your thesis, dissertation, or research paper.

- Professional Research: Conduct a literature review for a project, report, or proposal at work.

- Content Creation: Write a literature review for a blog post, article, or book.

- Personal Research: Conduct a literature review to deepen your understanding of a topic of interest.

New & Trending Tools

In-Cite AI Reference Generator, Legal Text Refiner, Job Search AI Assistant.

Revolutionize Your Research with Jenni AI

Literature Review Generator

Welcome to Jenni AI, the ultimate tool for researchers and students. Our AI Literature Review Generator is designed to assist you in creating comprehensive, high-quality literature reviews, enhancing your academic and research endeavors. Say goodbye to writer's block and hello to seamless, efficient literature review creation.

Loved by over 1 million academics

Endorsed by Academics from Leading Institutions

Join the Community of Scholars Who Trust Jenni AI

Elevate Your Research Toolkit

Discover the Game-Changing Features of Jenni AI for Literature Reviews

Advanced AI Algorithms

Jenni AI utilizes cutting-edge AI technology to analyze and suggest relevant literature, helping you stay on top of current research trends.

Get started

Idea Generation

Overcome writer's block with AI-generated prompts and ideas that align with your research topic, helping to expand and deepen your review.

Citation Assistance

Get help with proper citation formats to maintain academic integrity and attribute sources correctly.

Our Pledge to Academic Integrity

At Jenni AI, we are deeply committed to the principles of academic integrity. We understand the importance of honesty, transparency, and ethical conduct in the academic community. Our tool is designed not just to assist in your research, but to do so in a way that respects and upholds these fundamental values.

How it Works

Start by creating your account on Jenni AI. The sign-up process is quick and user-friendly.

Define Your Research Scope

Enter the topic of your literature review to guide Jenni AI’s focus.

Citation Guidance

Receive assistance in citing sources correctly, maintaining the academic standard.

Easy Export

Export your literature review to LaTeX, HTML, or .docx formats.

Interact with AI-Powered Suggestions

Use Jenni AI’s suggestions to structure your literature review, organizing it into coherent sections.

What Our Users Say

Discover how Jenni AI has made a difference in the lives of academics just like you

· Aug 26

I thought AI writing was useless. Then I found Jenni AI, the AI-powered assistant for academic writing. It turned out to be much more advanced than I ever could have imagined. Jenni AI = ChatGPT x 10.

Charlie Cuddy

@sonofgorkhali

· 23 Aug

Love this use of AI to assist with, not replace, writing! Keep crushing it @Davidjpark96 💪

Waqar Younas, PhD

@waqaryofficial

· 6 Apr

4/9 Jenni AI's Outline Builder is a game-changer for organizing your thoughts and structuring your content. Create detailed outlines effortlessly, ensuring your writing is clear and coherent. #OutlineBuilder #WritingTools #JenniAI

I started with Jenni-who & Jenni-what. But now I can't write without Jenni. I love Jenni AI and am amazed to see how far Jenni has come. Kudos to http://Jenni.AI team.

· 28 Jul

Jenni is perfect for writing research docs, SOPs, study projects presentations 👌🏽

Stéphane Prud'homme

http://jenni.ai is awesome and super useful! thanks to @Davidjpark96 and @whoisjenniai fyi @Phd_jeu @DoctoralStories @WriteThatPhD

Frequently asked questions

What exactly does Jenni AI do?

Is Jenni AI suitable for all academic disciplines?

Is there a trial period or a free version available?

How does Jenni AI help with writer's block?

Can Jenni AI write my literature review for me?

How often is the literature database updated in Jenni AI?

How user-friendly is Jenni AI for those not familiar with AI tools?

Jenni AI: Standing Out From the Competition

In a sea of online proofreaders, Jenni AI stands out. Here’s how we compare to other tools on the market:

| Feature | Jenni AI | Competitors |
| --- | --- | --- |
| Advanced AI-Powered Assistance | Uses state-of-the-art AI technology to provide relevant literature suggestions and structural guidance. | May rely on simpler algorithms, resulting in less dynamic or comprehensive support. |
| User-Friendly Interface | Designed for ease of use, making it accessible for users with varying levels of tech proficiency. | Interfaces can be complex or less intuitive, posing a challenge for some users. |
| Transparent and Flexible Pricing | Offers a free trial and clear, flexible pricing plans suitable for different needs. | Pricing structures can be opaque or inflexible, with fewer user options. |
| Unparalleled Customization | Offers highly personalized suggestions and adapts to your specific research needs over time. | Often provide generic suggestions that may not align closely with individual research topics. |
| Comprehensive Literature Access | Provides access to a vast and up-to-date range of academic literature, ensuring comprehensive research coverage. | Some may have limited access to current or diverse research materials, restricting the scope of literature reviews. |

Ready to Transform Your Research Process?

Don't wait to elevate your research. Sign up for Jenni AI today and discover a smarter, more efficient way to handle your academic literature reviews.

We generate robust evidence fast

What is Silvi.ai?

Silvi is an end-to-end screening and data extraction tool supporting Systematic Literature Review and Meta-analysis.

Silvi helps create systematic literature reviews and meta-analyses that follow Cochrane guidelines in much less time, giving a fast and easy overview. It supports the user through the full process, from literature search to data analysis. Silvi is directly connected with databases such as PubMed and ClinicalTrials.gov and is always updated with the latest published research. It also supports RIS files, making it possible to upload a search string from your favorite search engine (e.g., Ovid). Silvi has a tagging system that can be tailored to any project.

Silvi is transparent, meaning it documents and stores the choices (and the reasons behind them) the user makes. Whether publishing the results from the project in a journal, sending them to an authority, or collaborating on the project with several colleagues, transparency is optimal to create robust evidence.

Silvi is developed with the user experience in mind. The design is intuitive and easily available to new users. There is no need to become a super-user. However, if any questions should arise anyway, we have a series of super short, instructional videos to get back on track.

To see Silvi in use, watch our short introduction video.


Learn more about Silvi’s specifications here.

"I like that I can highlight key inclusions and exclusions which makes the screening process really quick - I went through 2000+ titles and abstracts in just a few hours"

Eishaan Kamta Bhargava

Consultant Paediatric ENT Surgeon, Sheffield Children's Hospital

"I really like how intuitive it is working with Silvi. I instantly felt like a superuser."

Henriette Kristensen

Senior Director, Ferring Pharmaceuticals

"The idea behind Silvi is great. Normally, I really dislike doing literature reviews, as they take up huge amounts of time. Silvi has made it so much easier! Thanks."

Claus Rehfeld

Senior Consultant, Nordic Healthcare Group

"AI has emerged as an indispensable tool for compiling evidence and conducting meta-analyses. Silvi.ai has proven to be the most comprehensive option I have explored, seamlessly integrating automated processes with the indispensable attributes of clarity and reproducibility essential for rigorous research practices."

Martin Södermark

M.Sc. Specialist in clinical adult psychology

Silvi.ai was founded in 2018 by Professor in Health Economic Evidence, Tove Holm-Larsen, and expert in Machine Learning, Rasmus Hvingelby. The idea for Silvi stemmed from their own research, and the need to conduct systematic literature reviews and meta-analyses faster.

The ideas behind Silvi were originally a component of a larger project. In 2016, Tove founded the group “Evidensbaseret Medicin 2.0” in collaboration with researchers from Ghent University, Technical University of Denmark, University of Copenhagen, and other experts. EBM 2.0 wanted to optimize evidence-based medicine to its highest potential using Big Data and Artificial Intelligence, but needed a highly skilled person within AI.

Around this time, Tove met Rasmus, who shared the same visions. Tove teamed up with Rasmus, and Silvi.ai was created.

Our story


Free Trial

No card details needed!

RAxter is now Enago Read! Enjoy the same licensing and pricing with enhanced capabilities. No action required for existing customers.

Your all in one AI-powered Reading Assistant

A Reading Space to Ideate, Create Knowledge, and Collaborate on Your Research

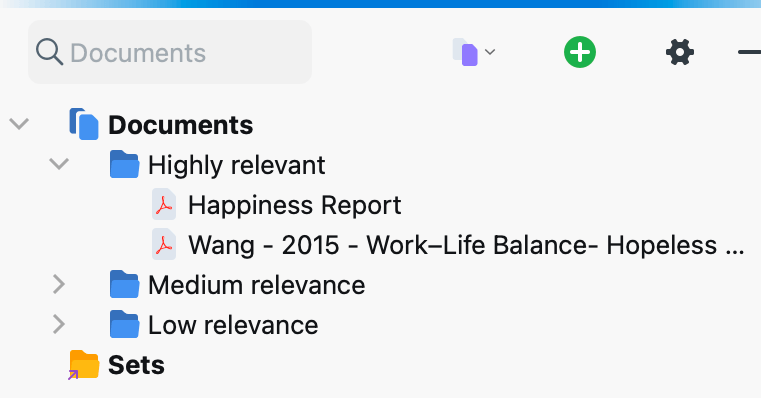

- Smartly organize your research

- Receive recommendations that cannot be ignored

- Collaborate with your team to read, discuss, and share knowledge

From Surface-Level Exploration to Critical Reading - All in one Place!

Fine-tune your literature search.

Our AI-powered reading assistant saves time spent on the exploration of relevant resources and allows you to focus more on reading.

Select phrases or specific sections and explore more research papers related to the core aspects of your selections. Pin the useful ones for future references.

Our platform brings you the latest research related to your research and project work.

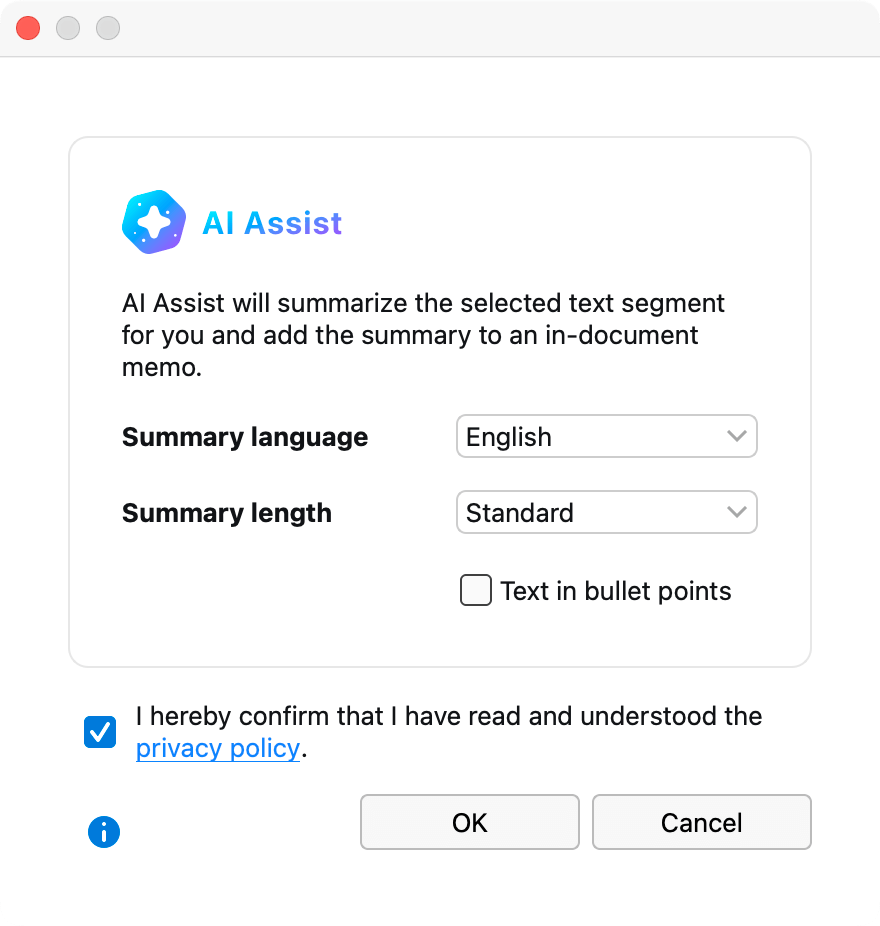

Speed up your literature review

Quickly generate a summary of key sections of any paper with our summarizer.

Make informed decisions about which papers are relevant, and where to invest your time in further reading.

Get key insights from the paper, quickly comprehend the paper’s unique approach, and recall the key points.

Bring order to your research projects

Organize your reading lists into different projects and maintain the context of your research.

Quickly sort items into collections and tag or filter them according to keywords and color codes.

Experience the power of sharing by finding all the shared literature in one place.

Decode papers effortlessly for faster comprehension

Highlight what is important so that you can retrieve it faster next time.

Select any text in the paper and ask Copilot to explain it to help you get a deeper understanding.

Ask questions and follow-ups from AI-powered Copilot.

Collaborate to read with your team, professors, or students

Share and discuss literature and drafts with your study group, colleagues, experts, and advisors. Recommend valuable resources and help each other for better understanding.

Work in shared projects efficiently and improve visibility within your study group or lab members.

Keep track of your team's progress by being constantly connected and engaging in active knowledge transfer by requesting full access to relevant papers and drafts.

Find papers from across the world's largest repositories

Testimonials

Privacy and security of your research data are integral to our mission.

Everything you add or create on Enago Read is private by default. It is visible if and when you share it with other users.

You can put Creative Commons license on original drafts to protect your IP. For shared files, Enago Read always maintains a copy in case of deletion by collaborators or revoked access.

We use state-of-the-art security protocols and algorithms including MD5 Encryption, SSL, and HTTPS to secure your data.


LitLLM: A Toolkit for Scientific Literature Review

Conducting literature reviews for scientific papers is essential for understanding research, its limitations, and building on existing work. It is a tedious task which makes an automatic literature review generator appealing. Unfortunately, many existing works that generate such reviews using Large Language Models (LLMs) have significant limitations. They tend to hallucinate—generate non-factual information—and ignore the latest research they have not been trained on. To address these limitations, we propose a toolkit that operates on Retrieval Augmented Generation (RAG) principles, specialized prompting and instructing techniques with the help of LLMs. Our system first initiates a web search to retrieve relevant papers by summarizing user-provided abstracts into keywords using an off-the-shelf LLM. Authors can enhance the search by supplementing it with relevant papers or keywords, contributing to a tailored retrieval process. Second, the system re-ranks the retrieved papers based on the user-provided abstract. Finally, the related work section is generated based on the re-ranked results and the abstract. There is a substantial reduction in time and effort for literature review compared to traditional methods, establishing our toolkit as an efficient alternative. Our open-source toolkit is accessible at https://github.com/shubhamagarwal92/LitLLM and Huggingface space ( https://huggingface.co/spaces/shubhamagarwal92/LitLLM ) with the video demo at https://youtu.be/E2ggOZBAFw0

Shubham Agarwal (1,2,3), Issam H. Laradji (1,4), Laurent Charlin (2,3,5), Christopher Pal (1,2,5)

(1) ServiceNow Research, (2) Mila - Quebec AI Institute, (3) HEC Montreal, Canada, (4) UBC, Vancouver, Canada, (5) Canada CIFAR AI Chair

Correspondence: [email protected]

1 Introduction

Scientists have long used NLP systems like search engines to find and retrieve relevant papers. Scholarly engines, including Google Scholar, Microsoft Academic Graph, and Semantic Scholar, provide additional tools and structure to help researchers further. Following recent advances in large language models (LLMs), a new set of systems provides even more advanced features. For example, Explainpaper (https://www.explainpaper.com/) helps explain the contents of papers, and Writefull (https://x.writefull.com/) helps with several writing tasks, including abstract and title generation. There are, of course, many other tasks where similar technologies could be helpful.

Systems that help researchers with literature reviews hold promising prospects. The literature review is a difficult task that can be decomposed into several sub-tasks, including retrieving relevant papers and generating a related works section that contextualizes the proposed work compared to the existing literature. It is also a task where factual correctness is essential. In that sense, it is a challenging task for current LLMs, which are known to hallucinate. Overall, creating tools to help researchers more rapidly identify, summarize and contextualize relevant prior work could significantly help the research community.

Recent works explore the task of literature review in parts or in full. For example, Lu et al. (2020) propose generating the related works section of a paper using its abstract and a list of (relevant) references. Researchers also look at the whole task and build systems using LLMs like ChatGPT for literature review (Haman and Školník, 2023; Huang and Tan, 2023). While these LLMs tend to generate high-quality text, they are prone to hallucinations (Athaluri et al., 2023). For example, the Galactica system was developed to reason about scientific knowledge (Taylor et al., 2022). While it outperforms contemporary models on various scientific tasks, it generates made-up content like inaccurate citations and imaginary papers (see, e.g., "What Meta Learned from Galactica").

As a step forward, we explore retrieval-augmented generation (RAG) to improve factual correctness (Lewis et al., 2020). The idea is to use the retrieval mechanism to obtain a list of relevant existing papers to cite, which provides contextual knowledge for LLM-based generation.

LitLLM is an interactive tool to help scientists write the literature review or related work section of a scientific paper starting from a user-provided abstract (see Figure 1 ). The specific objectives of this work are to create a system to help users navigate through research papers and write a literature review for a given paper or project. Our main contributions are:

- We provide a system based on a modular pipeline that conducts a literature review based on a user-proposed abstract.

- We use Retrieval Augmented Generation (RAG) techniques to condition the generated related work on factual content and avoid hallucinations using multiple search techniques.

- We incorporate sentence-based planning to promote controllable generation.

2 Related Work

LLMs have demonstrated significant capabilities in storing factual knowledge and achieving state-of-the-art results when fine-tuned on downstream Natural Language Processing (NLP) tasks (Lewis et al., 2020).

However, they also face challenges such as hallucination, outdated knowledge, and non-transparent, untraceable reasoning processes (Huang et al., 2023; Gao et al., 2023; Li et al., 2024). These limitations have motivated the development of Retrieval Augmented Generation (RAG), which incorporates knowledge from external databases to enhance the accuracy and credibility of the models, particularly for knowledge-intensive tasks (Gao et al., 2023). RAG has emerged as a promising solution to these challenges: it merges LLMs’ intrinsic knowledge with the vast, dynamic repositories of external databases (Gao et al., 2023). This approach allows for continuous knowledge updates and integration of domain-specific information in an attempt to limit the effect of outdated knowledge. The proposed work builds upon the advancements around RAG to provide a more efficient solution for academic writing.

On the other hand, there has been a notable emphasis on utilizing LLMs for tasks related to information retrieval and ranking (Zhu et al., 2023). Sun et al. (2023) leverage generative LLMs such as ChatGPT and GPT-4 for relevance ranking in information retrieval, demonstrating that these models can deliver results competitive with state-of-the-art supervised methods. Pradeep et al. (2023a, b) introduce different open-source LLMs for listwise zero-shot reranking, further motivating the use of LLMs for reranking in our work.

The exploration of large language models (LLMs) and their zero-shot abilities has been a significant focus in recent research. For instance, one study investigated using LLMs in recommender systems, demonstrating their promising zero-shot ranking abilities, although they struggled with the order of historical interactions and position bias (Hou et al., 2023). Another study improved the zero-shot learning abilities of LLMs through instruction tuning, which led to substantial improvements in performance on unseen tasks (Wei et al., 2021). A similar approach was taken to enhance the zero-shot reasoning abilities of LLMs, with the introduction of an autonomous agent to instruct the reasoning process, resulting in significant performance boosts (Crispino et al., 2023). The application of LLMs has also been explored in the context of natural language generation (NLG) assessment, with comparative assessment found to be superior to prompt scoring (Liusie et al., 2023). In the domain of Open-Domain Question Answering (ODQA), a Self-Prompting framework was proposed to utilize the massive knowledge stored in LLMs, leading to significant improvements over previous methods (Li et al., 2022). Prompt engineering has been identified as a key technique for enhancing the abilities of LLMs, with various strategies being explored (Shi et al., 2023). (This paragraph was generated using our platform, with some minor modifications, based on a slightly different version of our abstract.)

3 Pipeline

Figure 2 provides an overview of the pipeline. The user provides a draft of the abstract or a research idea. We first use an LLM to summarize the abstract into keywords that can be used as a query for search engines. Optionally, users can provide relevant keywords to improve search results. This query is passed to the search engine, which retrieves relevant papers with the corresponding information, such as abstracts and open-access PDF URLs. The retrieved abstracts, together with the original query abstract, are used as input to a second LLM, the re-ranker, which provides a listwise ranking of the papers based on their relevance to the query abstract. The re-ranked abstracts and the original query are finally passed to the LLM generator, which generates the related work section of the paper. Recently, Agarwal et al. (2024) showed that prompting LLMs with sentence plans reduces hallucinations in the generated output. These plans contain information about the number of sentences and the citation description on each line, providing control to meet author preferences. We include this sentence-based planning in the LLM generator as part of this system. In the following, we provide more details about each of the modules.
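The flow described above can be sketched as a chain of four pluggable stages. This is a minimal illustration, not the toolkit's actual code: the `Paper` class, the `run_pipeline` function, and the callable signatures are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Paper:
    """Hypothetical container for one retrieved paper."""
    title: str
    abstract: str


def run_pipeline(
    abstract: str,
    summarize: Callable[[str], str],
    search: Callable[[str], List[Paper]],
    rerank: Callable[[str, List[Paper]], List[Paper]],
    generate: Callable[[str, List[Paper]], str],
    user_keywords: Optional[str] = None,
) -> str:
    """Chain the stages: keywords -> search -> re-rank -> generate."""
    # Stage 1: an LLM condenses the abstract into a keyword query.
    query = summarize(abstract)
    # Optional user-supplied keywords are appended to the query.
    if user_keywords:
        query = f"{query} {user_keywords}"
    # Stage 2: the search engine returns candidate papers.
    candidates = search(query)
    # Stage 3: an LLM re-ranks candidates against the original abstract.
    ranked = rerank(abstract, candidates)
    # Stage 4: an LLM writes the related work section from the ranked list.
    return generate(abstract, ranked)
```

Passing each stage in as a callable mirrors the modularity the text describes: any stage can be swapped for a different LLM or search backend without touching the others.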

3.1 Paper Retrieval Module

In our toolkit, we retrieve relevant papers using the Semantic Scholar API. Other platforms could be used, but the S2 Platform is well-adapted to this use case. It is a large-scale academic corpus comprising 200M+ metadata records across multiple research areas, providing information about papers’ metadata, authors, paper embedding, etc. The Recommendations API also provides relevant papers similar to any seed paper. Figure 3 shows our system’s different strategies. We describe these three settings that we use to search for references:

- The user provides an abstract or a research idea (roughly the length of an abstract). We prompt an LLM (see Figure 4) to summarize this abstract into keywords, which can be used as a search query with most APIs.

- Users can optionally also provide keywords that can improve search results. This is similar (in spirit) to how researchers search for related work with a search engine. This is particularly useful in interdisciplinary research, when authors would like to include the latest research from a particular domain that is not well captured in the abstract.

- Lastly, any seed paper the user finds relevant enough to their idea could be used with the Recommendations API from search engines to provide other closely related papers.
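The keyword and seed-paper strategies above map onto two kinds of Semantic Scholar requests. The sketch below only builds the request URLs. The function names are hypothetical, and the endpoint paths and field names are assumptions that should be checked against the current Semantic Scholar API documentation before use.

```python
from urllib.parse import quote, urlencode

# Assumed endpoint roots; verify against the current API docs.
GRAPH_SEARCH = "https://api.semanticscholar.org/graph/v1/paper/search"
RECOMMEND = "https://api.semanticscholar.org/recommendations/v1/papers/forpaper/"


def keyword_search_url(query: str, limit: int = 20) -> str:
    """URL for a keyword search (LLM-generated and/or user-provided keywords)."""
    params = {
        "query": query,
        "limit": limit,
        # Fields requested per paper (assumed names).
        "fields": "title,abstract,year,citationCount,openAccessPdf",
    }
    return f"{GRAPH_SEARCH}?{urlencode(params)}"


def recommendations_url(seed_paper_id: str, limit: int = 20) -> str:
    """URL for papers similar to a seed paper the user considers relevant."""
    params = {"limit": limit, "fields": "title,abstract,year,citationCount"}
    return f"{RECOMMEND}{quote(seed_paper_id, safe='')}?{urlencode(params)}"
```

The resulting URLs can be fetched with any HTTP client. Keeping URL construction separate from the network call makes the query logic easy to inspect and test offline.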

3.2 Paper Re-Ranking Module

Recent efforts have explored the application of proprietary LLMs for ranking (Sun et al., 2023; Ma et al., 2023) as well as open-source models (Pradeep et al., 2023a, b). These approaches provide a combined list of passages directly as input to the model and retrieve the re-ordered ranking list (Zhang et al., 2023). Typically, a retriever first filters the top-k potential candidates, which are then re-ranked by an LLM to provide the final output list. In our work, we use the instructional permutation generation approach (Sun et al., 2023), where the model is prompted to generate a permutation of the papers in descending order of relevance to the user-provided abstract, thus producing an ordered list of preferences rather than intermediate scores. Figure 5 showcases the prompt we used for LLM-based re-ranking.
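A minimal sketch of permutation-style re-ranking follows. The prompt wording and the `[2] > [1] > [3]` answer format are assumptions for illustration, not the prompt shown in Figure 5, and both function names are hypothetical. The parser is deliberately defensive, since an LLM may repeat, omit, or invent identifiers.

```python
import re
from typing import List


def build_rerank_prompt(query_abstract: str, abstracts: List[str]) -> str:
    """List candidates as [1]..[n] and ask for a ranking, not scores."""
    lines = [
        "Rank the following papers by relevance to the query abstract.",
        "Answer only with identifiers in descending order of relevance, "
        "e.g. [2] > [1] > [3].",
        "",
        f"Query abstract: {query_abstract}",
        "",
    ]
    for i, text in enumerate(abstracts, start=1):
        lines.append(f"[{i}] {text}")
    return "\n".join(lines)


def parse_permutation(response: str, num_papers: int) -> List[int]:
    """Return 0-based paper indices in ranked order."""
    order: List[int] = []
    for match in re.findall(r"\[(\d+)\]", response):
        idx = int(match) - 1
        # Skip out-of-range identifiers and repeated ones.
        if 0 <= idx < num_papers and idx not in order:
            order.append(idx)
    # Papers the model left out keep their original relative order at the end.
    missing = [i for i in range(num_papers) if i not in order]
    return order + missing
```

Appending omitted papers at the end keeps the output a full permutation, so downstream code never loses a retrieved candidate.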

3.3 Summary Generation Module

We explore two strategies for generation: (1) zero-shot generation and (2) plan-based generation, which relies on sentence plans for controllable generation. Both are described below.

3.3.1 Zero-shot generation

While LLMs can potentially search and generate relevant papers from their parametric memory and trained data, they are prone to hallucinating and generating non-factual content. Retrieval augmented generation, first introduced in Lewis et al. (2020) for knowledge-intensive tasks, addresses this by augmenting the generation model with an information retrieval module. The RAG principles have been subsequently used for dialogue generation in task-oriented settings (Thulke et al., 2021), code generation (Liu et al., 2020; Parvez et al., 2021), and product review generation (Kim et al., 2020). RAG drastically reduces hallucinations in the generated output (Gao et al., 2023; Tonmoy et al., 2024).

Our work builds upon the principles of RAG, where we retrieve the relevant papers based on the query and augment them as context for generating the literature review. This also allows the system to be grounded in the retrieved information and be updated with the latest research where the training data limits the parametric knowledge of the LLM. Figure 6 shows our system’s prompt for effective Retrieval Augmented Generation (RAG).
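To make the grounding step concrete, here is a hedged sketch of assembling a zero-shot RAG prompt from the re-ranked abstracts. The wording is illustrative only and is not the prompt shown in Figure 6. The function name and the `(citation_key, abstract)` pair format are assumptions.

```python
from typing import List, Tuple


def build_rag_prompt(query_abstract: str, papers: List[Tuple[str, str]]) -> str:
    """Build a generation prompt whose context is the retrieved abstracts.

    `papers` is a list of (citation_key, abstract) pairs, already re-ranked.
    """
    # Number each reference so the model can cite by bracketed index.
    context = "\n\n".join(
        f"Reference [{i}] ({key}): {abstract}"
        for i, (key, abstract) in enumerate(papers, start=1)
    )
    return (
        "You are given the abstract of a paper and the abstracts of related papers.\n"
        "Write the related work section, citing only the references listed below "
        "by their bracketed number.\n\n"
        f"Main abstract: {query_abstract}\n\n"
        f"{context}\n\n"
        "Related work:"
    )
```

Restricting citations to the listed references is what ties the generated text to retrieved content rather than to the model's parametric memory.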

3.3.2 Plan based generation

To get the best results from LLMs, recent research has shifted its focus to designing better prompts (prompt engineering), including zero-shot chain-of-thought prompting (Kojima et al., 2022; Zhou et al., 2022), few-shot prompting (Brown et al., 2020), few-shot chain-of-thought prompting (Wei et al., 2022), and in-context prompting (Li and Liang, 2021; Qin and Eisner, 2021). However, the longer context of our problem statement (query paper and multiple relevant papers) hinders the application of these techniques for response generation.

We use sentence plan-based prompting techniques, drawing upon insights from the literature on traditional modular Natural Language Generation (NLG) pipelines with intermediary steps of sentence planning and surface realization (Reiter and Dale, 1997; Stent et al., 2004). These plans provide a sentence structure of the expected output, which guides the LLM in generating the literature review in a controllable fashion, as demonstrated in concurrent work (Agarwal et al., 2024). Figure 7 (in Appendix) shows the prompt for plan-based generation, with an example template as:

Please generate {num_sentences} sentences in {num_words} words. Cite {cite_x} at line {line_x}. Cite {cite_y} at line {line_y}.
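The template above can be filled in programmatically. The sketch below follows the template's wording. The function name, the `(citation, line)` pair format, and the range check are assumptions added for illustration.

```python
from typing import List, Tuple


def build_sentence_plan(
    num_sentences: int, num_words: int, citations: List[Tuple[str, int]]
) -> str:
    """Render a sentence plan: total length plus where each citation goes."""
    plan = f"Please generate {num_sentences} sentences in {num_words} words."
    for cite, line in citations:
        # A citation cannot be placed on a line that will not exist.
        if not 1 <= line <= num_sentences:
            raise ValueError(f"line {line} is outside 1..{num_sentences}")
        plan += f" Cite {cite} at line {line}."
    return plan


if __name__ == "__main__":
    print(build_sentence_plan(3, 80, [("@cite_1", 1), ("@cite_2", 3)]))
```

The plan string would then be appended to the generation prompt, so the author controls both the length of the section and the position of each citation.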

4 Implementation Details

We build our system using Gradio (Abid et al., 2019), which provides a convenient interface for quickly building system demos. Our user interface is also available as a HuggingFace Space (https://huggingface.co/spaces/shubhamagarwal92/LitLLM). We query the Semantic Scholar API, available through the Semantic Scholar Open Data Platform (Lo et al., 2020; Kinney et al., 2023), to search for relevant papers. Specifically, we use the Academic Graph (https://api.semanticscholar.org/api-docs/graph) and Recommendations (https://api.semanticscholar.org/api-docs/recommendations) API endpoints. In this work, we use the OpenAI API (https://platform.openai.com/docs/guides/gpt) to generate results with the GPT-3.5-turbo and GPT-4 models. At the same time, our modular pipeline allows using any LLM (proprietary or open-source) for the different components. We also allow the end user to sort the retrieved papers by relevance (default S2 results), citation count, or year.
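The three sort options mentioned above could be implemented along the following lines. This is a sketch under assumptions: the function name is hypothetical, papers are taken to be dictionaries with `citationCount` and `year` keys, and missing values are treated as zero.

```python
from typing import Any, Dict, List


def sort_papers(papers: List[Dict[str, Any]], by: str = "relevance") -> List[Dict[str, Any]]:
    """Order retrieved papers by relevance, citation count, or year."""
    if by == "relevance":
        # The search engine's own ordering is kept as the relevance order.
        return list(papers)
    if by == "citations":
        return sorted(papers, key=lambda p: p.get("citationCount") or 0, reverse=True)
    if by == "year":
        return sorted(papers, key=lambda p: p.get("year") or 0, reverse=True)
    raise ValueError(f"unknown sort key: {by}")
```

Because Python's `sorted` is stable, papers that tie on citation count or year stay in their original relevance order.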

5 User Experience

As a preliminary study, we provided access to our user interface to 5 researchers, who worked through the demo to write literature reviews and validate the system’s efficacy. We also provide an example in the demo with an abstract for a quick start. In particular, the users found the zero-shot generation to be more informative about the literature in general, and the plan-based generation to be more accessible and tailored to their research paper, as is also evident in our demo video (https://youtu.be/E2ggOZBAFw0). Table 1 (in Appendix) shows the output related work for a recent, randomly chosen paper (Li et al., 2023) with 4 cited papers. Our system generated an informative query, "Multimodal Research: Image-Text Model Interaction", and retrieved relevant papers, where the top recommended paper was also cited in the original paper. While zero-shot generation provides valuable insights into existing literature, plan-based generation produces a more succinct and readily usable literature review.

6 Conclusion and Future Work

In this work, we introduce and describe LitLLM, a system which can generate literature reviews in a few clicks from an abstract using off-the-shelf LLMs. This LLM-powered toolkit relies on RAG with a re-ranking strategy to generate a literature review with attribution. Our auxiliary tool allows researchers to actively search for related work based on a preliminary research idea, research proposal or even a full abstract. We present a modular pipeline that can be easily adapted to include the next generation of LLMs and other domains, such as news, by changing the source of retrieval information.

Given the growing impact of different LLM-based writing assistants, we are optimistic that our system may aid researchers in searching for relevant papers and improve the quality of automatically generated related work sections. While our system shows promise as a helpful research assistant, we believe that its usage should be disclosed to readers, and authors should take care to eliminate any possible hallucinations.

In the future, we would also like to explore academic search through multiple APIs, such as Google Scholar. This work only considered abstracts of the query paper and the retrieved papers, which creates a bottleneck in effective literature review generation. With the advent of longer context LLMs, we envision our system ingesting the whole paper (potentially leveraging an efficient LLM-based PDF parser) to provide a more relevant background of the related research. We consider our approach as an initial step for building intelligent research assistants which could help academicians through an interactive setting (Dwivedi-Yu et al., 2022).

- Abid et al. (2019) Abubakar Abid, Ali Abdalla, Ali Abid, Dawood Khan, Abdulrahman Alfozan, and James Zou. 2019. Gradio: Hassle-free sharing and testing of ml models in the wild. arXiv preprint arXiv:1906.02569.

- Agarwal et al. (2024) Shubham Agarwal, Issam Laradji, Laurent Charlin, and Christopher Pal. 2024. LLMs for Literature Review generation: Are we there yet? Under submission.

- Athaluri et al. (2023) Sai Anirudh Athaluri, Sandeep Varma Manthena, V S R Krishna Manoj Kesapragada, Vineel Yarlagadda, Tirth Dave, and Rama Tulasi Siri Duddumpudi. 2023. Exploring the boundaries of reality: Investigating the phenomenon of artificial intelligence hallucination in scientific writing through chatgpt references. Cureus, 15.

- Brown et al. (2020) Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. 2020. Language models are few-shot learners.

- Crispino et al. (2023) Nicholas Crispino, Kyle Montgomery, Fankun Zeng, Dawn Song, and Chenguang Wang. 2023. Agent instructs large language models to be general zero-shot reasoners. ArXiv, abs/2310.03710.

- Dwivedi-Yu et al. (2022) Jane Dwivedi-Yu, Timo Schick, Zhengbao Jiang, Maria Lomeli, Patrick Lewis, Gautier Izacard, Edouard Grave, Sebastian Riedel, and Fabio Petroni. 2022. Editeval: An instruction-based benchmark for text improvements. arXiv preprint arXiv:2209.13331.

- Gao et al. (2023) Yunfan Gao, Yun Xiong, Xinyu Gao, Kangxiang Jia, Jinliu Pan, Yuxi Bi, Yi Dai, Jiawei Sun, and Haofen Wang. 2023. Retrieval-augmented generation for large language models: A survey. arXiv preprint arXiv:2312.10997.

- Haman and Školník (2023) Michael Haman and Milan Školník. 2023. Using chatgpt to conduct a literature review. Accountability in Research, pages 1–3.

- Hou et al. (2023) Yupeng Hou, Junjie Zhang, Zihan Lin, Hongyu Lu, Ruobing Xie, Julian McAuley, and Wayne Xin Zhao. 2023. Large language models are zero-shot rankers for recommender systems. ArXiv, abs/2305.08845.

- Huang and Tan (2023) Jingshan Huang and Ming Tan. 2023. The role of chatgpt in scientific communication: writing better scientific review articles. American Journal of Cancer Research, 13(4):1148.

- Huang et al. (2023) Lei Huang, Weijiang Yu, Weitao Ma, Weihong Zhong, Zhangyin Feng, Haotian Wang, Qianglong Chen, Weihua Peng, Xiaocheng Feng, Bing Qin, et al. 2023. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. arXiv preprint arXiv:2311.05232.

- Kim et al. (2020) Jihyeok Kim, Seungtaek Choi, Reinald Kim Amplayo, and Seung-won Hwang. 2020. Retrieval-augmented controllable review generation. In Proceedings of the 28th International Conference on Computational Linguistics, pages 2284–2295.

- Kinney et al. (2023) Rodney Kinney, Chloe Anastasiades, Russell Authur, Iz Beltagy, Jonathan Bragg, Alexandra Buraczynski, Isabel Cachola, Stefan Candra, Yoganand Chandrasekhar, Arman Cohan, et al. 2023. The semantic scholar open data platform. arXiv preprint arXiv:2301.10140.

- Kojima et al. (2022) Takeshi Kojima, Shixiang Shane Gu, Machel Reid, Yutaka Matsuo, and Yusuke Iwasawa. 2022. Large language models are zero-shot reasoners. Advances in neural information processing systems, 35:22199–22213.

- Lewis et al. (2020) Patrick Lewis, Ethan Perez, Aleksandra Piktus, Fabio Petroni, Vladimir Karpukhin, Naman Goyal, Heinrich Küttler, Mike Lewis, Wen-tau Yih, Tim Rocktäschel, et al. 2020. Retrieval-augmented generation for knowledge-intensive nlp tasks. Advances in Neural Information Processing Systems, 33:9459–9474.

- Li et al. (2023) Hang Li, Jindong Gu, Rajat Koner, Sahand Sharifzadeh, and Volker Tresp. 2023. Do dall-e and flamingo understand each other? In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1999–2010.

- Li et al. (2022) Junlong Li, Zhuosheng Zhang, and Hai Zhao. 2022. Self-prompting large language models for zero-shot open-domain qa.

- Li et al. (2024) Junyi Li, Jie Chen, Ruiyang Ren, Xiaoxue Cheng, Wayne Xin Zhao, Jian-Yun Nie, and Ji-Rong Wen. 2024. The dawn after the dark: An empirical study on factuality hallucination in large language models. arXiv preprint arXiv:2401.03205.

- Li and Liang (2021) Xiang Lisa Li and Percy Liang. 2021. Prefix-tuning: Optimizing continuous prompts for generation. arXiv preprint arXiv:2101.00190.

- Liu et al. (2020) Shangqing Liu, Yu Chen, Xiaofei Xie, Jingkai Siow, and Yang Liu. 2020. Retrieval-augmented generation for code summarization via hybrid gnn. arXiv preprint arXiv:2006.05405.

- Liusie et al. (2023) Adian Liusie, Potsawee Manakul, and Mark John Francis Gales. 2023. Llm comparative assessment: Zero-shot nlg evaluation through pairwise comparisons using large language models.

- Lo et al. (2020) Kyle Lo, Lucy Lu Wang, Mark Neumann, Rodney Kinney, and Daniel Weld. 2020. S2ORC: The semantic scholar open research corpus. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 4969–4983, Online. Association for Computational Linguistics.

- Lu et al. (2020) Yao Lu, Yue Dong, and Laurent Charlin. 2020. Multi-XScience: A large-scale dataset for extreme multi-document summarization of scientific articles. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 8068–8074. Association for Computational Linguistics.

- Ma et al. (2023) Xueguang Ma, Xinyu Zhang, Ronak Pradeep, and Jimmy Lin. 2023. Zero-shot listwise document reranking with a large language model. arXiv preprint arXiv:2305.02156.

- Parvez et al. (2021) Md Rizwan Parvez, Wasi Uddin Ahmad, Saikat Chakraborty, Baishakhi Ray, and Kai-Wei Chang. 2021. Retrieval augmented code generation and summarization. arXiv preprint arXiv:2108.11601.

- Pradeep et al. (2023a) Ronak Pradeep, Sahel Sharifymoghaddam, and Jimmy Lin. 2023a. Rankvicuna: Zero-shot listwise document reranking with open-source large language models. arXiv preprint arXiv:2309.15088.

- Pradeep et al. (2023b) Ronak Pradeep, Sahel Sharifymoghaddam, and Jimmy Lin. 2023b. Rankzephyr: Effective and robust zero-shot listwise reranking is a breeze! arXiv preprint arXiv:2312.02724.

- Qin and Eisner (2021) Guanghui Qin and Jason Eisner. 2021. Learning how to ask: Querying LMs with mixtures of soft prompts. arXiv preprint arXiv:2104.06599.

- Qu et al. (2021) Leigang Qu, Meng Liu, Jianlong Wu, Zan Gao, and Liqiang Nie. 2021. Dynamic modality interaction modeling for image-text retrieval. Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval.

- Reiter and Dale (1997) Ehud Reiter and Robert Dale. 1997. Building applied natural language generation systems. Natural Language Engineering, 3(1):57–87.

- Shi et al. (2023) Fobo Shi, Peijun Qing, D. Yang, Nan Wang, Youbo Lei, H. Lu, and Xiaodong Lin. 2023. Prompt space optimizing few-shot reasoning success with large language models. ArXiv, abs/2306.03799.

- Srinivasan et al. (2021) Krishna Srinivasan, Karthik Raman, Jiecao Chen, Michael Bendersky, and Marc Najork. 2021. Wit: Wikipedia-based image text dataset for multimodal multilingual machine learning. Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval.

- Stent et al. (2004) Amanda Stent, Rashmi Prasad, and Marilyn Walker. 2004. Trainable sentence planning for complex information presentations in spoken dialog systems. In Proceedings of the 42nd Annual Meeting of the Association for Computational Linguistics (ACL-04), pages 79–86, Barcelona, Spain.

- Sun et al. (2023) Weiwei Sun, Lingyong Yan, Xinyu Ma, Pengjie Ren, Dawei Yin, and Zhaochun Ren. 2023. Is chatgpt good at search? investigating large language models as re-ranking agent. arXiv preprint arXiv:2304.09542.

- Taylor et al. (2022) Ross Taylor, Marcin Kardas, Guillem Cucurull, Thomas Scialom, Anthony Hartshorn, Elvis Saravia, Andrew Poulton, Viktor Kerkez, and Robert Stojnic. 2022. Galactica: A large language model for science. arXiv preprint arXiv:2211.09085.

- Thulke et al. (2021) David Thulke, Nico Daheim, Christian Dugast, and Hermann Ney. 2021. Efficient retrieval augmented generation from unstructured knowledge for task-oriented dialog. arXiv preprint arXiv:2102.04643.

- Tonmoy et al. (2024) SM Tonmoy, SM Zaman, Vinija Jain, Anku Rani, Vipula Rawte, Aman Chadha, and Amitava Das. 2024. A comprehensive survey of hallucination mitigation techniques in large language models. arXiv preprint arXiv:2401.01313.

- Wei et al. (2021) Jason Wei, Maarten Bosma, Vincent Zhao, Kelvin Guu, Adams Wei Yu, Brian Lester, Nan Du, Andrew M. Dai, and Quoc V. Le. 2021. Finetuned language models are zero-shot learners. ArXiv, abs/2109.01652.

- Wei et al. (2022) Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed H Chi, Quoc V Le, Denny Zhou, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. In Advances in Neural Information Processing Systems.

- Yu et al. (2022) Jiahui Yu, Zirui Wang, Vijay Vasudevan, Legg Yeung, Mojtaba Seyedhosseini, and Yonghui Wu. 2022. Coca: Contrastive captioners are image-text foundation models. Trans. Mach. Learn. Res., 2022.

- Zhang et al. (2023) Xinyu Zhang, Sebastian Hofstätter, Patrick Lewis, Raphael Tang, and Jimmy Lin. 2023. Rank-without-gpt: Building gpt-independent listwise rerankers on open-source large language models. arXiv preprint arXiv:2312.02969.

- Zhao et al. (2022) Zijia Zhao, Longteng Guo, Xingjian He, Shuai Shao, Zehuan Yuan, and Jing Liu. 2022. Mamo: Fine-grained vision-language representations learning with masked multimodal modeling. Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval.

- Zhou et al. (2022) Yongchao Zhou, Andrei Ioan Muresanu, Ziwen Han, Keiran Paster, Silviu Pitis, Harris Chan, and Jimmy Ba. 2022. Large language models are human-level prompt engineers. arXiv preprint arXiv:2211.01910.

- Zhu et al. (2023) Yutao Zhu, Huaying Yuan, Shuting Wang, Jiongnan Liu, Wenhan Liu, Chenlong Deng, Zhicheng Dou, and Ji-Rong Wen. 2023. Large language models for information retrieval: A survey. arXiv preprint arXiv:2308.07107.

In the following, we provide snippets of code to retrieve results from the Semantic Scholar API for both recommendation and query-based search:
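The snippets themselves did not survive in this copy of the text. As a stand-in, here is a minimal sketch (not the authors' code) of both kinds of request, assuming the public Semantic Scholar endpoints `graph/v1/paper/search` (query-based search) and `recommendations/v1/papers/forpaper/{paper_id}` (recommendation). The function names, the chosen `fields`, and the default limits are illustrative assumptions.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Base URLs of the public Semantic Scholar APIs (assumed current).
GRAPH_API = "https://api.semanticscholar.org/graph/v1"
RECS_API = "https://api.semanticscholar.org/recommendations/v1"
FIELDS = "title,abstract,year,citationCount"  # illustrative field selection


def search_url(query: str, limit: int = 10) -> str:
    """Build the URL for a query-based (keyword) paper search."""
    params = urllib.parse.urlencode({"query": query, "limit": limit, "fields": FIELDS})
    return f"{GRAPH_API}/paper/search?{params}"


def recommendation_url(paper_id: str, limit: int = 10) -> str:
    """Build the URL that asks for papers similar to one seed paper."""
    params = urllib.parse.urlencode({"limit": limit, "fields": FIELDS})
    seed = urllib.parse.quote(paper_id, safe=":")  # keep prefixes such as "arXiv:"
    return f"{RECS_API}/papers/forpaper/{seed}?{params}"


def fetch(url: str, api_key: Optional[str] = None) -> dict:
    """Send a GET request and decode the JSON body; the API key is optional."""
    headers = {"x-api-key": api_key} if api_key else {}
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Calling `fetch(search_url("literature review generation"))` should return the matching papers (expected under a `data` key), and `fetch(recommendation_url("arXiv:2301.10140"))` the recommended ones (expected under `recommendedPapers`); both key names should be checked against the current API documentation before relying on them.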

AI-Based Literature Review Tools

Selected AI-Based Literature Review Tools

Updates: See news or releases of AI (Beta) features across various academic research databases, including Web of Science, Scopus, EBSCO, ProQuest, OVID, Dimensions, JSTOR, Westlaw, and LexisNexis.

Disclaimer: TAMU libraries do not have subscription access to the AI-powered tools listed below the divider line. The guide serves solely as an informational resource. It is recommended that you assess these tools and their usage methodologies independently.

------------------------------------------------------------

SEMANTIC SCHOLAR

- SCIENTIFIC LITERATURE SEARCH ENGINE - finding semantically similar research papers.

- "A free, AI-powered research tool for scientific literature." <https://www.semanticscholar.org/>. A login is required to use all functions.

- Over 200 million papers from all fields of science; the data has also served as a wellspring for the development of other AI-driven tools.

The 4000+ results can be sorted by Fields of Study, Date Range, Author, Journals & Conferences

Save the papers in your Library folder. The Research Feeds will recommend similar papers based on the items saved.

Example - SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality [Note: these numbers were gathered when this guide was created]

- Total Citations: 22,438

- Highly Influential Citations: 2,001

- Background Citations: 6,109

- Methods Citations: 3,273

- Results Citations: 385

TLDRs (Too Long; Didn't Read): Try this example. Press the pen icon to reveal the highlighted key points. TLDRs "are super-short summaries of the main objective and results of a scientific paper generated using expert background knowledge and the latest GPT-3 style NLP techniques. This new feature is available in beta for nearly 60 million papers in computer science, biology, and medicine..." <https://www.semanticscholar.org/product/tldr>

- https://www.openread.academy/

- Institutionally accessed by Harvard, MIT, University of Oxford, Johns Hopkins, Stanford, Beijing University.

- AI-powered Academic Searching + Web Searching - Over 300 million papers and real-time web content.

- Every keyword search or AI quest will yield a synthesis report with citations. If you want to re-orient the search outcomes, just click on the Re-generate button and all citations will be refreshed accordingly. After that click on Follow-Up Questions to delve deeper into a particular area or subject.

- Use Paper Q&A to interact with a text directly, e.g. " What does this paper say about literature review ?"

- Click on the Translation to put a text or search results into another language.

- Upload a PDF document and let Paper Espresso read it for you and parse the content into an academic report format for easy screening: Background and context > Research objectives and hypotheses > Methodology > Results and findings > Discussion and interpretation > Contributions to the field > Structure and flow > Achievements and significance > Limitations and future work.

- AI-POWERED RESEARCH ASSISTANT - finding papers, filtering study types, automating research flow, brainstorming, summarizing and more.

- "Elicit is a research assistant using language models like GPT-3 to automate parts of researchers’ workflows. Currently, the main workflow in Elicit is Literature Review. If you ask a question, Elicit will show relevant papers and summaries of key information about those papers in an easy-to-use table." <https://elicit.org/faq#what-is-elicit.> Find answers from 175 million papers. FAQS

- Example - How do mental health interventions vary by age group? / Fish oil and depression. Results [login required]: (1) Summary of the top 4 papers, followed by Paper #1 - #4 with title, abstract, citations, DOI, and PDF. (2) Table view: Abstract / Interventions / Outcomes measured / Number of participants. (3) Relevant studies and citations. (4) Click on Search for Paper Information to find: Metadata about Sources (SJR etc.) > Population (age etc.) > Intervention (duration etc.) > Results (outcome, limitations etc.) > Methodology (detailed study design etc.). (5) Export as BIB or CSV.

- How to Search / Extract Data / List of Concept Search. Enter a research question. Workflow: Searching > Summarizing 8 papers > A summary of 4 top papers > Final answers. Each result shows its citation count, DOI, and a full-text link to the Semantic Scholar website for more information such as background citations, methods citations, related papers and more. List of Concepts search, e.g. adult learning motivation: the results present a list of related concepts. Extract data from a PDF file: upload a paper and let Elicit extract data for you.

- Export Results - Various ways to export results.

- How to Cite - Includes the elicit.org URL in the citation, for example: Ought; Elicit: The AI Research Assistant; https://elicit.org; accessed xxxx/xx/xx

CONSENSUS.APP

ACADEMIC SEARCH ENGINE- using AI to find insights in research papers.

"We are a search engine that is designed to accept research questions, find relevant answers within research papers, and synthesize the results using the same language model technology." <https://consensus.app/home/blog/maximize-your-consensus-experience-with-these-best-practices/>

- Example - Does the death penalty reduce the crime? / Fish oil and depression / (1) Extracted & aggregated findings from relevant papers. (2) Results may include AIMS, DESIGN, PARTICIPANTS, FINDINGS or other methodological or report components. (3) Summaries and Full Text

- How to Search: Direct questions - Does the death penalty reduce the crime? Relationship between two concepts - Fish oil and depression / Does X cause Y? Open-ended concepts - effects of immigration on local economics. Tips and search examples are available from Consensus' Best Practice.

- Synthesize (beta) / Consensus Meter: When the AI recognizes certain types of research questions, this functionality may be activated. It examines a selection of studies and provides a summary along with a Consensus Meter illustrating their collective agreement. Try this search: Is white rice linked to diabetes? The Consensus Meter reveals the following outcomes after analyzing 10 papers: 70% indicate a positive association, 20% suggest a possible connection, and 10% indicate no link.
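The Consensus Meter percentages quoted above are simple shares of the analyzed papers. The sketch below only illustrates that arithmetic; it is not how Consensus computes its meter, and the label names and the `consensus_meter` function are hypothetical.

```python
from collections import Counter


def consensus_meter(labels):
    """Return the percentage of papers that fall under each stance label."""
    if not labels:
        return {}
    counts = Counter(labels)
    return {label: round(100 * n / len(labels)) for label, n in counts.items()}


# Ten hypothetical per-paper stances matching the white rice example.
stances = ["yes"] * 7 + ["possibly"] * 2 + ["no"]
print(consensus_meter(stances))  # {'yes': 70, 'possibly': 20, 'no': 10}
```

With only 10 papers behind the meter, each paper moves a share by 10 percentage points, so the percentages are best read as a rough signal rather than a measurement.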

Prompt: "write me a paragraph about the impact of climate change on GDP with citations"

CITATIONS IN CONTEXT

Integrated with Research Solutions.

Over 1.2 billion Citation Statements and metadata from over 181 million papers.

How does it work? - "scite uses access to full-text articles and its deep learning model to tell you, for a given publication: - how many times it was cited by others - how it was cited by others by displaying the text where the citation happened from each citing paper - whether each citation offers supporting or contrasting evidence of the cited claims in the publication of interest, or simply mention it." <https://help.scite.ai/en-us/article/what-is-scite-1widqmr/>

EXAMPLE of seeing all citations and citation statements in one place

More information: Scite: A smart citation index that displays the context of citations and classifies their intent using deep learning

- GPT3.5 by OpenAI. Knowledge cutoff date is September 2021.

- Input/ Output length - ChatGPT-3.5 allows a maximum token limit of 4096 tokens. According to ChatGPT " On average, a token in English is roughly equivalent to 4 bytes or characters. English words are typically around 5 characters long. This means that, very roughly, you could fit around 800 to 1000 English words within 4096 tokens."

- According to ChatGPT, the generated responses are non-deterministic by default. So if you run the searches again and get slightly or very different results, it's likely due to this factor.

- ChatGPT may find non-existent references.

- According to this study < https://arxiv.org/ftp/arxiv/papers/2304/2304.06794.pdf > "ChatGPT cites the most-cited articles and journals, relying solely on Google Scholar's citation counts" within the field of environmental science.

- Example - "INTERVIEW WITH CHATGPT" as a Research Method & Teaching Tool. Some researchers have begun to use this approach to obtain their research data. Try this Google Scholar search link "interview with ChatGPT" or see the two articles below: (1) Chatting about ChatGPT: how may AI and GPT impact academia and libraries? BD Lund, T Wang - Library Hi Tech News, 2023. (2) An interview with ChatGPT: discussing artificial intelligence in teaching, research, and practice, G Scaringi, M Loche - 2023.
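The word-count estimate that ChatGPT gives in the Input/Output length bullet above can be checked with simple arithmetic. Using the same quoted rules of thumb (about 4 characters per token and about 5 characters per word), 4096 tokens come to roughly 2,700 to 3,300 words rather than 800 to 1000, which is a small reminder to verify what the model says about itself. The helper name and the optional one-character allowance for the space after each word are assumptions of this sketch.

```python
def words_that_fit(token_limit, chars_per_token=4, chars_per_word=5, count_space=True):
    """Rough number of English words that fit in a token budget."""
    total_chars = token_limit * chars_per_token
    # Optionally charge one extra character per word for the following space.
    chars_needed = chars_per_word + (1 if count_space else 0)
    return total_chars // chars_needed


print(words_that_fit(4096))                     # 2730
print(words_that_fit(4096, count_space=False))  # 3276
```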

- Increased risk of sudden infant death syndrome (SIDS)

- Increased risk of premature birth

- Increased risk of low birth weight

- Increased risk of respiratory problems in newborns

- Increased risk of respiratory problems in infants exposed to secondhand smoke

- Increased risk of developing asthma and other respiratory illnesses later in life for infants exposed to secondhand smoke

[Note: ChatGPT may generate non-existent references or false knowledge. To find out why Large Language Models hallucinate, check out this Wiki article: Hallucination (artificial intelligence) and this blog post - A Gentle Introduction to Hallucinations in Large Language Models by Adrian Tam]

- Infant death

- Neonatal mortality (referring specifically to deaths within the first 28 days of life)

- Perinatal mortality (referring to deaths occurring during the period from 22 completed weeks of gestation to 7 completed days after birth)

- Early childhood mortality (referring to deaths occurring within the first five years of life)

- Child mortality (referring to deaths occurring before the age of 18)

[Press the Regenerate button to get more.]

- Example - RELATED WORDS What are the related words of infant mortality? Neonatal mortality, Post-neonatal mortality, Stillbirths, Low birth weight, Malnutrition, Infectious diseases, Vaccination, Maternal health, Access to healthcare, Poverty, Social inequality, Sanitation, Hygiene, Water quality, Childbirth complications, Congenital abnormalities, Birth defects, Maternal age, Under-five mortality, Child mortality, Perinatal mortality, Preterm birth, Low birth weight, Sudden infant death syndrome (SIDS), Maternal mortality, Postnatal care, Malnutrition, Immunization, Access to healthcare, Clean water and sanitation, Congenital anomalies, Infant health disparities, Infant mortality rate (IMR), Infant survival.

(("Infant Death"[Mesh] OR "Sudden Infant Death Syndrome"[Mesh] OR "Infant Mortality"[Mesh] OR "Perinatal Mortality"[Mesh] OR "Neonatal Mortality"[Mesh] OR "Fetal Mortality"[Mesh] OR "Infant Death" OR "Sudden Infant Death Syndrome" OR "Infant Mortality" OR "Perinatal Mortality" OR "Neonatal Mortality" OR "Fetal Mortality") AND ("Risk Factors"[Mesh] OR "Etiology"[Mesh] OR "Prevention and Control"[Mesh] OR "Interventions"[Mesh] OR "Public Health"[Mesh] OR "Health Disparities"[Mesh] OR "Healthcare Disparities"[Mesh] OR "Poverty"[Mesh] OR "Malnutrition"[Mesh] OR "Infection"[Mesh] OR "Congenital Abnormalities"[Mesh] OR "Prematurity"[Mesh] OR "Low Birth Weight"[Mesh] OR "Maternal Education"[Mesh] OR "Maternal Health"[Mesh] OR "Risk Factors" OR "Etiology" OR "Prevention and Control" OR "Interventions" OR "Public Health" OR "Health Disparities" OR "Healthcare Disparities" OR "Poverty" OR "Malnutrition" OR "Infection" OR "Congenital Abnormalities" OR "Prematurity" OR "Low Birth Weight" OR "Maternal Education" OR "Maternal Health"))

Comments and Suggestions:

#1 - This search string may not work on all database platforms. For example, Medline (OVID) may not be able to run it, while Medline & CINAHL (EBSCO) can run it without any problems. PubMed will run it but will indicate which MeSH terms are not indexed; see PubMed's search History and Details under Advanced.

#2 - This is just one possible combination. You may need to adjust it based on your task and the databases you are using.

#3 - It might be easier if you break the task into 3 parts: ask for synonyms > ask for thesauri terms > and ask it to combine them.
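The combining step in suggestion #3 is mechanical once the synonyms and thesaurus terms are in hand. A minimal sketch, assuming the PubMed-style `[Mesh]` tag used in the string above; the function names are hypothetical, and the terms shown are a shortened subset of the example.

```python
def or_block(thesaurus_terms, keywords):
    """OR together tagged thesaurus terms and free-text keywords for one concept."""
    tagged = [f'"{term}"[Mesh]' for term in thesaurus_terms]
    free_text = [f'"{word}"' for word in keywords]
    return "(" + " OR ".join(tagged + free_text) + ")"


def and_blocks(*concept_blocks):
    """AND the concept blocks together into one search string."""
    return "(" + " AND ".join(concept_blocks) + ")"


outcome = or_block(["Infant Death", "Infant Mortality"], ["Infant Death", "Infant Mortality"])
factors = or_block(["Risk Factors"], ["Risk Factors", "Poverty"])
print(and_blocks(outcome, factors))
```

Keeping the term lists separate from the string makes it easier to drop terms a database does not index, or to swap the field tag when moving to another platform.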

Examples - DATABASE RECOMMENDATIONS

Health Science - Which databases to use to find articles on the EFFECTS OF MATERNAL SMOKING ON INFANT DEATH?

- PubMed: This is a database of biomedical literature from the National Library of Medicine. It includes articles from medical journals and other sources on a wide range of health-related topics.

- Embase: This is a database of biomedical literature that covers more international and pharmaceutical content than PubMed. It includes articles from medical journals and other sources on a wide range of health-related topics.

- Cochrane Library: This is a collection of databases that provides systematic reviews and meta-analyses of healthcare interventions, including interventions related to maternal smoking and infant death.

- Scopus: This is a multidisciplinary database that covers research from a wide range of fields, including health sciences. It includes articles from scientific journals, books, and conference proceedings.

- Web of Science: This is a database that covers research from a wide range of fields, including health sciences. It includes articles from scientific journals, books, and conference proceedings.

- CINAHL: This is a database of nursing and allied health literature, including articles on maternal smoking and infant death.

[Note: The response above was copied and pasted directly from ChatGPT. No editing was added.]

Education -- I am doing a meta-analysis on k-12 STEM education. What databases should I use?

- ERIC: This is a database of education research and information, including articles from journals, reports, and conference papers. It is a commonly used database for educational research.

- Education Source: This is a comprehensive database of education research and information, including articles from journals, books, and conference papers.

- Web of Science: This is a multidisciplinary database that covers research from a wide range of fields, including education. It includes articles from scientific journals, books, and conference proceedings.

- Scopus: This is another multidisciplinary database that covers research from a wide range of fields, including education. It includes articles from scientific journals, books, and conference proceedings.

- PsycINFO: This is a database of psychology research, including articles from journals, books, and dissertations. It can be useful for research on STEM education that includes psychological factors.

- Cochrane Library: This is a collection of databases that provides systematic reviews and meta-analyses of healthcare interventions including interventions related to education.

[Author's note: You could also ask for the GREY LITERATURE sources related to the topic above.]

Example: ACT-AS-AN-EXPERT PROMPT Here's an alternative way to consolidate all the questions into a single consultation by using this popular Act-As-An-Expert prompt, aka system message prompt: I am going to conduct a systematic review on games and mathematics related to K-12 education. I want you to act as a systematic review expert. I will ask you some questions related to my research topic and you advise me on how to do it.

[You may ask one question at a time] My first question is "which databases should I use?" My second question is "what are the relevant subject terms, descriptors and key words?" My third question is "how to do data extraction?" ..... , .... Please note that the above prompts are merely for illustrative purposes. Actual questions may contain more specific instructions. As an example, for the first question, could you provide guidance on how to identify relevant publications? Specifically, can you provide a list of the primary databases and grey literature sources related to my topic?
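When the same consultation is run programmatically rather than in the chat window, the expert instruction is usually sent once as a system message and each question is appended as a user turn. The sketch below assumes the role/content message layout that several chat APIs accept; it makes no API call, and both function names are hypothetical.

```python
def expert_system_prompt(topic, expert_role):
    """Fill the Act-As-An-Expert template with a topic and an expert role."""
    return (
        f"I am going to conduct a systematic review on {topic}. "
        f"I want you to act as a {expert_role}. "
        "I will ask you some questions related to my research topic "
        "and you advise me on how to do it."
    )


def build_messages(system_prompt, questions, answers=()):
    """Interleave the questions asked so far with the answers already received."""
    messages = [{"role": "system", "content": system_prompt}]
    for i, question in enumerate(questions):
        messages.append({"role": "user", "content": question})
        if i < len(answers):
            messages.append({"role": "assistant", "content": answers[i]})
    return messages


prompt = expert_system_prompt(
    "games and mathematics related to K-12 education", "systematic review expert"
)
conversation = build_messages(
    prompt,
    ["Which databases should I use?", "How to do data extraction?"],
    answers=["(the model's first reply goes here)"],
)
```

Asking one question per turn, as the guide suggests, keeps each answer tied to a single question and lets later questions build on earlier replies.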

Example - GREY LITERATURE

Please show me all the resources that offer grey literature for conducting research in the field of education

- ERIC (Education Resources Information Center): https://eric.ed.gov/

- OpenGrey: http://www.opengrey.eu/

- British Education Index: https://www.ebscohost.com/academic/british-education-index

- WorldCat: https://www.worldcat.org/

- ProQuest Dissertations & Theses Global: https://www.proquest.com/products-services/pqdtglobal.html

- EThOS (Electronic Theses Online Service): https://ethos.bl.uk/

- Institutional Repositories of universities and academic institutions (search for specific institution repositories)

- American Educational Research Association (AERA): https://www.aera.net/

- National Education Association (NEA): https://www.nea.org/

- Association for Supervision and Curriculum Development (ASCD): http://www.ascd.org/

- RAND Corporation: https://www.rand.org/

- National Center for Education Research (NCER): https://ies.ed.gov/ncer/

- National Center for Education Statistics (NCES): https://nces.ed.gov/

- European Educational Research Association (EERA): https://www.eera-ecer.de/

- UNESCO International Institute for Educational Planning (IIEP): http://www.iiep.unesco.org/

- Organization for Economic Cooperation and Development (OECD): https://www.oecd.org/education/

- Education Development Center (EDC): https://www.edc.org/

- National Institute for Educational Policy Research (NIER) - Japan: https://www.nier.go.jp/

- Australian Council for Educational Research (ACER): https://www.acer.org/

- Canadian Education Association (CEA): https://www.cea-ace.ca/

My topic is about stem education. Which grey literature databases should I use?

- STEM Education Research Centre (SERC) Grey Literature Database

- National STEM Education Research Repository (NSERR)

- National Science Digital Library (NSDL)

- STEM Education Resource Center (SERC)

- GrayLit Network

- STEM Education Grey Literature Library

See other Comparison Examples under Bing AI.

Examples - ZERO SHOT, ONE SHOT & FEW SHOT PROMPTING for literature review See the examples on the left panel- How to Fine Tune Prompts

Bing Chat / Copilot

- Bing Chat has become Copilot.

- CONVERSATIONAL LLM 4.0 + SEARCH ENGINE. Connects to the Internet.

- Three conversation modes: More Creative, More Balanced or More Precise.

- Comparison - Prompt: My topic is about psychology. Which grey literature databases should I use? More Precise: PsycExtra, NIH Library and links to library guides, .gov and .org websites. More Balanced: PsycExtra and three main research guides that mention psychology grey literature sources. More Creative: PsycExtra, LILACS, and Worldcat + definition of grey literature + suggestions on searching for professional association websites, newsletters, magazines, standards and guidelines, and other sources that may contain relevant information for your topic.

- Some examples below were tested in Bing Chat only.

- Example - factors of infant mortality: Infant mortality is a term that refers to the death of infants before their first birthday. The leading causes of infant mortality include birth asphyxia, pneumonia, congenital malformations, term birth complications such as abnormal presentation of the fetus, umbilical cord prolapse, or prolonged labor, neonatal infection, diarrhea, malaria, measles, and malnutrition [1]. Many situational factors lead to infant mortality such as the pregnant woman’s level of education, environmental conditions, political infrastructure, and level of medical support [1]. In the United States, a primary determinant of infant mortality risk is infant birth weight with lower birth weights increasing the risk of infant mortality. The determinants of low birth weight include socioeconomic, psychological, behavioral and environmental factors [2].

- Example: PEER REVIEWED SOURCES. I need to find some peer reviewed articles about infant mortality? The answer includes 5 articles. The first one is “Causes of death and infant mortality rates among full-term births in the United States between 2010 and 2012: An observational study” by Neha Bairoliya and Günther Fink [1]. A click on "Tell me more about the first article" leads to this summary: "The first article is titled “Causes of death and infant mortality rates among full-term births in the United States between 2010 and 2012: An observational study” and was published in PLOS Medicine on March 20, 2018. The authors are Neha Bairoliya and Günther Fink. The study used linked birth and death records for the period 2010–2012 to identify the state- and cause-specific burden of infant mortality among full-term infants (born at 37–42 weeks of gestation) in the United States. The researchers found that the full-term infant mortality rate (FTIMR) was 2.2 per 1,000 live births overall and ranged between 1.29 (Connecticut) and 3.77 (Mississippi) at the state level. Sudden unexpected death in infancy (SUDI) accounted for 43% of FTIM; congenital malformations and perinatal conditions accounted for 31% and 11.3% of FTIM, respectively. The largest mortality differentials between states with good and states with poor FTIMR were found for SUDI, with particularly large risk differentials for deaths due to sudden infant death syndrome (SIDS) and suffocation."

More searches: (1) Please find five empirical studies focusing on the factors influencing adult learners engaged in online education. (2) Please locate five scholarly articles on stress management among college students site:pubmed.ncbi.nlm.nih.gov

The ERIC Thesaurus contains nearly 12,000 terms, including more than 4,500 descriptors and 7,000 synonyms [1]. You can use the term “Academic Achievement” or its synonyms such as “Educational Achievement”, “Student Achievement”, “Academic Performance”, “Academic Success”, “Learning Achievement” and more. [Note: The follow-up responses include some additional sources. See two below: https://www.powerthesaurus.org/academic_achievement https://eric.ed.gov/?ti=Achievement+Gap including https://eric.ed.gov/?ti=Academic+Achievement]

My topic is about psychology. Which grey literature databases should I use?

ChatGPT PsycEXTRA : This database is produced by the American Psychological Association (APA) and includes grey literature such as conference papers, white papers, and government reports. OpenGrey : This database provides access to grey literature produced in Europe, including reports, theses, and conference papers Grey Literature Report : This database is produced by The New York Academy of Medicine and includes reports, conference papers, and other grey literature on health and biomedical topics, including psychology. ProQuest Dissertations and Theses Global : This database provides access to graduate-level research, including theses and dissertations related to psychology. Google Scholar: While not exclusively a grey literature database, Google Scholar can be a useful tool for finding conference papers and other grey literature related to psychology. National Technical Information Service (NTIS) : This database provides access to technical reports produced by U.S. government agencies, including those related to psychology. Bing AI - [asked in the Precise mode] For psychology, some recommended grey literature databases include PsycEXTRA and PsyArXiv . PsycEXTRA is a database of grey literature material relating to psychology, behavioral sciences and health. PsyArXiv is a psychology archive designed to facilitate rapid dissemination of psychological research. What other models have been developed based on SERVQUAL?

ChatGPT

- SERVPERF: This is a modified version of SERVQUAL that measures service performance instead of service quality. It was developed by Cronin and Taylor (1992) and is based on the same five dimensions as SERVQUAL.

- SERVPERF-GAP: This model, developed by Caruana and Ewing (2006), extends the SERVQUAL model to include three additional gaps that can occur when customers evaluate service quality.

- E-SERVQUAL: This model, developed by Parasuraman et al. (2005), adapts the SERVQUAL scale to measure the quality of online services.

- SERVCO: This is a modified version of SERVQUAL that includes a sixth dimension, "cost," in addition to the five original dimensions. It was developed by Sureshchandar et al. (2002) to address the importance of cost in service quality evaluation.

- SERVQ-HC: This is a modified version of SERVQUAL that is specifically designed for evaluating the quality of health care services. It was developed by Cronin et al. (2000) and includes 20 items across five dimensions.

Bing AI

- The Nordic model of service quality.

- The hierarchical model of service quality.

- The American Customer Satisfaction Index (ACSI).

Bing AI Sidebar - CHAT, THINK, & WRITE

- Examples of Using AI sidebar to Recap Google Scholar findings

- Chat - If you highlight a study in the search results of Google Scholar, the AI sidebar will ask whether you want to send the selected text to it. If you choose to do so, you can then select either EXPLAIN or EXPAND to learn more about the chosen article. EXPAND provides more detailed information. You can then click on any of the follow-up links to delve further into the selected article or topic. If the text of the chosen study is written in a foreign language, the AI sidebar can also provide an explanation of the text in English. Another option for extracting the key points from a selected article is to submit the URL of the PDF.

- Insights - Use the Insights button to get more ideas about the topic.

- Compose - Tell the Edge sidebar your topic and let its Compose tool generate a draft for you.

- Google Scholar Button - See also the Plugins and Extension page for how to perform a combined search using Google, Google Scholar, and ChatGPT.

Example: FINDING SIMILAR ARTICLES

Find similar articles like this one - [then paste in the abstract]

Note - Use this prompt with Bing chat since real-time web info is required.

Examples: SUMMARIZE + REFERENCE

Use a basic prompt like "Please summarize ..." or add a REFERENCE TEXT that provides the necessary information about what you want it to focus on or how the summary or resolution should look. The reference could be a quote, an excerpt, a partial sentence, a conversation, an abstract, a code snippet and so on.

Formula: Specify a role > assign a task > provide a reference text.

Example #1 - Scholarly Journal Quote

STEP 1 Prompt: As a research assistant specializing in renewable energy, your task is to locate comparable research studies based on the provided abstract from an article: "This paper discusses the perspective of renewable energy (wind, solar, wave and biomass) in the making of strategies for a sustainable development. Such strategies typically involve three major technological changes: energy savings on the demand side, efficiency improvements in the energy production, and replacement of fossil fuels by various sources of renewable energy."

Bing Chat then found 3 documents: the ScienceDirect paper where the abstract came from and two other documents from un.org and frontiersin.org respectively.

STEP 2 Prompt: Please synthesize the findings of the three studies provided above.

Bing Chat: "Sure! Here is a synthesis of the findings from the three studies:

All three studies emphasize the importance of renewable energy in achieving sustainable development and mitigating climate change. They all discuss various strategies and actions that can be taken to increase the use of renewable energy sources and reduce reliance on fossil fuels.

Henrik Lund’s paper discusses the perspective of renewable energy (wind, solar, wave and biomass) in the making of strategies for a sustainable development. Such strategies typically involve three major technological changes: energy savings on the demand side, efficiency improvements in the energy production, and .....

The United Nations outlines five critical actions the world needs to prioritize now to transform our energy systems and speed up the shift to renewable energy: Make renewable energy technology a global public good, Improve global access to components and raw materials......

“Sustainable Energy Transition for Renewable and Low Carbon Grid” discusses that a sustainable global electricity transition will entail increased use of renewable energy sources particularly wind and solar, nuclear energy as a low carbon energy source, electrification of transport and.......

In summary, these studies suggest that transitioning to renewable energy sources is crucial for sustainable development and mitigating climate change. This can be achieved through various strategies such as increasing energy efficiency, improving access to renewable technology and materials, leveling the playing field for renewables, ending fossil fuel subsidies, and redirecting investments towards renewables."

Example #2

You are an expert researcher, given the following article, please discuss its key findings. Reference: How Large Language Models are Transforming Machine-Paraphrased Plagiarism at https://arxiv.org/abs/2210.03568

This is another way to provide REFERENCE TEXT. As a side note, specifying a role or persona is important because it can affect the level of the responses obtained. Just imagine if the designated role were "You are a high school student...."

Example #3

Please read this article and summarize it for me - "Who Should I Trust: AI or Myself? Leveraging Human and AI Correctness Likelihood to Promote Appropriate Trust in AI-Assisted Decision-Making" and then find 5 similar studies and please also summarize each for me.
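The role > task > reference formula used in the examples above can be sketched as a small helper that assembles the three parts into one prompt. This is a minimal illustration, not a prescribed method: the function name `build_prompt` and the exact wording of the template are assumptions, and the resulting text is simply pasted into whichever chat tool you use.

```python
def build_prompt(role: str, task: str, reference: str) -> str:
    """Assemble a prompt as: specify a role > assign a task > provide a reference text.

    The template wording is an illustrative assumption, not a fixed standard.
    """
    parts = [
        f"You are {role.strip()}.",          # 1. specify a role
        task.strip(),                        # 2. assign a task
        f"Reference: {reference.strip()}",   # 3. provide a reference text
    ]
    return "\n".join(parts)


# Values taken from Example #2 above.
prompt = build_prompt(
    role="an expert researcher",
    task="Given the following article, please discuss its key findings.",
    reference=(
        "How Large Language Models are Transforming Machine-Paraphrased "
        "Plagiarism at https://arxiv.org/abs/2210.03568"
    ),
)
print(prompt)
```

Keeping the three parts as separate arguments makes it easy to swap the role (for example, "a high school student") and compare how the responses change.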

CONNECTED PAPERS

- RELATED STUDIES

- Uses visual graphs or other ways to show relevant studies. The database is connected to the Semantic Scholar Paper Corpus which has compiled hundreds of millions of published papers across many science and social science fields.

- See more details about how it works.

- Example - Search for SERVQUAL and then click on SELECT A PAPER TO BUILD THE GRAPH > The first paper was selected. Results:

(1) Origin paper - SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality + Connected papers with links to Connected Papers / PDF / DOI or Publisher's site / Semantic Scholar / Google Scholar.

(2) Graph showing the origin paper + connected papers with links to the major sources. See above.

(3) Links to Prior Works and Derivative Works.

See the detailed citations by Semantic Scholar on the origin SERVQUAL paper on the top of this page within Semantic Scholar.

- How to Search: Search by work title, or enter some keywords about a topic.

- Download / Save: Download your saved items in Bib format.

PAPER DIGEST

- SUMMARY & SYNTHESIS

- "Knowledge graph & natural language processing platform tailored for technology domain." <https://www.paperdigest.org/> Areas covered: technology, biology/health, all sciences areas, business, humanities/social sciences, patents and grants ...

- LITERATURE REVIEW - https://www.paperdigest.org/review/ Systematic Review - https://www.paperdigest.org/literature-review/

- SEARCH CONSOLE - https://www.paperdigest.org/search/ Conference Digest - NIPS conference papers ... Tech AI Tools: Literature Review | Literature Search | Question Answering | Text Summarization Expert AI Tools: Org AI | Expert search | Executive Search, Reviewer Search, Patent Lawyer Search...

Daily paper digest / Conference papers digest / Best paper digest / Topic tracking. In Account, enter the subject areas you are interested in. Daily Digest will then upload studies based on your interests.

RESEARCH RABBIT

- CITATION-BASED MAPPING: SIMILAR / EARLY / LATER WORKS

- "100s of millions of academic articles and covers more than 90%+ of materials that can be found in major databases used by academic institutions (such as Scopus, Web of Science, and others)." See its FAQs page. Search algorithms were borrowed from NIH and Semantic Scholar.

The default "Untitled Collection" will collect your search histories, based on which Research Rabbit will send you recommendations for three types of related results: Similar Works / Earlier Works / Later Works, viewable in graphs such as Network, Timeline, First Authors, etc.

Zotero integration: importing and exporting between these two apps.

- Example - SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality [Login required] Try it to see its Similar Works, Earlier Works and Later Works or other documents.

- Export Results - Findings can be exported in BibTeX, RIS or CSV format.

CITING GENERATIVE AI

- How to cite ChatGPT [APA] - https://apastyle.apa.org/blog/how-to-cite-chatgpt

- How to Cite Generative AI [MLA] - https://style.mla.org/citing-generative-ai/

- Citation Guide - Citing ChatGPT and Other Generative AI (University of Queensland, Australia)

- Last Updated: May 9, 2024 2:16 PM

- URL: https://tamu.libguides.com/c.php?g=1289555

Accelerate your dissertation literature review with AI

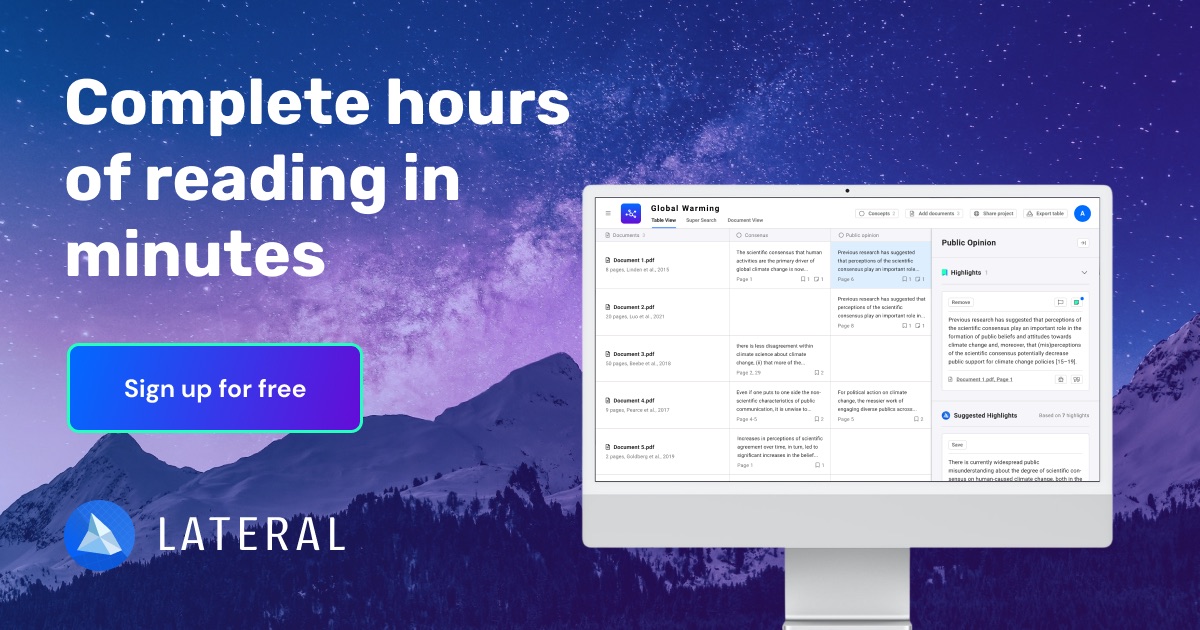

Become a lateral pioneer.

Get started for free and help craft the future of research.

Early access. No credit card required.

Introduction

Dissertation writing is part of being a graduate student. There are many different ways to organise your research, and several steps to this process. Typically, the literature review is an early chapter in the dissertation, providing an overview of the field of study. It should summarise relevant research papers and other materials in your field, with specific references.

To understand how to write a good literature review, we must first understand its purpose. The goals of a literature review are to place your dissertation topic in the context of existing work (this also allows you to acknowledge prior contributions, and avoid accusations of plagiarism), and to set you up to show you are making a new contribution to the field.

Since the work of reviewing literature is repetitive, many students find it tedious. While there are some traditional tools and techniques to help, covered below, they tend to be cumbersome and keyword-based. For this reason, we built a better tool for research and literature review, which I describe in the last section. You can see the Lateral tool in action, and how it makes the literature review a lot easier. To sign up to the tool, click here.

1. Different kinds of reading

We can divide the activity of reading for research into three different kinds:

- Exploratory reading, mostly done in the initial phase;

- Deep reading of highly informative sources; and

- Broad, targeted skim reading of large collections of books and articles, in order to find specific kinds of information you already know exist.

1.1. Exploratory reading

Initially, a research student will need to read widely in a new field to gain a fundamental understanding. In this early stage, the goal is to explore and digest the main ideas in existing research. Traditionally, this phase has been a manual process, but there is a new generation of digital tools to aid in getting a quick overview of your field, and more generally to organise your research. This stage can happen both before and after the research topic or question has been formulated. It is often unstructured and full of serendipitous ("happy accidental") discovery: the student's job is to absorb what they find, rather than to conduct a targeted search for particular information.

Put another way: You don’t know what you’re looking for ahead of time. By the end of this phase, you should be able to sketch a rough map of your field of study.

1.2. Narrow, deep reading

After the exploratory reading phase, you will be able to prioritise the information you read. Now comes the second phase: Deep, reflective reading. In this phase, your focus will narrow to a small number of highly relevant sources — perhaps one or two books, or a handful of articles — which you will read carefully, with the goal of fully understanding important concepts. This is a deliberative style of reading, often accompanied by reflective pauses and significant note taking. If the goal in the first phase was sketching a map of the globe, the goal in this second phase is to decide which cities interest you most, and map them out in colour and detail.

1.3. Broad, targeted reading