Nginx Modules

In this article, we will explore Nginx modules and how they extend the functionality of your Nginx web server. Modules let you add new features, improve performance, and customize the behavior of your server. Understanding the role and types of Nginx modules will help you optimize your server configuration and deliver an efficient web application.

What are NGINX Modules?

Nginx modules are components that expand the capabilities of your Nginx server. Each addresses a specific piece of functionality and is either compiled into the Nginx binary at build time or, in the case of dynamic modules, loaded with the load_module directive. Modules act as building blocks, enabling you to tailor Nginx to your requirements. Whether you need to enhance security, enable caching, or implement load balancing, Nginx modules provide the tools to do so.

Types of Nginx Modules

There are two main types of Nginx modules: core modules and third-party modules.

Popular Third-Party Modules for Nginx

A large ecosystem of third-party modules, developed by the community, extends Nginx with features such as content caching, authentication, rate limiting, and security hardening. Well-known examples include the Lua, Brotli, GeoIP2, and ModSecurity modules.

Here are some popular third-party Nginx modules:

Lua Module

The Lua module (lua-nginx-module, maintained by the OpenResty project) integrates the Lua scripting language into Nginx, allowing you to write flexible configurations and extensions using Lua scripts.

To configure the Nginx Lua module, follow the step-by-step guide in the article Install and Configure Nginx Lua Module.

Brotli Module

ngx_brotli is a module that enables Brotli compression support in Nginx. Brotli is a compression algorithm developed by Google that typically achieves better compression ratios than gzip, especially for text assets such as HTML, CSS, and JavaScript.

To configure the Nginx Brotli module, refer to the step-by-step guide in the article Install and Configure Nginx Brotli Module.
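As a rough sketch of what enabling Brotli can look like, assuming ngx_brotli was built as a pair of dynamic modules (the .so paths, document root, and compression level below are illustrative, not recommendations):

```nginx
# Assumption: the two ngx_brotli modules were built as dynamic modules.
load_module modules/ngx_http_brotli_filter_module.so;
load_module modules/ngx_http_brotli_static_module.so;

events {}

http {
    brotli            on;   # compress responses on the fly
    brotli_comp_level 5;    # 0-11; higher is smaller but slower
    brotli_types      text/plain text/css application/javascript application/json;
    brotli_static     on;   # serve pre-compressed .br files when they exist

    server {
        listen 80;
        root   /var/www/html;   # hypothetical document root
    }
}
```

Clients that do not send br in Accept-Encoding still receive uncompressed (or gzip) responses, so Brotli is usually enabled alongside gzip rather than instead of it.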

Redis Module

The Redis module integrates Nginx with Redis, a popular in-memory data store. It allows you to cache content, perform dynamic lookups, and leverage Redis’ key-value store within Nginx configurations.

To set up Redis with your Nginx server, follow the instructions provided in this guide.

Nginx Pagespeed Module

The Nginx Pagespeed module, developed by Google, optimizes web page delivery and performance. It automatically applies various optimizations like minification, compression, and caching to improve page load times.

To configure the Nginx Pagespeed module, consult the step-by-step guide in the article Nginx Pagespeed Module.

ModSecurity Module

The ModSecurity module integrates the ModSecurity Web Application Firewall (WAF) into Nginx. It provides advanced security features, including request filtering, intrusion detection, and protection against various web attacks.

The tutorial How to Install Nginx ModSecurity Module provides a step-by-step guide to installing and configuring the module.

Nginx RTMP Module

The Nginx RTMP module adds support for real-time streaming and broadcasting using RTMP (Real-Time Messaging Protocol). It allows you to build scalable video streaming platforms or deliver live video content.

Our guide on installing and configuring the Nginx RTMP module covers what you need to know to set up Nginx for RTMP streaming.

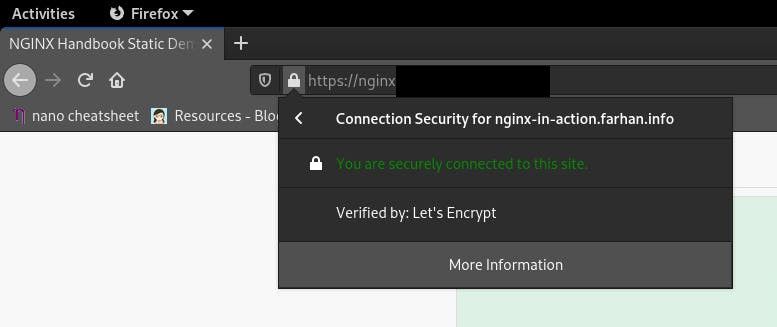

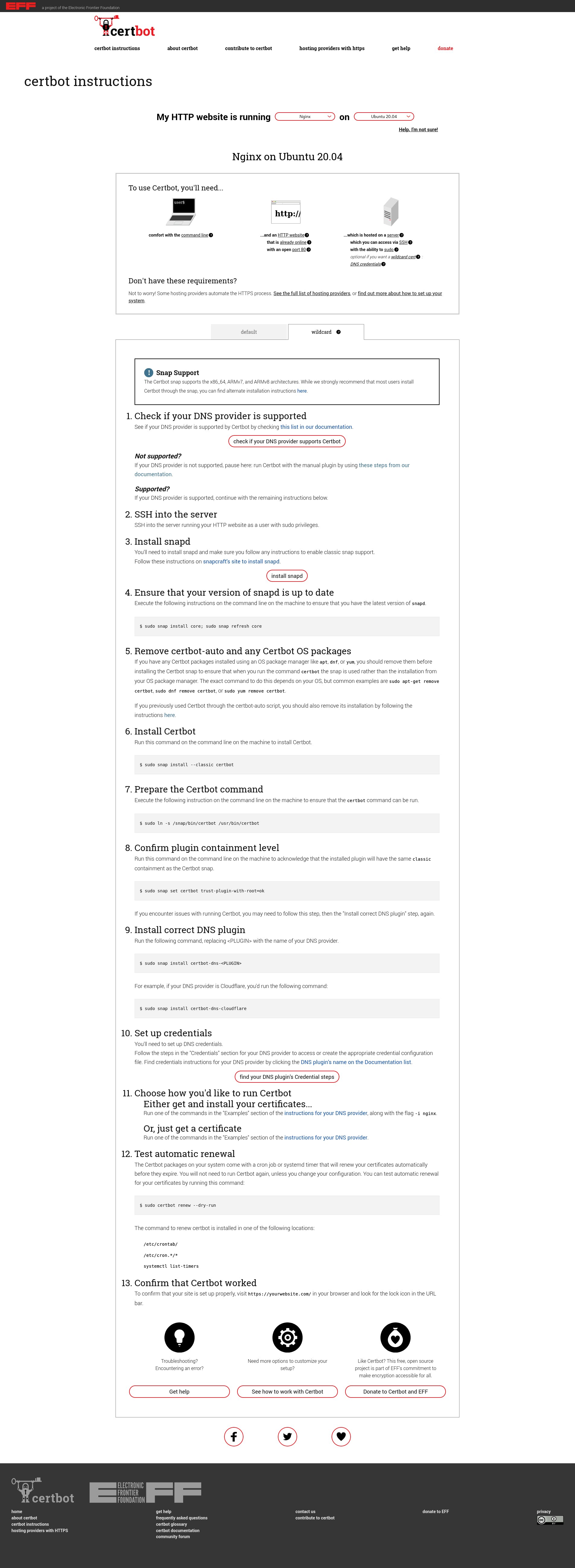

Let’s Encrypt Nginx Module

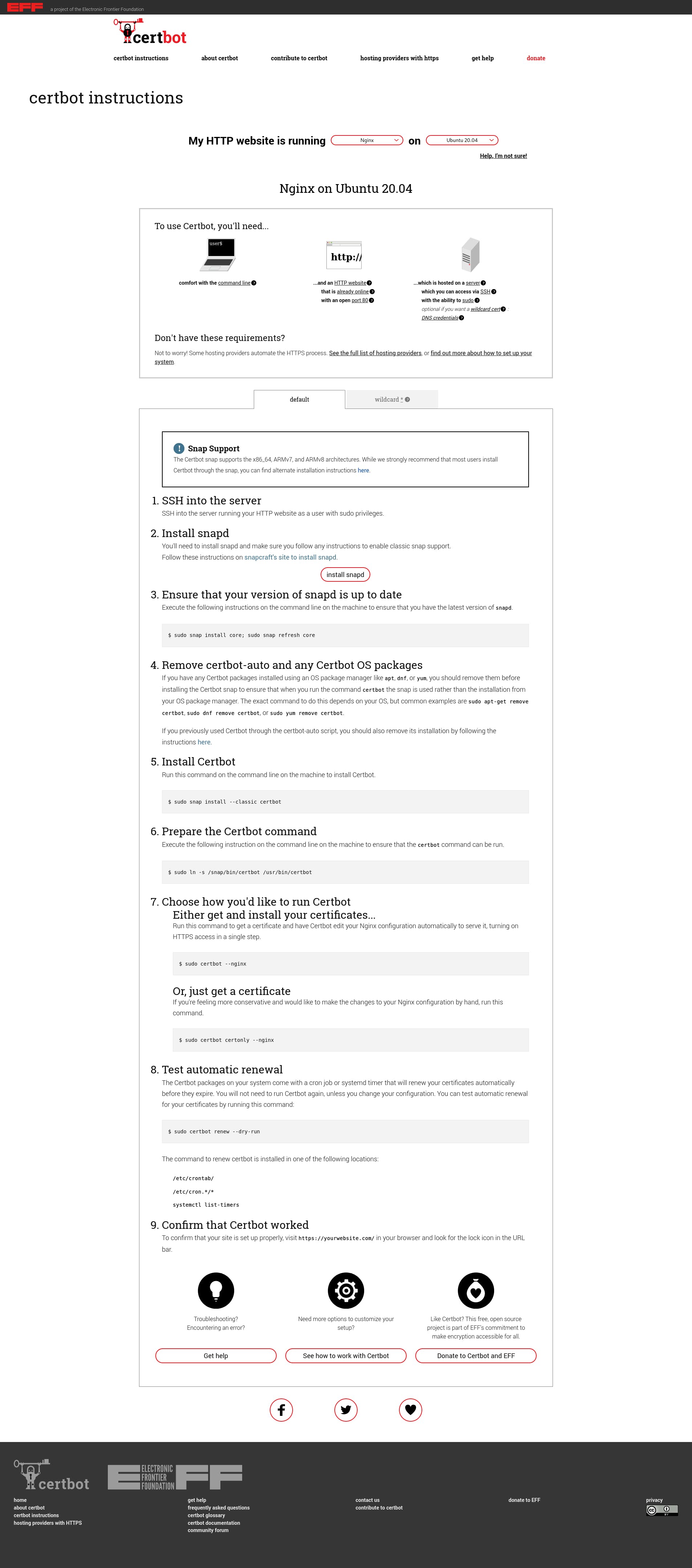

Let’s Encrypt certificates are usually integrated with Nginx through an ACME client such as Certbot, whose nginx plugin edits the server configuration for you, rather than through a module compiled into Nginx. The client handles certificate issuance, renewal, and installation, making it easier to secure your websites with free SSL/TLS certificates.

To configure Let’s Encrypt with Nginx, follow the steps outlined in our tutorial on setting up Nginx with Let’s Encrypt.

Nginx Upload Progress Module

The Nginx Upload Progress module tracks and reports the progress of file uploads. It allows you to provide real-time upload progress feedback to users during large file uploads.

To configure the Nginx Upload Progress Module on your website, follow the steps mentioned in our tutorial.

GeoIP2 Nginx Module

This module integrates MaxMind’s GeoIP2 databases into Nginx, enabling you to determine the geographic location of clients based on their IP addresses.

HttpEchoModule

The HttpEchoModule allows you to easily send custom HTTP responses from Nginx. It is useful for testing and debugging purposes, or for creating specialized HTTP endpoints.

These are just a few examples of popular third-party modules available for Nginx. There are many more modules developed by the Nginx community that provide additional functionality and customization options for your Nginx server.

Nginx Core Modules

Nginx Core modules are integral and provide fundamental features for handling HTTP requests, managing server events, enabling SSL/TLS encryption, and more. Let’s explore some essential core modules and their functionalities:

HTTP Module

The HTTP module is responsible for handling HTTP requests and responses. It enables you to configure server-wide settings, set up virtual hosts, define location-based rules, and manage proxying and load balancing.

Events Module

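The Events module allows you to configure how Nginx handles network connections. You can control parameters such as the maximum number of simultaneous connections per worker process (worker_connections), whether a worker accepts several new connections at once (multi_accept), and the connection processing method (for example epoll or kqueue).

SSL Module

The SSL module provides support for SSL/TLS encryption, allowing you to secure communication between clients and your server. It enables the configuration of SSL certificates, cipher suites, and other security-related settings.

Here is a list of some of the modules you are likely to encounter. Most ship with the Nginx source, although several are not built by default and must be enabled with a --with-... option at compile time; a few entries are third-party modules that are often mistaken for built-in ones: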

ngx_http_core_module

The ngx_http_core_module provides the basic functionality of the HTTP server. It handles request processing, URI mapping, and access control. This module is essential for any HTTP server configuration in Nginx.

ngx_http_ssl_module

The ngx_http_ssl_module enables HTTPS support in Nginx by providing SSL/TLS encryption for secure communication between clients and the server. It allows you to configure SSL certificates and specify SSL-related settings.

ngx_http_access_module

The ngx_http_access_module allows you to control access to the server based on client addresses (individual IP addresses, CIDR ranges, or UNIX-domain sockets). It provides the allow and deny directives to specify access rules, which are checked in order until the first match.
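A minimal sketch of address-based access control, assuming a hypothetical /admin/ area that should be reachable only from an internal network:

```nginx
location /admin/ {
    allow 192.168.1.0/24;   # hypothetical internal network
    allow 127.0.0.1;
    deny  all;              # rules are checked in order; the first match wins
}
```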

ngx_http_proxy_module

The ngx_http_proxy_module implements proxy server functionality in Nginx. It enables Nginx to act as a reverse proxy, forwarding client requests to other servers and processing responses. This module is commonly used for load balancing and caching.
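A typical reverse-proxy location might look like the following sketch. The backend address is a placeholder, and the forwarded headers are a common convention rather than something Nginx requires:

```nginx
location /api/ {
    proxy_pass http://127.0.0.1:8080;   # hypothetical backend

    # Pass the original host and client address on to the backend.
    proxy_set_header Host              $host;
    proxy_set_header X-Real-IP         $remote_addr;
    proxy_set_header X-Forwarded-For   $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
}
```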

ngx_http_fastcgi_module

The ngx_http_fastcgi_module allows Nginx to interact with FastCGI servers, enabling the execution of dynamic scripts and applications. It provides directives to define FastCGI server addresses and control the communication between Nginx and the FastCGI server.
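For illustration, a common way to hand PHP requests to a FastCGI server is sketched below. The socket path is an assumption and depends on how PHP-FPM (or another FastCGI server) is installed:

```nginx
location ~ \.php$ {
    include       fastcgi_params;   # standard parameter file shipped with Nginx
    fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    fastcgi_pass  unix:/run/php/php-fpm.sock;   # hypothetical socket path
}
```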

ngx_http_rewrite_module

The ngx_http_rewrite_module provides URL rewriting capabilities in Nginx. It allows you to modify request URIs or redirect requests based on defined rules. This module is often used for URL manipulation and redirection.
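A short sketch of both styles of rule, using made-up paths and the placeholder host example.com:

```nginx
server {
    listen      80;
    server_name example.com;

    # Permanent redirect for a moved section; $1 is the captured path suffix.
    rewrite ^/old-blog/(.*)$ /blog/$1 permanent;

    # For a single fixed URL, return is simpler than a regex rewrite.
    location = /legacy {
        return 301 /new;
    }
}
```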

ngx_http_gzip_module

The ngx_http_gzip_module enables gzip compression for HTTP responses in Nginx. It reduces the size of transmitted data, improving performance and reducing bandwidth usage. This module can be configured to compress certain types of files or based on client request headers.
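A possible starting configuration is sketched here; the level, minimum length, and MIME types are illustrative values to tune for your own traffic:

```nginx
gzip            on;
gzip_comp_level 5;      # 1-9; trade CPU time for size
gzip_min_length 1024;   # skip responses with a Content-Length below 1 KB
gzip_types      text/css application/javascript application/json;  # text/html is always compressed
gzip_vary       on;     # add "Vary: Accept-Encoding" for caches
gzip_proxied    any;    # also compress responses to proxied requests
```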

ngx_http_realip_module

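The ngx_http_realip_module changes the client address that Nginx sees to the one sent in a specified request header, such as X-Real-IP or X-Forwarded-For, when the request arrives from a trusted address. This module is useful when Nginx sits behind another proxy or load balancer, as it ensures that logging, access rules, and rate limiting use the original client IP address rather than the proxy's. It is not built by default and is enabled with --with-http_realip_module.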
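A minimal sketch, assuming Nginx runs behind a load balancer in the hypothetical 10.0.0.0/8 range that sets X-Forwarded-For:

```nginx
set_real_ip_from  10.0.0.0/8;        # only trust the header from these addresses
real_ip_header    X-Forwarded-For;   # header that carries the original client address
real_ip_recursive on;                # skip trusted addresses when the header lists several
```

With this in place, $remote_addr holds the original client address for requests that arrive through the trusted range.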

ngx_http_limit_req_module

The ngx_http_limit_req_module allows you to limit the request rate from clients. It helps prevent abuse or excessive resource consumption by enforcing limits on the number of requests per second or per minute from individual IP addresses or other request attributes.
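As a sketch, the following limits each client IP address to an assumed 10 requests per second on a hypothetical /login/ path, allowing short bursts:

```nginx
http {
    # One shared-memory zone keyed by client address; 10 MB holds many thousands of states.
    limit_req_zone $binary_remote_addr zone=per_ip:10m rate=10r/s;

    server {
        location /login/ {
            limit_req        zone=per_ip burst=20 nodelay;   # absorb bursts of up to 20 requests
            limit_req_status 429;                            # reply "Too Many Requests" instead of 503
        }
    }
}
```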

ngx_http_autoindex_module

The ngx_http_autoindex_module generates directory listings for directories that don’t have an index file. It allows users to browse the contents of a directory when an index file is not present, making it useful for serving static files.

ngx_http_auth_basic_module

The ngx_http_auth_basic_module provides HTTP basic authentication support in Nginx. It allows you to protect resources by requiring username and password authentication from clients. This module is commonly used to secure specific areas of a website.
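A minimal sketch, assuming a password file has already been created (for example with the htpasswd utility) at the hypothetical path shown:

```nginx
location /private/ {
    auth_basic           "Restricted area";       # realm shown in the browser prompt
    auth_basic_user_file /etc/nginx/.htpasswd;    # hypothetical path to the password file
}
```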

ngx_http_stub_status_module

The ngx_http_stub_status_module exposes basic server status information as a short plain-text page. It provides counters such as the number of active connections, the totals of accepted and handled connections and requests, and the number of connections currently reading, writing, or waiting.
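A sketch of a status endpoint restricted to local requests. The location name is arbitrary, and the module must have been enabled at build time with --with-http_stub_status_module:

```nginx
location = /nginx_status {
    stub_status;
    allow 127.0.0.1;   # keep the counters private
    deny  all;
}
```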

ngx_http_v2_module

The ngx_http_v2_module enables support for the HTTP/2 protocol in Nginx. It allows clients and servers to communicate using the more efficient HTTP/2 protocol, which offers features such as multiplexing and header compression. Server push was supported in older releases but was removed in nginx 1.25.1.

ngx_http_dav_module

The ngx_http_dav_module provides support for file management over WebDAV (Web Distributed Authoring and Versioning) in Nginx. It processes the HTTP and WebDAV methods PUT, DELETE, MKCOL, COPY, and MOVE, so clients can upload, delete, and rearrange files through HTTP.

ngx_http_flv_module

The ngx_http_flv_module provides pseudo-streaming server-side support for FLV (Flash Video) files in Nginx. It handles requests that carry a start argument in the query string by sending the file contents from the requested byte offset, which lets players seek within a video.

ngx_http_mp4_module

The ngx_http_mp4_module provides pseudo-streaming server-side support for MP4 (MPEG-4 Part 14) files in Nginx. It handles requests that carry a start (and optionally end) argument given in seconds, so players can seek to a position in the file without downloading the preceding part.

ngx_http_random_index_module

The ngx_http_random_index_module allows Nginx to select a random file from a directory to serve as the index file. This module is handy when you want to display a different file as the default page each time the directory is accessed.

ngx_http_secure_link_module

The ngx_http_secure_link_module checks the authenticity of requested links and can limit their lifetime. Your application generates URLs that carry a hash (and optionally an expiry time) computed with a shared secret; Nginx recomputes the hash and rejects links that were tampered with or have expired.
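The sketch below follows the pattern from the module's documentation: the hash and expiry time arrive as the md5 and expires query arguments, and mysecret is a placeholder for your own secret. The module must be enabled at build time with --with-http_secure_link_module.

```nginx
location /downloads/ {
    secure_link     $arg_md5,$arg_expires;
    secure_link_md5 "$secure_link_expires$uri mysecret";   # placeholder secret

    if ($secure_link = "")  { return 403; }   # hash missing or wrong
    if ($secure_link = "0") { return 410; }   # link has expired
}
```

The application that issues the links has to compute the same MD5 over the same string and encode it as base64url.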

ngx_http_slice_module

The ngx_http_slice_module splits a request into subrequests, each returning a certain range of the response. It is mainly used to cache large responses more effectively, since each slice can be cached and served independently, for example to satisfy byte-range requests.

ngx_http_ssi_module

The ngx_http_ssi_module enables Server Side Includes (SSI) functionality in Nginx. It allows you to include dynamic content in static HTML pages, such as displaying the current date, including the output of a script, or conditionally showing content based on request parameters.

ngx_http_userid_module

The ngx_http_userid_module assigns a unique identifier to clients visiting your server. It sets a cookie with a unique ID for each client, allowing you to track and identify individual users.

ngx_http_headers_module

The ngx_http_headers_module lets you add fields to the response header. It provides the add_header and add_trailer directives for arbitrary fields and the expires directive for the Expires and Cache-Control fields. It does not modify request headers or remove existing response headers.

ngx_http_referer_module

The ngx_http_referer_module allows you to block or control access based on the referring URL. It provides directives to restrict access to resources based on the HTTP Referer header.

ngx_http_memcached_module

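The ngx_http_memcached_module obtains responses from a Memcached server. Nginx only reads from Memcached: the key is set in the $memcached_key variable, and the content must be put into Memcached in advance by an external application. Serving such content directly from memory can improve performance.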

ngx_http_empty_gif_module

The ngx_http_empty_gif_module returns a 1×1 transparent GIF image. It is often used as a placeholder or tracking pixel.

ngx_http_geo_module

The ngx_http_geo_module creates variables whose values depend on the client IP address. You define addresses or CIDR ranges in the configuration and map them to values, which other directives can then act on. It does not consult a geolocation database.

ngx_http_map_module

The ngx_http_map_module enables you to define key-value mappings and use them in various parts of the configuration. It is useful for conditional configuration based on variables.
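A widely used example, taken from the pattern Nginx documents for WebSocket proxying: the Connection header sent upstream depends on whether the client asked for an upgrade. The backend address is a placeholder.

```nginx
# map must appear in the http context.
map $http_upgrade $connection_upgrade {
    default upgrade;   # client sent an Upgrade header
    ''      close;     # no Upgrade header present
}

server {
    location /ws/ {
        proxy_pass         http://127.0.0.1:8080;   # hypothetical backend
        proxy_http_version 1.1;
        proxy_set_header   Upgrade    $http_upgrade;
        proxy_set_header   Connection $connection_upgrade;
    }
}
```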

ngx_http_split_clients_module

The ngx_http_split_clients_module allows you to split client traffic based on various factors, such as random distribution or by specific variables. It is commonly used for A/B testing or traffic splitting purposes.
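A small A/B-testing sketch along the lines of the module's documentation. The percentage and file names are illustrative, and the "AAA" suffix is just an arbitrary salt for the hash:

```nginx
# split_clients must appear in the http context.
split_clients "${remote_addr}AAA" $variant {
    10%  ".v2";   # roughly 10% of clients get the new page
    *    "";      # everyone else gets the default
}

server {
    location / {
        index index${variant}.html;
    }
}
```

Because the split is based on a hash of the client address, a given client consistently lands in the same group.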

ngx_http_upstream_module

The ngx_http_upstream_module provides functionality for load balancing and proxying requests to backend servers. It allows you to define a group of upstream servers and distribute client requests among them.
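A minimal load-balancing sketch with three hypothetical backend addresses:

```nginx
upstream backend {
    least_conn;                        # pick the server with the fewest active connections
    server 10.0.0.11:8080 weight=2;    # receives roughly twice the share
    server 10.0.0.12:8080;
    server 10.0.0.13:8080 backup;      # used only when the others are unavailable
}

server {
    listen 80;

    location / {
        proxy_pass http://backend;
    }
}
```

Without least_conn, Nginx falls back to weighted round-robin.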

ngx_http_fastcgi_cache_module

Caching of FastCGI responses is provided by the fastcgi_cache family of directives, which are part of the ngx_http_fastcgi_module rather than a separate module. It allows Nginx to store and serve cached content from FastCGI applications, improving performance and reducing the load on backend servers.

ngx_http_addition_module

The ngx_http_addition_module is a filter that adds text before and after a response. Its add_before_body and add_after_body directives insert the result of a subrequest at the start or end of the response body; it does not modify headers.

ngx_http_xslt_module

The ngx_http_xslt_module enables XSLT (Extensible Stylesheet Language Transformations) support in Nginx. It allows you to apply XSLT transformations to XML data before serving it to clients.

ngx_http_image_filter_module

The ngx_http_image_filter_module provides image processing capabilities in Nginx. It allows you to resize, crop, rotate, and perform other transformations on images dynamically.

ngx_http_sub_module

The ngx_http_sub_module enables response content substitution in Nginx. It allows you to replace specific strings or patterns in the response body with other content, providing the ability to modify the response on the fly.

ngx_http_dav_ext_module

The ngx_http_dav_ext_module is a third-party module that extends the WebDAV (Web Distributed Authoring and Versioning) functionality of the ngx_http_dav_module. It adds the PROPFIND and OPTIONS methods, as well as LOCK and UNLOCK for file locking.

ngx_http_flv_live_module

The ngx_http_flv_live_module is a third-party module (part of the nginx-http-flv-module project) that provides support for live FLV (Flash Video) streaming in Nginx. It allows clients to watch live video streams in FLV format over HTTP.

ngx_http_gunzip_module

The ngx_http_gunzip_module enables on-the-fly decompression of gzipped HTTP responses. It automatically decompresses gzipped content before serving it to clients that do not support gzip compression.

ngx_http_mirror_module

The ngx_http_mirror_module allows you to mirror incoming requests to one or more remote servers. It is useful for performing A/B testing, load testing, or capturing requests for analysis.

ngx_http_auth_request_module

The ngx_http_auth_request_module enables subrequest-based authentication in Nginx. It allows you to perform an internal subrequest to authenticate a request before allowing access to protected resources.
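The sketch below mirrors the example in the module's documentation. The authentication service URL is a placeholder, and the module must be enabled at build time with --with-http_auth_request_module:

```nginx
location /private/ {
    auth_request /auth;   # a 2xx reply allows access; 401 or 403 denies it
}

location = /auth {
    internal;
    proxy_pass              http://127.0.0.1:9000/verify;   # hypothetical auth service
    proxy_pass_request_body off;                            # the check needs headers only
    proxy_set_header        Content-Length "";
    proxy_set_header        X-Original-URI $request_uri;
}
```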

ngx_http_perl_module

The ngx_http_perl_module integrates the Perl programming language into Nginx. It allows you to write custom modules or scripts in Perl to extend the functionality of Nginx.

ngx_http_geoip_module

The ngx_http_geoip_module provides geolocation-based features using the MaxMind GeoIP database in Nginx. It allows you to determine the geographic location of a client based on their IP address.

ngx_http_degradation_module

The ngx_http_degradation_module provides a mechanism to degrade or limit the functionality of Nginx based on various conditions. It allows you to control the behavior of Nginx under high load or other situations.

ngx_http_headers_more_module

The ngx_http_headers_more_module is a third-party module from the OpenResty project that goes beyond the ngx_http_headers_module. It allows you to set and clear both request and response headers.

ngx_http_xslt_proc_module

The ngx_http_xslt_proc_module enables XSLT (Extensible Stylesheet Language Transformations) processing of XML data in Nginx. It allows you to apply XSLT transformations to XML data on the fly.

ngx_http_js_module

The ngx_http_js_module lets you handle requests and compute variables with njs, a subset of JavaScript. It is developed by the Nginx team but distributed separately from the main source, typically as a dynamic module.

These are just a few examples of the core modules available in Nginx. Nginx is highly modular, and there are many additional modules that can be added to extend its functionality further. By leveraging both core and third-party modules, you can unlock a vast array of possibilities and fine-tune your Nginx server to meet your specific needs.

Please Note: we have provided an overview of the functionality of each Nginx module. In future articles, we will take a closer look at each Nginx module individually, exploring their features and capabilities in depth. Stay tuned for dedicated articles on each module.

Nginx Modules Best Practice

When using Nginx modules, it’s important to follow best practices to ensure smooth integration, maintainability, and performance.

Here are some best practices for using Nginx modules:

- Select Nginx modules that align with your specific requirements. Consider factors such as stability, community support, compatibility with your Nginx version, and the module’s track record.

- Keep your Nginx modules up to date with the latest stable versions. This ensures you benefit from bug fixes, security patches, and new features.

- Thoroughly review the documentation provided by the module developers. Understand the module’s configuration directives, dependencies, and any limitations or considerations specific to the module.

- Before deploying a module in a production environment, test it thoroughly in a controlled staging or development environment. Verify its functionality, performance impact, and compatibility with your existing setup.

- Always create backups of your Nginx configuration files before making changes or adding modules. This allows you to revert back to a working configuration if any issues arise.

- Organize your Nginx configuration by keeping module-specific settings in separate files. This improves readability, makes it easier to manage and update individual Nginx modules, and allows for better modularization.

- Be mindful of module dependencies and avoid unnecessary dependencies between modules. Minimize the number of modules loaded to reduce resource usage and potential conflicts.

- Monitor the performance of your Nginx server after adding or updating modules. Keep an eye on resource utilization, response times, and error logs. Performance testing and benchmarking can help identify any bottlenecks or performance issues introduced by the Nginx modules.

- Regularly review the security posture of your Nginx installation, including the modules used. Stay informed about any security advisories or vulnerabilities associated with the modules and update them promptly.

- Engage with the Nginx community, including module developers and user forums. This provides an opportunity to seek help, share experiences, and contribute back to the community by reporting issues or providing feedback.

Exploring Nginx Modules: A Guide to Custom Module Development

This guide covers understanding Nginx modules, setting up the development environment, creating a custom module, compiling and installing the custom module, and testing the custom module.

Nginx is a powerful, high-performance web server, reverse proxy server, and load balancer. It's well-known for its ability to handle a large number of connections and serve static files efficiently. One of the reasons behind its popularity is its modular architecture, which allows developers to extend its functionality by creating custom modules. In this blog post, we'll take an in-depth look at Nginx modules and guide you through the process of developing your custom module. We'll explore the different types of modules, their structure, and how to compile and install them. Let's dive in!

Nginx is designed with a modular architecture, which means its functionality is divided into smaller, independent components called modules. These modules can be enabled or disabled during the compilation process, allowing users to tailor Nginx to their specific needs. There are several types of modules in Nginx, including:

- Core modules

- Event modules

- Protocol modules (like HTTP, Mail, and Stream)

- HTTP modules

- Mail modules

- Stream modules

For this guide, we'll focus on developing custom HTTP modules since they're the most commonly used modules.

Before we start developing our custom module, let's set up a development environment. We'll need the following tools and dependencies:

- A Linux-based operating system (we'll use Ubuntu in this guide)

- Nginx source code

- GCC (GNU Compiler Collection) and development tools

- Text editor or IDE of your choice

First, install the necessary tools and dependencies:

Next, download the Nginx source code and extract it:

Now that our development environment is ready, let's create a simple "Hello, World!" module. We'll name it ngx_http_hello_module.

Step 1: Create the Module Directory and Source Files

Create a new directory for your module and navigate to it:

Next, create a new C source file named ngx_http_hello_module.c and open it in your favorite text editor or IDE.

Step 2: Define the Module's Structure

In the ngx_http_hello_module.c file, we'll start by including the necessary Nginx headers and defining the module's structure:

In the code above, we defined a simple "Hello, World!" HTTP module. We started by including the required Nginx headers and defining the module's structure, commands, and context. Then, we implemented the ngx_http_hello and ngx_http_hello_handler functions to handle the hello directive and generate the response.

Now that our custom module is ready, we need to compile and install it. First, navigate back to the Nginx source directory:

Next, configure the Nginx build with the --add-module option to include our custom module:

Compile and install Nginx:

Finally, start Nginx:

To test our custom module, we'll need to modify the Nginx configuration file located at /usr/local/nginx/conf/nginx.conf. Open the file in your favorite text editor and add the following line inside the location / block:

Your configuration file should look like this:
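A minimal sketch of such a configuration, assuming the module registers an argument-less directive named hello (inferred from the description of the hello directive; the actual name is whatever the module's command table defines):

```nginx
events {}

http {
    server {
        listen 80;

        location / {
            hello;   # assumed directive name; hands the request to the module's handler
        }
    }
}
```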

Restart Nginx to apply the changes:

Now, visit http://localhost/ in your web browser or use a command-line tool like curl:

You should see the "Hello, World!" message, indicating that our custom module is working as expected.

1. Can I use third-party modules with the official Nginx package?

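Yes, you can use third-party modules with the official Nginx package. If the module can be built as a dynamic module, compile it against the source of the same Nginx version as your package, using the --with-compat and --add-dynamic-module options, and load the resulting .so file with the load_module directive. Modules that only support static linking require rebuilding Nginx itself with the --add-module option.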

2. What is the difference between a static module and a dynamic module?

A static module is compiled directly into the Nginx binary, while a dynamic module is compiled as a separate shared object file (.so). Static modules are always available, whereas a dynamic module is loaded only if a load_module directive names it when Nginx starts or reloads its configuration.

3. Can I load my custom module without recompiling Nginx?

Yes, you can compile your custom module as a dynamic module and load it without rebuilding the Nginx binary you already run. To do this, build the module against the same Nginx version with the --add-dynamic-module option (plus --with-compat, or the same configure arguments as the installed binary), and add the load_module directive at the top of your Nginx configuration file.
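For illustration, loading a dynamic build of the example module might look like this. The file name and the modules/ directory are assumptions based on the default build layout, and the hello directive name is inferred from the tutorial text:

```nginx
# load_module is only valid in the main context, before events and http.
load_module modules/ngx_http_hello_module.so;   # assumed path, relative to the Nginx prefix

events {}

http {
    server {
        listen 80;

        location / {
            hello;   # the directive becomes available once the module is loaded
        }
    }
}
```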

4. How do I debug my custom module?

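You can use a debugger like GDB (GNU Debugger) to debug your custom module. Compile Nginx with debugging symbols (for example, by passing -g -O0 through --with-cc-opt) and run it under gdb; setting daemon off and master_process off in the configuration keeps everything in a single foreground process. Additionally, you can add ngx_log_debug() statements (or the fixed-arity ngx_log_debug0() to ngx_log_debug8() macros) in your module's code to print debug messages to the Nginx error log. These messages appear only when Nginx is configured with --with-debug and the error_log level is set to debug.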

5. Are there any resources for learning more about Nginx module development?

Yes, there are several resources available for learning more about Nginx module development. Some recommended resources include:

- The official Nginx Development Guide

- The book "Nginx HTTP Server" by Clément Nedelcu

- Various open-source Nginx modules on GitHub

Article by Mehul Mohan.

Why Make Your Own NGINX Modules? Theory and Practice

Vasiliy Soshnikov, Head of Development Group at Mail.Ru Group

Sometimes you have business goals which can be reached by developing your own modules for NGINX. NGINX modules can be business‑oriented and contain some business logic as well. However, how do you decide for certain that a module should be developed? How might NGINX help you with development?

In his session at NGINX Conf 2018, Vasiliy provides the detailed knowledge you need to build your own NGINX modules, including details about NGINX's core, its modular architecture, and guiding principles for NGINX code development. Using real‑world case studies and business scenarios, he answers the question, "Why and when do you need to develop your own modules?"

The session is quite technical. To get the most out of it, attendees need at least intermediate‑level experience with NGINX code.


Introduction

Welcome to my Nginx module guide!

To follow this guide, you need to know a decent amount of C. You should know about structs, pointers, and functions. You also need to know how the nginx.conf file works.

If you find a mistake in the guide, please report it in an issue!

The Handler Guide

Let’s get started with a quick hello world module called ngx_http_hello_world_module.

This module will be a handler, meaning that it will take a request and generate output.

In the nginx source, create a folder called ngx_http_hello_world_module, and make two files in it: config and ngx_http_hello_world_module.c.

The Config File

The config file is just a simple shell script that will be used at compile time to show Nginx where your module source is. As you can see, the config file tests to see if your nginx version supports dynamic modules (the test -n line). If it supports dynamic modules, the module is added the new way. Otherwise, it is added the old way.

ngx_http_hello_world_module.c

This C file is huge! Let’s go through it line by line:

The first line is a prototype for the function ngx_http_hello_world . We’ll define the function at the end of the file.

ngx_http_hello_world_commands is a static array of directives. In our module, we have only one directive: print_hello_world. It will have no arguments, so we put in NGX_CONF_NOARGS.

ngx_http_hello_world_module_ctx is a struct (of type ngx_http_module_t) of function pointers. The functions are called at various stages such as preconfiguration and postconfiguration, and to create and merge configuration structures. We don’t need any of them in our module, but we still have to define the struct and fill it with NULLs.

ngx_http_hello_world_module is the module definition, a struct of type ngx_module_t. It tells Nginx where the array of directives and the context struct are (ngx_http_hello_world_commands and ngx_http_hello_world_module_ctx). We can also add init and exit callback functions. In our module, we don’t need them, so we put NULLs instead.

Now for the interesting part. ngx_http_hello_world_handler is the heart of our module. We want to print Hello World! on the screen, so we have an unsigned char * with our message in it. Right after that, there is another variable with the size of the message.

Next, we have to send the headers. Notice that ngx_http_hello_world_handler has one argument, a pointer to an ngx_http_request_t. This is a struct defined by Nginx. It has a member called headers_out, which we use to set the response headers. After we are done setting the headers, we can send them with ngx_http_send_header(r).

Now we have to send the body. ngx_buf_t is a buffer, and ngx_chain_t is a chain link. The chain links send responses buffer by buffer and point to the next link. In our module, there is no next link, so we set out->next to NULL. ngx_calloc_buf and ngx_alloc_chain_link allocate from the request’s memory pool, so the memory is released automatically when the request is finished. b->pos and b->last delimit our content: b->pos points to the first byte and b->last points just past the last byte. b->memory is set to 1 because our content is in read-only memory. b->last_buf tells Nginx that our buffer is the last buffer in the response.

Now that we’re done setting the body, we can send it with return ngx_http_output_filter(r, &out).

Now we define the function we prototyped in the beginning. We tell Nginx what our handler is called with clcf->handler = ngx_http_hello_world_handler.

And we’re done with our C file! Time to build the module.

Building the Module

How to build the module:

In the Nginx source, run configure, make, and make install. If you only want to build the modules and not the Nginx server itself, you can run make modules.

Using the Module

To use the module, edit your nginx.conf file found in the conf directory in the install location.
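A minimal nginx.conf sketch for this step, using the print_hello_world directive, port 8000, and the /test location that the guide refers to (the surrounding layout is an assumption):

```nginx
# If the module was built as a dynamic module, a load_module line naming
# its .so file would also be needed here, before the events block.

events {}

http {
    server {
        listen 8000;

        location /test {
            print_hello_world;   # the module's only directive; takes no arguments
        }
    }
}
```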

When you’re done, you can run nginx (<nginx_install_location>/sbin/nginx) and take a look at your work at localhost:8000/test. You should get a blank page saying Hello World!. If so, congratulations! You made your first Nginx module! This module is the base for making any handler.

Printing All the URL Arguments

Modified ngx_http_hello_world_handler:

Now, we’ll modify our module slightly to print all the URL arguments (everything after the ? ). So if our request is localhost:8000/test?foo=bar&hello=world we should get foo=bar&hello=world printed in the body.

We need to modify the handler, ngx_http_hello_world_handler . Notice that the string Hello World! was changed to r->args.data , and strlen(ngx_hello_world) was changed to r->args.len . r->args stores all the arguments and is of type ngx_str_t . An ngx_str_t has a data member and a len member, which store the string and its length.

When you’re done, you should stop nginx ( <nginx_install_location>/sbin/nginx -s stop ) and build again. After that’s done, start Nginx again and go to localhost:8000/test?foo=hello&bar=world . You should see foo=hello&bar=world printed in the body.

Many Buffers

Get your hello world template again and add another buffer ( ngx_buf_t *b2 ) and another chain link ( ngx_chain_t out2; ). Then allocate some memory with ngx_calloc_buf and ngx_alloc_chain_link . Everything is the same except that we are setting b->last_buf to 0 and out.next to &out2 . This is because our original buffer is no longer the last one, and the next chain link is out2 .

Now, when we send the body, we only pass our original chain link, because out links to the next one.

Rebuild, restart, and go to localhost:8000/test . You should see Hello World!Hello World! .

The Filter Guide

After a handler is loaded and run, all the filter modules are executed. Filters take the header and/or body, manipulate them, and then send them back.

Our module will add a music track to all web pages where the module is loaded.

Nothing is very different here, except that HTTP is replaced with HTTP_FILTER .

Let’s see how this is different from our handler. First we see our u_char : An HTML <audio> element with a song (from Wikipedia).

Next, instead of prototyping ngx_http_background_music , we just defined it. Normally, this function would be more interesting, but since this is just a demo module, we don’t need to do anything other than returning NGX_OK .

The filter is made of two parts: the header filter, and the body filter. For our header filter, we only have to add the content length of our background_music variable. After that, we pass on the baton to the next header filter with ngx_http_next_header_filter .

The body filter accepts 2 arguments: An ngx_http_request_t , and a chain link, ngx_chain_t . The chain link is from the handler and previous filters (if any). What we want to do is to prefix our audio element to the chain link in . It won’t be perfectly valid HTML, but it’s good enough for now.

Our buffer should have last_buf set to 0 because it isn’t the last buffer: The last buffer is in the in chain link. So we’ll just set link->next to in and call the next body filter.

The ngx_http_background_music_init function just tells Nginx what our filter functions are called.

And we’re done. Now build and reload nginx, and you should see… a 404 page? Yes, there will be a 404 page, but with an audio track above it.

There was a 404 page because there was no other handler given. If you just add an HTML file, that should be taken care of.

ngx_http_request_t

Example usage of the server variable:

This table lists some useful members of ngx_http_request_t

ngx_str_t usage:

The ngx_str_t datatype has 2 members: data and len . They allow you to access the contents of the string and its length.

Other Stuff

Troubleshooting

1. Only part of my text is showing up.

You have to correctly set the size in both the headers and in the buffer. If your string is a u_char* , use ngx_strlen ; if it’s an ngx_str_t , use {variable_name}.len .

2. Some weird string is showing up after my text.

3. Why is my filter not working (but compiling)?

Make sure you’ve configured it correctly. The config file should have HTTP_FILTER instead of HTTP . Also, check if you’ve sent the body correctly, and make sure your buffer is in the chain link.

Useful Links

- Emiller’s Guide

- Nginx Main Module API

- Nginx Memory Management API

- Example Module: The EightC Module

The NGINX Handbook – Learn NGINX for Beginners

A young Russian developer named Igor Sysoev was frustrated by older web servers' inability to handle more than 10 thousand concurrent requests. This is a problem referred to as the C10k problem . As an answer to this, he started working on a new web server back in 2002.

NGINX was first released to the public in 2004 under the terms of the 2-clause BSD license. According to the March 2021 Web Server Survey , NGINX holds 35.3% of the market with a total of 419.6 million sites.

Thanks to tools like NGINXConfig by DigitalOcean and an abundance of pre-written configuration files on the internet, people tend to do a lot of copy-pasting instead of trying to understand when it comes to configuring NGINX.

I'm not saying that copying code is bad, but copying code without understanding is a big "no no".

Also NGINX is the kind of software that should be configured exactly according to the requirements of the application to be served and available resources on the host.

That's why instead of copying blindly, you should understand and then fine tune what you're copying – and that's where this handbook comes in.

After going through the entire book, you should be able to:

- Understand configuration files generated by popular tools as well as those found in various documentation.

- Configure NGINX as a web server, a reverse proxy server, and a load balancer from scratch.

- Optimize NGINX to get maximum performance out of your server.

Prerequisites

- Familiarity with the Linux terminal and common Unix programs such as ls , cat , ps , grep , find , nproc , ulimit and nano .

- A computer powerful enough to run a virtual machine or a $5 virtual private server.

- Understanding of web applications and a programming language such as JavaScript or PHP.

Table of Contents

- Introduction to NGINX

- How to Provision a Local Virtual Machine

- How to Provision a Virtual Private Server

- How to Install NGINX on a Provisioned Server or Virtual Machine

- Introduction to NGINX's Configuration Files

- How to Write Your First Configuration File

- How to Validate and Reload Configuration Files

- How to Understand Directives and Contexts in NGINX

- How to Serve Static Content Using NGINX

- Static File Type Handling in NGINX

- How to Include Partial Config Files

- Location Matches

- Variables in NGINX

- Redirects and Rewrites

- How to Try for Multiple Files

- Logging in NGINX

- Node.js With NGINX

- PHP With NGINX

- How to Use NGINX as a Load Balancer

- How to Configure Worker Processes and Worker Connections

- How to Cache Static Content

- How to Compress Responses

- How to Understand the Main Configuration File

- How to Configure SSL

- How to Enable HTTP/2

- How to Enable Server Push

Project Code

You can find the code for the example projects in the following repository:

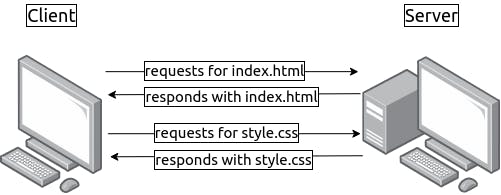

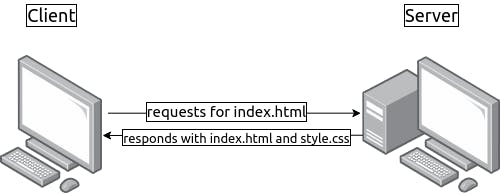

NGINX is a high performance web server developed to facilitate the increasing needs of the modern web. It focuses on high performance, high concurrency, and low resource usage. Although it's mostly known as a web server, NGINX at its core is a reverse proxy server.

NGINX is not the only web server on the market, though. One of its biggest competitors is Apache HTTP Server (httpd) , first released back in 1995. In spite of the fact that Apache HTTP Server is more flexible, server admins often prefer NGINX for two main reasons:

- It can handle a higher number of concurrent requests.

- It has faster static content delivery with low resource usage.

I won't go further into the whole Apache vs NGINX debate. But if you wish to learn more about the differences between them in detail, this excellent article from Justin Ellingwood may help.

In fact, to explain NGINX's request handling technique, I would like to quote two paragraphs from Justin's article here:

Nginx came onto the scene after Apache, with more awareness of the concurrency problems that would face sites at scale. Leveraging this knowledge, Nginx was designed from the ground up to use an asynchronous, non-blocking, event-driven connection handling algorithm. Nginx spawns worker processes, each of which can handle thousands of connections. The worker processes accomplish this by implementing a fast looping mechanism that continuously checks for and processes events. Decoupling actual work from connections allows each worker to concern itself with a connection only when a new event has been triggered.

If that seems a bit complicated to understand, don't worry. Having a basic understanding of the inner workings will suffice for now.

NGINX is faster in static content delivery while staying relatively lighter on resources because it doesn't embed a dynamic programming language processor. When a request for static content comes, NGINX simply responds with the file without running any additional processes.

That doesn't mean that NGINX can't handle requests that require a dynamic programming language processor. In such cases, NGINX simply delegates the tasks to separate processes such as PHP-FPM , Node.js or Python . Then, once that process finishes its work, NGINX reverse proxies the response back to the client.

NGINX is also a lot easier to configure thanks to a configuration file syntax inspired by various scripting languages, which results in compact, easily maintainable configuration files.

How to Install NGINX

Installing NGINX on a Linux -based system is pretty straightforward. You can either use a virtual private server running Ubuntu as your playground, or you can provision a virtual machine on your local system using Vagrant.

For the most part, provisioning a local virtual machine will suffice and that's the way I'll be using in this article.

For those who don't know, Vagrant is an open-source tool by HashiCorp that allows you to provision virtual machines using simple configuration files.

For this approach to work, you'll need VirtualBox and Vagrant , so go ahead and install them first. If you need a little warm up on the topic, this tutorial may help.

Create a working directory somewhere in your system with a sensible name. Mine is the ~/vagrant/nginx-handbook directory.

Inside the working directory, create a file named Vagrantfile and put the following content in there:

This Vagrantfile is the configuration file I talked about earlier. It contains information like the name of the virtual machine, number of CPUs, size of RAM, the IP address, and more.

To start a virtual machine using this configuration, open your terminal inside the working directory and execute the following command:

The output of the vagrant up command may differ on your system, but as long as vagrant status says the machine is running, you're good to go.

Given that the virtual machine is now running, you should be able to SSH into it. To do so, execute the following command:

If everything's done correctly you should be logged into your virtual machine, which will be evident by the vagrant@nginx-handbook-box line on your terminal.

This virtual machine will be accessible on http://192.168.20.20 on your local machine. You can even assign a custom domain like http://nginx-handbook.test to the virtual machine by adding an entry to your hosts file:

Now append the following line at the end of the file:

Now you should be able to access the virtual machine at the http://nginx-handbook.test URI in your browser.

You can stop or destroy the virtual machine by executing the following commands inside the working directory:

If you want to learn about more Vagrant commands, this cheat sheet may come in handy.

Now that you have a functioning Ubuntu virtual machine on your system, all that is left to do is install NGINX .

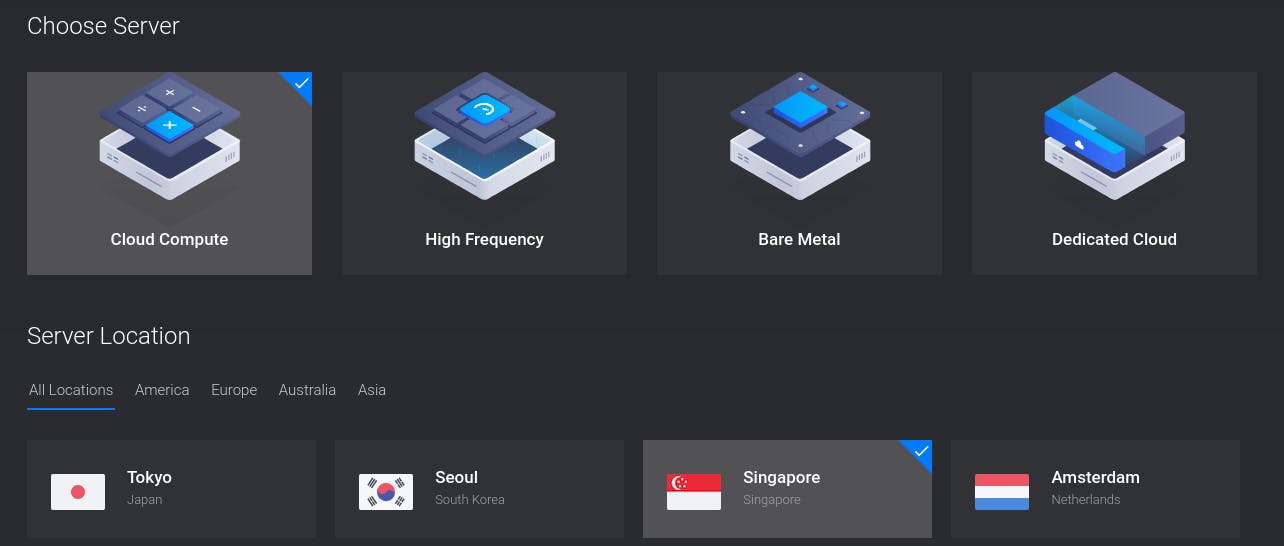

For this demonstration, I'll use Vultr as my provider but you may use DigitalOcean or whatever provider you like.

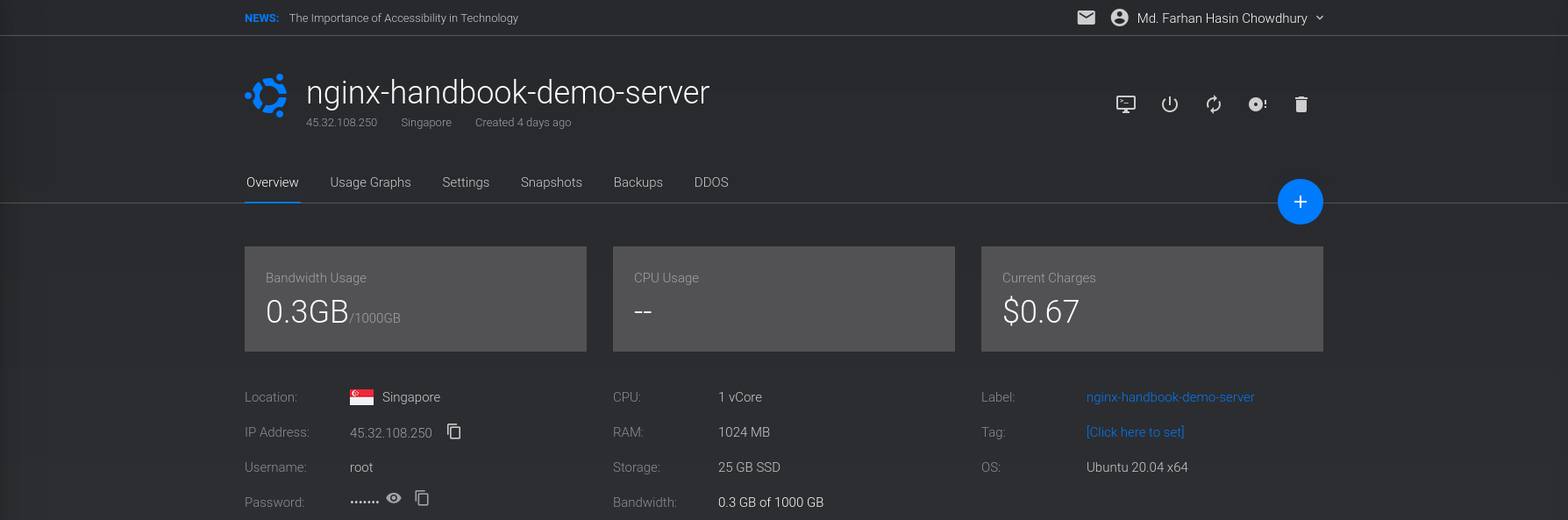

Assuming you already have an account with your provider, log into the account and deploy a new server:

On DigitalOcean, it's usually called a droplet. On the next screen, choose a location close to you. I live in Bangladesh which is why I've chosen Singapore:

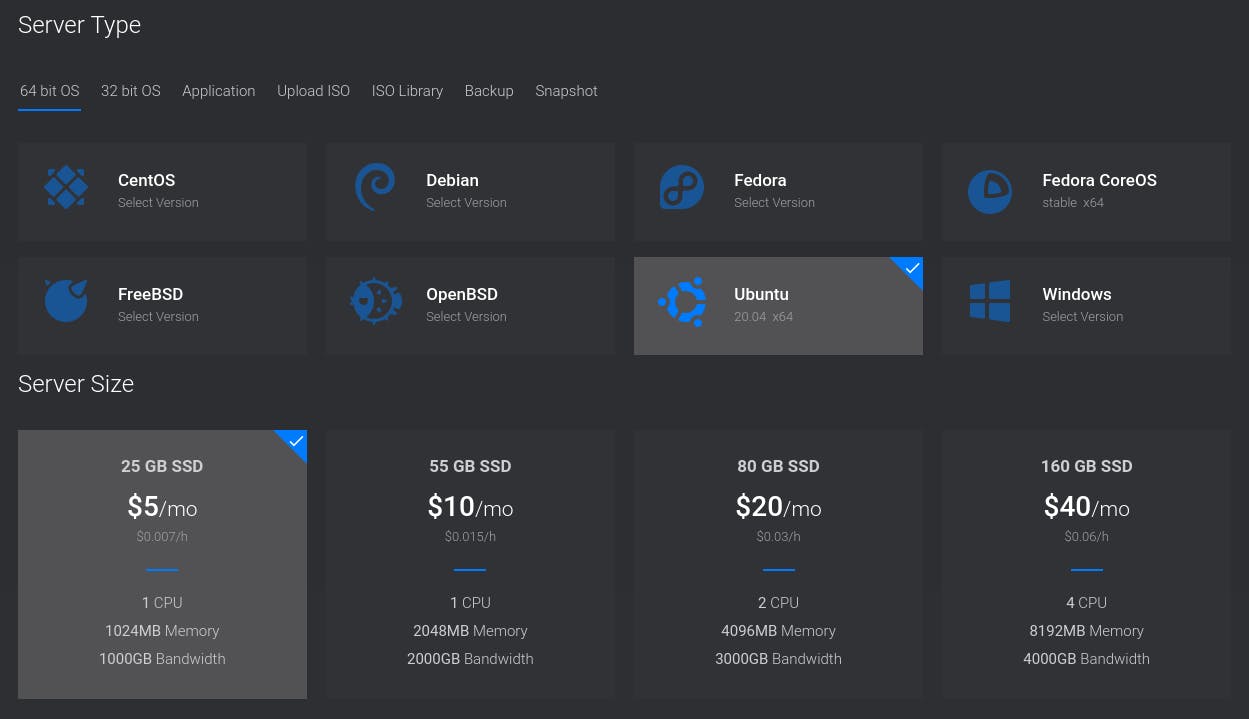

On the next step, you'll have to choose the operating system and server size. Choose Ubuntu 20.04 and the smallest possible server size:

Although production servers tend to be much bigger and more powerful than this, a tiny server will be more than enough for this article.

Finally, for the last step, put something fitting like nginx-handbook-demo-server as the server host and label. You can even leave them empty if you want.

Once you're happy with your choices, go ahead and press the Deploy Now button.

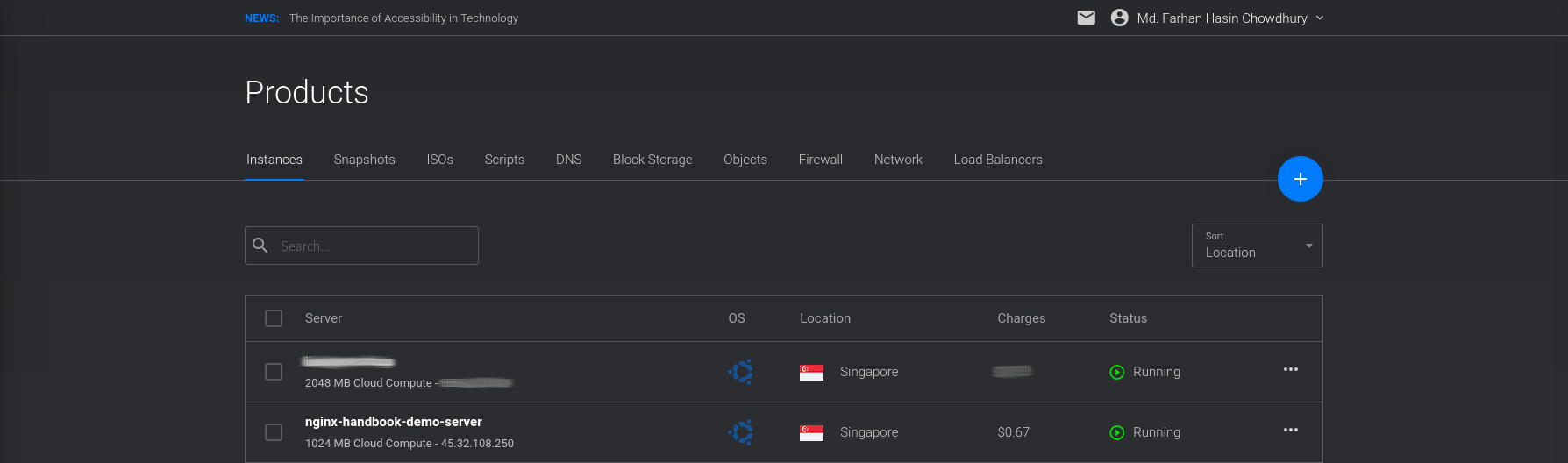

The deployment process may take some time to finish, but once it's done, you'll see the newly created server on your dashboard:

Also pay attention to the Status – it should say Running and not Preparing or Stopped . To connect to the server, you'll need a username and password.

Go into the overview page for your server and there you should see the server's IP address, username, and password:

The generic command for logging into a server using SSH is as follows:

So in the case of my server, it'll be:

You'll be asked if you want to continue connecting to this server or not. Answer with yes and then you'll be asked for the password. Copy the password from the server overview page and paste that into your terminal.

If you do everything correctly you should be logged into your server – you'll see the root@localhost line on your terminal. Here localhost is the server host name, and may differ in your case.

You can access this server directly by its IP address. Or if you own any custom domain, you can use that also.

Throughout the article you'll see me adding test domains to my operating system's hosts file. In case of a real server, you'll have to configure those servers using your DNS provider.

Remember that you'll be charged as long as this server is being used. Although the charge should be very small, I'm warning you anyways. You can destroy the server anytime you want by hitting the trash icon on the server overview page:

If you own a custom domain name, you may assign a sub-domain to this server. Now that you're inside the server, all that is left to do is install NGINX .

Assuming you're logged into your server or virtual machine, the first thing you should do is perform an update. Execute the following command to do so:

After the update, install NGINX by executing the following command:

Once the installation is done, NGINX should be automatically registered as a systemd service and should be running. To check, execute the following command:

If the status says running , then you're good to go. Otherwise you may start the service by executing this command:

Finally for a visual verification that everything is working properly, visit your server/virtual machine with your favorite browser and you should see NGINX's default welcome page:

NGINX is usually installed in the /etc/nginx directory and the majority of our work in the upcoming sections will be done in here.

Congratulations! Now you have NGINX up and running on your server/virtual machine. Now it's time to jump head first into NGINX.

As a web server, NGINX's job is to serve static or dynamic content to the clients. But how that content is going to be served is usually controlled by configuration files.

NGINX's configuration files end with the .conf extension and usually live inside the /etc/nginx/ directory. Let's begin by cd -ing into this directory and getting a list of all the files:

Among these files, there should be one named nginx.conf . This is the main configuration file for NGINX. You can have a look at the content of this file using the cat program:

Whoa! That's a lot of stuff. Trying to understand this file at its current state will be a nightmare. So let's rename the file and create a new empty one:

I highly discourage you from editing the original nginx.conf file unless you absolutely know what you're doing. For learning purposes, you may rename it, but later on, I'll show you how you should go about configuring a server in a real life scenario.

How to Configure a Basic Web Server

In this section of the book, you'll finally get your hands dirty by configuring a basic static web server from the ground up. The goal of this section is to introduce you to the syntax and fundamental concepts of NGINX configuration files.

Start by opening the newly created nginx.conf file using the nano text editor:

Throughout the book, I'll be using nano as my text editor. You may use something more modern if you want to, but in a real life scenario, you're most likely to work using nano or vim on servers instead of anything else. So use this book as an opportunity to sharpen your nano skills. Also the official cheat sheet is there for you to consult whenever you need.

After opening the file, update its content to look like this:
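A minimal sketch of such a file, assuming the nginx-handbook.test host name and port 80 used elsewhere in this article, could look like this:

```nginx
# Minimal configuration: one virtual server that answers every request
# with a fixed plain-text body.
events {
    # Left empty on purpose; NGINX refuses to start without an events block.
}

http {
    server {
        listen 80;
        server_name nginx-handbook.test;

        # Respond with status 200 and a short message.
        return 200 "Bonjour, mon ami!\n";
    }
}
```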

If you have experience building REST APIs then you may guess from the return 200 "Bonjour, mon ami!\n"; line that the server has been configured to respond with a status code of 200 and the message "Bonjour, mon ami!".

Don't worry if you don't understand anything more than that at the moment. I'll explain this file line by line, but first let's see this configuration in action.

After writing a new configuration file or updating an old one, the first thing to do is check the file for any syntax mistakes. The nginx binary includes an option -t to do just that.

If you have any syntax errors, this command will let you know about them, including the line number.

Although the configuration file is fine, NGINX will not use it. The way NGINX works is it reads the configuration file once and keeps working based on that.

If you update the configuration file, then you'll have to instruct NGINX explicitly to reload the configuration file. There are two ways to do that.

- You can restart the NGINX service by executing the sudo systemctl restart nginx command.

- You can dispatch a reload signal to NGINX by executing the sudo nginx -s reload command.

The -s option is used for dispatching various signals to NGINX. The available signals are stop , quit , reload and reopen . Among the two ways I just mentioned, I prefer the second one simply because it's less typing.

Once you've reloaded the configuration file by executing the nginx -s reload command, you can see it in action by sending a simple GET request to the server:

The server is responding with a status code of 200 and the expected message. Congratulations on getting this far! Now it's time for some explanation.

The few lines of code you've written here, although seemingly simple, introduce two of the most important terminologies of NGINX configuration files. They are directives and contexts .

Technically, everything inside an NGINX configuration file is a directive . Directives are of two types:

- Simple Directives

- Block Directives

A simple directive consists of the directive name and the space delimited parameters, like listen , return and others. Simple directives are terminated by semicolons.

Block directives are similar to simple directives, except that instead of ending with semicolons, they end with a pair of curly braces { } enclosing additional instructions.

A block directive capable of containing other directives inside it is called a context, such as events , http and so on. There are four core contexts in NGINX:

- events { } – The events context is used for setting global configuration regarding how NGINX is going to handle requests on a general level. There can be only one events context in a valid configuration file.

- http { } – Evident by the name, http context is used for defining configuration regarding how the server is going to handle HTTP and HTTPS requests, specifically. There can be only one http context in a valid configuration file.

- server { } – The server context is nested inside the http context and used for configuring specific virtual servers within a single host. There can be multiple server contexts in a valid configuration file nested inside the http context. Each server context is considered a virtual host.

- main – The main context is the configuration file itself. Anything written outside of the three previously mentioned contexts is on the main context.
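To tie these terms together, here is a small annotated sketch. The worker_processes and worker_connections values are arbitrary numbers I picked for illustration, not recommendations:

```nginx
# main context: anything written outside of a block lives here.
worker_processes 1;              # simple directive, terminated by a semicolon

events {                         # block directive that acts as a context
    worker_connections 512;      # simple directive inside the events context
}

http {                           # only one http context per configuration
    server {                     # one virtual server, nested inside http
        listen 80;
        server_name nginx-handbook.test;
        return 200 "ok\n";
    }
}
```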

You can treat contexts in NGINX like scopes in other programming languages. There is also a sense of inheritance among them. You can find an alphabetical index of directives on the official NGINX docs.

I've already mentioned that there can be multiple server contexts within a configuration file. But when a request reaches the server, how does NGINX know which one of those contexts should handle the request?

The listen directive is one of the ways to identify the correct server context within a configuration. Consider the following scenario:

Now if you send a request to http://nginx-handbook.test:80 then you'll receive "hello from port 80!" as a response. And if you send a request to http://nginx-handbook.test:8080, you'll receive "hello from port 8080!" as a response:

These two server blocks are like two people holding telephone receivers, waiting to respond when a request reaches one of their numbers. Their numbers are indicated by the listen directives.
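The two-port scenario described above can be sketched roughly like this. The response strings come from the description, while the rest of the layout is my assumption:

```nginx
events {
}

http {
    # Answers requests that arrive on port 80.
    server {
        listen 80;
        server_name nginx-handbook.test;
        return 200 "hello from port 80!\n";
    }

    # Answers requests that arrive on port 8080.
    server {
        listen 8080;
        server_name nginx-handbook.test;
        return 200 "hello from port 8080!\n";
    }
}
```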

Apart from the listen directive, there is also the server_name directive. Consider the following scenario of an imaginary library management application:

This is a basic example of the idea of virtual hosts. You're running two separate applications under different server names in the same server.

If you send a request to http://library.test then you'll get "your local library!" as a response. If you send a request to http://librarian.library.test, you'll get "welcome dear librarian!" as a response.
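A rough sketch of this library scenario, assuming both virtual hosts listen on port 80, might look like this:

```nginx
events {
}

http {
    # Chosen when the Host header is library.test.
    server {
        listen 80;
        server_name library.test;
        return 200 "your local library!\n";
    }

    # Chosen when the Host header is librarian.library.test.
    server {
        listen 80;
        server_name librarian.library.test;
        return 200 "welcome dear librarian!\n";
    }
}
```

Both blocks share the same port, so NGINX picks between them by comparing the requested host name against each server_name value.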

To make this demo work on your system, you'll have to update your hosts file to include these two domain names as well:

Finally, the return directive is responsible for returning a valid response to the user. This directive takes two parameters: the status code and the string message to be returned.

Now that you have a good understanding of how to write a basic configuration file for NGINX, let's upgrade the configuration to serve static files instead of plain text responses.

In order to serve static content, you first have to store it somewhere on your server. If you list the files and directories in the root of your server using ls , you'll find a directory called /srv in there:

This /srv directory is meant to contain site-specific data which is served by this system. Now cd into this directory and clone the code repository that comes with this book:

Inside the nginx-handbook-projects directory there should be a directory called static-demo containing four files in total:

Now that you have the static content to be served, update your configuration as follows:

The code is almost the same, except the return directive has now been replaced by a root directive. This directive is used for declaring the root directory for a site.

By writing root /srv/nginx-handbook-projects/static-demo you're telling NGINX to look for files to serve inside the /srv/nginx-handbook-projects/static-demo directory if any request comes to this server. Since NGINX is a web server, it is smart enough to serve the index.html file by default.
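As a sketch, a configuration that swaps return for root could look like this, assuming the same host name and port as before:

```nginx
events {
}

http {
    server {
        listen 80;
        server_name nginx-handbook.test;

        # Look for requested files inside this directory.
        root /srv/nginx-handbook-projects/static-demo;
    }
}
```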

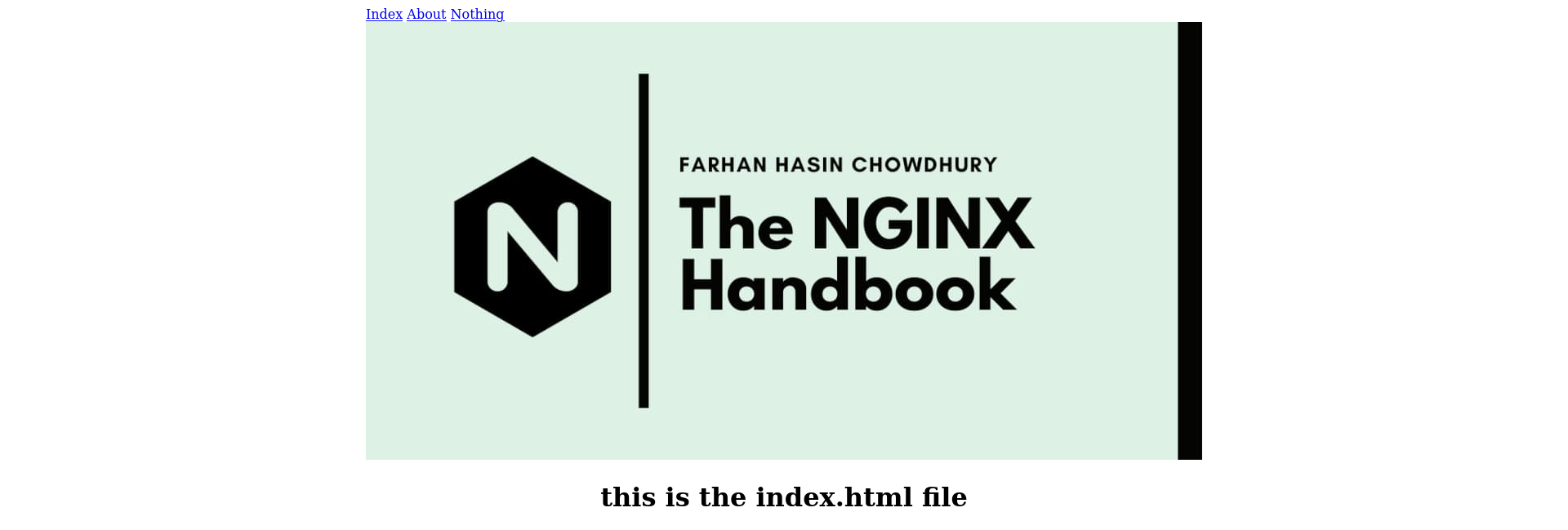

Let's see if this works or not. Test and reload the updated configuration file and visit the server. You should be greeted with a somewhat broken HTML site:

Although NGINX has served the index.html file correctly, judging by the look of the three navigation links, it seems like the CSS code is not working.

You may think that there is something wrong in the CSS file. But in reality, the problem is in the configuration file.

To debug the issue you're facing right now, send a request for the CSS file to the server:

Pay attention to the Content-Type and see how it says text/plain and not text/css . This means that NGINX is serving this file as plain text instead of as a stylesheet.

Although NGINX is smart enough to find the index.html file by default, it's pretty dumb when it comes to interpreting file types. To solve this problem update your configuration once again:

The only change we've made to the code is a new types context nested inside the http block. As you may have already guessed from the name, this context is used for configuring file types.

By writing text/html html in this context you're telling NGINX to parse any file as text/html that ends with the html extension.

You may think that configuring the CSS file type should suffice as the HTML is being parsed just fine – but no.

If you introduce a types context in the configuration, NGINX becomes even dumber and only parses the files configured by you. So if you only define the text/css css in this context then NGINX will start parsing the HTML file as plain text.
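A minimal sketch of a types context covering both file types mentioned above might look like this:

```nginx
events {
}

http {
    # Map file extensions to the Content-Type NGINX should send.
    # Extensions that are not listed here fall back to the default type.
    types {
        text/html html;
        text/css  css;
    }

    server {
        listen 80;
        server_name nginx-handbook.test;
        root /srv/nginx-handbook-projects/static-demo;
    }
}
```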

Validate and reload the newly updated config file and visit the server once again. Send a request for the CSS file once again, and this time the file should be parsed as a text/css file:

Visit the server for a visual verification, and the site should look better this time:

If you've updated and reloaded the configuration file correctly and you're still seeing the old site, perform a hard refresh.

Mapping file types within the types context may work for small projects, but for bigger projects it can be cumbersome and error-prone.

NGINX provides a solution for this problem. If you list the files inside the /etc/nginx directory once again, you'll see a file named mime.types .

Let's have a look at the content of this file:

The file contains a long list of file types and their extensions. To use this file inside your configuration file, update your configuration to look as follows:

The old types context has now been replaced with a new include directive. Like the name suggests, this directive allows you to include content from other configuration files.
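Here is a sketch of how that include could be written, assuming mime.types sits in /etc/nginx as described above:

```nginx
events {
}

http {
    # Pull in the extension-to-type mappings that ship with NGINX.
    include /etc/nginx/mime.types;

    server {
        listen 80;
        server_name nginx-handbook.test;
        root /srv/nginx-handbook-projects/static-demo;
    }
}
```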

Validate and reload the configuration file and send a request for the mini.min.css file once again:

In the section below on how to understand the main configuration file, I'll demonstrate how include can be used to modularize your virtual server configurations.

Dynamic Routing in NGINX

The configuration you wrote in the previous section was a very simple static content server configuration. All it did was match a file from the site root corresponding to the URI the client visits and respond back.

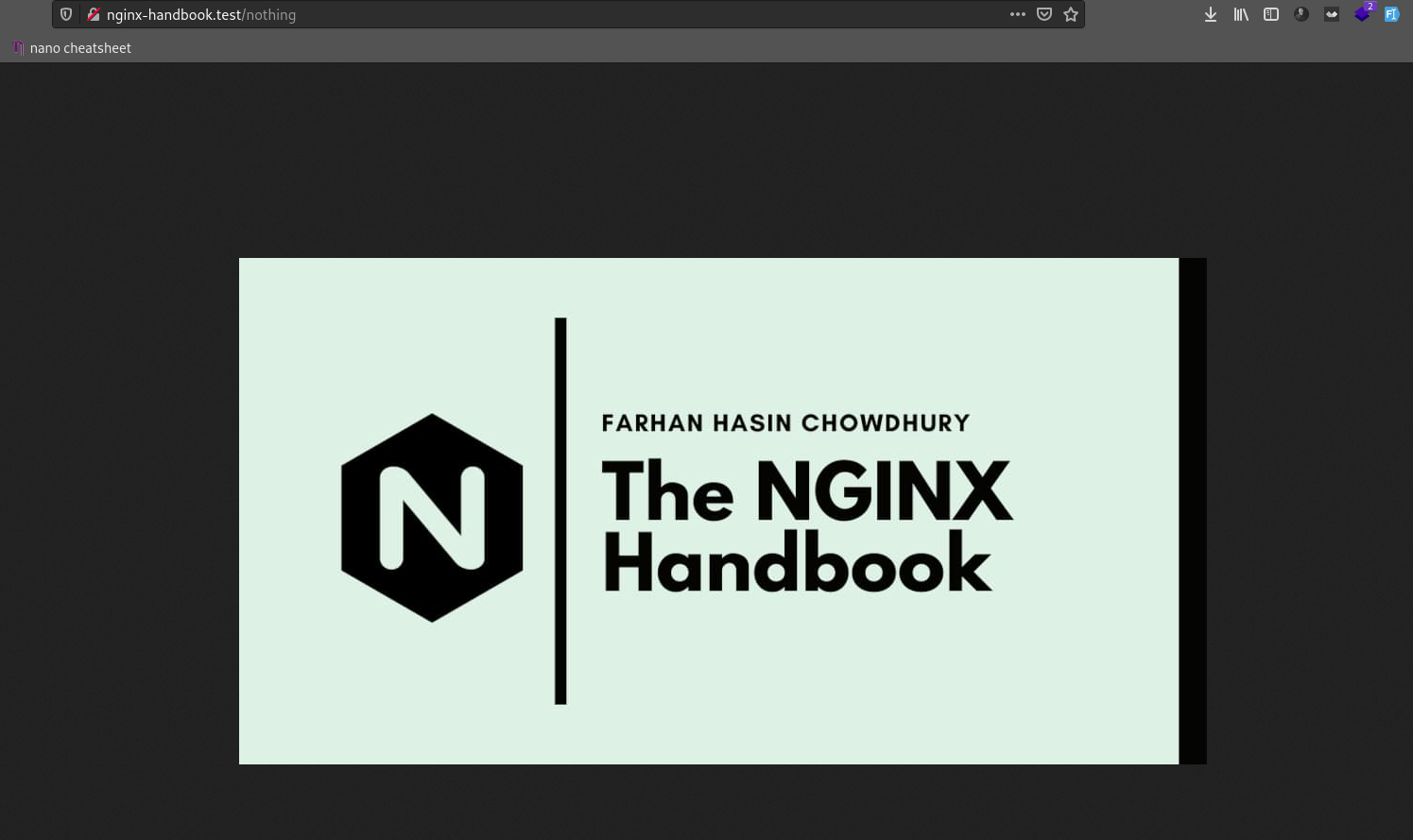

So if the client requests files existing on the root such as index.html , about.html or mini.min.css NGINX will return the file. But if you visit a route such as http://nginx-handbook.test/nothing, it'll respond with the default 404 page:

In this section of the book, you'll learn about the location context, variables, redirects, rewrites and the try_files directive. There will be no new projects in this section but the concepts you learn here will be necessary in the upcoming sections.

Also the configuration will change very frequently in this section, so do not forget to validate and reload the configuration file after every update.

The first concept we'll discuss in this section is the location context. Update the configuration as follows:

We've replaced the root directive with a new location context. This context is usually nested inside server blocks. There can be multiple location contexts within a server context.

If you send a request to http://nginx-handbook.test/agatha, you'll get a 200 response code and list of characters created by Agatha Christie .

Now if you send a request to http://nginx-handbook.test/agatha-christie, you'll get the same response:

This happens because, by writing location /agatha , you're telling NGINX to match any URI starting with "agatha". This kind of match is called a prefix match .
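A prefix match along these lines can be sketched as follows. The response body is a placeholder of mine, since the exact list of characters is not important here:

```nginx
events {
}

http {
    server {
        listen 80;
        server_name nginx-handbook.test;

        # Prefix match: handles /agatha, /agatha-christie, /agatha/anything ...
        location /agatha {
            return 200 "Miss Marple.\nHercule Poirot.\n";
        }
    }
}
```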

To perform an exact match , you'll have to update the code as follows:

Adding an = sign before the location URI will instruct NGINX to respond only if the URL matches exactly. Now if you send a request to anything but /agatha , you'll get a 404 response.
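As a sketch, the exact-match variant only differs by the = modifier (the response body is again a placeholder):

```nginx
server {
    listen 80;
    server_name nginx-handbook.test;

    # Exact match: handles /agatha only, not /agatha-christie.
    location = /agatha {
        return 200 "Miss Marple.\nHercule Poirot.\n";
    }
}
```

This server block goes inside the http context, like the earlier examples.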

Another kind of match in NGINX is the regex match . Using this match you can check location URLs against complex regular expressions.

By replacing the previously used = sign with a ~ sign, you're telling NGINX to perform a regular expression match. Setting the location to ~ /agatha[0-9] means NGINX will only respond if there is a number after the word "agatha":

A regex match is by default case sensitive, which means that if you capitalize any of the letters, the location won't work:

To make this match case insensitive, you'll have to add a * after the ~ sign.

That will tell NGINX to let go of case sensitivity and match the location anyway.
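A sketch of the case insensitive regex location, with a placeholder response body, might look like this:

```nginx
server {
    listen 80;
    server_name nginx-handbook.test;

    # ~* makes the regex case insensitive, so /agatha8 and /Agatha8 both match.
    # With a plain ~ instead, only the lowercase /agatha8 form would match.
    location ~* /agatha[0-9] {
        return 200 "Miss Marple.\nHercule Poirot.\n";
    }
}
```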

NGINX assigns priority values to these matches, and a regex match has more priority than a prefix match.

Now if you send a request to http://nginx-handbook.test/Agatha8, you'll get the following response:

But this priority can be changed a little. The final type of match in NGINX is a preferential prefix match . To turn a prefix match into a preferential one, you need to include the ^~ modifier before the location URI:

This time, the prefix match wins. So the list of all the matches in descending order of priority is as follows:
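To make the priorities concrete, here is a hedged sketch that puts a preferential prefix location next to a regex location. The response strings are placeholders of mine, and the ordering in the comments is the simplified model used in this article:

```nginx
server {
    listen 80;
    server_name nginx-handbook.test;

    # Priority, highest first (simplified):
    #   1. exact match               location = /path
    #   2. preferential prefix match location ^~ /path
    #   3. regex match               location ~ or ~* pattern
    #   4. prefix match              location /path

    # With ^~ this location wins for /Agatha8.
    # Without it, the regex location below would win instead.
    location ^~ /Agatha8 {
        return 200 "prefix matched.\n";
    }

    location ~* /agatha[0-9] {
        return 200 "regex matched.\n";
    }
}
```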

Variables in NGINX are similar to variables in other programming languages. The set directive can be used to declare new variables inside the server , location and if contexts:
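A small sketch of the set directive in use. The variable names and values here are hypothetical and only serve as an illustration:

```nginx
server {
    listen 80;
    server_name nginx-handbook.test;

    # Declare two variables (hypothetical names and values).
    set $greeting "hello";
    set $answer 42;

    location / {
        # Variables are interpolated inside quoted strings.
        return 200 "$greeting, the answer is $answer\n";
    }
}
```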

Variables can be of three types

Apart from the variables you declare, there are embedded variables within NGINX modules. An alphabetical index of variables is available in the official documentation.

To see some of the variables in action, update the configuration as follows:

Now upon sending a request to the server, you should get a response as follows:

As you can see, the $host and $uri variables hold the root address and the requested URI relative to the root, respectively. The $args variable, as you can see, contains all the query strings.

Instead of printing the literal string form of the query strings, you can access the individual values using the $arg_ prefix followed by the argument's name, for example $arg_name for an argument called name .
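The following sketch prints a few embedded variables in one response. The labels in the body are my own, and $arg_name assumes the request carries a query argument called name :

```nginx
server {
    listen 80;
    server_name nginx-handbook.test;

    location / {
        # $host     - host name from the request
        # $uri      - requested URI without the query string
        # $args     - the raw query string
        # $arg_name - value of the "name" query argument only
        return 200 "Host - $host\nURI - $uri\nArgs - $args\nName - $arg_name\n";
    }
}
```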

Now the response from the server should look as follows:

The variables I demonstrated here are embedded in the ngx_http_core_module . For a variable to be accessible in the configuration, NGINX has to be built with the module embedding the variable. Building NGINX from source and usage of dynamic modules is slightly out of scope for this article. But I'll surely write about that in my blog.

A redirect in NGINX is the same as a redirect on any other platform. To demonstrate how redirects work, update your configuration to look like this:

Now if you send a request to http://nginx-handbook.test/about_page, you'll be redirected to http://nginx-handbook.test/about.html:

As you can see, the server responded with a status code of 307 and the location indicates http://nginx-handbook.test/about.html. If you visit http://nginx-handbook.test/about_page from a browser, you'll see that the URL will automatically change to http://nginx-handbook.test/about.html.
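A redirect like the one described here can be sketched with the return directive. The exact-match location is my assumption about how the route is defined:

```nginx
server {
    listen 80;
    server_name nginx-handbook.test;
    root /srv/nginx-handbook-projects/static-demo;

    # Tell the client to request /about.html instead (temporary redirect).
    location = /about_page {
        return 307 /about.html;
    }
}
```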

A rewrite directive, however, works a little differently. It changes the URI internally, without letting the user know. To see it in action, update your configuration as follows:

Now if you send a request to the http://nginx-handbook.test/about_page URI, you'll get a 200 response code and the HTML code for the about.html file in response:

And if you visit the URI using a browser, you'll see the about.html page while the URL remains unchanged:

Apart from the way the URI change is handled, there is another difference between a redirect and rewrite. When a rewrite happens, the server context gets re-evaluated by NGINX. So, a rewrite is a more expensive operation than a redirect.
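As a sketch, the rewrite version could look like the following. Anchoring the pattern with ^ and $ is my choice, so that only /about_page itself is rewritten:

```nginx
server {
    listen 80;
    server_name nginx-handbook.test;
    root /srv/nginx-handbook-projects/static-demo;

    # Internally swap the URI; the client never sees a redirect.
    rewrite ^/about_page$ /about.html;
}
```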

The final concept I'll be showing in this section is the try_files directive. Instead of responding with a single file, the try_files directive lets you check for the existence of multiple files.

As you can see, a new try_files directive has been added. By writing try_files /the-nginx-handbook.jpg /not_found; you're instructing NGINX to look for a file named the-nginx-handbook.jpg on the root whenever a request is received. If it doesn't exist, go to the /not_found location.
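A sketch of such a configuration, assuming the image lives in the static-demo directory and the MIME types are included from /etc/nginx/mime.types :

```nginx
events {
}

http {
    include /etc/nginx/mime.types;

    server {
        listen 80;
        server_name nginx-handbook.test;
        root /srv/nginx-handbook-projects/static-demo;

        # Serve the image if it exists under root, otherwise hand the
        # request over to the /not_found location.
        try_files /the-nginx-handbook.jpg /not_found;

        location /not_found {
            return 404 "sadly, you've hit a brick wall buddy!\n";
        }
    }
}
```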

So now if you visit the server, you'll see the image:

But if you update the configuration to try for a non-existent file such as blackhole.jpg, you'll get a 404 response with the message "sadly, you've hit a brick wall buddy!".

Now the problem with writing a try_files directive this way is that no matter what URL you visit, as long as a request is received by the server and the the-nginx-handbook.jpg file is found on the disk, NGINX will send that back.

And that's why try_files is often used with the $uri NGINX variable.
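A sketch of the same server using $uri, with the document root once more being an assumed path:

```nginx
events {}

http {
    include /etc/nginx/mime.types;

    server {
        listen 80;
        server_name nginx-handbook.test;

        # Assumed document root; adjust to your own setup.
        root /srv/nginx-handbook-projects/static-demo;

        # Try the file named by the request URI first;
        # fall back to /not_found if it doesn't exist.
        try_files $uri /not_found;

        location /not_found {
            return 404 "sadly, you've hit a brick wall buddy!\n";
        }
    }
}
```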

By writing try_files $uri /not_found; you're instructing NGINX to try for the URI requested by the client first. If it doesn't find that one, then try the next one.

So now if you visit http://nginx-handbook.test/index.html you should get the old index.html page. The same goes for the about.html page:

But if you request a file that doesn't exist, you'll get the response from the /not_found location:

One thing that you may have already noticed is that if you visit the server root http://nginx-handbook.test, you get the 404 response.

This is because when you're hitting the server root, the $uri variable doesn't correspond to any existing file so NGINX serves you the fallback location. If you want to fix this issue, update your configuration as follows:
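A sketch of the adjusted configuration, with the same assumed document root as before:

```nginx
events {}

http {
    include /etc/nginx/mime.types;

    server {
        listen 80;
        server_name nginx-handbook.test;

        # Assumed document root; adjust to your own setup.
        root /srv/nginx-handbook-projects/static-demo;

        # 1. the requested URI as a file
        # 2. the requested URI as a directory (note the trailing slash)
        # 3. the /not_found fallback
        try_files $uri $uri/ /not_found;

        location /not_found {
            return 404 "sadly, you've hit a brick wall buddy!\n";
        }
    }
}
```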

By writing try_files $uri $uri/ /not_found; you're instructing NGINX to try the requested URI as a file first. If that doesn't work, it tries the requested URI as a directory, and whenever NGINX ends up in a directory it automatically starts looking for an index.html file.

Now if you visit the server, you should get the index.html file just right:

The try_files directive can be used in a number of variations. In the upcoming sections, you'll encounter a few of them, but I would suggest that you also do some research of your own on the different ways this directive can be used.

By default, NGINX's log files are located inside /var/log/nginx. If you list the content of this directory, you may see something like the following:

Let's begin by emptying the two files.

If you do not dispatch a reopen signal to NGINX, it'll keep writing logs to the previously open streams and the new files will remain empty.

Now to make an entry in the access log, send a request to the server.

As you can see, a new entry has been added to the access.log file. Any request to the server will be logged to this file by default. But we can change this behavior using the access_log directive.
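A sketch of how this might be configured. The response bodies and the exact-match locations are my own placeholders, and the admin log path assumes the /var/log/nginx directory mentioned earlier:

```nginx
events {}

http {
    include /etc/nginx/mime.types;

    server {
        listen 80;
        server_name nginx-handbook.test;

        location / {
            # Logged to the default access log.
            return 200 "this will be logged to the default file.\n";
        }

        location = /admin {
            # Requests to /admin go to a separate log file.
            access_log /var/log/nginx/admin.log;
            return 200 "this will be logged in a separate file.\n";
        }

        location = /no_logging {
            # Disable access logging for this location only.
            access_log off;
            return 200 "this will not be logged.\n";
        }
    }
}
```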

The first access_log directive inside the /admin location block instructs NGINX to write any access log of this URI to the /var/log/nginx/admin.log file. The second one inside the /no_logging location turns off access logs for this location completely.

Validate and reload the configuration. Now if you send requests to these locations and inspect the log files, you should see something like this:

The error.log file, on the other hand, holds the failure logs. To make an entry in error.log, you'll have to make NGINX fail. To do so, update your configuration as follows:

As you know, the return directive takes only two parameters – but we've given three here. Now try reloading the configuration and you'll be presented with an error message:

Check the content of the error log and the message should be present there as well:

Error messages have levels. A notice entry in the error log is harmless, but an emerg or emergency entry has to be addressed right away.

There are eight levels of error messages:

- debug – Useful debugging information to help determine where the problem lies.

- info – Informational messages that aren't necessary to read but may be good to know.

- notice – Something normal happened that is worth noting.

- warn – Something unexpected happened, but it is not a cause for concern.

- error – Something was unsuccessful.

- crit – There are problems that need to be critically addressed.

- alert – Prompt action is required.

- emerg – The system is in an unusable state and requires immediate attention.

By default, NGINX records all levels of messages. You can override this behavior using the error_log directive. If you want to set the minimum level of a message to be warn, then update your configuration file as follows:
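A minimal sketch, assuming the error log lives at the default /var/log/nginx/error.log path mentioned earlier; the server block is only a placeholder so the file is complete:

```nginx
# First parameter: the log file. Second parameter: the minimum level to record.
error_log /var/log/nginx/error.log warn;

events {}

http {
    include /etc/nginx/mime.types;

    server {
        listen 80;
        server_name nginx-handbook.test;

        # Placeholder response so the server has something to serve.
        return 200 "..logging demo..\n";
    }
}
```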

Validate and reload the configuration, and from now on only messages with a level of warn or above will be logged.

Unlike the previous output, there are no notice entries here. emerg is a higher level error than warn and that's why it has been logged.

For most projects, leaving the error configuration as it is should be fine. The only suggestion I have is to set the minimum error level to warn. This way you won't have to look at unnecessary entries in the error log.

But if you want to learn more about customizing logging in NGINX, this link to the official docs may help.

How to Use NGINX as a Reverse Proxy

When configured as a reverse proxy, NGINX sits between the client and a back end server. The client sends requests to NGINX, then NGINX passes the request to the back end.

Once the back end server finishes processing the request, it sends the response back to NGINX. In turn, NGINX returns the response to the client.

During the whole process, the client doesn't have any idea about who's actually processing the request. It sounds complicated in writing, but once you do it for yourself you'll see how easy NGINX makes it.

Let's see a very basic and impractical example of a reverse proxy:
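A sketch of such a configuration, using the nginx.test server name and the nginx.org back end that the following paragraphs refer to:

```nginx
events {}

http {
    include /etc/nginx/mime.types;

    server {
        listen 80;
        server_name nginx.test;

        location / {
            # Forward every request to nginx.org and relay its response.
            proxy_pass "https://nginx.org/";
        }
    }
}
```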

Apart from validating and reloading the configuration, you'll also have to add this address to your hosts file to make this demo work on your system:

Now if you visit http://nginx.test, you'll be greeted by the original https://nginx.org site while the URI remains unchanged.

You should even be able to navigate around the site to an extent. If you visit http://nginx.test/en/docs/ you should get the http://nginx.org/en/docs/ page in response.

So as you can see, at a basic level, the proxy_pass directive simply passes a client's request to a third party server and reverse proxies the response to the client.

Now that you know how to configure a basic reverse proxy server, you can serve a Node.js application reverse proxied by NGINX. I've added a demo application inside the repository that comes with this article.

I'm assuming that you have experience with Node.js and know how to start a Node.js application using PM2.

If you've already cloned the repository inside /srv/nginx-handbook-projects then the node-js-demo project should be available in the /srv/nginx-handbook-projects/node-js-demo directory.

For this demo to work, you'll need to install Node.js on your server. You can do that following the instructions found here .

The demo application is a simple HTTP server that responds with a 200 status code and a JSON payload. You can start the application by simply executing node app.js but a better way is to use PM2 .

For those of you who don't know, PM2 is a daemon process manager widely used in production for Node.js applications. If you want to learn more, this link may help.

Install PM2 globally by executing sudo npm install -g pm2. After the installation is complete, execute the following command from inside the /srv/nginx-handbook-projects/node-js-demo directory:

Alternatively you can also do pm2 start /srv/nginx-handbook-projects/node-js-demo/app.js from anywhere on the server. You can stop the application by executing the pm2 stop app command.

The application should be running now but should not be accessible from outside of the server. To verify that the application is running, send a GET request to http://localhost:3000 from inside your server:

If you get a 200 response, then the server is running fine. Now to configure NGINX as a reverse proxy, open your configuration file and update its content as follows:
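A minimal sketch, assuming the Node.js application listens on localhost:3000 as described above:

```nginx
events {}

http {
    server {
        listen 80;
        server_name nginx-handbook.test;

        location / {
            # Hand every request over to the Node.js app on port 3000.
            proxy_pass http://localhost:3000;
        }
    }
}
```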

Nothing new to explain here. You're just passing the received request to the Node.js application running at port 3000. Now if you send a request to the server from outside you should get a response as follows:

Although this works for a basic server like this, you may have to add a few more directives to make it work in a real world scenario depending on your application's requirements.

For example, if your application handles web socket connections, then you should update the configuration as follows:
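A sketch of a WebSocket-friendly version of the same server, assuming the application still listens on localhost:3000:

```nginx
events {}

http {
    server {
        listen 80;
        server_name nginx-handbook.test;

        location / {
            proxy_pass http://localhost:3000;

            # WebSocket needs at least HTTP/1.1 between NGINX and the back end.
            proxy_http_version 1.1;

            # Forward the client's Upgrade header and ask for a connection upgrade.
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection 'upgrade';
        }
    }
}
```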

The proxy_http_version directive sets the HTTP version that NGINX uses when talking to the back-end server. By default it's 1.0, but web socket requires it to be at least 1.1. The proxy_set_header directive is used for setting a header on the request passed to the back-end server. The generic syntax for this directive is proxy_set_header <header name> <header value>;

So, by writing proxy_set_header Upgrade $http_upgrade; you're instructing NGINX to pass the value of the $http_upgrade variable as a header named Upgrade – same for the Connection header.

If you would like to learn more about web socket proxying, this link to the official NGINX docs may help.

Depending on the headers required by your application, you may have to set more of them. But the above mentioned configuration is very commonly used to serve Node.js applications.

PHP and NGINX go together like bread and butter. After all, the E and the P in the LEMP stack stand for NGINX and PHP.

I'm assuming you have experience with PHP and know how to run a PHP application.

I've already included a demo PHP application in the repository that comes with this article. If you've already cloned it in the /srv/nginx-handbook-projects directory, then the application should be inside /srv/nginx-handbook-projects/php-demo .

For this demo to work, you'll have to install a package called PHP-FPM. To install the package, you can execute the following command:

To test out the application, start a PHP server by executing the following command while inside the /srv/nginx-handbook-projects/php-demo directory:

Alternatively you can also do php -S localhost:8000 /srv/nginx-handbook-projects/php-demo/index.php from anywhere on the server.

The application should be running at port 8000 but it cannot be accessed from outside of the server. To verify, send a GET request to http://localhost:8000 from inside your server:

If you get a 200 response then the server is running fine. Just like the Node.js configuration, now you can simply proxy_pass the requests to localhost:8000 – but with PHP, there is a better way.

The FPM part in PHP-FPM stands for FastCGI Process Manager. FastCGI is a protocol, just like HTTP, for exchanging binary data. This protocol is slightly faster than HTTP and provides better security.

To use FastCGI instead of HTTP, update your configuration as follows:
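A sketch of such a configuration. The location patterns are my assumptions, and I'm using the generic /run/php/php-fpm.sock socket here; if you prefer a versioned socket file, substitute the one present on your system:

```nginx
events {}

http {
    include /etc/nginx/mime.types;

    server {
        listen 80;
        server_name nginx-handbook.test;
        root /srv/nginx-handbook-projects/php-demo;

        # Serve index.php when a directory is requested.
        index index.php;

        location / {
            # Try the URI as a file, then as a directory, otherwise return 404.
            try_files $uri $uri/ =404;
        }

        # Assumed pattern: hand any URI ending in .php to PHP-FPM.
        location ~ \.php$ {
            fastcgi_pass unix:/run/php/php-fpm.sock;
            fastcgi_param REQUEST_METHOD $request_method;
            fastcgi_param SCRIPT_FILENAME $realpath_root$fastcgi_script_name;
        }
    }
}
```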

Let's begin with the new index directive. As you know, NGINX by default looks for an index.html file to serve. But in the demo project, it's called index.php. So by writing index index.php, you're instructing NGINX to use the index.php file as the index file instead.

This directive can accept multiple parameters. If you write something like index index.php index.html, NGINX will first look for index.php. If it doesn't find that file, it will look for an index.html file.

The try_files directive inside the first location context is the same as you've seen in a previous section. The =404 at the end indicates the error to throw if none of the files are found.

The second location block is the place where the main magic happens. As you can see, we've replaced the proxy_pass directive with a new fastcgi_pass directive. As the name suggests, it's used to pass a request to a FastCGI service.

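The PHP-FPM service can also listen on a TCP port, conventionally port 9000 of the host. So instead of using a Unix socket like I've done here, you can pass the request to localhost:9000 directly. Note that fastcgi_pass takes a plain address without the http:// prefix, since the request is not sent over HTTP. But using a Unix socket is more secure.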

If you have multiple PHP-FPM versions installed, you can simply list all the socket file locations by executing the following command:

The /run/php/php-fpm.sock file refers to the latest version of PHP-FPM installed on your system. I prefer using the one with the version number. This way even if PHP-FPM gets updated, I'll be certain about the version I'm using.

Unlike passing requests through HTTP, passing requests to PHP-FPM over FastCGI requires us to pass some extra information.

The general way of passing extra information to the FPM service is using the fastcgi_param directive. At the very least, you'll have to pass the request method and the script name to the back-end service for the proxying to work.

The fastcgi_param REQUEST_METHOD $request_method; passes the request method to the back-end and the fastcgi_param SCRIPT_FILENAME $realpath_root$fastcgi_script_name; line passes the exact location of the PHP script to run.

At this stage, your configuration should work. To test it out, visit your server and you should be greeted by something like this:

Well, that's weird. A 500 error means something has gone wrong on the server side. This is where the error logs can come in handy. Let's have a look at the last entry in the error.log file:

Seems like the NGINX process is being denied permission to access the PHP-FPM process.

One of the main reasons for getting a permission denied error is user mismatch. Have a look at the user owning the NGINX worker process.

As you can see, the process is currently owned by nobody. Now inspect the PHP-FPM process.