Visual Search Patterns for Safe Driving: Proactive Scanning

When it comes to collecting visual information while driving, there is a right way and a wrong way to go about it. Knowing where to look, and for how long, can be confusing for new drivers, particularly when there is so much to keep track of inside your car, right in front of the vehicle and 20 seconds ahead of you on the roadway. To drive safely, you need to adopt a systematic and efficient method of visually scanning your environment.

Never allow yourself to stay focused on one point on the roadway while driving. Sure, you need to monitor the situation on the road directly in front of your vehicle, but you also need to monitor the gauges inside your car and the situation on the roadway some distance ahead. Organizing a visual search of the roadway is easier and more effective when we sort the things we need to monitor into ranges and switch our attention between these points at regular intervals.

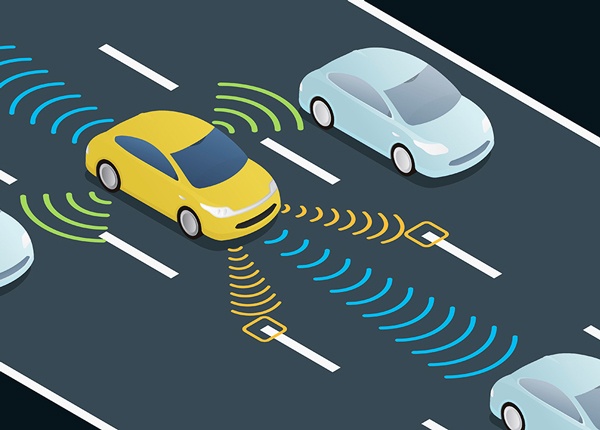

Everything in the area 4 to 6 seconds ahead of you, including the car’s dashboard, falls into your immediate range. The rear of the car in front of you should mark the far end of your immediate range when you are driving at a safe following distance. All the visual information you receive within this range will tell you how to set your speed and position the vehicle within the lane. Therefore, by the time a point at which you need to stop, turn or merge has entered your immediate range, you should already have started the necessary maneuver.

Beyond your immediate range is the secondary range, which covers everything from the end of the immediate range up to 12 to 15 seconds ahead of your vehicle. Any visual information gathered from your secondary range will help you make decisions about your speed and lane position. At this distance, you may prepare to execute a maneuver, respond to an upcoming situation and communicate with oncoming traffic.

The farthest range you must scan is your target area range (also known as the visual lead area), which covers the area about 20 to 30 seconds ahead of your vehicle. When directing your attention to the target area range, you should look for all key visual information relating to potential hazards and changes in the roadway environment that may require action as the target area enters your secondary range. You should aim to select a target area as far ahead as possible while maintaining a clear line of sight. This will give you the maximum amount of time to absorb and react to visual information.
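Because the three ranges are defined in seconds rather than feet, the actual distance each one covers grows with your speed. The short Python sketch below is only an illustration of that arithmetic: it assumes a constant speed, uses the second counts quoted above as the far edge of each range, and every name in it (`RANGE_FAR_EDGE_SECONDS`, `distance_ahead_feet`, `range_distances_feet`) is invented for this example.

```python
# Illustrative sketch only: turns the time-based scanning ranges described
# above into rough distances. The names below are made up for this example.

# Far edge of each range in seconds ahead, as (low, high) estimates
# taken from the figures quoted in the article.
RANGE_FAR_EDGE_SECONDS = {
    "immediate": (4, 6),
    "secondary": (12, 15),
    "target area": (20, 30),
}

FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def distance_ahead_feet(speed_mph: float, seconds: float) -> float:
    """Distance covered in `seconds` at a constant `speed_mph`, in feet."""
    return speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR * seconds


def range_distances_feet(speed_mph: float) -> dict[str, tuple[float, float]]:
    """Map each range name to the (low, high) distance of its far edge."""
    return {
        name: (
            distance_ahead_feet(speed_mph, low),
            distance_ahead_feet(speed_mph, high),
        )
        for name, (low, high) in RANGE_FAR_EDGE_SECONDS.items()
    }


if __name__ == "__main__":
    for name, (low_ft, high_ft) in range_distances_feet(60).items():
        print(f"{name}: {low_ft:.0f} to {high_ft:.0f} ft ahead at 60 mph")
```

At 60 mph a car covers 88 feet every second, so a target area 30 seconds ahead sits roughly 2,640 feet (half a mile) down the road, while at 30 mph the same 30 seconds is only half that distance. Real traffic rarely holds a constant speed, so treat these figures as rough guides rather than exact measurements.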

While driving, you must always keep your eyes moving between these three ranges so that no important information is neglected. The vehicle immediately in front of you may obstruct your view to some extent, though you can maximize your view of the secondary and target area ranges by maintaining a safe following distance and positioning your vehicle appropriately within the lane. Allow a greater following distance when traveling behind very large vehicles, as they may completely obscure your line of sight and hide other smaller vehicles.

In addition to alternating between the immediate, secondary and target area ranges, you must glance at your mirrors every three to five seconds and visually check the space to the sides of your vehicle. Remember that in doing so, you must not take your attention away from the roadway for more than about half a second at a time.

Safe Following Distance

It is impossible to drive safely and attentively without leaving enough space between your vehicle and the car ahead of you. Maintaining an adequate following distance is crucial to maximize your view of the roadway up ahead.

The Effects of Speed

Keeping speed to a minimum is one of the best risk-reducing tactics you can employ as an attentive driver. As the speed you are traveling at increases, so too does the danger you are exposed to and the challenges you face.

Interacting With Other Drivers

Without effective communication between motorists, it would be impossible to predict the movement of other vehicles and negotiate the roadway safely. Attentive, conscientious motorists think about how their actions will affect other drivers and endeavor to behave considerately at all times. This is not simply a matter of being polite; it is a matter of safety.

The Importance of Paying Attention

Your ability to fully and consistently focus your attention on the environment around your vehicle is every bit as important as your road rule knowledge and vehicle control skills. Paying attention while driving is an important skill which must not be overlooked while you’re learning to drive.

The Importance of Good Vision

No sense is more important to a driver than vision. As your eyes are responsible for 90% of the information you receive while driving, good vision is essential in making safe and appropriate driving decisions.

Vision Impairments

People with less than 20/40 vision do not qualify for an unrestricted driver’s license in most states. However, there are vast numbers of people with poorer than 20/40 vision who can drive safely and legally under a restricted license, providing they wear corrective glasses or contact lenses. Only in extreme cases of vision impairment or blindness will a person be refused a driving license altogether.

Mental Skills for Driving

The “vision, memory and understanding” trinity allows you to assess and make decisions based on all the information your eyes receive while you’re driving. If you do not receive accurate visual information due to a vision impairment, or do not have relevant memory information stored in your brain to help you make sense of what you have seen, you may not respond to roadway hazards appropriately.

Proprioception and Kinesthesia

While most of the information we receive while driving is visual, our other senses are important too. In addition to sight, our brains collect information about the world around us via hearing, smell, taste and touch.

Visual Targeting

Visual targeting is the practice of focusing your attention on a stationary object which is 12 to 20 seconds ahead of your vehicle. As you move closer to your visual target, you should then select a new fixed object within that 12 to 20-second window, repeating this process continually as you move along the roadway.

Visual Search Strategies

Safe driving depends on your ability to notice many things at once. Our eyes provide two types of vision: central vision and peripheral, or side, vision. Central vision allows us to make very important judgments, such as estimating distance and understanding details in the path ahead, whereas peripheral vision helps us detect events to the side that are important to us, even when we're not looking directly at them. Most driving mistakes are caused by bad habits in the way drivers use their eyes.

IPDE (Identify, Predict, Decide, and Execute) is an important concept in defensive driving. To learn more about its principles, read the following section carefully.

To avoid last-minute moves and spot possible traffic hazards, you should always look down the road ahead of your vehicle. Start braking early if you see any hazards or traffic ahead of you slowing down. Also check the space between your car and any vehicles in the lane next to you. It is very important to check behind you before you change lanes, slow down quickly, back up, or drive down a long or steep hill. You should also glance at your instrument panel often to ensure there are no problems with the vehicle and to verify your speed.

- Using Your Eyes Effectively

- Visual Search Categories

- Scanning The Road

© 1997-2024 DriversEd.com. All rights reserved. Please see our privacy policy for more details.

- Review Article

- Published: 08 March 2017

Five factors that guide attention in visual search

- Jeremy M. Wolfe 1 &

- Todd S. Horowitz 2

Nature Human Behaviour volume 1, Article number: 0058 (2017)

- Human behaviour

- Visual system

How do we find what we are looking for? Even when the desired target is in the current field of view, we need to search because fundamental limits on visual processing make it impossible to recognize everything at once. Searching involves directing attention to objects that might be the target. This deployment of attention is not random. It is guided to the most promising items and locations by five factors discussed here: bottom-up salience, top-down feature guidance, scene structure and meaning, the previous history of search over timescales ranging from milliseconds to years, and the relative value of the targets and distractors. Modern theories of visual search need to incorporate all five factors and specify how these factors combine to shape search behaviour. An understanding of the rules of guidance can be used to improve the accuracy and efficiency of socially important search tasks, from security screening to medical image perception.

Hyman, I. E., Boss, S. M., Wise, B. M., McKenzie, K. E. & Caggiano, J. M. Did you see the unicycling clown? Inattentional blindness while walking and talking on a cell phone. Appl. Cognitive Psych. 24 , 597–607 (2010).

Article Google Scholar

Keshvari, S. & Rosenholtz, R. Pooling of continuous features provides a unifying account of crowding. J. Vis. 16 , 39 (2016).

Article PubMed PubMed Central Google Scholar

Rosenholtz, R., Huang, J. & Ehinger, K. A. Rethinking the role of top-down attention in vision: effects attributable to a lossy representation in peripheral vision. Front. Psychol. http://dx.doi.org/10.3389/fpsyg.2012.00013 (2012).

Wolfe, J. M. What do 1,000,000 trials tell us about visual search? Psychol. Sci. 9 , 33–39 (1998).

Moran, R., Zehetleitner, M., Liesefeld, H., Müller, H. & Usher, M. Serial vs. parallel models of attention in visual search: accounting for benchmark RT-distributions. Psychon. B. Rev. 23 , 1300–1315 (2015).

Townsend, J. T. & Wenger, M. J. The serial-parallel dilemma: a case study in a linkage of theory and method. Psychon. B. Rev. 11 , 391–418 (2004).

Egeth, H. E., Virzi, R. A. & Garbart, H. Searching for conjunctively defined targets. J. Exp. Psychol. Human 10 , 32–39 (1984).

Article CAS Google Scholar

Kristjansson, A. Reconsidering visual search. i-Perception http://dx.doi.org/10.1177/2041669515614670 (2015).

Wolfe, J. M. Visual search revived: the slopes are not that slippery: a comment on Kristjansson (2015). i-Perception http://dx.doi.org/10.1177/2041669516643244 (2016).

Neider, M. B. & Zelinsky, G. J. Exploring set size effects in scenes: identifying the objects of search. Vis. Cogn. 16 , 1–10 (2008).

Wolfe, J. M., Alvarez, G. A., Rosenholtz, R., Kuzmova, Y. I. & Sherman, A. M. Visual search for arbitrary objects in real scenes. Atten. Percept. Psychophys. 73 , 1650–1671 (2011).

Kovacs, I. & Julesz, B. A closed curve is much more than an incomplete one: effect of closure in figure-ground segmentation. Proc. Natl Acad. Sci. USA 90 , 7495–7497 (1993).

Article CAS PubMed PubMed Central Google Scholar

Taylor, S. & Badcock, D. Processing feature density in preattentive perception. Percept. Psychophys. 44 , 551–562 (1988).

Article CAS PubMed Google Scholar

Wolfe, J. M. & DiMase, J. S. Do intersections serve as basic features in visual search? Perception 32 , 645–656 (2003).

Article PubMed Google Scholar

Buetti, S., Cronin, D. A., Madison, A. M., Wang, Z. & Lleras, A. Towards a better understanding of parallel visual processing in human vision: evidence for exhaustive analysis of visual information. J. Exp. Psychol. Gen. 145 , 672–707 (2016).

Duncan, J. & Humphreys, G. W. Visual search and stimulus similarity. Psychol. Rev. 96 , 433–458 (1989).

Koehler, K., Guo, F., Zhang, S. & Eckstein, M. P. What do saliency models predict? J. Vis. 14 , 14 (2014).

Koch, C. & Ullman, S. Shifts in selective visual attention: towards the underlying neural circuitry. Human Neurobiol. 4 , 219–227 (1985).

CAS Google Scholar

Itti, L., Koch, C. & Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE T. Pattern Anal. 20 , 1254–1259 (1998).

Itti, L. & Koch, C. A saliency-based search mechanism for overt and covert shifts of visual attention. Vision. Res 40 , 1489–1506 (2000).

Bruce, N. D. B., Wloka, C., Frosst, N., Rahman, S. & Tsotsos, J. K. On computational modeling of visual saliency: examining what's right, and what's left. Vision Res. 116 , 95–112 (2015).

Zhang, L., Tong, M. H., Marks, T. K., Shan, H. & Cottrell, G. W. SUN: A Bayesian framework for saliency using natural statistics. J. Vis. 8 , 1–20 (2008).

Henderson, J. M., Malcolm, G. L. & Schandl, C. Searching in the dark: cognitive relevance drives attention in real-world scenes. Psychon. Bull. Rev. 16 , 850–856 (2009).

Tatler, B. W., Hayhoe, M. M., Land, M. F. & Ballard, D. H. Eye guidance in natural vision: reinterpreting salience. J. Vis. 11 , 5 (2011).

Nuthmann, A. & Henderson, J. M. Object-based attentional selection in scene viewing. J. Vis. 10 , 20 (2010).

Einhäuser, W., Spain, M. & Perona, P. Objects predict fixations better than early saliency. J. Vis. 8 , 18 (2008).

Stoll, J., Thrun, M., Nuthmann, A. & Einhäuser, W. Overt attention in natural scenes: objects dominate features. Vision Res. 107 , 36–48 (2015).

Maunsell, J. H. & Treue, S. Feature-based attention in visual cortex. Trends Neurosci. 29 , 317–322 (2006).

Nordfang, M. & Wolfe, J. M. Guided search for triple conjunctions. Atten. Percept. Psychophys. 76 , 1535–1559 (2014).

Friedman-Hill, S. R. & Wolfe, J. M. Second-order parallel processing: visual search for the odd item in a subset. J. Exp. Psychol. Human 21 , 531–551 (1995).

Olshausen, B. A. & Field, D. J. Sparse coding of sensory inputs. Curr. Opin. Neurobiol. 14 , 481–487 (2004).

DiCarlo, J. J., Zoccolan, D. & Rust, N. C. How does the brain solve visual object recognition? Neuron 73 , 415–434 (2012).

Vickery, T. J., King, L.-W. & Jiang, Y. Setting up the target template in visual search. J. Vis. 5 , 8 (2005).

Neisser, U. Cognitive Psychology (Appleton-Century-Crofts, 1967).

Treisman, A. & Gelade, G. A feature-integration theory of attention. Cognitive Psychol. 12 , 97–136 (1980).

Wolfe, J. M., Cave, K. R. & Franzel, S. L. Guided search: an alternative to the feature integration model for visual search. J. Exp. Psychol. Human 15 , 419–433 (1989).

Wolfe, J. M. in Oxford Handbook of Attention (eds Nobre, A. C & Kastner, S. ) 11–55 (Oxford Univ. Press, 2014).

Google Scholar

Wolfe, J. M. & Horowitz, T. S. What attributes guide the deployment of visual attention and how do they do it? Nat. Rev. Neurosci. 5 , 495–501 (2004).

Alexander, R. G., Schmidt, J. & Zelinsky, G. J. Are summary statistics enough? Evidence for the importance of shape in guiding visual search. Vis. Cogn. 22 , 595–609 (2014).

Yamins, D. L. K. & DiCarlo, J. J. Using goal-driven deep learning models to understand sensory cortex. Nat. Neurosci. 19 , 356–365 (2016).

Reijnen, E., Wolfe, J. M. & Krummenacher, J. Coarse guidance by numerosity in visual search. Atten. Percept. Psychophys. 75 , 16–28 (2013).

Godwin, H. J., Hout, M. C. & Menneer, T. Visual similarity is stronger than semantic similarity in guiding visual search for numbers. Psychon. Bull. Rev. 21 , 689–695 (2014).

Gao, T., Newman, G. E. & Scholl, B. J. The psychophysics of chasing: a case study in the perception of animacy. Cogn. Psychol. 59 , 154–179 (2009).

Meyerhoff, H. S., Schwan, S. & Huff, M. Perceptual animacy: visual search for chasing objects among distractors. J. Exp Psychol. Human 40 , 702–717 (2014).

Notebaert, L., Crombez, G., Van Damme, S., De Houwer, J. & Theeuwes, J. Signals of threat do not capture, but prioritize, attention: a conditioning approach. Emotion 11 , 81–89 (2011).

Wolfe, J. M. & Franzel, S. L. Binocularity and visual search. Percept. Psychophys. 44 , 81–93 (1988).

Paffen, C., Hooge, I., Benjamins, J. & Hogendoorn, H. A search asymmetry for interocular conflict. Atten. Percept. Psychophys. 73 , 1042–1053 (2011).

Paffen, C. L., Hessels, R. S. & Van der Stigchel, S. Interocular conflict attracts attention. Atten. Percept. Psychophys. 74 , 251–256 (2012).

Zou, B., Utochkin, I. S., Liu, Y. & Wolfe, J. M. Binocularity and visual search—revisited. Atten. Percept. Psychophys. 79 , 473–483 (2016).

Hershler, O. & Hochstein, S . At first sight: a high-level pop out effect for faces. Vision Res. 45 , 1707–1724 (2005).

Golan, T., Bentin, S., DeGutis, J. M., Robertson, L. C. & Harel, A. Association and dissociation between detection and discrimination of objects of expertise: evidence from visual search. Atten. Percept. Psychophys. 76 , 391–406 (2014).

VanRullen, R. On second glance: still no high-level pop-out effect for faces. Vision Res. 46 , 3017–3027 (2006).

Hershler, O. & Hochstein, S. With a careful look: still no low-level confound to face pop-out. Vision Res. 46 , 3028–3035 (2006).

Frischen, A., Eastwood, J. D. & Smilek, D. Visual search for faces with emotional expressions. Psychol. Bull. 134 , 662–676 (2008).

Dugué, L., McLelland, D., Lajous, M. & VanRullen, R. Attention searches nonuniformly in space and in time. Proc. Natl Acad. Sci. USA 112 , 15214–15219 (2015).

Gerritsen, C., Frischen, A., Blake, A., Smilek, D. & Eastwood, J. D. Visual search is not blind to emotion. Percept. Psychophys. 70 , 1047–1059 (2008).

Aks, D. J. & Enns, J. T. Visual search for size is influenced by a background texture gradient. J. Exp. Psychol. Human 22 , 1467–1481 (1996).

Richards, W. & Kaufman, L. ‘Centre-of-gravity’ tendencies for fixations and flow patterns. Percept. Psychophys 5 , 81–84 (1969).

Kuhn, G. & Kingstone, A. Look away! Eyes and arrows engage oculomotor responses automatically. Atten. Percept. Psychophys. 71 , 314–327 (2009).

Rensink, R. A. in Human Attention in Digital Environments (ed. Roda, C. ) Ch 3, 63–92 (Cambridge Univ. Press, 2011).

Book Google Scholar

Enns, J. T. & Rensink, R. A. Influence of scene-based properties on visual search. Science 247 , 721–723 (1990).

Zhang, X., Huang, J., Yigit-Elliott, S. & Rosenholtz, R. Cube search, revisited. J. Vis. 15 , 9 (2015).

Wolfe, J. M. & Myers, L. Fur in the midst of the waters: visual search for material type is inefficient. J. Vis. 10 , 8 (2010).

Kunar, M. A. & Watson, D. G. Visual search in a multi-element asynchronous dynamic (MAD) world. J. Exp. Psychol. Human 37 , 1017–1031 (2011).

Ehinger, K. A. & Wolfe, J. M. How is visual search guided by shape? Using features from deep learning to understand preattentive “shape space”. In Vision Sciences Society 16th Annual Meeting (2016); http://go.nature.com/2l1azoy

Vickery, T. J., King, L. W. & Jiang, Y. Setting up the target template in visual search. J. Vis. 5 , 81–92 (2005).

Biederman, I., Mezzanotte, R. J. & Rabinowitz, J. C. Scene perception: detecting and judging objects undergoing relational violations. Cognitive Psychol. 14 , 143–177 (1982).

Henderson, J. M. Object identification in context: the visual processing of natural scenes. Can. J. Psychol. 46 , 319–341 (1992).

Henderson, J. M. & Hollingworth, A. High-level scene perception. Annu. Rev. Psychol. 50 , 243–271 (1999).

Vo, M. L. & Wolfe, J. M. Differential ERP signatures elicited by semantic and syntactic processing in scenes. Psychol. Sci. 24 , 1816–1823 (2013).

‘t Hart, B. M., Schmidt, H. C. E. F., Klein-Harmeyer, I. & Einhä user, W. Attention in natural scenes: contrast affects rapid visual processing and fixations alike. Philos. T. Roy. Soc. B 368 , http://dx.doi.org/10.1098/rstb.2013.0067 (2013).

Henderson, J. M., Brockmole, J. R., Castelhano, M. S. & Mack, M. L. in Eye Movement Research: Insights into Mind and Brain (eds van Gompel, R., Fischer, M., Murray, W., & Hill, R. ) 537–562 (Elsevier, 2007).

Rensink, R. A. Seeing, sensing, and scrutinizing. Vision Res. 40 , 1469–1487 (2000).

Castelhano, M. S. & Henderson, J. M. Initial scene representations facilitate eye movement guidance in visual search. J. Exp. Psychol. Human 33 , 753–763 (2007).

Vo, M. L.-H. & Henderson, J. M. The time course of initial scene processing for eye movement guidance in natural scene search. J. Vis. 10 , 14 (2010).

Hollingworth, A. Two forms of scene memory guide visual search: memory for scene context and memory for the binding of target object to scene location. Vis. Cogn. 17 , 273–291 (2009).

Oliva, A. in Neurobiology of Attention (eds Itti, L., Rees, G., & Tsotsos, J. ) 251–257 (Academic Press, 2005).

Greene, M. R. & Oliva, A. The briefest of glances: the time course of natural scene understanding. Psychol. Sci. 20 , 464–472 (2009).

Castelhano, M. & Heaven, C. Scene context influences without scene gist: eye movements guided by spatial associations in visual search. Psychon. B. Rev. 18 , 890–896 (2011).

Malcolm, G. L. & Henderson, J. M. Combining top-down processes to guide eye movements during real-world scene search. J. Vis. 10 , 1–11 (2010).

Torralba, A., Oliva, A., Castelhano, M. S. & Henderson, J. M. Contextual guidance of eye movements and attention in real-world scenes: the role of global features on object search. Psychol. Rev. 113 , 766–786 (2006).

Vo, M. L. & Wolfe, J. M. When does repeated search in scenes involve memory? Looking at versus looking for objects in scenes. J. Exp. Psychol. Human 38 , 23–41 (2012).

Vo, M. L.-H. & Wolfe, J. M. The role of memory for visual search in scenes. Ann. NY Acad. Sci. 1339 , 72–81 (2015).

Hillstrom, A. P., Scholey, H., Liversedge, S. P. & Benson, V. The effect of the first glimpse at a scene on eye movements during search. Psychon. B. Rev. 19 , 204–210 (2012).

Hwang, A. D., Wang, H.-C. & Pomplun, M. Semantic guidance of eye movements in real-world scenes. Vision Res. 51 , 1192–1205 (2011).

Watson, D. G. & Humphreys, G. W. Visual marking: prioritizing selection for new objects by top-down attentional inhibition of old objects. Psychol. Rev. 104 , 90–122 (1997).

Donk, M. & Theeuwes, J. Prioritizing selection of new elements: bottom-up versus top-down control. Percept. Psychophys. 65 , 1231–1242 (2003).

Maljkovic, V. & Nakayama, K. Priming of popout: I. Role of features. Mem. Cognition 22 , 657–672 (1994).

Lamy, D., Zivony, A. & Yashar, A. The role of search difficulty in intertrial feature priming. Vision Res. 51 , 2099–2109 (2011).

Wolfe, J., Horowitz, T., Kenner, N. M., Hyle, M. & Vasan, N. How fast can you change your mind? The speed of top-down guidance in visual search. Vision Res. 44 , 1411–1426 (2004).

Wolfe, J. M., Butcher, S. J., Lee, C. & Hyle, M. Changing your mind: on the contributions of top-down and bottom-up guidance in visual search for feature singletons. J. Exp. Psychol. Human 29 , 483–502 (2003).

Kristjansson, A. Simultaneous priming along multiple feature dimensions in a visual search task. Vision Res. 46 , 2554–2570 (2006).

Kristjansson, A. & Driver, J. Priming in visual search: separating the effects of target repetition, distractor repetition and role-reversal. Vision Res. 48 , 1217–1232 (2008).

Sigurdardottir, H. M., Kristjansson, A. & Driver, J. Repetition streaks increase perceptual sensitivity in visual search of brief displays. Vis. Cogn. 16 , 643–658 (2008).

Kruijne, W. & Meeter, M. Long-term priming of visual search prevails against the passage of time and counteracting instructions. J. Exp. Psychol. Learn. 42 , 1293–1303 (2016).

Chun, M. & Jiang, Y. Contextual cuing: implicit learning and memory of visual context guides spatial attention. Cogn. Psychol. 36 , 28–71 (1998).

Chun, M. M. & Jiang, Y. Top-down attentional guidance based on implicit learning of visual covariation. Psychol. Sci. 10 , 360–365 (1999).

Kunar, M. A., Flusberg, S. J., Horowitz, T. S. & Wolfe, J. M. Does contextual cueing guide the deployment of attention? J. Exp. Psychol. Human 33 , 816–828 (2007).

Geyer, T., Zehetleitner, M. & Muller, H. J. Contextual cueing of pop-out visual search: when context guides the deployment of attention. J. Vis. 10 , 20 (2010).

Schankin, A. & Schubo, A. Contextual cueing effects despite spatially cued target locations. Psychophysiology 47 , 717–727 (2010).

PubMed Google Scholar

Schankin, A., Hagemann, D. & Schubo, A. Is contextual cueing more than the guidance of visual-spatial attention? Biol. Psychol. 87 , 58–65 (2011).

Peterson, M. S. & Kramer, A. F. Attentional guidance of the eyes by contextual information and abrupt onsets. Percept. Psychophys. 63 , 1239–1249 (2001).

Tseng, Y. C. & Li, C. S. Oculomotor correlates of context-guided learning in visual search. Percept. Psychophys. 66 , 1363–1378 (2004).

Wolfe, J. M., Klempen, N. & Dahlen, K. Post-attentive vision. J. Exp. Psychol. Human 26 , 693–716 (2000).

Brockmole, J. R. & Henderson, J. M. Using real-world scenes as contextual cues for search. Vis. Cogn. 13 , 99–108 (2006).

Hollingworth, A. & Henderson, J. M. Accurate visual memory for previously attended objects in natural scenes. J. Exp. Psychol. Human 28 , 113–136 (2002).

Flowers, J. H. & Lohr, D. J. How does familiarity affect visual search for letter strings? Percept. Psychophys. 37 , 557–567 (1985).

Krueger, L. E. The category effect in visual search depends on physical rather than conceptual differences. Percept. Psychophys. 35 , 558–564 (1984).

Frith, U. A curious effect with reversed letters explained by a theory of schema. Percept. Psychophys. 16 , 113–116 (1974).

Wang, Q., Cavanagh, P. & Green, M. Familiarity and pop-out in visual search. Percept. Psychophy. 56 , 495–500 (1994).

Qin, X. A., Koutstaal, W. & Engel, S. The hard-won benefits of familiarity on visual search — familiarity training on brand logos has little effect on search speed and efficiency. Atten. Percept. Psychophys. 76 , 914–930 (2014).

Fan, J. E. & Turk-Browne, N. B. Incidental biasing of attention from visual long-term memory. J. Exp. Psychol. Learn. 42 , 970–977 (2015).

Huang, L. Familiarity does not aid access to features. Psychon. B. Rev. 18 , 278–286 (2011).

Wolfe, J. M., Boettcher, S. E. P., Josephs, E. L., Cunningham, C. A. & Drew, T. You look familiar, but I don't care: lure rejection in hybrid visual and memory search is not based on familiarity. J. Exp. Psychol. Human 41 , 1576–1587 (2015).

Anderson, B. A., Laurent, P. A. & Yantis, S. Value-driven attentional capture. Proc. Natl Acad. Sci. USA 108 , 10367–10371 (2011).

MacLean, M. & Giesbrecht, B. Irrelevant reward and selection histories have different influences on task-relevant attentional selection. Atten. Percept. Psychophys. 77 , 1515–1528 (2015).

Anderson, B. A. & Yantis, S. Persistence of value-driven attentional capture. J. Exp. Psychol. Human 39 , 6–9 (2013).

Moran, R., Zehetleitner, M. H., Mueller, H. J. & Usher, M. Competitive guided search: meeting the challenge of benchmark RT distributions. J. Vis. 13 , 24 (2013).

Wolfe, J. M. in Integrated Models of Cognitive Systems (ed. Gray, W. ) 99–119 (Oxford Univ. Press, 2007).

Proulx, M. J. & Green, M. Does apparent size capture attention in visual search? Evidence from the Müller–Lyer illusion. J. Vis. 11 , 21 (2011).

Kunar, M. A. & Watson, D. G. When are abrupt onsets found efficiently in complex visual search? Evidence from multielement asynchronous dynamic search. J. Exp. Psychol. Human 40 , 232–252 (2014).

Shirama, A. Stare in the crowd: frontal face guides overt attention independently of its gaze direction. Perception 41 , 447–459 (2012).

von Grunau, M. & Anston, C. The detection of gaze direction: a stare-in-the-crowd effect. Perception 24 , 1297–1313 (1995).

Enns, J. T. & MacDonald, S. C. The role of clarity and blur in guiding visual attention in photographs. J. Exp. Psychol. Human 39 , 568–578 (2013).

Li, H., Bao, Y., Poppel, E. & Su, Y. H. A unique visual rhythm does not pop out. Cogn. Process. 15 , 93–97 (2014).

Download references

Author information

Authors and affiliations

Department of Surgery, Visual Attention Lab, Brigham and Women's Hospital, 64 Sidney Street, Suite 170, Cambridge, 02139-4170, Massachusetts, USA

Jeremy M. Wolfe

Division of Cancer Control and Population Sciences, Basic Biobehavioral and Psychological Sciences Branch, Behavioral Research Program, National Cancer Institute, 9609 Medical Center Drive, 3E-116, Rockville, 20850, Maryland, USA

Todd S. Horowitz

Corresponding author

Correspondence to Jeremy M. Wolfe.

Ethics declarations

Competing interests

J.M.W. occasionally serves as an expert witness or consultant (paid or unpaid) on the applications of visual search to topics from legal disputes (for example, how could that truck have hit that clearly visible motorcycle?) to consumer behaviour (for example, how could we redesign this shelf to attract more attention to our product?).

About this article

Cite this article.

Wolfe, J., Horowitz, T. Five factors that guide attention in visual search. Nat Hum Behav 1 , 0058 (2017). https://doi.org/10.1038/s41562-017-0058

Received: 11 October 2016

Accepted: 27 January 2017

Published: 08 March 2017

DOI: https://doi.org/10.1038/s41562-017-0058

BRIEF RESEARCH REPORT article

The visual search strategies underpinning effective observational analysis in the coaching of climbing movement.

- 1 Human Sciences Research Centre, College of Life and Natural Sciences, University of Derby, Derby, United Kingdom

- 2 Lattice Training Ltd., Chesterfield, United Kingdom

Despite the importance of effective observational analysis in coaching the technical aspects of climbing performance, limited research informs this aspect of climbing coach education. Thus, the purpose of the present research was to explore the feasibility and the utility of a novel methodology, combining eye tracking technology and cued retrospective think-aloud (RTA), to capture the cognitive–perceptual mechanisms that underpin the visual search behaviors of climbing coaches. An analysis of gaze data revealed that expert climbing coaches demonstrate fewer fixations of greater duration and fixate on distinctly different areas of the visual display than their novice counterparts. Cued RTA further demonstrated differences in the cognitive–perceptual mechanisms underpinning these visual search strategies, with expert coaches being more cognizant of their visual search strategy. To expand, the gaze behavior of expert climbing coaches was underpinned by hierarchical and complex knowledge structures relating to the principles of climbing movement. This enabled the expert coaches to actively focus on the most relevant aspects of a climber’s performance for analysis. The findings demonstrate the utility of combining eye tracking and cued RTA interviewing as a new, efficient methodology of capturing the cognitive–perceptual processes of climbing coaches to inform coaching education/strategies.

Introduction

Climbing’s acceptance as an Olympic event in Tokyo 2020 is recognition of the sport’s increasing popularity and professionalization ( Bautev and Robinson, 2019 ). As demand increases, so too will the need for effective coaching, thus requiring coach educators to consider how coaching expertise is developed ( Sport England, 2018 ). Climbing coaches employ a range of complex and inter-related strategies to facilitate physical, technical, mental, and tactical improvements ( Currell and Jeukendrup, 2008 ). However, to date, climbing research has predominantly focused on the physiological and the psychological aspects of performance, somewhat neglecting the importance of the technical components of climbing ( Taylor et al., 2020 ). Furthermore, the process by which climbing coaches facilitate technical improvements in their athletes is wholly under-researched.

The characteristics that define expertise in the coaching of climbing movement, and the process by which expertise is developed, have yet to be explored. Wider expertise research has sought to identify the key characteristics of expert performance; among others, one of the key hallmarks that define expert performance is superior visual search behavior ( Ericsson, 2017 ). Research in a variety of sporting contexts (i.e., athletes, officials, and coaches) has demonstrated that experts have a superior ability to pick up on salient postural cues and detect patterns of movement and can more accurately predict the probabilities of likely event occurrences ( Williams et al., 2018 , p. 663). The superior visual search behavior of expert coaches is thought to be due to more refined domain-specific knowledge and memory structures ( Williams and Ward, 2007 ). Declarative and procedural knowledge, acquired through extensive deliberate practice, enables expert coaches to extract the most salient information from the visual display to identify the key aspects of the athlete’s performance that can subsequently be targeted for improvement ( Hughes and Franks, 2004 ).

Yet without a systematic approach to observational analysis, coaches potentially threaten the validity of their analysis ( Knudson, 2013 ). To understand how coaches analyze and evaluate climbing performance, it is argued that a fundamental step in this process is characterizing the underlying cognitive–perceptual mechanisms that underpin expertise ( Spitz et al., 2016 ). To enable this, the study of expertise in sport has commonly adopted the “Expert Performance Approach” (EPA) ( Ericsson and Smith, 1991 ). In EPA, the superior performance of experts is captured, identifying the mediating mechanisms underlying their performance by recording process-tracing measures such as eye movements and/or verbalizations ( Ford et al., 2009 ). Such advances have begun to enable significant insight into the cognitive–perceptual mechanisms underlying expert performance ( Gegenfurtner et al., 2011 ). For example, lightweight mobile eye tracking devices provide a precise, non-intrusive, millisecond-to-millisecond measurement of where, for how long, and in what sequence coaches focus their visual attention when viewing athlete performance ( Duchowski, 2007 ).

Gegenfurtner et al. (2011) conducted a meta-analysis of 65 eye tracking studies to identify the common characteristics of expert performance. They concluded that the superior performance of experts, across a variety of different domains (sport, medicine, aviation, etc.), could be explained by a combination of three factors: First, experts develop specific long-term working memory skills because of accumulated deliberate practice. Second, expert coaches can optimize the amount of processed information by ignoring task-irrelevant information. This allows for a greater proportion of their attentional resources to be allocated to more task-relevant areas of the visual display ( Haider and Frensch, 1999 ). Finally, they suggest that expert–novice performance differences in visual search are explained by an enhanced ability among experts to utilize their peripheral vision.

To date, however, there have been no eye tracking studies conducted on the visual search strategies of climbing coaches. Yet in other sports, eye tracking technology has yielded insight into differences between expert and novice coaches, which can be used to inform coaching strategies. Here eye tracking research conducted with coaches in basketball ( Damas and Ferreira, 2013 ), tennis ( Moreno et al., 2006 ), gymnastics ( Moreno et al., 2002 ), and football ( Iwatsuki et al., 2013 ) has demonstrated that expert coaches focus on distinctly different locations. Experts fixate their attention on the most salient areas of the visual display as compared to novices ( Williams et al., 1999 ). Additionally, experts demonstrate fewer fixations of greater duration in relatively static tasks/sports ( Mann et al., 2007 ; Gegenfurtner et al., 2011 ).

Most eye tracking research has, nonetheless, been conducted in laboratory settings, leading some researchers to challenge the ecological validity of the approach ( Hüttermann et al., 2018 ). Adding to this, Mann et al. (2007; see also Gegenfurtner et al., 2011 ) argue that the more realistic the experimental design is to the realities of the sporting context, the more likely it is that experts will be able to demonstrate their enhanced cognitive–perceptual skills afforded by their increased context-specific knowledge ( Travassos et al., 2013 ). Thus, some researchers have cast doubt on whether the results of laboratory studies can be transferred beyond their immediate context into the complex realities of the coaching environment ( Renshaw et al., 2019 ). Moving forward, therefore, the use of mobile eye tracking technology potentially enables researchers to capture the expert performance of coaches in naturalistic coaching environments, thus enhancing ecological validity and ensuring transferability to coaching practice.

Although eye tracking enables researchers to investigate the processes of visual attention, the relevance of specific gaze location biases to the coaching process still requires elaboration. That is, eye tracking gaze data can tell us where someone is looking but, importantly, not why. Over-reliance on averaged and uncontextualized gaze data potentially oversimplifies and limits our understanding of the coaching process ( Dicks et al., 2017 ). Indeed, one of the main conceptual concerns with sports expertise research is the relative neglect of the cognitive processes underpinning expert performance ( Moran et al., 2018 ). As Abernethy (2013) identifies, there remains a lack of evidence on the defining characteristics of sports expertise and how such characteristics are developed. Hence, additional methodological approaches are needed to complement eye tracking if the mechanisms underpinning the superior cognitive–perceptual skills of expert coaches are to be captured.

Currently, two such methodologies are proposed: concurrent think-aloud (CTA) and retrospective think-aloud (RTA). In CTA, the participants verbalize their thought process during the actual task (e.g., Ericsson et al., 1993 ), whereas in RTA, the participants verbalize their thought process immediately after the task (e.g., Afonso and Mesquita, 2013 ). In critique, as we can mentally process visual stimuli much faster than we can verbalize our observations, it is argued that, when using CTA, verbalizations are often incomplete ( Wilson, 1994 ). Furthermore, attempting to verbalize complex cognitively demanding tasks while simultaneously performing them affects the user’s task performance and associated gaze behavior ( Holmqvist et al., 2011 ). The alternative, to record participants thinking aloud after the task, circumvents this disruption to the participants’ performance in the primary task. However, due to the time-lag between the primary task and RTA, a “loss of detail from memory or fabulation” may occur ( Holmqvist et al., 2011 , p. 104). The limitations of RTA are, however, potentially negated when it is combined with eye tracking technology.

Cued RTA utilizes eye tracking gaze data as an objective reference point to stimulate memory recall and structure RTA, reducing loss of detail from memory and fabulation ( Hyrskykari et al., 2008 ). Furthermore, cued RTA provides explicit detail as to the declarative and procedural knowledge that underpin the coach’s visual search strategies, adding depth and meaning to otherwise uncontextualized gaze data ( Gegenfurtner and Seppänen, 2012 ). Cued RTA can therefore be adopted for both empirical and theoretical reasons. First, cued RTA is confirmatory in that RTA data enable the researcher to verify the gaze data for accuracy (e.g., fixation location and allocation of attention), and gaze data provide an objective location to reduce memory loss and fabulation when conducting RTA. Second, cued RTA enables the researcher to elicit a greater level of insight into the cognitive–perceptual mechanisms that underpin the visual search strategies of coaches. It is therefore proposed that cued RTA is potentially more effective than either eye tracking or RTA methodologies applied in isolation.

Thus, in the present study, we explored the feasibility and the utility of a novel methodology, combining eye tracking technology with cued RTA, to capture the cognitive–perceptual mechanisms underpinning the visual search behaviors of climbing coaches. As this was a first trial of the combined methodology, three expert and three novice coaches were asked to observe and analyze the live climbing performances of intermediate boulderers in a naturalistic and ecologically valid setting.

Materials and Methods

Participants.

A total of six UK climbing coaches were recruited for the present study based on their level of expertise (see Moreno et al., 2002 ). The “expert” group (successful elite, as defined by Swann et al., 2015 ) consisted of three national team coaches with a minimum of 5 years of professional coaching experience (three males; 8.3 ± 1.5 years). The “novice” group ( Nash and Sproule, 2011 ) consisted of three club-level coaches, with a minimum of 1 year of coaching experience (one female, two males; 3.6 ± 2.1 years). All the participants had normal or corrected-to-normal vision and voluntarily agreed to participate following the local University of Derby ethical approval.

Climber/Bouldering Problems

The coaches were asked to observe the same intermediate (V4/F6B) climber (male; 21 years) climb four different boulder problems (2 × vertical, 1 × slab, 1 × roof) at a grade of V4/F6B ( Draper et al., 2016 ) at a national center climbing wall. Each boulder problem was repeated three times, requiring the coach to view a total of 12 attempts lasting approximately 16 s each (15.87 ± 0.81 s). The boulder problems were of a maximum height of 4 m and ranged from six to eight moves for each problem. The problems were selected in consultation with an independent national-level coach to ensure that they were judged to be of an appropriate level for the grade and representative of a normal coaching setting.

Visual Gaze Behavior

Mobile eye tracking glasses (SMI ETG 2.0; SensoMotoric Instruments, Teltow, Germany; binocular, 60 Hz) were used to record the coaches’ visual gaze behavior. The gaze data were collected via a lightweight smart recorder (Samsung Galaxy 4) using SMI IViewX software. This enabled the recording of visual gaze data in a real-world setting. Prior to capturing eye tracking data, a three-point calibration procedure was implemented by placing three targets in a triangular configuration at a distance of 5 m. The coaches were placed 5 m away from the base of each boulder problem, i.e., at the optimum viewing angle for each specific problem (as decided by an independent national-level coach), and instructed to remain stationary. However, they could move their heads to ensure that the climber remained in the eye-tracker’s recordable visual field. To validate the accuracy, a nine-point calibration grid was placed on each boulder problem, with the markers placed at the outermost areas of the visual field that the coach would be required to observe. This ensured that the gaze data were accurate across the entire visual field. The dependent variable data collected included fixation count, fixation duration, and fixation location.

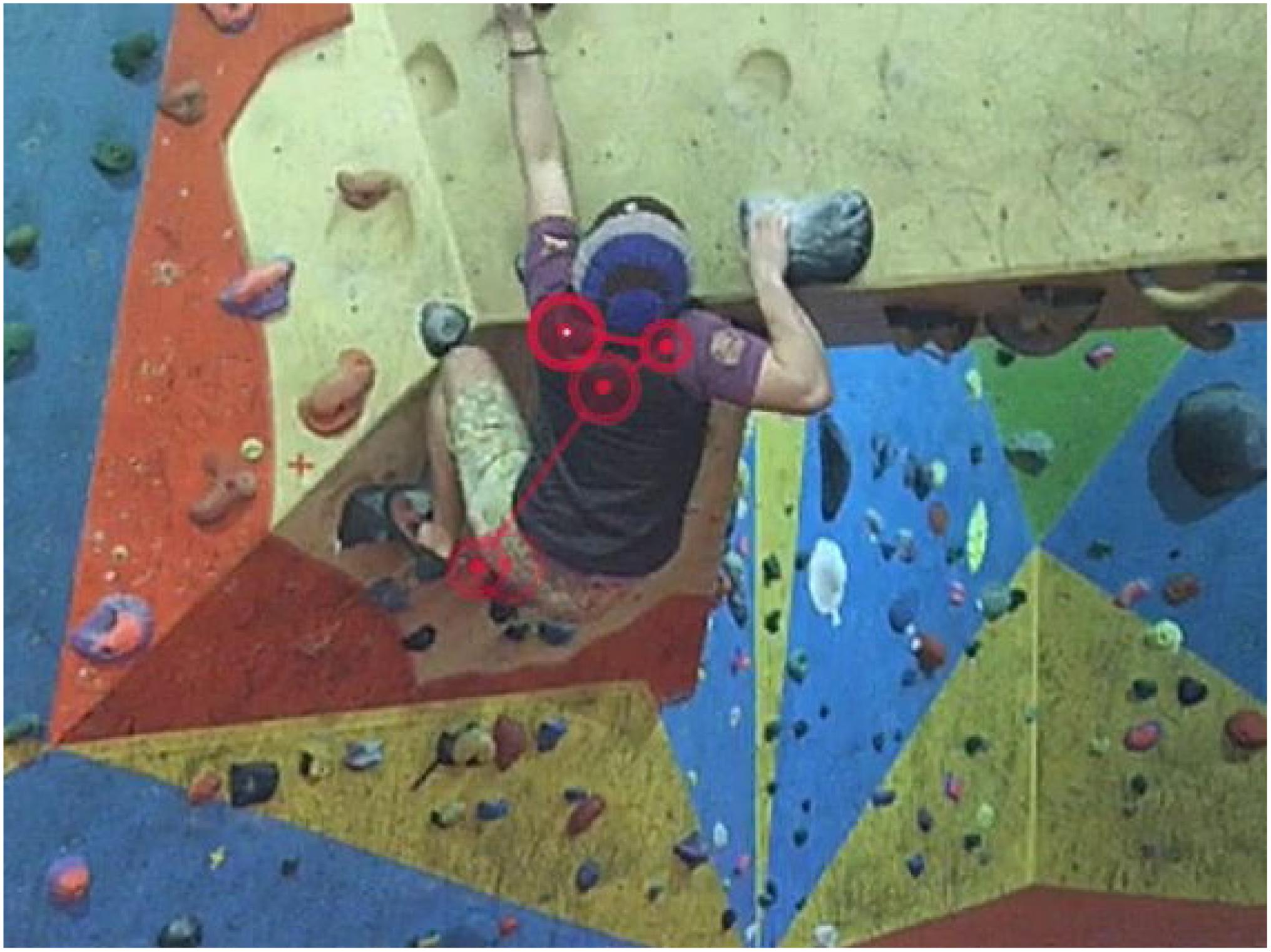

Retrospective Think-Aloud Data Capture

Retrospective think-aloud was conducted using gaze data to cue responses from the coaches, i.e., the coaches were asked to explain individual fixation locations during their analysis of the climber’s performance, verbalizing their relevance to their coaching process. The gaze data were presented to the coach as video replay with the coach’s own visual gaze scan-path super-imposed (see Figure 1 ). This scan-path showed the most recent 2 s of gaze data, appearing to the coaches as a connected string of fixations (circles) and saccades (connecting lines). Each attempt was replayed at 100% speed and then slowed down to 25%.
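The trailing 2-s scan path can be thought of as a sliding window over the fixation record. The following is a minimal Python sketch of that idea, not the BeGaze implementation: the `Fixation` record, its field names, and the rule that a fixation is shown if any part of it overlaps the window are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Fixation:
    start_s: float     # onset time within the replay, in seconds
    duration_s: float  # fixation duration, in seconds
    x: float           # gaze position in video pixels (hypothetical units)
    y: float

def scan_path_window(fixations, now_s, window_s=2.0):
    """Return the fixations overlapping the trailing window [now_s - window_s, now_s]."""
    window_start = now_s - window_s
    return [
        f for f in fixations
        if f.start_s <= now_s and f.start_s + f.duration_s >= window_start
    ]

# Illustrative data only; these values are not taken from the study.
fixations = [
    Fixation(0.0, 0.30, 100, 200),
    Fixation(0.5, 0.25, 140, 180),
    Fixation(2.4, 0.40, 300, 220),
    Fixation(3.1, 0.35, 310, 400),
]

# Fixations that would be drawn (as circles joined by lines) at t = 3.0 s.
visible = scan_path_window(fixations, now_s=3.0)
print([f.start_s for f in visible])
```

Slowing the replay to 25% speed changes only how fast `now_s` advances, not which fixations fall inside the window.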

Figure 1. Example of how the gaze data were presented to coaches to cue retrospective think-aloud: visual gaze data super-imposed as 2-s scan path [a connected string of fixations (circles) and saccades (connecting lines)] to cue verbal responses (i.e., why coaches focus on specific fixation locations).

Other Materials

A demographic questionnaire captured the coaches’ prior experience: i.e., highest level of coaching experience, accumulated coaching experience, and current coaching role/responsibilities.

Once the participants had completed the demographic questionnaire, they were fitted with mobile eye tracking glasses and undertook the calibration process. The coaches were then instructed to observe the climber to assess their quality of movement and identify movement errors. It was further explained that they would be required to verbalize their analysis of the climber’s performance later in the experiment. Each coach observed the same climber climb four different boulder problems at a grade of V4, viewing three attempts for each problem. Once each coach had observed all 12 attempts, gaze data were downloaded for further review using SMI BeGaze (V3.2, SensoMotoric Instruments, Teltow, Germany) analysis software. Using the BeGaze RTA function, the cued RTA interviews were conducted immediately after the collection of gaze data using video replay with the gaze data super-imposed to cue verbal responses. After viewing the gaze data in real time, the participants were asked to scroll through gaze data at 25% speed, explaining why they focused on specific fixation locations and their relevance to the analyses. The fixations discussed were self-selected by the participant in order to reduce researcher bias. The gaze data were replayed until each coach had exhausted all fixations they could recall.

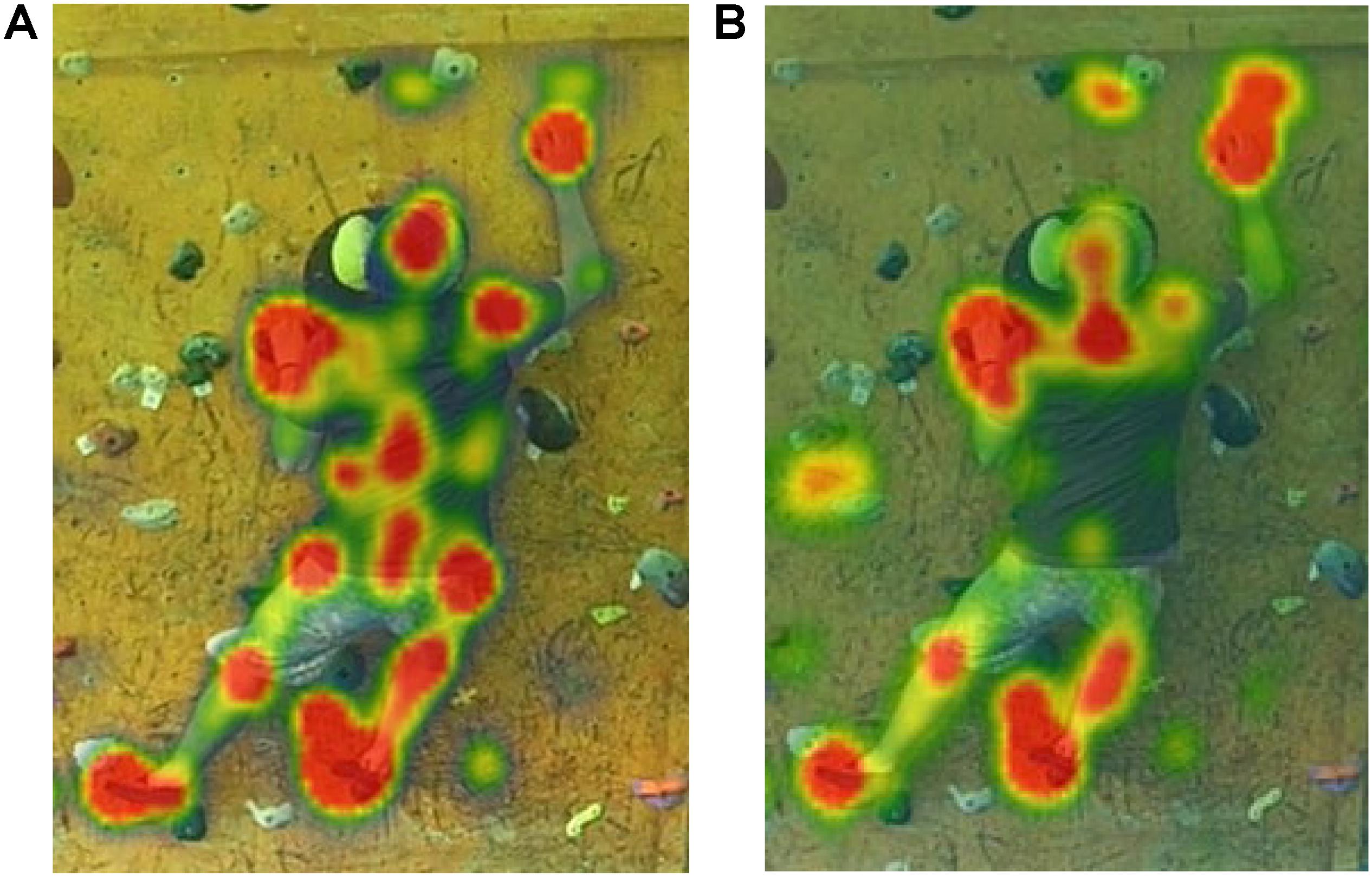

The eye tracking metrics analyzed were: (a) “fixation rate” (i.e., average number of fixations per second), (b) “average fixation duration” (i.e., average fixation duration of all fixations throughout the entire viewing period), and (c) “total fixation duration” (i.e., total duration of a viewer’s fixations landing on a given visual element throughout the entire viewing period) within pre-defined areas of interest. Visual fixations were defined as periods where the eye remained stable in the same location (within 1° of tolerance) for a minimum of 120 ms ( Catteeuw et al., 2009 ). The visual gaze data were analyzed using the “semantic gaze mapping” function of SMI BeGaze to manually code fixations against three predefined areas of interest. These were the hands, the feet, and the core regions. Only the gaze data collected while the climber was attempting the problem were included in analysis. As the length of the recordings differed between coaches due to small variations (±5%) in the athlete’s performance, the data were normalized by cropping the recordings so that each trial was of equal duration to the shortest trial. This enabled the eye tracking metrics (e.g., “total fixation duration”) to be analyzed for comparison between coaches/groups. To enable comparison in visual search strategy, the aggregated gaze data as a function of expert or novice group were used to produce heat maps ( Holmqvist et al., 2011 ). Additional analysis was pursued using Microsoft Excel (Version 15.37, Santa Rosa, CA, United States). Due to the small sample size, the magnitude of differences was determined using Cohen’s d ( Cohen, 1988 ).
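To make the three metrics and the cropping step concrete, the following Python sketch computes them from a list of already-coded fixations. It is an illustration under stated assumptions rather than the BeGaze or Excel workflow used in the study: the tuple layout `(start_s, duration_s, aoi)`, the function names, and the choice to truncate (rather than discard) a fixation that straddles the cut-off are all hypothetical.

```python
from collections import defaultdict

def crop_fixations(fixations, trial_s):
    """Truncate fixations to the window [0, trial_s] (assumed cropping rule)."""
    cropped = []
    for start_s, duration_s, aoi in fixations:
        if start_s >= trial_s:
            continue  # fixation begins after the cut-off, so it is dropped
        end_s = min(start_s + duration_s, trial_s)
        cropped.append((start_s, end_s - start_s, aoi))
    return cropped

def gaze_metrics(fixations, trial_s):
    """Compute fixation rate, average fixation duration, and total duration per AOI."""
    durations = [duration_s for _, duration_s, _ in fixations]
    total_by_aoi = defaultdict(float)
    for _, duration_s, aoi in fixations:
        total_by_aoi[aoi] += duration_s
    return {
        "fixation_rate_per_s": len(fixations) / trial_s,
        "avg_fixation_duration_s": sum(durations) / len(durations) if durations else 0.0,
        "total_fixation_duration_s": dict(total_by_aoi),
    }

# Illustrative trial (not study data): fixations coded against hands, feet, and core.
trial = [
    (0.0, 0.25, "hands"),
    (0.5, 0.5, "core"),
    (1.0, 0.5, "feet"),
    (1.75, 0.5, "core"),  # runs past the 2.0 s cut-off and is truncated
]
shortest_trial_s = 2.0  # duration of the shortest trial in this toy example

metrics = gaze_metrics(crop_fixations(trial, shortest_trial_s), shortest_trial_s)
print(metrics)
```

Cropping every trial to the same duration before computing the metrics means that total fixation durations are directly comparable between coaches, which is the purpose of the normalization described above.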

The cued RTA data were recorded concurrently, ensuring that the interview responses were not separated from the context of the coaches’ individual gaze data. The cued RTA data were transcribed verbatim, and inductive thematic analysis was conducted in accordance with the six-step process outlined by Braun and Clarke (2006) . Two members of the research team initially conducted thematic analyses independently before comparing and auditing the analysis process (i.e., first- and second-level codes and final themes). Issues of credibility and transferability were addressed by a process of member checking to ensure a good “fit” between the coaches’ views and the researchers’ final interpretation of themes, as well as ensuring that the themes transfer to the wider coaching context ( Tobin and Begley, 2004 ).

The eye tracking data quality was 98.6% (±0.9), i.e., 98.6% of the samples were captured. An analysis of the gaze data revealed distinct differences between expert and novice groups. The experts demonstrated slower fixation rates (experts 2.23 ± 0.20/s, novices 2.44 ± 0.37/s; d = 0.71) and greater average fixation durations (experts 315 ± 30 ms, novices 261 ± 59 ms; d = 1.07) than their novice counterparts. In other words, the experts demonstrated fewer fixations but of greater duration.

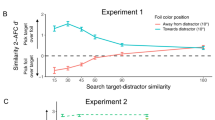

Furthermore, distinct differences were identified in the locations that the groups allocated attentional resources to. The experts allocated a greater proportion of their attention to the proximal (core) features of the climber’s body, demonstrating a greater number of fixations (experts 58.7 ± 24.5, novices 17.4 ± 1.4; d = 2.4) and longer total fixation durations to core body areas (experts 23.6 ± 14.5 s, novices 4.5 ± 1.2 s; d = 1.9). The experts additionally placed less attention on the climber’s hand placements than the novices did, with fewer total fixations (experts 41.0 ± 25.9, novices 69.5 ± 27.6; d = 1.1) and shorter total fixation durations (experts 16.6 ± 11.6 s, novices 25.8 ± 0.4 s; d = 1.1) toward hand placements. Finally, the experts spent more time fixating their attention on the climber’s foot placements than the novices did, with greater numbers of total fixations (experts 44.7 ± 14.6, novices 38.5 ± 14.9; d = 0.4) and longer total fixation durations (experts 20.2 ± 4.7 s, novices 11.1 ± 1.4 s; d = 2.6) toward foot placements. These differences between the expert and the novice coaches’ visual search strategy were evident from the aggregated heat maps ( Figure 2 ), which illustrate that the experts focused more attention on proximal features (e.g., hips, lumbar region, and center of back), whereas the novices almost solely focused on distal features (e.g., feet and hands).
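The effect sizes above can be approximated from the reported means and standard deviations. The text does not state which variant of Cohen’s d was computed, so the following Python sketch assumes equal group sizes (three coaches per group) and a pooled standard deviation taken as the root mean of the two group variances. The function name and dictionary labels are illustrative only.

```python
import math

def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d for two equal-sized groups, using the pooled standard deviation.

    Assumption: pooled SD = sqrt((sd_a^2 + sd_b^2) / 2), valid for equal n.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd

# (expert mean, expert SD, novice mean, novice SD), as reported in the text.
reported = {
    "core fixation count": (58.7, 24.5, 17.4, 1.4),
    "core total fixation duration (s)": (23.6, 14.5, 4.5, 1.2),
    "foot total fixation duration (s)": (20.2, 4.7, 11.1, 1.4),
}

for label, stats in reported.items():
    print(f"{label}: d = {cohens_d(*stats):.2f}")
```

Under these assumptions, the three measures round to the reported values of 2.4, 1.9, and 2.6. Because the inputs are themselves rounded summary statistics, small discrepancies from other published values are to be expected.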

Figure 2. Aggregated heat maps of expert (A) and novice (B) coaches’ gaze behavior over 12 boulder problems illustrate notable differences in the allocation of visual attention to different regions of the climber’s body.

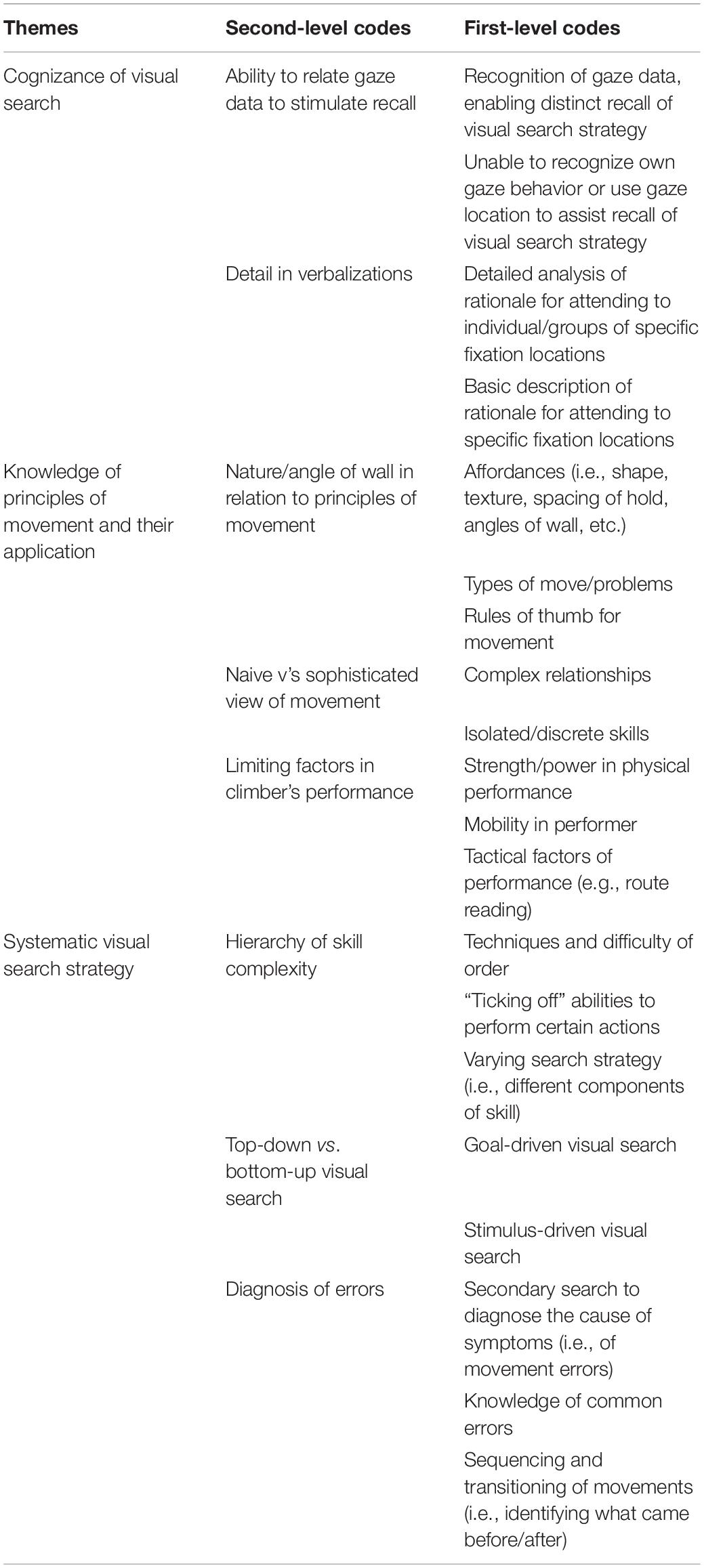

Retrospective Think-Aloud Data

The interview durations (min) differed noticeably between the expert and the novice coaches (experts 75.3 ± 12.3, novices 38.0 ± 11.5; d = 3.1), reflecting the level of detail that each group was able to provide while explaining their visual gaze data. The thematic analyses revealed three themes: “cognizance of visual search behavior,” “knowledge in the principles of movement and their application,” and “systematic visual search strategy.” Table 1 illustrates the first- and second-level codes that contribute to the three main themes.

Table 1. Organization of data codes from the thematic analysis.

In respect to the first theme, the expert coaches were far more cognizant of their visual search behavior, being able to verbalize their thought process and provide rationale that explains how the gaze data relate to their coaching process. For example, one expert coach stated:

“I can tell immediately these are my eye movements… You can see I am going through my standard functional movement screening process here. This point here, I am looking at whether hip mobility limiting the climber’s ability to rock-over.” (participant E3)

The novice group, by comparison, was often unable to make any link between their gaze data and their coaching process, simply passing no comment or stating: “I’m not sure why I was looking there” (participant N2). One coach was particularly candid by stating:

“To be honest, I don’t really know what I’m looking for when I’m coaching. I know to look for messy footwork, so that’s what I look for. Beyond that, I don’t know what to look for.” (participant N3)

Considering the second theme, the expert coaches demonstrated a far greater understanding of the principles of movement and their application. Here they demonstrated more complex frameworks and principles of movement that applied to the nature and the angle of the problem. For example, one expert coach succinctly described their process as follows:

“Climbing is a really complex 3D interrelationship between the climber and infinitely varied points of contacts, at differing and changing angles. I try to think of how those points of contact can be used in conjunction, so that the climber can move their center of mass into the optimal position for that particular situation. When the climber is not achieving that position, I try to diagnose secondary factors that may be prohibiting them.” (participant E2)

By comparison, the novice coaches often discussed specific aspects of technique in isolation. For example, participant N1 stated: “So I’m looking for bad footwork here, then I’m looking for if they are holding the hold in the right way.” Comments relating to isolated aspects of technique were common among the novice group with little to no reference to the complex interrelationships between the components of the movement system and their interaction with the environment.

Finally, in reference to the third theme, the expert coaches alluded to a hierarchy of skills that guided their priorities for analysis. Participant E2 observed that:

“If you can see, I am looking at completely different areas during each attempt…looking at different aspects of their performance. I start by looking at the most basic aspects of technique, building up a picture of their ability, working through to more complex skills. When I start to see errors creeping in, I look to see if it is a consistent pattern or just a one-off. If there is a consistent pattern, that is usually the aspect of their climbing I look to address first.”

By contrast, the novice coaches repeatedly described their process as searching for foot placement errors, then searching for hand placement errors, and continually repeating this cycle. Thus, while both groups alluded to the skills that they prioritized, the above quote highlights how expert verbalizations were more comprehensive and demonstrated a logical/systematic progression in skill complexity. By comparison, novice verbalizations demonstrated a limited and rudimentary grasp of the critical factors that underpin the climbing movement.

Despite the importance of observational analysis in the coaching of climbing movement, the cognitive–perceptual mechanisms underpinning the visual search behavior of climbing coaches have not previously been explored. This study set out to explore the feasibility and the utility of a previously underutilized methodology within sports expertise research, namely, whether mobile eye tracking data, captured in a naturalistic and ecologically valid coaching environment and combined with cued RTA interviews, can effectively capture the mechanisms that underpin the visual search behavior of expert and novice coaches. The results revealed that the gaze behavior of expert climbing coaches is characterized by fewer fixations of longer duration than those of novice coaches. Additionally, the expert coaches tended to focus a greater proportion of their attention on proximal regions, whereas the novice coaches typically focused on distal regions. Finally, the RTA analysis revealed that the experts were more cognizant of their visual search strategy, detailing how their visual gaze behavior is guided by a systematic hierarchical process underpinned by complex knowledge structures relating to the principles of climbing movement.

A major finding of the current research was that visual attentional strategies differed between expert and novice climbing coaches. We observed that the expert coaches demonstrated fewer fixations, but these were of greater duration, suggesting that the accumulated context-specific experience of the expert coaches enables them to develop a more efficient visual search behavior. The expert coaches selectively attend to only the most task-relevant areas of the visual display, requiring them to make fewer fixations (of longer duration) to efficiently extract relevant information from specific gaze locations ( Ericsson and Kintsch, 1995 ; Haider and Frensch, 1999 ). These findings accord with previous studies investigating the visual search strategies of coaches in similar self-paced individual sports (e.g., coaching a tennis serve; Moreno et al., 2006 ).

The current research further highlighted the relevance of specific fixation locations to more efficient visual search. The proportion of attentional resources that coaches allocated to specific locations varied distinctly between experts and novices. The experts spent roughly five times as long focusing on the proximal regions of the climber’s body (or core) as the novice coaches did (23.6 s vs. 4.5 s; refer again to Figure 2 ), supporting Lamb et al.’s (2010) notion that the observational strategies of coaches may be overly influenced by the motion of distal segments due to the greater range of motion and velocities than that of proximal segments. It is therefore proposed that the climber’s core represents one of the most salient areas upon which to analyze a climbing performance. Fluency of the center of mass, as defined by the geometric index of entropy, has been shown to be an important performance characteristic ( Cordier et al., 1994 ; Taylor et al., 2020 ). Identifying the most salient areas to analyze a climbing performance may provide a viable means to inform future coach training, helping novice coaches make their visual search behaviors more efficient ( Spitz et al., 2018 ). However, identifying gaze location alone is of limited practical value to developing coaches unless its relevance is made explicit ( Nash et al., 2011 ).

The addition of cued RTA to the eye tracking methodology revealed three themes that provide insight into the cognitions underpinning the visual attentional strategies of novice vs. expert coaches. First, the expert coaches were far more cognizant of their visual search behavior, providing a far more explicit rationale for how their gaze data related to their coaching process. The inability of novice coaches to recall and elaborate on their visual gaze data suggests a randomized and inefficient visual search strategy, that is, they were unclear as to why they fixated on specific locations or what information they hoped to acquire by doing so. Second, the experts were able to provide rich descriptions of the critical factors that underpin successful movement and relate such principles to their gaze data. Here they demonstrated more complex frameworks and principles of movement applied to the nature and the angle of the problem. Comparatively, the novice coaches provided very little detail on how principles of movement guide their visual search, suggesting that a lack of knowledge regarding the critical factors that underpin climbing movement may be a key factor that limits the effectiveness of their observational analysis. Finally, the experts were more proactive and systematic in their analysis, with their visual search strategy underpinned by a hierarchy of skills ( Gegenfurtner et al., 2011 ). The lack of a systematic approach to observational analysis observed among novice coaches likely limits the validity and the effectiveness of their analysis ( Knudson, 2013 ).

Based on the insights above, it is proposed that the use of cued RTA interviews potentially offers a deeper insight into the cognitive–perceptual process of coaches than the use of eye tracking or think-aloud methodologies employed in isolation. By capturing the declarative and the procedural knowledge that expert coaches utilize to guide their visual search strategy, valuable insight is acquired as to the systematic processes that expert coaches employ to analyze a climbing performance, that is, where the most salient areas of the visual display are and why they are important to the analysis of a climbing performance. Coach educators may be able to utilize such insights to provide developing coaches with a more explicit rationale to guide their visual search, enhancing the efficiency and the quality of their observational analysis.

In sum, the present results demonstrate the utility of combining eye tracking technology and cued RTA as a methodology for capturing the cognitive–perceptual processes of climbing coaches. In combining these methods, a range of different cognitions and perceptual behaviors were observed as a consequence of coaching expertise. Combining these technologies potentially offers a valid and reliable method to capture the processes underpinning the observational analysis of a climbing movement. Indeed, the same methodological approach could be applied in a variety of coaching contexts. That said, a number of limitations and recommendations for future research should be highlighted. Despite the ecological validity of the present research, the results must be interpreted tentatively given the small sample size. Furthermore, viewing the live performance of a single athlete presents challenges to study repeatability. Researchers will need to weigh the benefits of ecological validity against replicability. Future research would also benefit from exploring whether the visual search strategies of coaches remain consistent across a greater number of athletes of varying ability, anthropometrics, and style. This would support the development of a comprehensive framework for the observational analysis of a climbing movement.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by the Human Sciences Research Ethics Committee, University of Derby. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this manuscript.

Author Contributions

JM, DG, and NT contributed to the design of the study and to data collection. JM and NT performed data analysis and wrote the first draft of the manuscript. JM, FM, DS, DG, and NT revised the manuscript to produce the final draft, which was subsequently reviewed by all the authors.

Funding

Funding for open access fees is being provided internally through research budgets associated with the Human Sciences Research Centre, College of Life and Natural Sciences, University of Derby.

Conflict of Interest

DG was employed by the company Lattice Training Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Abernethy, B. (2013). “Research: informed practice,” in Developing Sport Expertise: Researchers And Coaches Put Theory Into Practice, eds D. Farrow, J. Baker, and C. MacMahon (Abingdon: Routledge), 249–255.

Afonso, J., and Mesquita, I. (2013). Skill-based differences in visual search behaviours and verbal reports in a representative film-based task in volleyball. Intern. J. Perform. Analy. Sport 13, 669–677. doi: 10.1080/24748668.2013.11868679

Batuev, M., and Robinson, L. (2019). Organizational evolution and the Olympic Games: the case of sport climbing. Sport Soc. 22, 1674–1690. doi: 10.1080/17430437.2018.144099

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101.

Catteeuw, P., Helsen, W., Gilis, B., Van Roie, E., and Wagemans, J. (2009). Visual scan patterns and decision-making skills of expert assistant referees in offside situations. J. Sport Exerc. Psychol. 31, 786–797. doi: 10.1123/jsep.31.6.786

Cohen, J. (1988). Statistical Power Analysis For The Behavioral Sciences, 2nd Edn. Hillsdale, NJ: Lawrence Erlbaum.

Cordier, P., France, M. M., Pailhous, J., and Bolon, P. (1994). Entropy as a global variable of the learning process. Hum. Mov. Sci. 13, 745–763. doi: 10.1016/0167-9457(94)90016-7

Currell, K., and Jeukendrup, A. E. (2008). Validity, reliability and sensitivity of measures of sporting performance. Sports Med. 38, 297–316. doi: 10.2165/00007256-200838040-00003

Damas, R. S., and Ferreira, A. (2013). Patterns of visual search in basketball coaches. An analysis on the level of performance. Rev. Psicol. Deport. 22, 199–204.

Dicks, M., Button, C., Davids, K., Chow, J. Y., and Van der Kamp, J. (2017). Keeping an eye on noisy movements: on different approaches to perceptual-motor skill research and training. Sports Med. 47, 575–581. doi: 10.1007/s40279-016-0600-3

Draper, N., Giles, D., Schöffl, V., Fuss, F., Watts, P., and Wolf, P. (2016). Comparative grading scales, statistical analyses, climber descriptors and ability grouping: international rock climbing research association position statement. Sports Technol. 8, 88–94. doi: 10.1080/19346182.2015.1107081

Duchowski, A. (2007). Eye Tracking Methodology: Theory and Practice. London: Springer.

Ericsson, K. A. (2017). Expertise and individual differences: the search for the structure and acquisition of experts’ superior performance. WIREs Cogn. Sci. 8:e1382. doi: 10.1002/wcs.1382

Ericsson, K. A., and Kintsch, W. (1995). Long-term working memory. Psychol. Rev. 102, 211–245. doi: 10.1037/0033-295X.102.2.211

Ericsson, K. A., Krampe, R. T., and Tesch-Römer, C. (1993). The role of deliberate practice in the acquisition of expert performance. Psychol. Rev. 100, 363–406. doi: 10.1037/0033-295x.100.3.363

Ericsson, K. A., and Smith, J. (1991). “Prospects and limits of the empirical study of expertise: an introduction,” in Towards a General Theory Of Expertise: Prospects And Limits, eds K. A. Ericsson and J. Smith (Cambridge: Cambridge University Press), 1–29.

Ford, P., Coughlan, E., and Williams, M. (2009). The expert-performance approach as a framework for understanding and enhancing coaching performance, expertise and learning. Intern. J. Sports Sci. Coach. 4, 451–463. doi: 10.1260/174795409789623919

Gegenfurtner, A., Lehtinen, E., and Saljo, R. (2011). Expertise differences in the comprehension of visualizations: a meta-analysis of eye-tracking research in professional domains. Educ. Psychol. Rev. 23, 523–552.

Gegenfurtner, A., and Seppänen, M. (2012). Transfer of expertise: an eye tracking and think aloud study using dynamic medical visualizations. Comput. Educ. 63, 393–403.

Haider, H., and Frensch, P. A. (1999). Eye movement during skill acquisition: more evidence for the information-reduction hypothesis. J. Exp. Psychol. Learn. Mem. Cogn. 25, 172–190. doi: 10.1037/0278-7393.25.1.172

Holmqvist, K., Nystrom, M., Andersson, R., Dewhurst, R., Jarodzka, H., and Van der Weijer, J. (2011). Eye-Tracking: A Comprehensive Guide To Methods And Measures. New York, NY: Oxford University Press.

Hughes, N., and Franks, I. (2004). “The nature of feedback,” in Notational Analysis of Sport: Systems for Better Coaching and Performance in Sport, eds I. M. Franks and M. Hughes (London: Routledge), 17–39.

Hüttermann, S., Noël, B., and Memmert, D. (2018). Eye tracking in high-performance sports: evaluation of its application in expert athletes. Intern. J. Comput. Sci. Sport 17, 182–203. doi: 10.2478/IJCSS-2018-0011

Hyrskykari, A., Ovaska, S., Majaranta, P., Räihä, K.-J., and Lehtinen, M. (2008). Gaze path stimulation in retrospective think-aloud. J. Eye Mov. Res. 2, 1–18.

Iwatsuki, A., Hirayama, T., and Mase, K. (2013). Analysis of soccer coach’s eye gaze behavior. Proc. IAPR Asian Conf. Pattern Recogn. 2013, 793–797. doi: 10.1109/ACPR.2013.185

Knudson, D. V. (2013). Qualitative Analysis Of Human Movement. Leeds: Human Kinetics.

Lamb, P., Bartlett, R., and Robins, A. (2010). Self-organising maps: an objective method for clustering complex human movement. Intern. J. Comput. Sci. Sport 9, 20–29.

Mann, D. T. Y., Williams, A. M., Ward, P., and Janelle, C. M. (2007). Perceptual-cognitive expertise in sport: a meta-analysis. J. Sport Exerc. Psychol. 29, 457–478. doi: 10.1123/jsep.29.4.457

Moran, A., Campbell, M., and Ranieri, D. (2018). Implications of eye tracking technology for applied sport psychology. J. Sport Psychol. Action 9, 249–259. doi: 10.1080/21520704.2018.1511660

Moreno, F. J., Reina, R., Luis, V., and Sabido, R. (2002). Visual search strategies in experienced and inexperienced gymnastic coaches. Percept. Mot. Skills 95, 901–902. doi: 10.2466/pms.2002.95.3.901

Moreno, F. J., Romero, F., Reina, R., and del Campo, V. L. (2006). Visual behaviour of tennis coaches in a court and video-based conditions (Análisis del comportamiento visual de entrenadores de tenis en situaciones de pista y videoproyección.) RICYDE. Rev. Int. Cienc. Deporte. 2, 29–41. doi: 10.5232/ricyde2006.00503

Nash, C., Sproule, J., and Horton, P. (2011). Excellence in coaching: the art and skill of elite practitioners. Res. Q. Exerc. Sport 82, 229–238. doi: 10.5641/027013611X13119541883744

Nash, C., and Sproule, J. (2011). Insights into experiences: reflections of an expert and novice coach. Intern. J. Sports Sci. Coach. 6, 149–161. doi: 10.1260/1747-9541.6.1.149

Renshaw, I., Davids, K., Araújo, D., Lucas, A., Roberts, W. M., Newcombe, D. J., et al. (2019). Evaluating weaknesses of “perceptual-cognitive training” and “brain training” methods in sport: an ecological dynamics critique. Front. Psychol. 9:2468. doi: 10.3389/fpsyg.2018.02468

Spitz, J., Put, K., Wagemans, J., Williams, A. M., and Helsen, W. F. (2016). Visual search behaviors of association football referees during assessment of foul play situations. Cogn. Res. Principl. Implicat. 1:12. doi: 10.1186/s41235-016-0013-8

Spitz, J., Put, K., Wagemans, J., Williams, A. M., and Helsen, W. F. (2018). The role of domain-generic and domain-specific perceptual-cognitive skills in association football referees. Psychol. Sport Exerc. 34:10. doi: 10.1016/j.psychsport.2017.09.010

Sport England (2018). Active Lives Adult Survey May 17/18 Report. Available at: https://www.sportengland.org/media/13768/active-lives-adult-may-17-18-report.pdf (accessed March 3, 2020).

Swann, C., Moran, A., and Piggott, D. (2015). Defining elite athletes: issues in the study of expert performance in sport psychology. Psychol. Sport Exerc. 16, 3–14. doi: 10.1016/j.psychsport.2014.07.004

Taylor, N., Giles, D., Panáčková, M., Panáčková, P., Mitchell, J., Chidley, J., et al. (2020). A novel tool for the assessment of sport climber’s movement performance. Intern. J. Sports Physiol. Perform. doi: 10.1123/ijspp.2019-0311 [Epub ahead of print].

Tobin, G. A., and Begley, C. M. (2004). Methodological rigour within a qualitative framework. J. Adv. Nurs. 48, 388–396. doi: 10.1111/j.1365-2648.2004.03207

Travassos, B., Araújo, D., Davids, K., O’Hara, K., Leitão, J., and Cortinhas, A. (2013). Expertise effects on decision-making in sport are constrained by requisite response behaviours: a meta-analysis. Psychol. Sport Exerc. 14, 211–219. doi: 10.1016/j.psychsport.2012.11.002

Williams, A. M., Davids, K., and Williams, J. G. (1999). Visual Perception And Action In Sport. London: E & FN Spon.

Williams, A. M., Ford, P., Hodges, J., and Ward, P. (2018). “Expertise in sport: specificity, plasticity and adaptability,” in Handbook of Expertise And Expert Performance, 2nd Edn, eds K. A. Ericsson, N. Charness, R. Hoffman, and A. M. Williams (Cambridge: Cambridge University Press), 653–674.

Williams, A. M., and Ward, P. (2007). Perceptual-cognitive expertise in sport: exploring new horizons. Handb. Sport Psychol. 29, 203–223.

Wilson, T. D. (1994). Commentary to feature review: the proper protocol: validity and completeness of verbal reports. Psychol. Sci. 8, 249–253.

Keywords: eye tracking, think-aloud, sport, education, expertise, gaze behavior, coaching

Citation: Mitchell J, Maratos FA, Giles D, Taylor N, Butterworth A and Sheffield D (2020) The Visual Search Strategies Underpinning Effective Observational Analysis in the Coaching of Climbing Movement. Front. Psychol. 11:1025. doi: 10.3389/fpsyg.2020.01025

Received: 02 December 2019; Accepted: 24 April 2020; Published: 28 May 2020.

Copyright © 2020 Mitchell, Maratos, Giles, Taylor, Butterworth and Sheffield. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: James Mitchell