Human-System Integration in the System Development Process: A New Look (2007)

Chapter: 5 Case Studies

This chapter provides three examples of specific system development that illustrate application of human-system integration (HSI) methods in the context of the incremental commitment model (ICM). The examples are drawn from the committee’s collective experience and specific application of the concepts developed during our work to these particular projects. They represent projects at three stages of development: the early stages of planning, in mid-development, and fully realized.

The first example involves the development of unmanned aerial systems and identifies numerous HSI issues in these systems that will require solution. This example provides a “notional” application of human factors methods and potential implementation of the incremental commitment model. The case study illustrates the theme of designing to accommodate changing conditions and requirements in the workplace. Specifically, it addresses the issue of adapting current unmanned aerial systems to accommodate fewer operators, with individual operators controlling multiple vehicles. The hypothetical solutions to this problem reveal the potential costs of reliance on automation, particularly prior to a full understanding of the domain, task, and operator strengths and limitations. This case study also reveals the tight interconnection between the various facets of human-system integration, such as manpower, personnel, training, and design. In other words, answering the “how many operators to vehicles” question necessarily impacts design, training, and personnel decisions.

The second example focuses on a large-scale government implementation of port security systems for protection against nuclear smuggling. The example discusses the HSI themes and incremental application of methods during the iterative development of the system. This case is useful for illustrating application of human factors methods on a risk-driven basis, as they tend to be applied as needed over time in response to the iterative aspects of defining requirements and opportunities, developing design solutions, and evaluating operational experience.

The third example describes development of an intravenous infusion pump by a medical device manufacturer. This example is the most detailed and “linear” of the three cases, in that it follows a sequential developmental process; the various systems engineering phases are discussed in terms of the human factors methods applied during each phase. This case study illustrates the successful implementation of well-known HSI methods, including contextual inquiry, prototyping and simulations, cognitive walkthroughs for estimating use-error-induced operational risks, iterative design, and usability evaluations that include testing and expert reviews. The importance of the incremental commitment model in phased decision making and the value of shared representations is also highlighted.

Each of these examples is presented in a somewhat different format, as appropriate to the type of development. This presentation emphasizes one broad finding from our study, which is that a “one size” system development model does not fit all. The examples illustrate tailored application of HSI methods, the various trade-offs that are made to incorporate them in the larger context of engineering development, and the overall theme of reducing the risk that operational systems will fail to meet user needs.

UNMANNED AERIAL SYSTEMS

Unmanned aerial systems (UASs) or remotely piloted vehicles (RPVs) are airplanes or helicopters operated remotely by humans on the ground or in some cases from a moving air, ground, or water vehicle. Until recently the term “unmanned aerial vehicle” (UAV) was used in the military services in reference to such vehicles as Predators, Global Hawks, Pioneers, Hunters, and Shadows. The term “unmanned aerial system” acknowledges the fact that the focus is on much more than a vehicle. The vehicle is only part of a large interconnected system that connects other humans and machines on the ground and in the air to carry out tasks ranging from UAS maintenance and operation to data interpretation and sensor operation. The recognition of the system in its full complexity is consistent with the evolution from human-machine design to human-system design, the topic of this report. It highlights an important theme of this book: the need for methods that are scalable to complex systems of systems.

Unmanned aerial systems are intended to keep humans out of harm’s way. However, humans are still on the ground performing maintenance, control, monitoring, and data collection functions, among others. Reports from the Army indicate that 22 people are required on the ground to operate, maintain, and oversee a Shadow UAS (Bruce Hunn, personal communication). In addition, there is a dearth of UAS operators relative to the current need in Iraq and Afghanistan, not to mention the U.S. borders. The growing need for UAS personnel, combined with the current shortage, points to another theme of this report: the need for human-system integration to accommodate changing conditions and requirements in the workplace.

In addition, this issue has strong ties to questions of manning. The manning questions are “How many operators does it take to operate each unmanned aerial system? Can one modify the 2:1 human to machine ratio (e.g., two humans operating one UAS) to allow for a single operator and multiple aircraft (e.g., 1:4)?” Automation is often proposed as a solution to this problem, but the problem can be much more complex. Automation is not always a solution and may, in fact, present a new set of challenges, such as loss of operator situation awareness or mode confusion. Furthermore, the manning question is a good example of how HSI design touches other aspects of human-system integration, such as manpower, personnel, and training. That is, the question of how many vehicles per operator is not merely one of automation, but also involves the number and nature of the operators in question.

A Hypothetical Case

This example is based on an ongoing debate about the manning question, which has not been fully resolved. Therefore some aspects of the case are hypothetical, yet not improbable. In this example we assume that the objective of the design is to change the operator to UAS ratio from 2:1 to 1:4. That is, instead of two operators for one UAS there will be one operator for four UASs. This operator to UAS ratio is a requirement of the type that may be promulgated by the Department of Defense with minimal HSI input. It could be too late for human-system integration, which needs to be fully integrated into the engineering life cycle before system requirements have been determined. It could be too late in the sense that up-front analysis might have revealed that an effective 1:4 ratio is beyond the capabilities of current humans and technology under the best of circumstances. If this is the case, then there is a huge risk of designing a system that is doomed to fail. Even worse, this failure may not reveal itself until the right operational events line up to produce workload that breaks the system.

In our example, we present another scenario. The design of a UAS with a 1:4 ratio of operator to system is carried through the ICM development process to illustrate the potential role of human-system integration and one of the themes of this book. The Department of Defense is one of many critical stakeholders in this scenario, all of whom are to be considered in the satisficing process that ensues.

Human-System Integration in the Context of the Incremental Commitment Model

In the earliest exploration phases of ICM development, the problem space and concept of operations are defined, and concept discovery and synthesis take place. Table 5-1 provides highlights of the entire example. It is often the case that human-system integration is not brought into the development cycle at this point, although at great risk. Up-front analyses, such as interviews of UAS operators, observations of operations of 2:1 systems, examination of mishap reports, understanding of the literature and data, an analysis of the 2:1 workload, event data analysis targeted at communications in the 2:1 UAS system, application of models of operator workload, and work flow analysis are all methods that could be used to explore the HSI issues in the current UAS system.

There is much that could come from this kind of up-front analysis. One hypothetical possibility is that the up-front HSI analyses could determine that UAS workload is not constant but peaks in target areas where photos need to be taken or in situations in which the route plan needs to change.

One of the key principles of ICM development is risk management, including risk-driven activity levels and anchor point commitment milestones. What are the risks if human-system integration is not considered early in the development life cycle? In this case, the formal requirements that are established may target workload reduction incorrectly. For example, autopilot automation might be developed to help to get multiple UASs from point A to point B and so on. This might have the effect of reducing workload when a reduction was not needed, while providing no relief from the high-workload tasks. Ultimately the neglect of up-front human-system integration could result in a system that is ineffective or prone to error. Consideration of risks like these should guide system development.

What if there is not enough time to interview UAS operators and to do a thorough job in the exploration phase? There is also risk associated with application of costly up-front techniques. The up-front methods used often during the exploration phase of the life cycle can be tailored to meet time and budget constraints—another theme of this book. For example, in this case in which the manning question is the issue and automation appears to be a promising solution, it would make sense to focus on aspects of the task that may be automated and the workload associated with each. One caveat is that decisions on how to scope and tailor the methods require some HSI expertise in order to target the aspects of human-system integration that promise the most risk reduction.

As system development progresses, other principles of ICM development come into play, including incremental growth of system development and stakeholder commitment. In this part of the development life cycle, synthesis leads to construction, invention, or design that is iteratively refined as it is evaluated. HSI activities that would be useful at this point include function allocation and the development of shared representations, such as storyboards and prototypes.

Based on the previous finding of fluctuating workload, it may be decided that human intervention is needed at target areas and during route changes, but that the single operator can handle only one of these peak-workload tasks at a time. It may also be determined that, although automation could handle the routine flight task, an even more important place for automation is in the hand-off between the flight tasks and the human planning/replanning operation. The automation would therefore serve a scheduling and hand-off function, allocating complex tasks to the human operator as they arise and in order of priority (e.g., priority targets first). There could also be automation that serves as a decision aid for the targeting task.
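The scheduling and hand-off function described above can be pictured as a priority queue that presents one nonroutine task at a time to the single operator. The following is only a minimal sketch under stated assumptions: the task names, the priority ordering, and the class and method names are hypothetical illustrations, not part of any fielded UAS system or of the committee's analysis.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Hypothetical priority levels (assumption): lower number is handled first.
PRIORITY = {"priority_target": 0, "route_replan": 1, "routine_target": 2}


@dataclass(order=True)
class HandOff:
    priority: int
    seq: int  # arrival order, used only to break ties within a priority
    uas_id: str = field(compare=False)
    task: str = field(compare=False)


class HandOffScheduler:
    """Queues nonroutine tasks from several UASs for a single operator."""

    def __init__(self):
        self._queue = []
        self._seq = count()

    def request(self, uas_id, task):
        """Automation calls this when a UAS needs human intervention."""
        item = HandOff(PRIORITY[task], next(self._seq), uas_id, task)
        heapq.heappush(self._queue, item)

    def next_for_operator(self):
        """Return the single most urgent hand-off, or None if none is pending."""
        return heapq.heappop(self._queue) if self._queue else None

    def pending(self):
        """Number of hand-offs still waiting for the operator."""
        return len(self._queue)
```

In this sketch the operator never sees more than one peak-workload task at once; the length of the pending queue is one simple indicator of whether the 1:4 ratio is being overloaded during a mission.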

Because only one nonroutine task can be handled at a time under the 1:4 scenario, it may also be decided that operators should be relieved of the flight functions completely but be on call for hand-offs from automation. For example, four controllers could handle the prioritized hand-offs from the automation, much as air traffic controllers handle multiple planes in a sector. Note that this new design and staffing plan are completely different in terms of operator roles and tasks from the former 2:1 operation. It is human-system integration that guided the allocation of tasks to human and machine; without it there would have been many other possibilities for automation that may not have produced the same end-state.

As the ICM development continues, the system engineers will go from working prototypes to product development, beta testing, product deployment, product maintenance, and product retirement. But there is continual iteration along the way. The incremental growth in the automation for scheduling, hand-offs, and targeting would occur in parallel with the next iteration’s requirements and subsystem definitions (i.e., concurrent engineering). Incremental growth will be influenced by stakeholder commitment. The HSI methods in the later stages include interviews and observations in conjunction with the newly designed system and usability testing. Some of the same methods used in up-front analysis (e.g., event data analysis, participatory analysis) can be again used and results contrasted with those of the earlier data collection.

The goal of human-system integration at this stage is to verify that the situation for the user has improved and that no new issues have cropped up in the interim. For instance, it may be determined from testing that the targeting decision aid is not trusted by the human operator (a stakeholder) and as a result is not used (a risk). Through iterations, a new design will be tested or the decision aid will be completely eliminated (i.e., stakeholder satisficing).

TABLE 5-1 Example of Human-System Integration for UASs in the Context of the Risk-Driven Spiral

Conclusion and Lessons Learned

In this example, human-system integration plays a major role throughout the design process and is critical in the early stages before requirements are established. It can be integrated throughout the design life cycle with other engineering methods. It is also clear that the HSI activities serve to reduce human factors risks along the way and make evident the human factors issues that are at stake, so that these issues can be considered as they trade off with other design issues.

This example illustrates several lessons regarding human-system integration and system design:

The importance and complexity of the “system” in human-system integration compared with “machine” or “vehicle.”

Design concerns are often linked to manpower, personnel, and training concerns.

Up-front analysis and HSI input in early exploration activities is critical.

Methods can be tailored to time and money constraints, but HSI expertise is required to do so.

Risks are incurred if human-system integration is not considered or if it is considered late. In this case the risk would be a system that is not usable and that ultimately leads to catastrophic failure.

PORT SECURITY

The U.S. Department of Homeland Security (DHS) is in the process of implementing a large-scale radiation screening program to protect the country from nuclear weapons or dirty bombs that might be smuggled across the border through various ports of entry. This program encompasses all land, air, and maritime ports of entry. Our example focuses on radiation screening at seaports, which have a particularly complex operational nature. Seaports are structured to facilitate the rapid offloading of cargo containers from ocean-going vessels, provide temporary storage of the containers, and provide facilities for trucks and trains to load containers for transport to their final destination. The operation involves numerous personnel, including customs and border protection (CBP) officers for customs and security inspection, terminal personnel, such as longshoremen for equipment operation, and transport personnel, such as truck drivers and railroad operators. Figure 5-1 illustrates the steps involved in the radiation screening process.

FIGURE 5-1 RPM security screening at seaports involves multiple tasks, displays, and people.

Design and deployment of radiation portal monitoring (RPM) systems for seaport operations engages the incremental commitment model to ensure commitments from the stakeholders and to meet the fundamental technical requirement of screening 100 percent of arriving international cargo containers for illicit radioactive material.

This example illustrates aspects of the ICM process with specific instances of human-system integration linked to concurrent technical activities in the RPM program. The development of RPM systems for application in the seaport environment entails an iterative process that reflects the overall set of themes developed in this book. We discuss how these themes are reflected in the engineering process.

Human-System Integration in the Context of Risk-Driven Incremental Commitments

The human factors design issues encountered in this program are very diverse, ranging from fundamental questions of alarm system effectiveness at a basic research level, to very practical and time-sensitive issues, such as the most appropriate methods of signage or traffic signaling for controlling the flow of trucks through an RPM system. HSI methods have been applied on a needs-driven basis, with risk as a driver for the nature of the application. With the issue of alarm system effectiveness, for example, it was recognized early in the program that reducing system nuisance alarms is an important issue, but one that requires a considerable amount of physics research and human factors display system modeling and design. The ICM process allowed early implementation of systems with a higher nuisance alarm rate than desirable while pursuing longer term solutions to problems involving filtering, new sensors, and threat-based displays. The nuisance alarm risk was accepted for the early implementations, while concurrent engineering was performed to reduce the alarm rate and improve the threat displays for implementation in later versions.

A contrasting example involves traffic signage and signaling. Since the flow of cargo trucks through port exits is a critical element of maintaining commercial flow, yet proper speed is necessary for RPM measurement, methods for proper staging of individual vehicles needed to be developed. Most ports involve some type of vehicle checkout procedure, but this could not be relied on to produce consistent vehicle speed through the RPM systems. Instead, the program engaged the HSI specialty to assist in developing appropriate signage and signaling that would ensure truck driver attention to RPM speed requirements.

HSI Methods Tailored to Time and Budget Constraints

Since the RPM program focus is homeland security, there has been schedule urgency from the beginning. The need for rapid deployment of RPM systems to maximize threat detection and minimize commercial impact has been the key program driver, and this has also influenced how the HSI discipline has been applied. The primary effect of program urgency and budgetary limitations has been to focus HSI efforts in work domain analysis, the modeling of human-system interactions, and theory-based analysis rather than experiment.

The work domain analysis has typically focused on gaining a rapid understanding of relatively complicated seaport operations in order to evaluate technology insertion opportunities and to better understand design requirements. In contrast to work domain analysis oriented toward cognitive decision aids, which requires time-intensive collaboration with subject matter experts, the RPM analysis worked at a coarser level to characterize staff functions and interactions, material flow, and operational tempo. Similarly, modeling of human-system interactions (such as responding to a traffic light or an intercom system) was performed at the level of detail necessary to facilitate design, rather than a comprehensive representation of operator cognitive processes—this was not required to support engineering.

Theory-based analysis of alarm system effectiveness has been conducted on a somewhat longer time scale, since the problem of human response to alarms is more complex. This work consisted of adapting traditional observer-based signal detection theory, in which the human is an active component of the detection system, to RPM systems in which the human operator evaluates the output of a sensor system that detects a threat precondition. Various threat probability analyses have been conducted in this effort, and they can be used to guide subsequent advanced RPM designs. This work has been guided by empirical studies, but it has not required an independent data collection effort.
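One way to see why this kind of threat probability analysis matters is to apply Bayes' rule to a single alarm. The sketch below is illustrative only: the function name and every number used with it are assumptions chosen for the example, not values from the RPM program or its analyses.

```python
def posterior_threat_given_alarm(prior, hit_rate, false_alarm_rate):
    """Return P(threat | alarm) using Bayes' rule.

    prior            -- assumed probability that a given container holds threat material
    hit_rate         -- P(alarm | threat), the sensitivity of the sensor system
    false_alarm_rate -- P(alarm | no threat), the nuisance alarm rate
    """
    p_alarm = hit_rate * prior + false_alarm_rate * (1.0 - prior)
    if p_alarm == 0.0:
        raise ValueError("an alarm has zero probability under these inputs")
    return hit_rate * prior / p_alarm


# Hypothetical values for illustration only.
baseline = posterior_threat_given_alarm(prior=1e-6, hit_rate=0.99, false_alarm_rate=0.01)
improved = posterior_threat_given_alarm(prior=1e-6, hit_rate=0.99, false_alarm_rate=0.001)
```

With a very low prior, even a sensitive system yields a small posterior probability that any given alarm reflects a real threat, and lowering the nuisance alarm rate raises that posterior roughly in proportion. This is the quantitative side of the alarm credibility concern that the operator evaluating the sensor output must contend with.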

Shared Representations Used to Communicate

The rapid-paced nature of the RPM program places a premium on effective communication between human-system integration and the engineering disciplines. In this program, fairly simple communication mechanisms that use graphics or presentation methods adapted from engineering have the best chance of successful communication. For example, it is important to evaluate the human error risks associated with new security screening systems so that mitigation approaches can be designed. One approach to describing this to the engineering community might be to simply borrow existing taxonomies from researchers in the field, such as Reason (1990). Alternatively, a more graphic and less verbose approach is to represent the error analysis as a fault tree, as shown in Figure 5-2. This type of representation is immediately recognizable to the engineering community and is less subject to interpretation than abstract descriptions of error typologies.

FIGURE 5-2 General model of human error analysis for security screening used as a shared representation to communicate the concept to engineering staff.
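Part of the appeal of a fault tree as a shared representation is that engineers can attach probabilities to it directly. The sketch below shows the standard AND and OR gate arithmetic for independent events. The tree structure, event names, and probabilities are hypothetical and are not taken from Figure 5-2.

```python
from math import prod


def or_gate(*probs):
    """Probability that at least one of several independent events occurs."""
    return 1.0 - prod(1.0 - p for p in probs)


def and_gate(*probs):
    """Probability that all of several independent events occur."""
    return prod(probs)


# Hypothetical example: a screening error occurs if the operator slips,
# or if a mistaken judgment goes uncaught by a supervisor check.
p_slip = 0.01
p_mistake = 0.05
p_check_fails = 0.10
p_screening_error = or_gate(p_slip, and_gate(p_mistake, p_check_fails))
```

The independence assumption is a simplification; in practice human errors are often correlated (for example, through fatigue or shared procedures), so values computed this way are best treated as a starting point for discussion rather than a prediction.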

FIGURE 5-3 Graphical representation of work flow with a threat-based RPM display.

Human-system integration has used graphics to convey fairly abstract design ideas to the engineering staff, as shown in Figure 5-3. This display conveys the concept of a threat likelihood display, which informs the RPM operator about the contents of a vehicle based on processing algorithms. The graphic contrasts the eight-step process shown in Figure 5-1 with a four-step screening process, illustrating the functional utility of the display in a direct way.

Accommodation to Changing Conditions and Workplace Requirements

The RPM program started with a set of baseline designs for seaports that involved a cargo container passing through an exit gate. As the program expanded to a wider range of port operations, numerous variations in the container-processing operations became apparent. In some instances, the traffic volume is so low that the costs of installing a fixed installation are too high; alternatively, trenching limits or other physical constraints may preclude a fixed portal. Operational differences, such as moving containers direct to rail cars, also present challenges for design.

FIGURE 5-4 Standard truck exit RPM system (left), mobile RPM system (middle), and straddle carrier operation (right).

Figure 5-4 illustrates several variants of RPM operational configurations that have HSI implications. The truck exit shown in the figure is a standard design that accommodates the majority of seaport operations as they are currently configured. In order to accommodate reconfiguration and low volume, a mobile RPM system has been developed, as shown above. For ports at which straddle carriers are used to move containers directly to rail, solutions are currently being evaluated. Human-system integration has been directly responsible for operations studies of straddle carrier operation to discern technology insertion opportunities. The critical issue for seaports is that current operations do not predict future operations; the rapid expansion of imports will fundamentally alter how high-volume ports process their cargo, and HSI studies will be an important element of adapting the security screening technologies to evolving operational models.

Scalable Methods

The RPM program is large in scale (involving geographically distributed installations on a nationwide basis, multiple personnel, government agencies, and private-sector stakeholders), and seaports are an element of the nation’s critical infrastructure. To make an effective contribution in this context, human-system integration has focused on problems of an aggregate nature that affect multiple installations. The methods generally employed, such as work domain analysis, probabilistic risk modeling, and timeline analysis, are applicable at an individual operator, work group, or port-wide level. Scalability is inherent in the overall goals of method application (i.e., discerning general operational constraints and potential design solutions); in the process there are requirements for “one-off” tailored solutions, but the fundamental goal is to provide generic solutions.

Principles of System Development

The development of RPM systems for application in the seaport environment has entailed an iterative process that reflects the system development principles described in this book. This section discusses how these principles are reflected in the engineering process.

Success-Critical Stakeholder Satisficing

As mentioned above, this program involves the private sector (seaport terminal management and labor), local public agencies such as port authorities, local and national transportation companies such as railroads, federal government agencies (DHS), federal contractors, and, from time to time, other federal law enforcement agencies, such as the Federal Bureau of Investigation. The issues and requirements of all need to be addressed in RPM deployments. The dual program goals of maximizing threat detection and minimizing impact on commerce define the parameters for stakeholder satisficing.

Incremental Growth of System Definition and Stakeholder Commitment

The objective of minimal disruption to ongoing seaport operations and the need to identify traffic choke points and screening opportunities require considerable up-front analysis, as well as continuing evaluation of impact as individualized deployments are designed. The general activities in this category include

initial site surveys to identify choke points.

operational process analysis to identify traffic flow and screening procedures for individual seaport sites.

adaptation of baseline screening systems to specific seaport site constraints.

continued monitoring and evaluation of impact, including nuisance alarm rates and traffic flow, from design through deployment.

modification of RPM system elements as required to meet security and operational missions.

This process generally involves initial stakeholder meetings to establish the relationships necessary to adapt the technologies to individual operations. Based on information gathered in operational studies, conceptual designs (50-percent level) are proposed, reviewed, and revised as a more detailed understanding of requirements and impacts is obtained. This leads to more refined definitions of implementation requirements and operational impacts, which in turn lead to commitment at the 90-percent design review.

Risk Management

The multiple operational personnel involved in port security and seaport operations necessarily entail a variety of human factors risks when new technology is introduced. One of the major initial risks involved consideration of staffing, as customs and border protection authorities have not typically placed officers on site at seaports. A number of options for operating security equipment were evaluated, and the decision was made that CBP would staff the seaport sites with additional schedule rotations. This reduced the risk of relying on non-law-enforcement personnel but increased the cost to the government (a trade-off). Other risks include generally low workload associated with processing alarms (a trade-off of boredom and cost, but physical presence is guaranteed), the gradual erosion of alarm credibility based on the exclusive occurrence of nuisance alarms (a trade-off of high sensitivity of detection system with potential for reduced effectiveness), risks of labor disputes as more complex technology is introduced that may be seen as infringing on private-sector territory (a trade-off of the risk of a complex labor situation with the need for security screening), and transfer of training procedure incompatibilities from one location to another (i.e., procedures vary considerably from one site to another, and staff rotate among these locations; this is a trade-off of procedural variability with the human ability to adapt).

HSI activities tend to be deployed in this program based on continuing assessment of risks associated with individual seaport deployments. For example, HSI operational studies of straddle carrier cargo operations were undertaken midway through seaport deployments, when it was recognized that existing technology solutions could not be adapted to that type of operation. The risk of using existing technology was that seaport operations would need to fundamentally change, which would lead to an unacceptable impact on commerce. Thus operational studies were undertaken to identify potential technology insertion opportunities that would minimize the risk of commercial impact.

Concurrent System Definition and Development

The RPM program involves substantial concurrent engineering activity. The initial deployments have utilized relatively low-cost, high-sensitivity but low-resolution sensors made of polyvinyl toluene. These sensors are highly sensitive to radioactive material but tend to generate nuisance alarms because of low resolution of the type of radioactive material (naturally occurring versus threat material). While this yields high threat sensitivity, it is also nonspecific and creates a larger impact on commerce due to nuisance alarms and the need for secondary inspections.

However, development of advanced spectroscopic portals (ASPs) that utilize high-resolution sensors is taking place concurrently with the installation of lower resolution portals and will be deployed subsequently. These portals will be able to identify specific radioactive isotopes and will help to reduce nuisance alarms that create an adverse impact on commerce. Concurrent human factors research concerning threat-based displays will be used for developing appropriate end-user displays for the new systems.

“NEXT-GENERATION” INTRAVENOUS INFUSION PUMP

The next-generation infusion pump is a general-purpose intravenous infusion pump (IV pump) designed primarily for hospital use with secondary, limited-feature use by patients at home. The device is intended to deliver liquid medications, nutrients, blood, and other solutions at programmed flow rates, volumes, and time intervals via intravenous and other routes to a patient. The marketed name is the Symbiq™ IV Pump. The device will offer medication management features, including medication management safety software through a programmable drug library. The infuser will also have sufficient memory to support extensive tracking logs and the ability to communicate and integrate with hospital information systems. The infuser will be available as either a single-channel pump or a dual-channel pump. The two configurations can be linked together to form a 3- or 4-channel pump. The infuser includes a large touchscreen color display and can be powered by either A/C power or rechargeable batteries.

To ensure that the infuser has an easy-to-use user interface, the development of the product was based on a user-centered design approach. As part of the user-centered design approach, the team involved potential users at each phase in the design cycle. During the first phase, the team conducted interviews with potential users and stakeholders, including nurses, anesthesiologists, doctors, managers, hospital administrators, and biomedical technicians to gather user requirements. The team also conducted early research in the form of contextual observations and interviews in different clinical settings in hospitals as a means to understand user work flow involving infusion pumps. The information from these initial activities was used in the conceptual development phase of the next-generation infusion pump. Iterative design and evaluation took place in the development of each feature. Evaluations included interviews, usability testing in a laboratory setting, usability testing in a simulated patient environment, testing with low-fidelity paper prototypes, and testing with high-fidelity computer simulation prototypes. Computer simulations of the final user interface of each feature were used in focus groups to verify features and to obtain additional user feedback on ease of use before the final software coding began. In the final phases of development, extensive usability testing in simulated patient environments was conducted to ensure that design intent had been implemented and that ease of use and usability objectives were met. Throughout the development process, iterative risk analysis, evaluation, and control were conducted in compliance with the federally regulated design control process (see Figures 5-5 and 5-6).

Motivation Behind the Design

The primary motivation was to design a state-of-the-art infusion pump that would be a breakthrough in terms of ease of use and improved patient safety. Over recent decades, the quality of the user interface in many IV pump designs has fallen under scrutiny due to many human factors–related issues, such as difficulty in setting up and managing a pump’s interface through careful control and display interplay. In the past 20 years, the type, shape, and use of pumps have been, from outward appearances, very similar and not highly differentiated among the different medical device manufacturers. In fall 2002, Hospira undertook a large-scale effort to redesign the IV pump. Its mission was to create a pump that was easier to set up, easier to manage, and easier to use safely, that made patient care easier to oversee, and that helped the caregiver prevent medication delivery errors. There was a clear market need for a new-generation IV pump. The Institute of Medicine in 2000 estimated as many as 98,000 deaths a year in the United States due to medical errors (Institute of Medicine, 2000).

The User-Centered Design Process in the Context of the Incremental Commitment Model

The Symbiq™ IV Pump followed a classic user-centered design process, with multiple iterations and decision gates that are typically part of the incremental commitment model of product development. Risk management was a central theme in the development, both in terms of reducing project completion and cost risks and managing the risk of adverse events to patients connected to the device. Many of the interim project deliverables, such as fully interactive simulations of graphical user interfaces (GUI), were in the form of shared representations of the design, so that all development team members had the same understanding of the product requirements during the development cycle.

FIGURE 5-5 Two channel IV pumps with left channel illuminated. Photographs courtesy of Hospira, Inc.

FIGURE 5-6 IV tube management features. Photographs courtesy of Hospira, Inc.

Following a classic human factors approach to device design, the nurse user was the primary influence on the design of the interface and the design of the hardware. Physicians and home patient users were also included in the user profiles. Hospira embarked on a multiphase, user-centered design program that included more than 10 user studies, in-depth interviews, field observations, and numerous design reviews. Each was aimed at meeting the user’s expectations and at improving the intelligence of the pump software in order to prevent medication errors.

Preliminary Research

Much preliminary work needed to be done in order to kick off this development. A well-known management and marketing planning firm was hired to lead concept analysis in which the following areas were researched:

Comparison of the next-generation pump and major competitors, using traditional strengths/weaknesses/opportunities methodology, included the following features:

Physical specifications

Pump capabilities, e.g., number of channels

Programming options

Set features

Pressure capabilities

Management of air in line

Biomedical indicators

Competitive advantages of the next-generation pump were identified in the following areas:

Bar code reading capability with ergonomic reading wand

Small size and light weight

Standalone functional channels (easier work flow, flexible regarding number of pumping channels)

Extensive drug library (able to set hard and soft limits for the same drug for different profiles of use)

High-level reliability

Clear mapping of screen and pumping channels

Vertical tubing orientation that is clear and simple

An extensive competitive analysis was undertaken against the five largest market leaders. Task flows, feature lists, and capabilities were created. A prioritization of the possible competitive advantage features and their development cost estimates was generated and analyzed.

Business risks were examined using different business case scenarios and different assumptions about design with input from the outside management consultants. Engineering consultants assisted Hospira with input on technical development issues and costs, including pump mechanisms, software platforms, and display alternatives.

Extensive market research was conducted as well to identify market windows, market segment analyses, pricing alternatives, hospital purchasing decision processes, and the influence of outside clinical practice safety groups. Key leaders in critical care were assembled in focus groups and individually to assess these marketing parameters. This process was repeated. Key outcomes were put into the product concept plan and its marketing product description document. This document also captured current and future user work needs and the related environments.

The concept team reached a decision gate with the concurrence of the management steering committee. The project plan and budget were approved and development began. Again, business risks were assessed. This step is typical in an ICM development approach.

Design Decisions

A fundamental architecture decision was reached to have an integrated design with either one or two delivery channels in a single integrated unit. Two or more integrated units could themselves be connected side by side in order to obtain up to four IV channel lines. This alternative was chosen over the competing concept of having modular pumping units that would interconnect and could be stacked onto one master unit to create multiple channels. The integrated master unit approach won out based on problems with the modular concept uncovered by the market research, such as a higher likelihood of lost modular units, inventory problems, and reduced battery life.

Feature Needs and Their Rationale

Based on the preliminary market research and on an analysis of medical device reports from the Food and Drug Administration (FDA) as well as complaints data from the Hospira customer service organization, the Marketing Requirements Document was completed and preliminary decisions were made to include the features described in this section. Field studies and contextual inquiry were planned as follow-on research to verify the need for these features and to collect more detail on how they would be designed.

Types of programmable therapies. Decisions were made to offer a set of complex therapies in addition to the traditional simple therapies usually offered by volumetric IV pumps. The traditional simple therapies were

continuous delivery for a specified period of time (often called mL/Hr delivery).

weight-based dosing, which requires entering the patient’s weight and the ordered drug delivery rate.

bolus delivery (delivery of a dose of medication over a relatively short period of time).

piggyback delivery (the delivery type that requires Channel A delivery suspension while Channel B delivers and then its resumption when Channel B completes).

The more complex therapies included

tapered therapy (ramping up and down of a medicine with a programmed timeline. It is sometimes used for delivery of nutritional and hydration fluids, called total parenteral nutrition).

intermittent therapy (delivery of varying rates of medication at programmed time intervals).

variable time delivery.

multistep delivery.

Business risks were examined to understand the sales consequences of including these features of therapy types to address the issue of stakeholder satisficing.

Medication libraries with hard and soft dosage limits. Research uncovered that several outside patient safety advocate agencies, including the Emergency Care Research Institute and the Institute for Safe Medication Practices, were recommending only IV pumps with safety software consisting of upper and lower dosage limits for different drugs as a function of the programmed clinical care area in a hospital. (Clinical care areas include emergency room, intensive care unit, oncology, pediatrics, transplants, etc.) It became clear that it would be imperative to have safety software in the form of medication libraries that were programmed by each hospital to have soft limits (which could be overridden by nurses with permission codes) and hard limits (which could under no circumstances be overridden). It was decided at this time that separate software applications would need to be written that would be used by hospital pharmacy and safety committees to enter drugs in a library table with these soft and hard limits, which would vary by clinical care area in the hospital. This is an example of incremental growth and stakeholder commitment in the design process.
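The distinction between soft and hard limits can be made concrete with a short sketch. What follows is an illustration only and is not the Symbiq™ software: the names `DrugLimits`, `LIBRARY`, and `check_dose`, the drug name, the care areas, and every numeric limit are hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ACCEPT = "accept"                  # dose lies within the soft limits
    NEEDS_OVERRIDE = "needs_override"  # outside soft limits, inside hard limits
    REJECT = "reject"                  # outside hard limits; cannot be overridden


@dataclass(frozen=True)
class DrugLimits:
    """Hypothetical library entry for one drug in one clinical care area."""
    soft_low: float
    soft_high: float
    hard_low: float
    hard_high: float


# Hypothetical library keyed by (clinical care area, drug). All values are made up.
LIBRARY = {
    ("ICU", "drug_x"): DrugLimits(soft_low=2.0, soft_high=10.0,
                                  hard_low=0.5, hard_high=20.0),
    ("pediatrics", "drug_x"): DrugLimits(soft_low=0.5, soft_high=3.0,
                                         hard_low=0.1, hard_high=5.0),
}


def check_dose(care_area: str, drug: str, dose: float) -> Verdict:
    """Classify a programmed dose against the limits for the selected care area."""
    limits = LIBRARY[(care_area, drug)]
    if dose < limits.hard_low or dose > limits.hard_high:
        return Verdict.REJECT
    if dose < limits.soft_low or dose > limits.soft_high:
        return Verdict.NEEDS_OVERRIDE
    return Verdict.ACCEPT
```

Under these assumed values, the same dose of 15.0 would require an override in the intensive care unit but would be rejected outright in pediatrics, which is the sense in which limits vary by clinical care area.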

Large color touch screen. A human factors literature review was conducted to create a list of advantages and disadvantages of various input and display technologies. This research was supplemented with engineering data on the costs and reliabilities of these technologies. Again, business risks were examined, including reliability of supply of various display vendors. After much research and debate, the list of choices was narrowed to three vendors of touch-sensitive color LCD displays.

This was a breakthrough, in the sense that no current on-market IV pumps were using color touchscreen technology. A large 8.4-inch diagonal color LCD display with resistive touchscreen input was selected for further testing. A resistive touchscreen was believed to reduce errors due to poor screen response to light finger touch forces.

Another issue that required some data from use environment analysis was the required angle of view and display brightness under various use scenarios. Subsequent contextual inquiry data did verify the need for viewing angles of at least +/- 60 degrees horizontal viewing and +/- 30 degrees vertical viewing angles. The minimum brightness or luminance levels were verified at 35 candelas per square meter. A business risk analysis examined the trade-offs between a large touchscreen display and the conflicting customer desire for small footprint IV pumps. The larger display size of 8.4-inch diagonal would allow larger on-screen buttons to minimize use errors due to inadvertent selection of adjacent on-screen buttons as well as allowing larger more readable on-screen text. Again, human factors research literature and standards on display usability were included in these decisions.

Special alarms with melodies. FDA medical device reports and customer complaint data reinforced the need for more effective visual and auditory alarms to alert IV pump users to pump fault conditions, such as air in line, occlusion in IV tubing, pending battery failure, IV bag nearly empty or unsafe dosage rates for a particular drug in a specific critical care area.

The team also decided to adopt the recommendations of the International Electrotechnical Commission (IEC) for an international standard for medical device auditory alarms to use unique melody patterns for IV pumps to distinguish these devices from other critical care devices, such as ventilators and vital sign patient monitors. These auditory alarms were later subjected to extensive lab and field studies for effectiveness and acceptability.

An early beta test in actual hospital settings with extended use subsequently showed user dissatisfaction with the harshness of some of the alarm melodies. The IEC standard had purposely recommended a discordant set of tone melodies for the highest alarm level, but clinicians, patients, and their families complained that they were too harsh and irritating. Some clinicians complained that they would not use these IV pumps at all, unless the alarms were modified. Or worse, they would permanently disable the alarms, which would create a very risky use environment.

This outcome highlights a well-known dilemma for human factors: lab studies are imperfect predictors of user behavior and attitudes in a real-world, extended-use setting. The previous lab usability studies were by their very nature short-duration exposures to these tones and showed that they were effective and alerting, but they did not capture long-term subjective preference ratings. A tone design specialist was engaged who redesigned the tones to be more acceptable, while still being alerting, attention grabbing, and still in compliance with the IEC alarm standard for melodies. Subsequent comparative usability evaluations (group demonstrations and interviews) demonstrated the acceptability of the redesigned melodies. This is a prime example of design iteration and concurrent system definition and development.

Semiautomatic cassette loading. Another early decision involved choosing between a traditional manual loading of the cassette into the IV pump or a semiautomated system, in which a motor draws a compartment into the pumping mechanism, after the clinician initially places the cassette into the loading compartment. The cassette is in line with the IV tubing and IV bag containing the medication. The volumetric pumping action is done through mechanical fingers, which activate diaphragms in the plastic cassette mechanism. Customer complaint history suggested the need for the semiautomated system to avoid use error in loading the cassette and to provide a fail-safe mechanism to close off flow in the IV line except when it was inserted properly into the IV pump.

A major problem with earlier cassette-based volumetric IV pump systems was “free flow,” in which medication could flow uncontrolled into a patient due to gravitational forces, with the possibility of severe adverse events. Early risk analysis and evaluation were done from both a business and use-error safety perspective to examine the benefit of the semiautomated loading mechanism. Later usability testing and mechanical bench testing validated the decision to select the semiautomated loading feature.

A related decision was to embed a unique LED-based lighting indication system into the cassette loading compartment that would signal with colored red, yellow, and green lights and steady versus flashing conditions the state of the IV pump in general and specifically of the cassette loading mechanism. The lights needed to be visible from at least 9 feet to indicate that the IV pump is running normally, pump is stopped, cassette is improperly loaded, cassette compartment drawer is in the process of activation, etc.

Special pole mounting hardware. Again, data from the FDA medical device reports and customer complaints indicated the need for innovative mechanisms for the mounting of the IV pump on poles. Later contextual inquiry and field shadowing exercises validated the need for special features allowing for the rapid connection and dismounting of the IV pump to the pole via quick release/activation mechanisms that employed ratchet-like slip clutches. Subsequent ergonomics-focused usability tests of hardware mechanisms validated the need and usability of these design innovations for mounting on both IV poles and special bed-mounted poles, to accommodate IV pumps while a patient’s bed is being moved from one hospital department to another.

Risk analyses for business and safety risks were updated to include these design decisions. Industrial design models were built to prototype these concepts, and these working prototypes were subjected to subsequent lab-based usability testing. Again, these actions are examples of stakeholder satisficing, incremental growth of system definition, and iterative system design.

Stacking requirements. Given the earlier conceptual design decision to have an integrated IV pump rather than using add-on pumping channel modules, decisions were needed on how integrated IV pumps could be stacked together to create additional channels. A concomitant decision was that the integrated IV pump would be offered with either one or two integrated channels. Based on risk assessment, it was decided to allow side-by-side stacking to allow the creation of a 4-channel system when desired. The 4-channel system would be electronically integrated and allow the user interface to operate as one system. Again, trade-off analyses of risks were made against the competing customer need for a smaller device size footprint. A related design decision was to have an industrial design that allowed handles for easy transportation, but would also allow stable vertical stacking, while the units are stored between uses in the biomedical engineering department. Market research clearly indicated the need for vertical stacking in crowded storage areas. To facilitate safe storage of the pumps, the special pole clamps were made removable.

Tubing management. A well-known use-error problem of tangled and confusing IV tubing lines was addressed in the housing design by including several holders for storing excess tubing. Notches were also included to keep tubes organized and straight to reduce line-crossing confusion. These same holders were built as slight protrusions that protected the touchscreen from damage and inadvertent touch activation, if the pump were to be laid on its side or brushed against other medical devices.

Many other preliminary design decisions were made in these early stages that were based on both business and use-error risk analysis. In all cases, these decisions were verified and validated with subsequent data from usability tests and from field trials.

Design Process Details

The development of the Symbiq™ IV Pump followed the acknowledged best practices iterative user-centered design process as described in medical device standards (ANSI/AAMI HE 74:2001, IEC 60601-1-6:2004, and FDA human factors guidance for medical device design controls). The following sections are brief descriptions of what was done. Table 5-2 outlines the use of these human factors techniques and some areas for methodology improvements.

Contextual Inquiry

Contextual inquiry was done by multiple nurse shadowing visits to the most important clinical care areas in several representative hospitals. Several team members spent approximately a half-day shadowing nurses using IV pumps and other medical devices and observing their behaviors and problems. A checklist was used to record behaviors and, as time permitted, ask about problem areas with IV pumps and features that needed attention during the design process. Subsequent to the field visits, one-on-one interviews with nurses were conducted to explore in depth the contextual inquiry observations. These observations and interviews were used to generate the following elements:

task analyses

use environment analyses

user profiles analyses

Figure 5-7 shows an example of one of many task flow diagrams generated during the task analyses phases of the contextual inquiry.

Setting Usability Objectives

Quantitative usability objectives were set based on data from the contextual inquiry, user interviews, and the previous market research. Early use-error risk analysis highlighted tasks that were likely to have high risk, and particular attention was given to setting usability objectives for these tasks to ensure that the user interface design mitigations were effective. Experience with earlier IV pump designs and user performance in usability tests also influenced the setting of these usability objectives. The objectives were primarily based on successful task performance measures and secondarily on user satisfaction measures. Examples of usability objectives were

90 percent of experienced nurses would be able to insert the cassette the first time while receiving minimal training; 99 percent would be able to correct any insertion errors.

90 percent of first-time users with no training would be able to power the pump off when directed.

90 percent of experienced nurses would be able to clear an alarm within 1 minute as first-time users with minimal training.

80 percent of patient users would rate the overall ease of use of the IV pump 3 or higher on a 5-point scale of satisfaction with 5 being the highest value.
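Objectives stated in this quantitative form can be checked mechanically against test results. The sketch below is a minimal illustration under stated assumptions: the names `UsabilityObjective`, `objective_met`, and `fraction_rating_at_least` are hypothetical, and the participant counts and ratings are invented example data, not results from the Symbiq™ studies.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class UsabilityObjective:
    description: str
    target_fraction: float  # e.g., 0.90 means 90 percent of participants


def objective_met(objective: UsabilityObjective,
                  successes: int, participants: int) -> bool:
    """True if the observed success fraction reaches the target."""
    if participants <= 0:
        raise ValueError("participants must be positive")
    return successes / participants >= objective.target_fraction


def fraction_rating_at_least(ratings: Sequence[int], threshold: int) -> float:
    """Fraction of satisfaction ratings at or above the threshold."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    return sum(1 for r in ratings if r >= threshold) / len(ratings)


# Hypothetical task-performance data: 14 of 15 nurses insert the cassette
# correctly on the first attempt.
cassette = UsabilityObjective("insert cassette on first attempt", 0.90)
print(objective_met(cassette, successes=14, participants=15))  # True
print(objective_met(cassette, successes=13, participants=15))  # False

# Hypothetical satisfaction ratings on the 5-point scale (5 is highest).
ratings = [5, 4, 3, 2, 4, 5, 3, 3, 1, 4]
print(fraction_rating_at_least(ratings, threshold=3) >= 0.80)  # True (8 of 10)
```

A pass or fail computed this way is only a point estimate; with small samples a team would normally also consider the uncertainty around the observed fraction.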

Early Risk Management

Many rounds of iterative risk analysis, risk evaluation, and risk control were initiated at the earliest stages of design. The risk-management process followed recognized standards in the area of medical device design (e.g., ISO 14971:2000, see International Organization for Standardization, 2000a). The risk analysis process was documented in the form of a failure modes and effects analysis (FMEA), which is described in more detail in Chapter 8 . Table 5-3 presents excerpts from the early Symbiq™ FMEA. Business and project completion risks were frequently addressed at phase review and management review meetings.

TABLE 5-2 Methodology Issues and Research Needs

FIGURE 5-7 Illustrative task flow diagram from the task analysis.

The concept of risk priority number (RPN) was used in the operation risk assessment for the Symbiq™ infusion system. RPN is the product of fault probability, risk hazard severity, and the probability of detecting the fault. The maximum RPN value is typically 125, and decision rules require careful examination of mitigation when the RPN exceeds 45. RPN values between 8 and 45 require an explanation or justification of how the risk is controlled.
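The RPN arithmetic and the decision rules can be sketched as follows. This is an illustration under stated assumptions rather than the procedure used on the project: the function names are hypothetical, each factor is assumed to be scored on an integer scale from 1 to 5 (an inference from the stated maximum of 125, since 5 × 5 × 5 = 125), and the text does not say what is required when the RPN falls below 8.

```python
def risk_priority_number(occurrence: int, severity: int, detection: int) -> int:
    """Multiply the three factor scores; each is assumed to range from 1 to 5."""
    for name, score in (("occurrence", occurrence),
                        ("severity", severity),
                        ("detection", detection)):
        if not 1 <= score <= 5:
            raise ValueError(f"{name} score must be between 1 and 5, got {score}")
    return occurrence * severity * detection


def required_action(rpn: int) -> str:
    """Apply the thresholds given in the text (above 45, and 8 through 45)."""
    if rpn > 45:
        return "examine mitigation carefully"
    if rpn >= 8:
        return "explain or justify how the risk is controlled"
    return "no requirement stated in the text"


# Hypothetical failure mode scored 4 (occurrence), 4 (severity), 3 (detection).
rpn = risk_priority_number(4, 4, 3)
print(rpn, required_action(rpn))  # 48 examine mitigation carefully
```

The direction of the detection scale (whether a higher score means a fault is easier or harder to detect) is not specified in the text, so the sketch simply multiplies the scores as given.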

The product requirements document (PRD) was formally created at this point to describe the details of the product design. It was considered a draft for revision as testing and other data became available. It was based on the customer needs expressed in the marketing requirements document. This document recorded the incremental growth of system definitions and stakeholder commitment and served as a shared representation of the design requirements for the development team.

Many prototypes and simulations were created for evaluation:

Hardware models and alternatives considered

hardware industrial design mock-ups

early usability tests of hardware mock-ups.

Paper prototypes for graphical user interfaces with wireframes consisting of basic shapes, such as boxes and buttons without finished detail graphic elements.

GUI simulations using Flash™ animations.

Early usability tests with hardware mock-ups and embedded software that delivered the Flash™ animations to a touchscreen interface that was integrated into the hardware case.

Flash animations are excellent examples of shared representations because they were directly used in the product requirements document to specify to software engineering exactly how the GUI was to be developed. All team discussions regarding GUI design were focused exclusively on the Flash animation shared representations of the Symbiq™ user interface.

Integrated Hardware and Software Models with Integrated Usability Tests

As noted earlier, the usability tests performed later in the development cycle were done with integrated hardware mock-ups and software simulations. Usability test tasks were driven by tasks with high-risk index values in the risk analysis, specifically the FMEA. Tasks were also included that had formal usability objectives associated with them. Although the majority of usability test tasks were focused on the interaction with the touchscreen-based graphical user interface, critical pump-handling tasks were included as well, such as IV pump mounting and dismounting on typical IV poles.

TABLE 5-3 Excerpts from Symbiq™ Failure Modes and Effects Analysis (FMEA)

Tests of Alarm Criticality and Alerting

The initial alarm formative usability studies, described earlier, had the goal of selecting alarms that would be alerting and attention getting, would properly convey alarm priority, and would communicate appropriate actions. These formative studies evaluated the subjects’ abilities to identify and discriminate among different visual alarm dimensions, including colors, flash rates, and text size and contrast. For auditory alarms, subjects were tested on their ability to discriminate among various tones with and without melodies and among various cadences and tone sequences for priority levels and detectability. Subjects were asked to rate the candidate tones relative to a standard tone, which was given a value of 100. The standard was the alternating high-low European-style police siren. Subjective measures were also gathered on the tones using the PAD rating system, standing for perceived tone pleasure, arousal, and dominance, as well as perceived criticality. Data from these studies enabled the team to make further incremental decisions on system definitions for both visual and auditory alarms and alerts.

Tests of Display Readability

Another set of early formative usability tests was conducted to validate the selection of the particular LCD touchscreen for readability and legibility. During the evaluation it was determined that the screen angle (75 degrees) and overall curvature were acceptable. The screen could be read in all tested light conditions at a 15-foot viewing distance.

Iterative Usability Tests

As noted, a series of 10 usability studies were conducted iteratively as the design progressed from early wireframes to the completed user interface with all the major features implemented in a working IV pump. In one of the intermediate formative usability tests, a patient simulator facility was used at a major teaching hospital. Users performed a variety of critical tasks in a simulated room in an intensive care unit, in which other medical devices interacted and produced noises and other distractions. The prototype IV pump delivered fluid to a mannequin connected to a patient monitor that included all vital signs. As the pump was programmed and subsequently changed (e.g., doses titrated), the software-controlled patient mannequin would respond accordingly. The patient simulator also introduced ringing telephones and other realistic conditions during the usability test. This test environment helped in proving the usability of visual alarms and tones, as well as the understandability and readability of the visual displays. Final summative usability tests demonstrated that the usability objectives for the pump were achieved.

Focus Groups

Focus groups of nurses were also used as part of the usability evaluation process. These were used to complement the task-based usability tests. Many of the focus groups had a task performance component. Typically the participants would perform some tasks with new and old versions of design changes, such as time entry widgets on the touchscreen, and then convene to discuss and rate their experiences. This allowed a behavioral component and addressed one of the major shortcomings of typical focus groups, that they focus only on opinions and attitudes and not behaviors.

Field Studies

Field studies in the form of medical device studies were also incorporated in the design process. Thoroughly bench-tested and working beta versions of the IV pump were deployed in two hospital settings. The hospitals programmed drug libraries for at least two clinical care areas. The devices were used for about 4 weeks. Surveys and interviews were conducted with the users to capture their real-world experiences with the pump. Data from the pump usage and interaction memory were also analyzed and compared with the original doctor’s orders. This study revealed a number of opportunities to make improvements, including the problem with the perceived annoyance of the alarm melodies and the data entry methods for entering units of medication delivery time (e.g., hours or minutes).

Instructions for Use Development and Testing

Usability testing was also conducted on one of the sets of abbreviated instructions called TIPS cards. These cards serve as reminders for how to complete the most critical tasks. These usability studies involved 15 experienced nurses with minimal instructions performing 9 tasks with the requirement that they read and use the TIPS cards. Numerous suggestions for improvement in the TIPS cards themselves as well as the user interface came from this work, including how to reset the air-in-line alarm and how to address the alarm and check all on-screen help text for accuracy.

Validation Usability Tests

Two rounds of summative usability testing were conducted, again with experienced nurses performing critical tasks identified during the task analysis, including those with higher risk values in the risk analysis. The tasks were selected to simulate situations that the nurses may encounter while using the IV pump in a hospital setting. The tasks included selecting a clinical care area, programming simple deliveries, adding more volume at the end of an infusion, setting a “near end of infusion” alarm, titration, dose calculations, piggyback deliveries, intermittent deliveries, using standby, programming a lock, adjusting the alarm volume, and responding to messages regarding alarms.

Usability objectives were used as acceptance criteria for the summative validation usability tests. The study objectives were met. The calculated task completion accuracy was 99.66 percent for all tasks for first-time nurse users with minimal training. The objective that 80 percent of the participants would rate the usability 3 or higher on a 5-point scale in the overall categories was also met. A few minor usability problems were uncovered and subsequently fixed without major changes to the user interface; none affected critical safety-related tasks.

Federal regulations on product design controls require that a product’s user interface be validated with the final working product in a simulated work environment. In this instance, the working product was used in a laboratory test, but without having the device connected to an actual patient. Bench testing is also a part of validation to ensure that all mechanical and electrical specifications and requirements have been met.

Revised Risk Analysis

As part of the incremental commitment model, the risk analysis was iterated and revised as the product development matured. FMEAs were updated for three product areas, which were safety-critical risks associated with the user interface, the mechanical and electrical subsystems, and the product manufacturing process. Explicit analysis of the business risks and the costs of continued financial commitment to the funding of development were also incremented and reviewed at various management and phase reviews.

Product Introduction

Product introduction planning included data collection from initial users to better understand remaining usage issues that can be uncovered only during prolonged usage in realistic clinical conditions. The many cycles of laboratory-based usability testing are typically not detailed enough or long enough to uncover all usability problems. The plan is to use the company complaint handling and resolution process (e.g., corrective action and preventive action) to address use issues if they arise after product introduction.

Life-Cycle Planning

The product was developed as a platform for the next generation of infusion pump products. As such, there will be continued business risk assessment during the life cycle of this first product on the new platform as well as on subsequent products and feature extensions.

Summary of Design Issues and Methods Used

This infusion pump incorporated the best practices of user-centered design in order to address the serious user interface deficiencies of previous infusion pumps. The development process took full advantage of the detailed data derived from an integrated HSI approach and used it to improve and optimize the safety and usability of the design. Because of these efforts, the Symbiq™ IV Pump won the 2006 Human Factors and Ergonomics Society award for best new product design from the product design technical group.

This case study also illustrates and incorporates the central themes of this report:

Human-system integration must be an integral part of systems engineering.

Begin HSI contributions to development early and continue them throughout the development life cycle.

Adopt a risk-driven approach to determining needs for HSI activity (multiple applications of risk management to both business and safety risks).

Tailor methods to time and budget constraints (scalability).

Ensure communication among stakeholders of HSI outputs (shared representations).

Design to accommodate changing conditions and requirements in the workplace (the use of iterative design and the incremental commitment model).

This case study also demonstrates the five key principles that are integral parts of the incremental commitment model of development: (1) stakeholder satisficing, (2) incremental growth of system definition and stakeholder commitment, (3) iterative system development, (4) concurrent system definition and development, and (5) risk management—risk-driven activity levels.

In April 1991 BusinessWeek ran a cover story entitled, "I Can't Work This ?#!!@ Thing," about the difficulties many people have with consumer products, such as cell phones and VCRs. More than 15 years later, the situation is much the same—but at a very different level of scale. The disconnect between people and technology has had society-wide consequences in the large-scale system accidents from major human error, such as those at Three Mile Island and in Chernobyl.

To prevent both the individually annoying and nationally significant consequences, human capabilities and needs must be considered early and throughout system design and development. One challenge for such consideration has been providing the background and data needed for the seamless integration of humans into the design process from various perspectives: human factors engineering, manpower, personnel, training, safety and health, and, in the military, habitability and survivability. This collection of development activities has come to be called human-system integration (HSI). Human-System Integration in the System Development Process reviews in detail more than 20 categories of HSI methods to provide invaluable guidance and information for system designers and developers.

Part II: Information Systems for Strategic Advantage

Chapter 10: Information Systems Development

Learning Objectives

Upon successful completion of this chapter, you will be able to:

- Explain the overall process of developing new software;

- Explain the differences between software development methodologies;

- Understand the different types of programming languages used to develop software;

- Understand some of the issues surrounding the development of websites and mobile applications; and

- Identify the four primary implementation policies.

Introduction

When someone has an idea for a new function to be performed by a computer, how does that idea become reality? If a company wants to implement a new business process and needs new hardware or software to support it, how do they go about making it happen? This chapter covers the different methods of taking those ideas and bringing them to reality, a process known as information systems development.

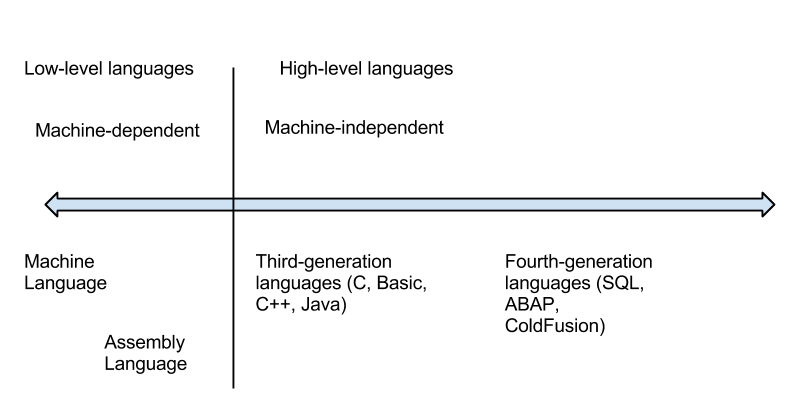

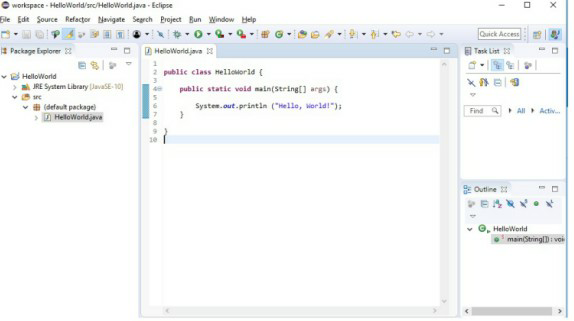

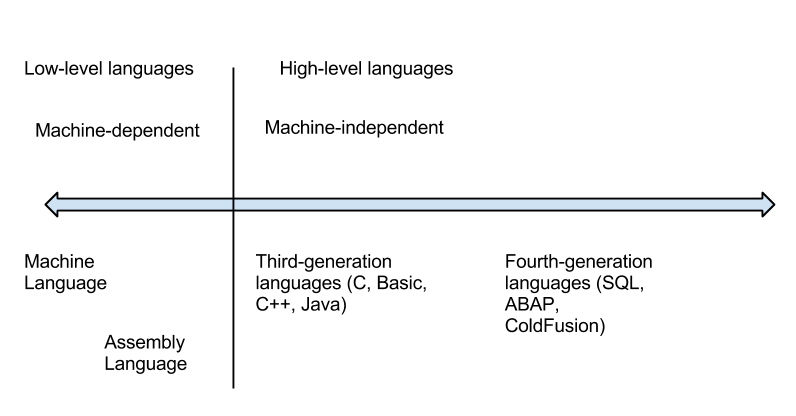

Programming

Software is created via programming, as discussed in Chapter 2. Programming is the process of creating a set of logical instructions for a digital device to follow using a programming language. The process of programming is sometimes called “coding” because the developer takes the design and encodes it into a programming language which then runs on the computer.

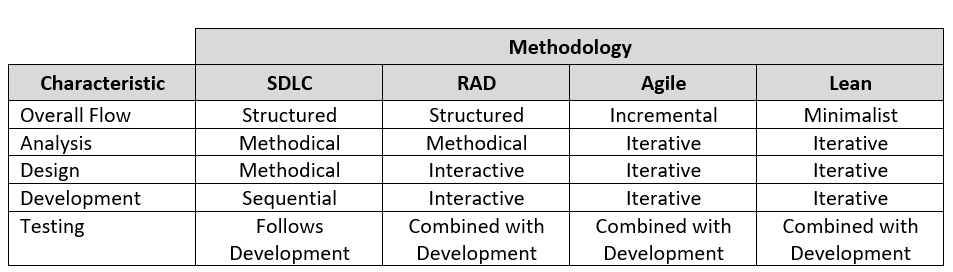

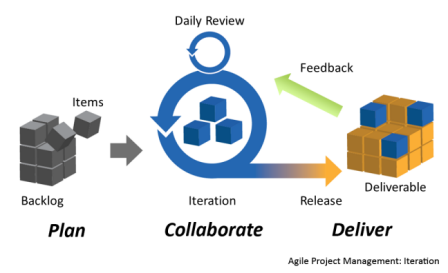

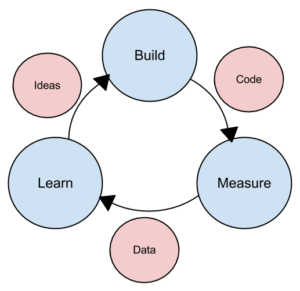

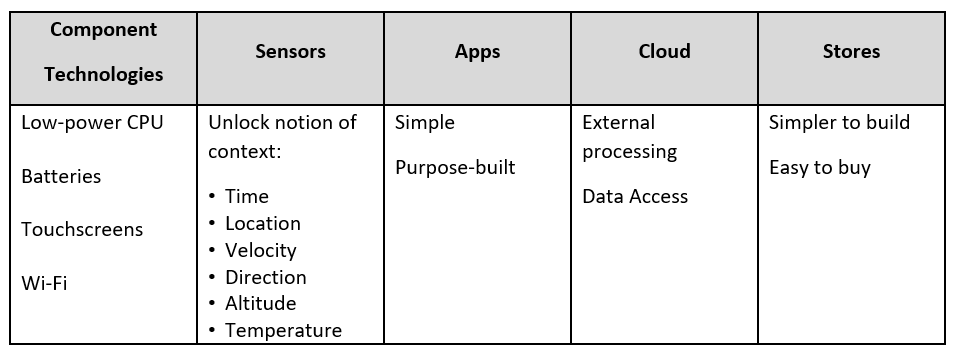

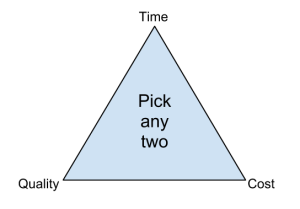

The process of developing good software is usually not as simple as sitting down and writing some code. Sometimes a programmer can quickly write a short program to solve a need, but in most instances the creation of software is a resource-intensive process that involves several different groups of people in an organization. In order to do this effectively, the groups agree to follow a specific software development methodology. The following sections review several different methodologies for software development, as summarized in the table below and more fully described in the following sections.

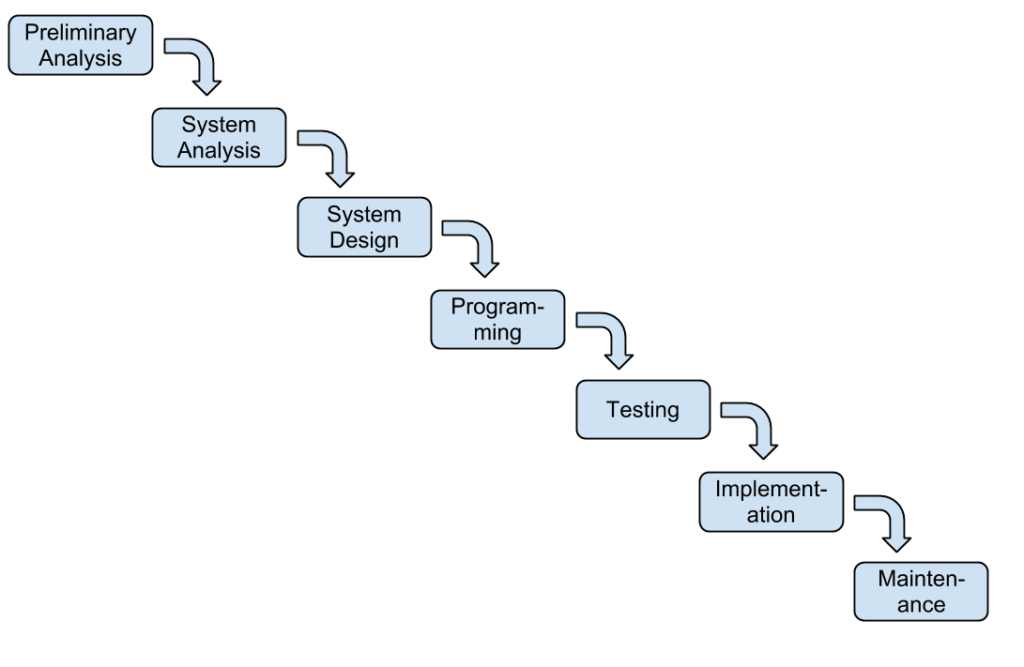

Systems Development Life Cycle

The Systems Development Life Cycle (SDLC) was first developed in the 1960s to manage the large software projects associated with corporate systems running on mainframes. This approach to software development is very structured and risk averse, designed to manage large projects that include multiple programmers and systems that have a large impact on the organization. It requires a clear, upfront understanding of what the software is supposed to do and is not amenable to design changes. This approach is roughly similar to an assembly line process, where it is clear to all stakeholders what the end product should do and that major changes are difficult and costly to implement.

Various definitions of the SDLC methodology exist, but most contain the following phases.

- Preliminary Analysis. A request for a replacement or new system is first reviewed. The review includes questions such as: What is the problem to be solved? Is creating a solution possible? What alternatives exist? What is currently being done about it? Is this project a good fit for our organization? Once these questions are addressed, a feasibility study is launched. The feasibility study includes an analysis of the technical feasibility, the economic feasibility or affordability, and the legal feasibility. This step is important in determining whether the project should be initiated, and it may be done by someone with a title of Requirements Analyst or Business Analyst.

- System Analysis. In this phase one or more system analysts work with different stakeholder groups to determine the specific requirements for the new system. No programming is done in this step. Instead, procedures are documented, key players/users are interviewed, and data requirements are developed in order to get an overall impression of exactly what the system is supposed to do. The result of this phase is a system requirements document. The work may be done by someone with a title of Systems Analyst.

- System Design. In this phase, a designer takes the system requirements document created in the previous phase and develops the specific technical details required for the system. It is in this phase that the business requirements are translated into specific technical requirements. The design for the user interface, database, data inputs and outputs, and reporting are developed here. The result of this phase is a system design document. This document will have everything a programmer needs to actually create the system. The work may be done by someone with a title of Systems Analyst, Developer, or Systems Architect, depending on the scale of the project.

- Programming. The code finally gets written in the programming phase. Using the system design document as a guide, programmers develop the software. The result of this phase is an initial working program that meets the requirements specified in the system analysis phase and the design developed in the system design phase. These tasks are done by persons with titles such as Developer, Software Engineer, Programmer, or Coder.

- Testing. In the testing phase the software program developed in the programming phase is put through a series of structured tests. The first is a unit test, which evaluates individual parts of the code for errors or bugs. This is followed by a system test in which the different components of the system are tested to ensure that they work together properly. Finally, the user acceptance test allows those who will be using the software to test the system to ensure that it meets their standards. Any bugs, errors, or problems found during testing are resolved and then the software is tested again. These tasks are done by persons with titles such as Tester, Testing Analyst, or Quality Assurance.

- Implementation. Once the new system is developed and tested, it has to be implemented in the organization. This phase includes training the users, providing documentation, and data conversion from the previous system to the new system. Implementation can take many forms, depending on the type of system, the number and type of users, and how urgent it is that the system become operational. These different forms of implementation are covered later in the chapter.

- Maintenance. This final phase takes place once the implementation phase is complete. In the maintenance phase the system has a structured support process in place. Reported bugs are fixed and requests for new features are evaluated and implemented. Also, system updates and backups of the software are made for each new version of the program. Since maintenance is normally an Operating Expense (OPEX) while much of development is a Capital Expense (CAPEX), funds normally come out of different budgets or cost centers.
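To make the difference between a unit test and a system test in the Testing phase concrete, here is a minimal sketch. The functions `parse_amount` and `total_invoice` are hypothetical stand-ins for parts of a system and do not come from any particular product; the test functions use plain `assert` statements rather than a specific testing framework.

```python
def parse_amount(text: str) -> float:
    """Convert a string such as '$1,250.50' to a number (hypothetical component)."""
    return float(text.replace("$", "").replace(",", ""))


def total_invoice(lines: list[str]) -> float:
    """Sum the parsed amounts of all invoice lines (hypothetical component)."""
    return sum(parse_amount(line) for line in lines)


def test_parse_amount_unit() -> None:
    # Unit test: checks one small part of the code in isolation.
    assert parse_amount("$1,250.50") == 1250.5


def test_total_invoice_system() -> None:
    # System-style test: checks that the components work together.
    assert total_invoice(["$10.00", "$2.50"]) == 12.5


for test in (test_parse_amount_unit, test_total_invoice_system):
    test()
    print(f"{test.__name__}: passed")
```

A user acceptance test is different in kind: it is carried out by the people who will use the software, so it is usually a scripted walkthrough rather than code.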

The SDLC methodology is sometimes referred to as the waterfall methodology to represent how each step is a separate part of the process. Only when one step is completed can another step begin. After each step an organization must decide when to move to the next step. This methodology has been criticized for being quite rigid, allowing movement in only one direction, namely, forward in the cycle. For example, changes to the requirements are not allowed once the process has begun. No software is available until after the programming phase.
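The forward-only movement described above can be sketched as a small state model. This is an illustration of the strict waterfall rule as the text states it, not a project management tool; the class `WaterfallProject` and its methods `advance` and `go_back_to` are hypothetical names.

```python
from enum import IntEnum


class Phase(IntEnum):
    """The SDLC phases in the order listed in this chapter."""
    PRELIMINARY_ANALYSIS = 1
    SYSTEM_ANALYSIS = 2
    SYSTEM_DESIGN = 3
    PROGRAMMING = 4
    TESTING = 5
    IMPLEMENTATION = 6
    MAINTENANCE = 7


class WaterfallProject:
    """Tracks the current phase and allows movement in one direction only."""

    def __init__(self) -> None:
        self.phase = Phase.PRELIMINARY_ANALYSIS

    def advance(self, phase_complete: bool) -> Phase:
        """Move to the next phase, but only once the current one is complete."""
        if not phase_complete:
            raise RuntimeError(f"{self.phase.name} must be completed first")
        if self.phase is Phase.MAINTENANCE:
            raise RuntimeError("MAINTENANCE is the final phase")
        self.phase = Phase(self.phase + 1)
        return self.phase

    def go_back_to(self, phase: Phase) -> None:
        """Strict waterfall never returns to an earlier phase."""
        raise RuntimeError("the strict waterfall model does not move backward")


project = WaterfallProject()
project.advance(phase_complete=True)
print(project.phase.name)
```

The less rigid methodologies can be thought of as relaxing the `go_back_to` restriction so that earlier phases can be revisited.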

Again, SDLC was developed for large, structured projects. Projects using SDLC can sometimes take months or years to complete. Because of its inflexibility and the availability of new programming techniques and tools, many other software development methodologies have been developed. Many of these retain some of the underlying concepts of SDLC, but are not as rigid.

Rapid Application Development

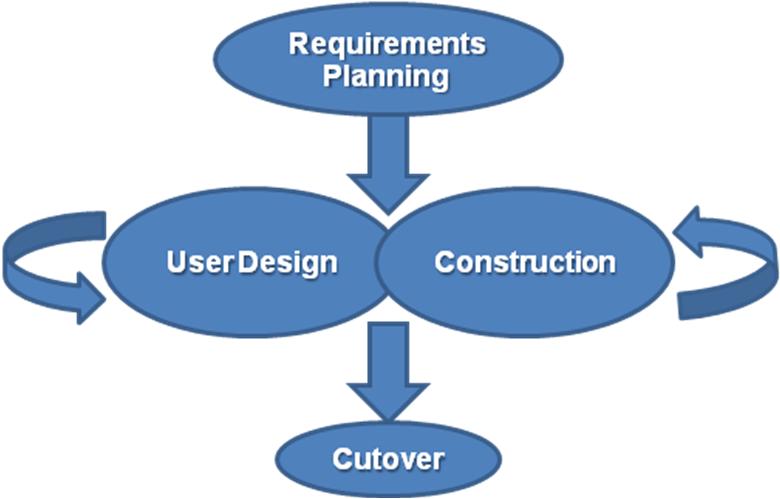

Rapid Application Development (RAD) focuses on quickly building a working model of the software, getting feedback from users, and then using that feedback to update the working model. After several iterations of development, a final version is developed and implemented.
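The build, feedback, and update cycle just described can be sketched as a simple loop. This is only an illustration under stated assumptions: the function `rad_iterate`, the callbacks `build` and `get_feedback`, the `max_iterations` limit, and the sample feedback are all hypothetical and are not part of any RAD standard.

```python
from typing import Callable


def rad_iterate(
    build: Callable[[list[str]], str],
    get_feedback: Callable[[str], list[str]],
    max_iterations: int = 5,
) -> tuple[str, int]:
    """Build a working model, gather feedback, and rebuild until no changes are requested."""
    requested_changes: list[str] = []
    model = build(requested_changes)
    for feedback_round in range(1, max_iterations + 1):
        feedback = get_feedback(model)
        if not feedback:
            # Users have no further requests, so this model becomes the final version.
            return model, feedback_round
        requested_changes.extend(feedback)
        model = build(requested_changes)
    return model, max_iterations


# Hypothetical feedback from three rounds of user review.
pending_feedback = [["add search box"], ["bigger font"], []]


def build(changes: list[str]) -> str:
    return f"prototype with {len(changes)} change(s)"


def get_feedback(model: str) -> list[str]:
    return pending_feedback.pop(0) if pending_feedback else []


final_model, rounds = rad_iterate(build, get_feedback)
print(final_model, rounds)
```

The iteration limit stands in for the time and budget constraints that end the cycle in practice even when users still have requests.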

The RAD methodology consists of four phases.

- Requirements Planning. This phase is similar to the preliminary analysis, system analysis, and design phases of the SDLC. In this phase the overall requirements for the system are defined, a team is identified, and feasibility is determined.

- User Design. In the user design phase representatives of the users work with the system analysts, designers, and programmers to interactively create the design of the system. Sometimes a Joint Application Development (JAD) session is used to facilitate working with all of these various stakeholders. A JAD session brings all of the stakeholders together for a structured discussion about the design of the system. Application developers also participate and observe, trying to understand the essence of the requirements.

- Construction. In the construction phase the application developers, working with the users, build the next version of the system through an interactive process. Changes can be made as developers work on the program. This step is executed in parallel with the User Design step in an iterative fashion, making modifications until an acceptable version of the product is developed.