Learning center series

Travelling salesman problem explained

- Published on April 26, 2024

- by Oguzhan Uyar

- Last updated: 3 days ago

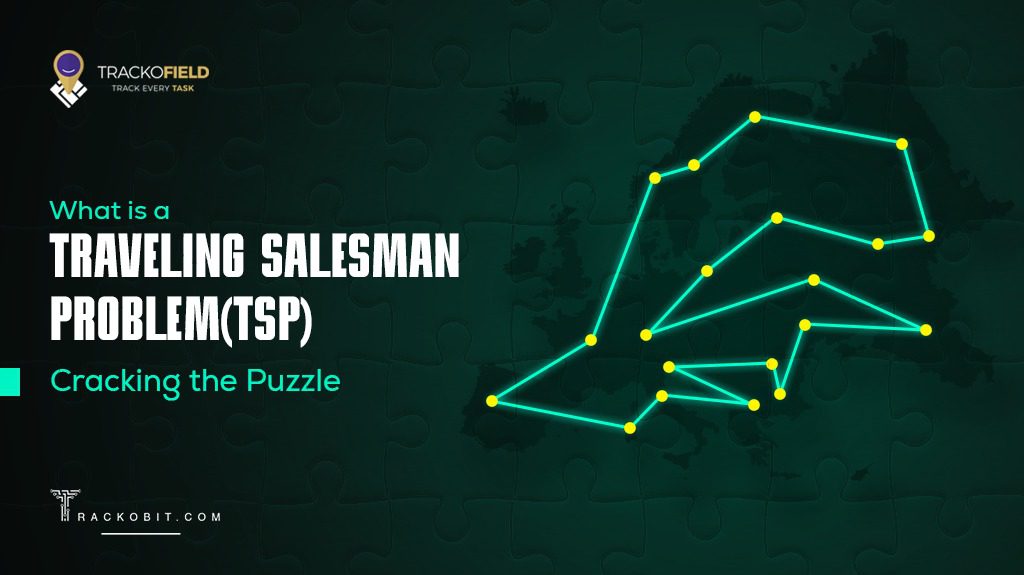

Revolutionize your understanding of the Travelling Salesman Problem (TSP), a mind-boggling conundrum that has occupied academia and industry alike. In the lines that follow, we decode the key concepts, algorithms, and solution approaches to this age-old dilemma as they stand in 2024.

Now, picture the TSP as a globetrotting salesman on a whirlwind journey. He must stop at every city exactly once, return to the city he started from, and do so by the shortest possible route. If that is daunting to visualize, consider this: with just 20 cities there are already about 6 × 10^16 distinct round trips (19!/2), far too many to check one by one.

Fathom the sheer magnitude?

So, what is the Travelling Salesman Problem, and why has it remained unsolved for years? Let’s snap together the puzzle of this notorious problem that spans mathematics, computer science, and beyond. Welcome to an insightful voyage into the astonishing world of the TSP.

Understanding the Travelling Salesman Problem (TSP): Key Concepts and Algorithms

Defining the travelling salesman problem: a comprehensive overview.

The Travelling Salesman Problem, often abbreviated as TSP, has a strong footing in computer science. To the untrained eye it looks like a puzzle: a salesperson must visit a given set of cities, each exactly once, and return to the city of origin, covering the shortest possible total distance. But this problem is not simply a conundrum for those fond of riddles; it holds immense significance in the broad field of computer science and optimization. Optimizing travel routes is the heart of the Travelling Salesman Problem: the goal is the shortest and most cost-effective journey for salespeople or travelers.

The sheer computational complexity of TSP is what sets it apart and, incidentally, why it is considered so hard to solve. Its complexity derives from the factorial nature of the problem: each new city multiplies the number of possible routes, so the total grows even faster than exponentially. As the problem grows, it swiftly becomes computationally prohibitive to enumerate every possible route in order to identify the shortest one. Consequently, developing effective and efficient algorithms for the TSP has become a priority in computational complexity research.

Polynomial-Time Approximation: No algorithm is known that solves every TSP instance exactly in polynomial time. For the metric TSP, where distances obey the triangle inequality, the Christofides algorithm runs in polynomial time and guarantees a tour at most 1.5 times the length of the optimal one.

One exact method is the dynamic programming approach, which is far faster than brute force but still takes exponential time. It uses a recursive formula to compute the shortest route that starts at the origin city, visits every other city exactly once, and returns to the start. Moreover, linear programming and approximation algorithms have also been used to find near-optimal solutions.

Unraveling TSP Algorithms: From Brute Force to Heuristics

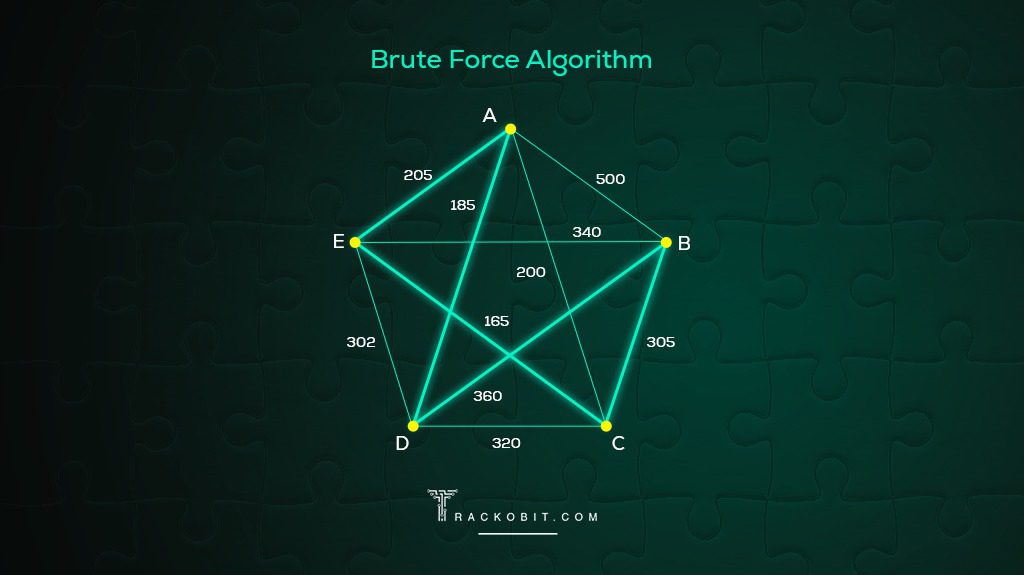

A multitude of algorithms have been developed to contend with the TSP. The brute force method, for example, considers every possible permutation of the cities, calculates the total distance along each route, and selects the shortest one. While brute force guarantees an optimal solution, its running time grows factorially with the number of cities, rendering it unfeasible for large datasets.

TSP Complexity: A TSP with just 10 cities has 362,880 possible routes from a fixed starting city (9!), and the count grows factorially, making brute force infeasible for larger instances.
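To make the growth concrete, here is a small sketch that counts round trips for a given number of cities. The function names are illustrative only; the sketch assumes every city is connected to every other city and that the starting city is fixed.

```python
from math import factorial

def directed_routes(n_cities: int) -> int:
    """Round trips from a fixed start, counting each direction separately."""
    return factorial(n_cities - 1)

def undirected_routes(n_cities: int) -> int:
    """Round trips when a route and its reverse count as the same tour."""
    return factorial(n_cities - 1) // 2

for n in (5, 10, 20):
    print(n, directed_routes(n), undirected_routes(n))
```

For 10 cities the first count is 362,880, matching the figure above; for 20 cities it is already above 10^17.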

On the other hand, we have heuristic algorithms that generate good, albeit not necessarily optimal, solutions in reasonable timeframes. The greedy (nearest neighbour) algorithm, for instance, starts from one city and repeatedly moves to the closest city not yet visited. It is fast, but it does not guarantee an optimal solution.

The 4/3 Conjecture: It is conjectured, but not proven, that a polynomial-time algorithm can always find a tour within 4/3 times the optimal length for the metric TSP.

Record Approximation for Graph TSP: An approximation guarantee of 1.4 times the optimal solution was achieved in September 2012 by András Sebő and Jens Vygen.

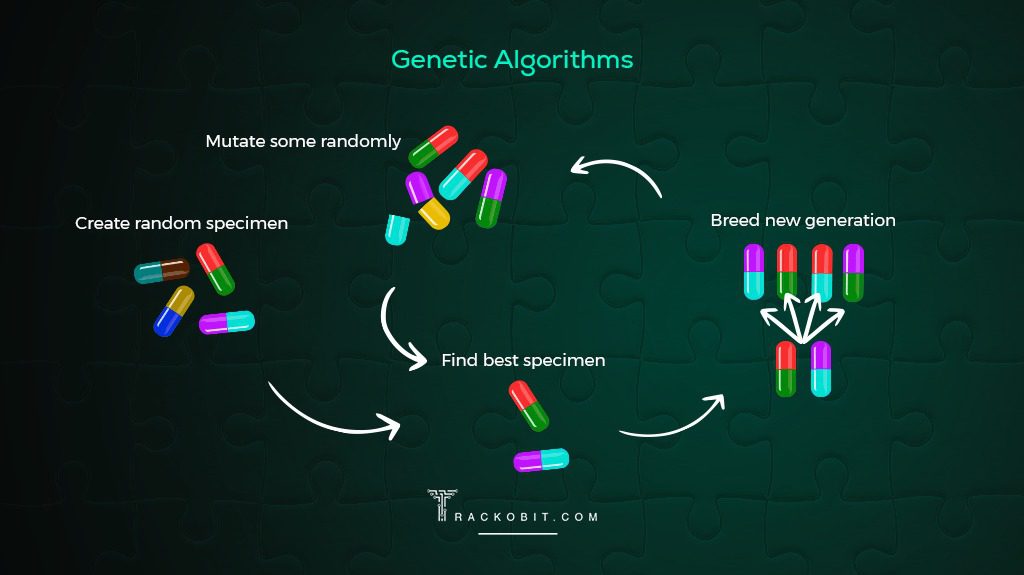

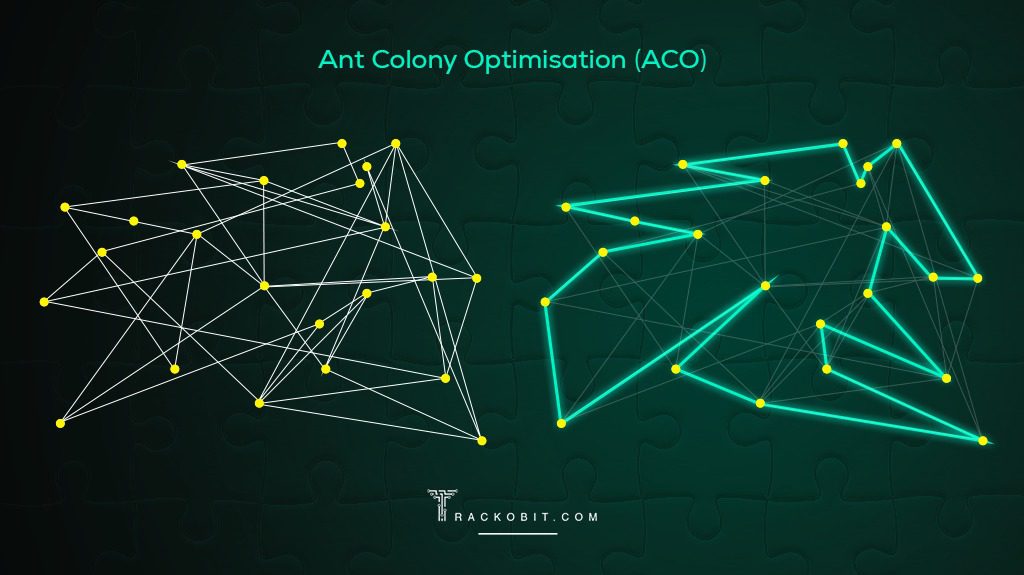

Exploring further, we encounter more refined heuristic solutions such as the genetic algorithm and simulated annealing algorithm. These algorithms deploy probabilistic rules, with the former incorporating principles of natural evolution and the latter being inspired by the cooling process in metallurgy. They differ significantly from each other in terms of how they search for solutions, but when compared to brute force, they often offer a promising trade-off between quality and computational effort.
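As a rough illustration of the simulated annealing idea, the sketch below repeatedly reverses a random segment of the current tour, always accepts improvements, and accepts worse tours with a probability that shrinks as the "temperature" falls. The random points, the cooling schedule, and all parameter values are assumptions chosen for the example, not tuned recommendations.

```python
import math
import random

def tour_length(tour, dist):
    """Length of the closed tour, including the leg back to the start."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))

def simulated_annealing(dist, steps=5000, t_start=10.0, t_end=0.01, seed=0):
    rng = random.Random(seed)
    n = len(dist)
    current = list(range(n))
    current_len = tour_length(current, dist)
    best, best_len = current[:], current_len
    for step in range(steps):
        # Geometric cooling from t_start down towards t_end.
        t = t_start * (t_end / t_start) ** (step / steps)
        # Propose a neighbour: reverse a random segment (city 0 stays first).
        i, j = sorted(rng.sample(range(1, n), 2))
        candidate = current[:i] + current[i:j + 1][::-1] + current[j + 1:]
        cand_len = tour_length(candidate, dist)
        delta = cand_len - current_len
        # Accept improvements always, worse tours with probability exp(-delta/t).
        if delta < 0 or rng.random() < math.exp(-delta / t):
            current, current_len = candidate, cand_len
            if current_len < best_len:
                best, best_len = current[:], current_len
    return best, best_len

# Hypothetical instance: 12 random points in the unit square.
rng = random.Random(1)
points = [(rng.random(), rng.random()) for _ in range(12)]
dist = [[math.dist(p, q) for q in points] for p in points]
best, best_len = simulated_annealing(dist)
print(best, round(best_len, 3))
```

The result is a good tour, not a guaranteed optimum; different seeds or schedules can give different answers.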

Certainly, the TSP is far more than a problem to puzzle over during a Sunday afternoon tea. It’s a complex computational task with real-world implications, and unraveling it requires more than brute force; it demands the application of sophisticated algorithms designed to balance efficiency and quality of results. Nevertheless, with a better understanding of the problem statement and its dynamics, you can unlock the potential to not just solve problems faster, but to do so more intelligently.

Practical Solutions to the Travelling Salesman Problem

Implementing TSP solutions: a step-by-step guide.

Developing complete, practical solutions for the Travelling Salesman Problem (TSP) requires a good grasp of specific algorithms. Visual aids, such as drawing all the edges leading out of a given city, can help to simplify the process. Enhance your ability to solve the Travelling Salesman Problem by mastering route optimization with Google Maps, streamlining your path and saving significant time on the go.

TSP's Computer Science Quest for Shortest Routes: The TSP involves finding the shortest route to visit a set of cities exactly once before returning to the starting city, aiming to minimize travel distance.

One popular approach to the TSP is dynamic programming, which breaks the problem down into smaller sub-problems, solves them recursively, and reuses their results. Other approaches include approximation algorithms, which generate near-optimal solutions in polynomial time, and branch-and-bound algorithms, which use bounds on the optimal solution to rule out parts of the search space.

TSP Variants: ATSP and STSP: The TSP can be divided into two types: the asymmetric traveling salesman problem (ATSP), where the cost of travelling between two cities can depend on the direction, and the symmetric traveling salesman problem (STSP), where it does not.

Practical code implementation

Before diving into code, it’s worth noting that practical implementation varies with the specifics of the problem and the chosen algorithm. Whatever the algorithm, an implementation has to represent each city as a node, store the distance between each pair of connected nodes, build up candidate routes node by node, and close each route by returning to the start. The points to concentrate on are readability, scalability, and execution efficiency.

TSP Instance Size: The size of the TSP instances used in the studies is 100 nodes.

Cluster Quantity in TSP Instances: The number of clusters in the TSP instances used in the studies is 10.

The Quantity of Instances per Cluster in Studies: The number of instances in each cluster used in the studies is 100.

Optimizing TSP Solutions: Tips and Tricks

TSP solution optimization techniques.

An optimized solution for the TSP is often accomplished using advanced algorithms and techniques. Optimization techniques allow professionals to solve more intricate problems, rendering them invaluable tools when handling large datasets and attempting to achieve the best possible solution. Several approaches can be taken, such as heuristic and metaheuristic approaches, that can provide near-optimal solutions with less use of resources.

Expert Advice for Maximum Efficiency Minimum Cost

Even the most proficient problem solvers can gain from learning expert tips and tricks. These nuggets of wisdom often provide the breakthrough needed to turn a good solution into a great one. The TSP is no exception. Peering into the realm of experts can provide novel and creative ways to save time, increase computation speed, manage datasets, and achieve the most efficient route.

By comprehending and implementing these solutions to the Travelling Salesman Problem, one can unravel the complex web of nodes and paths. This equips any problem-solver with invaluable insights that make navigating through real-world TSP applications much more manageable. By adopting sophisticated routing planner software, businesses can significantly streamline their delivery operations, ensuring a more predictable and cost-effective way of dealing with the challenges presented by the Travelling Salesman Problem.

Real-World Applications of the Travelling Salesman Problem

TSP in logistics and supply chain management.

The Travelling Salesman Problem (TSP) is not confined to theoretical mathematics or theoretical computer science; it shines in real-world applications too. One of its most potent applications resides in the field of logistics and supply chain management. Explore how the latest gratis route planning applications can revolutionize logistics and supply chain efficiency by uncovering the top 10 free software tools for 2024.

With globalization, the importance of more efficient routes for logistics and supply chain operations has risen dramatically. Optimizing routes and decreasing costs hold the key to success in this arena. Here’s where TSP comes into play. Discover how Planning for Multiple Stops can revolutionize your logistics, ensuring the most efficient paths are taken. Dive into our post to see how it streamlines operations and reduces expenses.

In the vast complexity of supply chain networks, routing can be a colossal task. TSP algorithms bring clarity to the chaos, eliminating redundant paths and pointing to the route that covers all the necessary points with the least total distance, leading to a drop in transportation costs and delivery times. Uncover how optimizing delivery routes can revolutionize your logistics, ensuring efficiency and cost-effectiveness in your delivery operations.

Real-World Examples

Consider the case of UPS – the multinational package delivery company. They’ve reportedly saved hundreds of millions of dollars yearly by implementing route optimization algorithms originating from the Travelling Salesman Problem. The solution, named ORION, helped UPS reduce the distance driven by their drivers roughly by 100 million miles annually.

TSP in GIS and Urban Planning: Optimal Route

TSP’s contributions to cities aren’t confined to logistics; it is invaluable in Geographic Information Systems (GIS) and urban planning as well. A city’s efficiency revolves around transportation. The better the transport networks and systems, the higher the city’s productivity. And as city authorities continuously strive to upgrade transportation systems, they find an ally in TSP. Discover how TSP enhances road architecture by integrating specialized truck routing solutions, elevating urban transport strategies.

Advanced GIS systems apply TSP to design more efficient routes and routing systems for public transportation. The aim is to reach the maximum number of locations with the least travel time and distance, ensuring essential amenities are accessible to everyone in the city.

The city of Singapore, known for its efficient public transportation system, owes part of its success to similar routing algorithms. The Land Transport Authority uses TSP-related solutions to plan bus routes, reducing travel durations and enhancing accessibility across the city.

Delving Deeper: The Complexity and History of the Travelling Salesman Problem

Understanding the complexity of TSP.

TSP is fascinating not just for its inherent practicality but also for the complexity it brings along. TSP belongs to a class of problems known as NP-hard, a category that houses some of the most complex problems in computer science. But what makes TSP so gnarled?

Unravel the Intricacies: Why is TSP complex?

TSP is a combinatorial optimization problem, which simply means that it’s all about finding the best solution among a vast number of possible solutions. The real challenge comes from an innocent-sounding feature of TSP: as the number of cities (we call them nodes) increases, the number of possible routes grows factorially, faster than any exponential and certainly not linearly. The count takes a dramatic upward turn with every node you add, clearly exemplifying why TSP is no walk-in-the-park problem.

NP-hard and TSP: A Connection Explored

In computer science, we use the terms P and NP for classifying problems. P stands for problems where a solution can be found in ‘polynomial time’. NP stands for ‘nondeterministic polynomial time’, which includes problems where a proposed solution can be verified in polynomial time. The concept of ‘NP-hardness’ applies to TSP. A problem is described as NP-hard if every problem in NP can be ‘reduced’ to it in polynomial time. In simpler terms, it’s at least as hard as the hardest problems in NP. TSP holds a famed position in this class, making it a noteworthy example of an NP-hard problem. Discovering efficient solutions for the Traveling Salesman Problem (TSP) exemplifies how optimizing routes can streamline complex logistical challenges, showcasing the practical impact of advancements in algorithmic route optimization.
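The "verified in polynomial time" part is easy to picture. For the yes/no version of TSP ("is there a tour costing at most some budget?"), checking a proposed tour takes only a quick pass over it, even though finding one may be hard. The sketch below uses a hypothetical 4-city distance matrix and an illustrative function name.

```python
def verify_tour(dist, tour, budget):
    """Check that `tour` visits every city exactly once and costs at most `budget`."""
    n = len(dist)
    if sorted(tour) != list(range(n)):
        return False  # a city is missing or repeated
    total = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))
    return total <= budget

# Hypothetical symmetric distances between 4 cities.
dist = [
    [0, 1, 4, 3],
    [1, 0, 2, 5],
    [4, 2, 0, 1],
    [3, 5, 1, 0],
]
print(verify_tour(dist, [0, 1, 2, 3], 7))  # True: 1 + 2 + 1 + 3 = 7
print(verify_tour(dist, [0, 1, 2, 3], 6))  # False: the tour costs 7
```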

A Look Back: The History of the Travelling Salesman Problem

TSP is not a new kid on the block. Its roots can be traced back to the 1800s, and the journey since then has been nothing short of a compelling tale of mathematical and computational advancements.

The Origins and Evolution of TSP

Believe it or not, problems closely related to the Travelling Salesman Problem were first formulated mathematically in the 1800s, way before the advent of modern computers. Mathematicians and logisticians were intrigued by the problem and its implications. However, it wasn’t until the mid-20th century that the problem started to gain more widespread attention. Over the years, the TSP has transformed from a theoretical model to a practical problem that helps solve real-world issues.

A Classic Shortest Route Problem Since 1930s: The TSP is a classic optimization problem within the field of operations research, first studied during the 1930s.

Milestones in TSP Research

Looking back at the milestones in TSP research, the story is truly impressive. From the early heuristic algorithms for smaller instances of TSP, to geometric methods and then approximation algorithms, TSP research has seen a lot. More recently, researchers have also begun to explore quantum computing approaches to TSP, a testament to how far we’ve come. Each of these milestones signifies an innovative shift in how we understand and solve TSP. Discovering the top delivery route planning software in 2024, we navigated through the advancements in TSP solutions to identify which applications excel in streamlining delivery logistics.

Wrapping Up The Journey With Algorithms and Solutions

The Travelling Salesman Problem (TSP) remains a complex enigma of business logistics, yet sophisticated algorithms and innovative solutions are paving the way to optimize routing, reduce travel costs further, and enhance customer interactions.

Navigating the TSP intricacies is no longer a daunting challenge but a worthwhile investment in refining operational efficiency and delivering unparalleled customer experiences. With advanced toolsets and intelligent systems, businesses are closer to making more informed, strategic decisions.

Now, it’s your turn. Reflect on your current logistics complexities. How can your business benefit from implementing these key concepts and algorithms? Consider where you could incorporate these practices to streamline and revolutionize your daily operations.

Remember, in the world of dynamic business operations, mastering the TSP is not just an option, but a strategic imperative. As you move forward into 2024, embrace the art of solving the TSP. Unravel the potential, decipher the complexities, and unlock new horizons for your business.

Ready for the challenge?


- Travelling salesman problem

The travelling salesman problem (often abbreviated to TSP) is a classic problem in graph theory. It has many applications in many fields. It also has quite a few different solutions.

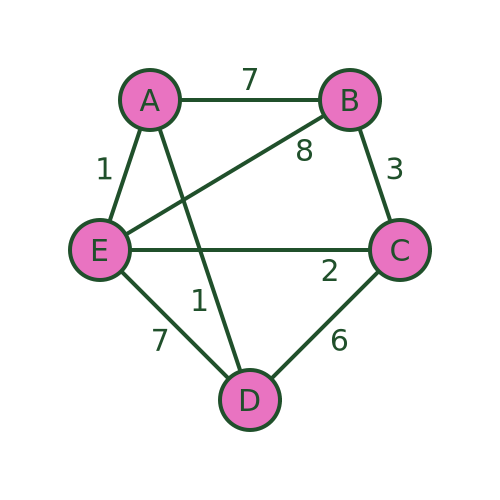

The problem

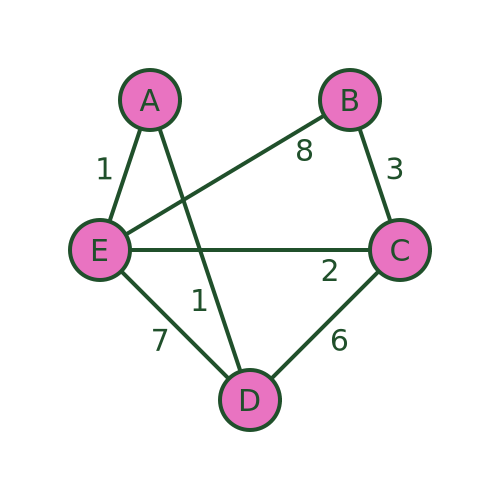

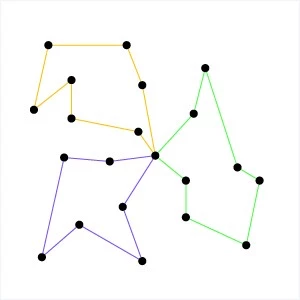

The problem is usually stated in terms of a salesman who needs to visit several towns before eventually returning to the starting point. There are various routes he could take, visiting the different towns in different orders. Here is an example:

There are several different routes that will visit every town. For example, we could visit the towns in the order A, B, C, D, E, then back to A. Or we could use the route A, D, C, E, B, then back to A.

But not all routes are possible. For example, we cannot use a route A, D, E, B, C and then back to A, because there is no road from C to A.

The aim is to find the route that visits all the towns with the minimum cost. The cost is shown as the weight of each edge in the graph. This might be the distance between the towns, or it might be some other measure. For example, it might be the time taken to travel between the towns, which might not be proportionate to the distance because some roads have lower speed limits or are more congested. Or, if the salesman was travelling by train, it might be the price of the tickets.
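One simple way to hold such a weighted graph in code is a dictionary of edges plus a helper that adds up the cost of a route. This is only a sketch: the edge weights are an assumption, inferred from the worked sums later in this article (for example ABCDEA = 7+3+6+7+1) rather than read from the figure, and the function names are illustrative.

```python
# Assumed edge weights for the example graph (inferred from the article's sums).
EDGES = {
    ("A", "B"): 7, ("A", "D"): 1, ("A", "E"): 1,
    ("B", "C"): 3, ("B", "E"): 8,
    ("C", "D"): 6, ("C", "E"): 2,
    ("D", "E"): 7,
}

def weight(a, b):
    """Cost of the road between a and b, or None if there is no road."""
    return EDGES.get((a, b), EDGES.get((b, a)))

def route_cost(route):
    """Total cost of a route such as "ABCDEA", or None if a road is missing."""
    total = 0
    for a, b in zip(route, route[1:]):
        w = weight(a, b)
        if w is None:
            return None
        total += w
    return total

print(route_cost("ABCDEA"))  # 24
print(route_cost("ADCEBA"))  # 24
print(route_cost("ADEBCA"))  # None, because there is no road from C to A
```

The weights could just as well be travel times or ticket prices; only the numbers in `EDGES` would change.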

The salesman can decide to optimise for whatever measure he considers to be most important.

Alternative applications and variants

TSP applies to any problem that involves visiting various places in sequence. One example is a warehouse, where various items need to be fetched from different parts of a warehouse to collect the items for an order. In the simplest case, where one person fetches all the items for a single order and then packs and dispatches the items, a TSP algorithm can be used. Of course, a different route would be required for each order, which would be generated by the ordering system.

Another interesting example is printed circuit board (PCB) drilling. A PCB is the circuit board you will find inside any computer or other electronic device. They often need holes drilled in them, including mounting holes where the board is attached to the case and holes where component wires need to pass through the board. These holes are usually drilled by a robot drill that moves across the board to drill each hole in the correct place. TSP can be used to calculate the optimum drilling order.

The TSP algorithm can be applied to directed graphs (where the distance from A to B might be different to the distance from B to A). Directed graphs can represent things like one-way streets (where the distance might not be the same in both directions) or flight costs (where the price of a single airline ticket might not be the same in both directions).

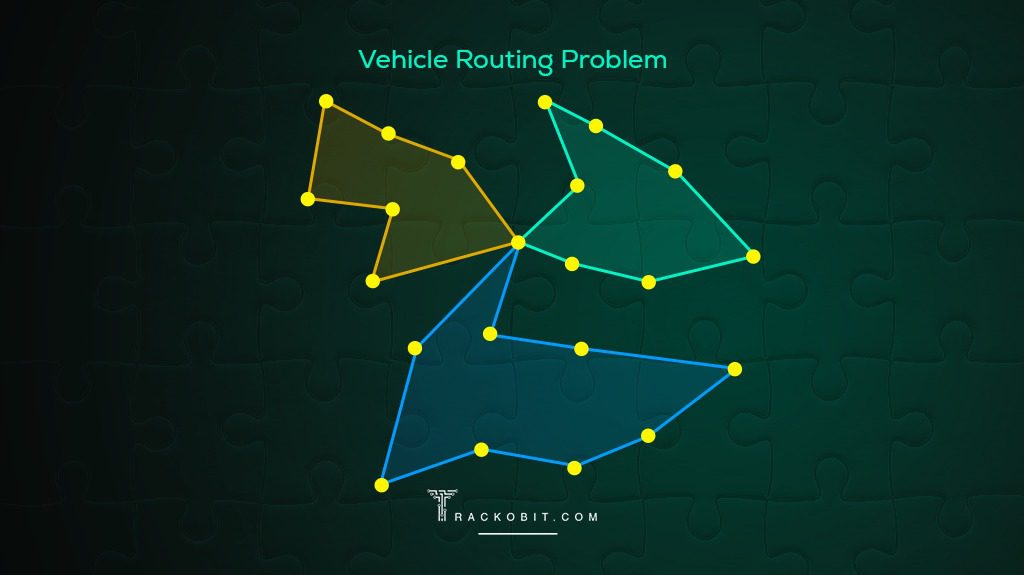

There are some variants of the TSP scenario. The mTSP problem covers the situation where there are several salesmen and each city must be visited by exactly one of them. This applies to deliveries, where there might be several delivery vans. The problem is to decide which parcels to place on each van and also to decide the route of each van.

The travelling purchaser problem is another variant. In that case, a purchaser needs to buy several items that are being sold in different places, potentially at different prices. The task is to purchase all the items, minimising the total cost (the cost of the items and the cost of travel). A simple approach would be to buy each item from the place where it is cheapest, in which case this becomes a simple TSP. However, it is not always worth buying every item from the cheapest place because sometimes the travel cost might outweigh the price difference.

In this article, we will only look at the basic TSP case.

Brute force algorithm

We will look at 3 potential algorithms here. There are many others. The first, and simplest, is the brute force approach.

We will assume that we start at vertex A. Since we intend to make a tour of the vertices (i.e. visit every vertex once and return to the original vertex), it doesn't matter which vertex we start at; the shortest loop will still be the same.

So we will start at A, visit nodes B, C, D, and E in some particular order, then return to A.

To find the shortest route, we will try every possible ordering of vertices B, C, D, E, and record the cost of each one. Then we can find the shortest.

For example, the ordering ABCDEA has a total cost of 7+3+6+7+1 = 24.

The ordering ABCEDA (i.e. swapping D and E) has a total cost of 7+3+2+7+1 = 20.

Some routes, such as ADEBCA, are impossible because a required road doesn't exist. We can just ignore those routes.

After evaluating every possible route, we are certain to find the shortest route (or routes, as several different routes may happen to have the same length that also happens to be the shortest length). In this case, the shortest route is AEBCDA with a total length of 1+8+3+6+1 = 19.
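The procedure above can be sketched in a few lines using `itertools.permutations`. The edge weights are an assumption inferred from the sums quoted in this section, missing roads are treated as infinitely long so that impossible routes are never chosen, and the function names are illustrative.

```python
from itertools import permutations

INF = float("inf")

# Assumed edge weights for the example graph (inferred from the article's sums).
EDGES = {
    ("A", "B"): 7, ("A", "D"): 1, ("A", "E"): 1,
    ("B", "C"): 3, ("B", "E"): 8,
    ("C", "D"): 6, ("C", "E"): 2,
    ("D", "E"): 7,
}

def dist(a, b):
    """Road length between a and b; infinite if there is no road."""
    return EDGES.get((a, b), EDGES.get((b, a), INF))

def brute_force(start="A", others="BCDE"):
    """Try every ordering of the other towns and keep the cheapest loop."""
    best_route, best_cost = None, INF
    for order in permutations(others):
        route = (start, *order, start)
        cost = sum(dist(a, b) for a, b in zip(route, route[1:]))
        if cost < best_cost:
            best_route, best_cost = "".join(route), cost
    return best_route, best_cost

print(brute_force())  # ('ADCBEA', 19)
```

Every loop appears twice in the search, once in each direction, so the route printed here is the same cost-19 loop as A, E, B, C, D, A travelled the other way round.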

The main problem with this algorithm is that it is very inefficient. In this example, since we have already decided that A is the start/end point, we must work out the visiting order for the 4 towns BCDE. We have 4 choices of the first town, 3 choices for the second town, 2 choices for the third town, and 1 choice for the fourth town. So there are 4! (factorial) combinations. That is only 24 combinations, which is no problem; you could even do it by hand.

If we had 10 towns (in addition to the home town) there would be 10! combinations, which is 3,628,800. Far too many to do by hand, but a modern PC might be able to do that in a fraction of a second if the algorithm was implemented efficiently.

If we had 20 towns, then the number of combinations would be about 2.4 times 10 to the power 18. If we assume a computer that could evaluate a billion routes per second (which a typical PC would struggle to do, at least at the time of writing), that would take about 2.4 billion seconds, which is roughly 77 years.

Of course, there are more powerful computers available, and maybe quantum computing will come into play soon, but that is only 20 towns. If we had 100 towns, the number of combinations would be around 10 to the power 157, and 1000 towns 10 to the power 2568. For some applications, it would be quite normal to have hundreds of vertices. The brute force method is impractical for all but the most trivial scenarios.
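These figures are easy to reproduce. The sketch below assumes the same rate of one billion routes per second used above; the constant and function names are illustrative.

```python
from math import factorial

RATE = 1_000_000_000                   # routes per second (assumed, as in the text)
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def years_needed(towns):
    """Years to evaluate every ordering of `towns` towns at RATE routes/second."""
    return factorial(towns) / RATE / SECONDS_PER_YEAR

def power_of_ten(towns):
    """Order of magnitude of towns! (number of digits minus one)."""
    return len(str(factorial(towns))) - 1

print(factorial(10))            # 3628800
print(round(years_needed(20)))  # 77
print(power_of_ten(100))        # 157
print(power_of_ten(1000))       # 2567
```

For 1000 towns the count is about 4 times 10 to the power 2567, which rounds to the 10 to the power 2568 quoted above.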

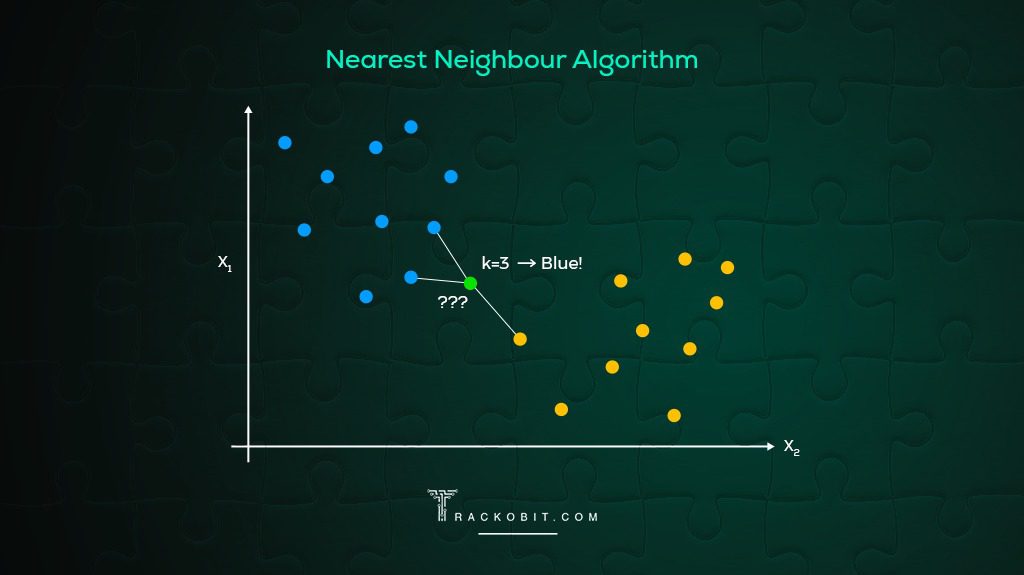

Nearest neighbour algorithm

The nearest neighbour algorithm is what we might call a naive attempt to find a good route with very little computation. The algorithm is quite simple and obvious, and it might seem like a good idea to someone who hasn't put very much thought into it. You start by visiting whichever town is closest to the starting point. Then each time you want to visit the next town, you look at the map and pick the closest town to wherever you happen to be (of course, you will ignore any towns that you have visited already). What could possibly go wrong?

Starting at A, we visit the nearest town that we haven't visited yet. In this case D and E are both distance 1 from A. We will (completely arbitrarily) always prioritise the connections in alphabetical order. So we pick D.

As an aside, if we had picked E instead we would get a different result. It might be better, but it is just as likely to be worse, so there is no reason to choose one over the other.

From D, the closest town is C with a distance of 6 (we can't pick A because we have already been there).

From C the closest town is E with distance 2. From E we have to go to B because we have already visited every other town; that is a distance of 8. And from B we must return to A with a distance of 7.

The final path is ADCEBA and the total distance is 1+6+2+8+7 = 24.
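The walk-through above can be sketched as follows. The edge weights are an assumption inferred from the distances quoted in this section, ties are broken alphabetically as in the text, and the function returns `None` if it gets stuck on a missing road.

```python
# Assumed edge weights for the example graph (inferred from the article's sums).
EDGES = {
    ("A", "B"): 7, ("A", "D"): 1, ("A", "E"): 1,
    ("B", "C"): 3, ("B", "E"): 8,
    ("C", "D"): 6, ("C", "E"): 2,
    ("D", "E"): 7,
}

def weight(a, b):
    """Road length between a and b, or None if there is no road."""
    return EDGES.get((a, b), EDGES.get((b, a)))

def nearest_neighbour(start="A", towns="ABCDE"):
    route, total = [start], 0
    # Sorted so that min() resolves ties in alphabetical order.
    unvisited = sorted(t for t in towns if t != start)
    while unvisited:
        here = route[-1]
        reachable = [t for t in unvisited if weight(here, t) is not None]
        if not reachable:
            return None  # stuck: no road to any unvisited town
        nxt = min(reachable, key=lambda t: weight(here, t))
        total += weight(here, nxt)
        route.append(nxt)
        unvisited.remove(nxt)
    back = weight(route[-1], start)
    if back is None:
        return None  # no road home from the last town
    return "".join(route) + start, total + back

print(nearest_neighbour())  # ('ADCEBA', 24)
```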

The path isn't the best, but it isn't terrible. This algorithm will often find a reasonable path, particularly if there is a natural shortest path. However, it can sometimes go badly wrong.

The basic problem is that the algorithm doesn't take account of the big picture. It just blindly stumbles from one town to whichever next town is closest.

In particular, the algorithm implicitly decides that the final step will be B to A. It does this based on the other distances, but without taking the distance BA into account. But what if, for example, there is a big lake between B and A that you have to drive all the way around? This might make the driving distance BA very large. A more sensible algorithm would avoid that road at all costs, but the nearest neighbour algorithm just blindly leads us there.

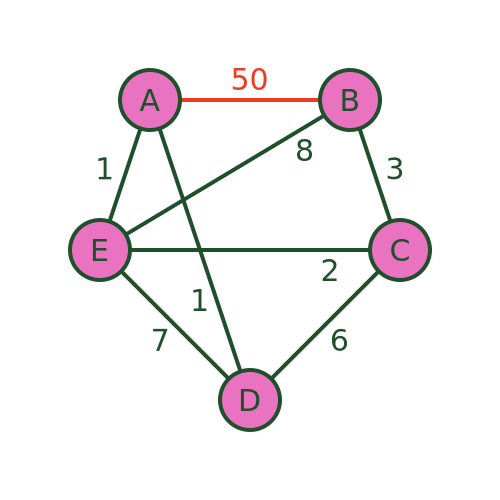

We can see this with this revised graph where BA has a distance of 50:

The algorithm will still recommend the same path because it never takes the distance BA into account. The path is still ADCEBA but the total distance is now 1+6+2+8+50 = 67. There are much better routes that avoid BA.

An even worse problem can occur if there is no road at all from B to A. The algorithm would still guide us to town B as the final visit. But in that case, it is impossible to get from B to A to complete the journey:
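To see how far off the nearest neighbour result is on the revised graph, the sketch below runs it alongside an exhaustive search. The edge weights are an assumption inferred from the distances quoted in this article, with BA raised to 50; missing roads are treated as infinitely long, and the function names are illustrative.

```python
from itertools import permutations

INF = float("inf")

# Assumed weights for the revised graph: B-A is now 50.
EDGES = {
    ("A", "B"): 50, ("A", "D"): 1, ("A", "E"): 1,
    ("B", "C"): 3, ("B", "E"): 8,
    ("C", "D"): 6, ("C", "E"): 2,
    ("D", "E"): 7,
}

def dist(a, b):
    return EDGES.get((a, b), EDGES.get((b, a), INF))

def cost(route):
    return sum(dist(a, b) for a, b in zip(route, route[1:]))

def nearest_neighbour(start, towns):
    route = [start]
    unvisited = sorted(t for t in towns if t != start)  # alphabetical tie-break
    while unvisited:
        nxt = min(unvisited, key=lambda t: dist(route[-1], t))
        unvisited.remove(nxt)
        route.append(nxt)
    return "".join(route) + start

def best_route(start, towns):
    others = [t for t in towns if t != start]
    candidates = (start + "".join(p) + start for p in permutations(others))
    return min(candidates, key=cost)

greedy = nearest_neighbour("A", "ABCDE")
optimal = best_route("A", "ABCDE")
print(greedy, cost(greedy))    # ADCEBA 67
print(optimal, cost(optimal))  # ADCBEA 19
```

Under these assumed weights the best loop never uses the road BA at all, so its cost is unaffected by the change, while the nearest neighbour route more than triples it.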

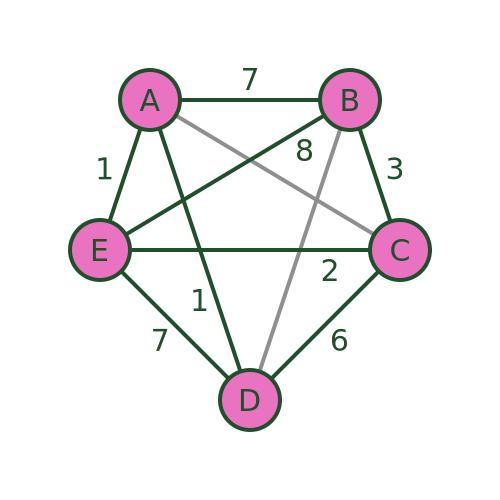

Bellman–Held–Karp algorithm

The Bellman–Held–Karp algorithm is a dynamic programming algorithm for solving TSP more efficiently than brute force. It is sometimes called the Held–Karp algorithm because it was discovered by Michael Held and Richard Karp, but it was also discovered independently by Richard Bellman at about the same time.

The algorithm assumes a complete graph (i.e. a graph where every vertex is connected to every other). However, it can be used with an incomplete graph like the one we have been using. To do this, we simply add the missing connections (shown below in grey) and assign them a very large distance (for example 1000). This ensures that the missing connections will never form part of the shortest path:

The technique works by incrementally calculating the shortest path for every possible set of 3 towns (starting from A), then for every possible set of 4 towns, and so on until it eventually calculates the shortest path that goes from A via every other town and back to A.

Because the algorithm stores its intermediate calculations and discards non-optimal paths as early as possible, it is much more efficient than brute force.

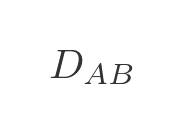

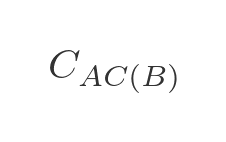

We will use the following notation. We will use this to indicate the distance between towns A and B:

And we will use this to indicate the cost (i.e. the total distance) from A to C via B:

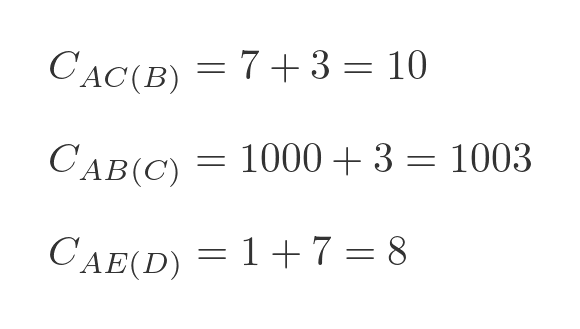

Here are some examples:

The first example is calculated by adding the distance AB to BC, which gives 10. The second example is calculated by adding AC to CB. But since there is no road from A to C, we give that a large dummy distance of 1000, so that total cost is 1003, which means it is never going to form a part of the shortest route. The third example is calculated by adding the distance AD to DE, which gives 8.

In the first step, we will calculate the value of every possible combination of 2 towns starting from A. There are 12 combinations: AB(C), AB(D), AB(E), AC(B), AC(D), AC(E), AD(B), AD(C), AD(E), AE(B), AE(C), AE(D).

These values will be stored for later use.

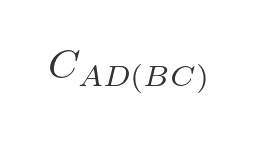

For step 2 we need to extend our notation slightly. We will use this to indicate the lowest cost from A to D via B and C (in either order):

To be clear, this could represent one of two paths, ABCD or ACBD, whichever is shorter. We can express this as a minimum of two values:

This represents the 2 ways to get from A to D via B and C:

- We can travel from A to C via B, and then from C to D.

- We can travel from A to B via C, and then from B to D.

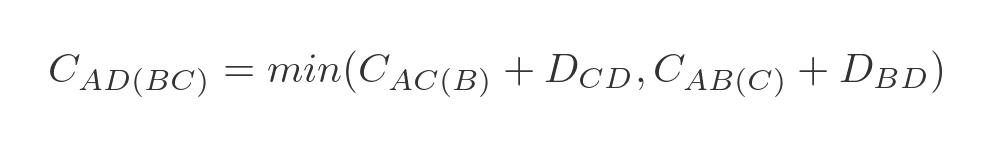

We have already calculated A to C via B (and all the other combinations) in step 1, so all we need to do is add the values and find the smallest. In this case:

- A to C via B is 10, C to D is 6, so the total is 16.

- A to B via C is 1003, B to D is 1000, so the total is 2003.

Clearly, ABCD is the best route.

We need to repeat this for every possible combination of travelling from A to x via y and z. There are, again, 12 combinations: AB(CD), AB(CE), AB(DE), AC(BD), AC(BE), AC(DE), AD(BC), AD(BE), AD(CE), AE(BC), AE(BD), AE(CD).

We store these values, along with the optimal path, for later use.

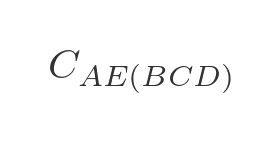

In the next step, we calculate the optimal route for travelling from A to any other town via 3 intermediate towns. For example, travelling from A to E via B, C, and D (in the optimal order) is written as:

There are 3 paths to do this:

- A to D via B and C, then from D to E.

- A to C via B and D, then from C to E.

- A to B via C and D, then from B to E.

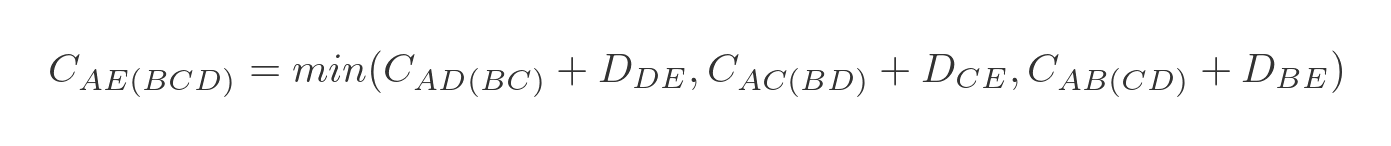

We will choose the shortest of these three paths:

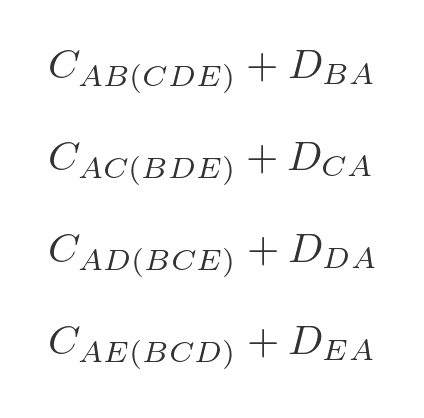

Again we have already calculated all the optimal intermediate paths. This time there are only 4 combinations we need to calculate: AB(CDE) , AC(BDE) , AD(BCE) , and AE(BCD) .

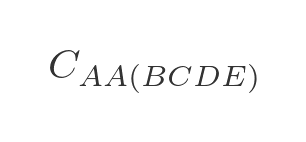

We are now in a position to calculate the optimal route for travelling from A back to A via all 4 other towns.

We need to evaluate each of the 4 options in step 3, adding on the extra distance to get back to A: AB(CDE) + BA, AC(BDE) + CA, AD(BCE) + DA, and AE(BCD) + EA.

We can then choose the option that has the lowest total cost, and that will be the optimal route.
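The stages above can be sketched in Python as follows. This is a minimal illustration rather than an optimised implementation. The distances AB = 7, BC = 3, CD = 6 and the dummy value 1000 for the missing roads A-C and B-D are consistent with the figures quoted in the text; every other distance, and the names `DIST`, `d` and `held_karp`, are assumptions made for the example.

```python
from itertools import combinations

# AB, BC, CD and the dummy 1000 for A-C and B-D follow the text.
# AD + DE = 8 follows the text, but the 5/3 split and all other values are hypothetical.
BIG = 1000
DIST = {
    ("A", "B"): 7, ("A", "C"): BIG, ("A", "D"): 5, ("A", "E"): 9,
    ("B", "C"): 3, ("B", "D"): BIG, ("B", "E"): 4,
    ("C", "D"): 6, ("C", "E"): 8,
    ("D", "E"): 3,
}

def d(x, y):
    """Symmetric lookup of the distance between two towns."""
    return DIST[(x, y)] if (x, y) in DIST else DIST[(y, x)]

def held_karp(start, others):
    # best[(end, via)] = lowest cost from start to end through every town in via
    best = {(town, frozenset()): d(start, town) for town in others}
    for size in range(1, len(others)):
        for via in combinations(others, size):
            via = frozenset(via)
            for end in others:
                if end in via:
                    continue
                # Reuse the stored results for the smaller sets of towns
                best[(end, via)] = min(
                    best[(last, via - {last})] + d(last, end) for last in via
                )
    everything = frozenset(others)
    # Final stage: add the distance back to the start and keep the cheapest option
    total = min(best[(last, everything - {last})] + d(last, start) for last in others)
    return total, best

total, best = held_karp("A", ["B", "C", "D", "E"])
print(best[("C", frozenset("B"))])    # AC(B): 10
print(best[("D", frozenset("BC"))])   # AD(BC): 16
print(total)                          # cost of the best round trip for this table
```

The dictionary key `(end, via)` plays the role of the notation AD(BC): the end town plus the set of towns passed through on the way.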

Performance

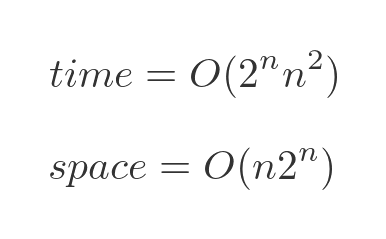

The performance of the Bellman–Held–Karp algorithm can be expressed in big-O notation as O(n^2 * 2^n).

The time performance is approximately exponential. As the number of towns increases, the time taken will go up exponentially: each time an extra town is added, the time taken will roughly double. We are ignoring the term in n squared because the exponential term is the most significant part.

Exponential growth is still quite bad, but it is far better than the factorial growth of the brute-force algorithm.

It is also important to notice that the algorithm requires memory to store the intermediate results, which also goes up approximately exponentially. The brute force algorithm doesn't have any significant memory requirements. However, that is usually an acceptable trade-off for a much better time performance.
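To get a feel for the difference between the two growth rates, the short sketch below prints both counts for a few sizes. It assumes the brute-force search fixes the starting town and so examines (n - 1)! routes, and it uses n^2 * 2^n as a rough step count for Bellman–Held–Karp; the function names are made up for the example.

```python
from math import factorial

def brute_force_routes(n):
    """Routes examined by brute force when the starting town is fixed."""
    return factorial(n - 1)

def held_karp_steps(n):
    """Rough step count n^2 * 2^n for the Bellman-Held-Karp algorithm."""
    return n * n * 2 ** n

for n in (5, 10, 15, 20):
    print(n, brute_force_routes(n), held_karp_steps(n))
```

For very small n the factorial count is actually the smaller of the two, but by 20 towns it is larger by many orders of magnitude.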

The stages above describe how the algorithm works at a high level. We haven't gone into great detail about exactly how the algorithm keeps track of the various stages. Over the years since its discovery, a lot of work has been put into optimising the implementation. Optimising the implementation won't change the time complexity, which will still be roughly exponential. However, it might make the algorithm run several times faster than a poor implementation.

We won't cover this in detail, but if you ever need to use this algorithm for a serious purpose, it is worth considering using an existing, well-optimised implementation rather than trying to write it yourself. Unless you are just doing it for fun!

Other algorithms

Even the Bellman–Held–Karp algorithm has poor performance for modestly large numbers of towns. 100 towns, for example, would be well beyond the capabilities of a modern PC.

There are various heuristic algorithms that are capable of finding a good solution, but not necessarily the best possible solution, in a reasonable amount of time. I hope to cover these in a future article.


12.10: Traveling Salesperson Problem


Learning Objectives

After completing this section, you should be able to:

- Distinguish between brute force algorithms and greedy algorithms.

- List all distinct Hamilton cycles of a complete graph.

- Apply brute force method to solve traveling salesperson applications.

- Apply nearest neighbor method to solve traveling salesperson applications.

We looked at Hamilton cycles and paths in the previous sections Hamilton Cycles and Hamilton Paths. In this section, we will analyze Hamilton cycles in complete weighted graphs to find the shortest route to visit a number of locations and return to the starting point. Besides the many routing applications in which we need the shortest distance, there are also applications in which we search for the route that is least expensive or takes the least time. Here are a few less common applications that you can read about on a website set up by the mathematics department at the University of Waterloo in Ontario, Canada:

- Design of fiber optic networks

- Minimizing fuel expenses for repositioning satellites

- Development of semi-conductors for microchips

- A technique for mapping mammalian chromosomes in genome sequencing

Before we look at approaches to solving applications like these, let's discuss the two types of algorithms we will use.

Brute Force and Greedy Algorithms

An algorithm is a sequence of steps that can be used to solve a particular problem. We have solved many problems in this chapter, and the procedures that we used were different types of algorithms. In this section, we will use two common types of algorithms, a brute force algorithm and a greedy algorithm . A brute force algorithm begins by listing every possible solution and applying each one until the best solution is found. A greedy algorithm approaches a problem in stages, making the apparent best choice at each stage, then linking the choices together into an overall solution which may or may not be the best solution.

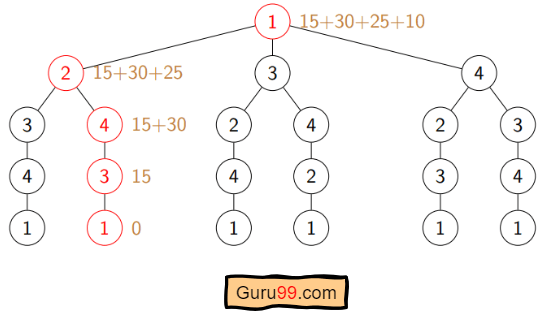

To understand the difference between these two algorithms, consider the tree diagram in Figure 12.214. Suppose we want to find the path from left to right with the largest total sum. For example, branch A in the tree diagram has a sum of 10 + 2 + 11 + 13 = 36.

To be certain that you pick the branch with greatest sum, you could list each sum from each of the different branches:

A: 10 + 2 + 11 + 13 = 36

B: 10 + 2 + 11 + 8 = 31

C: 10 + 2 + 15 + 1 = 28

D: 10 + 2 + 15 + 6 = 33

E: 10 + 7 + 3 + 20 = 40

F: 10 + 7 + 3 + 14 = 34

G: 10 + 7 + 4 + 11 = 32

H: 10 + 7 + 4 + 5 = 26

Then we know with certainty that branch E has the greatest sum.

Now suppose that you wanted to find the branch with the highest value, but you only were shown the tree diagram in phases, one step at a time.

After phase 1, you would have chosen the branch with 10 and 7. So far, you are following the same branch. Let’s look at the next phase.

After phase 2, based on the information you have, you will choose the branch with 10, 7 and 4. Now, you are following a different branch than before, but it is the best choice based on the information you have. Let’s look at the last phase.

After phase 3, you will choose branch G which has a sum of 32.

The process of adding the values on each branch and selecting the highest sum is an example of a brute force algorithm because all options were explored in detail. The process of choosing the branch in phases, based on the best choice at each phase is a greedy algorithm. Although a brute force algorithm gives us the ideal solution, it can take a very long time to implement. Imagine a tree diagram with thousands or even millions of branches. It might not be possible to check all the sums. A greedy algorithm, on the other hand, can be completed in a relatively short time, and generally leads to good solutions, but not necessarily the ideal solution.
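The two strategies can be compared in a few lines of Python. This is a small sketch in which the tree is encoded as nested `(value, children)` tuples whose values are read off the branch sums A to H listed above; the encoding and the function names are choices made for the example.

```python
# Each node is (value, children); values come from the sums for branches A to H.
TREE = (10, [
    (2, [(11, [(13, []), (8, [])]),
         (15, [(1, []), (6, [])])]),
    (7, [(3, [(20, []), (14, [])]),
         (4, [(11, []), (5, [])])]),
])

def brute_force_best(node):
    """Explore every branch and return the largest left-to-right sum."""
    value, children = node
    if not children:
        return value
    return value + max(brute_force_best(child) for child in children)

def greedy_sum(node):
    """At each phase follow the child with the larger value, never looking ahead."""
    value, children = node
    total = value
    while children:
        value, children = max(children, key=lambda child: child[0])
        total += value
    return total

print(brute_force_best(TREE))  # 40, branch E
print(greedy_sum(TREE))        # 32, branch G
```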

Example 12.42

Distinguishing between brute force and greedy algorithms.

A cashier rings up a sale for $4.63 in U.S. currency. The customer pays with a $5 bill. The cashier would like to give the customer $0.37 in change using the fewest coins possible. The coins that can be used are quarters ($0.25), dimes ($0.10), nickels ($0.05), and pennies ($0.01). The cashier starts by selecting the coin of highest value less than or equal to $0.37, which is a quarter. This leaves $0.37 − $0.25 = $0.12. The cashier selects the coin of highest value less than or equal to $0.12, which is a dime. This leaves $0.12 − $0.10 = $0.02. The cashier selects the coin of highest value less than or equal to $0.02, which is a penny. This leaves $0.02 − $0.01 = $0.01. The cashier selects the coin of highest value less than or equal to $0.01, which is a penny. This leaves no remainder. The cashier used one quarter, one dime, and two pennies, which is four coins. Use this information to answer the following questions.

- Is the cashier’s approach an example of a greedy algorithm or a brute force algorithm? Explain how you know.

- The cashier’s solution is the best solution. In other words, four is the fewest number of coins possible. Is this consistent with the results of an algorithm of this kind? Explain your reasoning.

- The approach the cashier used is an example of a greedy algorithm, because the problem was approached in phases and the best choice was made at each phase. Also, it is not a brute force algorithm, because the cashier did not attempt to list out all possible combinations of coins to reach this conclusion.

- Yes, it is consistent. A greedy algorithm does not always yield the best result, but sometimes it does.
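The cashier's procedure can be written as a short Python function. This is a minimal sketch that works in cents to avoid rounding problems; the function name `greedy_change` and the default coin tuple are choices made for the example.

```python
def greedy_change(amount_cents, coins=(25, 10, 5, 1)):
    """Repeatedly take the largest coin that does not exceed the remaining amount."""
    used = []
    for coin in coins:  # coins must be listed from largest to smallest
        while amount_cents >= coin:
            used.append(coin)
            amount_cents -= coin
    return used

print(greedy_change(37))  # [25, 10, 1, 1]
```

With a different, hypothetical set of coins the greedy choice can miss the best answer: `greedy_change(6, coins=(4, 3, 1))` returns three coins, `[4, 1, 1]`, although two coins of value 3 would do.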


The traveling salesperson problem.

Now let’s focus our attention on the graph theory application known as the traveling salesperson problem (TSP) in which we must find the shortest route to visit a number of locations and return to the starting point.

Recall from Hamilton Cycles, the officer in the U.S. Air Force who is stationed at Vandenberg Air Force base and must drive to visit three other California Air Force bases before returning to Vandenberg. The officer needed to visit each base once. We looked at the weighted graph in Figure 12.219 representing the four U.S. Air Force bases: Vandenberg, Edwards, Los Angeles, and Beal and the distances between them.

Any route that visits each base and returns to the start would be a Hamilton cycle on the graph. If the officer wants to travel the shortest distance, this will correspond to a Hamilton cycle of lowest weight. We saw in Table 12.11 that there are six distinct Hamilton cycles (directed cycles) in a complete graph with four vertices, but some lie on the same cycle (undirected cycle) in the graph.

a → b → c → d → a

a → b → d → c → a

a → c → b → d → a

a → d → c → b → a

a → c → d → b → a

a → d → b → c → a

Since the distance between bases is the same in either direction, it does not matter if the officer travels clockwise or counterclockwise. So, there are really only three possible distances as shown in Figure 12.220.

The possible distances are:

396 + 410 + 106 + 159 = 1071

207 + 410 + 439 + 159 = 1215

396 + 439 + 106 + 207 = 1148

So, a Hamilton cycle of least weight is V → B → E → L → V (or the reverse direction). The officer should travel from Vandenberg to Beal to Edwards, to Los Angeles, and back to Vandenberg.

Finding Weights of All Hamilton Cycles in Complete Graphs

Notice that we listed all of the Hamilton cycles and found their weights when we solved the TSP about the officer from Vandenberg. This is a skill you will need to practice. To make sure you don't miss any, you can calculate the number of possible Hamilton cycles in a complete graph. It is also helpful to know that half of the directed cycles in a complete graph are the same cycle in reverse direction, so, you only have to calculate half the number of possible weights, and the rest are duplicates.

In a complete graph with n vertices,

- The number of distinct Hamilton cycles is (n − 1)!.

- There are at most (n − 1)!/2 different weights of Hamilton cycles.

TIP! When listing all the distinct Hamilton cycles in a complete graph, you can start them all at any vertex you choose. Remember, the cycle a → b → c → a is the same cycle as b → c → a → b so there is no need to list both.

Example 12.43

Calculating possible weights of hamilton cycles.

Suppose you have a complete weighted graph with vertices N, M, O , and P .

- Use the formula (n − 1)! to calculate the number of distinct Hamilton cycles in the graph.

- Use the formula (n − 1)!/2 to calculate the greatest number of different weights possible for the Hamilton cycles.

- Are all of the distinct Hamilton cycles listed here? How do you know? Cycle 1: N → M → O → P → N; Cycle 2: N → M → P → O → N; Cycle 3: N → O → M → P → N; Cycle 4: N → O → P → M → N; Cycle 5: N → P → M → O → N; Cycle 6: N → P → O → M → N.

- Which pairs of cycles must have the same weights? How do you know?

- There are 4 vertices; so, n = 4. This means there are (n − 1)! = (4 − 1)! = 3 ⋅ 2 ⋅ 1 = 6 distinct Hamilton cycles beginning at any given vertex.

- Since n = 4, there are (n − 1)!/2 = (4 − 1)!/2 = 6/2 = 3 possible weights.

- Yes, they are all distinct cycles and there are 6 of them.

- Cycles 1 and 6 have the same weight, Cycles 2 and 4 have the same weight, and Cycles 3 and 5 have the same weight, because these pairs follow the same route through the graph but in reverse.
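The counting in this example can be checked with a short Python sketch. It fixes the first vertex as the start, lists every arrangement of the remaining vertices, and then groups each cycle with its reverse; the function name `hamilton_cycles` is made up for the illustration.

```python
from itertools import permutations
from math import factorial

def hamilton_cycles(vertices):
    """List every directed Hamilton cycle of a complete graph, starting at the first vertex."""
    start, *rest = vertices
    return [(start, *middle, start) for middle in permutations(rest)]

cycles = hamilton_cycles(["N", "M", "O", "P"])
print(len(cycles) == factorial(4 - 1))  # True: (n - 1)! = 6

# A cycle and its reverse share one weight, so keep one representative per pair.
undirected = {min(cycle, cycle[::-1]) for cycle in cycles}
print(len(undirected))                  # 3
```

The cycles come out in the same order as Cycles 1 to 6 in the example, so the first and last entries of `cycles` are reverses of each other.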

TIP! When listing the possible cycles, ignore the vertex where the cycle begins and ends and focus on the ways to arrange the letters that represent the vertices in the middle. Using a systematic approach is best; for example, if you must arrange the letters M, O, and P, first list all those arrangements beginning with M, then beginning with O, and then beginning with P, as we did in Example 12.43.


The brute force method.

The method we have been using to find a Hamilton cycle of least weight in a complete graph is a brute force algorithm, so it is called the brute force method . The steps in the brute force method are:

Step 1: Calculate the number of distinct Hamilton cycles and the number of possible weights.

Step 2: List all possible Hamilton cycles.

Step 3: Find the weight of each cycle.

Step 4: Identify the Hamilton cycle of lowest weight.
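These steps can be sketched in Python using the four bases from the Vandenberg example. The six distances below are read from the three sums listed for that example; the names `DIST`, `cycle_weight` and `brute_force_tsp` are choices made for the illustration.

```python
from itertools import permutations

# V = Vandenberg, B = Beal, E = Edwards, L = Los Angeles
DIST = {
    frozenset("VB"): 396, frozenset("VE"): 207, frozenset("VL"): 159,
    frozenset("BE"): 410, frozenset("BL"): 439, frozenset("EL"): 106,
}

def cycle_weight(cycle):
    """Step 3: add the weights of consecutive edges along the cycle."""
    return sum(DIST[frozenset(pair)] for pair in zip(cycle, cycle[1:]))

def brute_force_tsp(start, others):
    # Step 2: list every Hamilton cycle that begins and ends at the start vertex
    cycles = [(start, *middle, start) for middle in permutations(others)]
    # Step 4: keep a cycle of lowest weight
    return min(cycles, key=cycle_weight)

best = brute_force_tsp("V", "BEL")
print(best, cycle_weight(best))  # ('V', 'B', 'E', 'L', 'V') 1071
```

Storing each distance under a `frozenset` of its two endpoints means the same entry is found in either direction of travel.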

Example 12.44

Applying the brute force method.

On the next assignment, the air force officer must leave from Travis Air Force base, visit Beal, Edwards, and Vandenberg Air Force bases each exactly once and return to Travis Air Force base. There is no need to visit Los Angeles Air Force base. Use Figure 12.221 to find the shortest route.

Step 1: Since there are 4 vertices, there will be (4 − 1)! = 3! = 6 cycles, but half of them will be the reverse of the others; so, there will be (4 − 1)!/2 = 6/2 = 3 possible distances.

Step 2: List all the Hamilton cycles in the subgraph of the graph in Figure 12.222.

To find the 6 cycles, focus on the three vertices in the middle, B, E, and V . The arrangements of these vertices are BEV, BVE, EBV, EVB, VBE , and VEB . These would correspond to the 6 cycles:

1: T → B → E → V → T

2: T → B → V → E → T

3: T → E → B → V → T

4: T → E → V → B → T

5: T → V → B → E → T

6: T → V → E → B → T

Step 3: Find the weight of each cycle. You can reduce your work by observing the cycles that are reverses of each other.

1: 84 + 410 + 207 + 396 = 1097

2: 84 + 396 + 207 + 370 = 1057

3: 370 + 410 + 396 + 396 = 1572

4: Reverse of cycle 2, 1057

5: Reverse of cycle 3, 1572

6: Reverse of cycle 1, 1097

Step 4: Identify a Hamilton cycle of least weight.

The second path, T → B → V → E → T , and its reverse, T → E → V → B → T , have the least weight. The solution is that the officer should travel from Travis Air Force base to Beal Air Force Base, to Vandenberg Air Force base, to Edwards Air Force base, and return to Travis Air Force base, or the same route in reverse.


Now suppose that the officer needed a cycle that visited all 5 of the Air Force bases in Figure 12.221. There would be (5 − 1)! = 4! = 4 × 3 × 2 × 1 = 24 different arrangements of vertices and (5 − 1)!/2 = 4!/2 = 24/2 = 12 distances to compare using the brute force method. If you consider 10 Air Force bases, there would be (10 − 1)! = 9! = 9 ⋅ 8 ⋅ 7 ⋅ 6 ⋅ 5 ⋅ 4 ⋅ 3 ⋅ 2 ⋅ 1 = 362,880 different arrangements and (10 − 1)!/2 = 9!/2 = 362,880/2 = 181,440 distances to consider. There must be another way!

The Nearest Neighbor Method

When the brute force method is impractical for solving a traveling salesperson problem, an alternative is a greedy algorithm known as the nearest neighbor method, which always visits the closest or least costly place first. This method finds a Hamilton cycle of relatively low weight in a complete graph in which, at each phase, the next vertex is chosen by comparing the edges between the current vertex and the remaining vertices to find the lowest weight. Since the nearest neighbor method is a greedy algorithm, it usually doesn’t give the best solution, but it usually gives a solution that is "good enough." Most importantly, the number of steps will be the number of vertices. That’s right! A problem with 10 vertices requires 10 steps, not 362,880. Let’s look at an example to see how it works.

Suppose that a candidate for governor wants to hold rallies around the state. They plan to leave their home in city A , visit cities B, C, D, E , and F each once, and return home. The airfare between cities is indicated in the graph in Figure 12.223.

Let’s help the candidate keep costs of travel down by applying the nearest neighbor method to find a Hamilton cycle that has a reasonably low weight. Begin by marking the starting vertex A as V1 (Figure 12.224) and comparing the weights of the edges between A and the remaining vertices. The lowest of these is $100, which is the edge between A and F.

Mark F as V2 (Figure 12.225) and compare the weights of the edges between F and the vertices that remain to be visited. The lowest of these is $150, which is the edge between F and D.

Mark D as V3 (Figure 12.226) and compare the weights of the edges between D and the vertices that remain to be visited. The lowest of these is $120, which is the edge between D and B.

So, mark B as V4 (Figure 12.227). Only two vertices, C and E, remain to be visited. The lower amount is $160, which is the edge between B and E.

Now you can mark E as V5 (Figure 12.228). The only vertex that remains to be visited is C. Make a note of the weight of the edge from E to C, which is $180, and from C back to A, which is $210.

The Hamilton cycle we found is A → F → D → B → E → C → A. The weight of the circuit is $100 + $150 + $120 + $160 + $180 + $210 = $920. This may or may not be the route with the lowest cost, but there is a good chance it is very close since the weights are most of the lowest weights on the graph and we found it in six steps instead of finding 120 different Hamilton cycles and calculating 60 weights. Let’s summarize the procedure that we used.

Step 1: Select the starting vertex and label V1 for "visited 1st." Identify the edge of lowest weight between V1 and the remaining vertices.

Step 2: Label the vertex at the end of the edge of lowest weight that you found in previous step as Vn where the subscript n indicates the order the vertex is visited. Identify the edge of lowest weight between Vn and the vertices that remain to be visited.

Step 3: If vertices remain that have not been visited, repeat Step 2. Otherwise, a Hamilton cycle of low weight is V1 → V2 → ⋯ → Vn → V1.
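The procedure can be sketched in Python as follows. Because the full airfare graph is only given in the figure, this illustration instead uses the distances between the four Air Force bases from the Vandenberg example earlier in the section; the names `DIST` and `nearest_neighbor` are choices made for the example.

```python
# V = Vandenberg, B = Beal, E = Edwards, L = Los Angeles
DIST = {
    frozenset("VB"): 396, frozenset("VE"): 207, frozenset("VL"): 159,
    frozenset("BE"): 410, frozenset("BL"): 439, frozenset("EL"): 106,
}

def nearest_neighbor(start, vertices):
    """Greedy tour: from the current vertex always move to the closest unvisited vertex."""
    tour = [start]
    unvisited = set(vertices) - {start}
    while unvisited:
        current = tour[-1]
        # Step 2: pick the edge of lowest weight to a vertex not yet visited
        nxt = min(unvisited, key=lambda v: DIST[frozenset((current, v))])
        tour.append(nxt)
        unvisited.remove(nxt)
    tour.append(start)  # Step 3: close the cycle by returning to the start
    weight = sum(DIST[frozenset(edge)] for edge in zip(tour, tour[1:]))
    return tour, weight

print(nearest_neighbor("V", "VBEL"))  # (['V', 'L', 'E', 'B', 'V'], 1071)
```

On this small graph the greedy tour happens to match the lowest of the three weights found for the Vandenberg example, 1071. That is a coincidence of the data, not something the method guarantees.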

Example 12.45

Using the nearest neighbor method.

Suppose that the candidate for governor wants to hold rallies around the state but time before the election is very limited. They would like to leave their home in city A , visit cities B , C , D , E , and F each once, and return home. The airfare between cities is not as important as the time of travel, which is indicated in Figure 12.229. Use the nearest neighbor method to find a route with relatively low travel time. What is the total travel time of the route that you found?

Step 1: Label vertex A as V1. The edge of lowest weight between A and the remaining vertices is 85 min between A and D.

Step 2: Label vertex D as V2. The edge of lowest weight between D and the vertices that remain to be visited, B, C, E, and F, is 70 min between D and F.

Repeat Step 2: Label vertex F as V3. The edge of lowest weight between F and the vertices that remain to be visited, B, C, and E, is 75 min between F and C.

Repeat Step 2: Label vertex C as V4. The edge of lowest weight between C and the vertices that remain to be visited, B and E, is 100 min between C and B.

Repeat Step 2: Label vertex B as V5. The only vertex that remains to be visited is E. The weight of the edge between B and E is 95 min.

Step 3: A Hamilton cycle of low weight is A → D → F → C → B → E → A. So, a route of relatively low travel time is A to D to F to C to B to E and back to A. The total travel time of this route is: 85 min + 70 min + 75 min + 100 min + 95 min + 90 min = 515 min, or 8 hrs 35 min.


Travelling Salesman Problem: Python, C++ Algorithm

What is the Travelling Salesman Problem (TSP)?

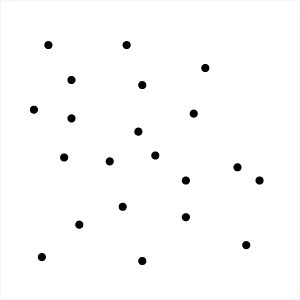

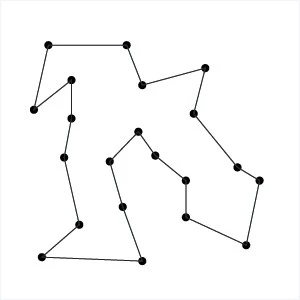

Travelling Salesman Problem (TSP) is a classic combinatorial optimization problem in theoretical computer science. The problem asks for the shortest route in a graph that visits every node exactly once and returns to the origin city.

The problem statement gives a list of cities along with the distances between each city.

Objective: To start from the origin city, visit other cities only once, and return to the original city again. Our target is to find the shortest possible path to complete the round-trip route.

Example of TSP

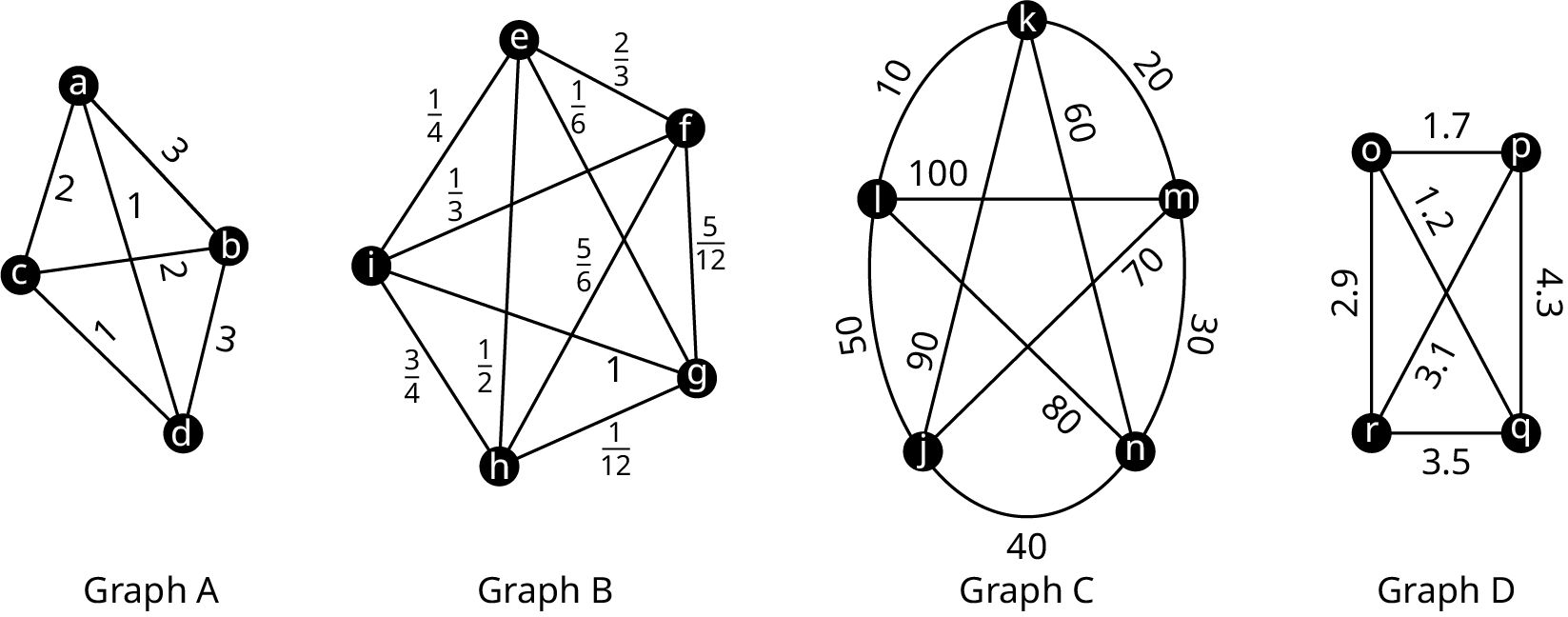

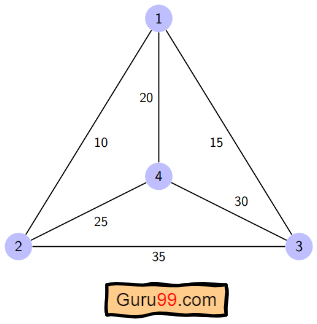

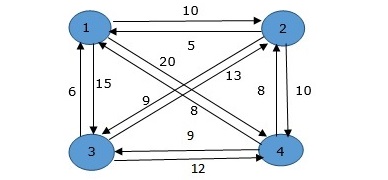

Here a graph is given where 1, 2, 3, and 4 represent the cities, and the weight associated with every edge represents the distance between those cities.

The goal is to find the shortest possible path for the tour that starts from the origin city, traverses the graph while only visiting the other cities or nodes once, and returns to the origin city.

For the above graph, the optimal route is to follow the minimum cost path: 1-2-4-3-1. And this shortest route would cost 10+25+30+15 =80

Different Solutions to Travelling Salesman Problem

Travelling Salesman Problem (TSP) is classified as an NP-hard problem, and no polynomial time algorithm for it is known. The running time of the known exact algorithms grows at least exponentially with the number of cities.

There are multiple ways to solve the traveling salesman problem (TSP). Some popular solutions are:

The brute force approach: This is the naive method for solving traveling salesman problems. In this approach, we first calculate all possible paths and then compare them. With a fixed starting city, the number of routes in a graph consisting of n cities is (n − 1)!, so it is computationally very expensive to solve the traveling salesman problem this way.

The branch-and-bound method: The problem is broken down into sub-problems in this approach, and sub-problems that cannot lead to a better route than the best one found so far are discarded. Solving the remaining sub-problems provides an optimal solution.

This tutorial will demonstrate a dynamic programming approach, which also breaks the problem into sub-problems, to solve the traveling salesman problem.

The dynamic programming approach: This method seeks an optimal solution by combining the solutions of smaller sub-problems instead of listing every route separately. It is one of the exact solution methods for the traveling salesman problem, at a higher computational cost than the greedy methods, which only provide a near-optimal solution.

The computational complexity of this approach is O(N^2 * 2^N), which is discussed later in this article.

The nearest neighbor method: This is a heuristic-based greedy approach where we always choose the nearest neighbor node. This approach is computationally less expensive than the dynamic programming approach, but it does not guarantee an optimal solution. This method is used for near-optimal solutions.

Algorithm for Traveling Salesman Problem

We will use the dynamic programming approach to solve the Travelling Salesman Problem (TSP).

Before starting the algorithm, let’s get acquainted with some terminologies:

- A graph G=(V, E), which is a set of vertices and edges.

- V is the set of vertices.

- E is the set of edges.

- Vertices are connected through edges.

- Dist(i,j) denotes the non-negative distance between two vertices, i and j.

Let’s assume S is a subset of the cities {1, 2, 3, …, n}, and i and j are two cities. Now cost(i, S, j) is defined as the length of the shortest path that starts at i, visits every node in S exactly once, and ends at j.

For example, cost (1, {2, 3, 4}, 1) denotes the length of the shortest path where:

- Starting city is 1

- Cities 2, 3, and 4 are visited only once

- The ending point is 1

The dynamic programming algorithm would be:

- Set cost(i, Φ, i) = 0, which means we start and end at i without visiting any other city, and the cost is 0.

- When |S| > 1, we define cost(i, S, 1) = ∞ where i != 1, because initially we do not know the exact cost of reaching city 1 from city i through the other cities.

- Now, we need to start at 1 and complete the tour. We need to select the next city k in such a way that:

cost(i, S, 1) = min over k∈S of [ dist(i, k) + cost(k, S−{k}, 1) ], with cost(i, Φ, 1) = dist(i, 1)

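For the given figure, the adjacency matrix would be the following (the entries match the dist values used in the calculation below):

|   | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 0 | 10 | 15 | 20 |
| 2 | 10 | 0 | 35 | 25 |
| 3 | 15 | 35 | 0 | 30 |
| 4 | 20 | 25 | 30 | 0 |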

Let’s see how our algorithm works:

Step 1) We are considering our journey starting at city 1, visit other cities once and return to city 1.

Step 2) S is the subset of cities. According to our algorithm, for all |S| > 1, we will set the distance cost(i, S, 1) = ∞. Here cost(i, S, 1) means we are starting at city i, visiting the cities of S once, and ending at city 1. We set this path cost to infinity because we do not know the distance yet. So the values will be the following:

cost(2, {3, 4}, 1) = ∞ ; the notation denotes we are starting at city 2, going through cities 3 and 4, and reaching city 1, and the path cost is infinity. Similarly:

cost(3, {2, 4}, 1) = ∞

cost(4, {2, 3}, 1) = ∞

Step 3) Now, for all subsets of S, we need to find the following:

cost(i, S, 1) = min over k∈S of [ dist(i, k) + cost(k, S−{k}, 1) ], where i∉S

That is the minimum cost of a path that starts at i, goes through every city in the subset once, and ends at city 1. Considering that the journey starts at city 1, the optimal path cost would be cost(1, {other cities}, 1).

Let’s find out how we could achieve that:

Now S = {1, 2, 3, 4}. There are four elements. Hence the number of subsets will be 2^4 or 16. Those subsets are-

1) |S| = 0:

{Φ}

2) |S| = 1:

{{1}, {2}, {3}, {4}}

3) |S| = 2:

{{1, 2}, {1, 3}, {1, 4}, {2, 3}, {2, 4}, {3, 4}}

4) |S| = 3:

{{1, 2, 3}, {1, 2, 4}, {2, 3, 4}, {1, 3, 4}}

5) |S| = 4:

{{1, 2, 3, 4}}

As we are starting at 1, we could discard the subsets containing city 1.

The algorithm calculation:

1) |S| = 0 (S = Φ):

cost (2, Φ, 1) = dist(2, 1) = 10

cost (3, Φ, 1) = dist(3, 1) = 15

cost (4, Φ, 1) = dist(4, 1) = 20

2) |S| = 1:

cost (2, {3}, 1) = dist(2, 3) + cost (3, Φ, 1) = 35+15 = 50

cost (2, {4}, 1) = dist(2, 4) + cost (4, Φ, 1) = 25+20 = 45

cost (3, {2}, 1) = dist(3, 2) + cost (2, Φ, 1) = 35+10 = 45

cost (3, {4}, 1) = dist(3, 4) + cost (4, Φ, 1) = 30+20 = 50

cost (4, {2}, 1) = dist(4, 2) + cost (2, Φ, 1) = 25+10 = 35

cost (4, {3}, 1) = dist(4, 3) + cost (3, Φ, 1) = 30+15 = 45

3) |S| = 2:

cost (2, {3, 4}, 1) = min [ dist(2, 3) + cost (3, {4}, 1) = 35+50 = 85,

dist(2, 4) + cost (4, {3}, 1) = 25+45 = 70 ] = 70

cost (3, {2, 4}, 1) = min [ dist(3, 2) + cost (2, {4}, 1) = 35+45 = 80,

dist(3, 4) + cost (4, {2}, 1) = 30+35 = 65 ] = 65

cost (4, {2, 3}, 1) = min [ dist(4, 2) + cost (2, {3}, 1) = 25+50 = 75,

dist(4, 3) + cost (3, {2}, 1) = 30+45 = 75 ] = 75

4) |S| = 3:

cost (1, {2, 3, 4}, 1) = min [ dist(1, 2) + cost (2, {3, 4}, 1) = 10+70 = 80,

dist(1, 3) + cost (3, {2, 4}, 1) = 15+65 = 80,

dist(1, 4) + cost (4, {2, 3}, 1) = 20+75 = 95 ] = 80

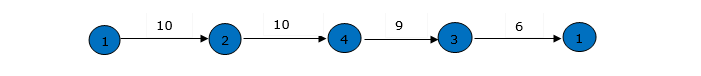

So the optimal solution would be 1-2-4-3-1

Pseudo-code

Implementation in c/c++.

Here’s the implementation in C++ :

Implementation in Python
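A minimal Python sketch of the dynamic programming approach described above is shown here; it is one possible way to write it, not a reference implementation. The distance matrix is taken from the dist values in the worked calculation, with index 0 standing for city 1. The function names `tsp` and `cost` and the use of `functools.lru_cache` for memoization are choices made for this example.

```python
from functools import lru_cache

# Distances between cities 1-4 (row/column 0 is city 1), from the worked calculation
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]

def tsp(dist):
    n = len(dist)

    @lru_cache(maxsize=None)
    def cost(i, remaining):
        """cost(i, S, 1): cheapest way to leave i, visit every city in S once, and end at city 1."""
        if not remaining:
            return dist[i][0]  # cost(i, empty set, 1) = dist(i, 1)
        # Try each city k in S as the next stop and keep the cheapest option
        return min(dist[i][k] + cost(k, remaining - {k}) for k in remaining)

    # Start at city 1 with every other city still to visit
    return cost(0, frozenset(range(1, n)))

print(tsp(DIST))  # 80
```

The set of remaining cities is passed as a `frozenset` so that it can be used as a cache key; each distinct (city, subset) pair is then solved only once.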

Academic solutions to tsp.

Computer scientists have spent years searching for a polynomial time algorithm for the Travelling Salesman Problem. So far, none has been found, and the problem remains NP-hard.

Nevertheless, several solutions published in recent years are reported to reduce the complexity to a certain degree:

- The classical symmetric TSP is solved by the Zero Suffix Method.

- The Biogeography‐based Optimization Algorithm is based on the migration strategy to solve the optimization problems that can be planned as TSP.

- Multi-Objective Evolutionary Algorithm is designed for solving multiple TSP based on NSGA-II.

- The Multi-Agent System solves the TSP of N cities with fixed resources.

Applications of the Travelling Salesman Problem

The Travelling Salesman Problem (TSP) is applied in the real world in both its purest and modified forms. Some of those applications are:

- Planning, logistics, and manufacturing microchips: Chip insertion problems naturally arise in the microchip industry. Those problems can be formulated as travelling salesman problems.

- DNA sequencing: A slight modification of the travelling salesman problem can be used in DNA sequencing. Here, the cities represent the DNA fragments, and the distance represents the similarity measure between two DNA fragments.

- Astronomy: Astronomers apply the Travelling Salesman Problem to minimize the time spent observing various sources.

- Optimal control problem: The Travelling Salesman Problem formulation can be applied in optimal control problems, possibly with several additional constraints.

Complexity Analysis of TSP

- Time Complexity: The dynamic programming approach has N * 2^N subproblems (one for each pair of a city and a subset of cities), and each subproblem takes O(N) time to solve. The total time complexity for an optimal solution is therefore O(N^2 * 2^N).

- Space Complexity: The dynamic programming approach uses memory to store C(S, i), where S is a subset of the vertex set and i is a city. There are up to 2^N subsets for each of the N nodes, so the space complexity is O(N * 2^N).
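To get a feel for these bounds, the short sketch below compares the number of distinct tours that a brute-force search would examine with the N^2 * 2^N step estimate of the dynamic programming approach. The function names are made up for this illustration, and the counts are rough operation estimates rather than measured running times.

```python
from math import factorial


def brute_force_tours(n):
    """Distinct tours of a symmetric n-city TSP with a fixed start: (n-1)!/2."""
    return factorial(n - 1) // 2


def dynamic_programming_steps(n):
    """Rough step estimate for the dynamic programming approach: n^2 * 2^n."""
    return n * n * 2 ** n


for n in (5, 10, 15, 20):
    print(n, brute_force_tours(n), dynamic_programming_steps(n))
```

For very small instances the dynamic programming estimate is the larger of the two, but by N = 10 the factorial count has already overtaken it, and the gap widens quickly after that.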

How to Solve the Traveling Salesman Problem

Submitted: 23 September 2020 Reviewed: 20 January 2021 Published: 09 February 2021

DOI: 10.5772/intechopen.96129

From the Edited Volume

Theory of Complexity - Definitions, Models, and Applications

Edited by Ricardo López-Ruiz

The Traveling Salesman Problem (TSP) is believed to be an intractable problem that has no practically efficient algorithm to solve it. The intrinsic difficulty of the TSP is associated with the combinatorial explosion of potential solutions in the solution space. When a TSP instance is large, the number of possible solutions in the solution space is so large as to forbid an exhaustive search for the optimal solutions. The seemingly “limitless” increase of computational power will not resolve its genuine intractability. Do we need to explore all the possibilities in the solution space to find the optimal solutions? This chapter offers a novel perspective that tries to overcome the combinatorial complexity of the TSP. When we design an algorithm to solve an optimization problem, we usually ask the critical question: “How can we find all exact optimal solutions and how do we know that they are optimal in the solution space?” This chapter introduces the Attractor-Based Search System (ABSS), which is specifically designed for the TSP, and explains how the ABSS answers this critical question. The computing complexity of the ABSS is also discussed.

- combinatorial optimization

- global optimization

- heuristic local search

- computational complexity

- traveling salesman problem

- multimodal optimization

- dynamical systems

Author Information

- School of Management, University of Michigan-Flint, Flint, USA

*Address all correspondence to: [email protected]

1. Introduction

The TSP is one of the most intensively investigated optimization problems and is often treated as the prototypical combinatorial optimization problem; it has provided much motivation for the design of new search algorithms, the development of complexity theory, and the analysis of solution space and search space [1, 2]. The TSP is defined as a complete graph $Q = (V, E, C)$, where $V = \{v_i : i = 1, 2, \ldots, n\}$ is a set of $n$ nodes, $E = \{e_{ij} : i, j = 1, 2, \ldots, n;\ i \neq j\}_{n \times n}$ is an edge matrix containing the set of edges that connects the $n$ nodes, and $C = \{c_{ij} : i, j = 1, 2, \ldots, n;\ i \neq j\}_{n \times n}$ is a cost matrix holding a set of traveling costs associated with the set of edges. The solution space $S$ contains a finite set of all feasible tours that a salesman may traverse. A tour $s \in S$ is a closed route that visits every node exactly once and returns to the starting node at the end. Like many real-world optimization problems, the TSP is inherently multimodal; that is, it may contain multiple optimal tours in its solution space. We assume that a TSP instance $Q$ contains $h \geq 1$ optimal tours in $S$. We denote $f(s)$ as the objective function, $s^* = \min_{s \in S} f(s)$ as an optimal tour and $S^*$ as the set of $h$ optimal tours. The objective of the TSP is to find all $h$ optimal tours in the solution space, that is, $S^* \subset S$. Therefore, the argument is

$$\arg\min_{s \in S} f(s) = S^* = \{s_1^*, s_2^*, \ldots, s_h^*\}$$

Under this definition, the salesman wants to know all of the best alternative tours that are available. Finding all optimal solutions is the essential requirement for an optimization search algorithm. In practice, knowledge of multiple optimal solutions is extremely helpful, providing the decision-maker with multiple options, especially when the sensitivity of the objective function to small changes in its variables may be different at the alternative optimal points. Obviously, this TSP definition is elegantly simple but full of challenges for optimization researchers and practitioners.
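As a small illustration of this multimodal definition, the sketch below enumerates every tour of a tiny instance and returns all tours that attain the minimum of the objective function, rather than a single one. It is only an exhaustive baseline under stated assumptions: the function names and the four-node cost matrix are invented for this example, the cost matrix is assumed to be symmetric, and the approach is usable only for very small $n$.

```python
from itertools import permutations


def tour_cost(tour, C):
    """f(s): total cost of the closed route that visits `tour` in order."""
    n = len(tour)
    return sum(C[tour[k]][tour[(k + 1) % n]] for k in range(n))


def all_optimal_tours(C):
    """Return (best cost, list of all optimal tours) by exhaustive enumeration."""
    n = len(C)
    best, optimal = None, []
    for perm in permutations(range(1, n)):
        # With a symmetric cost matrix a tour and its reversal are the same
        # cycle, so only one orientation is kept.
        if perm[0] > perm[-1]:
            continue
        tour = (0,) + perm
        cost = tour_cost(tour, C)
        if best is None or cost < best:
            best, optimal = cost, [tour]
        elif cost == best:
            optimal.append(tour)
    return best, optimal


# Hypothetical symmetric instance with h = 2 optimal tours.
C = [
    [0, 1, 2, 2],
    [1, 0, 2, 2],
    [2, 2, 0, 1],
    [2, 2, 1, 0],
]
print(all_optimal_tours(C))  # (6, [(0, 1, 2, 3), (0, 1, 3, 2)])
```

Here two different tours share the minimum cost of 6, so a search that stops at the first optimum would report only half of $S^*$.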

Optimization has been a fundamental tool in all scientific and engineering areas. The goal of optimization is to find the best set of the admissible conditions to achieve our objective in our decision-making process. Therefore, the fundamental requirement for an optimization search algorithm is to find all optimal solutions within a reasonable amount of computing time. The focus of computational complexity theory is to analyze the intrinsic difficulty of an optimization problem and the asymptotic property of a search algorithm to solve it. The complexity theory attempts to address this question: “How efficient is a search algorithm for a particular optimization problem, as the number of variables gets large?”

The TSP is known to be NP-hard [2, 3]. Problems in the NP-hard class are said to be intractable because no asymptotically efficient algorithm is known for them; even the seemingly “limitless” increase of computational power will not resolve their genuine intractability. The intrinsic difficulty of the TSP is that the solution space increases exponentially as the problem size increases, which makes exhaustive search infeasible. When a TSP instance is large, the number of possible tours in the solution space is so large as to forbid an exhaustive search for the optimal tours. A feasible search algorithm for the TSP is one that comes with a guarantee to find all best tours in time at most proportional to $n^k$ for some power $k$.

Do we need to explore all the possibilities in the solution space to find the optimal solutions? Imagine that searching for the optimal solution in the solution space is like treasure hunting. We are trying to hunt for a hidden treasure in the whole world. If we are “blindfolded” without any guidance, it is a silly idea to search every single square inch of the extremely large space. We may have to perform a random search process, which is usually not effective. However, if we are able to use various clues to locate the small village where the treasure was placed, we will then directly go to that village and search every corner of the village to find the hidden treasure. The philosophy behind this treasure-hunting case for optimization is that: if we do not know where the optimal point is in the solution space, we can try to identify the small region that contains the optimal point and then search that small region thoroughly to find that optimal point.

Optimization researchers have developed many optimization algorithms to solve the TSP. Deterministic approaches such as exhaustive enumeration and branch-and-bound can find exact optimal solutions, but they are very expensive from the computational point of view. Stochastic optimization algorithms, such as simple heuristic local search, Evolutionary Algorithms, Particle Swarm Optimization and many other metaheuristics, can hopefully find a good solution to the TSP [1, 4, 5, 6, 7]. The stochastic search algorithms trade guaranteed correctness of the optimal solution for a shorter computing time. In practice, most stochastic search algorithms are based on the heuristic local search technique [8]. Heuristics are functions that help us decide which one of a set of possible solutions is to be selected next [9]. A local search algorithm iteratively explores the neighborhoods of solutions, trying to improve the current solution by a local change. However, the scope of local search is limited by the neighborhood definition. Therefore, heuristic local search algorithms are locally convergent, and the final solution may deviate from the optimal solution. Such a final solution is called a locally optimal solution, denoted as $s'$ in this chapter. To distinguish it from locally optimal solutions, the optimal solution $s^*$ in the solution space is usually called the globally optimal solution.

This chapter studies the TSP from a novel perspective and presents a new search algorithm for the TSP. This chapter is organized in the following sections. Section 2 presents the ABSS algorithm for the TSP. Section 3 describes the important data structure that is a critical player in solving the TSP. Section 4 discusses the nature of heuristic local search algorithm and introduces the concept of solution attractor. Section 5 describes the global optimization features of the ABSS. Section 6 discusses the computational complexity of the ABSS. Section 7 concludes this chapter.

2. The attractor-based search system for the TSP

Figure 1 presents the Attractor-Based Search System (ABSS) for the TSP. In this algorithm, $Q$ is a TSP instance with the edge matrix $E$ and cost matrix $C$. At the beginning of the search, the matrix $E$ is initialized by assigning zeros to all of its elements. The function InitialTour() constructs an initial tour $s_i$ using any tour-construction technique. The function LocalSearch() takes $s_i$ as an input, performs local search using any type of local search technique, and returns a locally optimal tour $s_j$. The function UpdateE() updates the matrix $E$ by recording the edge configuration of tour $s_j$ into the matrix. $K$ is the number of search trajectories. After the edge configurations of $K$ locally optimal tours are stored in the matrix $E$, the function ExhaustedSearch() searches $E$ completely using the depth-first tree search technique, which is a simple recursive search method that traverses a directed graph starting from a node and then searches adjacent nodes recursively. Finally, the ABSS outputs a set of all best tours $S^*$ found in the edge configuration of $E$. The search strategy in the ABSS is straightforward: generate $K$ locally optimal tours, store their edge configurations in the matrix $E$, and then identify the best tours by evaluating all tours represented by the edge configuration of $E$. The ABSS is a simple and efficient computer program that can solve the TSP effectively. This search algorithm shows strong features of effectiveness, flexibility, adaptability, scalability and efficiency. The computational model of the ABSS is inherently parallel, facilitating implementation on concurrent processors. It can be implemented in many different ways: series, parallel, distributed, or hybrid.

Figure 1. The ABSS algorithm for the TSP.
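The search strategy described above could be sketched in Python along the following lines. This is an illustrative reading of the description, not the chapter's own program: the text allows any tour-construction and local search technique, so the random initial tours and the 2-opt local search used here are assumptions, as are the snake_case function names, the 0/1 marking of edges in `E`, the symmetric cost matrix, and the requirement of at least three nodes.

```python
import random


def initial_tour(n, rng):
    """InitialTour(): here simply a random permutation of the nodes."""
    tour = list(range(n))
    rng.shuffle(tour)
    return tour


def local_search(tour, C):
    """LocalSearch(): 2-opt, reversing segments while that shortens the tour."""
    tour = tour[:]
    n = len(tour)
    improved = True
    while improved:
        improved = False
        for i in range(n - 1):
            for j in range(i + 2, n):
                a, b = tour[i], tour[i + 1]
                c, d = tour[j], tour[(j + 1) % n]
                if a == d:  # the two edges share a node, nothing to swap
                    continue
                if C[a][c] + C[b][d] < C[a][b] + C[c][d]:
                    tour[i + 1:j + 1] = reversed(tour[i + 1:j + 1])
                    improved = True
    return tour


def update_E(E, tour):
    """UpdateE(): record the edges of a locally optimal tour in E."""
    n = len(tour)
    for k in range(n):
        i, j = tour[k], tour[(k + 1) % n]
        E[i][j] = E[j][i] = 1


def exhausted_search(E, C):
    """ExhaustedSearch(): depth-first search over the edges recorded in E."""
    n = len(E)
    best = {"cost": None, "tours": []}

    def dfs(path, visited, cost):
        last = path[-1]
        if len(path) == n:
            # Close the tour; keep one orientation of each cycle only.
            if E[last][0] and path[1] <= path[-1]:
                total = cost + C[last][0]
                if best["cost"] is None or total < best["cost"]:
                    best["cost"], best["tours"] = total, [tuple(path)]
                elif total == best["cost"]:
                    best["tours"].append(tuple(path))
            return
        for node in range(1, n):
            if E[last][node] and node not in visited:
                visited.add(node)
                path.append(node)
                dfs(path, visited, cost + C[last][node])
                path.pop()
                visited.remove(node)

    dfs([0], {0}, 0)
    return best["cost"], best["tours"]


def abss(C, K, seed=0):
    """Run K local search trajectories, then search the recorded edges."""
    n = len(C)
    rng = random.Random(seed)
    E = [[0] * n for _ in range(n)]
    for _ in range(K):
        s_i = initial_tour(n, rng)
        s_j = local_search(s_i, C)
        update_E(E, s_j)
    return exhausted_search(E, C)


# Hypothetical four-node instance used only to exercise the sketch.
C = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
print(abss(C, K=10))
```

The point of the design is that the exhaustive step only explores tours built from edges that appeared in some locally optimal tour, which is usually a far smaller set than the full solution space.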