- Search Menu

- Sign in through your institution

- Advance Articles

- Editor's Choice

- CME Reviews

- Best of 2021 collection

- Abbreviated Breast MRI Virtual Collection

- Contrast-enhanced Mammography Collection

- Author Guidelines

- Submission Site

- Open Access

- Self-Archiving Policy

- Accepted Papers Resource Guide

- About Journal of Breast Imaging

- About the Society of Breast Imaging

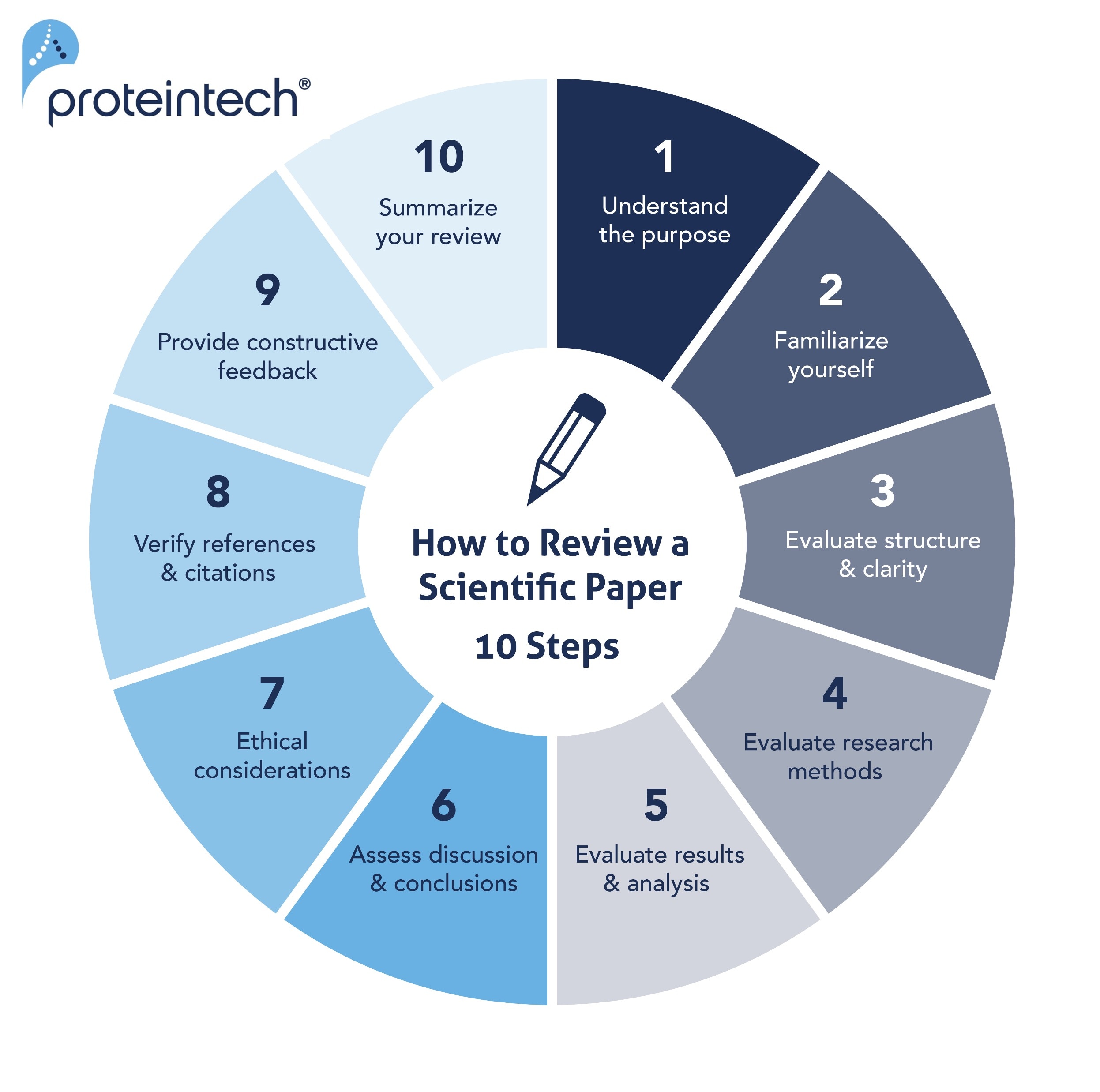

- Guidelines for Reviewers

- Resources for Reviewers and Authors

- Editorial Board

- Advertising Disclaimer

- Advertising and Corporate Services

- Journals on Oxford Academic

- Books on Oxford Academic

- < Previous

A Step-by-Step Guide to Writing a Scientific Review Article

Manisha Bahl, A Step-by-Step Guide to Writing a Scientific Review Article, Journal of Breast Imaging, Volume 5, Issue 4, July/August 2023, Pages 480–485, https://doi.org/10.1093/jbi/wbad028

Scientific review articles are comprehensive, focused reviews of the scientific literature written by subject matter experts. The task of writing a scientific review article can seem overwhelming; however, it can be managed by using an organized approach and devoting sufficient time to the process. The process involves selecting a topic about which the authors are knowledgeable and enthusiastic, conducting a literature search and critical analysis of the literature, and writing the article, which is composed of an abstract, introduction, body, and conclusion, with accompanying tables and figures. This article, which focuses on the narrative or traditional literature review, is intended to serve as a guide with practical steps for new writers. Tips for success are also discussed, including selecting a focused topic, maintaining objectivity and balance while writing, avoiding tedious data presentation in a laundry list format, moving from descriptions of the literature to critical analysis, avoiding simplistic conclusions, and budgeting time for the overall process.

- CAREER COLUMN

- 08 October 2018

How to write a thorough peer review

- Mathew Stiller-Reeve

Mathew Stiller-Reeve is a climate researcher at NORCE/Bjerknes Centre for Climate Research in Bergen, Norway, the leader of SciSnack.com, and a thematic editor at Geoscience Communication.

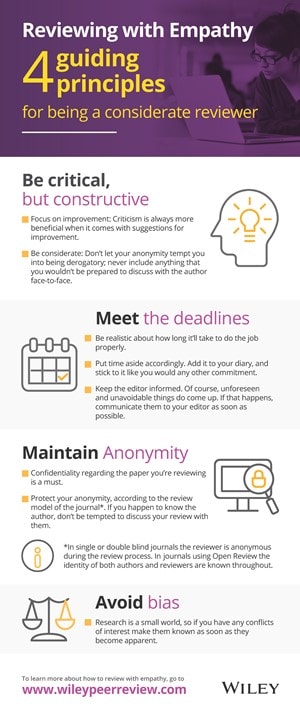

Scientists do not receive enough peer-review training. To improve this situation, a small group of editors and I developed a peer-review workflow to guide reviewers in delivering useful and thorough analyses that can really help authors to improve their papers.

doi: https://doi.org/10.1038/d41586-018-06991-0

This is an article from the Nature Careers Community, a place for Nature readers to share their professional experiences and advice. Guest posts are encouraged. You can get in touch with the editor at [email protected].

Peer Review Template

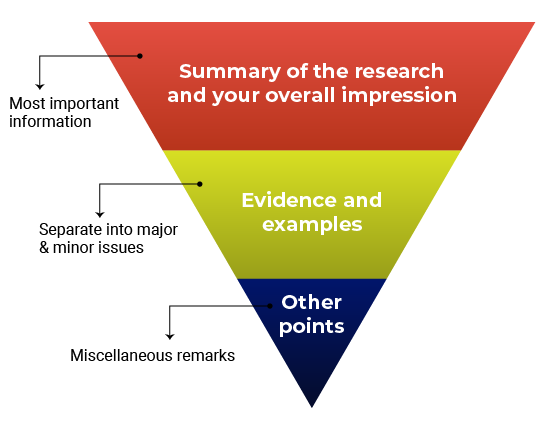

Think about structuring your reviewer report like an upside-down pyramid. The most important information goes at the top, followed by supporting details.

Sample outline

In your own words, summarize the main research question, claims, and conclusions of the study. Provide context for how this research fits within the existing literature.

Discuss the manuscript’s strengths and weaknesses and your overall recommendation.

Major Issues

- Other points (optional) If applicable, add confidential comments for the editors. Raise any concerns about the manuscript that they may need to consider further, such as concerns about ethics. Do not use this section for your overall critique. Also mention whether you might be available to look at a revised version.

The genesis of this paper is the proposal that genomes containing a low percentage of guanine and cytosine (GC) nucleotide pairs lead to proteomes more prone to aggregation than those encoded by GC-rich genomes. As a consequence, these organisms are also more dependent on the protein folding machinery. If true, this interesting hypothesis could establish a direct link between the tendency to aggregate and the genomic code.

In their paper, the authors have tested the hypothesis on the genomes of eubacteria using a genome-wide approach based on multiple machine learning models. Eubacteria are an interesting set of organisms with appreciably high variation in their nucleotide composition: the percentage of GC genetic material ranges from 20% to 70%. The authors classified different eubacterial proteomes in terms of their aggregation propensity and chaperone dependence. For this purpose, new classifiers had to be developed, based on carefully curated data. They took into account twenty-four different features, among which are sequence patterns, the pseudo amino acid composition of phenylalanine, aspartic acid and glutamic acid, the distribution of positively charged amino acids, the FoldIndex score and the hydrophobicity. These classifiers seem to be altogether more accurate and robust than previous such parameters.

The authors found that, contrary to what was expected from the working hypothesis, which would predict a decrease in protein aggregation with an increase in GC richness, the aggregation propensity of proteomes increases with GC content, and thus the stability of the proteome against aggregation increases as GC content decreases. The work also established a direct correlation between GC-poor proteomes and a lower dependence on GroEL. The authors conclude by proposing that a decrease in eubacterial GC content may have been selected in organisms facing proteostasis problems. One way to test the overall results would be through in vitro evolution experiments aimed at testing whether adaptation to low GC content provides a folding advantage.

The main strengths of this paper are that it addresses an interesting and timely question, finds a novel solution based on a carefully selected set of rules, and provides a clear answer. As such, this article represents an excellent and elegant bioinformatics genome-wide study which will almost certainly influence our thinking about protein aggregation and evolution. One weakness is that the text is not always easy to read, and the logical links it draws between concepts are sometimes unclear.

Another possible criticism is that, like any in silico study, it makes strong assumptions about the sequence features that lead to aggregation and relies strongly on the quality of the classifiers used. Even though the developed classifiers seem to be more robust than previous such parameters, they remain only overall indications that allow statistical considerations and nothing more. It could of course be argued that this is good enough to reach meaningful conclusions in this specific case.

The paper by Chevalier et al. analyzed whether late sodium current (INaL) can be assessed using an automated patch-clamp device. To this end, the INaL effects of ranolazine (a well-known INaL inhibitor) and veratridine (an INaL activator) were described. The authors tested the CytoPatch automated patch-clamp equipment and performed whole-cell recordings in HEK293 cells stably transfected with human Nav1.5. Furthermore, they also tested the electrophysiological properties of human induced pluripotent stem cell-derived cardiomyocytes (hiPS) provided by Cellular Dynamics International. The title and abstract are appropriate for the content of the text. Furthermore, the article is well constructed, the experiments were well conducted, and analysis was well performed.

INaL is a small current component generated by a fraction of Nav1.5 channels that, instead of entering the inactivated state, rapidly reopen in a burst mode. INaL critically determines action potential duration (APD), in such a way that both acquired (myocardial ischemia and heart failure, among others) and inherited (long QT type 3) diseases that augment the INaL magnitude also increase the susceptibility to cardiac arrhythmias. Therefore, INaL has been recognized as an important target for the development of drugs with either antiischemic or antiarrhythmic effects. Unfortunately, accurate measurement of INaL is time-consuming and technically challenging because of its very small density. The automated patch-clamp device tested by Chevalier et al. resolves this problem and allows fast and reliable INaL measurements.

The results presented here merit some comments and raise some unresolved questions. First, in some experiments (for example, experiments B and D in Figure 2), current recordings obtained before the ranolazine perfusion seem to be quite unstable. Indeed, the amplitude progressively increased to a maximum value that was considered as the control value (highlighted with arrows). Can this problem be overcome? Is this a consequence of slow intracellular dialysis? Is it a consequence of a time-dependent shift of the voltage dependence of activation/inactivation? Second, as shown in Figure 2, the intensity of drug effects seems to be quite variable. In fact, experiments A, B, C, and D in Figure 2, and panel 2D, show that veratridine augmentation ranged from 0 to 400%. Even assuming normal biological variability, we wonder whether this broad range of effect intensities can be explained by changes in the perfusion system. Has the automated dispensing system been tested? If not, we suggest testing the effects of several K+ concentrations on inward rectifier currents generated by Kir2.1 channels (IKir2.1).

The authors demonstrated that the recording quality was so high that the automated device allows differentiation between noise and current, even when measuring currents of less than 5 pA in amplitude. In order to make more precise mechanistic assumptions, the authors performed an elegant estimation of current variance (σ²) and macroscopic current (I) following the procedure described more than 30 years ago by Van Driessche and Lindemann (1). By means of this method, Chevalier et al. conclude that ranolazine acts by reducing the open channel probability, while veratridine increases the number of channels in the burst mode. We respectfully would like to stress that these considerations must be put in context from a pharmacological point of view. We do not doubt that ranolazine acts as an open channel blocker; what seems clear, however, is that its onset block kinetics must be "ultra" slow, otherwise ranolazine would decrease peak INaL even at low frequencies of stimulation. This comment points towards the fact that, for a precise mechanistic study of drugs that modify ionic currents, it is mandatory to analyze drug effects with much more complicated pulse protocols. The questions thus are: does this automated equipment allow analysis of the frequency-, time-, and voltage-dependent effects of drugs? Can versatile and complicated pulse protocols be applied? Does it allow good voltage control even when the generated currents are big and fast? If this is not possible, then despite its extraordinary discrimination between current and noise, this automated patch-clamp equipment will only be helpful for rapid screening of INaL-modifying drugs. Obviously it will also be perfect for testing HERG-blocking drug effects as demanded by the regulatory authorities.

Finally, as cardiac electrophysiologists, we would like to stress that our dream of testing drug effects on human ventricular myocytes seems to be coming true. Indeed, human atrial myocytes are technically, ethically and logistically difficult to get, but human ventricular myocytes are almost impossible to obtain except from the explanted hearts of patients with end-stage cardiac disease. Here the authors demonstrated that ventricular myocytes derived from hiPS generate beautiful action potentials that can be recorded with this automated equipment. The traces shown suggest that there was no alternation in the action potential duration. Is this a consistent finding? How long do these stable recordings last? Our only comment is that the resting membrane potential seems to be somewhat variable. Can this be resolved? Is it an unexpected veratridine effect? Standardization of maturation methods for ventricular myocytes derived from hiPS will be a big achievement for cardiac cellular electrophysiology, which for years has had to rely on the imprecise extrapolation of data obtained from a combination of several species, none of which was representative of human electrophysiology. The next big step will be the maturation of human atrial myocytes derived from hiPS that fulfil the known characteristics of human atrial cells.

We suggest suppressing the initial sentence of section 3. We surmise that results obtained from the experiments described in this section cannot serve to understand the role of I NaL in arrhythmogenesis.

1. Van Driessche W, Lindemann B: Concentration dependence of currents through single sodium-selective pores in frog skin. Nature. 1979; 282 (5738): 519-520.

The authors have clarified several of the questions I raised in my previous review. Unfortunately, most of the major problems have not been addressed by this revision. As I stated in my previous review, I deem it unlikely that all those issues can be solved merely by a few added paragraphs. Instead there are still some fundamental concerns with the experimental design and, most critically, with the analysis. This means the strong conclusions put forward by this manuscript are not warranted and I cannot approve the manuscript in this form.

- The greatest concern is that when I followed the description of the methods in the previous version it was possible to decode, with almost perfect accuracy, any arbitrary stimulus labels I chose. See https://doi.org/10.6084/m9.figshare.1167456 for examples of this reanalysis. Regardless of whether we pretend that the actual stimulus appeared at a later time or was continuously alternating between signal and silence, the decoding is always close to perfect. This is an indication that the decoding has nothing to do with the actual stimulus heard by the Sender but is opportunistically exploiting some other features in the data. The control analysis the authors performed, reversing the stimulus labels, cannot address this problem because it suffers from the exact same problem. Essentially, what the classifier is presumably using is the time that has passed since the recording started.

- The reason for this is presumably that the authors used non-independent data for training and testing. Assuming I understand correctly (see point 3), randomly sampling one half of the data samples from an EEG trace does not yield independent data. Repeating the analysis five times – the control analysis the authors performed – is not an adequate way to address this concern. Samples randomly selected from a time series containing slow changes (such as the slow wave activity that presumably dominates these recordings under these circumstances) will inevitably contain strong temporal correlations. See TemporalCorrelations.jpg in https://doi.org/10.6084/m9.figshare.1185723 for 2D density histograms and a correlation matrix demonstrating this.

- While the revised methods section provides more detail now, it is still unclear about exactly what data were used. Conventional classification analyses report what data features (usually columns in the data matrix) and what observations (usually rows) were used. Anything could be a feature, but typically this might be the different EEG channels or fMRI voxels etc. Observations are usually time points. Here I assume the authors transformed the raw samples into a different space using principal component analysis. It is not stated whether the dimensionality was reduced using the eigenvalues. Either way, I assume the data samples (collected at 128 Hz) were then used as observations and the EEG channels transformed by PCA were used as features. The stimulus labels were assigned as ON or OFF for each sample. A set of 50% of samples (and labels) was then selected at random for training, and the rest was used for testing. Is this correct?

- A powerful non-linear classifier can capitalise on such correlations to discriminate arbitrary labels. In my own analyses I used both an SVM with RBF as well as a k-nearest neighbour classifier, both of which produce excellent decoding of arbitrary stimulus labels (see point 1). Interestingly, linear classifiers or less powerful SVM kernels fare much worse – a clear indication that the classifier learns about the complex non-linear pattern of temporal correlations that can describe the stimulus label. This is further corroborated by the fact that when using stimulus labels that are chosen completely at random (i.e. with high temporal frequency) decoding does not work.

- The authors have mostly clarified how the correlation analysis was performed. It is still left unclear, however, how the correlations for individual pairs were averaged. Was Fisher’s z-transformation used, or were the data pooled across pairs? More importantly, it is not entirely surprising that under the experimental conditions there will be some correlation between the EEG signals for different participants, especially in low frequency bands. Again, this further supports the suspicion that the classification utilizes slow frequency signals that are unrelated to the stimulus and the experimental hypothesis. In fact, a quick spot check seems to confirm this suspicion: correlating the time series separately for each channel from the Receiver in pair 1 with those from the Receiver in pair 18 reveals 131 significant (p<0.05, Bonferroni corrected) out of 196 (14x14 channels) correlations… One could perhaps argue that this is not surprising because both these pairs had been exposed to identical stimulus protocols: one minute of initial silence and only one signal period (see point 6). However, it certainly argues strongly against the notion that the decoding is in any way related to the mental connection between the particular Sender and Receiver in a given pair because it clearly works between Receivers in different pairs! However, to further control for this possibility I repeated the same analysis but now comparing the Receiver from pair 1 to the Receiver from pair 15. This pair was exposed to a different stimulus paradigm (2 minutes of initial silence and a longer paradigm with three signal periods). I only used the initial 3 minutes for the correlation analysis. Therefore, both recordings would have been exposed to only one signal period but at different times (at 1 min and 2 min for pair 1 and 15, respectively). Even though the stimulus protocol was completely different, the time courses for all the channels are highly correlated and 137 out of 196 correlations are significant. Considering that I used the raw data for this analysis it should not surprise anyone that extracting power from different frequency bands in short time windows will also reveal significant correlations. Crucially, it demonstrates that correlations between Sender and Receiver are artifactual and trivial.

- The authors argue in their response and the revision that predictive strategies were unlikely. After having performed these additional analyses I am inclined to agree. The excellent decoding almost certainly has nothing to do with expectation or imagery effects and it is irrelevant whether participants could guess the temporal design of the experiment. Rather, the results are almost entirely an artefact of the analysis. However, this does not mean that predictability is not an issue. The figure StimulusTimecourses.jpg in https://doi.org/10.6084/m9.figshare.1185723 plots the stimulus time courses for all 20 pairs as can be extracted from the newly uploaded data. This confirms what I wrote in my previous review, in fact, with the corrected data sets the problem with predictability is even greater. Out of the 20 pairs, 13 started with 1 min of initial silence. The remaining 7 had 2 minutes of initial silence. Most of the stimulus paradigms are therefore perfectly aligned and thus highly correlated. This also proves incorrect the statement that initial silence periods were 1, 2, or 3 minutes. No pair had 3 min of initial silence. It would therefore have been very easy for any given Receiver to correctly guess the protocol. It should be clear that this is far from optimal for testing such an unorthodox hypothesis. Any future experiments should employ more randomization to decrease predictability. Even if this wasn’t the underlying cause of the present results, this is simply not great experimental design.

- The authors now acknowledge in their response that all the participants were authors. They say that this is also acknowledged in the methods section, but I did not see any statement about that in the revised manuscript. As before, I also find it highly questionable to include only authors in an experiment of this kind. It is not sufficient to claim that Receivers weren’t guessing their stimulus protocol. While I am giving the authors (and thus the participants) the benefit of the doubt that they actually believe they weren’t guessing/predicting the stimulus protocols, this does not rule out that they did. It may in fact be possible to make such predictions subconsciously (Now, if you ask me, this is an interesting scientific question someone should do an experiment on!). The fact that the participants were familiar with the protocol may help with that. Any future experiments should take steps to prevent this.

- I do not follow the explanation for the binomial test the authors used. Based on the excessive Bayes Factor of 390,625 it is clear that the authors assumed a chance level of 50% on their binomial test. Because the design is not balanced, this is not correct.

- In general, the Bayes Factor and the extremely high decoding accuracy should have given the authors pause. Considering the unusual hypothesis, did the authors not at any point wonder if these results aren’t just far too good to be true? Decoding mental states from brain activity is typically extremely noisy and hardly affords accuracies at the level seen here. Extremely accurate decoding and Bayes Factors in the hundreds of thousands should be a tell-tale sign to check that there isn’t an analytical flaw that makes the result entirely trivial. I believe this is what happened here and thus I think this experiment serves as a very good demonstration of the pitfalls of applying such analysis without sanity checks. In order to make claims like this, the experimental design must contain control conditions that can rule out these problems. Presumably, recordings without any Sender, and maybe even when the “Receiver” is aware of this fact, should produce very similar results.
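
The leakage described in points 1, 2 and 4 can be reproduced on purely simulated data. What follows is a minimal sketch under stated assumptions, not the reanalysis linked above: the "recording" is an 8-channel random walk (an assumed stand-in for slowly drifting EEG), the block labels are arbitrary and unrelated to the signal, and the classifier is a plain 1-nearest-neighbour rule. All function names and parameters are hypothetical.

```python
import random


def simulate_drift(n_samples, n_channels, rng):
    """Random-walk 'recording': slow drift that carries no stimulus information."""
    state = [0.0] * n_channels
    samples = []
    for _ in range(n_samples):
        state = [s + rng.gauss(0.0, 1.0) for s in state]
        samples.append(state)
    return samples


def nearest_neighbour_accuracy(samples, labels, rng):
    """Split individual samples 50/50 at random, then decode with 1-nearest-neighbour."""
    indices = list(range(len(samples)))
    rng.shuffle(indices)
    half = len(indices) // 2
    train, test = indices[:half], indices[half:]
    correct = 0
    for i in test:
        # Nearest training sample in channel space (squared Euclidean distance).
        nearest = min(
            train,
            key=lambda j: sum((a - b) ** 2 for a, b in zip(samples[i], samples[j])),
        )
        correct += labels[nearest] == labels[i]
    return correct / len(test)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
n = 600
samples = simulate_drift(n, 8, rng)

# Arbitrary "stimulus": ON for one contiguous block, chosen without reference to the data.
block_labels = [1 if 200 <= t < 400 else 0 for t in range(n)]
# Labels that change at random from sample to sample (high temporal frequency).
random_labels = [rng.randint(0, 1) for _ in range(n)]

acc_block = nearest_neighbour_accuracy(samples, block_labels, rng)
acc_random = nearest_neighbour_accuracy(samples, random_labels, rng)
print(f"block labels:  {acc_block:.2f}")
print(f"random labels: {acc_random:.2f}")
```

Because neighbouring time points sit close together in channel space, each test sample's nearest training sample is almost always a temporal neighbour that shares its block label, so block labels should be decoded far better than the random labels, which should stay near 50%. Splitting by contiguous time segments instead of by individual samples is one way to avoid this leakage.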

Based on all these factors, it is impossible for me to approve this manuscript. I should however state that it is laudable that the authors chose to make all the raw data of their experiment publicly available. Without this it would have been impossible for me to carry out the additional analyses, and thus the most fundamental problem in the analysis would have remained unknown. I respect the authors’ patience and professionalism in dealing with what I can only assume is a rather harsh review experience. I am honoured by the request for an adversarial collaboration. I do not rule out such efforts at some point in the future. However, for all of the reasons outlined in this and my previous review, I do not think the time is right for this experiment to proceed to this stage. Fundamental analytical flaws and weaknesses in the design should be ruled out first. An adversarial collaboration only really makes sense to me for paradigms where we can be confident that mundane or trivial factors have been excluded.

This manuscript does an excellent job demonstrating significant strain differences in Buridan's paradigm. Since each Drosophila lab has its own wild type (usually Canton-S) isolate, this issue of strain differences is actually a very important one for between-lab reproducibility. This work is a good reminder for all geneticists to pay attention to population effects in the background controls, and presumably in the mutant lines we are comparing.

I was very pleased to see that the within-isolate behavior was consistent in replicate experiments one year apart. The authors further argue that the between-isolate differences in behavior arise from a founder effect, at least for the differences in locomotor behavior between the Paris lines CS_TP and CS_JC. I believe this is a very reasonable and testable hypothesis. It predicts that genetic variability for these traits exists within the populations. It should now be possible to perform selection experiments from the original CS_TP population to replicate the founding event and estimate the heritability of these traits.

Two other things that I liked about this manuscript are the ability to adjust parameters in figure 3, and our ability to download the raw data. After reading the manuscript, I was a little disappointed that the performance of the five strains in each of the 12 behavioral variables wasn't broken down individually in a table or figure. I thought this might help us readers understand what the principal components were representing. The authors have made this data readily accessible in a downloadable spreadsheet.

This is an exceptionally good review and balanced assessment of the status of CETP inhibitors and ASCVD from a world authority in the field. The article highlights important data that might have been overlooked when promulgating the clinical value of CETPIs and related trials.

Only 2 areas need revision:

- Page 3, para 2: the notion that these data from Papp et al. convey is critical and the message needs an explicit sentence or two at the end of the paragraph.

- Page 4, Conclusion: the assertion concerning the ethics of the two Phase 3 clinical trials needs toning down. Perhaps rephrase to indicate that the value and sense of doing these trials is open to question, with attendant ethical implications, or softer wording to that effect.

The Wiley et al. manuscript describes a beautiful synthesis of contemporary genetic approaches to identify, with astonishing efficiency, lead compounds for therapeutic approaches to a serious human disease. I believe the importance of this paper stems from the applicability of the approach to the several thousand rare human disease genes that Next-Gen sequencing will uncover in the next few years and the challenge we will have in figuring out the function of these genes and their resulting defects. This work presents a paradigm that can be broadly and usefully applied.

In detail, the authors begin with the gene responsible for X-linked spinal muscular atrophy and express both the wild-type version of that human gene and a mutant form of it in S. pombe. The conceptual leap here is that progress in genetics is driven by phenotype, and this approach, involving a yeast with no spine or muscles to atrophy, is nevertheless an N-dimensional detector of phenotype.

The study is not without a small measure of luck in that expression of the wild-type UBA1 gene caused a slow growth phenotype which the mutant did not. Hence there was something in S. pombe that could feel the impact of this protein. Given this phenotype, the authors then went to work and, using the power of the synthetic genetic array approach pioneered by Boone and colleagues, made a systematic set of double mutants combining the human expressed UBA1 gene with knockout alleles of a plurality of S. pombe genes. They found well over a hundred mutations that either enhanced or suppressed the growth defect of the cells expressing UBA1. Most of these have human orthologs. My hunch is that many human genes expressed in yeast will have some comparably exploitable phenotype, and time will tell.

Building on the interaction networks of S. pombe genes already established, augmenting these networks by the protein interaction networks from yeast and from human proteome studies involving these genes, and from the structure of the emerging networks, the authors deduced that an E3 ligase modulated UBA1 and made the leap that it therefore might also impact X-linked Spinal Muscular Atrophy.

Here, the awesome power of the model organism community comes into the picture as there is a zebrafish model of spinal muscular atrophy. The principle of phenologs articulated by the Marcotte group inspires the recognition of the transitive logic of how phenotypes in one organism relate to phenotypes in another. With this zebrafish model, they were able to confirm that an inhibitor of E3 ligases and of the Nedd8-E1 activating enzyme suppressed the motor axon anomalies, as predicted by the effect of mutations in S. pombe on the phenotypes of the UBA1 overexpression.

I believe this is an important paper to teach in intro graduate courses as it illustrates beautifully how important it is to know about and embrace the many new sources of systematic genetic information and apply them broadly.

This paper by Amrhein et al. criticizes a paper by Bradley Efron that discusses Bayesian statistics ( Efron, 2013a ), focusing on a particular example that was also discussed in Efron (2013b) . The example concerns a woman who is carrying twins, both male (as determined by sonogram and we ignore the possibility that gender has been observed incorrectly). The parents-to-be ask Efron to tell them the probability that the twins are identical.

This is my first open review, so I'm not sure of the protocol. But given that there appear to be errors in both Efron (2013b) and the paper under review, I am sorry to say that my review might actually be longer than the article by Efron (2013a) , the primary focus of the critique, and the critique itself. I apologize in advance for this. To start, I will outline the problem being discussed for the sake of readers.

This problem has various parameters of interest. The primary parameter is the genetic composition of the twins in the mother’s womb. Are they identical (which I describe as the state x = 1) or fraternal twins ( x = 0)? Let y be the data, with y = 1 to indicate the twins are the same gender. Finally, we wish to obtain Pr( x = 1 | y = 1), the probability the twins are identical given they are the same gender 1 . Bayes’ rule gives us an expression for this:

Pr( x = 1 | y = 1) = Pr( x =1) Pr( y = 1 | x = 1) / {Pr( x =1) Pr( y = 1 | x = 1) + Pr( x =0) Pr( y = 1 | x = 0)}

Now we know that Pr( y = 1 | x = 1) = 1; twins must be the same gender if they are identical. Further, Pr( y = 1 | x = 0) = 1/2; if twins are not identical, the probability of them being the same gender is 1/2.

Finally, Pr( x = 1) is the prior probability that the twins are identical. The bone of contention in the Efron papers and the critique by Amrhein et al. revolves around how this prior is treated. One can think of Pr( x = 1) as the population-level proportion of twins that are identical for a mother like the one being considered.

However, if we ignore other forms of twins that are extremely rare (equivalent to ignoring coins finishing on their edges when flipping them), one incontrovertible fact is that Pr( x = 0) = 1 − Pr( x = 1); the probability that the twins are fraternal is the complement of the probability that they are identical.

The above values and expressions for Pr( y = 1 | x = 1), Pr( y = 1 | x = 0), and Pr( x = 0) lead to a simpler expression for the probability that we seek, namely the probability that the twins are identical given they have the same gender:

Pr( x = 1 | y = 1) = 2 Pr( x =1) / [1 + Pr( x =1)] (1)

We see that the answer depends on the prior probability that the twins are identical, Pr( x =1). The paper by Amrhein et al. points out that this is a mathematical fact. For example, if identical twins were impossible (Pr( x = 1) = 0), then Pr( x = 1| y = 1) = 0. Similarly, if all twins were identical (Pr( x = 1) = 1), then Pr( x = 1| y = 1) = 1. The “true” prior lies somewhere in between. Apparently, the doctor knows that one third of twins are identical 2 . Therefore, if we assume Pr( x = 1) = 1/3, then Pr( x = 1| y = 1) = 1/2.

Now, what would happen if we didn't have the doctor's knowledge? Laplace's “Principle of Insufficient Reason” would suggest that we give equal prior probability to all possibilities, so Pr( x = 1) = 1/2 and Pr( x = 1| y = 1) = 2/3, an answer different from 1/2 that was obtained when using the doctor's prior of 1/3.
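As a quick check of the arithmetic above, the following minimal Python sketch evaluates Bayes' rule with the likelihoods stated in the text (1 for identical twins, 1/2 for fraternal twins) for the priors discussed so far. The function name `posterior_identical` is an illustrative choice, not something taken from the papers under review.

```python
from fractions import Fraction


def posterior_identical(prior, lik_identical=Fraction(1), lik_fraternal=Fraction(1, 2)):
    """Pr(x = 1 | y = 1) from Bayes' rule for a fixed prior Pr(x = 1)."""
    prior = Fraction(prior)
    numerator = prior * lik_identical
    # Pr(x = 0) is the complement of Pr(x = 1)
    denominator = numerator + (1 - prior) * lik_fraternal
    return numerator / denominator


# Priors mentioned in the text: impossible, doctor's 1/3, Laplace's 1/2, certain
for prior in (Fraction(0), Fraction(1, 3), Fraction(1, 2), Fraction(1)):
    simplified = 2 * prior / (1 + prior)  # equation (1)
    assert posterior_identical(prior) == simplified
    print(f"prior = {prior}, posterior = {posterior_identical(prior)}")
```

Exact fractions are used so that the results can be compared directly with the values 1/2 and 2/3 quoted in the text.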

Efron (2013a) highlights this sensitivity to the prior, representing someone who defines an uninformative prior as a “violator”, with Laplace as the “prime violator”. In contrast, Amrhein et al. correctly point out that the difference in the posterior probabilities is merely a consequence of mathematical logic. No one is violating logic; they are merely expressing ignorance by assigning equal probabilities to all states of nature. Whether this is philosophically valid is debatable ( Colyvan 2008 ), but I give no weight to that question here, as it is well beyond the scope of this review. But setting Pr( x = 1) = 1/2 is not a violation; it is merely an assumption with consequences (and one that in hindsight might be incorrect 2 ).

Alternatively, if we don't know Pr( x = 1), we could describe that probability by its own probability distribution. Now the problem has two aspects that are uncertain. We don’t know the true state x , and we don’t know the prior (except in the case where we use the doctor’s knowledge that Pr( x = 1) = 1/3). Uncertainty in the state of x refers to uncertainty about this particular set of twins. In contrast, uncertainty in Pr( x = 1) reflects uncertainty in the population-level frequency of identical twins. A key point is that the state of one particular set of twins is a different parameter from the frequency of occurrence of identical twins in the population.

Without knowledge about Pr( x = 1), we might use Pr( x = 1) ~ dunif(0, 1), which is consistent with Laplace. Alternatively, Efron (2013b) notes another alternative for an uninformative prior: Pr( x = 1) ~ dbeta(0.5, 0.5), which is the Jeffreys prior for a probability.

Here I disagree with Amrhein et al. ; I think they are confusing the two uncertain parameters. Amrhein et al. state:

“We argue that this example is not only flawed, but useless in illustrating Bayesian data analysis because it does not rely on any data. Although there is one data point (a couple is due to be parents of twin boys, and the twins are fraternal), Efron does not use it to update prior knowledge. Instead, Efron combines different pieces of expert knowledge from the doctor and genetics using Bayes’ theorem.”

This claim might be correct when describing uncertainty in the population-level frequency of identical twins. The data about the twin boys are not useful by themselves for this purpose – they are a biased sample (the data have come to light because their gender is the same; they are not a random sample of twins). Further, a sample of size one, especially if biased, is not a firm basis for inference about a population parameter. While the data are biased, the claim by Amrhein et al. that there are no data is incorrect.

However, the data point (the twins have the same gender) is entirely relevant to the question about the state of this particular set of twins. And it does update the prior. This updating of the prior is given by equation (1) above. The doctor’s prior probability that the twins are identical (1/3) becomes the posterior probability (1/2) when using information that the twins are the same gender. The prior is clearly updated with Pr( x = 1| y = 1) ≠ Pr( x = 1) in all but trivial cases; Amrhein et al.’s statement that I quoted above is incorrect in this regard.

This possible confusion between uncertainty about these twins and uncertainty about the population level frequency of identical twins is further suggested by Amrhein et al. ’s statements:

“Second, for the uninformative prior, Efron mentions erroneously that he used a uniform distribution between zero and one, which is clearly different from the value of 0.5 that was used. Third, we find it at least debatable whether a prior can be called an uninformative prior if it has a fixed value of 0.5 given without any measurement of uncertainty.”

Note, if the prior for Pr( x = 1) is specified as 0.5, or dunif(0,1), or dbeta(0.5, 0.5), the posterior probability that these twins are identical is 2/3 in all cases. Efron (2013b) says the different priors lead to different results, but this result is incorrect, and the correct answer (2/3) is given in Efron (2013a) 3 . Nevertheless, a prior that specifies Pr( x = 1) = 0.5 does indicate uncertainty about whether this particular set of twins is identical (but certainty in the population level frequency of twins). And Efron’s (2013a) result is consistent with Pr( x = 1) having a uniform prior. Therefore, both claims in the quote above are incorrect.

It is probably easiest to show the (lack of) influence of the prior using MCMC sampling. Here is WinBUGS code for the case using Pr( x = 1) = 0.5.

Running this model in WinBUGS shows that the posterior mean of x is 2/3; this is the posterior probability that x = 1.

Instead of using pr_ident_twins <- 0.5, we could set this probability as being uncertain and define pr_ident_twins ~ dunif(0,1), or pr_ident_twins ~ dbeta(0.5,0.5). In either case, the posterior mean value of x remains 2/3 (contrary to Efron 2013b , but in accord with the correction in Efron 2013a ).

Note, however, that the value of the population level parameter pr_ident_twins is different in all three cases. In the first it remains unchanged at 1/2 where it was set. In the case where the prior distribution for pr_ident_twins is uniform or beta, the posterior distributions remain broad, but they differ depending on the prior (as they should – different priors lead to different posteriors 4 ). However, given the biased sample size of 1, the posterior distribution for this particular parameter is likely to be misleading as an estimate of the population-level frequency of twins.

So why doesn’t the choice of prior influence the posterior probability that these twins are identical? Well, for these three priors, the prior probability that any single set of twins is identical is 1/2 (this is essentially the mean of the prior distributions in these three cases).

If, instead, we set the prior as dbeta(1,2), which has a mean of 1/3, then the posterior probability that these twins are identical is 1/2. This is the same result as if we had set Pr( x = 1) = 1/3. In both these cases (choosing dbeta(1,2) or 1/3), the prior probability that a single set of twins is identical is 1/3, so the posterior is the same (1/2) given the data (the twins have the same gender).
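The claim that only the prior mean matters for this particular set of twins can also be checked by simulation. The sketch below is not the WinBUGS model referred to above; it is a plain Monte Carlo stand-in in Python, under the assumption that the model draws the population-level probability from its prior, then draws the state x, then the data y, and keeps only the draws with y = 1. The function and variable names are illustrative.

```python
import random


def posterior_mean_x(draw_prior, n_draws=100_000, seed=1):
    """Estimate Pr(x = 1 | y = 1) by simulating (p, x, y) and conditioning on y = 1."""
    rng = random.Random(seed)
    same_gender = 0
    identical_and_same_gender = 0
    for _ in range(n_draws):
        p = draw_prior(rng)              # population-level Pr(x = 1)
        x = rng.random() < p             # True if this set of twins is identical
        # Identical twins always share a gender; fraternal twins do so half the time
        y = True if x else (rng.random() < 0.5)
        if y:
            same_gender += 1
            identical_and_same_gender += x
    return identical_and_same_gender / same_gender


priors = {
    "fixed 0.5": lambda rng: 0.5,
    "dunif(0, 1)": lambda rng: rng.random(),
    "dbeta(0.5, 0.5)": lambda rng: rng.betavariate(0.5, 0.5),
    "dbeta(1, 2)": lambda rng: rng.betavariate(1, 2),
}

for name, draw in priors.items():
    print(f"{name}: {posterior_mean_x(draw):.3f}")
```

Up to Monte Carlo error, the first three priors should give a value close to 2/3 and dbeta(1, 2) a value close to 1/2, in line with the argument that the posterior for x depends on the prior only through its mean.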

Further, Amrhein et al. also seem to misunderstand the data. They note:

“Although there is one data point (a couple is due to be parents of twin boys, and the twins are fraternal)...”

This is incorrect. The parents simply know that the twins are both male. Whether they are fraternal is unknown (fraternal twins being the complement of identical twins) – that is the question the parents are asking. This error of interpretation makes the calculations in Box 1 and subsequent comments irrelevant.

Box 1 also implies Amrhein et al. are using the data to estimate the population frequency of identical twins rather than the state of this particular set of twins. This is different from the aim of Efron (2013a) and the stated question.

Efron suggests that Bayesian calculations should be checked with frequentist methods when priors are uncertain. However, this is a good example where this cannot be done easily, and Amrhein et al. are correct to point this out. In this case, we are interested in the probability that the hypothesis is true given the data (an inverse probability), not the probabilities that the observed data would be generated given particular hypotheses (frequentist probabilities). If one wants the inverse probability (the probability the twins are identical given they are the same gender), then Bayesian methods (and therefore a prior) are required. A logical answer simply requires that the prior is constructed logically. Whether that answer is “correct” will be, in most cases, only known in hindsight.

However, one possible way to analyse this example using frequentist methods would be to assess the likelihood of obtaining the data for each of the two hypotheses (the twins are identical or fraternal). The likelihood of the twins having the same gender under the hypothesis that they are identical is 1. The likelihood of the twins having the same gender under the hypothesis that they are fraternal is 0.5. Therefore, the weight of evidence in favour of identical twins is twice that of fraternal twins. Scaling these weights so they sum to one ( Burnham and Anderson 2002 ), gives a weight of 2/3 for identical twins and 1/3 for fraternal twins. These scaled weights have the same numerical values as the posterior probabilities based on either a Laplace or Jeffreys prior. Thus, one might argue that the weight of evidence for each hypothesis when using frequentist methods is equivalent to the posterior probabilities derived from an uninformative prior. So, as a final aside in reference to Efron (2013a) , if we are being “violators” when using a uniform prior, are we also being “violators” when using frequentist methods to weigh evidence? Regardless of the answer to this rhetorical question, “checking” the results with frequentist methods doesn’t give any more insight than using uninformative priors (in this case). However, this analysis shows that the question can be analysed using frequentist methods; the single data point is not a problem for this. The claim in Amrhein et al. that a frequentist analysis "is impossible because there is only one data point, and frequentist methods generally cannot handle such situations" is not supported by this example.
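The scaling of likelihoods into weights of evidence described above is a one-line calculation. The following Python sketch shows it under the likelihood values given in the text; the helper name `scaled_weights` is an illustrative assumption.

```python
from fractions import Fraction


def scaled_weights(likelihoods):
    """Rescale the likelihood of the data under each hypothesis so the weights sum to one."""
    total = sum(likelihoods.values())
    return {hypothesis: lik / total for hypothesis, lik in likelihoods.items()}


# Likelihood of "same gender" under each hypothesis, as stated in the text
likelihoods = {"identical": Fraction(1), "fraternal": Fraction(1, 2)}
weights = scaled_weights(likelihoods)
print(weights)
```

The resulting weights match the posterior probabilities obtained with a prior of 1/2, which is the numerical equivalence the paragraph above points out.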

In summary, the comment by Amrhein et al. raises some interesting points that seem worth discussing, but it makes important errors in analysis and interpretation, and misrepresents the results of Efron (2013a) . This means the current version should not be approved.

Burnham, K.P. & D.R. Anderson. 2002. Model Selection and Multi-model Inference: a Practical Information-theoretic Approach. Springer-Verlag, New York.

Colyvan, M. 2008. Is Probability the Only Coherent Approach to Uncertainty? Risk Anal. 28: 645-652.

Efron B. (2013a) Bayes’ Theorem in the 21st Century. Science 340(6137): 1177-1178.

Efron B. (2013b) A 250-year argument: Belief, behavior, and the bootstrap. Bull Amer. Math Soc. 50: 129-146.

1. The twins are both male. However, if the twins were both female, the statistical results would be the same, so I will simply use the data that the twins are the same gender.

2. In reality, the frequency of twins that are identical is likely to vary depending on many factors but we will accept 1/3 for now.

3. Efron (2013b) reports the posterior probability for these twins being identical as “a whopping 61.4% with a flat Laplace prior” but as 2/3 in Efron (2013a) . The latter (I assume 2/3 is “even more whopping”!) is the correct answer, which I confirmed via email with Professor Efron. Therefore, Efron (2013b) incorrectly claims the posterior probability is sensitive to the choice between a Jeffreys or Laplace uninformative prior.

4. When the data are very informative relative to the different priors, the posteriors will be similar, although not identical.

I am very glad the authors wrote this essay. It is a well-written, needed, and useful summary of the current status of “data publication” from a certain perspective. The authors, however, need to be bolder and more analytical. This is an opinion piece, yet I see little opinion. A certain view is implied by the organization of the paper and the references chosen, but they could be more explicit.

The paper would be both more compelling and useful to a broad readership if the authors moved beyond providing a simple summary of the landscape, examined why there is controversy in some areas, and then used the evidence they have compiled to suggest a path forward. They need to be more forthright in saying what data publication means to them, or what parts of it they do not deal with. Are they satisfied with the Lawrence et al. definition? Do they accept the critique of Parsons and Fox? What is the scope of their essay?

The authors take a rather narrow view of data publication, which I think hinders their analyses. They describe three types of (digital) data publication: Data as a supplement to an article; data as the subject of a paper; and data independent of a paper. The first two types are relatively new and they represent very little of the data actually being published or released today. The last category, which is essentially an “other” category, is rich in its complexity and encompasses the vast majority of data released. I was disappointed that the examples of this type were only the most bare-bones (Zenodo and Figshare). I think a deeper examination of this third category and its complexity would help the authors better characterize the current landscape and suggest paths forward.

Some questions the authors might consider: Are these really the only three models in consideration or does the publication model overstate a consensus around a certain type of data publication? Why are there different models and which approach is better for different situations? Do they have different business models or imply different social contracts? Might it also be worth typing “publishers” instead of “publications”? For example, do domain repositories vs. institutional repositories vs. publishers address the issues differently? Are these models sustaining models or just something to get us through the next 5-10 years while we really figure it out?

I think this oversimplification inhibited some deeper analysis in other areas as well. I would like to see more examination of the validation requirement beyond the lens of peer review, and I would like a deeper examination of incentives and credit beyond citation.

I thought the validation section of the paper was very relevant, but somewhat light. I like the choice of the term validation as more accurate than “quality” and it fits quite well with Callaghan’s useful distinction between technical and scientific review, but I think the authors overemphasize the peer-review style approach. The authors rightly argue that “peer-review” is where the publication metaphor leads us, but it may be a false path. They overstate some difficulties of peer-review (No-one looks at every data value? No, they use statistics, visualization, and other techniques.) while not fully considering who is responsible for what. We need a closer examination of different roles and who are appropriate validators (not necessarily conventional peers). The narrowly defined models of data publication may easily allow for a conventional peer-review process, but it is much more complex in the real-world “other” category. The authors discuss some of this in what they call “independent data validation,” but they don’t draw any conclusions.

Only the simplest of research data collections are validated only by the original creators. More often there are teams working together to develop experiments, sampling protocols, algorithms, etc. There are additional teams who assess, calibrate, and revise the data as they are collected and assembled. The authors discuss some of this in their examples like the PDS and tDAR, but I wish they were more analytical and offered an opinion on the way forward. Are there emerging practices or consensus in these team-based schemes? The level of service concept illustrated by Open Context may be one such area. Would formalizing or codifying some of these processes accomplish the same as peer-review or more? What is the role of the curator or data scientist in all of this? Given the authors’ backgrounds, I was surprised this role was not emphasized more. Finally, I think it is a mistake for science review to be the main way to assess reuse value. It has been shown time and again that data end up being used effectively (and valued) in ways that original experts never envisioned or even thought valid.

The discussion of data citation was good and captured the state of the art well, but again I would have liked to see some views on a way forward. Have we solved the basic problem and are now just dealing with edge cases? Is the “just-in-time identifier” the way to go? What are the implications? Will the more basic solutions work in the interim? More critically, are we overemphasizing the role of citation to provide academic credit? I was gratified that the authors referenced the Parsons and Fox paper which questions the whole data publication metaphor, but I was surprised that they only discussed the “data as software” alternative metaphor. That is a useful metaphor, but I think the ecosystem metaphor has broader acceptance. I mention this because the authors critique the software metaphor because “using it to alter or affect the academic reward system is a tricky prospect”. Yet there is little to suggest that data publication and corresponding citation alters that system either. Indeed there is little if any evidence that data publication and citation incentivize data sharing or stewardship. As Christine Borgman suggests, we need to look more closely at who we are trying to incentivize to do what. There is no reason to assume it follows the same model as research literature publication. It may be beyond the scope of this paper to fully examine incentive structures, but it at least needs to be acknowledged that building on the current model doesn’t seem to be working.

Finally, what is the takeaway message from this essay? It ends rather abruptly with no summary, no suggested directions or immediate challenges to overcome, no call to action, no indications of things we should stop trying, and only brief mention of alternative perspectives. What do the authors want us to take away from this paper?

Overall though, this is a timely and needed essay. It is well researched and nicely written with rich metaphor. With modifications addressing the detailed comments below and better recognizing the complexity of the current data publication landscape, this will be a worthwhile review paper. With more significant modification where the authors dig deeper into the complexities and controversies and truly grapple with their implications to suggest a way forward, this could be a very influential paper. It is possible that the definitions of “publication” and “peer-review” need not be just stretched but changed or even rejected.

- The whole paper needs a quick copy edit. There are a few typos, missing words, and wrong verb tenses. Note the word “data” is a plural noun. E.g., Data are not software, nor are they literature. (NSICD, instead of NSIDC)

- Page 2, para 2: “citability is addressed by assigning a PID.” This is not true, as the authors discuss on page 4, para 4. Indeed, page 4, para 4 seems to contradict itself. Citation is more than a locator/identifier.

- In the discussion of “Data independent of any paper” it is worth noting that there may often be linkages between these data and myriad papers. Indeed a looser concept of a data paper has existed for some time, where researchers request a citation to a paper even though it neither is the data nor fully describes the data (e.g., the CRU temp records).

- Page 4, para 1: I’m not sure it’s entirely true that published data cannot involve requesting permission. In past work with Indigenous knowledge holders, they were willing to publish summary data and then provide the details when satisfied the use was appropriate and not exploitive. I think those data were “published” as best they could be. A nit, perhaps, but it highlights that there are few if any hard and fast rules about data publication.

- Page 4, para 2: You may also want to mention the WDS certification effort, which is combining with the DSA via an RDA Working Group:

- Page 4, para 2: The joint declaration of data citation principles involved many more organizations than Force11, CODATA, and DCC. Please credit them all (maybe in a footnote). The glory of the effort was that it was truly a joint effort across many groups. There is no leader. Force11 was primarily a convener.

- Page 4, para 6: The deep citation approach recommended by ESIP is not just to list variables or a range of data. It is to identify a “structural index” for the data and to use this to reference subsets. In Earth science this structural index is often space and time, but many other indices are possible: location in a gene sequence, file type, variable, bandwidth, viewing angle, etc. It is not just for “straightforward” data sets.

- Page 5, para 5: I take issue with the statement that few repositories provide scientific review. I can think of a couple dozen that do just off the top of my head, and I bet most domain repositories have some level of science review. The “scientists” may not always be in house, but the repository is a team facilitator. See my general comments.

- Page 5, para 10: The PDS system is only unusual in that it is well documented and advertised. As mentioned, this team style approach is actually fairly common.

- Page 6, para 3: Parsons and Fox don’t just argue that the data publication metaphor is limiting. They also say it is misleading. That should be acknowledged at least, if not actively grappled with.

- Artifact removal: Unfortunately the authors have not updated the paper with a 2x2 table showing guns and smiles by removed data points. Such a table could dispel the criticism that an asymmetrical expectation bias, which has been shown to exist in similar experiments, is driving the results and leading to inappropriate conclusions. This is my strongest criticism of the paper and should be easily addressed as per my previous review comment. The fact that this simple data presentation was not performed to remove a clear potential source of spurious results is disappointing.

- The authors have added 95% CIs to figures S1 and S2. This clarifies the scope for expectation bias in these data. The addition of error bars permits the authors’ assumption of a linear trend, indicating that the effect of sequences of either guns or smiles may not skew results. Equally, there could be either a downwards or upwards trend fitting within the confidence intervals that could be indicative of a cognitive bias that may violate the assumptions of the authors, leading to spurious results. One way to remove these doubts could be to stratify the analyses by the length of sequences of identical symbols. If the results hold up in each of the strata, this potential bias could be shown to not be present in the data. If the bias is strong, particularly in longer runs, this could indicate that the positive result was due to small numbers of longer identical runs combined with a cognitive bias rather than an ability to predict future events.
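One possible reading of the stratification suggested above is to group trials by the length of the run of identical symbols that immediately preceded them and to compute the hit rate within each group. The Python sketch below illustrates that reading only; the data layout (one symbol and one prediction per trial, with "G" for guns and "S" for smiles) and the function names are assumptions, not the format used in the study under review.

```python
from collections import defaultdict


def preceding_run_lengths(symbols):
    """For each trial, the length of the run of identical symbols just before it (0 for the first trial)."""
    lengths = []
    run = 0
    for i, symbol in enumerate(symbols):
        lengths.append(run)
        # Extend the current run if the symbol repeats, otherwise start a new run
        run = run + 1 if i > 0 and symbol == symbols[i - 1] else 1
    return lengths


def hit_rate_by_run_length(symbols, predictions):
    """Proportion of correct predictions within each preceding-run-length stratum."""
    hits = defaultdict(int)
    trials = defaultdict(int)
    for length, symbol, prediction in zip(preceding_run_lengths(symbols), symbols, predictions):
        trials[length] += 1
        hits[length] += symbol == prediction
    return {length: hits[length] / trials[length] for length in sorted(trials)}


# Toy data, invented purely for illustration
symbols = list("GGSSSG")
predictions = list("GSSGSG")
print(hit_rate_by_run_length(symbols, predictions))
```

If the hit rate stays above chance in every stratum, including short runs, an expectation bias tied to long runs becomes a less plausible explanation; with real data each stratum would also need its own confidence interval.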

Chamberlain and Szöcs present the taxize R package, a set of functions that provides interfaces to several web tools and databases, and simplifies the process of checking, updating, correcting and manipulating taxon names for researchers working with ecological/biological data. A key feature that is repeated throughout is the need for reproducibility of science workflows and taxize provides a means to achieve this within the R software ecosystem for taxonomic search.

The manuscript is well-written and nicely presented, with a good balance of descriptive text and discourse and practical illustration of package usage. A number of examples illustrate the scope of the package, something that is fully expanded upon in the two appendices, which are a welcome addition to the paper.

As to the package, I am not overly fond of long function names; the authors should consider dropping the data source abbreviations from the function names in a future update/revision of the package. Likewise there is some inconsistency in the naming conventions used. For example there is the ’tpl_search()’ function to search The Plant List, but the equivalent function to search uBio is ’ubio_namebank()’. Whilst this may reflect specific aspects of terminology in use at the respective data stores, it does not help the user gain familiarity with the package by having them remember inconsistent function names.

One advantage of taxize is that it draws together a rich selection of data stores to query. A further suggestion for a future update would be to add generic function names, that apply to a database connection/information object. The latter would describe the resource the user wants to search and any other required information, such as the API key, etc., for example:

The user function to search would then be ’search(foo, "Abies")’. Similar generically named functions would provide the primary user-interface, thus promoting a more consistent toolbox at the R level. This will become increasingly relevant as the scope of taxize increases through the addition of new data stores that the package can access.
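The design idea, a single generically named search function that dispatches on an object describing the data source, can be sketched independently of R. The Python sketch below is only an illustration of that pattern: `DataSource`, `register_backend`, the `"demo"` backend, and its in-memory name list are all hypothetical and do not correspond to any function in taxize or to any real web service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class DataSource:
    """Describes the resource to query plus any required details such as an API key."""
    name: str
    api_key: Optional[str] = None


# Registry mapping a data source name to the function that knows how to query it
_BACKENDS: Dict[str, Callable[[DataSource, str], List[str]]] = {}


def register_backend(name: str):
    """Decorator that registers a search implementation for one data source."""
    def decorator(func):
        _BACKENDS[name] = func
        return func
    return decorator


def search(source: DataSource, query: str) -> List[str]:
    """Generic entry point: the same call works for every registered data source."""
    try:
        backend = _BACKENDS[source.name]
    except KeyError:
        raise ValueError(f"no backend registered for {source.name!r}") from None
    return backend(source, query)


@register_backend("demo")
def _search_demo(source: DataSource, query: str) -> List[str]:
    # Hypothetical stand-in: filters a small in-memory list instead of calling a web API
    names = ["Abies alba", "Abies balsamea", "Pinus sylvestris"]
    return [name for name in names if name.startswith(query)]


foo = DataSource(name="demo")
print(search(foo, "Abies"))
```

Adding a new data store then means registering one more backend, while the user-facing call stays the same.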

In terms of presentation in the paper, I really don’t like the way the R code inputs merge with the R outputs. I know the author of Knitr doesn’t like the demarcation of output being polluted by the R prompt, but I do find it difficult parsing the inputs/outputs you show because often there is no space between them and users not familiar with R will have greater difficulties than I. Consider adding in more conventional indications of R outputs, or physically separate input from output by breaking up the chunks of code to have whitespace between the grey-background chunks. Related, in one location I noticed something amiss with the layout; in the first code block at the top of page 5, the printed output looks wrong here. I would expect the attributes to print on their own line and the data in the attribute to also be on its own separate line.

Note also, the inconsistency in the naming of the output object columns. For example, in the two code chunks shown in column 1 of page 4, the first block has an object printed with column names ’matched_name’ and ’data_source_title’, whilst camelCase is used in the outputs shown in the second block. As the package is revised and developed, consider this and other aspects of providing a consistent presentation to the user.

I was a little confused about the example in the section Resolve Taxonomic Names on page 4. Should the taxon name be “Helianthus annuus” or “Helianthus annus” ? In the ‘mynames’ definition you include ‘Helianthus annuus’ in the character vector but the output shown suggests that the submitted name was ‘Helianthus annus’ (1 “u”) in rows with rownames 9 and 10 in the output shown.

Other than that there were the following minor observations:

- Abstract: replace “easy” with “simple” in “...fashion that’s easy...”, and move the details about availability and the URI to the end of the sentence.

- Page 2, Column 1, Paragraph 2: You have “In addition, there is no one authoritative taxonomic names source...”, which is a little clumsy to read. How about “In addition, there is no one authoritative source of taxonomic names...”?

- Pg 2, C1, P2-3: The abbreviated data sources are presented first (in paragraph 2) and subsequently defined (in para 3). Restructure this so that the abbreviated forms are explained upon first usage.

- Pg 2, C2, P2: Most R packages are “in development” so I would drop the qualifier and reword the opening sentence of the paragraph.

- Pg 2, C2, P6: Changing “and more can easily be added” to “and more can be easily added” seems to flow better.

- Pg 5, paragraph above Figure 1: You refer to converting the object to an **ape** *phylo* object and then repeat essentially the same information in the next sentence. Remove the repetition.

- Pg 6, C1: The header may be better as “Which taxa are children of the taxon of interest”.

- Pg 6: In the section “IUCN status”, the term “we” is used to refer to both the authors and the user. This is confusing. Reserve “we” for reference to the authors and use something else (“a user” perhaps) for the other instances. Check this throughout the entire manuscript.

- Pg 6, C2: in the paragraph immediately below the ‘grep()’ for “RAG1”, two consecutive sentences begin with “However”.

- Pg 7: The first sentence of “Aggregating data....” reads “In biology, one can asks questions...”. It should be “one asks” or “one can ask”.

- Pg 7, Conclusions: The first sentence reads “information is increasingly sought out by biologists”. I would drop “out” as “sought” is sufficient on its own.

- Appendices: Should the two figures in the Appendices have a different reference to differentiate them from Figure 1 in the main body of the paper? As it stands, the paper has two Figure 1s, one on page 5 and a second on page 12 in the Appendix.

- On Appendix Figure 2: The individual points are a little large. Consider reducing the plotting character size. I appreciate the effect you were going for with the transparency indicating density of observation through overplotting, but the effect is weakened by the size of the individual points.

- Should the phylogenetic trees have some scale to them? I presume the height of the stems is an indication of phylogenetic distance but the figure is hard to calibrate without an associated scale. A quick look at Paradis (2012) Analysis of Phylogenetics and Evolution with R would suggest however that a scale is not consistently applied to these trees. I am happy to be guided by the authors as they will be more familiar with the conventions than I.

In this review, Hydbring and Badalian-Very summarize the current status of the potential development of clinical applications based on miRNA biology. The article gives an interesting historical and scientific perspective on a field that has only recently boomed, focusing mostly on the two main products in the pipeline of several biotech companies (in Europe and USA) which work with miRNA-based agents: disease diagnostics and therapeutics. Interestingly, not only are the specific agents being produced mentioned, but clever insights into the important cellular pathways regulated by key miRNAs are also briefly discussed.

Minor points to consider in subsequent versions:

- Page 2; paragraph ‘Genomic location and transcription of microRNAs’ : the concept of miRNA clusters and precursors could be a bit better explained.

- Page 2; paragraph ‘Genomic location and transcription of microRNAs’ : when discussing the paper by the laboratory of Richard Young (reference 16); I think it is important to mention that that particular study refers to stem cells.

- Page 2; paragraph ‘Processing of microRNAs’ : “Argonate” should be replaced by “Argonaute”.

- Page 3; paragraph ‘MicroRNAs in disease diagnostics’ : are miR-15a and 16-1 two different miRNAs? I suggest mentioning them as: miR-15a and miR-16-1 and not using a slash sign (/) between them.

- Page 4; paragraph ‘Circulating microRNAs’ : I am a bit bothered by the description of multiple sclerosis (MS) only as an autoimmune disease. Without being an expert in the field, I believe that there are other hypotheses related to the etiology of MS.

- Page 5; paragraph ‘Clinical microRNA diagnostics’ : Does ‘hsa’ in hsa-miR-205 mean something?

- Page 5; paragraph ‘Clinical microRNA diagnostics’ : the authors mention the company Asuragen, Austin, TX, USA but they do not really say anything about their products. I suggest either removing the reference to that company or including their current pipeline efforts.

- Page 6; paragraph ‘MicroRNAs in therapeutics’ : in the first paragraph the authors suggest that miRNAs-based therapeutics should be able to be applied with “minimal side-effects”. Since one miRNA can affect a whole gene program, I found this a bit counterintuitive; I was wondering if any data has been published to support that statement. Also, in the same paragraph, the authors compare miRNAs to protein inhibitors, which are described as more specific and/or selective. I think there are now good indications to think that protein inhibitors are not always that specific and/or selective and that such a property actually could be important for their evidenced therapeutic effects.

- Page 6; paragraph ‘MicroRNAs in therapeutics’ : I think the concept of “antagomir” is an important one and could be better highlighted in the text.

- Throughout the text (pages 3, 5, 6, and 7): I am a bit bothered by separating the word “miRNA” or “miRNAs” at the end of a sentence in the following way: “miR-NA” or “miR-NAs”. It is a bit confusing considering the particular nomenclature used for miRNAs. That was probably done during the formatting and editing step of the paper.

- I was wondering if the authors could develop a bit more the general concept that seems to indicate that in disease (and in particular in cancer) the expression and levels of miRNAs are in general downregulated. Maybe some papers have been published about this phenomenon?

The authors describe their attempt to reproduce a study in which it was claimed that mild acid treatment was sufficient to reprogramme postnatal splenocytes from a mouse expressing GFP in the oct4 locus to pluripotent stem cells. The authors followed a protocol that has recently become available as a technical update of the original publication.

They report obtaining no pluripotent stem cells expressing GFP driven over the same time period of several days described in the original publication. They describe observation of some green fluorescence that they attributed to autofluorescence rather than GFP since it coincided with PI positive dead cells. They confirmed the absence of oct4 expression by RT-PCR and also found no evidence for Nanog or Sox2, also markers of pluripotent stem cells.

The paper appears to be an authentic attempt to reproduce the original study, although the study might have had additional value with more controls: “failure to reproduce” studies need to be particularly well controlled.

Examples that could have been valuable to include are:

- For the claim of autofluorescence: the emission spectrum of the samples would likely have shown a broad spectrum not coincident with that of GFP.

- The reprogramming efficiency of postnatal mouse splenocytes using more conventional methods in the hands of the authors would have been useful as a comparison. The same applies to the lung fibroblasts.

- There are no positive control samples (conventional mESC or miPSC) in the qPCR experiments for pluripotency markers. This would have indicated the biological sensitivity of the assay.

- Although perhaps a sensitive issue, it might have been helpful if the authors had been able to obtain samples of cells (or their mRNA) from the original authors for simultaneous analysis.

In summary, this is a useful study as it is citable and confirms previous blog reports, but it could have been improved by more controls.

The article is well written, addresses a current problem (the risk of development of valvulopathy after long-term cabergoline treatment in patients with macroprolactinoma) and provides evidence about the reversibility of valvular changes after timely discontinuation of DA treatment.

Title and abstract: The title is appropriate for the content of the article. The abstract is concise and accurately summarizes the essential information of the paper, although it would be better if the authors defined the anatomic specificity of the valvulopathy more precisely (mild mitral regurgitation).

Case report: The clinical case presentation is comprehensive and detailed but there are some minor points that should be clarified:

- Please clarify the prolactin levels at diagnosis. In the Presentation section (line 3) “At presentation, prolactin level was found to be greater than 1000 ng/ml on diluted testing” but in the section describing the laboratory evaluation at diagnosis (line 7) “Prolactin level was 55 ng/ml”. Was the difference due to the so-called “hook effect”?

- Figure 1: In the text the follow-up MR imaging is indicated to be “after 10 months of cabergoline treatment”. However, the figures 1C and 1D represent 2 years post-treatment MR images. Please clarify.

- Figure 2: Echocardiograms 2A and 2B are defined as baseline but actually they correspond to the follow-up echocardiographic assessment at the 4th year of cabergoline treatment. Did the patient undergo a baseline (prior to dopamine agonist treatment) echocardiographic evaluation? If he did not, it should be mentioned as study limitation in the Discussion section.

- The mitral valve thickness was mentioned to be normal. Did the echographic examination visualize increased echogenicity (hyperechogenicity) of the mitral cusps?

- How could you explain the decrease of LV ejection fraction (from 60-65% to 50-55%) after switching from cabergoline to bromocriptine treatment and respectively its increase to 62% after doubling the bromocriptine daily dose? Was LV function estimated always by the same method during the follow-up?

- Final paragraph: Authors conclude that early discontinuation and management with bromocriptine may be effective in reversing cardiac valvular dysfunction. Even so, regular echocardiographic follow-up should be considered in patients who are expected to be on long-term high-dose treatment with bromocriptine, given its partial 5-HT2b agonist activity.

This is an interesting topic: as the authors note, the way that communicators imagine their audiences will shape their output in significant ways. And I enjoyed what clearly has the potential to be a very rich data set. But I have some reservations about the adequacy of that data set, as it currently stands, given the claims the authors make; the relevance of the analytical framework(s) they draw upon; and the extent to which their analysis has offered significant new insights ‐ by which I mean, I would be keen to see the authors push their discussion further. My suggestions are essentially that they extend the data set they are working with to ensure that their analysis is both rigorous and generalisable, and re-consider the analytical frame they use. I will make some more concrete comments below.

With regard to the data: my feeling is that 14 interviews is a rather slim data set, and that this is heightened by the fact that they were all carried out in a single location, and recruited via snowball sampling and personal contacts. What efforts have the authors made to ensure that they are not speaking to a single, small, sub-community in the much wider category of science communicators? ‐ a case study, if you like, of a particular group of science communicators in North Carolina? In addition, though the authors reference grounded theory as a method for analysis, I got little sense of the data reaching saturation. The reliance on one-off quotes, and on the stories and interests of particular individuals, left me unsure as to how representative interview extracts were. I would therefore recommend either that the data set is extended by carrying out more interviews, in a wider variety of locations (e.g. other sites in the US), or that it is redeveloped as a case study of a particular local professional community. (Which would open up some fascinating questions ‐ how many of these people know each other? What spaces, online or offline, do they interact in, and do they share knowledge, for instance about their audiences? Are there certain touchstone events or publics they communally make reference to?)