Eberly Center

Teaching Excellence & Educational Innovation

Creating and Using Rubrics

A rubric is a scoring tool that explicitly describes the instructor’s performance expectations for an assignment or piece of work. A rubric identifies:

- criteria: the aspects of performance (e.g., argument, evidence, clarity) that will be assessed

- descriptors: the characteristics associated with each criterion (e.g., argument is demonstrable and original, evidence is diverse and compelling)

- performance levels: a rating scale that identifies students’ level of mastery within each criterion

Rubrics can be used to provide feedback to students on diverse types of assignments, from papers, projects, and oral presentations to artistic performances and group projects.

Benefitting from Rubrics

Rubrics help instructors to:

- reduce the time spent grading by allowing instructors to refer to a substantive description without writing long comments

- more clearly identify strengths and weaknesses across an entire class and adjust their instruction appropriately

- ensure consistency across time and across graders

- reduce the uncertainty that can accompany grading

- discourage complaints about grades

Rubrics help students to:

- understand instructors’ expectations and standards

- use instructor feedback to improve their performance

- monitor and assess their progress as they work towards clearly indicated goals

- recognize their strengths and weaknesses and direct their efforts accordingly

Examples of Rubrics

Here we provide a sample set of rubrics designed by faculty at Carnegie Mellon and other institutions. Although your particular field of study or type of assessment may not be represented, viewing a rubric designed for a similar assessment may give you ideas for the kinds of criteria, descriptions, and performance levels to use in your own rubric.

Paper Assignments

- Example 1: Philosophy Paper. This rubric was designed for student papers in a range of courses in philosophy (Carnegie Mellon).

- Example 2: Psychology Assignment. This rubric was designed for a short concept-application homework assignment in cognitive psychology (Carnegie Mellon).

- Example 3: Anthropology Writing Assignments. This rubric was designed for a series of short writing assignments in anthropology (Carnegie Mellon).

- Example 4: History Research Paper. This rubric was designed for essays and research papers in history (Carnegie Mellon).

Projects

- Example 1: Capstone Project in Design. This rubric describes the components and standards of performance from the research phase to the final presentation for a senior capstone project in design (Carnegie Mellon).

- Example 2: Engineering Design Project. This rubric describes performance standards for three aspects of a team project: research and design, communication, and teamwork.

Oral Presentations

- Example 1: Oral Exam. This rubric describes a set of components and standards for assessing performance on an oral exam in an upper-division course in history (Carnegie Mellon).

- Example 2: Oral Communication. This rubric is adapted from Huba and Freed, 2000.

- Example 3: Group Presentations. This rubric describes a set of components and standards for assessing group presentations in history (Carnegie Mellon).

Class Participation/Contributions

- Example 1: Discussion Class. This rubric assesses the quality of student contributions to class discussions. It is appropriate for an undergraduate-level course (Carnegie Mellon).

- Example 2: Advanced Seminar. This rubric is designed for assessing discussion performance in an advanced undergraduate or graduate seminar.

See also the "Examples and Tools" section of this site for more rubrics.


Rubric Best Practices, Examples, and Templates

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

Rubrics can help instructors communicate expectations to students and assess student work fairly, consistently and efficiently. Rubrics can provide students with informative feedback on their strengths and weaknesses so that they can reflect on their performance and work on areas that need improvement.

How to Get Started


Step 1: Analyze the assignment

The first step in the rubric creation process is to analyze the assignment or assessment for which you are creating a rubric. To do this, consider the following questions:

- What is the purpose of the assignment and your feedback? What do you want students to demonstrate through the completion of this assignment (i.e., what are the learning objectives measured by it)? Is it a summative assessment, or will students use the feedback to create an improved product?

- Does the assignment break down into different or smaller tasks? Are these tasks as important as the main assignment?

- What would an “excellent” assignment look like? An “acceptable” assignment? One that still needs major work?

- How detailed do you want the feedback you give students to be? Do you want/need to give them a grade?

Step 2: Decide what kind of rubric you will use

Types of rubrics: holistic, analytic/descriptive, single-point

Holistic Rubric. A holistic rubric includes all the criteria (such as clarity, organization, mechanics, etc.) to be considered together and included in a single evaluation. With a holistic rubric, the rater or grader assigns a single score based on an overall judgment of the student’s work, using descriptions of each performance level to assign the score.

Advantages of holistic rubrics:

- Can place an emphasis on what learners can demonstrate rather than what they cannot

- Save grader time by minimizing the number of evaluations to be made for each student

- Can be used consistently across raters, provided they have all been trained

Disadvantages of holistic rubrics:

- Provide less specific feedback than analytic/descriptive rubrics

- Can be difficult to choose a score when a student’s work is at varying levels across the criteria

- Any weighting of criteria cannot be indicated in the rubric

Analytic/Descriptive Rubric. An analytic or descriptive rubric often takes the form of a table with the criteria listed in the left column and with levels of performance listed across the top row. Each cell contains a description of what the specified criterion looks like at a given level of performance. Each of the criteria is scored individually.

Advantages of analytic rubrics:

- Provide detailed feedback on areas of strength or weakness

- Each criterion can be weighted to reflect its relative importance

Disadvantages of analytic rubrics:

- More time-consuming to create and use than a holistic rubric

- May not be used consistently across raters unless the cells are well defined

- May result in giving less personalized feedback
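Because each criterion in an analytic rubric is scored separately, the weights can be combined into a single grade. The Python sketch below is one possible approach, not a prescribed method: the criteria, their weights (fractions that sum to 1.0), and the four-level scale are all hypothetical, and each criterion contributes its weight times the share of the top level the student reached.

```python
# Hypothetical analytic rubric: each criterion's weight (fractions that sum to 1.0).
WEIGHTS = {
    "argument": 0.4,
    "evidence": 0.3,
    "organization": 0.2,
    "mechanics": 0.1,
}
MAX_LEVEL = 4  # performance levels 1 (Beginning) through 4 (Exemplary)


def weighted_score(levels, weights=WEIGHTS, max_level=MAX_LEVEL):
    """Combine per-criterion levels into a single 0-100 score."""
    if set(levels) != set(weights):
        raise ValueError("score every criterion in the rubric exactly once")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    total = 0.0
    for criterion, level in levels.items():
        if not 1 <= level <= max_level:
            raise ValueError(f"{criterion}: level {level} is outside 1-{max_level}")
        # A criterion contributes its weight times the share of the top level reached.
        total += weights[criterion] * level / max_level
    return round(100 * total, 1)


# One student's levels on the four criteria.
print(weighted_score({"argument": 4, "evidence": 3, "organization": 3, "mechanics": 2}))  # prints 82.5
```

Dividing by the top level keeps the result on a 0 to 100 scale regardless of how many levels the rubric uses; a holistic rubric, by contrast, records only one overall level and has nothing to weight.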

Single-Point Rubric. A single-point rubric breaks down the components of an assignment into different criteria, but instead of describing different levels of performance, it describes only the “proficient” level. Feedback space is provided for instructors to give individualized comments to help students improve and/or show where they excelled beyond the proficiency descriptors.

Advantages of single-point rubrics:

- Easier to create than an analytic/descriptive rubric

- Perhaps more likely that students will read the descriptors

- Areas of concern and excellence are open-ended

- May remove a focus on the grade/points

- May increase student creativity in project-based assignments

Disadvantages of single-point rubrics:

- Require more work for instructors writing feedback

Step 3 (Optional): Look for templates and examples.

You might search Google for “Rubric for persuasive essay at the college level” to see if there are any publicly available examples to start from. Ask your colleagues if they have used a rubric for a similar assignment. Some examples are also available at the end of this article. These rubrics can be a great starting point for you, but consider steps 4, 5, and 6 below to ensure that the rubric matches your assignment description, learning objectives, and expectations.

Step 4: Define the assignment criteria

Make a list of the knowledge and skills you are measuring with the assignment/assessment. Refer to your stated learning objectives, the assignment instructions, past examples of student work, etc. for help.

Helpful strategies for defining grading criteria:

- Collaborate with co-instructors, teaching assistants, and other colleagues

- Brainstorm and discuss with students

- Evaluate the criteria:

  - Can they be observed and measured?

  - Are they important and essential?

  - Are they distinct from other criteria?

  - Are they phrased in precise, unambiguous language?

- Revise the criteria as needed

- Consider whether some are more important than others, and how you will weight them

Step 5: Design the rating scale

Most rating scales include between three and five levels. Consider the following questions when designing your rating scale:

- Given what students are able to demonstrate in this assignment/assessment, what are the possible levels of achievement?

- How many levels would you like to include? (More levels means more detailed descriptions.)

- Will you use numbers and/or descriptive labels for each level of performance? (For example, 5, 4, 3, 2, 1 and/or Exceeds expectations, Accomplished, Proficient, Developing, Beginning, etc.)

- Don’t use too many columns, and recognize that some criteria can have more columns than others. The rubric needs to be comprehensible and organized. Pick the right number of columns so that the criteria flow logically and naturally across levels.

Step 6: Write descriptions for each level of the rating scale

Artificial intelligence tools such as ChatGPT can be useful for drafting a rubric. You will want to engineer the prompt you give the AI assistant to ensure you get what you want. For example, your prompt might include the assignment description, the criteria you feel are important, and the number of performance levels you want. Use the results as a starting point, and adjust the descriptions as needed.

Building a rubric from scratch

For a single-point rubric, describe what would be considered “proficient” (i.e., B-level work) and provide that description. You might also include suggestions for students outside of the actual rubric about how they might surpass proficient-level work.

For analytic and holistic rubrics, create statements of expected performance at each level of the rubric.

- Consider what descriptor is appropriate for each criterion, e.g., presence vs. absence, complete vs. incomplete, many vs. none, major vs. minor, consistent vs. inconsistent, always vs. never. If you describe an indicator at one level, you will need to describe it at every level.

- You might start with the top/exemplary level. What does it look like when a student has achieved excellence for each/every criterion? Then, look at the “bottom” level. What does it look like when a student has not achieved the learning goals in any way? Then, complete the in-between levels.

- For an analytic rubric, do this for each criterion of the rubric so that every cell in the table is filled. These descriptions help students understand your expectations and their performance in regard to those expectations.
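A draft analytic rubric is essentially a grid of criteria by levels, so checking that "every cell in the table is filled" can be automated. The following Python sketch is illustrative only: the level labels, criterion names, and descriptors are hypothetical, and the check simply reports each criterion/level pair whose descriptor is missing or blank.

```python
LEVELS = ["Exemplary", "Proficient", "Developing", "Beginning"]

# Hypothetical draft rubric: criterion -> {level -> descriptor}.
draft = {
    "Thesis": {
        "Exemplary": "States an arguable, original thesis that frames the whole paper",
        "Proficient": "States an arguable thesis that frames most of the paper",
        "Developing": "States a thesis that describes rather than argues",
        "Beginning": "",  # left blank while drafting
    },
    "Evidence": {
        "Exemplary": "Integrates varied, credible sources that directly support each claim",
        "Proficient": "Uses credible sources that support most claims",
        "Developing": "Uses sources that support only some claims",
        # "Beginning" has not been written yet
    },
}


def missing_cells(rubric, levels):
    """Return every (criterion, level) pair whose descriptor is absent or blank."""
    gaps = []
    for criterion, row in rubric.items():
        for level in levels:
            if not row.get(level, "").strip():
                gaps.append((criterion, level))
    return gaps


for criterion, level in missing_cells(draft, LEVELS):
    print(f"Missing descriptor: {criterion} / {level}")
```

The same check catches the case noted above where an indicator is described at one level but not carried through the others, as long as each level has its own cell.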

Well-written descriptions:

- Describe observable and measurable behavior

- Use parallel language across the scale

- Indicate the degree to which the standards are met

Step 7: Create your rubric

Create your rubric in a table or spreadsheet in Word, Google Docs, Sheets, etc., and then transfer it to Moodle by typing it in. You can also use online tools to create the rubric, but you will still have to type the criteria, indicators, levels, etc., into Moodle. Rubric creators: RubiStar, iRubric
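If you draft the rubric as a spreadsheet, the usual layout puts criteria down the left column and levels across the top row. As a minimal sketch (the rubric content and the `rubric.csv` file name are hypothetical), Python's standard `csv` module can write a draft to a CSV file that Sheets or Excel can open; you would still type the finished rubric into Moodle.

```python
import csv
import io

LEVELS = ["Exemplary", "Proficient", "Developing", "Beginning"]

# Hypothetical rubric content: criterion -> {level -> descriptor}.
rubric = {
    "Organization": {
        "Exemplary": "Ideas follow a clear, logical sequence with effective transitions",
        "Proficient": "Ideas follow a logical sequence with occasional weak transitions",
        "Developing": "Sequence of ideas is sometimes hard to follow",
        "Beginning": "Ideas appear in no discernible order",
    },
}


def rubric_to_csv(rubric, levels):
    """Lay the rubric out as a grid: criteria down the left, levels across the top."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["Criterion", *levels])
    for criterion, row in rubric.items():
        # Missing descriptors become empty cells so the gap is visible in the sheet.
        writer.writerow([criterion, *(row.get(level, "") for level in levels)])
    return buffer.getvalue()


if __name__ == "__main__":
    # newline="" stops the csv module's line endings from being translated again.
    with open("rubric.csv", "w", newline="", encoding="utf-8") as f:
        f.write(rubric_to_csv(rubric, LEVELS))
```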

Step 8: Pilot-test your rubric

Prior to implementing your rubric on a live course, obtain feedback from:

- Teaching assistants

Try out your new rubric on a sample of student work. After you pilot-test your rubric, analyze the results to consider its effectiveness and revise accordingly.
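One simple way to analyze pilot results, assuming two graders (for example, an instructor and a teaching assistant) have independently scored the same sample of work on the same criterion, is to compute how often their levels agree. This Python sketch computes exact agreement and agreement within one level; these measures are our illustration rather than something the guide prescribes, the scores are hypothetical, and more formal statistics such as Cohen's kappa also correct for agreement expected by chance.

```python
def _check(rater_a, rater_b):
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("need two non-empty, equal-length lists of levels")


def exact_agreement(rater_a, rater_b):
    """Share of samples on which two raters chose exactly the same level."""
    _check(rater_a, rater_b)
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def adjacent_agreement(rater_a, rater_b):
    """Share of samples on which the two raters' levels differ by at most one."""
    _check(rater_a, rater_b)
    return sum(abs(a - b) <= 1 for a, b in zip(rater_a, rater_b)) / len(rater_a)


# Hypothetical pilot: levels (1-4) that an instructor and a TA independently
# gave eight sample papers on one criterion.
instructor = [4, 3, 3, 2, 4, 1, 3, 2]
ta = [4, 3, 2, 2, 3, 1, 3, 2]
print(f"exact: {exact_agreement(instructor, ta):.0%}")        # exact: 75%
print(f"adjacent: {adjacent_agreement(instructor, ta):.0%}")  # adjacent: 100%
```

Low exact agreement on a particular criterion suggests its cell descriptions may not be well defined enough for consistent use across raters.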

- Limit the rubric to a single page for reading and grading ease

- Use parallel language. Use similar language and syntax/wording from column to column. Make sure that the rubric can be easily read from left to right or vice versa.

- Use student-friendly language. Make sure the language is appropriate to the students’ learning level. If you use academic language or concepts, you will need to teach those concepts.

- Share and discuss the rubric with your students. Students should understand that the rubric is there to help them learn, reflect, and self-assess. If students use a rubric, they will understand the expectations and their relevance to learning.

- Consider scalability and reusability of rubrics. Create rubric templates that you can alter as needed for multiple assignments.

- Maximize the descriptiveness of your language. Avoid words like “good” and “excellent.” For example, instead of saying “uses excellent sources,” you might describe what makes a source excellent so that students will know. You might also consider reducing the reliance on quantity, such as a number of allowable misspelled words. Focus instead, for example, on how distracting any spelling errors are.

Example of an analytic rubric for a final paper

Example of a holistic rubric for a final paper

Single-point rubric

More examples:

- Single-Point Rubric Template (variation)

- Analytic Rubric Template (make a copy to edit)

- A Rubric for Rubrics

- Bank of Online Discussion Rubrics in different formats

- Mathematical Presentations Descriptive Rubric

- Math Proof Assessment Rubric

- Kansas State Sample Rubrics

- Design Single-Point Rubric

Technology Tools: Rubrics in Moodle

- Moodle Docs: Rubrics

- Moodle Docs: Grading Guide (use for single-point rubrics)

Tools with rubrics (other than Moodle)

- Google Assignments

- Turnitin Assignments: Rubric or Grading Form

Other resources

- DePaul University (n.d.). Rubrics.

- Gonzalez, J. (2014). Know your terms: Holistic, Analytic, and Single-Point Rubrics. Cult of Pedagogy.

- Goodrich, H. (1996). Understanding rubrics. Teaching for Authentic Student Performance, 54(4), 14-17. Retrieved from

- Miller, A. (2012). Tame the beast: tips for designing and using rubrics.

- Ragupathi, K., Lee, A. (2020). Beyond Fairness and Consistency in Grading: The Role of Rubrics in Higher Education. In: Sanger, C., Gleason, N. (eds) Diversity and Inclusion in Global Higher Education. Palgrave Macmillan, Singapore.

15.7 Evaluation: Presentation and Analysis of Case Study

Learning outcomes.

By the end of this section, you will be able to:

- Revise writing to follow the genre conventions of case studies.

- Evaluate the effectiveness and quality of a case study report.

Case studies follow a structure of background and context, methods, findings, and analysis. Body paragraphs should have main points and concrete details. In addition, case studies are written in formal language with precise wording and with a specific purpose and audience (generally other professionals in the field) in mind. Case studies also adhere to the conventions of the discipline’s formatting guide (APA Documentation and Format in this book). Compare your case study with the following rubric as a final check.


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/writing-guide/pages/1-unit-introduction

- Authors: Michelle Bachelor Robinson, Maria Jerskey, featuring Toby Fulwiler

- Publisher/website: OpenStax

- Book title: Writing Guide with Handbook

- Publication date: Dec 21, 2021

- Location: Houston, Texas

- Book URL: https://openstax.org/books/writing-guide/pages/1-unit-introduction

- Section URL: https://openstax.org/books/writing-guide/pages/15-7-evaluation-presentation-and-analysis-of-case-study

© Dec 19, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Case Study - Rubric


Rubric example: a case study


Do Your Students Know How to Analyze a Case—Really?


Just as actors, athletes, and musicians spend thousands of hours practicing their craft, business students benefit from practicing their critical-thinking and decision-making skills. Students, however, often have limited exposure to real-world problem-solving scenarios; they need more opportunities to practice tackling tough business problems and deciding on, and executing, the best solutions.

To ensure students have ample opportunity to develop these critical-thinking and decision-making skills, we believe business faculty should shift from teaching mostly principles and ideas to mostly applications and practices. And in doing so, they should emphasize the case method, which simulates real-world management challenges and opportunities for students.

To help educators facilitate this shift and help students get the most out of case-based learning, we have developed a framework for analyzing cases. We call it PACADI (Problem, Alternatives, Criteria, Analysis, Decision, Implementation); it can improve learning outcomes by helping students better solve and analyze business problems, make decisions, and develop and implement strategy. Here, we’ll explain why we developed this framework, how it works, and what makes it an effective learning tool.

The Case for Cases: Helping Students Think Critically

Business students must develop critical-thinking and analytical skills, which are essential to their ability to make good decisions in functional areas such as marketing, finance, operations, and information technology, as well as to understand the relationships among these functions. For example, the decisions a marketing manager must make include strategic planning (segments, products, and channels); execution (digital messaging, media, branding, budgets, and pricing); and operations (integrated communications and technologies), as well as how to implement decisions across functional areas.

Faculty can use many types of cases to help students develop these skills. These include the prototypical “paper cases”; live cases, which feature guest lecturers such as entrepreneurs or corporate leaders and on-site visits; and multimedia cases, which immerse students in real situations. Most cases feature an explicit or implicit decision that a protagonist (an individual, a group, or an organization) must make.

For students new to learning by the case method, and even for those with case experience, some common issues can emerge; these issues can sometimes be a barrier for educators looking to ensure the best possible outcomes in their case classrooms. Unsure of how to dig into case analysis on their own, students may turn to the internet or rely on former students for “answers” to assigned cases. Or, when assigned to answer assignment questions in teams, students might take a divide-and-conquer approach but not take the time to regroup and provide answers that are consistent with one another.

To help address these issues, which we commonly experienced in our classes, we wanted to provide our students with a more structured approach for how they analyze cases—and to really think about making decisions from the protagonists’ point of view. We developed the PACADI framework to address this need.

PACADI: A Six-Step Decision-Making Approach

The PACADI framework is a six-step decision-making approach that can be used in lieu of traditional end-of-case questions. It offers a structured, integrated, and iterative process that requires students to analyze case information, apply business concepts to derive valuable insights, and develop recommendations based on these insights.

Prior to beginning a PACADI assessment, which we’ll outline here, students should first prepare a two-paragraph summary—a situation analysis—that highlights the key case facts. Then, we task students with providing a five-page PACADI case analysis (excluding appendices) based on the following six steps.

Step 1: Problem definition. What is the major challenge, problem, opportunity, or decision that has to be made? If there is more than one problem, choose the most important one. Often when solving the key problem, other issues will surface and be addressed. The problem statement may be framed as a question; for example, How can brand X improve market share among millennials in Canada? Usually the problem statement has to be rewritten several times during the analysis of a case as students peel back the layers of symptoms or causation.

Step 2: Alternatives. Identify in detail the strategic alternatives to address the problem; three to five options generally work best. Alternatives should be mutually exclusive, realistic, creative, and feasible given the constraints of the situation. Doing nothing or delaying the decision to a later date are not considered acceptable alternatives.

Step 3: Criteria. What are the key decision criteria that will guide decision-making? In a marketing course, for example, these may include relevant marketing criteria such as segmentation, positioning, advertising and sales, distribution, and pricing. Financial criteria useful in evaluating the alternatives should be included, for example, income statement variables, customer lifetime value, payback, etc. Students must discuss their rationale for selecting the decision criteria and the weight or importance assigned to each factor.

Step 4: Analysis. Provide an in-depth analysis of each alternative based on the criteria chosen in step three. Decision tables using criteria as columns and alternatives as rows can be helpful. The pros and cons of the various choices as well as the short- and long-term implications of each may be evaluated. Best, worst, and most likely scenarios can also be insightful.
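A decision table of this kind can be scored as a weighted matrix: multiply each alternative's score on a criterion by that criterion's weight from Step 3, then sum across the row. The Python sketch below is illustrative only; the alternatives, criteria, weights, and 1 to 5 scores are hypothetical, and the totals support, rather than replace, the written justification students give in Step 5.

```python
# Hypothetical criteria and weights (must sum to 1.0).
criteria = {"Customer value": 0.4, "Profitability": 0.3, "Feasibility": 0.3}

# Hypothetical 1-5 scores: rows are alternatives, columns are criteria.
scores = {
    "A1. Price reduction": {"Customer value": 4, "Profitability": 2, "Feasibility": 5},
    "A2. New product line": {"Customer value": 5, "Profitability": 4, "Feasibility": 2},
    "A3. Loyalty program": {"Customer value": 4, "Profitability": 4, "Feasibility": 4},
}


def weighted_totals(scores, criteria):
    """Weighted sum across each alternative's row of the decision table."""
    if abs(sum(criteria.values()) - 1.0) > 1e-9:
        raise ValueError("criterion weights must sum to 1.0")
    return {
        alternative: round(sum(weight * row[name] for name, weight in criteria.items()), 2)
        for alternative, row in scores.items()
    }


# Rank alternatives from highest to lowest weighted total.
ranked = sorted(weighted_totals(scores, criteria).items(), key=lambda kv: kv[1], reverse=True)
for alternative, total in ranked:
    print(f"{total:.2f}  {alternative}")
```

Changing a weight and rerunning is a quick way to see whether the recommended alternative is sensitive to how the criteria were prioritized.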

Step 5: Decision. Students propose their solution to the problem and justify this decision based on their in-depth analysis, explaining why the recommendation is the best fit for the criteria.

Step 6: Implementation plan. Sound business decisions may fail due to poor execution. To enhance the likelihood of a successful project outcome, students describe the key steps (activities) needed to implement the recommendation, along with the timetable, projected costs, expected competitive reaction, success metrics, and risks in the plan.


PACADI’s Benefits: Meaningfully and Thoughtfully Applying Business Concepts

The PACADI framework covers all of the major elements of business decision-making, including implementation, which is often overlooked. By stepping through the whole framework, students apply relevant business concepts and solve management problems via a systematic, comprehensive approach; they’re far less likely to produce piecemeal responses.

As students explore each part of the framework, they may realize that they need to make changes to a previous step. For instance, when working on implementation, students may realize that the alternative they selected cannot be executed or will not be profitable, and thus need to rethink their decision. Or, they may discover that the criteria need to be revised since the list of decision factors they identified is incomplete (for example, the factors may explain key marketing concerns but fail to address relevant financial considerations) or is unrealistic (for example, they suggest a 25 percent increase in revenues without proposing an increased promotional budget).

In addition, the PACADI framework can be used alongside quantitative assignments, in-class exercises, and business and management simulations. The structured, multi-step decision framework encourages careful and sequential analysis to solve business problems. Incorporating PACADI as an overarching decision-making method across different projects will ultimately help students achieve desired learning outcomes. As a practical “beyond-the-classroom” tool, the PACADI framework is not a contrived course assignment; it reflects the decision-making approach that managers, executives, and entrepreneurs exercise daily. Case analysis introduces students to the real-world process of making business decisions quickly and correctly, often with limited information. This framework supplies an organized and disciplined process that students can readily defend in writing and in class discussions.

PACADI in Action: An Example

Here’s an example of how students used the PACADI framework for a recent case analysis on CVS, a large North American drugstore chain.

The CVS Prescription for Customer Value*

| PACADI Stage | Summary Response |
| --- | --- |
| Problem | How should CVS Health evolve from the “drugstore of your neighborhood” to the “drugstore of your future”? |
| Alternatives | A1. Kaizen (continuous improvement); A2. Product development; A3. Market development; A4. Personalization (micro-targeting) |
| Criteria (include weights) | C1. Customer value: service, quality, image, and price (40%); C2. Customer obsession (20%); C3. Growth through related businesses (20%); C4. Customer retention and customer lifetime value (20%) |
| Analysis | Each alternative was analyzed by each criterion using a Customer Value Assessment Tool. |
| Decision | Alternative 4 (A4): Personalization was selected. This is operationalized via segmentation (a move toward segment-of-1 marketing); geodemographics and lifestyle emphasis; predictive data analysis; relationship marketing; people, principles, and supply chain management; and exceptional customer service. |
| Implementation | Partner with leading medical school; curbside pick-up; pet pharmacy; e-newsletter for customers and employees; employee incentive program; CVS beauty days; expand to Latin America and Caribbean; healthier/happier corner; holiday toy drives/community outreach |

*Source: A. Weinstein, Y. Rodriguez, K. Sims, R. Vergara, “The CVS Prescription for Superior Customer Value—A Case Study,” Back to the Future: Revisiting the Foundations of Marketing from Society for Marketing Advances, West Palm Beach, FL (November 2, 2018).

Results of Using the PACADI Framework

When faculty members at our respective institutions at Nova Southeastern University (NSU) and the University of North Carolina Wilmington have used the PACADI framework, our classes have been more structured and engaging. Students vigorously debate each element of their decision and note that this framework yields an “aha moment”—they learned something surprising in the case that led them to think differently about the problem and their proposed solution.

These lively discussions enhance individual and collective learning. As one external metric of this improvement, we have observed a 2.5 percent increase in student case grade performance at NSU since this framework was introduced.

Tips to Get Started

The PACADI approach works well in in-person, online, and hybrid courses. This is particularly important as more universities have moved to remote learning options. Because students have varied educational and cultural backgrounds, work experience, and familiarity with case analysis, we recommend that faculty members have students work on their first case using this new framework in small teams (two or three students). Additional analyses should then be solo efforts.

To use PACADI effectively in your classroom, we suggest the following:

- Advise your students that your course will stress critical thinking and decision-making skills, not just course concepts and theory.

- Use a varied mix of case studies. As marketing professors, we often address consumer and business markets; goods, services, and digital commerce; domestic and global business; and small and large companies in a single MBA course.

- As a starting point, provide a short explanation (about 20 to 30 minutes) of the PACADI framework with a focus on the conceptual elements. You can deliver this face to face or through videoconferencing.

- Give students an opportunity to practice the case analysis methodology via an ungraded sample case study. Designate groups of five to seven students to discuss the case and the six steps in breakout sessions (in class or via Zoom).

- Ensure case analyses are weighted heavily as a grading component. We suggest 30–50 percent of the overall course grade.

- Once cases are graded, debrief with the class on what they did right and areas needing improvement (30- to 40-minute in-person or Zoom session).

- Encourage faculty teams that teach common courses to build appropriate instructional materials, grading rubrics, videos, sample cases, and teaching notes.

When selecting case studies, we have found that the best ones for PACADI analyses are about 15 pages long and revolve around a focal management decision. This length provides adequate depth yet is not protracted. Some of our tested and favorite marketing cases include Brand W, HubSpot, Kraft Foods Canada, TRSB(A), and Whiskey & Cheddar.

Art Weinstein, Ph.D., is a professor of marketing at Nova Southeastern University, Fort Lauderdale, Florida. He has published more than 80 scholarly articles and papers and eight books on customer-focused marketing strategy. His latest book is Superior Customer Value: Finding and Keeping Customers in the Now Economy. Dr. Weinstein has consulted for many leading technology and service companies.

Herbert V. Brotspies, D.B.A., is an adjunct professor of marketing at Nova Southeastern University. He has over 30 years’ experience as a vice president in marketing, strategic planning, and acquisitions for Fortune 50 consumer products companies working in the United States and internationally. His research interests include return on marketing investment, consumer behavior, business-to-business strategy, and strategic planning.

John T. Gironda, Ph.D., is an assistant professor of marketing at the University of North Carolina Wilmington. His research has been published in Industrial Marketing Management, Psychology & Marketing, and Journal of Marketing Management. He has also presented at major marketing conferences including the American Marketing Association, Academy of Marketing Science, and Society for Marketing Advances.


Review Article: Appropriate Criteria: Key to Effective Rubrics

- Department of Educational Foundations and Leadership, Duquesne University, Pittsburgh, PA, United States

True rubrics feature criteria appropriate to an assessment's purpose, and they describe these criteria across a continuum of performance levels. The presence of both criteria and performance level descriptions distinguishes rubrics from other kinds of evaluation tools (e.g., checklists, rating scales). This paper reviewed studies of rubrics in higher education from 2005 to 2017. The types of rubrics studied in higher education to date have been mostly analytic (considering each criterion separately), descriptive rubrics, typically with four or five performance levels. Other types of rubrics have also been studied, and some studies called their assessment tool a “rubric” when in fact it was a rating scale. Further, for a few (7 out of 51) rubrics, performance level descriptions used rating-scale language or counted occurrences of elements instead of describing quality. Rubrics using this kind of language may be expected to be more useful for grading than for learning. Finally, no relationship was found between type or quality of rubric and study results. All studies described positive outcomes for rubric use.

A rubric articulates expectations for student work by listing criteria for the work and performance level descriptions across a continuum of quality (Andrade, 2000; Arter and Chappuis, 2006). Thus, a rubric has two parts: criteria that express what to look for in the work and performance level descriptions that describe what instantiations of those criteria look like in work at varying quality levels, from low to high.

Other assessment tools, like rating scales and checklists, are sometimes confused with rubrics. Rubrics, checklists, and rating scales all have criteria; the scale is what distinguishes them. Checklists ask for dichotomous decisions (typically has/doesn't have or yes/no) for each criterion. Rating scales ask for decisions across a scale that does not describe the performance. Common rating scales include numerical scales (e.g., 1–5), evaluative scales (e.g., Excellent-Good-Fair-Poor), and frequency scales (e.g., Always-Usually-Sometimes-Never). Frequency scales are sometimes useful for ratings of behavior, but none of these rating scales offers students a description of the quality of their performance that they can easily use to envision their next steps in learning. The purpose of this paper is to investigate the types of rubrics that have been studied in higher education.
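The distinction can be sketched as data. The following Python model is illustrative only and assumes that each tool simply pairs a criterion with a scale; the class names, the criterion, and the level wording are hypothetical, except that the top-level description borrows the phrase "uses a variety of relevant, credible sources," which this article cites as an example of a substantive criterion. All three tools share the criterion; only the rubric returns a description of what the work looks like at the chosen level.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    """Hypothetical model: a criterion judged with a dichotomous (yes/no) decision."""
    criterion: str

    def feedback(self, present: bool) -> str:
        return f"{self.criterion}: {'yes' if present else 'no'}"


@dataclass
class RatingScaleItem:
    """Hypothetical model: a criterion judged on a scale of labels, not descriptions."""
    criterion: str
    labels: list[str]  # e.g. an evaluative scale such as Poor-Fair-Good-Excellent

    def feedback(self, level: int) -> str:
        return f"{self.criterion}: {self.labels[level]}"


@dataclass
class RubricCriterion:
    """Hypothetical model: a criterion with a performance level description per level."""
    criterion: str
    descriptions: list[str]  # index = performance level, from low to high

    def feedback(self, level: int) -> str:
        return f"{self.criterion}: {self.descriptions[level]}"


# One hypothetical criterion, assessed three different ways.
check = ChecklistItem("Uses sources")
scale = RatingScaleItem("Uses sources", ["Poor", "Fair", "Good", "Excellent"])
rubric = RubricCriterion(
    "Uses sources",
    [
        "Sources are missing or not relevant to the claims.",
        "Sources are relevant but few or of uneven credibility.",
        "Sources are relevant and credible but not varied.",
        "Uses a variety of relevant, credible sources.",
    ],
)

print(check.feedback(True))   # Uses sources: yes
print(scale.feedback(2))      # Uses sources: Good
print(rubric.feedback(2))     # Uses sources: Sources are relevant and credible but not varied.
```

The rating scale's "Good" tells a student where the work stands but not what work at that level looks like; the rubric's description does.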

Rubrics have been analyzed in several different ways. One important characteristic of rubrics is whether they are general or task-specific (Arter and McTighe, 2001; Arter and Chappuis, 2006; Brookhart, 2013). General rubrics apply to a family of similar tasks (e.g., persuasive writing prompts, mathematics problem solving). For example, a general rubric for an essay on characterization might include a performance level description that reads, “Used relevant textual evidence to support conclusions about a character.” Task-specific rubrics name the particular facts, concepts, and/or procedures that students' responses to a task should contain. For example, a task-specific rubric for the characterization essay might specify which pieces of textual evidence the student should have located and what conclusions the student should have drawn from this evidence. The generality of the rubric is perhaps its most important characteristic, because general rubrics can be shared with students and used for learning as well as for grading.

The prevailing hypothesis about how rubrics help students is that they make explicit the expectations for student work and, more generally, describe what learning looks like (Andrade, 2000; Arter and McTighe, 2001; Arter and Chappuis, 2006; Bell et al., 2013; Brookhart, 2013; Nordrum et al., 2013; Panadero and Jonsson, 2013). In this way, rubrics play a role in the formative learning cycle (Where am I going? Where am I now? Where to next? Hattie and Timperley, 2007) and support student agency and self-regulation (Andrade, 2010). Some research has borne out this idea, showing that rubrics do make expectations explicit for students (Jonsson, 2014; Prins et al., 2016) and that students do use rubrics for this purpose (Andrade and Du, 2005; Garcia-Ros, 2011). General rubrics should be written with descriptive language, as opposed to evaluative language (e.g., excellent, poor), because descriptive language helps students envision where they are in their learning and where they should go next.

Another important way to characterize rubrics is whether they are analytic or holistic. Analytic rubrics consider criteria one at a time, which means they are better for giving feedback to students (Arter and McTighe, 2001; Arter and Chappuis, 2006; Brookhart, 2013; Brookhart and Nitko, 2019). Holistic rubrics consider all the criteria simultaneously, requiring only one decision on one scale. This means they are better for grading in situations where students will not need to use feedback, because making only one decision is quicker and less cognitively demanding than making several.
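The difference in the number of decisions can be sketched in Python. This is a hypothetical illustration, not a scoring procedure from any reviewed study: the criteria, the level descriptions, and the `judge` callback that stands in for the rater's judgment are all assumptions. The analytic function asks for one decision per criterion and returns a level for each; the holistic function asks for a single decision on one scale.

```python
from typing import Callable

# Hypothetical analytic rubric: each criterion has its own scale of level descriptions.
ANALYTIC: dict[str, list[str]] = {
    "Argument": [
        "Main claim is unclear.",
        "Main claim is clear but not defended.",
        "Main claim is clear and defended.",
    ],
    "Evidence": [
        "Little relevant evidence.",
        "Some relevant evidence.",
        "Varied, compelling evidence.",
    ],
}

# Hypothetical holistic rubric: one scale whose descriptions cover all criteria at once.
HOLISTIC: list[str] = [
    "Main claim is unclear and little relevant evidence is offered.",
    "Main claim is clear and supported by some relevant evidence.",
    "Main claim is clear and defended with varied, compelling evidence.",
]

# A judge stands in for the rater: given a list of level descriptions,
# it returns the index of the description that best matches the work.
Judge = Callable[[list[str]], int]


def score_analytic(judge: Judge) -> dict[str, int]:
    """One decision per criterion; each level can be reported back as feedback."""
    return {criterion: judge(levels) for criterion, levels in ANALYTIC.items()}


def score_holistic(judge: Judge) -> int:
    """A single decision on a single scale."""
    return judge(HOLISTIC)


decisions: list[list[str]] = []


def middle_rater(levels: list[str]) -> int:
    """Toy rater that logs each decision it makes and always picks the middle level."""
    decisions.append(levels)
    return len(levels) // 2


print(score_analytic(middle_rater), len(decisions))  # {'Argument': 1, 'Evidence': 1} 2
decisions.clear()
print(score_holistic(middle_rater), len(decisions))  # 1 1
```

The analytic result keeps a separate level for each criterion, which is what makes it usable as feedback; the holistic result is a single number reached with a single decision.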

Rubrics have been characterized by the number of criteria and number of levels they use. The number of criteria should be linked to the intended learning outcome(s) to be assessed, and the number of levels should be related to the types of decisions that need to be made and to the number of reliable distinctions in student work that are possible and helpful.

Dawson (2017) recently summarized a set of 14 rubric design elements that characterize both the rubrics themselves and their use in context. His intent was to bring more precision to discussions about rubrics and to future research in the area. His 14 elements are specificity, secrecy, exemplars, scoring strategy, evaluative criteria, quality levels, quality definitions, judgment complexity, users and uses, creators, quality processes, accompanying feedback information, presentation, and explanation. In Dawson's terms, this study focused on specificity, evaluative criteria, quality levels, quality definitions, quality processes, and presentation (how the information is displayed).

Four recent literature reviews (Jonsson and Svingby, 2007; Reddy and Andrade, 2010; Panadero and Jonsson, 2013; Brookhart and Chen, 2015) summarize research on rubrics. Brookhart and Chen (2015) updated Jonsson and Svingby's (2007) comprehensive literature review. Panadero and Jonsson (2013) specifically addressed the use of rubrics in formative assessment and the fact that formative assessment begins with students understanding expectations. They posited that rubrics help improve student learning through several mechanisms (p. 138): increasing transparency, reducing anxiety, aiding the feedback process, improving student self-efficacy, and supporting student self-regulation.

Reddy and Andrade (2010) addressed the use of rubrics in post-secondary education specifically. They noted that rubrics have the potential to identify needs in courses and programs, and have been found to support learning (although not in all studies). They found that the validity and reliability of rubrics can be established, but this is not always done in higher education applications of rubrics. Finally, they found that some higher education faculty may resist the use of rubrics, which may be linked to a limited understanding of the purposes of rubrics. Students generally perceive that rubrics serve purposes of learning and achievement, while some faculty members think of rubrics primarily as grading schemes (p. 439). In fact, rubrics are not as easy to use for grading as some traditional rating or point schemes; the reason to use rubrics is that they can support learning and align learning with grading.

Some criticisms and challenges for rubrics have been noted. Nordrum et al. (2013) summarized words of caution from several scholars about the potential for the criteria used in rubrics to be subjective or vague, or to narrow students' understandings of learning (see also Torrance, 2007). In a backhanded way, these criticisms support the thesis of this review, namely, that appropriate criteria are the key to the effectiveness of a rubric. Such criticisms are reasonable and gain traction from the fact that many ineffective or poor-quality rubrics with vague or narrow criteria do exist. A particularly dramatic example occurs when the criteria in a rubric are about following the directions for an assignment rather than describing learning (e.g., “has three sources” rather than “uses a variety of relevant, credible sources”). Rubrics of this kind misdirect student efforts and mismeasure learning.

Sadler (2014) argued that codification of the qualities of good work into criteria cannot mean the same thing in all contexts and cannot be specific enough to guide student thinking. He suggested instantiation instead of codification, describing a process of induction in which the qualities of good work are inferred from a body of work samples. In fact, this method is already used in classrooms when teachers seek to clarify criteria for rubrics (Arter and Chappuis, 2006) or when teachers co-create rubrics with students (Andrade and Heritage, 2017).

Purpose of the Study

A number of scholars have published studies of the reliability, validity, and/or effectiveness of rubrics in higher education and provided the rubrics themselves for inspection. This allows for the investigation of several research questions, including:

(1) What are the types and quality of the rubrics studied in higher education?

(2) Are there any relationships between the type and quality of these rubrics and reported reliability, validity, and/or effects on learning and motivation?

Question 1 was of interest because, after doing the previous review (Brookhart and Chen, 2015), I became aware that not all of the assessment tools in studies that claimed to be about rubrics were characterized by both criteria and performance level descriptions, as true rubrics are (Andrade, 2000). The purpose of Research Question 1 was simply to describe the distribution of assessment tool types in a systematic manner.

Question 2 was of interest from a learning perspective. Various types of assessment tools can be used reliably (Brookhart and Nitko, 2019) and be valid for specific purposes. An additional claim, however, is made about true rubrics. Because the performance level descriptions describe performance across a continuum of work quality, rubrics are intended to be useful for students' learning (Andrade, 2000; Brookhart, 2013). The criteria and performance level descriptions, together, can help students conceptualize their learning goal, focus on important aspects of learning and performance, and envision where they are in their learning and what they should try to improve (Falchikov and Boud, 1989). Thus I hypothesized that there would not be a relationship between type of rubric and conventional reliability and validity evidence. However, I did expect a relationship between type of rubric and the effects of rubrics on learning and motivation, expecting true descriptive rubrics to support student learning better than the other types of tools.

This study is a literature review. Study selection began with the database of studies selected for Brookhart and Chen (2015), a previous review of literature on rubrics from 2005 to 2013. Thirty-six studies from that review were done in the context of higher education. I conducted an electronic search of the ERIC database for articles published from 2013 to 2017, which yielded 10 additional studies, for a total of 46 studies. All 46 studies met three criteria: they (a) were conducted in higher education, (b) studied the rubrics themselves (i.e., did not just use the rubrics to study something else, or give a description of “how-to-do-rubrics”), and (c) included the rubrics in the article.

There are two reasons for limiting the studies to the higher education context. One, most published studies of rubrics have been conducted in higher education. I do not think this means fewer rubrics are being used in the K-12 context; I observe a lot of rubric use in K-12. Higher education users, however, are more likely to do a formal review of some kind and publish their results. Thus the number of available studies was large enough to support a review. Two, given that more published information on rubrics exists in higher education than K-12, limiting the review to higher education holds constant one possible source of complexity in understanding rubric use, because all of the students are adult learners. Rubrics used with K-12 students must be written at an appropriate developmental or educational level. The reason for limiting the studies to ones that included a copy of the rubrics in the article was that the analysis for this review required classifying the type and characteristics of the rubrics themselves.

Information about the 46 studies was entered into a spreadsheet. Information noted about the studies included country, level (undergraduate or graduate), type (rubric, rating scale, or point scheme), how the rubric considered criteria (analytic or holistic), whether the performance level descriptors were truly descriptive or used rating scale and/or numerical language in the levels, type of construct assessed by the rubrics (cognitive or behavioral), whether the rubrics were used with students or just by instructors for grading, sample, study method (e.g., case study, quasi-experimental), and findings. Descriptive and summary information about these classifications and study descriptions was used to address the research questions.

As an example of what is meant by descriptive language in a rubric, consider this excerpt from Prins et al. (2016). This is the performance level description for Level 3 of the criterion Manuscript Structure from a rubric for research theses (p. 133):

All elements are logically connected and keypoints within sections are organized. Research questions, hypotheses, research design, results, inferences and evaluations are related and form a consistent and concise argumentation.

Notice that a key characteristic of the language in this performance level description is that it describes the work. Thus for students who aspire to this high level, the rubric depicts for them what their work needs to look like in order to reach that goal.

In contrast, if performance level descriptions are written in evaluative language (for example, if the performance level description above had read, “The paper shows excellent manuscript structure”), the rubric does not give students the information they need to further their learning. Rubrics written in evaluative language do not give students a depiction of work at that level and, therefore, do not provide a clear description of the learning goal. An example of evaluative language used in a rubric can be found in the performance level descriptions for one of the criteria of an oral communication rubric (Avanzino, 2010, p. 109). This is the performance level description for Level 2 (Adequate) on the criterion of Delivery:

Speaker's delivery style/use of notes (manuscript or extemporaneous) is average; inconsistent focus on audience.

Notice that the key word in the first part of the performance level description, “average,” does not give any information to the student about what average delivery looks like in regard to style and use of notes. The second part of the performance level description, “inconsistent focus on audience,” is descriptive and gives students information about what Level 2 performance looks like in regard to audience focus.

Results and Discussion

The 46 studies yielded 51 different rubrics because several studies included more than one rubric. The two sections below take up results for each research question in turn.

Type and Quality of Rubrics

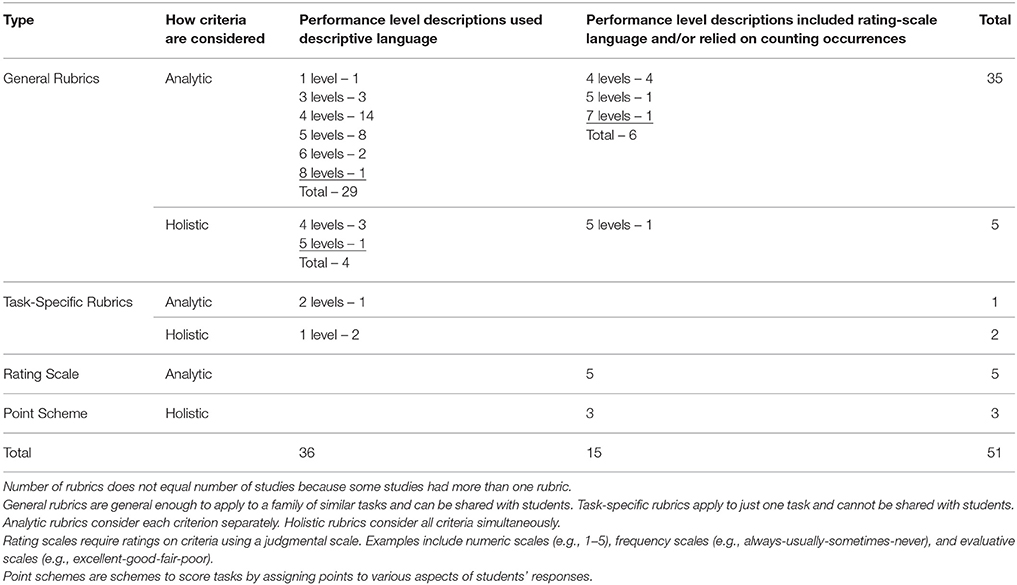

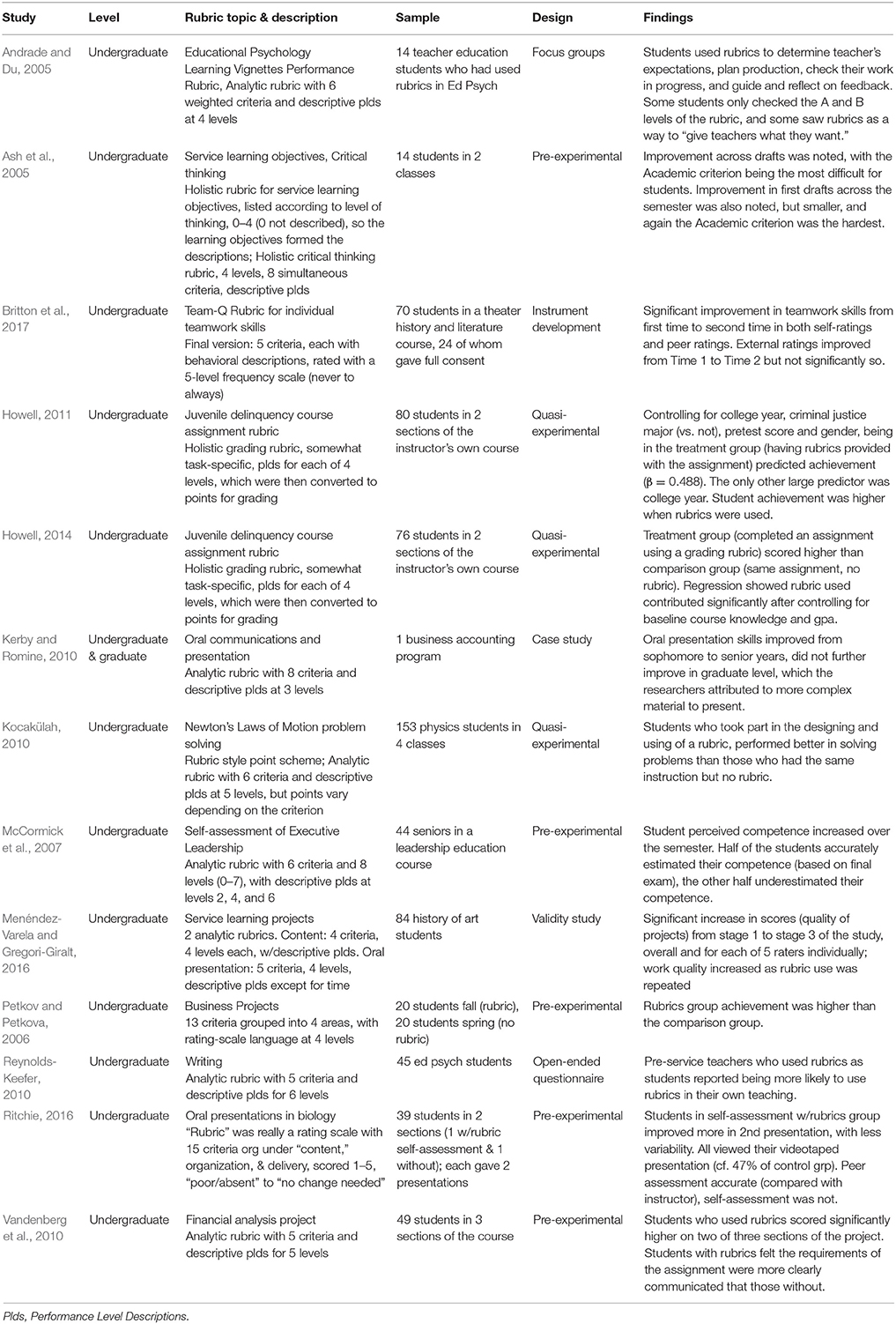

Table 1 displays counts of the type and quality of rubrics found in the studies. Most of the rubrics (29 out of 51, 57%) were analytic, descriptive rubrics. This means they considered the criteria separately, requiring a separate decision about work quality for each criterion. In addition, it means that the performance level descriptions used descriptive, as opposed to evaluative, language, which is expected to be more supportive of learning. Most commonly, these rubrics described four (14) or five (8) performance levels.

Table 1. Types of rubrics used in studies of rubrics in higher education.

Four of the 51 rubrics (8%) were holistic, descriptive rubrics. This means they considered the criteria simultaneously, requiring one decision about work quality across all criteria at once. In addition, the performance level descriptions used the desired descriptive language.

Three of the rubrics were descriptive and task-specific. One of these was an analytic rubric and two were holistic rubrics. None of the three could be shared with students, because they would “give away” answers. Such rubrics are more useful for grading than for formative assessment that supports learning. This does not necessarily mean these rubrics were of poor quality, because they served the grading function for which they were designed well. However, they represent a missed opportunity to support learning as well as grading.

A few of the rubrics were not written in a descriptive manner. Six of the analytic rubrics and one of the holistic rubrics used rating scale language and/or listed counts of occurrences of elements in the work, instead of describing the quality of student learning and performance. Thus 7 out of 51 (14%) of the rubrics were not of the quality that is expected to be best for student learning (Arter and McTighe, 2001; Arter and Chappuis, 2006; Andrade, 2010; Brookhart, 2013).

Finally, eight of the 51 tools (16%) were not rubrics at all but rather rating scales (5) or point schemes for grading (3). It is possible that the authors were not aware of the more nuanced meaning of “rubric” currently used by educators and used the term in a more generic way to mean any scoring scheme.
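As an arithmetic check on the counts reported in this section, the Python sketch below tallies the five categories of tools. It assumes, as the totals suggest, that the categories are mutually exclusive; the category labels are paraphrases, and the 6% share for task-specific rubrics is computed here rather than reported in the text.

```python
# Counts reported in the text for the 51 assessment tools (labels paraphrased).
counts = {
    "analytic, descriptive": 29,
    "holistic, descriptive": 4,
    "descriptive, task-specific": 3,
    "rating-scale language or counts of elements": 7,
    "not rubrics (5 rating scales + 3 point schemes)": 5 + 3,
}

total = sum(counts.values())
print(total)  # 51

# Share of all tools in each category, rounded to the nearest whole percent.
percents = {category: round(100 * n / total) for category, n in counts.items()}
for category, pct in percents.items():
    print(f"{category}: {counts[category]}/{total} = {pct}%")

# Rounding each share separately makes the percentages sum to 101, not 100.
print(sum(percents.values()))  # 101
```

The category counts account for all 51 tools, and the rounded shares (57%, 8%, 14%, 16%) match those stated above.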

As the heart of Research Question 1 was about the potential of the rubrics used to contribute to student learning, I also coded the studies according to whether the rubrics were used with students or whether they were just used by instructors for grading. Of the 46 studies, 26 (56%) reported using the rubrics with students and 20 (43%) did not use rubrics with students but rather used them only for grading.

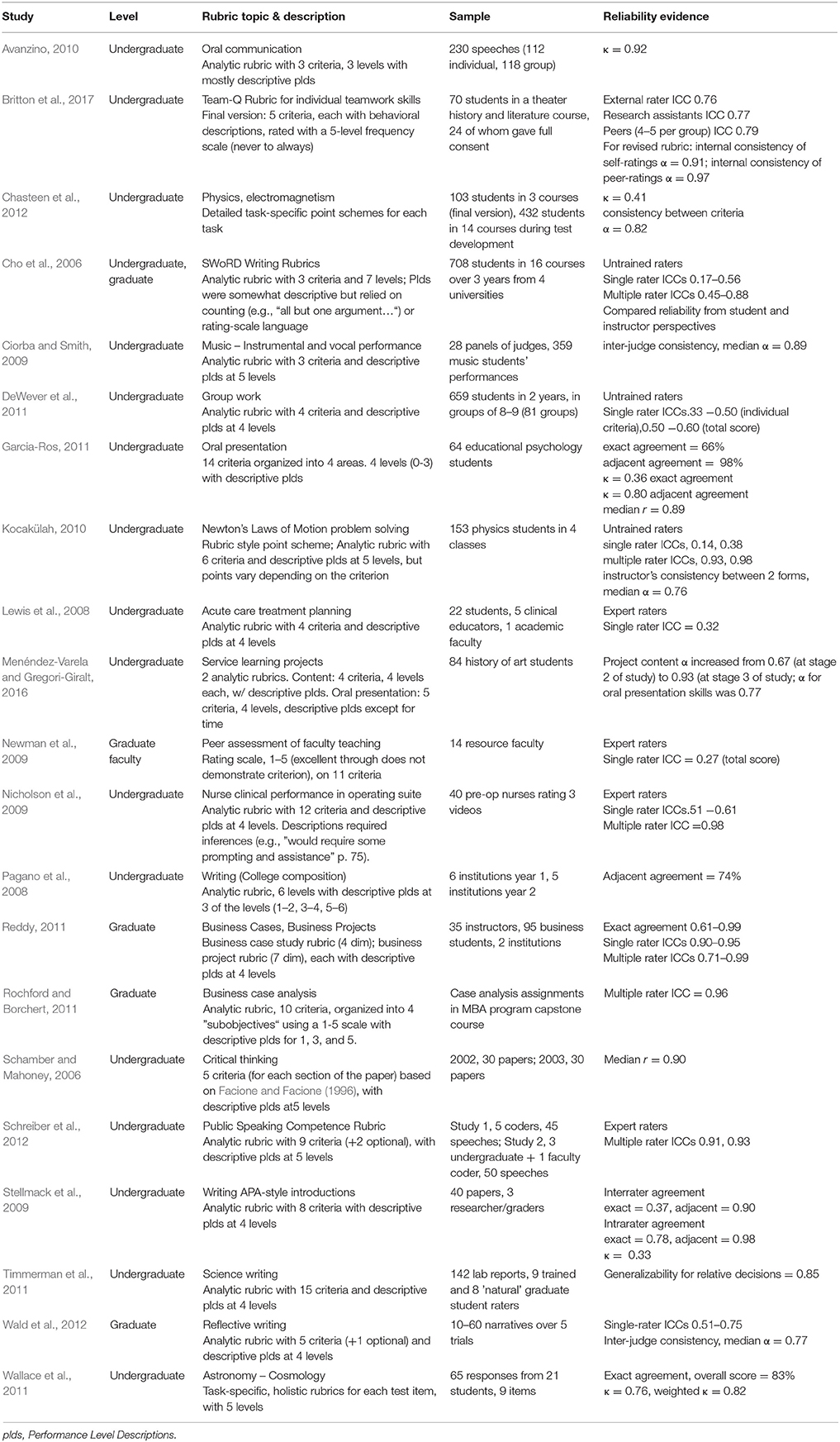

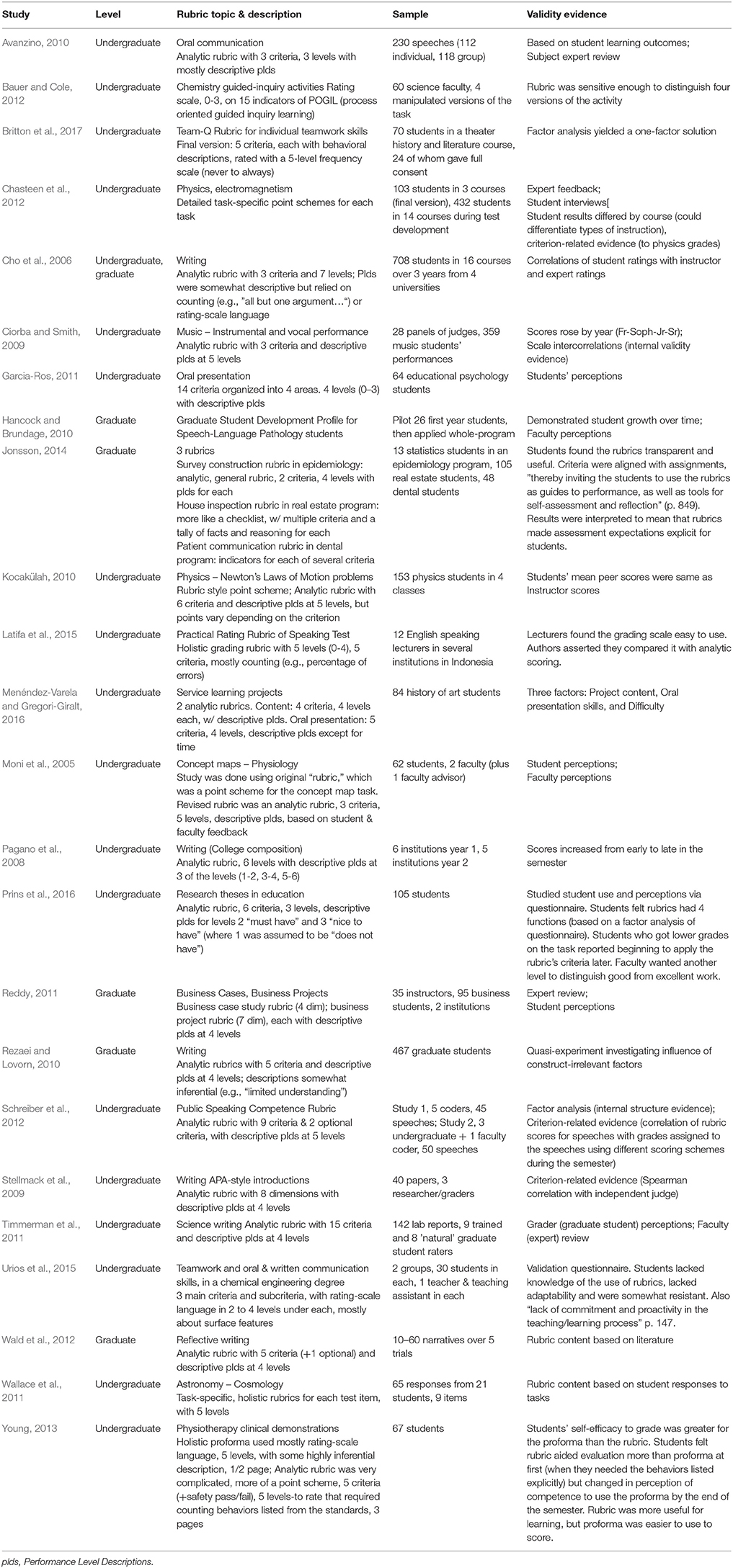

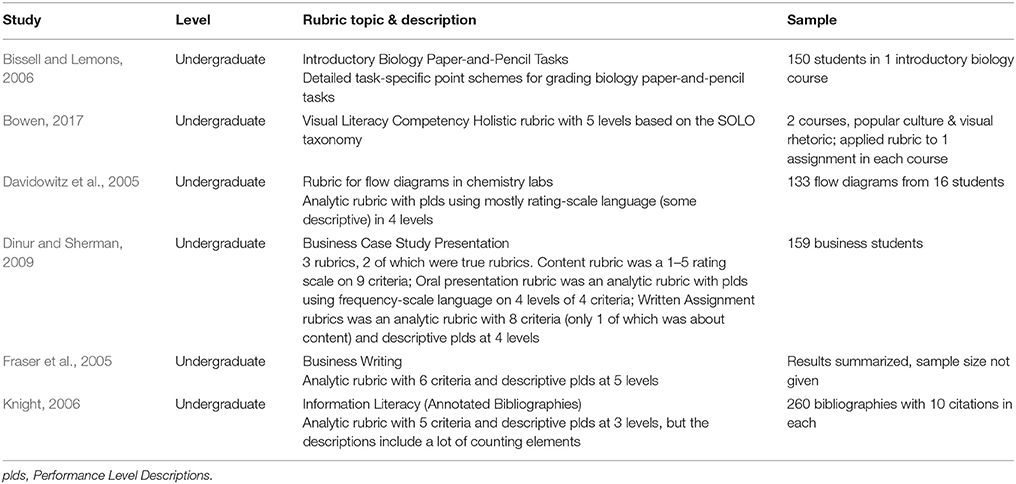

Relation of Rubric Type to Reliability, Validity, and Learning

Different studies reported different characteristics of their rubrics. I charted studies that reported evidence for the reliability of information from rubrics (Table 2) and the validity of information from rubrics (Table 3). For the sake of completeness, Table 4 lists six studies that presented their work with rubrics in a descriptive case-study style that did not fit easily into Table 2, Table 3, or Table 5 (below), which covers the effects of rubrics on learning. With the inclusion of Table 4, readers have descriptions of all 51 rubrics in all 46 studies reported under Research Question 1.

Table 2. Reliability evidence for rubrics.

Table 3. Validity evidence for rubrics.

Table 4. Descriptive case studies about developing and using rubrics.

Table 5. Studies of the effects of rubric use on student learning and motivation to learn.

Reliability was most commonly studied as inter-rater reliability (arguably the most important kind for rubrics, because judgment is involved in matching student work with performance level descriptions) or as internal consistency among criteria. Construct validity was addressed with a variety of methods, from expert review to factor analysis; some studies also addressed consequential evidence for validity with student or faculty questionnaires. No discernible patterns indicated that one form of rubric was preferable to another with regard to reliability or validity. Although this conforms to my hypothesis, the result may also be partly due to the fact that most of the studies reported positive results and experiences with rubrics, no matter what type of rubric was used.
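Inter-rater reliability can be quantified in several ways, and the reviewed studies did not all use the same index. As one common example, assuming two raters each assign a rubric level to the same pieces of work, the Python sketch below computes exact percent agreement and Cohen's kappa, which adjusts agreement for the agreement expected by chance. The ratings are invented for illustration and are not data from any study in this review.

```python
from collections import Counter


def percent_agreement(a: list[int], b: list[int]) -> float:
    """Proportion of works to which both raters assigned the same level."""
    if len(a) != len(b) or not a:
        raise ValueError("ratings must be non-empty and paired")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[int], b: list[int]) -> float:
    """Cohen's kappa = (p_o - p_e) / (1 - p_e) for two raters."""
    n = len(a)
    p_o = percent_agreement(a, b)  # observed agreement
    freq_a, freq_b = Counter(a), Counter(b)
    # Expected chance agreement: for each level, the product of the two
    # raters' marginal proportions, summed over levels.
    p_e = sum(freq_a[level] * freq_b[level] for level in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical levels (1-4) that two raters gave the same ten papers.
rater_1 = [4, 3, 3, 2, 4, 1, 2, 3, 4, 2]
rater_2 = [4, 3, 2, 2, 4, 1, 2, 3, 3, 2]

print(percent_agreement(rater_1, rater_2))       # 0.8
print(round(cohens_kappa(rater_1, rater_2), 2))  # 0.72
```

Kappa (about 0.72) is lower than raw agreement (0.80) because some agreement would occur by chance alone. For ordered rubric levels, a weighted kappa, which gives partial credit for near misses, is often preferred; this sketch does not implement it.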

Table 5 describes 13 studies of the effects of rubrics on learning or motivation, all with positive results. Learning was most commonly operationalized as improvement in student work. Motivation was typically operationalized as student responses to questionnaires. In these studies as well, no discernible pattern was found regarding type of rubric. Despite the logical and learning-based arguments made in the literature and summarized in the introduction to this article, rubrics with descriptive and with evaluative performance level descriptions both led to at least some positive results for students. Eight of these studies used descriptive rubrics and five used evaluative rubrics. It is possible that the lack of association between type of rubric and study findings is a result of publication bias, because most of the studies had good things to say about rubrics and their effects. The small sample size (13 studies) may also be an issue.

Conclusions

Rubrics are becoming increasingly common as part of assessment in higher education. Evidence for that claim is simply the number of published studies investigating rubrics and the assertions made in those studies about rising interest in them.

Research Question 1 asked about the type and quality of rubrics published in studies of rubrics in higher education. The number of criteria varies widely depending on the rubric and its purpose. Three, four, and five are the most common numbers of levels. While most of the rubrics are descriptive (the type of rubric generally expected to be most useful for learning), many are not. Perhaps most surprising, and potentially troubling, is that only 56% of the studies reported using rubrics with students. If all that is required is a grading scheme, traditional point schemes or rating scales are easier for instructors to use. The value of a rubric lies in its formative potential (Panadero and Jonsson, 2013), where the same tool that students can use to learn and monitor their learning is then used for grading and final evaluation by instructors.

Research Question 2 asked whether rubric type and quality were related to measurement quality (reliability and validity) or to effects on learning and motivation to learn. Among studies in this review, reported reliability and validity were not related to type of rubric, and neither were reported effects on learning and/or motivation. The discussion above speculated that part of the reason for these findings might be publication bias: perhaps only studies with good effects, whatever the type of rubric they used, were reported.

However, we should not dismiss all the results with a hand-wave about publication bias. All of the tools in the studies of rubrics (true rubrics, rating scales, checklists) had criteria. The differences were in the type of scale and scale descriptions used. Criteria lay out for students and instructors what is expected in student work and, by extension, what it looks like when evidence of intended learning has been produced. Several of the articles stated explicitly that the point of rubrics was to make assignment expectations explicit (e.g., Andrade and Du, 2005; Fraser et al., 2005; Reynolds-Keefer, 2010; Vandenberg et al., 2010; Jonsson, 2014; Prins et al., 2016). The criteria are the assignment expectations: the qualities the final work should display. The performance level descriptions instantiate those expectations at different levels of competence. Thus, one firm conclusion from this review is that appropriate criteria are the key to effective rubrics. Trivial or surface-level criteria will not draw learning goals for students as clearly as substantive criteria. Students will try to produce what is expected of them. If the criterion is simply having or counting something in their work (e.g., “has 5 paragraphs”), students need not pay attention to the quality of what their work contains. If the criterion is substantive (e.g., “states a compelling thesis”), attention to quality becomes part of the work.

It is likely that appropriate performance level descriptions are also key for effective rubrics, but this review did not establish this. A major recommendation for future research is to design studies that investigate how students use the performance level descriptions as they work, in monitoring their work, and in their self-assessment judgments. Future research might also focus on two additional characteristics of rubrics (Dawson, 2017): users and uses, and judgment complexity. Several studies in this review established that students use rubrics to make expectations explicit. However, rubrics were used with students in only 56% of the studies, missing the opportunity to take advantage of this important rubric function. Therefore, it seems important to seek additional understanding of users and uses of rubrics. In this review, judgment complexity was a clear issue for one study (Young, 2013). In that study, a complex rubric was found more useful for learning, but a holistic rating scale was easier to use once the learning had occurred. This hint from one study suggests that different degrees of judgment complexity might be more useful at different stages of learning.

Rubrics are one way to make learning expectations explicit for learners. Appropriate criteria are key. More research is needed that establishes how performance level descriptions function during learning and, more generally, how students use rubrics for learning, not just that they do.

Author Contributions

The author confirms being the sole contributor of this work and approved it for publication.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Andrade, H. G. (2000). Using rubrics to promote thinking and learning. Educational Leadership 57, 13–18. Available online at: http://www.ascd.org/publications/educational-leadership/feb00/vol57/num05/Using-Rubrics-to-Promote-Thinking-and-Learning.aspx

Andrade, H., and Du, Y. (2005). Student perspectives on rubric-referenced assessment. Pract. Assess. Res. Eval. 10, 1–11. Available online at: http://pareonline.net/pdf/v10n3.pdf

Andrade, H., and Heritage, M. (2017). Using Assessment to Enhance Learning, Achievement, and Academic Self-Regulation. New York, NY: Routledge.

Andrade, H. L. (2010). “Students as the definitive source of formative assessment: academic self-assessment and the self-regulation of learning,” in Handbook of Formative Assessment, eds H. L. Andrade and G. J. Cizek (New York, NY: Routledge), 90–105.

Arter, J. A., and Chappuis, J. (2006). Creating and Recognizing Quality Rubrics. Boston: Pearson.

Arter, J. A., and McTighe, J. (2001). Scoring Rubrics in the Classroom: Using Performance Criteria for Assessing and Improving Student Performance. Thousand Oaks, CA: Corwin.

Ash, S. L., Clayton, P. H., and Atkinson, M. P. (2005). Integrating reflection and assessment to capture and improve student learning. Mich. J. Comm. Serv. Learn. 11, 49–60. Available online at: http://hdl.handle.net/2027/spo.3239521.0011.204

Avanzino, S. (2010). Starting from scratch and getting somewhere: assessment of oral communication proficiency in general education across lower and upper division courses. Commun. Teach. 24, 91–110. doi: 10.1080/17404621003680898

Bauer, C. F., and Cole, R. (2012). Validation of an assessment rubric via controlled modification of a classroom activity. J. Chem. Educ. 89, 1104–1108. doi: 10.1021/ed2003324

Bell, A., Mladenovic, R., and Price, M. (2013). Students' perceptions of the usefulness of marking guides, grade descriptors and annotated exemplars. Assess. Eval. High. Educ. 38, 769–788. doi: 10.1080/02602938.2012.714738

Bissell, A. N., and Lemons, P. R. (2006). A new method for assessing critical thinking in the classroom. BioScience 56, 66–72. doi: 10.1641/0006-3568(2006)056[0066:ANMFAC]2.0.CO;2

Bowen, T. (2017). Assessing visual literacy: a case study of developing a rubric for identifying and applying criteria to undergraduate student learning. Teach. High. Educ. 22, 705–719. doi: 10.1080/13562517.2017.1289507

Britton, E., Simper, N., Leger, A., and Stephenson, J. (2017). Assessing teamwork in undergraduate education: a measurement tool to evaluate individual teamwork skills. Assess. Eval. High. Educ. 42, 378–397. doi: 10.1080/02602938.2015.1116497

Brookhart, S. M. (2013). How to Create and Use Rubrics for Formative Assessment and Grading. Alexandria, VA: ASCD.

Brookhart, S. M., and Chen, F. (2015). The quality and effectiveness of descriptive rubrics. Educ. Rev. 67, 343–368. doi: 10.1080/00131911.2014.929565

Brookhart, S. M., and Nitko, A. J. (2019). Educational Assessment of Students, 8th Edn. Boston, MA: Pearson.

Chasteen, S. V., Pepper, R. E., Caballero, M. D., Pollock, S. J., and Perkins, K. K. (2012). Colorado Upper-Division Electrostatics diagnostic: a conceptual assessment for the junior level. Phys. Rev. Spec. Top. Phys. Educ. Res. 8:020108. doi: 10.1103/PhysRevSTPER.8.020108

Cho, K., Schunn, C. D., and Wilson, R. W. (2006). Validity and reliability of scaffolded peer assessment of writing from instructor and student perspectives. J. Educ. Psychol. 98, 891–901. doi: 10.1037/0022-0663.98.4.891

Ciorba, C. R., and Smith, N. Y. (2009). Measurement of instrumental and vocal undergraduate performance juries using a multidimensional assessment rubric. J. Res. Music Educ. 57, 5–15. doi: 10.1177/0022429409333405

Davidowitz, B., Rollnick, M., and Fakudze, C. (2005). Development and application of a rubric for analysis of novice students' laboratory flow diagrams. Int. J. Sci. Educ. 27, 43–59. doi: 10.1080/0950069042000243754

Dawson, P. (2017). Assessment rubrics: towards clearer and more replicable design, research and practice. Assess. Eval. High. Educ. 42, 347–360. doi: 10.1080/02602938.2015.1111294

De Wever, B., Van Keer, H., Schellens, T., and Valcke, M. (2011). Assessing collaboration in a wiki: the reliability of university students' peer assessment. Internet High. Educ. 14, 201–206. doi: 10.1016/j.iheduc.2011.07.003

Dinur, A., and Sherman, H. (2009). Incorporating outcomes assessment and rubrics into case instruction. J. Behav. Appl. Manag. 10, 291–311.

Facione, N. C., and Facione, P. A. (1996). Externalizing the critical thinking in knowledge development and clinical judgment. Nurs. Outlook 44, 129–136. doi: 10.1016/S0029-6554(06)80005-9

Falchikov, N., and Boud, D. (1989). Student self-assessment in higher education: a meta-analysis. Rev. Educ. Res. 59, 395–430.

Fraser, L., Harich, K., Norby, J., Brzovic, K., Rizkallah, T., and Loewy, D. (2005). Diagnostic and value-added assessment of business writing. Bus. Commun. Q. 68, 290–305. doi: 10.1177/1080569905279405

Garcia-Ros, R. (2011). Analysis and validation of a rubric to assess oral presentation skills in university contexts. Electr. J. Res. Educ. Psychol. 9, 1043–1062.

Hancock, A. B., and Brundage, S. B. (2010). Formative feedback, rubrics, and assessment of professional competency through a speech-language pathology graduate program. J. All. Health 39, 110–119.

Hattie, J., and Timperley, H. (2007). The power of feedback. Rev. Educ. Res. 77, 81–112. doi: 10.3102/003465430298487

Howell, R. J. (2011). Exploring the impact of grading rubrics on academic performance: findings from a quasi-experimental, pre-post evaluation. J. Excell. Coll. Teach. 22, 31–49.

Howell, R. J. (2014). Grading rubrics: hoopla or help? Innov. Educ. Teach. Int. 51, 400–410. doi: 10.1080/14703297.2013.785252

Jonsson, A. (2014). Rubrics as a way of providing transparency in assessment. Assess. Eval. High. Educ. 39, 840–852. doi: 10.1080/02602938.2013.875117

Jonsson, A., and Svingby, G. (2007). The use of scoring rubrics: reliability, validity and educational consequences. Educ. Res. Rev. 2, 130–144. doi: 10.1016/j.edurev.2007.05.002

Kerby, D., and Romine, J. (2010). Develop oral presentation skills through accounting curriculum design and course-embedded assessment. J. Educ. Bus. 85, 172–179. doi: 10.1080/08832320903252389

Knight, L. A. (2006). Using rubrics to assess information literacy. Ref. Serv. Rev. 34, 43–55. doi: 10.1108/00907320610640752

Kocakülah, M. (2010). Development and application of a rubric for evaluating students' performance on Newton's Laws of Motion. J. Sci. Educ. Technol. 19, 146–164. doi: 10.1007/s10956-009-9188-9

Latifa, A., Rahman, A., Hamra, A., Jabu, B., and Nur, R. (2015). Developing a practical rating rubric of speaking test for university students of English in Parepare, Indonesia. Engl. Lang. Teach. 8, 166–177. doi: 10.5539/elt.v8n6p166

Lewis, L. K., Stiller, K., and Hardy, F. (2008). A clinical assessment tool used for physiotherapy students—is it reliable? Physiother. Theory Pract. 24, 121–134. doi: 10.1080/09593980701508894

McCormick, M. J., Dooley, K. E., Lindner, J. R., and Cummins, R. L. (2007). Perceived growth versus actual growth in executive leadership competencies: an application of the stair-step behaviorally anchored evaluation approach. J. Agric. Educ. 48, 23–35. doi: 10.5032/jae.2007.02023

Menéndez-Varela, J., and Gregori-Giralt, E. (2016). The contribution of rubrics to the validity of performance assessment: a study of the conservation-restoration and design undergraduate degrees. Assess. Eval. High. Educ. 41, 228–244. doi: 10.1080/02602938.2014.998169

Moni, R. W., Beswick, E., and Moni, K. B. (2005). Using student feedback to construct an assessment rubric for a concept map in physiology. Adv. Physiol. Educ. 29, 197–203. doi: 10.1152/advan.00066.2004

Newman, L. R., Lown, B. A., Jones, R. N., Johansson, A., and Schwartzstein, R. M. (2009). Developing a peer assessment of lecturing instrument: lessons learned. Acad. Med. 84, 1104–1110. doi: 10.1097/ACM.0b013e3181ad18f9

Nicholson, P., Gillis, S., and Dunning, A. M. (2009). The use of scoring rubrics to determine clinical performance in the operating suite. Nurse Educ. Today 29, 73–82. doi: 10.1016/j.nedt.2008.06.011

Nordrum, L., Evans, K., and Gustafsson, M. (2013). Comparing student learning experiences of in-text commentary and rubric-articulated feedback: strategies for formative assessment. Assess. Eval. High. Educ. 38, 919–940. doi: 10.1080/02602938.2012.758229

Pagano, N., Bernhardt, S. A., Reynolds, D., Williams, M., and McCurrie, M. (2008). An inter-institutional model for college writing assessment. Coll. Composition Commun. 60, 285–320.

Panadero, E., and Jonsson, A. (2013). The use of scoring rubrics for formative assessment purposes revisited: a review. Educ. Res. Rev. 9, 129–144. doi: 10.1016/j.edurev.2013.01.002

Petkov, D., and Petkova, O. (2006). Development of scoring rubrics for IS projects as an assessment tool. Issues Informing Sci. Inform. Technol. 3, 499–510. doi: 10.28945/910

Prins, F. J., de Kleijn, R., and van Tartwijk, J. (2016). Students' use of a rubric for research theses. Assess. Eval. High. Educ. 42, 128–150. doi: 10.1080/02602938.2015.1085954

Reddy, M. Y. (2011). Design and development of rubrics to improve assessment outcomes: a pilot study in a master's level Business program in India. Qual. Assur. Educ. 19, 84–104. doi: 10.1108/09684881111107771

Reddy, Y., and Andrade, H. (2010). A review of rubric use in higher education. Assess. Eval. High. Educ. 35, 435–448. doi: 10.1080/02602930902862859

Reynolds-Keefer, L. (2010). Rubric-referenced assessment in teacher preparation: an opportunity to learn by using. Pract. Assess. Res. Eval. 15, 1–9. Available online at: http://pareonline.net/getvn.asp?v=15&n=8

Rezaei, A., and Lovorn, M. (2010). Reliability and validity of rubrics for assessment through writing. Assess. Writing 15, 18–39. doi: 10.1016/j.asw.2010.01.003

Ritchie, S. M. (2016). Self-assessment of video-recorded presentations: does it improve skills? Act. Learn. High. Educ. 17, 207–221. doi: 10.1177/1469787416654807

Rochford, L., and Borchert, P. S. (2011). Assessing higher level learning: developing rubrics for case analysis. J. Educ. Bus. 86, 258–265. doi: 10.1080/08832323.2010.512319

Sadler, D. R. (2014). The futility of attempting to codify academic achievement standards. High. Educ. 67, 273–288. doi: 10.1007/s10734-013-9649-1

Schamber, J. F., and Mahoney, S. L. (2006). Assessing and improving the quality of group critical thinking exhibited in the final projects of collaborative learning groups. J. Gen. Educ. 55, 103–137. doi: 10.1353/jge.2006.0025

Schreiber, L. M., Paul, G. D., and Shibley, L. R. (2012). The development and test of the public speaking competence rubric. Commun. Educ. 61, 205–233. doi: 10.1080/03634523.2012.670709

Stellmack, M. A., Konheim-Kalkstein, Y. L., Manor, J. E., Massey, A. R., and Schmitz, J. P. (2009). An assessment of reliability and validity of a rubric for grading APA-style introductions. Teach. Psychol. 36, 102–107. doi: 10.1080/00986280902739776

Timmerman, B. E. C., Strickland, D. C., Johnson, R. L., and Payne, J. R. (2011). Development of a ‘universal’ rubric for assessing undergraduates' scientific reasoning skills using scientific writing. Assess. Eval. High. Educ. 36, 509–547. doi: 10.1080/02602930903540991

Torrance, H. (2007). Assessment as learning? How the use of explicit learning objectives, assessment criteria and feedback in post-secondary education and training can come to dominate learning. Assess. Educ. 14, 281–294. doi: 10.1080/09695940701591867

Urios, M. I., Rangel, E. R., Tomàs, R. B., Salvador, J. T., García, F. C., and Piquer, C. F. (2015). Generic skills development and learning/assessment process: use of rubrics and student validation. J. Technol. Sci. Educ. 5, 107–121. doi: 10.3926/jotse.147

Vandenberg, A., Stollak, M., McKeag, L., and Obermann, D. (2010). GPS in the classroom: using rubrics to increase student achievement. Res. High. Educ. J. 9, 1–10. Available online at: http://www.aabri.com/manuscripts/10522.pdf

Wald, H. S., Borkan, J. M., Taylor, J. S., Anthony, D., and Reis, S. P. (2012). Fostering and evaluating reflective capacity in medical education: developing the REFLECT rubric for assessing reflective writing. Acad. Med. 87, 41–50. doi: 10.1097/ACM.0b013e31823b55fa

Wallace, C. S., Prather, E. E., and Duncan, D. K. (2011). A study of general education Astronomy students' understandings of cosmology. Part II. Evaluating four conceptual cosmology surveys: a classical test theory approach. Astron. Educ. Rev. 10:010107. doi: 10.3847/AER2011030

Young, C. (2013). Initiating self-assessment strategies in novice physiotherapy students: a method case study. Assess. Eval. High. Educ. 38, 998–1011. doi: 10.1080/02602938.2013.771255

Keywords: criteria, rubrics, performance level descriptions, higher education, assessment expectations

Citation: Brookhart SM (2018) Appropriate Criteria: Key to Effective Rubrics. Front. Educ. 3:22. doi: 10.3389/feduc.2018.00022

Received: 01 February 2018; Accepted: 27 March 2018; Published: 10 April 2018.

Copyright © 2018 Brookhart. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Susan M. Brookhart

This article is part of the Research Topic

Transparency in Assessment – Exploring the Influence of Explicit Assessment Criteria

Case Studies

Case studies (also called "case histories") are descriptions of real situations that provide a context for engineers and others to explore decision-making in the face of socio-technical issues, such as environmental, political, and ethical issues. Case studies typically involve complex issues where there is no single correct answer; a student analyzing a case study may be asked to select the "best" answer given the situation [1]. A case study is not a demonstration of a valid or "best" decision or solution. On the contrary, unsuccessful or incomplete attempts at a solution are often included in the written account [2].

The process of analyzing a case study encourages several learning tasks:

- Exploring the nature of a problem and the circumstances that affect a decision or solution

- Learning about others' viewpoints and how they may be taken into account

- Learning about one's own viewpoint

- Defining one's own priorities

- Making one's own decisions to solve a problem

- Predicting outcomes and consequences [1]

Student Learning Outcomes in Ethics

Most available engineering case studies pertain to engineering ethics. After a two-year study of ethics education sponsored by the Hastings Center, an interdisciplinary group agreed on five main outcomes for student learning in ethics:

- Sensitivity to ethical issues, sometimes called "developing a moral imagination," or the awareness of the needs of others and that there is an ethical point of view;

- Recognition of ethical issues, or the ability to see the ethical implications of specific situations and choices;

- Ability to analyze and critically evaluate ethical dilemmas, including an understanding of competing values and the ability to scrutinize options for resolution;

- Ethical responsibility, or the ability to make a decision and take action;

- Tolerance for ambiguity, or the recognition that there may be no single ideal solution to ethically problematic situations [2].

These outcomes would make an excellent list of attributes for designing a rubric for a case analysis.

Ideas for Case Study Assignments

To assign a case analysis, an instructor needs:

- skill in analyzing a case (and the ability to model that process for students)

- skill in managing classroom discussion of a case

- a case study

- a specific assignment that will guide students' case analyses, and

- a rubric for scoring students' case analyses.

Below are ideas for each of these five aspects of teaching with case studies. It can also help to consider how not to teach a case study.

1. Skill in analyzing a case

For many engineering instructors, analyzing cases is unfamiliar. Examining completed case analyses could help develop case analysis skills. As an exercise for building skill in analyzing cases, use the generic guidelines for case analysis assignments (#4 below) to carefully review some completed case analyses. A few completed case analyses are available:

Five example analyses of an engineering case study

Case study part 1 [Unger, S. The BART case: ethics and the employed engineer. IEEE CSIT Newsletter, Issue 4, September 1973, p. 6.]

Case study part 2 [Friedlander, G. The case of the three engineers vs. BART. IEEE Spectrum, October 1974, pp. 69–76.]

Case study part 3 [Friedlander, G. Bigger Bugs in BART? IEEE Spectrum, March 1973, pp. 32, 35, 37.]

Case study with an example analysis

2. Skill in managing classroom discussion of a case

Managing classroom discussion of a case study requires planning.

Suggestions for using engineering cases in the classroom

Guidelines for leading classroom discussion of case studies

3. Case studies

Case studies should be complex and realistic enough to be challenging, yet manageable within the available time. Creating case studies is time-consuming, but a large number of engineering case studies are available online.

Online Case Libraries

Case Studies in Technology, Ethics, Environment, and Public Policy

Teaching Engineering Ethics: A Case Study Approach

The Online Ethics Center for Engineering and Science

Ethics Cases

The Engineering Case Library

Cases and Teaching Tips

4. A specific assignment that will guide students' case analyses

There are several types of case study assignment:

Nine approaches to using case studies for teaching

Written Case Analysis

Case Discussions

Case analyses typically include answering questions such as:

What kinds of problems are inherent in the situation?

Describe the socio-technical situation sufficiently to enable listeners (or readers) to understand the situation faced by the central character in the case.

Identify and characterize the issue or conflict central to the situation. Identify the parties involved in the situation. Describe the origins, structure, and trajectory of the conflict.

Evaluate the strengths and weaknesses of the arguments made by each party.

How would these problems affect the outcomes of the situation?

Describe the possible actions that could have been taken by the central character in the case.

Describe, for each possible action, what the potential outcomes might be for each party involved.

Describe what action was actually taken and the outcomes for each party involved.

How would you solve these problems? Why?

Describe the action you would take if you were the central character in the case. Explain why.

What should the central character in the situation do? Why?