MERRIMACK COLLEGE MCQUADE LIBRARY

MPA 6200: Research Methods and Evaluation (Nguyen)


Writing a Literature Review


- Literature Review Class Activity

- Lit Review Matrix

- Lit Review Organizer

- Lit Review Worksheet 1

- Lit Review Worksheet 2

- Lit Review Worksheet 3

- Lit Review Template

To complete an activity, click its link above, select File > Make a Copy, and work on your own copy.


Online Resources

- Basics of a Literature Review (Merrimack College's Writing Center)

- Library Guide to Capstone Literature Reviews: Role of the Literature Review

- The Literature Review: A Few Tips on Writing It (University of Toronto)

- Lit Review Matrix: This one is customized for higher education students but may be helpful for others.

- Matrix Examples: This page from Walden University gives examples of different types of literature review matrices. A matrix can be very helpful for taking notes and preparing sources for your literature review.

- OWL's Literature Reviews

- Review of Literature (UW-Madison Writing Center)

- UNC at Chapel Hill's Literature Reviews

What Is a Literature Review?

A literature review is a survey of scholarly articles, books, or other sources that pertain to a specific topic, area of research, or theory. The literature review offers brief descriptions, summaries, and critical evaluations of each work, and does so in the form of a well-organized essay. Scholars often write literature reviews to provide an overview of the most significant recent literature published on a topic. They also use literature reviews to trace the evolution of certain debates or intellectual problems within a field. Even if a literature review is not a formal part of a research project, students should conduct an informal one so that they know what kind of scholarly work has been done previously on the topic that they have selected.

How Is a Literature Review Different from a Research Paper?

An academic research paper attempts to develop a new argument and typically has a literature review as one of its parts. In a research paper, the author uses the literature review to show how his or her new insights build upon and depart from existing scholarship. A literature review by itself does not try to make a new argument based on original research but rather summarizes, synthesizes, and critiques the arguments and ideas of others, and points to gaps in the current literature. Before writing a literature review, a student should look for a model from a relevant journal or ask the instructor to point to a good example.

Organizing a Literature Review

A successful literature review has three parts, organized as follows:

INTRODUCTION

- Defines and identifies the topic and establishes the reason for the literature review.

- Points to general trends in what has been published about the topic.

- Explains the criteria used in analyzing and comparing articles.

BODY OF THE REVIEW

- Groups articles into clusters, or subtopics, which may be organized chronologically, thematically, or methodologically.

- Proceeds in a logical order from cluster to cluster.

- Emphasizes the main findings or arguments of the articles in the student’s own words. Keeps quotations from sources to an absolute minimum.

CONCLUSION

- Summarizes the major themes that emerged in the review and identifies areas of controversy in the literature.

- Pinpoints strengths and weaknesses among the articles (innovative methods used, gaps in research, problems with theoretical frameworks, etc.).

- Concludes by formulating questions that need further research within the topic, and provides some insight into the relationship between that topic and the larger field of study or discipline.

Of the four examples of student writing in the synthesis visualization, only one shows good synthesis: the fourth column, Student D.


- Last Updated: May 9, 2024 3:32 PM

- URL: https://libguides.merrimack.edu/MPA6200_Nguyen

UConn Library

Literature Review: The What, Why and How-to Guide (Introduction)


What are Literature Reviews?

So, what is a literature review? "A literature review is an account of what has been published on a topic by accredited scholars and researchers. In writing the literature review, your purpose is to convey to your reader what knowledge and ideas have been established on a topic, and what their strengths and weaknesses are. As a piece of writing, the literature review must be defined by a guiding concept (e.g., your research objective, the problem or issue you are discussing, or your argumentative thesis). It is not just a descriptive list of the material available, or a set of summaries." Taylor, D. The literature review: A few tips on conducting it. University of Toronto Health Sciences Writing Centre.

Goals of Literature Reviews

What are the goals of creating a literature review? A literature review can be written to accomplish different aims:

- To develop a theory or evaluate an existing theory

- To summarize the historical or existing state of a research topic

- To identify a problem in a field of research

Baumeister, R. F., & Leary, M. R. (1997). Writing narrative literature reviews. Review of General Psychology, 1(3), 311-320.

What kinds of writing require a literature review?

- A research paper assigned in a course

- A thesis or dissertation

- A grant proposal

- An article intended for publication in a journal

All these instances require you to collect what has been written about your research topic so that you can demonstrate how your own research sheds new light on the topic.

Types of Literature Reviews

What kinds of literature reviews are written?

Narrative review: The purpose of this type of review is to describe the current state of research on a specific topic and to offer a critical analysis of the literature reviewed. Studies are grouped by research or theoretical categories, and themes and trends, strengths and weaknesses, and gaps are identified. The review ends with a conclusion that summarizes the state of research on the topic and the gaps identified and, if applicable, explains how the author's research will address those gaps and expand knowledge of the topic.

- Example: Predictors and Outcomes of U.S. Quality Maternity Leave: A Review and Conceptual Framework (DOI: 10.1177/08948453211037398)

Systematic review: "The authors of a systematic review use a specific procedure to search the research literature, select the studies to include in their review, and critically evaluate the studies they find." (p. 139). Nelson, L. K. (2013). Research in Communication Sciences and Disorders. Plural Publishing.

- Example: The effect of leave policies on increasing fertility: a systematic review (DOI: 10.1057/s41599-022-01270-w)

Meta-analysis: "Meta-analysis is a method of reviewing research findings in a quantitative fashion by transforming the data from individual studies into what is called an effect size and then pooling and analyzing this information. The basic goal in meta-analysis is to explain why different outcomes have occurred in different studies." (p. 197). Roberts, M. C., & Ilardi, S. S. (2003). Handbook of Research Methods in Clinical Psychology. Blackwell Publishing.

- Example: Employment Instability and Fertility in Europe: A Meta-Analysis (DOI: 10.1215/00703370-9164737)

Meta-synthesis: "Qualitative meta-synthesis is a type of qualitative study that uses as data the findings from other qualitative studies linked by the same or related topic." (p. 312). Zimmer, L. (2006). Qualitative meta-synthesis: A question of dialoguing with texts. Journal of Advanced Nursing, 53(3), 311-318.

- Example: Women’s perspectives on career successes and barriers: A qualitative meta-synthesis (DOI: 10.1177/05390184221113735)

Literature Reviews in the Health Sciences

- UConn Health subject guide on systematic reviews: An explanation of the different review types used in the health sciences literature, along with tools to help you find the right review type.


- Last Updated: Sep 21, 2022 2:16 PM

- URL: https://guides.lib.uconn.edu/literaturereview

Harvey Cushing/John Hay Whitney Medical Library


YSN Doctoral Programs: Steps in Conducting a Literature Review


What is a literature review?

A literature review is an integrated analysis -- not just a summary -- of scholarly writings and other relevant evidence related directly to your research question. That is, it represents a synthesis of the evidence that provides background information on your topic and shows an association between the evidence and your research question.

A literature review may be a standalone work or the introduction to a larger research paper, depending on the assignment. Rely heavily on the guidelines your instructor has given you.

Why is it important?

A literature review is important because it:

- Explains the background of research on a topic.

- Demonstrates why a topic is significant to a subject area.

- Discovers relationships between research studies/ideas.

- Identifies major themes, concepts, and researchers on a topic.

- Identifies critical gaps and points of disagreement.

- Discusses further research questions that logically come out of the previous studies.

APA 7 Style resources

APA Style Blog: for those harder-to-find answers

1. Choose a topic. Define your research question.

Your literature review should be guided by your central research question. The literature represents background and research developments related to a specific research question, interpreted and analyzed by you in a synthesized way.

- Make sure your research question is not too broad or too narrow. Is it manageable?

- Begin writing down terms that are related to your question. These will be useful for searches later.

- If you have the opportunity, discuss your topic with your professor and your classmates.

2. Decide on the scope of your review

How many studies do you need to look at? How comprehensive should it be? How many years should it cover?

- This may depend on your assignment. How many sources does the assignment require?

3. Select the databases you will use to conduct your searches.

Make a list of the databases you will search.

Where to find databases:

- Use the tabs on this guide

- Find other databases on the Nursing Information Resources web page

- More on the Medical Library web page

- ... and more on the Yale University Library web page

4. Conduct your searches to find the evidence. Keep track of your searches.

- Use the key words in your question, as well as synonyms for those words, as terms in your search. Use the database tutorials for help.

- Save the searches in the databases. This saves time when you want to redo or modify the searches. Saved searches are also a helpful guide if your searches are not finding any useful results.

- Review the abstracts of research studies carefully. This will save you time.

- Use the bibliographies and references of research studies you find to locate others.

- Check with your professor, or a subject expert in the field, if you are missing any key works in the field.

- Ask your librarian for help at any time.

- Use a citation manager, such as EndNote, as the repository for your citations. See the EndNote tutorials for help.

5. Review the literature.

Some questions to help you analyze the research:

- What was the research question of the study you are reviewing? What were the authors trying to discover?

- Was the research funded by a source that could influence the findings?

- What were the research methodologies? Analyze its literature review, the samples and variables used, the results, and the conclusions.

- Does the research seem to be complete? Could it have been conducted more soundly? What further questions does it raise?

- If there are conflicting studies, why do you think that is?

- How are the authors viewed in the field? Has this study been cited? If so, how has it been analyzed?

Tips:

- Review the abstracts carefully.

- Keep careful notes so that you may track your thought processes during the research process.

- Create a matrix of the studies for easy analysis, and synthesis, across all of the studies.


- Last Updated: Jan 4, 2024 10:52 AM

- URL: https://guides.library.yale.edu/YSNDoctoral

Purdue Online Writing Lab (Purdue OWL®), College of Liberal Arts

Writing a Literature Review


This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

A literature review is a document or section of a document that collects key sources on a topic and discusses those sources in conversation with each other (also called synthesis). The lit review is an important genre in many disciplines, not just literature (i.e., the study of works of literature such as novels and plays). When we say “literature review” or refer to “the literature,” we are talking about the research (scholarship) in a given field. You will often see the terms “the research,” “the scholarship,” and “the literature” used mostly interchangeably.

Where, when, and why would I write a lit review?

There are a number of different situations where you might write a literature review, each with slightly different expectations; different disciplines, too, have field-specific expectations for what a literature review is and does. For instance, in the humanities, authors might include more overt argumentation and interpretation of source material in their literature reviews, whereas in the sciences, authors are more likely to report study designs and results in their literature reviews; these differences reflect these disciplines’ purposes and conventions in scholarship. You should always look at examples from your own discipline and talk to professors or mentors in your field to be sure you understand your discipline’s conventions, for literature reviews as well as for any other genre.

A literature review can be part of a research paper or scholarly article, usually falling after the introduction and before the research methods section. In these cases, the lit review just needs to cover scholarship that is important to the issue you are writing about; sometimes it will also cover key sources that informed your research methodology.

Lit reviews can also be standalone pieces, either as assignments in a class or as publications. In a class, a lit review may be assigned to help students familiarize themselves with a topic and with scholarship in their field, get an idea of the other researchers working on the topic they’re interested in, find gaps in existing research in order to propose new projects, and/or develop a theoretical framework and methodology for later research. As a publication, a lit review usually is meant to help make other scholars’ lives easier by collecting and summarizing, synthesizing, and analyzing existing research on a topic. This can be especially helpful for students or scholars getting into a new research area, or for directing an entire community of scholars toward questions that have not yet been answered.

What are the parts of a lit review?

Most lit reviews use a basic introduction-body-conclusion structure; if your lit review is part of a larger paper, the introduction and conclusion pieces may be just a few sentences while you focus most of your attention on the body. If your lit review is a standalone piece, the introduction and conclusion take up more space and give you a place to discuss your goals, research methods, and conclusions separately from where you discuss the literature itself.

Introduction:

- An introductory paragraph that explains what your working topic and thesis are

- A forecast of key topics or texts that will appear in the review

- Potentially, a description of how you found sources and how you analyzed them for inclusion and discussion in the review (more often found in published, standalone literature reviews than in lit review sections in an article or research paper)

Body:

- Summarize and synthesize: Give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: Don’t just paraphrase other researchers – add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: Mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: Use transition words and topic sentences to draw connections, comparisons, and contrasts.

Conclusion:

- Summarize the key findings you have taken from the literature and emphasize their significance

- Connect it back to your primary research question

How should I organize my lit review?

Lit reviews can take many different organizational patterns depending on what you are trying to accomplish with the review. Here are some examples:

- Chronological: The simplest approach is to trace the development of the topic over time, which helps familiarize the audience with the topic (for instance, if you are introducing something that is not commonly known in your field). If you choose this strategy, be careful to avoid simply listing and summarizing sources in order. Try to analyze the patterns, turning points, and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred (as mentioned previously, this may not be appropriate in your discipline; check with a teacher or mentor if you’re unsure).

- Thematic: If you have found some recurring central themes that you will continue working with throughout your piece, you can organize your literature review into subsections that address different aspects of the topic. For example, if you are reviewing literature about women and religion, key themes can include the role of women in churches and the religious attitude towards women.

- Methodological: You can also group sources by research approach, for example:

  - Qualitative versus quantitative research

  - Empirical versus theoretical scholarship

  - Divide the research by sociological, historical, or cultural sources

- Theoretical: In many humanities articles, the literature review is the foundation for the theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts. You can argue for the relevance of a specific theoretical approach or combine various theoretical concepts to create a framework for your research.

What are some strategies or tips I can use while writing my lit review?

Any lit review is only as good as the research it discusses; make sure your sources are well-chosen and your research is thorough. Don’t be afraid to do more research if you discover a new thread as you’re writing. More info on the research process is available in our "Conducting Research" resources.

As you’re doing your research, create an annotated bibliography (see our page on this type of document). Much of the information used in an annotated bibliography can also be used in a literature review, so you’ll not only be partially drafting your lit review as you research, but also developing your sense of the larger conversation going on among scholars, professionals, and any other stakeholders in your topic.

Usually you will need to synthesize research rather than just summarizing it. This means drawing connections between sources to create a picture of the scholarly conversation on a topic over time. Many student writers struggle to synthesize because they feel they don’t have anything to add to the scholars they are citing; here are some strategies to help you:

- It often helps to remember that the point of these kinds of syntheses is to show your readers how you understand your research, to help them read the rest of your paper.

- Writing teachers often say synthesis is like hosting a dinner party: imagine all your sources are together in a room, discussing your topic. What are they saying to each other?

- Look at the in-text citations in each paragraph. Are you citing just one source in each paragraph? This usually indicates summary only. When you cite multiple sources in a paragraph, you are more likely to be synthesizing them (not always, but often).


The most interesting literature reviews are often written as arguments (again, as mentioned at the beginning of the page, this is discipline-specific and doesn’t work for all situations). Often, the literature review is where you can establish your research as filling a particular gap or as relevant in a particular way. You have some chance to do this in your introduction in an article, but the literature review section gives a more extended opportunity to establish the conversation in the way you would like your readers to see it. You can choose the intellectual lineage you would like to be part of and whose definitions matter most to your thinking (mostly humanities-specific, but this goes for sciences as well). In addressing these points, you argue for your place in the conversation, which tends to make the lit review more compelling than a simple reporting of other sources.

USC Libraries

Organizing Your Social Sciences Research Paper

5. The Literature Review


A literature review surveys prior research published in books, scholarly articles, and any other sources relevant to a particular issue, area of research, or theory, and by so doing, provides a description, summary, and critical evaluation of these works in relation to the research problem being investigated. Literature reviews are designed to provide an overview of sources you have used in researching a particular topic and to demonstrate to your readers how your research fits within existing scholarship about the topic.

Fink, Arlene. Conducting Research Literature Reviews: From the Internet to Paper. Fourth edition. Thousand Oaks, CA: SAGE, 2014.

Importance of a Good Literature Review

A literature review may consist of simply a summary of key sources, but in the social sciences, a literature review usually has an organizational pattern and combines both summary and synthesis, often within specific conceptual categories. A summary is a recap of the important information of the source, but a synthesis is a re-organization, or a reshuffling, of that information in a way that informs how you are planning to investigate a research problem. The analytical features of a literature review might:

- Give a new interpretation of old material or combine new with old interpretations,

- Trace the intellectual progression of the field, including major debates,

- Depending on the situation, evaluate the sources and advise the reader on the most pertinent or relevant research, or

- Usually in the conclusion of a literature review, identify where gaps exist in how a problem has been researched to date.

Given this, the purpose of a literature review is to:

- Place each work in the context of its contribution to understanding the research problem being studied.

- Describe the relationship of each work to the others under consideration.

- Identify new ways to interpret prior research.

- Reveal any gaps that exist in the literature.

- Resolve conflicts amongst seemingly contradictory previous studies.

- Identify areas of prior scholarship to prevent duplication of effort.

- Point the way in fulfilling a need for additional research.

- Locate your own research within the context of existing literature [very important].

Fink, Arlene. Conducting Research Literature Reviews: From the Internet to Paper. 2nd ed. Thousand Oaks, CA: Sage, 2005; Hart, Chris. Doing a Literature Review: Releasing the Social Science Research Imagination. Thousand Oaks, CA: Sage Publications, 1998; Jesson, Jill. Doing Your Literature Review: Traditional and Systematic Techniques. Los Angeles, CA: SAGE, 2011; Knopf, Jeffrey W. "Doing a Literature Review." PS: Political Science and Politics 39 (January 2006): 127-132; Ridley, Diana. The Literature Review: A Step-by-Step Guide for Students. 2nd ed. Los Angeles, CA: SAGE, 2012.

Types of Literature Reviews

It is important to think of knowledge in a given field as consisting of three layers. First, there are the primary studies that researchers conduct and publish. Second are the reviews of those studies that summarize and offer new interpretations built from and often extending beyond the primary studies. Third, there are the perceptions, conclusions, opinions, and interpretations that are shared informally among scholars and that become part of the body of epistemological traditions within the field.

In composing a literature review, it is important to note that it is often this third layer of knowledge that is cited as "true" even though it often has only a loose relationship to the primary studies and secondary literature reviews. Given this, while literature reviews are designed to provide an overview and synthesis of pertinent sources you have explored, there are a number of approaches you could adopt depending upon the type of analysis underpinning your study.

Argumentative Review: This form examines literature selectively in order to support or refute an argument, deeply embedded assumption, or philosophical problem already established in the literature. The purpose is to develop a body of literature that establishes a contrarian viewpoint. Given the value-laden nature of some social science research [e.g., educational reform; immigration control], argumentative approaches to analyzing the literature can be a legitimate and important form of discourse. However, note that they can also introduce problems of bias when they are used to make summary claims of the sort found in systematic reviews [see below].

Integrative Review: Considered a form of research that reviews, critiques, and synthesizes representative literature on a topic in an integrated way such that new frameworks and perspectives on the topic are generated. The body of literature includes all studies that address related or identical hypotheses or research problems. A well-done integrative review meets the same standards as primary research in regard to clarity, rigor, and replication. This is the most common form of review in the social sciences.

Historical Review: Few things rest in isolation from historical precedent. Historical literature reviews focus on examining research throughout a period of time, often starting with the first time an issue, concept, theory, or phenomenon emerged in the literature, then tracing its evolution within the scholarship of a discipline. The purpose is to place research in a historical context to show familiarity with state-of-the-art developments and to identify the likely directions for future research.

Methodological Review: A review does not always focus on what someone said [findings], but on how they came to say it [method of analysis]. Reviewing methods of analysis provides a framework of understanding at different levels [i.e., those of theory, substantive fields, research approaches, and data collection and analysis techniques] and shows how researchers draw upon a wide variety of knowledge, ranging from the conceptual level to practical documents for use in fieldwork, in areas such as ontological and epistemological consideration, quantitative and qualitative integration, sampling, interviewing, data collection, and data analysis. This approach helps highlight ethical issues that you should be aware of and consider as you conduct your own study.

Systematic Review: This form consists of an overview of existing evidence pertinent to a clearly formulated research question, which uses pre-specified and standardized methods to identify and critically appraise relevant research, and to collect, report, and analyze data from the studies that are included in the review. The goal is to deliberately document, critically evaluate, and summarize scientifically all of the research about a clearly defined research problem. Typically it focuses on a very specific empirical question, often posed in a cause-and-effect form, such as "To what extent does A contribute to B?" This type of literature review is primarily applied to examining prior research studies in clinical medicine and allied health fields, but it is increasingly being used in the social sciences.

Theoretical Review: The purpose of this form is to examine the corpus of theory that has accumulated in regard to an issue, concept, theory, or phenomenon. The theoretical literature review helps to establish what theories already exist and the relationships between them, to determine to what degree the existing theories have been investigated, and to develop new hypotheses to be tested. Often this form is used to help establish a lack of appropriate theories or reveal that current theories are inadequate for explaining new or emerging research problems. The unit of analysis can focus on a theoretical concept or a whole theory or framework.

NOTE: Most often the literature review will incorporate some combination of types. For example, a review that examines literature supporting or refuting an argument, assumption, or philosophical problem related to the research problem will also need to include writing supported by sources that establish the history of these arguments in the literature.

Baumeister, Roy F. and Mark R. Leary. "Writing Narrative Literature Reviews." Review of General Psychology 1 (September 1997): 311-320; Fink, Arlene. Conducting Research Literature Reviews: From the Internet to Paper. 2nd ed. Thousand Oaks, CA: Sage, 2005; Hart, Chris. Doing a Literature Review: Releasing the Social Science Research Imagination. Thousand Oaks, CA: Sage Publications, 1998; Kennedy, Mary M. "Defining a Literature." Educational Researcher 36 (April 2007): 139-147; Petticrew, Mark and Helen Roberts. Systematic Reviews in the Social Sciences: A Practical Guide. Malden, MA: Blackwell Publishers, 2006; Torraco, Richard. "Writing Integrative Literature Reviews: Guidelines and Examples." Human Resource Development Review 4 (September 2005): 356-367; Rocco, Tonette S. and Maria S. Plakhotnik. "Literature Reviews, Conceptual Frameworks, and Theoretical Frameworks: Terms, Functions, and Distinctions." Human Resource Development Review 8 (March 2008): 120-130; Sutton, Anthea. Systematic Approaches to a Successful Literature Review. Los Angeles, CA: Sage Publications, 2016.

Structure and Writing Style

I. Thinking About Your Literature Review

The structure of a literature review should include the following in support of understanding the research problem:

- An overview of the subject, issue, or theory under consideration, along with the objectives of the literature review,

- Division of works under review into themes or categories [e.g. works that support a particular position, those against, and those offering alternative approaches entirely],

- An explanation of how each work is similar to and how it varies from the others,

- Conclusions as to which pieces are best argued, are most convincing, and make the greatest contribution to the understanding and development of their area of research.

The critical evaluation of each work should consider:

- Provenance -- what are the author's credentials? Are the author's arguments supported by evidence [e.g. primary historical material, case studies, narratives, statistics, recent scientific findings]?

- Methodology -- were the techniques used to identify, gather, and analyze the data appropriate to addressing the research problem? Was the sample size appropriate? Were the results effectively interpreted and reported?

- Objectivity -- is the author's perspective even-handed or prejudicial? Is contrary data considered or is certain pertinent information ignored to prove the author's point?

- Persuasiveness -- which of the author's theses are most convincing or least convincing?

- Validity -- are the author's arguments and conclusions convincing? Does the work ultimately contribute in any significant way to an understanding of the subject?

II. Development of the Literature Review

Four Basic Stages of Writing

1. Problem formulation -- which topic or field is being examined and what are its component issues?
2. Literature search -- finding materials relevant to the subject being explored.
3. Data evaluation -- determining which literature makes a significant contribution to the understanding of the topic.
4. Analysis and interpretation -- discussing the findings and conclusions of pertinent literature.

Consider the following issues before writing the literature review:

Clarify

If your assignment is not specific about what form your literature review should take, seek clarification from your professor by asking these questions:

1. Roughly how many sources would be appropriate to include?
2. What types of sources should I review (books, journal articles, websites; scholarly versus popular sources)?
3. Should I summarize, synthesize, or critique sources by discussing a common theme or issue?
4. Should I evaluate the sources in any way beyond evaluating how they relate to understanding the research problem?
5. Should I provide subheadings and other background information, such as definitions and/or a history?

Find Models

Use the exercise of reviewing the literature to examine how authors in your discipline or area of interest have composed their literature review sections. Read them to get a sense of the types of themes you might want to look for in your own research or to identify ways to organize your final review. The bibliographies or reference sections of sources you've already read, such as required readings in the course syllabus, are also excellent entry points into your own research.

Narrow the Topic

The narrower your topic, the easier it will be to limit the number of sources you need to read in order to obtain a good survey of relevant resources. Your professor will probably not expect you to read everything that's available about the topic, but you'll make the act of reviewing easier if you first limit the scope of the research problem. A good strategy is to begin by searching the USC Libraries Catalog for recent books about the topic and reviewing the table of contents for chapters that focus on specific issues. You can also review the indexes of books to find references to specific issues that can serve as the focus of your research. For example, a book surveying the history of the Israeli-Palestinian conflict may include a chapter on the role Egypt has played in mediating the conflict; alternatively, you can look in the index for the pages where Egypt is mentioned in the text.

Consider Whether Your Sources Are Current

Some disciplines require that you use information that is as current as possible. This is particularly true in medicine and the sciences, where research becomes obsolete very quickly as new discoveries are made. However, when writing a review in the social sciences, a survey of the history of the literature may be required. In other words, a complete understanding of the research problem requires you to deliberately examine how knowledge and perspectives have changed over time. Sort through other current bibliographies or literature reviews in the field to get a sense of what your discipline expects. You can also use this method to explore what scholars consider to be a "hot topic" and what they do not.

III. Ways to Organize Your Literature Review

Chronology of Events

If your review follows the chronological method, you could write about the materials according to when they were published. This approach should only be followed if a clear path of research building on previous research can be identified and these trends follow a clear chronological order of development. For example, a literature review that focuses on continuing research about the emergence of German economic power after the fall of the Soviet Union could be organized this way.

By Publication

Order your sources by publication chronology only if the order demonstrates a more important trend. For instance, you could order a review of literature on environmental studies of brownfields by publication date if the progression revealed a change in the soil collection practices of the researchers who wrote and/or conducted the studies.

Thematic [“conceptual categories”]

A thematic literature review is the most common approach to summarizing prior research in the social and behavioral sciences. Thematic reviews are organized around a topic or issue, rather than the progression of time, although the progression of time may still be incorporated into a thematic review. For example, a review of the Internet’s impact on American presidential politics could focus on the development of online political satire. While the study focuses on one topic, the Internet’s impact on American presidential politics, it could still be organized chronologically, reflecting technological developments in media. The difference in this example between a "chronological" and a "thematic" approach is what is emphasized the most: themes related to the role of the Internet in presidential politics. Note that more authentic thematic reviews tend to break away from chronological order. A review organized in this manner would shift between time periods within each section according to the point being made.

Methodological

A methodological approach focuses on the methods utilized by the researcher. For the Internet in American presidential politics project, one methodological approach would be to look at cultural differences between the portrayal of American presidents on American, British, and French websites. Or the review might focus on the fundraising impact of the Internet on a particular political party. A methodological scope will influence either the types of documents in the review or the way in which these documents are discussed.

Other Sections of Your Literature Review

Once you've decided on the organizational method for your literature review, the sections you need to include in the paper should be easy to figure out because they arise from your organizational strategy. In other words, a chronological review would have subsections for each vital time period; a thematic review would have subtopics based upon factors that relate to the theme or issue. However, sometimes you may need to add sections that are necessary for your study but do not fit in the organizational strategy of the body. What other sections you include in the body is up to you. However, only include what is necessary for the reader to locate your study within the larger scholarship about the research problem.

Here are examples of other sections, usually in the form of a single paragraph, you may need to include depending on the type of review you write:

- Current Situation: Information necessary to understand the current topic or focus of the literature review.

- Sources Used: Describes the methods and resources [e.g., databases] you used to identify the literature you reviewed.

- History: The chronological progression of the field, the research literature, or an idea that is necessary to understand the literature review, if the body of the literature review is not already a chronology.

- Selection Methods: Criteria you used to select (and perhaps exclude) sources in your literature review. For instance, you might explain that your review includes only peer-reviewed [i.e., scholarly] sources.

- Standards: Description of the way in which you present your information.

- Questions for Further Research: What questions about the field has the review sparked? How will you further your research as a result of the review?

IV. Writing Your Literature Review

Once you've settled on how to organize your literature review, you're ready to write each section. When writing your review, keep in mind these issues.

Use Evidence

In this respect, a literature review section is just like any other academic research paper: your interpretation of the available sources must be backed up with evidence [citations] that demonstrates that what you are saying is valid.

Be Selective

Select only the most important points in each source to highlight in the review. The type of information you choose to mention should relate directly to the research problem, whether it is thematic, methodological, or chronological. Related items that provide additional information, but that are not key to understanding the research problem, can be included in a list of further readings.

Use Quotes Sparingly

Some short quotes are appropriate if you want to emphasize a point, or if what an author stated cannot be easily paraphrased. Sometimes you may need to quote terminology that was coined by the author, is not common knowledge, or is taken directly from the study. Do not use extensive quotes as a substitute for using your own words in reviewing the literature.

Summarize and Synthesize

Remember to summarize and synthesize your sources within each thematic paragraph as well as throughout the review. Recapitulate important features of a research study, but then synthesize it by rephrasing the study's significance and relating it to your own work and the work of others.

Keep Your Own Voice

While the literature review presents others' ideas, your voice [the writer's] should remain front and center. For example, weave references to other sources into what you are writing, but maintain your own voice by starting and ending each paragraph with your own ideas and wording.

Use Caution When Paraphrasing

When paraphrasing a source, be sure to represent the author's information or opinions accurately and in your own words. Even when paraphrasing an author’s work, you still must provide a citation to that work.

V. Common Mistakes to Avoid

These are the most common mistakes made in reviewing social science research literature.

- Sources in your literature review do not clearly relate to the research problem;

- You do not take sufficient time to define and identify the most relevant sources to use in the literature review related to the research problem;

- You rely exclusively on secondary analytical sources rather than including relevant primary research studies or data;

- You uncritically accept another researcher's findings and interpretations as valid, rather than critically examining all aspects of the research design and analysis;

- You do not describe the search procedures that were used in identifying the literature to review;

- You report isolated statistical results rather than synthesizing them with chi-squared or meta-analytic methods; and,

- You include only research that validates your assumptions and do not consider contrary findings and alternative interpretations found in the literature.

Cook, Kathleen E. and Elise Murowchick. “Do Literature Review Skills Transfer from One Course to Another?” Psychology Learning and Teaching 13 (March 2014): 3-11; Fink, Arlene. Conducting Research Literature Reviews: From the Internet to Paper. 2nd ed. Thousand Oaks, CA: Sage, 2005; Hart, Chris. Doing a Literature Review: Releasing the Social Science Research Imagination. Thousand Oaks, CA: Sage Publications, 1998; Jesson, Jill. Doing Your Literature Review: Traditional and Systematic Techniques. London: SAGE, 2011; Literature Review Handout. Online Writing Center. Liberty University; Literature Reviews. The Writing Center. University of North Carolina; Onwuegbuzie, Anthony J. and Rebecca Frels. Seven Steps to a Comprehensive Literature Review: A Multimodal and Cultural Approach. Los Angeles, CA: SAGE, 2016; Ridley, Diana. The Literature Review: A Step-by-Step Guide for Students. 2nd ed. Los Angeles, CA: SAGE, 2012; Randolph, Justus J. “A Guide to Writing the Dissertation Literature Review.” Practical Assessment, Research, and Evaluation. vol. 14, June 2009; Sutton, Anthea. Systematic Approaches to a Successful Literature Review. Los Angeles, CA: Sage Publications, 2016; Taylor, Dena. The Literature Review: A Few Tips On Conducting It. University College Writing Centre. University of Toronto; Writing a Literature Review. Academic Skills Centre. University of Canberra.

Writing Tip

Break Out of Your Disciplinary Box!

Thinking interdisciplinarily about a research problem can be a rewarding exercise in applying new ideas, theories, or concepts to an old problem. For example, what might cultural anthropologists say about the continuing conflict in the Middle East? In what ways might geographers view the need for better distribution of social service agencies in large cities differently from the way social workers might study the issue? You don’t want to substitute studies conducted in other fields for a thorough review of the core research literature in your discipline. However, particularly in the social sciences, thinking about research problems from multiple vectors is a key strategy for finding new solutions to a problem or gaining a new perspective. Consult with a librarian about identifying research databases in other disciplines; almost every field of study has at least one comprehensive database devoted to indexing its research literature.

Frodeman, Robert. The Oxford Handbook of Interdisciplinarity. New York: Oxford University Press, 2010.

Another Writing Tip

Don't Just Review for Content!

While conducting a review of the literature, maximize the time you devote to writing this part of your paper by thinking broadly about what you should be looking for and evaluating. Review not just what scholars are saying, but how they are saying it. Some questions to ask:

- How are they organizing their ideas?

- What methods have they used to study the problem?

- What theories have been used to explain, predict, or understand their research problem?

- What sources have they cited to support their conclusions?

- How have they used non-textual elements [e.g., charts, graphs, figures, etc.] to illustrate key points?

When you begin to write your literature review section, you'll be glad you dug deeper into how the research was designed and constructed because it establishes a means for developing more substantial analysis and interpretation of the research problem.

Hart, Chris. Doing a Literature Review: Releasing the Social Science Research Imagination. Thousand Oaks, CA: Sage Publications, 1998.

Yet Another Writing Tip

When Do I Know I Can Stop Looking and Move On?

Here are several strategies you can utilize to assess whether you've thoroughly reviewed the literature:

- Look for repeating patterns in the research findings. If the same thing is being said, just by different people, then this likely demonstrates that the research problem has hit a conceptual dead end. At this point consider: Does your study extend current research? Does it forge a new path? Or does it merely add more of the same thing being said?

- Look at the sources the authors cite in their work. If you begin to see the same researchers cited again and again, then this is often an indication that no new ideas have been generated to address the research problem.

- Search Google Scholar to identify who has subsequently cited the leading scholars already identified in your literature review. This is called citation tracking, and a number of sources can help you identify who has cited whom, particularly scholars from outside of your discipline. Here again, if the same authors are being cited again and again, this may indicate that no new literature has been written on the topic.

Onwuegbuzie, Anthony J. and Rebecca Frels. Seven Steps to a Comprehensive Literature Review: A Multimodal and Cultural Approach. Los Angeles, CA: Sage, 2016; Sutton, Anthea. Systematic Approaches to a Successful Literature Review. Los Angeles, CA: Sage Publications, 2016.
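The "same researchers cited again and again" signal described above can be tracked a little more systematically by recording, for each source you read, how many of its cited authors you have not encountered before. The sketch below is a hypothetical heuristic, not a method from the cited guides: the function names, the `window`, and the `threshold` are illustrative assumptions, and a run of low counts suggests, but does not prove, that your search is nearing saturation.

```python
def new_author_counts(reference_lists):
    """For each source, in reading order, count cited authors not seen in earlier sources."""
    seen = set()
    counts = []
    for cited in reference_lists:
        # Normalize names so "Hart" and "hart " are treated as the same author.
        fresh = {name.strip().lower() for name in cited} - seen
        counts.append(len(fresh))
        seen |= fresh
    return counts


def looks_saturated(counts, window=3, threshold=1):
    """Hypothetical rule: each of the last `window` sources added at most `threshold` new authors."""
    if len(counts) < window:
        return False
    return all(c <= threshold for c in counts[-window:])


# Illustrative reference lists, one per source read (not real data).
sources = [
    ["Hart", "Fink", "Torraco"],
    ["Hart", "Kennedy", "Fink"],
    ["Hart", "Torraco"],
    ["Fink", "Kennedy"],
    ["Hart", "Kennedy", "Torraco"],
]
counts = new_author_counts(sources)
print(counts)                   # [3, 1, 0, 0, 0]
print(looks_saturated(counts))  # True
```

In practice you would feed in the reference lists you actually extract while reading; the window and threshold are judgment calls that depend on how large and fast-moving the field is.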


- Last Updated: May 25, 2024 4:09 PM

- URL: https://libguides.usc.edu/writingguide

Research Methods: Literature Reviews


A literature review involves researching, reading, analyzing, evaluating, and summarizing scholarly literature (typically journals and articles) about a specific topic. The results of a literature review may be an entire report or article OR may be part of an article, thesis, dissertation, or grant proposal. A literature review helps the author learn about the history and nature of their topic and identify research gaps and problems.

Steps & Elements

Problem formulation

- Determine your topic and its components by asking a question

- Research: locate literature related to your topic to identify the gap(s) that can be addressed

- Read: read the articles or other sources of information

- Analyze: assess the findings for relevancy

- Evaluate: determine how the articles are relevant to your research and what their key findings are

- Synthesize: write about the key findings and how they are relevant to your research

Elements of a Literature Review

- Summarize subject, issue or theory under consideration, along with objectives of the review

- Divide works under review into categories (e.g. those in support of a particular position, those against, those offering alternative theories entirely)

- Explain how each work is similar to and how it varies from the others

- Conclude which pieces are best argued, are most convincing, and make the greatest contribution to the understanding and development of an area of research

Writing a Literature Review Resources

- How to Write a Literature Review From the Wesleyan University Library

- Write a Literature Review From the University of California Santa Cruz Library. A brief overview of a literature review; includes a list of stages for writing a lit review.

- Literature Reviews From the University of North Carolina Writing Center. Detailed information about writing a literature review.

- Undertaking a literature review: a step-by-step approach Cronin, P., Ryan, F., & Coughlan, M. (2008). Undertaking a literature review: A step-by-step approach. British Journal of Nursing, 17(1), 38-43.

Literature Review Tutorial


- Last Updated: Feb 29, 2024 12:00 PM

- URL: https://guides.auraria.edu/researchmethods

1100 Lawrence Street, Denver, CO 80204, 303-315-7700

- University of Texas Libraries

Literature Reviews


What is a Literature Review?

A literature or narrative review is a comprehensive review and analysis of the published literature on a specific topic or research question. The literature reviewed can include books, articles, academic articles, conference proceedings, association papers, and dissertations. The review covers the most pertinent studies and points to important past and current research and practices. It provides background and context, and shows how your research will contribute to the field.

A literature review should:

- Provide a comprehensive and updated review of the literature;

- Explain why this review has taken place;

- Articulate a position or hypothesis;

- Acknowledge and account for conflicting and corroborating points of view

From Sage Research Methods

Purpose of a Literature Review

A literature review can be written as an introduction to a study to:

- Demonstrate how a study fills a gap in research

- Compare a study with other research that's been done

Or it can be a separate work (a research article on its own) which:

- Organizes or describes a topic

- Describes variables within a particular issue/problem

Limitations of a Literature Review

Some of the limitations of a literature review are:

- It's a snapshot in time. Unlike other reviews, this one has a beginning, a middle, and an end. There may be future developments that could make your work less relevant.

- It may be too focused. Some niche studies may miss the bigger picture.

- It can be difficult to be comprehensive. There is no way to make sure all the literature on a topic was considered.

- It is easy to be biased if you stick to top tier journals. There may be other places where people are publishing exemplary research. Look to open access publications and conferences to reflect a more inclusive collection. Also, make sure to include opposing views (and not just supporting evidence).

Source: Grant, Maria J., and Andrew Booth. “A Typology of Reviews: An Analysis of 14 Review Types and Associated Methodologies.” Health Information & Libraries Journal, vol. 26, no. 2, June 2009, pp. 91–108. Wiley Online Library, doi:10.1111/j.1471-1842.2009.00848.x.

Meryl Brodsky: Communication and Information Studies

Hannah Chapman Tripp: Biology, Neuroscience

Carolyn Cunningham: Human Development & Family Sciences, Psychology, Sociology

Larayne Dallas: Engineering

Janelle Hedstrom: Special Education, Curriculum & Instruction, Ed Leadership & Policy

Susan Macicak: Linguistics

Imelda Vetter: Dell Medical School

For help in other subject areas, please see the guide to library specialists by subject.

Periodically, UT Libraries runs a workshop covering the basics and library support for literature reviews. While we try to offer these once per academic year, we find providing the recording to be helpful to community members who have missed the session. Following is the most recent recording of the workshop, Conducting a Literature Review. To view the recording, a UT login is required.

- October 26, 2022 recording

- Last Updated: Oct 26, 2022 2:49 PM

- URL: https://guides.lib.utexas.edu/literaturereviews



JMIR Form Res. v.3(4); Oct-Dec 2019

A Comprehensive Framework to Evaluate Websites: Literature Review and Development of GoodWeb

Rosalie Allison (1), Catherine Hayes, Cliodna A M McNulty, Vicki Young

(1) Public Health England, Gloucester, United Kingdom

Associated Data

- Summary of included studies, including information on the participant.

- Interventions: methodologies and tools to evaluate websites.

- Methods used or described in each study.

- Summary of the most used website attributes evaluated.

Background

Attention is turning toward increasing the quality of websites and quality evaluation to attract new users and retain existing users.

Objective

This scoping study aimed to review and define existing worldwide methodologies and techniques to evaluate websites and provide a framework of appropriate website attributes that could be applied to any future website evaluations.

Methods

We systematically searched electronic databases and gray literature for studies of website evaluation. The results were exported to EndNote software, duplicates were removed, and eligible studies were identified. The results have been presented in narrative form.

Results

A total of 69 studies met the inclusion criteria. The extracted data included type of website, aim or purpose of the study, study populations (users and experts), sample size, setting (controlled environment and remotely assessed), website attributes evaluated, process of methodology, and process of analysis. Methods of evaluation varied and included questionnaires, observed website browsing, interviews or focus groups, and Web usage analysis. Evaluations using both users and experts and controlled and remote settings are represented. Website attributes that were examined included usability or ease of use, content, design criteria, functionality, appearance, interactivity, satisfaction, and loyalty. Website evaluation methods should be tailored to the needs of specific websites and individual aims of evaluations. GoodWeb, a website evaluation guide, has been presented with a case scenario.

Conclusions

This scoping study supports the open debate on defining website quality, for which there are numerous approaches and evaluation models. However, because it provides a framework of the existing literature on website evaluation, this study presents a guide to the options for evaluating websites, including which attributes to analyze and which methods are appropriate.

Introduction

Since its conception in the early 1990s, there has been an explosion in the use of the internet, with websites taking a central role in diverse fields such as finance, education, medicine, industry, and business. Organizations are increasingly attempting to exploit the benefits of the World Wide Web and its features as an interface for internet-enabled businesses, information provision, and promotional activities [ 1 , 2 ]. As the environment becomes more competitive and websites become more sophisticated, attention is turning toward increasing the quality of the website itself and quality evaluation to attract new and retain existing users [ 3 , 4 ]. What determines website quality has not been conclusively established, and there are many different definitions and meanings of the term quality, mainly in relation to the website’s purpose [ 5 ]. Traditionally, website evaluations have focused on usability, defined as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use [ 6 ].” The design of websites and users’ needs go beyond pure usability, as increased engagement and pleasure experienced during interactions with websites can be more important predictors of website preference than usability [ 7 - 10 ]. Therefore, in the last decade, website evaluations have shifted their focus to users’ experience, employing various assessment techniques [ 11 ], with no universally accepted method or procedure for website evaluation.

This scoping study aimed to review and define existing worldwide methodologies and techniques to evaluate websites and provide a simple framework of appropriate website attributes, which could be applied to future website evaluations.

A scoping study is similar to a systematic review in that it collects and reviews content in a field of interest. However, scoping studies cover a broader question and do not rigorously evaluate the quality of the studies included [ 12 ]. Scoping studies are commonly used in public service fields such as health and education, as they are quicker to perform and less costly in terms of staff costs [ 13 ]. Scoping studies can be precursors to a systematic review or stand-alone studies that examine the range of research around a particular topic.

The following research question is based on the need to gain knowledge and insight from worldwide website evaluation to inform the future study design of website evaluations: what website evaluation methodologies can be robustly used to assess users’ experience?

To show how the framework of attributes and methods can be applied to evaluating a website, e-Bug, an international educational health website, will be used as a case scenario [ 14 ].

Methods

This scoping study followed a 5-stage framework and methodology, as outlined by Arksey and O’Malley [ 12 ], involving the following: (1) identifying the research question, as above; (2) identifying relevant studies; (3) study selection; (4) charting the data; and (5) collating, summarizing, and reporting the results.

Identifying Relevant Studies

Following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines [ 15 ], studies for consideration in the review were located by searching the following electronic databases: Excerpta Medica dataBASE, PsycINFO, Cochrane, Cumulative Index to Nursing and Allied Health Literature, Scopus, ACM Digital Library, IEEE Xplore, and SPORTDiscus. The keywords used referred to the following:

- Population: websites

- Intervention: evaluation methodologies

- Outcome: user’s experience.

Table 1 shows the specific search criteria for each database. These keywords were also used to search gray literature for unpublished or working documents to minimize publication bias.

Table 1. Full search strategy used to search each electronic database.

a EMBASE: Excerpta Medica database.

b CINAHL: Cumulative Index to Nursing and Allied Health Literature.

c ACM: Association for Computing Machinery.

d IEEE: Institute of Electrical and Electronics Engineers.
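The population, intervention, and outcome keyword groups above are usually combined into a single search by joining synonyms within a group with OR and joining the groups with AND. The sketch below shows that construction only; the synonym lists are hypothetical placeholders, not the authors' actual terms (those are given in Table 1), and each database has its own syntax for phrase searching, truncation, and field tags, so the output string would need adapting per database.

```python
def quote(term):
    # Wrap multi-word terms in double quotes so they are searched as exact phrases.
    return f'"{term}"' if " " in term else term


def build_query(concepts):
    """OR together the synonyms inside each concept group, then AND the groups."""
    groups = []
    for synonyms in concepts.values():
        groups.append("(" + " OR ".join(quote(t) for t in synonyms) + ")")
    return " AND ".join(groups)


# Hypothetical synonym lists for the three concept groups.
concepts = {
    "population": ["website", "web site"],
    "intervention": ["evaluation", "assessment"],
    "outcome": ["user experience", "usability"],
}
print(build_query(concepts))
# (website OR "web site") AND (evaluation OR assessment) AND ("user experience" OR usability)
```

Keeping each concept group as its own list makes it easy to add synonyms later and rerun the same structure across databases.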

Study Selection

Once all sources had been systematically searched, the list of citations was exported to EndNote software to identify eligible studies. Two researchers (RA and CH) scanned the title, and the abstract if necessary, and removed studies that did not fit the inclusion criteria. As abstracts are not always representative of the full study that follows and may not capture its full scope [ 16 ], if the title and abstract did not provide sufficient information, the full manuscript was examined to ascertain whether the study met all the inclusion criteria, which included (1) studies focused on websites, (2) studies of evaluative methods (eg, use of questionnaire and task completion), (3) studies that reported outcomes that affect the user’s experience (eg, quality, satisfaction, efficiency, effectiveness without necessarily focusing on methodology), (4) studies carried out between 2006 and 2016, (5) studies published in English, and (6) type of study (any study design that is appropriate).

Exclusion criteria included (1) studies that focus on evaluations using solely experts and are not transferrable to user evaluations; (2) studies that are in the form of electronic book or are not freely available on the Web or through OpenAthens, the University of Bath library, or the University of the West of England library; (3) studies that evaluate banking, electronic commerce (e-commerce), or online libraries’ websites and do not have transferrable measures to a range of other websites; (4) studies that report exclusively on minority or special needs groups (eg, blind or deaf users); and (5) studies that do not meet all the inclusion criteria.
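The inclusion and exclusion rules above can be restated as a screening function applied to each candidate record, which makes the decision for every study explicit and repeatable. This is a minimal sketch, not the authors' procedure: in the study, screening was done manually by two researchers, and the `Record` type, its field names, and the reason strings are hypothetical, assuming each study has already been coded by a reviewer.

```python
from dataclasses import dataclass

# Site types excluded unless their measures transfer to other websites (criterion 3).
RESTRICTED_SITE_TYPES = {"banking", "e-commerce", "online library"}


@dataclass
class Record:
    """Hypothetical reviewer-coded summary of one candidate study."""
    year: int
    language: str
    about_websites: bool
    evaluative_method: bool            # e.g., questionnaire, task completion
    reports_user_outcomes: bool        # e.g., satisfaction, efficiency
    expert_only_not_transferable: bool
    accessible: bool                   # freely available or via institutional access
    site_type: str
    transferable_measures: bool
    special_needs_only: bool           # e.g., reports solely on blind or deaf users


def screen(r: Record) -> tuple[bool, str]:
    """Apply the inclusion criteria, then the exclusion criteria; return (include, reason)."""
    if not (r.about_websites and r.evaluative_method and r.reports_user_outcomes):
        return False, "does not meet topic criteria"
    if not 2006 <= r.year <= 2016:
        return False, "outside 2006-2016"
    if r.language != "English":
        return False, "not published in English"
    if r.expert_only_not_transferable:
        return False, "expert-only evaluation"
    if not r.accessible:
        return False, "full text not accessible"
    if r.site_type in RESTRICTED_SITE_TYPES and not r.transferable_measures:
        return False, "restricted site type without transferable measures"
    if r.special_needs_only:
        return False, "reports exclusively on a special needs group"
    return True, "meets all criteria"


example = Record(year=2012, language="English", about_websites=True,
                 evaluative_method=True, reports_user_outcomes=True,
                 expert_only_not_transferable=False, accessible=True,
                 site_type="health education", transferable_measures=False,
                 special_needs_only=False)
print(screen(example))  # (True, 'meets all criteria')
```

Returning a reason alongside the decision is a design choice that makes it straightforward to tally exclusions by reason, as a PRISMA flowchart reports.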

Charting the Data

The next stage involved charting key items of information obtained from the studies being reviewed. Charting [17] is a technique for synthesizing and interpreting qualitative data by sifting, charting, and sorting material according to key issues and themes. It is similar to the process called data extraction in a systematic review. The data extracted included general information about each study and specific information relating to, for instance, the study population or target, the type of intervention, the outcome measures employed, and the study design.

The information of interest included the following: type of website, aim or purpose of the study, study populations (users and experts), sample size, setting (laboratory, real life, and remotely assessed), website attributes evaluated, process of methodology, and process of analysis.
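As an illustration only, the charting fields listed above can be held as one structured record per study. The sketch below assumes a hypothetical `ChartedStudy` dataclass; its field names and the example values are our own labels, not the coding scheme actually used in NVivo for this review.

```python
from dataclasses import dataclass, field
from enum import Enum


class Setting(Enum):
    """Evaluation settings named in the charting form."""
    LABORATORY = "laboratory"
    REAL_LIFE = "real life"
    REMOTE = "remotely assessed"


@dataclass
class ChartedStudy:
    """One row of a hypothetical charting form (field names are illustrative)."""
    study_id: str
    website_type: str
    aim: str
    users: bool          # study population included users
    experts: bool        # study population included experts
    sample_size: int
    setting: Setting
    attributes_evaluated: list[str] = field(default_factory=list)
    methodology: str = ""
    analysis: str = ""


# Invented example record, not taken from any included study.
example = ChartedStudy(
    study_id="S01",
    website_type="university",
    aim="assess navigation of a course catalog",
    users=True,
    experts=False,
    sample_size=20,
    setting=Setting.LABORATORY,
    attributes_evaluated=["usability", "content"],
    methodology="questionnaire after task completion",
    analysis="descriptive statistics",
)
```

Using `default_factory` gives each record its own attribute list, so charting one study never alters another.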

NVivo version 10.0 software was used for this stage by 2 researchers (RA and CH) to chart the data.

Collating, Summarizing, and Reporting the Results

Although the scoping study does not seek to assess the quality of evidence, it does present an overview of all material reviewed with a narrative account of findings.

Ethics Approval and Consent to Participate

As no primary research was carried out, no ethical approval was required to undertake this scoping study. No specific reference was made to any of the participants in the individual studies, nor does this study infringe on their rights in any way.

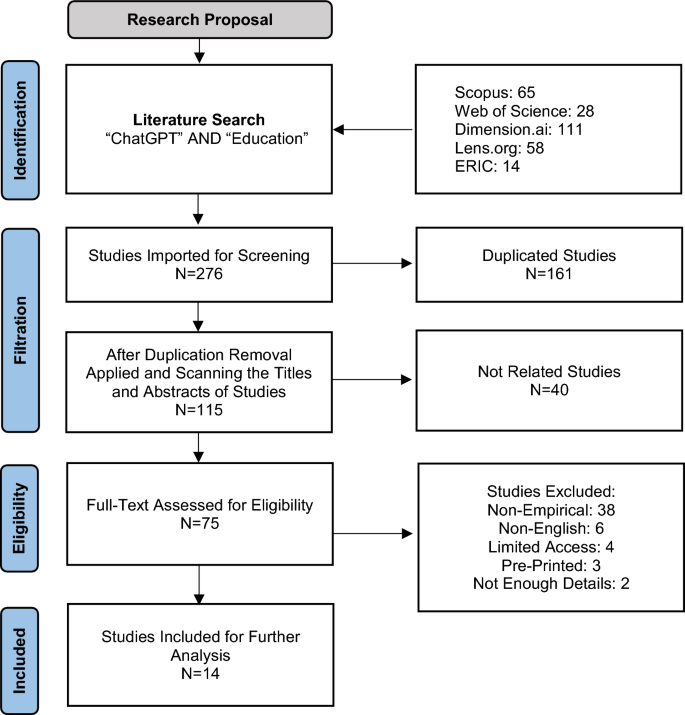

The electronic database searches produced 6657 papers, and a further 7 papers were identified through other sources. After duplicates were removed (n=1058), 5606 publications remained. After titles and abstracts were examined, 784 full-text papers were read and assessed further for eligibility. Of those, 69 articles met all the inclusion criteria and were included (Figure 1).
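The stage counts above can be checked with simple arithmetic. This minimal sketch assumes a hypothetical `prisma_flow` helper; the two exclusion counts it returns are derived by subtraction from the reported totals rather than taken from the flowchart itself.

```python
def prisma_flow(database_hits: int, other_sources: int, duplicates: int,
                full_text_assessed: int, included: int) -> dict[str, int]:
    """Derive each stage of a PRISMA-style flow from the reported totals."""
    identified = database_hits + other_sources
    screened = identified - duplicates
    return {
        "identified": identified,
        "screened": screened,
        "excluded_at_title_abstract": screened - full_text_assessed,
        "excluded_at_full_text": full_text_assessed - included,
        "included": included,
    }


flow = prisma_flow(6657, 7, 1058, 784, 69)
# The reported 5606 publications after deduplication should match the derived value.
assert flow["screened"] == 5606
print(flow)
```

With the reported figures, 6664 records are identified in total, and 4822 and 715 records drop out at the title/abstract and full-text stages, respectively.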

Figure 1. Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flowchart of search results.

Study Characteristics

Studies referred to or used users (72%), experts (39%), or both to evaluate their websites; 54% used a controlled environment, and 26% evaluated websites remotely (Multimedia Appendix 1 [2-4,11,18-85]). Remote usability testing, in its most basic form, involves working with participants who are not in the same physical location as the researcher, using techniques such as live screen sharing or questionnaires. Advantages of remote website evaluation include the ability to involve a larger number of participants, because travel time and costs are not a factor, and the freedom for participants to take part at a time that suits them, which increases the likelihood of participation and the possibility of a more diverse sample [18]. However, compared with a controlled setting, remote evaluation has the disadvantage that system performance, network traffic, and the participant's computer setup can all affect the results.

The review included a variety of website types, including government (9%), online news (6%), education (1%), university (12%), and sports organization (4%) websites. The aspects of quality considered, and their relative importance, varied according to the type of website and the goals users wanted to achieve. For example, criteria such as ease of payment or security are not very important for educational websites but are especially important for online shopping. Evaluating the quality of a website therefore requires careful attention to establishing a specific context of use and purpose [19].

The context of the participants was also discussed in relation to the generalizability of results. For example, when evaluations used potential or current users of a website, it was important that participants' computer literacy reflected that of all users [20]. This could mean recruiting participants with a range of computer abilities and experience so that results were not biased toward the most or least experienced users.

Intervention

A total of 43 evaluation methodologies were identified in the 69 studies in this review. Most were variations of similar methodologies, and a brief description of each is provided in Multimedia Appendix 2. Multimedia Appendix 3 shows the methods used or described in each study.

Questionnaire

Questionnaires were the most commonly referenced methodology (37/69, 54%), including questions to rank or rate attributes and open questions that allowed text feedback and suggestions for improvement. Questionnaires were administered before usability testing, after it, or both, to assess usability and the overall user experience.
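Rating questions of this kind are often summarized per attribute. The sketch below is a minimal example under stated assumptions: the 5-point scale (1 = poor, 5 = excellent), the attribute names, and the four participants' responses are all invented, and the "top-2 share" (proportion of ratings of 4 or 5) is one common summary, not one prescribed by the reviewed studies.

```python
from statistics import mean, median

# Hypothetical 5-point ratings (1 = poor, 5 = excellent) from four participants.
responses = [
    {"navigation": 4, "content": 5, "appearance": 3},
    {"navigation": 2, "content": 4, "appearance": 4},
    {"navigation": 5, "content": 4, "appearance": 3},
    {"navigation": 3, "content": 5, "appearance": 2},
]


def summarize(responses):
    """Return the per-attribute mean, median, and share of ratings >= 4."""
    summary = {}
    for attribute in responses[0]:
        scores = [r[attribute] for r in responses]
        summary[attribute] = {
            "mean": mean(scores),
            "median": median(scores),
            "top2_share": sum(s >= 4 for s in scores) / len(scores),
        }
    return summary


summary = summarize(responses)
```

The median is reported alongside the mean because Likert responses are ordinal, and some analysts prefer not to average them.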

Observed Browsing the Website

Observed browsing of the website, using a form of task completion with the participant such as a cognitive walkthrough, was used in 33/69 studies (48%). In a cognitive walkthrough, an expert evaluator follows a detailed procedure to simulate task execution and browse all particular solution paths, examining each action while determining whether the user's expected goals and memory content would lead to choosing the correct option [30]. Screen capture was often used (n=6) to record participants' navigation through the website, and eye tracking was used (n=7) to assess where the eye focuses on each page or how the eye moves as an individual views a Web page. The think-aloud protocol was used (n=10) to encourage users to express out loud what they were looking at, thinking, doing, and feeling as they performed tasks; this allows observers to see and understand the cognitive processes associated with task completion. Recording the time to complete tasks (n=6) and mouse movements or clicks (n=8) was used to assess the efficiency of the websites.
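Time-on-task and click counts can be turned into simple efficiency measures. This is a minimal sketch assuming a hypothetical `TaskAttempt` record and invented session data. It counts only completed attempts toward mean time and clicks, which is one reasonable convention rather than a method taken from the reviewed studies.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    participant: str
    task: str
    completed: bool
    seconds: float  # time from task start to completion or abandonment
    clicks: int


# Invented observations for a single task.
attempts = [
    TaskAttempt("P1", "find contact page", True, 42.0, 5),
    TaskAttempt("P2", "find contact page", True, 30.0, 3),
    TaskAttempt("P3", "find contact page", False, 120.0, 14),
]


def efficiency(attempts, task):
    """Completion rate, plus mean time and clicks over completed attempts only."""
    rows = [a for a in attempts if a.task == task]
    done = [a for a in rows if a.completed]
    return {
        "completion_rate": len(done) / len(rows),
        "mean_seconds_completed": mean(a.seconds for a in done) if done else None,
        "mean_clicks_completed": mean(a.clicks for a in done) if done else None,
    }


result = efficiency(attempts, "find contact page")
```

Abandoned attempts are excluded from the timing averages so that a long, failed attempt does not inflate the typical completion time. The failure still shows up in the completion rate.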

Qualitative Data Collection

Several forms of qualitative data collection, including observed browsing, interviews, and focus groups, were used in 27/69 studies (39%), either before or after participants used the website. Before website use, qualitative research was often used to establish which website attributes were important to participants or what weighting participants would give to each attribute. After the evaluation, qualitative techniques were used to collate feedback on the quality of the website and any suggestions for improvement.
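When participants assign weightings to attributes before website use, those weights can later be combined with attribute ratings. The sketch below computes a weighted mean, which is one possible aggregation; the reviewed studies do not prescribe a formula. The weights and ratings are invented, and the `weighted_score` helper is hypothetical.

```python
def weighted_score(weights: dict[str, float], ratings: dict[str, float]) -> float:
    """Weighted mean of attribute ratings; weights need not sum to 1."""
    total_weight = sum(weights[a] for a in ratings)
    if total_weight == 0:
        raise ValueError("weights for the rated attributes sum to zero")
    return sum(weights[a] * ratings[a] for a in ratings) / total_weight


# Hypothetical weights elicited before use and mean ratings collected afterward.
weights = {"content": 0.5, "navigation": 0.3, "appearance": 0.2}
ratings = {"content": 4.5, "navigation": 3.5, "appearance": 3.0}
score = weighted_score(weights, ratings)
```

Dividing by the total weight keeps the score on the original rating scale, even if participants' weights do not sum to 1.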

Automated Usability Evaluation Software

In 9/69 studies (13%), automated usability evaluation focused on developing software, tools, and techniques that speed up evaluation (rapid), reach a wider audience for usability testing (remote), or have built-in analysis features (automated). The last of these can involve assessing server logs, website code, and simulations of user experience to assess usability [42].
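Server logs support one simple automated check: finding requests that return "not found" errors, which point to broken links. The sketch below assumes the logs use the Common Log Format (`host ident authuser [date] "request" status bytes`) and that the sample lines are invented. It shows a single narrow check and does not represent a full automated usability tool.

```python
import re
from collections import Counter

# Common Log Format: host ident authuser [date] "METHOD path protocol" status bytes
CLF = re.compile(r'^(\S+) \S+ \S+ \[([^\]]+)\] "(\S+) (\S+)[^"]*" (\d{3}) (\S+)')


def broken_link_report(lines):
    """Count responses by status code and list paths that returned 404."""
    status_counts = Counter()
    not_found = Counter()
    for line in lines:
        match = CLF.match(line)
        if not match:
            continue  # skip lines that are not in Common Log Format
        path, status = match.group(4), match.group(5)
        status_counts[status] += 1
        if status == "404":
            not_found[path] += 1
    return status_counts, not_found


sample_log = [
    '127.0.0.1 - - [10/Oct/2016:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '127.0.0.1 - - [10/Oct/2016:13:56:01 +0000] "GET /old-page.html HTTP/1.1" 404 512',
    '10.0.0.2 - - [10/Oct/2016:13:57:12 +0000] "GET /old-page.html HTTP/1.1" 404 512',
]
status_counts, not_found = broken_link_report(sample_log)
```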

Card Sorting

Card sorting, a technique often linked with assessing the navigability of a website, was used in 5/69 studies (7%). It is useful for discovering the logical structure of an unsorted list of statements or ideas by exploring how people group items, and for identifying structures that maximize the probability of users finding items. This can help determine an effective website structure.
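A common way to analyze open card sorts is a co-occurrence (similarity) matrix: for each pair of cards, the proportion of participants who placed both cards in the same group. This minimal sketch uses invented card labels and sorts. Pairs that no participant grouped together are simply absent from the result.

```python
from collections import Counter
from itertools import combinations

# Each participant's sort is a list of groups; each group is a set of card labels (invented).
sorts = [
    [{"Games", "Quizzes"}, {"Teacher packs", "Lesson plans"}],
    [{"Games", "Quizzes", "Lesson plans"}, {"Teacher packs"}],
    [{"Games"}, {"Quizzes", "Teacher packs", "Lesson plans"}],
]


def co_occurrence(sorts):
    """Fraction of participants who placed each pair of cards in the same group."""
    counts = Counter()
    for groups in sorts:
        for group in groups:
            # Sorting gives each pair a single canonical (alphabetical) key.
            for a, b in combinations(sorted(group), 2):
                counts[(a, b)] += 1
    return {pair: c / len(sorts) for pair, c in counts.items()}


similarity = co_occurrence(sorts)
```

Pairs with high similarity are candidates for the same section or menu of the website. Clustering the matrix is a common next step.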

Web Usage Analysis

Three of the 69 studies used Web usage analysis, or Web analytics, to identify browsing patterns by analyzing participants' navigational behavior. This could include tracking at the widget level, that is, combining the mouse coordinates with the positions of elements such as buttons and links in the layout of the HTML pages, enabling complete tracking of all user activity.
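Widget-level tracking relies on hit testing: matching each recorded mouse coordinate to the page element whose bounding box contains it. In this minimal sketch, the page layout is assumed to be already available as rectangles in page coordinates. The `Widget` type, the layout, and the clicks are hypothetical, and later widgets are assumed to be drawn on top of earlier ones.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Widget:
    name: str
    x: int
    y: int
    width: int
    height: int

    def contains(self, px: int, py: int) -> bool:
        return self.x <= px < self.x + self.width and self.y <= py < self.y + self.height


def widget_at(layout, px, py) -> Optional[str]:
    """Return the topmost widget under (px, py), or None if nothing is hit."""
    for widget in reversed(layout):  # later widgets assumed to sit on top
        if widget.contains(px, py):
            return widget.name
    return None


layout = [
    Widget("header", 0, 0, 1024, 100),
    Widget("search button", 900, 30, 80, 40),
    Widget("games link", 20, 150, 120, 30),
]
clicks = [(910, 45), (30, 160), (500, 500)]
hits = [widget_at(layout, x, y) for x, y in clicks]
```

Here the clicks resolve to the search button, the games link, and empty space (`None`). The search button wins over the header because it is drawn on top.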

Outcomes (Attributes Used to Evaluate Websites)

Different terminology was often used to describe the same or similar website attributes (Multimedia Appendix 4). The most frequently assessed website attributes can be grouped into 8 broad categories, each with further subcategories:

- Usability or ease of use is the degree to which a website can be used to achieve given goals (n=58). It includes navigation such as intuitiveness, learnability, memorability, and information architecture; effectiveness such as errors; and efficiency.

- Content (n=41) includes completeness, accuracy, relevancy, timeliness, and understandability of the information.

- Web design criteria (n=29) include use of media, search engines, help resources, originality of the website, site map, user interface, multilanguage, and maintainability.

- Functionality (n=31) includes links, website speed, security, and compatibility with devices and browsers.

- Appearance (n=26) includes layout, font, colors, and page length.

- Interactivity (n=25) includes sense of community, such as the ability to leave feedback and comments, an option to email or share with a friend, or forum discussion boards; personalization; help options such as frequently asked questions or customer services; and background music.

- Satisfaction (n=26) includes usefulness, entertainment, look and feel, and pleasure.

- Loyalty (n=8) includes first impression of the website.
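Expressing the category counts above as shares of the 69 included studies makes them easier to compare. The percentages in this sketch are derived by simple division and are not reported in the review. Because most studies assessed several categories, the shares do not sum to 100%.

```python
# Number of studies (out of 69) assessing each attribute category, as listed above.
CATEGORY_COUNTS = {
    "Usability or ease of use": 58,
    "Content": 41,
    "Web design criteria": 29,
    "Functionality": 31,
    "Appearance": 26,
    "Interactivity": 25,
    "Satisfaction": 26,
    "Loyalty": 8,
}
TOTAL_STUDIES = 69


def ranked_shares(counts, total):
    """Return (category, percent of studies) pairs, most frequent first."""
    shares = [(name, round(100 * n / total)) for name, n in counts.items()]
    # sorted() is stable, so tied categories keep their listed order.
    return sorted(shares, key=lambda pair: pair[1], reverse=True)


ranking = ranked_shares(CATEGORY_COUNTS, TOTAL_STUDIES)
```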

GoodWeb: Website Evaluation Guide

Because such a wide range of methods was used, a suggested guide of options for evaluating websites, coined GoodWeb, is presented below (Figure 2) and applied to an evaluation of e-Bug, an international educational health website [14]. Allison et al [86] show the full details of how GoodWeb has been applied and the outcomes of the e-Bug website evaluation.

Figure 2. Framework for website evaluation.

Step 1. What Are the Important Website Attributes That Affect User's Experience of the Chosen Website?

Usability or ease of use, content, Web design criteria, functionality, appearance, interactivity, satisfaction, and loyalty were the umbrella terms that encompassed the website attributes identified or evaluated in the 69 studies in this scoping study. Multimedia Appendix 4 contains a summary of the most frequently assessed website attributes. Recent website evaluations have shifted focus from the usability of websites to users' overall experience of website use. The choice of which attributes to evaluate for a specific website could be informed by interviews or focus groups with users or experts, or by a literature search of attributes used in similar evaluations.

Application

In the scenario of evaluating e-Bug or similar educational health websites, the attributes chosen to assess could be the following:

- Appearance: colors, fonts, media or graphics, page length, style consistency, and first impression

- Content: clarity, completeness, current and timely information, relevance, reliability, and uniqueness

- Interactivity: sense of community and modern features

- Ease of use: home page indication, navigation, guidance, and multilanguage support

- Technical adequacy: compatibility with other devices, load time, valid links, and limited use of special plug-ins

- Satisfaction: loyalty

These cover the main website attributes appropriate for an educational health website. If the website does not currently have features such as a search engine, site map, or background music, it may not be appropriate to evaluate them. Instead, the evaluation could ask whether they would be suitable additions to the website, or these features could be combined under the heading "modern features." Furthermore, security may not need to be evaluated if participants do not have to provide identifiable information or bank details to use the website.
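The two filtering rules in the preceding paragraph can be written as a small selection function. This is a hypothetical sketch, not part of GoodWeb as published. The function name and the set of optional features are our own, and the feature names come from the examples given in the text.

```python
# Features evaluated only if the website already has them (examples from the text).
OPTIONAL_FEATURES = {"search engine", "site map", "background music"}


def select_attributes(candidates, features_present, collects_identifiable_data):
    """Filter candidate attributes using the two rules described in the text."""
    selected = []
    for attribute in candidates:
        if attribute in OPTIONAL_FEATURES and attribute not in features_present:
            # Absent feature: ask whether it would be a useful addition instead.
            continue
        if attribute == "security" and not collects_identifiable_data:
            # No identifiable information or bank details are needed to use the site.
            continue
        selected.append(attribute)
    return selected


chosen = select_attributes(
    ["content", "search engine", "security", "site map"],
    features_present={"site map"},
    collects_identifiable_data=False,
)
```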

Step 2. What Is the Best Way to Evaluate These Attributes?

A combination of methods is often needed to evaluate a website, because a single method may not be appropriate for assessing all attributes of interest [29] (see Multimedia Appendix 3 for a summary of the most frequently used methods for evaluating websites). For example, screen capture of task completion may be appropriate for assessing the efficiency of a website but would not be chosen to assess loyalty; a questionnaire or qualitative interview may be more appropriate for that attribute.
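Choosing a combination of methods that covers every attribute of interest can be framed as a small set-cover problem. The sketch below uses a greedy heuristic (repeatedly pick the method that covers the most remaining attributes), which is our own illustrative choice and not a procedure described in this review. Only the efficiency and loyalty entries follow the text. The content and navigation entries are hypothetical placeholders, and any attribute missing from the table raises a `KeyError`.

```python
# Candidate methods per attribute. Only "efficiency" and "loyalty" follow the text;
# the other entries are hypothetical placeholders.
CANDIDATE_METHODS = {
    "efficiency": {"screen capture of task completion"},
    "loyalty": {"questionnaire", "qualitative interview"},
    "content": {"questionnaire", "focus group"},              # hypothetical
    "navigation": {"think-aloud protocol", "card sorting"},   # hypothetical
}


def plan_methods(attributes):
    """Greedily pick methods until every attribute is covered by at least one."""
    uncovered = set(attributes)
    chosen = []
    while uncovered:
        options = {m for a in uncovered for m in CANDIDATE_METHODS[a]}
        # Prefer the method covering the most uncovered attributes; break ties by name.
        best = max(
            options,
            key=lambda m: (sum(m in CANDIDATE_METHODS[a] for a in uncovered), m),
        )
        chosen.append(best)
        uncovered = {a for a in uncovered if best not in CANDIDATE_METHODS[a]}
    return chosen


plan = plan_methods(["efficiency", "loyalty", "content"])
```

For these three attributes, the sketch selects a questionnaire (covering loyalty and content) plus screen capture of task completion (covering efficiency). This mirrors the point that no single method suffices.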