
LITERATURE REVIEW SOFTWARE FOR BETTER RESEARCH

“Litmaps is a game changer for finding novel literature... it has been invaluable for my productivity.... I also got my PhD student to use it and they also found it invaluable, finding several gaps they missed”

Varun Venkatesh

Austin Health, Australia

As a full-time researcher, Litmaps has become an indispensable tool in my arsenal. The Seed Maps and Discover features of Litmaps have transformed my literature review process, streamlining the identification of key citations while revealing previously overlooked relevant literature, ensuring no crucial connection goes unnoticed. A true game-changer indeed!

Ritwik Pandey

Doctoral Research Scholar – Sri Sathya Sai Institute of Higher Learning

Using Litmaps for my research papers has significantly improved my workflow. Typically, I start with a single paper related to my topic. Whenever I find an interesting work, I add it to my search. From there, I can quickly cover my entire Related Work section.

David Fischer

Research Associate – University of Applied Sciences Kempten

“It's nice to get a quick overview of related literature. Really easy to use, and it helps getting on top of the often complicated structures of referencing”

Christoph Ludwig

Technische Universität Dresden, Germany

“This has helped me so much in researching the literature. Currently, I am beginning to investigate new fields and this has helped me hugely”

Aran Warren

Canterbury University, NZ

“I can’t live without you anymore! I also recommend you to my students.”

Professor at The Chinese University of Hong Kong

“Seeing my literature list as a network enhances my thinking process!”

Katholieke Universiteit Leuven, Belgium

“Incredibly useful tool to get to know more literature, and to gain insight in existing research”

KU Leuven, Belgium

“As a student just venturing into the world of lit reviews, this is a tool that is outstanding and helping me find deeper results for my work.”

Franklin Jeffers

South Oregon University, USA

“Any researcher could use it! The paper recommendations are great for anyone and everyone”

Swansea University, Wales

“This tool really helped me to create good bibtex references for my research papers”

Ali Mohammed-Djafari

Director of Research at LSS-CNRS, France

“Litmaps is extremely helpful with my research. It helps me organize each one of my projects and see how they relate to each other, as well as to keep up to date on publications done in my field”

Daniel Fuller

Clarkson University, USA

As a person who is an early researcher and identifies as dyslexic, I can say that having research articles laid out in the date vs cite graph format is much more approachable than looking at a standard database interface. I feel that the maps Litmaps offers lower the barrier of entry for researchers by giving them the connections between articles spaced out visually. This helps me orientate where a paper is in the history of a field. Thus, new researchers can look at one of Litmap's "seed maps" and have the same information as hours of digging through a database.

Baylor Fain

Postdoctoral Associate – University of Florida

Accelerate your research with the best systematic literature review tools

The ideal literature review tool helps you make sense of the most important insights in your research field. ATLAS.ti empowers researchers to perform powerful and collaborative analysis using the leading software for literature review.

Finalize your literature review faster with comfort

ATLAS.ti makes it easy to manage, organize, and analyze articles, PDFs, excerpts, and more for your projects. Conduct a deep systematic literature review and get the insights you need with a comprehensive toolset built specifically for your research projects.

Figure out the "why" behind your participant's motivations

Understand the behaviors and emotions that are driving your focus group participants. With ATLAS.ti, you can transform your raw data and turn it into qualitative insights you can learn from. Easily determine user intent in the same spot you're deciphering your overall focus group data.

Visualize your research findings like never before

We make it simple to present your analysis results with meaningful charts, networks, and diagrams. Instead of figuring out how to communicate the insights you just unlocked, we enable you to leverage easy-to-use visualizations that support your goals.

Everything you need to elevate your literature review

Import and organize literature data.

Import and analyze any type of text content – ATLAS.ti supports all standard text and transcription files such as Word and PDF.

Analyze with ease and speed

Utilize easy-to-learn workflows that save valuable time, such as auto coding, sentiment analysis, team collaboration, and more.

Leverage AI-driven tools

Make efficiency a priority and let ATLAS.ti do your work with AI-powered research tools and features for faster results.

Visualize and present findings

With just a few clicks, you can create meaningful visualizations like charts, word clouds, tables, networks, among others for your literature data.

The faster way to make sense of your literature review. Try it for free, today.

A literature review analyzes the most current research within a research area. A literature review consists of published studies from many sources:

- Peer-reviewed academic publications

- Full-length books

- University bulletins

- Conference proceedings

- Dissertations and theses

Literature reviews allow researchers to:

- Summarize the state of the research

- Identify unexplored research inquiries

- Recommend practical applications

- Critique currently published research

Literature reviews are either standalone publications or part of a paper as background for an original research project. A literature review, as a section of a more extensive research article, summarizes the current state of the research to justify the primary research described in the paper.

For example, a researcher may have reviewed the literature on a new supplement's health benefits and concluded that more research needs to be conducted on those with a particular condition. This research gap warrants a study examining how this understudied population reacted to the supplement. Researchers need to establish this research gap through a literature review to persuade journal editors and reviewers of the value of their research.

Consider a literature review as a typical research publication presenting a study, its results, and the salient points scholars can infer from the study. The only significant difference is that a literature review treats existing literature as the research data to collect and analyze. From that analysis, a literature review can suggest new inquiries to pursue.

Identify a focus

Similar to a typical study, a literature review should have a research question or questions that analysis can answer. This sort of inquiry typically targets a particular phenomenon, population, or even research method to examine how different studies have looked at the same thing differently. A literature review, then, should center the literature collection around that focus.

Collect and analyze the literature

With a focus in mind, a researcher can collect studies that provide relevant information for that focus. They can then analyze the collected studies by finding and identifying patterns or themes that occur frequently. This analysis allows the researcher to point out what the field has frequently explored or, on the other hand, overlooked.

Suggest implications

The literature review allows the researcher to argue a particular point through the evidence provided by the analysis. For example, suppose the analysis makes it apparent that the published research on people's sleep patterns has not adequately explored the connection between sleep and a particular factor (e.g., television-watching habits, indoor air quality). In that case, the researcher can argue that further study can address this research gap.

External requirements aside (e.g., many academic journals have a word limit of 6,000-8,000 words), a literature review as a standalone publication is as long as necessary to allow readers to understand the current state of the field. Even if it is just a section in a larger paper, a literature review is long enough to allow the researcher to justify the study that is the paper's focus.

Note that a literature review needs only to incorporate a representative number of studies relevant to the research inquiry. For term papers in university courses, 10 to 20 references might be appropriate for demonstrating analytical skills. Published literature reviews in peer-reviewed journals might have 40 to 50 references. One of the essential goals of a literature review is to persuade readers that you have analyzed a representative segment of the research you are reviewing.

Researchers can find published research from various online sources:

- Journal websites

- Research databases

- Search engines (Google Scholar, Semantic Scholar)

- Research repositories

- Social networking sites (Academia, ResearchGate)

Many journals make articles freely available under the term "open access," meaning that there are no restrictions to viewing and downloading such articles. Otherwise, collecting research articles from restricted journals usually requires access from an institution such as a university or a library.

Evidence of a rigorous literature review is more important than the word count or the number of articles that undergo data analysis. Especially when writing for a peer-reviewed journal, it is essential to consider how to demonstrate research rigor in your literature review to persuade reviewers of its scholarly value.

Select field-specific journals

The most significant research relevant to your field is usually concentrated in a narrow set of journals similar in aims and scope. Consider who the most prominent scholars in your field are and determine which journals publish their research or have them as editors or reviewers. Journals tend to look favorably on systematic reviews that include articles they have published.

Incorporate recent research

Recently published studies have greater value in determining the gaps in the current state of research. Older research is likely to have encountered challenges and critiques that may render their findings outdated or refuted. What counts as recent differs by field; start by looking for research published within the last three years and gradually expand to older research when you need to collect more articles for your review.
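The "start recent, then widen" advice can be sketched in a few lines of Python. This is only an illustration: the function name `collect_recent`, the `year` field, and the default limits are assumptions made for the example, not part of any tool mentioned here.

```python
def collect_recent(articles, current_year, minimum, start_window=3, oldest_year=1990):
    """Widen the publication window one year at a time until enough articles are found.

    `articles` is a list of dicts with a "year" key (a hypothetical record format).
    Returns the selected articles and the window size (in years) that was needed.
    """
    window = start_window
    while True:
        cutoff = current_year - window
        selected = [a for a in articles if a["year"] >= cutoff]
        # Stop when there are enough articles or the search cannot go back further.
        if len(selected) >= minimum or cutoff <= oldest_year:
            return selected, window
        window += 1


# Hypothetical collection: only the publication years matter for this sketch.
articles = [{"year": y} for y in (2023, 2022, 2019, 2017, 2010)]
selected, window = collect_recent(articles, current_year=2024, minimum=3)
print(len(selected), window)
```

Recording the final window makes it easy to report, in the review itself, how far back the search had to go.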

Consider the quality of the research

Literature reviews are only as strong as the quality of the studies that the researcher collects. You can judge any particular study by many factors, including:

- the quality of the article's journal

- the article's research rigor

- the timeliness of the research

The critical point here is that you should consider more than just a study's findings or research outputs when including research in your literature review.

Narrow your research focus

Ideally, the articles you collect for your literature review have something in common, such as a research method or research context. For example, if you are conducting a literature review about teaching practices in high school contexts, it is best to narrow your literature search to studies focusing on high school. You should consider expanding your search to junior high school and university contexts only when there are not enough studies that match your focus.

You can create a project in ATLAS.ti for keeping track of your collected literature. ATLAS.ti allows you to view and analyze full text articles and PDF files in a single project. Within projects, you can use document groups to separate studies into different categories for easier and faster analysis.

For example, a researcher with a literature review that examines studies across different countries can create document groups labeled "United Kingdom," "Germany," and "United States," among others. A researcher can also use ATLAS.ti's global filters to narrow analysis to a particular set of studies and gain insights about a smaller set of literature.

ATLAS.ti allows you to search, code, and analyze text documents and PDF files. You can treat a set of research articles like other forms of qualitative data. The codes you apply to your literature collection allow for analysis through many powerful tools in ATLAS.ti:

- Code Co-Occurrence Explorer

- Code Co-Occurrence Table

- Code-Document Table
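To give a feel for what a code co-occurrence count expresses, here is a minimal, tool-independent sketch in Python. It does not reproduce ATLAS.ti's own tools; it simply assumes each coded text segment is available as a set of code names (the codes below are invented for the example) and counts how often two codes appear on the same segment.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(coded_segments):
    """Count how often each pair of codes is applied to the same segment."""
    counts = Counter()
    for codes in coded_segments:
        # Sort so that each pair is always stored in the same order.
        for pair in combinations(sorted(set(codes)), 2):
            counts[pair] += 1
    return counts


# Hypothetical codes applied to three excerpts from collected articles.
segments = [
    {"sleep", "screen time"},
    {"sleep", "air quality"},
    {"sleep", "screen time", "method: survey"},
]
counts = co_occurrence(segments)
for pair, n in counts.most_common():
    print(pair, n)
```

Pairs with high counts point to themes the literature treats together; pairs that never co-occur may hint at a gap.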

Other tools in ATLAS.ti employ machine learning to facilitate parts of the coding process for you. Some of our software tools that are effective for analyzing literature include:

- Named Entity Recognition

- Opinion Mining

- Sentiment Analysis

As long as your documents are text documents or text-enabled PDF files, ATLAS.ti's automated tools can provide essential assistance in the data analysis process.

Writing in the Health and Social Sciences: Literature Reviews and Synthesis Tools

- Journal Publishing

- Style and Writing Guides

- Readings about Writing

- Citing in APA Style This link opens in a new window

- Resources for Dissertation Authors

- Citation Management and Formatting Tools

- What are Literature Reviews?

- Conducting & Reporting Systematic Reviews

- Finding Systematic Reviews

- Tutorials & Tools for Literature Reviews

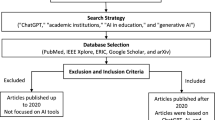

Systematic Literature Reviews: Steps & Resources

The steps for conducting a systematic literature review are listed below.

Also see subpages for more information about:

- The different types of literature reviews, including systematic reviews and other evidence synthesis methods

- Tools & Tutorials

Literature Review & Systematic Review Steps

- Develop a Focused Question

- Scope the Literature (Initial Search)

- Refine & Expand the Search

- Limit the Results

- Download Citations

- Abstract & Analyze

- Create Flow Diagram

- Synthesize & Report Results

1. Develop a Focused Question

Consider the PICO Format: Population/Problem, Intervention, Comparison, Outcome

Focus on defining the Population or Problem and Intervention (don't narrow by Comparison or Outcome just yet!)

"What are the effects of the Pilates method for patients with low back pain?"

Tools & Additional Resources:

- PICO Question Help

- Stillwell, S. B., Fineout-Overholt, E., Melnyk, B. M., & Williamson, K. M. (2010). Evidence-based practice, step by step: Asking the clinical question. AJN The American Journal of Nursing, 110(3), 58-61. doi: 10.1097/01.NAJ.0000368959.11129.79

2. Scope the Literature

A "scoping search" investigates the breadth and/or depth of the initial question or may identify a gap in the literature.

Eligible studies may be located by searching in:

- Background sources (books, point-of-care tools)

- Article databases

- Trial registries

- Grey literature

- Cited references

- Reference lists

When searching, if possible, translate terms to controlled vocabulary of the database. Use text word searching when necessary.

Use Boolean operators to connect search terms:

- Combine separate concepts with AND (resulting in a narrower search)

- Connect synonyms with OR (resulting in an expanded search)

Search: pilates AND ("low back pain" OR backache )
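The same AND/OR logic can be expressed as a small helper that assembles a search string from concept groups. This is a sketch only: `build_query` is a hypothetical function written for this example, and real databases add their own syntax (field tags, controlled vocabulary) that it does not handle.

```python
def build_query(concepts):
    """Join synonyms with OR inside each concept, then join concepts with AND."""
    groups = []
    for synonyms in concepts:
        # Quote multi-word phrases so they are searched as a single term.
        terms = [f'"{t}"' if " " in t else t for t in synonyms]
        joined = " OR ".join(terms)
        # Parentheses are only needed when a concept has several synonyms.
        groups.append(f"({joined})" if len(terms) > 1 else joined)
    return " AND ".join(groups)


query = build_query([["pilates"], ["low back pain", "backache"]])
print(query)  # pilates AND ("low back pain" OR backache)
```

Keeping each concept as its own list makes it easy to add synonyms later without rewriting the whole search string.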

Video Tutorials - Translating PICO Questions into Search Queries

- Translate Your PICO Into a Search in PubMed (YouTube, Carrie Price, 5:11)

- Translate Your PICO Into a Search in CINAHL (YouTube, Carrie Price, 4:56)

3. Refine & Expand Your Search

Expand your search strategy with synonymous search terms harvested from:

- database thesauri

- reference lists

- relevant studies

Example:

(pilates OR exercise movement techniques) AND ("low back pain" OR backache* OR sciatica OR lumbago OR spondylosis)
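To see why the expanded strategy retrieves more (and which records it still excludes), the sketch below applies the same logic to a few made-up titles. It is a simplification under stated assumptions: the functions are hypothetical, the titles are invented, a trailing `*` is treated as "any word ending", and no real database matches text exactly this way.

```python
import re


def term_matches(term, text):
    """True if the term occurs in the text; a trailing * matches any word ending."""
    if term.endswith("*"):
        pattern = r"\b" + re.escape(term[:-1]) + r"\w*"
    else:
        pattern = r"\b" + re.escape(term) + r"\b"
    return re.search(pattern, text, flags=re.IGNORECASE) is not None


def record_matches(concepts, text):
    """AND across concepts, OR among the synonyms within each concept."""
    return all(any(term_matches(t, text) for t in synonyms) for synonyms in concepts)


concepts = [
    ["pilates", "exercise movement techniques"],
    ["low back pain", "backache*", "sciatica", "lumbago", "spondylosis"],
]
titles = [  # invented titles, for illustration only
    "Pilates for chronic backaches in adults",
    "Pilates and balance in older adults",
    "Yoga for sciatica",
]
hits = [t for t in titles if record_matches(concepts, t)]
print(hits)
```

Only the first title satisfies both concepts: the second lacks a back-pain term, and the third lacks an intervention term.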

As you develop a final, reproducible strategy for each database, save your strategies in:

- a personal database account (e.g., MyNCBI for PubMed)

- Log in with your NYU credentials

- Open and "Make a Copy" to create your own tracker for your literature search strategies

4. Limit Your Results

Use database filters to limit your results based on your defined inclusion/exclusion criteria. In addition to relying on the databases' categorical filters, you may also need to manually screen results.

- Limit by article type, e.g., "randomized controlled trial" OR "multicenter study"

- Limit by publication years, age groups, language, etc.

NOTE: Many databases allow you to filter to "Full Text Only". This filter is not recommended. It excludes articles if their full text is not available in that particular database (CINAHL, PubMed, etc.), but if the article is relevant, it is important that you are able to read its title and abstract, regardless of 'full text' status. The full text is likely to be accessible through another source (a different database, or Interlibrary Loan).

- Filters in PubMed

- CINAHL Advanced Searching Tutorial

5. Download Citations

Selected citations and/or entire sets of search results can be downloaded from the database into a citation management tool. If you are conducting a systematic review that will require reporting according to PRISMA standards, a citation manager can help you keep track of the number of articles that came from each database, as well as the number of duplicate records.

In Zotero, you can create a Collection for the combined results set, and sub-collections for the results from each database you search. You can then use Zotero's "Duplicate Items" function to find and merge duplicate records.

- Citation Managers - General Guide
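If you want to check the per-database and duplicate counts yourself, for example from exported records, the bookkeeping can be sketched as below. This is not how Zotero detects duplicates; it is a minimal illustration that assumes each record is a dict with hypothetical `database`, `title`, and `doi` fields, and it treats two records as duplicates when they share a DOI or, lacking one, a normalized title. The records and DOIs are placeholders.

```python
from collections import Counter


def normalize_title(title):
    """Lowercase the title and drop everything except letters and digits."""
    return "".join(ch for ch in title.lower() if ch.isalnum())


def record_key(record):
    """Prefer the DOI as identity; fall back to the normalized title."""
    doi = (record.get("doi") or "").strip().lower()
    return ("doi", doi) if doi else ("title", normalize_title(record["title"]))


def deduplicate(records):
    """Return unique records, the count per database, and the number of duplicates."""
    seen = set()
    unique = []
    for record in records:
        key = record_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    per_database = Counter(r["database"] for r in records)
    return unique, per_database, len(records) - len(unique)


records = [  # placeholder records, not real citations
    {"database": "PubMed", "title": "Pilates for low back pain", "doi": "10.1000/example.1"},
    {"database": "CINAHL", "title": "Pilates for Low Back Pain.", "doi": "10.1000/EXAMPLE.1"},
    {"database": "CINAHL", "title": "Core stability and backache", "doi": ""},
    {"database": "PubMed", "title": "Core Stability and Backache", "doi": None},
]
unique, per_database, duplicates = deduplicate(records)
print(len(unique), dict(per_database), duplicates)
```

A record exported with a DOI from one database and without one from another would not be caught by this simple key, so a manual check of near-duplicates is still worthwhile.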

6. Abstract and Analyze

- Migrate citations to data collection/extraction tool

- Screen Title/Abstracts for inclusion/exclusion

- Screen and appraise full text for relevance, methods,

- Resolve disagreements by consensus
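When two reviewers screen independently, the records that need a consensus discussion are simply those where their decisions differ. A minimal sketch, assuming each reviewer's decisions are stored as a dict from a record ID to "include" or "exclude" (the IDs and the function name are hypothetical):

```python
def find_conflicts(reviewer_a, reviewer_b):
    """Return the IDs of records that both reviewers screened but decided differently."""
    shared = reviewer_a.keys() & reviewer_b.keys()
    return sorted(rid for rid in shared if reviewer_a[rid] != reviewer_b[rid])


reviewer_a = {"rec1": "include", "rec2": "exclude", "rec3": "include"}
reviewer_b = {"rec1": "include", "rec2": "include", "rec3": "exclude"}
conflicts = find_conflicts(reviewer_a, reviewer_b)
print(conflicts)  # ['rec2', 'rec3']
```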

Covidence is a web-based tool that enables you to work with a team to screen titles/abstracts and full text for inclusion in your review, as well as extract data from the included studies.

- Covidence Support

- Critical Appraisal Tools

- Data Extraction Tools

7. Create Flow Diagram

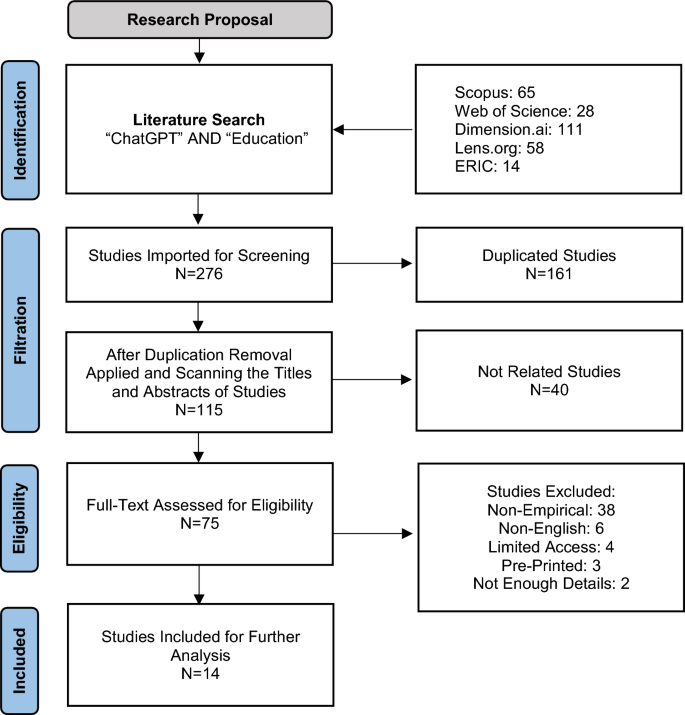

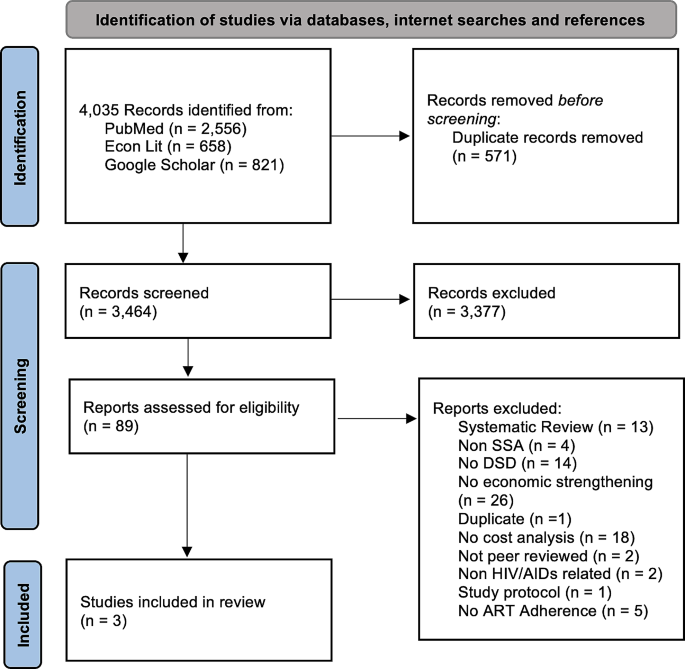

The PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) flow diagram is a visual representation of the flow of records through different phases of a systematic review. It depicts the number of records identified, included and excluded. It is best used in conjunction with the PRISMA checklist.

Example from: Stotz, S. A., McNealy, K., Begay, R. L., DeSanto, K., Manson, S. M., & Moore, K. R. (2021). Multi-level diabetes prevention and treatment interventions for Native people in the USA and Canada: A scoping review. Current Diabetes Reports, 21(11), 46. https://doi.org/10.1007/s11892-021-01414-3

- PRISMA Flow Diagram Generator (ShinyApp.io, Haddaway et al.)

- PRISMA Diagram Templates (Word and PDF)

- Make a copy of the file to fill out the template

- Image can be downloaded as PDF, PNG, JPG, or SVG

- Covidence generates a PRISMA diagram that is automatically updated as records move through the review phases
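The numbers in a flow diagram follow from simple subtraction, which is worth checking before filling in a template. The sketch below uses a simplified set of boxes (identified, screened, full text assessed, included); the full PRISMA 2020 diagram has additional boxes that are not modeled here, and all names and counts are invented for the example.

```python
def prisma_counts(identified_per_db, duplicates, excluded_at_screening, excluded_at_full_text):
    """Derive the main flow-diagram numbers from the counts recorded at each phase."""
    identified = sum(identified_per_db.values())
    screened = identified - duplicates
    full_text_assessed = screened - excluded_at_screening
    included = full_text_assessed - excluded_at_full_text
    if min(screened, full_text_assessed, included) < 0:
        raise ValueError("Counts are inconsistent: more records removed than available.")
    return {
        "identified": identified,
        "screened": screened,
        "full_text_assessed": full_text_assessed,
        "included": included,
    }


# Invented counts for illustration.
flow = prisma_counts(
    identified_per_db={"PubMed": 120, "CINAHL": 80},
    duplicates=35,
    excluded_at_screening=130,
    excluded_at_full_text=20,
)
print(flow)
```

With these invented counts, 200 records are identified, 165 are screened after removing duplicates, 35 are assessed in full text, and 15 are included.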

8. Synthesize & Report Results

There are a number of reporting guidelines available to guide the synthesis and reporting of results in systematic literature reviews.

It is common to organize findings in a matrix, also known as a Table of Evidence (ToE).

- Reporting Guidelines for Systematic Reviews

- Download a sample template of a health sciences review matrix (GoogleSheets)
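A review matrix is just a table with one row per study and one column per attribute you want to compare. As a rough sketch, the snippet below writes such a table as CSV so it can be opened in any spreadsheet program; the column names are an assumption for illustration (use whatever your template or reporting guideline calls for), and the row is a placeholder rather than a real study.

```python
import csv
import io

# Assumed column set; adapt it to your own template.
COLUMNS = ["citation", "design", "sample", "intervention", "outcomes", "key_findings"]


def matrix_to_csv(rows):
    """Serialize evidence-matrix rows to CSV text, leaving missing cells empty."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [  # placeholder entry, not a real study
    {"citation": "Study A (placeholder)", "design": "RCT", "sample": "n = 40"},
]
output = matrix_to_csv(rows)
print(output)
```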

Steps modified from:

Cook, D. A., & West, C. P. (2012). Conducting systematic reviews in medical education: a stepwise approach. Medical Education, 46(10), 943–952.


- Last Updated: May 15, 2024 11:19 AM

- URL: https://guides.nyu.edu/healthwriting

Literature Review Tips & Tools

- Tips & Examples

Organizational Tools

Tools for systematic reviews.

- Bubbl.us Free online brainstorming/mindmapping tool that also has a free iPad app.

- Coggle Another free online mindmapping tool.

- Organization & Structure tips from Purdue University Online Writing Lab

- Literature Reviews from The Writing Center at University of North Carolina at Chapel Hill Gives several suggestions and descriptions of ways to organize your lit review.

- Cochrane Handbook for Systematic Reviews of Interventions "The Cochrane Handbook for Systematic Reviews of Interventions is the official guide that describes in detail the process of preparing and maintaining Cochrane systematic reviews on the effects of healthcare interventions. "

- Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) website "PRISMA is an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses. PRISMA focuses on the reporting of reviews evaluating randomized trials, but can also be used as a basis for reporting systematic reviews of other types of research, particularly evaluations of interventions."

- PRISMA Flow Diagram Generator Free tool that will generate a PRISMA flow diagram from a CSV file (sample CSV template provided). Please cite as: Haddaway, N. R., Page, M. J., Pritchard, C. C., & McGuinness, L. A. (2022). PRISMA2020: An R package and Shiny app for producing PRISMA 2020-compliant flow diagrams, with interactivity for optimised digital transparency and Open Synthesis. Campbell Systematic Reviews, 18, e1230. https://doi.org/10.1002/cl2.1230

- Rayyan "Rayyan is a 100% FREE web application to help systematic review authors perform their job in a quick, easy and enjoyable fashion. Authors create systematic reviews, collaborate on them, maintain them over time and get suggestions for article inclusion."

- Covidence Covidence is a tool to help manage systematic reviews (and create PRISMA flow diagrams). UMass Amherst doesn't subscribe, but Covidence offers a free trial for 1 review of no more than 500 records. It is also set up for researchers to pay for each review.

- PROSPERO - Systematic Review Protocol Registry "PROSPERO accepts registrations for systematic reviews, rapid reviews and umbrella reviews. PROSPERO does not accept scoping reviews or literature scans. Sibling PROSPERO sites registers systematic reviews of human studies and systematic reviews of animal studies."

- Critical Appraisal Tools from JBI Joanna Briggs Institute at the University of Adelaide provides these checklists to help evaluate different types of publications that could be included in a review.

- Systematic Review Toolbox "The Systematic Review Toolbox is a community-driven, searchable, web-based catalogue of tools that support the systematic review process across multiple domains. The resource aims to help reviewers find appropriate tools based on how they provide support for the systematic review process. Users can perform a simple keyword search (i.e. Quick Search) to locate tools, a more detailed search (i.e. Advanced Search) allowing users to select various criteria to find specific types of tools and submit new tools to the database. Although the focus of the Toolbox is on identifying software tools to support systematic reviews, other tools or support mechanisms (such as checklists, guidelines and reporting standards) can also be found."

- Abstrackr Free, open-source tool that "helps you upload and organize the results of a literature search for a systematic review. It also makes it possible for your team to screen, organize, and manipulate all of your abstracts in one place." -From Center for Evidence Synthesis in Health

- SRDR Plus (Systematic Review Data Repository: Plus) An open-source tool for extracting, managing, and archiving data developed by the Center for Evidence Synthesis in Health at Brown University

- RoB 2 Tool (Risk of Bias for Randomized Trials) A revised Cochrane risk of bias tool for randomized trials


- Last Updated: Apr 2, 2024 4:46 PM

- URL: https://guides.library.umass.edu/litreviews

© 2022 University of Massachusetts Amherst • Site Policies • Accessibility

Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Writing a Literature Review

Welcome to the Purdue OWL

This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

A literature review is a document or section of a document that collects key sources on a topic and discusses those sources in conversation with each other (also called synthesis). The lit review is an important genre in many disciplines, not just literature (i.e., the study of works of literature such as novels and plays). When we say “literature review” or refer to “the literature,” we are talking about the research (scholarship) in a given field. You will often see the terms “the research,” “the scholarship,” and “the literature” used mostly interchangeably.

Where, when, and why would I write a lit review?

There are a number of different situations where you might write a literature review, each with slightly different expectations; different disciplines, too, have field-specific expectations for what a literature review is and does. For instance, in the humanities, authors might include more overt argumentation and interpretation of source material in their literature reviews, whereas in the sciences, authors are more likely to report study designs and results in their literature reviews; these differences reflect these disciplines’ purposes and conventions in scholarship. You should always look at examples from your own discipline and talk to professors or mentors in your field to be sure you understand your discipline’s conventions, for literature reviews as well as for any other genre.

A literature review can be a part of a research paper or scholarly article, usually falling after the introduction and before the research methods sections. In these cases, the lit review just needs to cover scholarship that is important to the issue you are writing about; sometimes it will also cover key sources that informed your research methodology.

Lit reviews can also be standalone pieces, either as assignments in a class or as publications. In a class, a lit review may be assigned to help students familiarize themselves with a topic and with scholarship in their field, get an idea of the other researchers working on the topic they’re interested in, find gaps in existing research in order to propose new projects, and/or develop a theoretical framework and methodology for later research. As a publication, a lit review usually is meant to help make other scholars’ lives easier by collecting and summarizing, synthesizing, and analyzing existing research on a topic. This can be especially helpful for students or scholars getting into a new research area, or for directing an entire community of scholars toward questions that have not yet been answered.

What are the parts of a lit review?

Most lit reviews use a basic introduction-body-conclusion structure; if your lit review is part of a larger paper, the introduction and conclusion pieces may be just a few sentences while you focus most of your attention on the body. If your lit review is a standalone piece, the introduction and conclusion take up more space and give you a place to discuss your goals, research methods, and conclusions separately from where you discuss the literature itself.

Introduction:

- An introductory paragraph that explains what your working topic and thesis are

- A forecast of key topics or texts that will appear in the review

- Potentially, a description of how you found sources and how you analyzed them for inclusion and discussion in the review (more often found in published, standalone literature reviews than in lit review sections in an article or research paper)

Body:

- Summarize and synthesize: Give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: Don’t just paraphrase other researchers – add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically Evaluate: Mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: Use transition words and topic sentences to draw connections, comparisons, and contrasts.

Conclusion:

- Summarize the key findings you have taken from the literature and emphasize their significance

- Connect it back to your primary research question

How should I organize my lit review?

Lit reviews can take many different organizational patterns depending on what you are trying to accomplish with the review. Here are some examples:

- Chronological: The simplest approach is to trace the development of the topic over time, which helps familiarize the audience with the topic (for instance if you are introducing something that is not commonly known in your field). If you choose this strategy, be careful to avoid simply listing and summarizing sources in order. Try to analyze the patterns, turning points, and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred (as mentioned previously, this may not be appropriate in your discipline; check with a teacher or mentor if you’re unsure).

- Thematic: If you have found some recurring central themes that you will continue working with throughout your piece, you can organize your literature review into subsections that address different aspects of the topic. For example, if you are reviewing literature about women and religion, key themes can include the role of women in churches and the religious attitude towards women.

- Qualitative versus quantitative research

- Empirical versus theoretical scholarship

- Divide the research by sociological, historical, or cultural sources

- Theoretical: In many humanities articles, the literature review is the foundation for the theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts. You can argue for the relevance of a specific theoretical approach or combine various theoretical concepts to create a framework for your research.

What are some strategies or tips I can use while writing my lit review?

Any lit review is only as good as the research it discusses; make sure your sources are well-chosen and your research is thorough. Don’t be afraid to do more research if you discover a new thread as you’re writing. More info on the research process is available in our "Conducting Research" resources.

As you’re doing your research, create an annotated bibliography (see our page on this type of document). Much of the information used in an annotated bibliography can also be used in a literature review, so you’ll be not only partially drafting your lit review as you research, but also developing your sense of the larger conversation going on among scholars, professionals, and any other stakeholders in your topic.

Usually you will need to synthesize research rather than just summarizing it. This means drawing connections between sources to create a picture of the scholarly conversation on a topic over time. Many student writers struggle to synthesize because they feel they don’t have anything to add to the scholars they are citing; here are some strategies to help you:

- It often helps to remember that the point of these kinds of syntheses is to show your readers how you understand your research, to help them read the rest of your paper.

- Writing teachers often say synthesis is like hosting a dinner party: imagine all your sources are together in a room, discussing your topic. What are they saying to each other?

- Look at the in-text citations in each paragraph. Are you citing just one source for each paragraph? This usually indicates summary only. When you have multiple sources cited in a paragraph, you are more likely to be synthesizing them (not always, but often).

- Read more about synthesis here.
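The citation check in the list above can be roughed out in code. This is a minimal sketch, not a standard tool: it assumes parenthetical author-year citations such as "(Smith, 2020; Jones, 2019)", the function names are made up for illustration, and the sample authors are invented. A paragraph it flags is only a candidate for revision, since one citation does not always mean summary.

```python
import re

# Assumption: citations are parenthetical author-year, e.g. "(Smith, 2020)".
# Numbered or footnote styles would need a different pattern.
PAREN = re.compile(r"\(([^()]*\d{4}[a-z]?[^()]*)\)")


def cited_sources(paragraph: str) -> set[str]:
    """Return the distinct author-year citations found in one paragraph."""
    sources = set()
    for group in PAREN.findall(paragraph):
        # A single parenthesis may hold several sources separated by ";".
        for part in group.split(";"):
            part = part.strip()
            if re.search(r"\d{4}", part):
                sources.add(part)
    return sources


def flag_summary_paragraphs(text: str) -> list[int]:
    """Return indexes of paragraphs that cite exactly one distinct source."""
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    return [i for i, p in enumerate(paragraphs) if len(cited_sources(p)) == 1]
```

Running `flag_summary_paragraphs` on a draft returns the positions of paragraphs worth a second look.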

The most interesting literature reviews are often written as arguments (again, as mentioned at the beginning of the page, this is discipline-specific and doesn’t work for all situations). Often, the literature review is where you can establish your research as filling a particular gap or as relevant in a particular way. You have some chance to do this in your introduction in an article, but the literature review section gives a more extended opportunity to establish the conversation in the way you would like your readers to see it. You can choose the intellectual lineage you would like to be part of and whose definitions matter most to your thinking (mostly humanities-specific, but this goes for sciences as well). In addressing these points, you argue for your place in the conversation, which tends to make the lit review more compelling than a simple reporting of other sources.

Citations + Writing


A Literature Review Matrix and a Literature Synthesis Matrix will help you organize the articles, etc. you've found along the way and will help you prepare to write your literature review.

Writing a Literature Review

Unless you have a reason to present the literature chronologically (to show development over time, perhaps), the preferred method for organizing your literature is thematically.

Step 1 : Identify your themes.

Step 2 : Identify the articles that address those themes

Step 3 : Identify the similarities and differences among the articles within the themes

A Literature Synthesis Matrix will be especially helpful in this process.


Tools to Help you Organize your Literature Review

Literature Review Matrix

This type of matrix will help you see the content of all of your articles at a glance. Each row represents an article, and each column an element of the articles. Typical columns can include things like

- Research method

- Sample size

- Theoretical framework

However, the exact columns you choose depend on the elements of each study you want to discuss in your paper. You get to decide!

Here are a couple of examples to give you a better idea.

- An evolutionary concept analysis of helicopter parenting. (Lee et al., 2014)

- The use of video conferencing for persons with chronic conditions: A systematic review. (Mallow et al., 2016)
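If you prefer to keep the matrix in a spreadsheet-friendly file, the layout described above (one row per article, one column per element) can be sketched in a few lines of Python. The column names follow the typical columns listed earlier; the two rows are placeholders, not real studies, and the function name is just an illustration.

```python
import csv
import io

# Columns follow the typical elements named above; adjust them to your paper.
COLUMNS = ["citation", "research_method", "sample_size", "theoretical_framework"]

# Placeholder rows (hypothetical): replace with notes from your own reading.
articles = [
    {"citation": "Author A (2020)", "research_method": "survey",
     "sample_size": 120, "theoretical_framework": "Framework X"},
    {"citation": "Author B (2021)", "research_method": "interviews",
     "sample_size": 15, "theoretical_framework": "Framework Y"},
]


def to_matrix_csv(rows, columns):
    """Write one row per article and one column per study element."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


matrix_csv = to_matrix_csv(articles, COLUMNS)
print(matrix_csv)
```

The resulting text can be saved with a `.csv` extension and opened in any spreadsheet program.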

Literature Synthesis Matrix

In essence a synthesis matrix is a way to organize your literature by theme, which is generally the way writers organize their whole literature reviews. The real benefit is that it helps you identify the articles that talk about the same themes so that you can write about them together in your literature review.
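As a rough illustration of that benefit, the sketch below turns per-article notes into a theme-by-article grouping and then picks out the themes that more than one article addresses. The article labels and themes are invented placeholders, and the function names are assumptions made for this example.

```python
from collections import defaultdict

# Hypothetical reading notes: which themes each article addresses.
article_themes = {
    "Author A (2020)": ["definitions", "outcomes"],
    "Author B (2021)": ["outcomes"],
    "Author C (2019)": ["definitions", "measurement"],
}


def build_synthesis_matrix(article_themes):
    """Group articles under each theme they discuss."""
    by_theme = defaultdict(list)
    for article, themes in article_themes.items():
        for theme in themes:
            by_theme[theme].append(article)
    return dict(by_theme)


def shared_themes(by_theme):
    """Keep only themes covered by two or more articles."""
    return {theme: arts for theme, arts in by_theme.items() if len(arts) > 1}


matrix = build_synthesis_matrix(article_themes)
for theme, arts in shared_themes(matrix).items():
    print(theme, "->", ", ".join(arts))
```

Themes that appear in `shared_themes` are the ones where sources can be written about together rather than one at a time.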

North Carolina State has a very nice description and example of the process.

This YouTube video also explains the process.

Here is a template you can use (this one is in Word instead of PPT).


- Last Updated: May 22, 2024 1:17 PM

- URL: https://libguides.massgeneral.org/citations-writing

Literature review: your definitive guide

Joanna Wilkinson

This is our ultimate guide on how to write a narrative literature review. It forms part of our Research Smarter series .

How do you write a narrative literature review?

Researchers worldwide are increasingly reliant on literature reviews. That’s because review articles provide you with a broad picture of the field, and help to synthesize published research that’s expanding at a rapid pace .

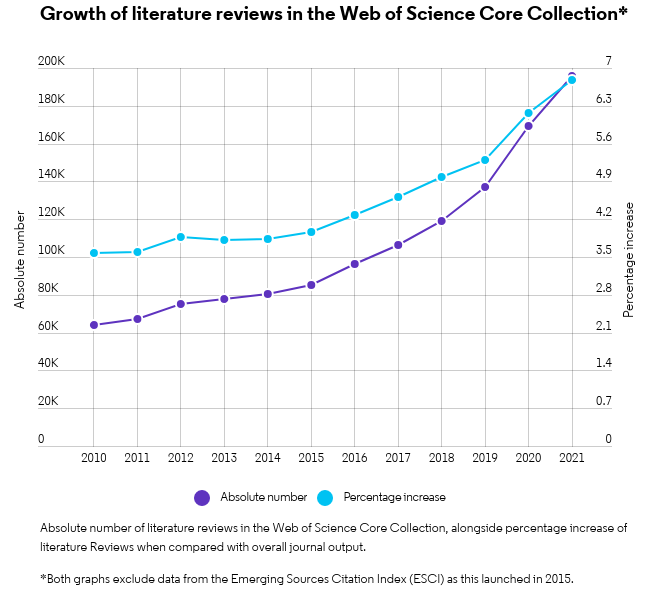

In some academic fields, researchers publish more literature reviews than original research papers. The graph below shows the substantial growth of narrative literature reviews in the Web of Science™, alongside the percentage increase of reviews when compared to all document types.

It’s critical that researchers across all career levels understand how to produce an objective, critical summary of published research. This is no easy feat, but a necessary one. Professionally constructed literature reviews – whether written by a student in class or an experienced researcher for publication – should aim to add to the literature rather than detract from it.

To help you write a narrative literature review, we’ve put together some top tips in this blog post.

Best practice tips to write a narrative literature review:

- Don’t miss a paper: tips for a thorough topic search

- Identify key papers (and know how to use them)

- Tips for working with co-authors

- Find the right journal for your literature review using actual data

- Discover literature review examples and templates

We’ll also provide an overview of all the products helpful for your next narrative review, including the Web of Science, EndNote™ and Journal Citation Reports™.

1. Don’t miss a paper: tips for a thorough topic search

Once you’ve settled on your research question, coming up with a good set of keywords to find papers on your topic can be daunting. This isn’t surprising. Put simply, if you fail to include a relevant paper when you write a narrative literature review, the omission will probably get picked up by your professor or peer reviewers. The end result will likely be a low mark or an unpublished manuscript, neither of which will do justice to your many months of hard work.

Research databases and search engines are an integral part of any literature search. It’s important you utilize as many options available through your library as possible. This will help you search an entire discipline (as well as across disciplines) for a thorough narrative review.

We provide a short summary of the various databases and search engines in an earlier Research Smarter blog . These include the Web of Science , Science.gov and the Directory of Open Access Journals (DOAJ).


Searching the Web of Science

The Web of Science is a multidisciplinary research engine that contains over 170 million papers from more than 250 academic disciplines. All of the papers in the database are interconnected via citations. That means once you get started with your keyword search, you can follow the trail of cited and citing papers to efficiently find all the relevant literature. This is a great way to ensure you’re not missing anything important when you write a narrative literature review.
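To make the idea of following cited and citing papers concrete, here is a small sketch of a breadth-first walk over a citation graph. It does not call the Web of Science or any other service: the graph is a hypothetical in-memory dictionary, and the function name and paper labels are made up for illustration.

```python
from collections import deque

# Hypothetical citation data: each paper maps to the papers it cites.
cites = {
    "seed": ["A", "B"],
    "A": ["C"],
    "B": [],
    "C": [],
    "D": ["seed"],
    "E": ["D"],
}


def citation_neighbourhood(cites, start, max_hops):
    """Return {paper: hops} for papers reachable via cited or citing links."""
    # Build the reverse direction (who cites a given paper).
    cited_by = {}
    for paper, refs in cites.items():
        for ref in refs:
            cited_by.setdefault(ref, []).append(paper)

    seen = {start: 0}
    queue = deque([start])
    while queue:
        paper = queue.popleft()
        if seen[paper] == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in cites.get(paper, []) + cited_by.get(paper, []):
            if nxt not in seen:
                seen[nxt] = seen[paper] + 1
                queue.append(nxt)
    return seen


print(citation_neighbourhood(cites, "seed", 2))
```

Limiting the number of hops keeps the candidate list manageable; each extra hop tends to widen the set considerably.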

We recommend starting your search in the Web of Science Core Collection™. This database covers more than 21,000 carefully selected journals. It is a trusted source to find research papers, and discover top authors and journals (read more about its coverage here ).

Learn more about exploring the Core Collection in our blog, How to find research papers: five tips every researcher should know . Our blog covers various tips, including how to:

- Perform a topic search (and select your keywords)

- Explore the citation network

- Refine your results (refining your search results by reviews, for example, will help you avoid duplication of work, as well as identify trends and gaps in the literature)

- Save your search and set up email alerts

Try our tips on the Web of Science now.

2. Identify key papers (and know how to use them)

As you explore the Web of Science, you may notice that certain papers are marked as “Highly Cited.” These papers can play a significant role when you write a narrative literature review.

Highly Cited papers are recently published papers getting the most attention in your field right now. They form the top 1% of papers based on the number of citations received, compared to other papers published in the same field in the same year.
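The "top 1% within the same field and year" idea can be illustrated with a short sketch. This is only an approximation of the definition given above, not the procedure Clarivate actually uses: the data layout, the function name, and the rounding rule (always flag at least one paper per group) are all assumptions.

```python
from collections import defaultdict


def top_share_by_field_year(papers, percent=1):
    """Flag the most-cited `percent` of papers within each (field, year) group.

    `papers` is a list of (paper_id, field, year, citations) tuples.
    """
    groups = defaultdict(list)
    for paper_id, field, year, citations in papers:
        groups[(field, year)].append((citations, paper_id))

    flagged = set()
    for members in groups.values():
        # Highest citation counts first; ties are broken by paper id.
        members.sort(reverse=True)
        # Integer ceiling of len * percent / 100, so small groups flag one paper.
        keep = (len(members) * percent + 99) // 100
        flagged.update(paper_id for _, paper_id in members[:keep])
    return flagged
```

Comparing papers only against others from the same field and year matters because citation counts grow with age and differ widely between disciplines.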

You will want to identify Highly Cited research as a group of papers. This group will help guide your analysis of the future of the field and opportunities for future research. This is an important component of your conclusion.

“Writing reviews is hard work… [it] not only organizes published papers, but also positions them in the academic process and presents the future direction.” Prof. Susumu Kitagawa, Highly Cited Researcher, Kyoto University

3. Tips for working with co-authors

Writing a narrative review on your own is hard, but it can be even more challenging if you’re collaborating with a team, especially if your coauthors are working across multiple locations. Luckily, reference management software can improve the coordination between you and your co-authors—both around the department and around the world.

We’ve written about how to use EndNote’s Cite While You Write feature, which will help you save hundreds of hours when writing research . Here, we discuss the features that give you greater ease and control when collaborating with your colleagues.

Use EndNote for narrative reviews

Sharing references is essential for successful collaboration. With EndNote, you can store and share as many references, documents and files as you need with up to 100 people using the software.

You can share simultaneous access to one reference library, regardless of your colleague’s location or organization. You can also choose the type of access each user has on an individual basis. For example, Read-Write access means a select colleague can add and delete references, annotate PDF articles and create custom groups. They’ll also be able to see up to 500 of the team’s most recent changes to the reference library. Read-only is also an option for individuals who don’t need that level of access.

EndNote helps you overcome research limitations by synchronizing library changes every 15 minutes. That means your team can stay up-to-date at any time of the day, supporting an easier, more successful collaboration.

Start your free EndNote trial today .

4. Finding a journal for your literature review

Finding the right journal for your literature review can be a particular pain point for those of you who want to publish. The expansion of scholarly journals has made the task extremely difficult, and can potentially delay the publication of your work by many months.

We’ve written a blog about how you can find the right journal for your manuscript using a rich array of data. You can read our blog here, or head straight to EndNote’s Manuscript Matcher or Journal Citation Reports to try out the best tools for the job.

5. Discover literature review examples and templates

There are a few tips we haven’t covered in this blog, including how to decide on an area of research, develop an interesting storyline, and highlight gaps in the literature. We’ve listed a few blogs here that might help you with this, alongside some literature review examples and outlines to get you started.

Literature Review examples:

- Aggregation-induced emission

- Development and applications of CRISPR-Cas9 for genome engineering

- Object based image analysis for remote sensing

(Make sure you download the free EndNote™ Click browser plugin to access the full-text PDFs).

Templates and outlines:

- Learn how to write a review of literature , Univ. of Wisconsin – Madison

- Structuring a literature review , Australian National University

- Matrix Method for Literature Review: The Review Matrix , Duquesne University

Additional resources:

- Ten simple rules for writing a literature review , Editor, PLoS Computational Biology

- Video: How to write a literature review , UC San Diego Psychology


The Sheridan Libraries

- Write a Literature Review


Introduction

Literature reviews take time. Here is some general information to know before you start.

- VIDEO -- This video is a great overview of the entire process (2020; North Carolina State University Libraries). The transcript is included. This is for everyone; ignore the mention of "graduate students." It runs 9.5 minutes, and every second is important.

- OVERVIEW -- Read this page from Purdue's OWL. It's not long, and gives some tips to fill in what you just learned from the video.

- NOT A RESEARCH ARTICLE -- A literature review follows a different style, format, and structure from a research article.

Steps to Completing a Literature Review


- Last Updated: Sep 26, 2023 10:25 AM

- URL: https://guides.library.jhu.edu/lit-review

7 open source tools to make literature reviews easy

Opensource.com

A good literature review is critical for academic research in any field, whether it is for a research article, a critical review for coursework, or a dissertation. In a recent article, I presented detailed steps for doing a literature review using open source software .

The following is a brief summary of seven free and open source software tools described in that article that will make your next literature review much easier.

1. GNU Linux

Most literature reviews are accomplished by graduate students working in research labs in universities. For absurd reasons, graduate students often have the worst computers on campus. They are often old, slow, and clunky Windows machines that have been discarded and recycled from the undergraduate computer labs. Installing a flavor of GNU Linux will breathe new life into these outdated PCs. There are more than 100 distributions , all of which can be downloaded and installed for free on computers. Most popular Linux distributions come with a "try-before-you-buy" feature. For example, with Ubuntu you can make a bootable USB stick that allows you to test-run the Ubuntu desktop experience without interfering in any way with your PC configuration. If you like the experience, you can use the stick to install Ubuntu on your machine permanently.

2. Firefox

Linux distributions generally come with a free web browser, and the most popular is Firefox. Two Firefox plugins that are particularly useful for literature reviews are Unpaywall and Zotero. Keep reading to learn why.

3. Unpaywall

Often one of the hardest parts of a literature review is gaining access to the papers you want to read for your review. The unintended consequence of copyright restrictions and paywalls is that they have narrowed access to the peer-reviewed literature to the point that even Harvard University is challenged to pay for it. Fortunately, there are a lot of open access articles: about a third of the literature is free (and the percentage is growing). Unpaywall is a Firefox plugin that enables researchers to click a green tab on the side of the browser and skip the paywall on millions of peer-reviewed journal articles. This makes finding accessible copies of articles much faster than searching each database individually. Unpaywall is fast, free, and legal, as it accesses many of the open access sites that I covered in my paper on using open source in lit reviews.

4. Zotero

Formatting references is the most tedious of academic tasks. Zotero can save you from ever doing it again. It operates as an Android app, desktop program, and a Firefox plugin (which I recommend). It is a free, easy-to-use tool to help you collect, organize, cite, and share research. It replaces the functionality of proprietary packages such as RefWorks, EndNote, and Papers for zero cost. Zotero can auto-add bibliographic information directly from websites. In addition, it can scrape bibliographic data from PDF files. Notes can be easily added on each reference. Finally, and most importantly, it can import and export the bibliography databases in all publishers' various formats. With this feature, you can export bibliographic information to paste into a document editor for a paper or thesis, or even to a wiki for dynamic collaborative literature reviews (see tool #7 for more on the value of wikis in lit reviews).

5. LibreOffice

Your thesis or academic article can be written conventionally with the free office suite LibreOffice , which operates similarly to Microsoft's Office products but respects your freedom. Zotero has a word processor plugin to integrate directly with LibreOffice. LibreOffice is more than adequate for the vast majority of academic paper writing.

6. LaTeX

If LibreOffice is not enough for your layout needs, you can take your paper writing one step further with LaTeX, a high-quality typesetting system specifically designed for producing technical and scientific documentation. LaTeX is particularly useful if your writing has a lot of equations in it. Also, Zotero libraries can be directly exported to BibTeX files for use with LaTeX.

7. MediaWiki

If you want to leverage the open source way to get help with your literature review, you can facilitate a dynamic collaborative literature review . A wiki is a website that allows anyone to add, delete, or revise content directly using a web browser. MediaWiki is free software that enables you to set up your own wikis.

Researchers can (in decreasing order of complexity): 1) set up their own research group wiki with MediaWiki, 2) utilize wikis already established at their universities (e.g., Aalto University ), or 3) use wikis dedicated to areas that they research. For example, several university research groups that focus on sustainability (including mine ) use Appropedia , which is set up for collaborative solutions on sustainability, appropriate technology, poverty reduction, and permaculture.

Using a wiki makes it easy for anyone in the group to keep track of the status of and update literature reviews (both current and older or from other researchers). It also enables multiple members of the group to easily collaborate on a literature review asynchronously. Most importantly, it enables people outside the research group to help make a literature review more complete, accurate, and up-to-date.

Wrapping up

Free and open source software can cover the entire lit review toolchain, meaning there's no need for anyone to use proprietary solutions. Do you use other libre tools for making literature reviews or other academic work easier? Please let us know your favorites in the comments.


10 Best Literature Review Tools for Researchers


Boost your research game with these Best Literature Review Tools for Researchers! Uncover hidden gems, organize your findings, and ace your next research paper!

Conducting literature reviews poses challenges for researchers due to the overwhelming volume of information available and the lack of efficient methods to manage and analyze it.

Researchers struggle to identify key sources, extract relevant information, and maintain accuracy while manually conducting literature reviews. This leads to inefficiency, errors, and difficulty in identifying gaps or trends in existing literature.

Advancements in technology have resulted in a variety of literature review tools. These tools streamline the process, offering features like automated searching, filtering, citation management, and research data extraction. They save time, improve accuracy, and provide valuable insights for researchers.

In this article, we present a curated list of the 10 best literature review tools, empowering researchers to make informed choices and revolutionize their systematic literature review process.


Top 10 Literature Review Tools for Researchers: In A Nutshell (2023)

#1. Semantic Scholar – A free, AI-powered research tool for scientific literature

Semantic Scholar is a cutting-edge literature review tool that researchers rely on for its comprehensive access to academic publications. With its advanced AI algorithms and extensive database, it simplifies the discovery of relevant research papers.

By employing semantic analysis, users can explore scholarly articles based on context and meaning, making it a go-to resource for scholars across disciplines.

Additionally, Semantic Scholar offers personalized recommendations and alerts, ensuring researchers stay updated with the latest developments. However, users should be cautious of potential limitations.

Not all scholarly content may be indexed, and occasional false positives or inaccurate associations can occur. Furthermore, the tool primarily focuses on computer science and related fields, potentially limiting coverage in other disciplines.

Researchers should be mindful of these considerations and supplement Semantic Scholar with other reputable resources for a comprehensive literature review. Despite these caveats, Semantic Scholar remains a valuable tool for streamlining research and staying informed.

#2. Elicit – Research assistant using language models like GPT-3

Elicit is a game-changing literature review tool that has gained popularity among researchers worldwide. With its user-friendly interface and extensive database of scholarly articles, it streamlines the research process, saving time and effort.

The tool employs advanced algorithms to provide personalized recommendations, ensuring researchers discover the most relevant studies for their field. Elicit also promotes collaboration by enabling users to create shared folders and annotate articles.

However, users should be cautious when using Elicit. It is important to verify the credibility and accuracy of the sources found through the tool, as the database encompasses a wide range of publications.

Additionally, occasional glitches in the search function have been reported, leading to incomplete or inaccurate results. While Elicit offers tremendous benefits, researchers should remain vigilant and cross-reference information to ensure a comprehensive literature review.

#3. Scite.Ai – Your personal research assistant

Scite.Ai is a popular literature review tool that revolutionizes the research process for scholars. With its innovative citation analysis feature, researchers can evaluate the credibility and impact of scientific articles, making informed decisions about their inclusion in their own work.

By assessing the context in which citations are used, Scite.Ai ensures that the sources selected are reliable and of high quality, enabling researchers to establish a strong foundation for their research.

However, while Scite.Ai offers numerous advantages, there are a few aspects to be cautious about. As with any data-driven tool, occasional errors or inaccuracies may arise, necessitating researchers to cross-reference and verify results with other reputable sources.

Moreover, Scite.Ai’s coverage may be limited in certain subject areas and languages, with a possibility of missing relevant studies, especially in niche fields or non-English publications.

Therefore, researchers should supplement the use of Scite.Ai with additional resources to ensure comprehensive literature coverage and avoid any potential gaps in their research.

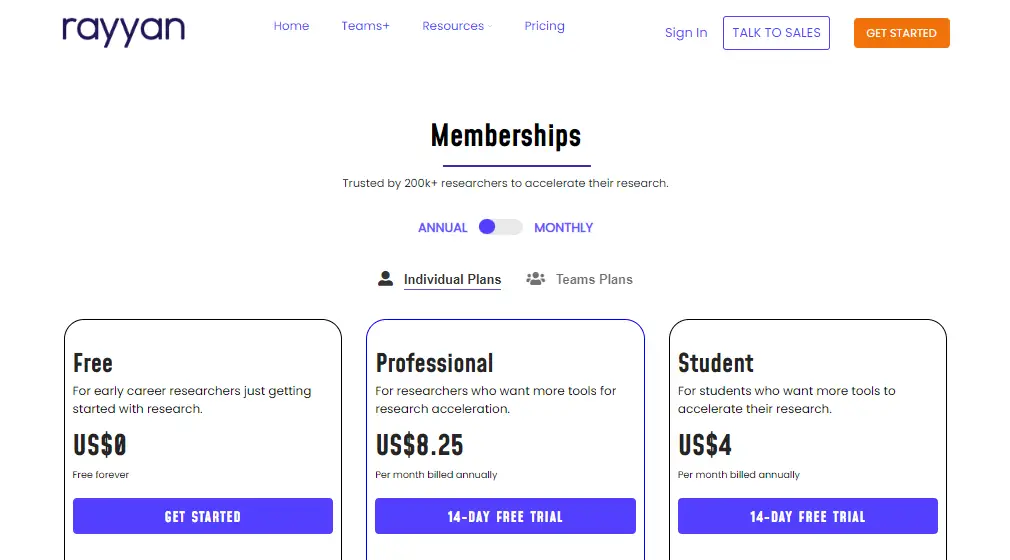

Scite.Ai offers the following paid plans:

- Monthly Plan: $20

- Yearly Plan: $12

#4. DistillerSR – Literature Review Software

DistillerSR is a powerful literature review tool trusted by researchers for its user-friendly interface and robust features. With its advanced search capabilities, researchers can quickly find relevant studies from multiple databases, saving time and effort.

The tool offers comprehensive screening and data extraction functionalities, streamlining the review process and improving the reliability of findings. Real-time collaboration features also facilitate seamless teamwork among researchers.

While DistillerSR offers numerous advantages, there are a few considerations. Users should invest time in understanding the tool’s features and functionalities to maximize its potential. Additionally, the pricing structure may be a factor for individual researchers or small teams with limited budgets.

Despite occasional technical glitches reported by some users, the developers actively address these issues through updates and improvements, ensuring a better user experience.

Overall, DistillerSR empowers researchers to navigate the vast sea of information, enhancing the quality and efficiency of literature reviews while fostering collaboration among research teams .

#5. Rayyan – AI Powered Tool for Systematic Literature Reviews

Rayyan is a powerful literature review tool that simplifies the research process for scholars and academics. With its user-friendly interface and efficient management features, Rayyan is highly regarded by researchers worldwide.

It allows users to import and organize large volumes of scholarly articles, making it easier to identify relevant studies for their research projects. The tool also facilitates seamless collaboration among team members, enhancing productivity and streamlining the research workflow.

However, it’s important to be aware of a few aspects. The free version of Rayyan has limitations, and upgrading to a premium subscription may be necessary for additional functionalities.

Users should also be mindful of occasional technical glitches and compatibility issues, promptly reporting any problems. Despite these considerations, Rayyan remains a valuable asset for researchers, providing an effective solution for literature review tasks.

Rayyan offers both free and paid plans:

- Professional: $8.25/month

- Student: $4/month

- Pro Team: $8.25/month

- Team+: $24.99/month

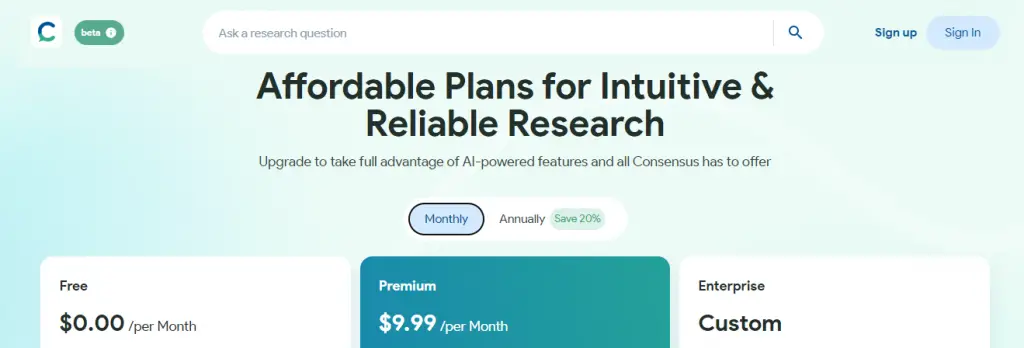

#6. Consensus – Use AI to find you answers in scientific research

Consensus is a cutting-edge literature review tool that has become a go-to choice for researchers worldwide. Its intuitive interface and powerful capabilities make it a preferred tool for navigating and analyzing scholarly articles.

With Consensus, researchers can save significant time by efficiently organizing and accessing relevant research material. People consider Consensus for several reasons.

Its advanced search algorithms and filters help researchers sift through vast amounts of information, ensuring they focus on the most relevant articles. By streamlining the literature review process, Consensus allows researchers to extract valuable insights and accelerate their research progress.

However, there are a few factors to watch out for when using Consensus. As with any automated tool, researchers should exercise caution and independently verify the accuracy and relevance of the generated results. Complex or niche topics may present challenges, resulting in limited search results. Researchers should also supplement Consensus with manual searches to ensure comprehensive coverage of the literature.

Overall, Consensus is a valuable resource for researchers seeking to optimize their literature review process. By leveraging its features alongside critical thinking and manual searches, researchers can enhance the efficiency and effectiveness of their work, advancing their research endeavors to new heights.

Consensus offers both free and paid plans:

- Premium: $9.99/month

- Enterprise: Custom

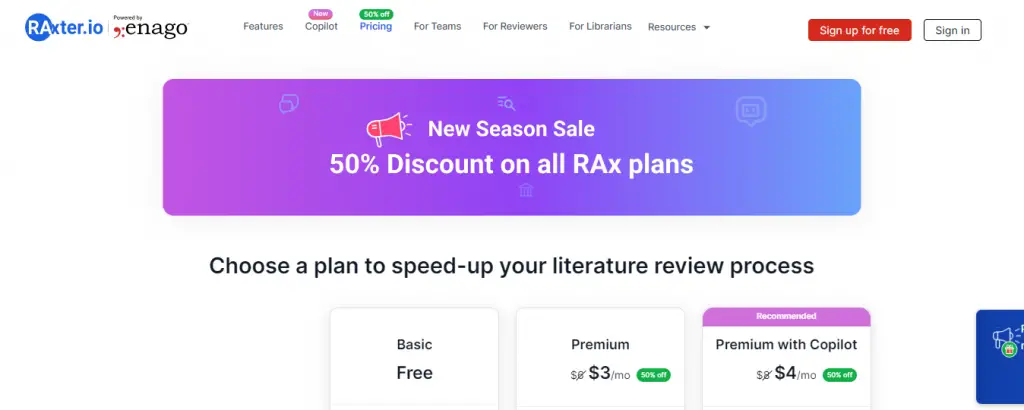

#7. RAx – AI-powered reading assistant

RAx is a revolutionary literature review tool that has transformed the research process for scholars worldwide. With its user-friendly interface and advanced features, it offers a vast database of academic publications across various disciplines, providing access to relevant and up-to-date literature.

Using advanced algorithms and machine learning, RAx delivers personalized recommendations, saving researchers time and effort in their literature search.

However, researchers should be cautious of potential biases in the recommendation system and supplement their search with manual verification to ensure a comprehensive review.

Additionally, occasional inaccuracies in metadata have been reported, making it essential for users to cross-reference information with reliable sources. Despite these considerations, RAx remains an invaluable tool for enhancing the efficiency and quality of literature reviews.

RAx offers both free and paid plans. Currently offering 50% discounts as of July 2023:

- Premium: $3/month (discounted from $6/month)

- Premium with Copilot: $4/month (discounted from $8/month)

#8. Lateral – Advance your research with AI

“Lateral” is a revolutionary literature review tool trusted by researchers worldwide. With its user-friendly interface and powerful search capabilities, it simplifies the process of gathering and analyzing scholarly articles.

By leveraging advanced algorithms and machine learning, Lateral saves researchers precious time by retrieving relevant articles and uncovering new connections between them, fostering interdisciplinary exploration.

While Lateral provides numerous benefits, users should exercise caution. It is advisable to cross-reference its findings with other sources to ensure a comprehensive review.

Additionally, researchers must be mindful of potential biases introduced by the tool’s algorithms and should critically evaluate and interpret the results.

Despite these considerations, Lateral remains an indispensable resource, empowering researchers to delve deeper into their fields of study and make valuable contributions to the academic community.

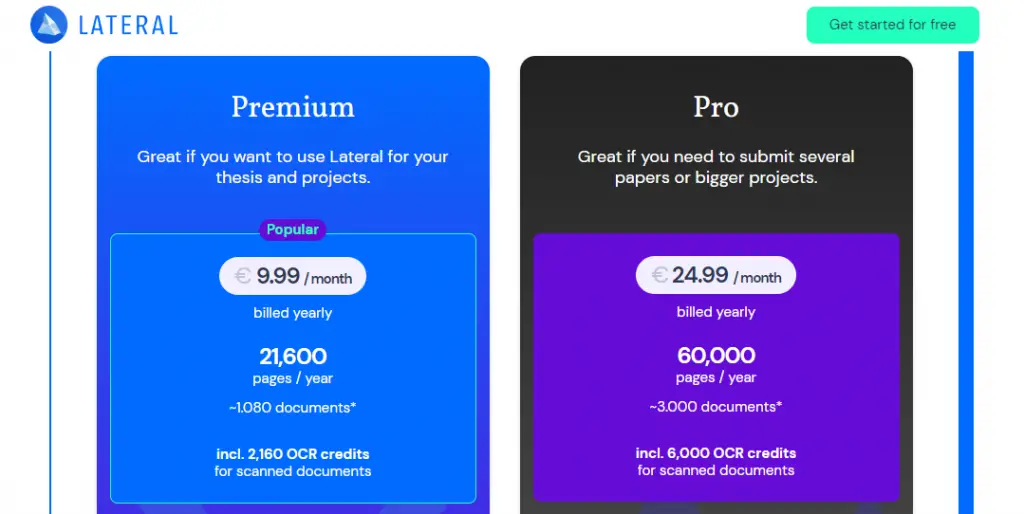

Lateral offers both free and paid plans:

- Premium: $10.98

- Pro: $27.46

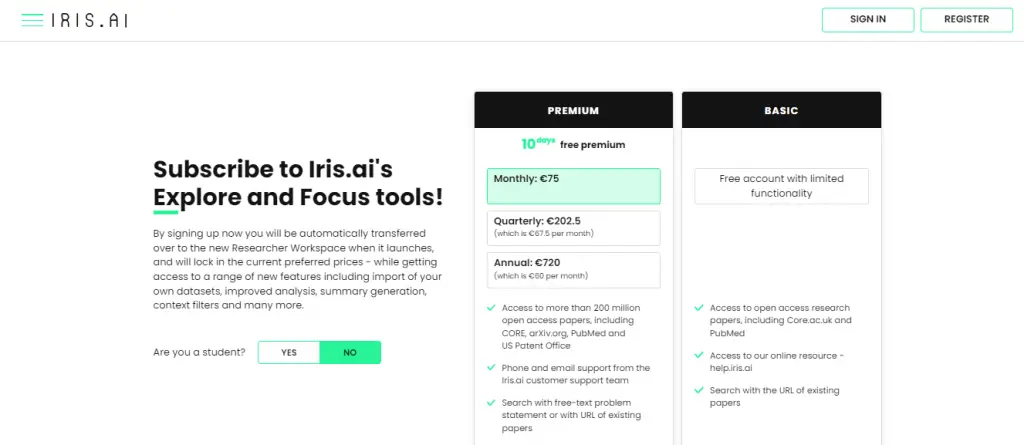

#9. Iris AI – Introducing the researcher workspace

Iris AI is an innovative literature review tool that has transformed the research process for academics and scholars. With its advanced artificial intelligence capabilities, Iris AI offers a seamless and efficient way to navigate through a vast array of academic papers and publications.

Researchers are drawn to this tool because it saves valuable time by automating the tedious task of literature review and provides comprehensive coverage across multiple disciplines.

Its intelligent recommendation system suggests related articles, enabling researchers to discover hidden connections and broaden their knowledge base. However, caution should be exercised while using Iris AI.

While the tool excels at surfacing relevant papers, researchers should independently evaluate the quality and validity of the sources to ensure the reliability of their work.

It’s important to note that Iris AI may occasionally miss niche or lesser-known publications, necessitating a supplementary search using traditional methods.

Additionally, being an algorithm-based tool, there is a possibility of false positives or missed relevant articles due to the inherent limitations of automated text analysis. Nevertheless, Iris AI remains an invaluable asset for researchers, enhancing the quality and efficiency of their research endeavors.

Iris AI offers different pricing plans to cater to various user needs:

- Basic: Free

- Premium: Monthly ($82.41), Quarterly ($222.49), and Annual ($791.07)

#10. Scholarcy – Summarize your literature through AI

Scholarcy is a powerful literature review tool that helps researchers streamline their work. By employing advanced algorithms and natural language processing, it efficiently analyzes and summarizes academic papers, saving researchers valuable time.

Scholarcy’s ability to extract key information and generate concise summaries makes it an attractive option for scholars looking to quickly grasp the main concepts and findings of multiple papers.

However, it is important to exercise caution when relying solely on Scholarcy. While it provides a useful starting point, engaging with the original research papers is crucial to ensure a comprehensive understanding.

Scholarcy’s automated summarization may not capture the nuanced interpretations or contextual information presented in the full text.

Researchers should also be aware that certain types of documents, particularly those with heavy mathematical or technical content, may pose challenges for the tool.

Despite these considerations, Scholarcy remains a valuable resource for researchers seeking to enhance their literature review process and improve overall efficiency.

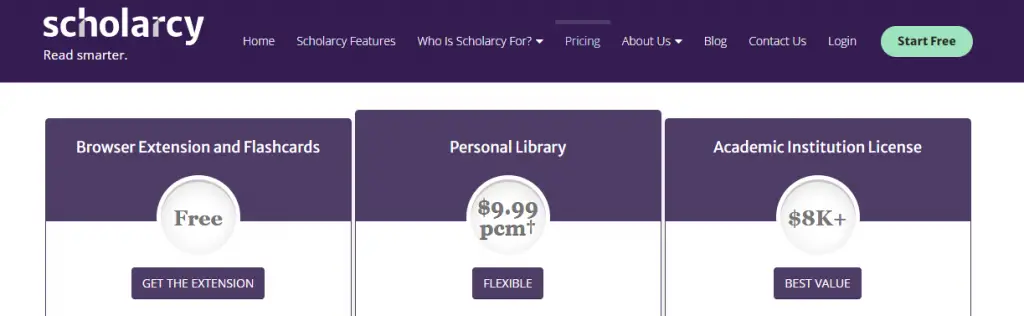

Scholarcy offers the following pricing plans:

- Browser Extension and Flashcards: Free

- Personal Library: $9.99

- Academic Institution License: $8K+

Final Thoughts

A comprehensive literature review is a crucial part of any research project, and reliable, efficient tools can greatly facilitate it. This article has explored 10 literature review tools that have gained popularity among researchers.

Moreover, the rise of AI-powered tools like Iris.ai and Sci.ai promises to revolutionize the literature review process by automating various tasks and enhancing research efficiency.

Ultimately, the choice of literature review tool depends on individual preferences and research needs, but the tools presented in this article serve as valuable resources to enhance the quality and productivity of research endeavors.

Researchers are encouraged to explore and utilize these tools to stay at the forefront of knowledge in their respective fields and contribute to the advancement of science and academia.

Q1. What are literature review tools for researchers?

Literature review tools for researchers are software or online platforms designed to assist researchers in efficiently conducting literature reviews. These tools help researchers find, organize, analyze, and synthesize relevant academic papers and other sources of information.

Q2. What criteria should researchers consider when choosing literature review tools?

When choosing literature review tools, researchers should consider factors such as the tool’s search capabilities, database coverage, user interface, collaboration features, citation management, annotation and highlighting options, integration with reference management software, and data extraction capabilities.

It’s also essential to consider the tool’s accessibility, cost, and technical support.

Q3. Are there any literature review tools specifically designed for systematic reviews or meta-analyses?

Yes, there are literature review tools that cater specifically to systematic reviews and meta-analyses, which involve a rigorous and structured approach to reviewing existing literature. These tools often provide features tailored to the specific needs of these methodologies, such as:

- Screening and eligibility assessment: Systematic review tools typically offer functionalities for screening and assessing the eligibility of studies based on predefined inclusion and exclusion criteria. This streamlines the process of selecting relevant studies for analysis.

- Data extraction and quality assessment: These tools often include templates and forms to facilitate data extraction from selected studies. Additionally, they may provide features for assessing the quality and risk of bias in individual studies.

- Meta-analysis support: Some literature review tools include statistical analysis features that assist in conducting meta-analyses. These features can help calculate effect sizes, perform statistical tests, and generate forest plots or other visual representations of the meta-analytic results.

- Reporting assistance: Many tools provide templates or frameworks for generating systematic review reports, ensuring compliance with established guidelines such as PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses).
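To make the meta-analysis point concrete, here is a minimal sketch of one common pooling method, fixed-effect inverse-variance weighting. It is not taken from any of the tools discussed in this article; the function name `pool_fixed_effect` and the three study values are hypothetical and exist only for illustration.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Pool per-study effect sizes with inverse-variance (fixed-effect) weights.

    Returns the pooled effect, its standard error, and a 95% confidence interval.
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need one standard error per effect size")
    # Each study is weighted by the inverse of its variance (1 / SE^2).
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    z = 1.959964  # standard normal quantile for a two-sided 95% interval
    return pooled, pooled_se, (pooled - z * pooled_se, pooled + z * pooled_se)


# Hypothetical effect sizes and standard errors from three studies.
effects = [0.30, 0.10, 0.25]
std_errors = [0.10, 0.20, 0.10]

pooled, pooled_se, ci = pool_fixed_effect(effects, std_errors)
print(f"pooled effect = {pooled:.3f}, SE = {pooled_se:.3f}")
print(f"95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

The pooled estimate and its interval are the numbers a forest plot would show in its summary row. A random-effects model would be the usual alternative when studies are heterogeneous, and it is not covered by this sketch.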

Q4. Can literature review tools help with organizing and annotating collected references?

Yes, literature review tools often come equipped with features to help researchers organize and annotate collected references. Some common functionalities include:

- Reference management: These tools enable researchers to import references from various sources, such as databases or PDF files, and store them in a central library. They typically allow researchers to create folders or tags to organize references based on themes or categories.

- Annotation capabilities: Many tools provide options for adding annotations, comments, or tags to individual references or specific sections of research articles. This helps researchers keep track of important information, highlight key findings, or note potential connections between different sources.

- Full-text search: Literature review tools often offer full-text search functionality, allowing researchers to search within the content of imported articles or documents. This can be particularly useful for locating specific information or keywords across multiple references.

- Integration with citation managers: Some literature review tools integrate with popular citation managers like Zotero, Mendeley, or EndNote, allowing seamless transfer of references and annotations between platforms.

By leveraging these features, researchers can streamline the organization and annotation of their collected references, making it easier to retrieve relevant information during the literature review process.


We maintain and update science journals and scientific metrics. Scientific metrics data are aggregated from publicly available sources. Please note that we do NOT publish research papers on this platform. We do NOT accept any manuscript.

2012-2024 © scijournal.org

All-in-one Literature Review Software

Start your free trial.

Free MAXQDA trial for Windows and Mac

Your trial will end automatically after 14 days and will not renew. There is no need for cancelation.

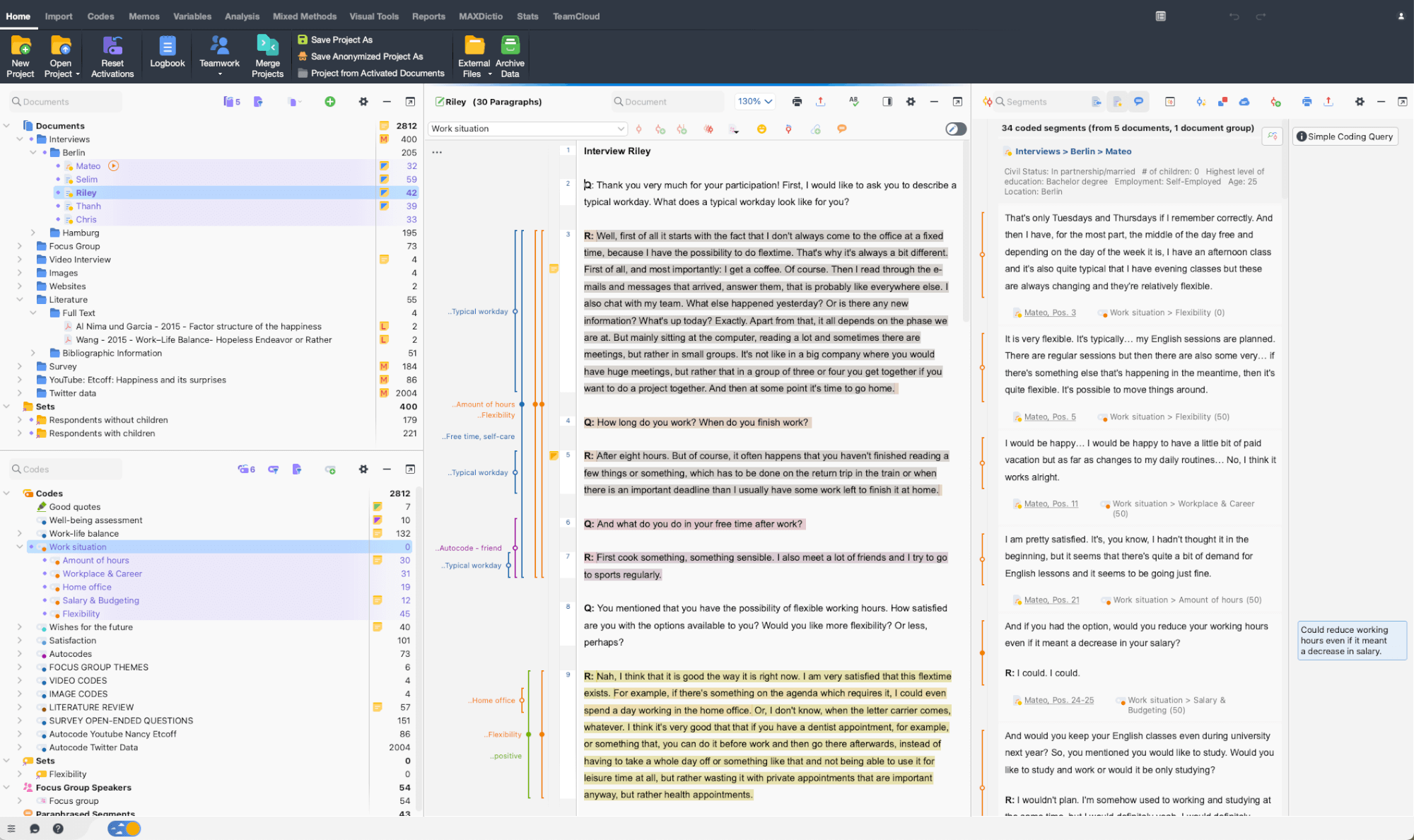

MAXQDA The All-in-one Literature Review Software

MAXQDA is the best choice for a comprehensive literature review. It works with a wide range of data types and offers powerful tools for literature review, such as reference management and tools for qualitative, vocabulary, and text analysis.


As your all-in-one literature review software, MAXQDA can be used to manage your entire research project. Easily import data from texts, interviews, focus groups, PDFs, web pages, spreadsheets, articles, e-books, and even social media data. Connect the reference management system of your choice with MAXQDA to easily import bibliographic data. Organize your data in groups, link relevant quotes to each other, keep track of your literature summaries, and share and compare work with your team members. Your project file stays flexible and you can expand and refine your category system as you go to suit your research.

Developed by and for researchers – since 1989

Having used several qualitative data analysis software programs, there is no doubt in my mind that MAXQDA has advantages over all the others. In addition to its remarkable analytical features for harnessing data, MAXQDA’s stellar customer service, online tutorials, and global learning community make it a user friendly and top-notch product.

Sally S. Cohen – NYU Rory Meyers College of Nursing

Literature Review is Faster and Smarter with MAXQDA

Easily import your literature review data

With literature review software like MAXQDA, you can easily import bibliographic data from reference management programs for your literature review. MAXQDA can work with all reference management programs that can export their databases in RIS format, which is a standard format for bibliographic information. Like MAXQDA, these reference managers use project files containing all collected bibliographic information, such as author, title, links to websites, keywords, abstracts, and other information. In addition, you can easily import the corresponding full texts. Upon import, all documents will be automatically pre-coded to facilitate your literature review at a later stage.
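For readers unfamiliar with RIS, the sketch below shows roughly what such an export looks like and how its tagged lines can be read. It is an illustrative parser only, not MAXQDA's importer. The function name `parse_ris` and the sample record are hypothetical, and the parser assumes the common layout of a two-character tag, two spaces, a hyphen, and the value, with `ER` closing each record.

```python
import re

# A RIS line is a two-character tag, two spaces, a hyphen, then the value.
RIS_LINE = re.compile(r"^([A-Z][A-Z0-9])  -\s?(.*)$")


def parse_ris(text):
    """Parse RIS text into a list of records mapping each tag to a list of values."""
    records, current = [], {}
    for line in text.splitlines():
        match = RIS_LINE.match(line.rstrip())
        if not match:
            continue  # skip blank or unrecognized lines
        tag, value = match.groups()
        if tag == "ER":  # end-of-record marker
            if current:
                records.append(current)
            current = {}
        else:
            # Tags such as AU (author) and KW (keyword) may repeat.
            current.setdefault(tag, []).append(value)
    return records


# Hypothetical record for illustration only.
SAMPLE = """TY  - JOUR
AU  - Doe, Jane
AU  - Roe, Richard
TI  - A hypothetical article title
PY  - 2020
KW  - literature review
ER  -
"""

records = parse_ris(SAMPLE)
for record in records:
    print(record["TI"][0], "by", "; ".join(record["AU"]))
```

Storing every tag as a list keeps repeated fields such as authors and keywords intact, which matters if they are later used as document variables.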

Capture your ideas while analyzing your literature

Great ideas will often occur to you while you’re doing your literature review. Using MAXQDA as your literature review software, you can create memos to store your ideas, such as research questions and objectives, or you can use memos for paraphrasing passages into your own words. By attaching memos like post-it notes to text passages, texts, document groups, images, audio/video clips, and of course codes, you can easily retrieve them at a later stage. Particularly useful for literature reviews are free memos written during the course of work from which passages can be copied and inserted into the final text.

Find concepts important to your generated literature review

When generating a literature review, you might need to analyze a large amount of text. Luckily, MAXQDA, as the #1 literature review software, offers Text Search tools that allow you to explore your documents without reading or coding them first. Automatically search for keywords (or dictionaries of keywords), such as important concepts for your literature review, and automatically code them with just a few clicks. Document variables that were automatically created during the import of your bibliographic information can be used for searching and retrieving certain text segments. MAXQDA’s powerful Coding Query allows you to analyze the combination of activated codes in different ways.
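The idea of dictionary-based keyword search and automatic coding can be sketched in a few lines. This is a simplified illustration and does not reflect how MAXQDA implements its Text Search. The function `autocode`, the document names, and the keyword dictionary are all hypothetical, and sentences are split naively on end punctuation.

```python
import re


def autocode(documents, dictionary):
    """Return (document, code, sentence) for each sentence containing a dictionary term."""
    hits = []
    for name, text in documents.items():
        # Naive sentence split: break after ., ! or ? followed by whitespace.
        sentences = re.split(r"(?<=[.!?])\s+", text.strip())
        for sentence in sentences:
            for code, terms in dictionary.items():
                pattern = r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b"
                if re.search(pattern, sentence, flags=re.IGNORECASE):
                    hits.append((name, code, sentence))
    return hits


# Hypothetical documents and keyword dictionary.
documents = {
    "smith2020": "Citation networks reveal clusters. We used a survey of 40 scholars.",
    "lee2021": "The interview data were coded twice.",
}
dictionary = {
    "methods": ["survey", "interview"],
    "networks": ["citation network", "citation networks"],
}

hits = autocode(documents, dictionary)
for name, code, sentence in hits:
    print(f"{name} [{code}]: {sentence}")
```

Each hit pairs a code with the sentence that triggered it, which is the kind of coded segment that can then be retrieved or combined in later queries.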

Aggregate your literature review

When conducting a literature review you can easily get lost. But with MAXQDA as your literature review software, you will never lose track of the bigger picture. Among other tools, MAXQDA’s overview and summary tables are especially useful for aggregating your literature review results. MAXQDA offers overview tables for almost everything: codes, memos, coded segments, links, and so on. With MAXQDA’s literature review tools you can create compressed summaries of sources that can be effectively compared and represented, and with just one click you can export your overview and summary tables and integrate them into your literature review report.

Powerful and easy-to-use literature review tools

Quantitative aspects can also be relevant when conducting a literature review analysis. Using MAXQDA as your literature review software enables you to employ a vast range of procedures for the quantitative evaluation of your material. You can sort sources according to document variables, compare amounts with frequency tables and charts, and much more. Make sure you don’t miss the word frequency tools of MAXQDA’s add-on module for quantitative content analysis. Included are tools for visual text exploration, content analysis, vocabulary analysis, dictionary-based analysis, and more that facilitate the quantitative analysis of terms and their semantic contexts.
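As a rough illustration of what a word frequency analysis computes, the sketch below counts terms in a short text after removing a few stopwords. It is a generic example rather than MAXQDA's implementation. The function `word_frequencies`, the tiny stopword list, and the sample sentence are assumptions made for this example.

```python
import re
from collections import Counter

# A deliberately tiny stopword list; real analyses use much longer ones.
STOPWORDS = {"the", "a", "of", "and", "in", "to", "is", "for"}


def word_frequencies(text, stopwords=STOPWORDS):
    """Count lowercase word occurrences in text, ignoring stopwords."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(word for word in words if word not in stopwords)


# Hypothetical sample text.
sample = "The review maps the literature. A review of the literature is a map of prior work."

freq = word_frequencies(sample)
for word, count in freq.most_common(3):
    print(word, count)
```

Note that "maps" and "map" are counted separately here; grouping such variants would require lemmatization or stemming, which this sketch leaves out.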

Visualize your literature review

As all-in-one literature review software, MAXQDA offers a variety of visual tools that are tailor-made for qualitative research and literature reviews. Create stunning visualizations to analyze your material. Of course, you can export your visualizations in various formats to enrich your literature review analysis report. Work with word clouds to explore the central themes of a text and the key terms that are used, create charts to easily compare the occurrences of concepts and important keywords, or make use of the graphical representation possibilities of MAXMaps, which in particular permit the creation of concept maps. Thanks to the interactive connection between your visualizations and your MAXQDA data, you’ll never lose sight of the big picture.

AI Assist: literature review software meets AI

AI Assist, your virtual research assistant, supports your literature review with various tools. AI Assist simplifies your work by automatically analyzing and summarizing elements of your research project and by generating suggestions for subcodes. No matter which AI tool you use, you can customize your results to suit your needs.

Free tutorials and guides on literature review