
Regression Analysis: How Do I Interpret R-squared and Assess the Goodness-of-Fit?

After you have fit a linear model using regression analysis, ANOVA, or design of experiments (DOE), you need to determine how well the model fits the data. To help you out, Minitab Statistical Software presents a variety of goodness-of-fit statistics. In this post, we’ll explore the R-squared (R²) statistic and some of its limitations, and uncover some surprises along the way. For instance, low R-squared values are not always bad and high R-squared values are not always good!

What Is Goodness-of-Fit for a Linear Model?

Linear regression calculates an equation that minimizes the distance between the fitted line and all of the data points. Technically, ordinary least squares (OLS) regression minimizes the sum of the squared residuals.
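A minimal sketch of ordinary least squares for a single predictor, in plain Python. The data values are hypothetical and the function names are illustrative, not Minitab features. The closed-form slope and intercept minimize the sum of squared residuals, so moving the slope away from the estimate can only increase that sum:

```python
def fit_simple_ols(x, y):
    """Return (intercept, slope) that minimize the sum of squared residuals."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def sum_squared_residuals(x, y, intercept, slope):
    """Sum of squared vertical distances between the data points and a line."""
    return sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))


# Hypothetical data for illustration only
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

b0, b1 = fit_simple_ols(x, y)
best = sum_squared_residuals(x, y, b0, b1)
nudged = sum_squared_residuals(x, y, b0, b1 + 0.1)  # any other line does worse
print(round(b0, 2), round(b1, 2))  # 0.05 1.99
print(best < nudged)               # True
```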

In general, a model fits the data well if the differences between the observed values and the model's predicted values are small and unbiased.

Before you look at the statistical measures for goodness-of-fit, you should check the residual plots. Residual plots can reveal unwanted residual patterns that indicate biased results more effectively than numbers. When your residual plots pass muster, you can trust your numerical results and check the goodness-of-fit statistics.

What Is R-squared?

R-squared is a statistical measure of how close the data are to the fitted regression line. It is also known as the coefficient of determination, or the coefficient of multiple determination for multiple regression.

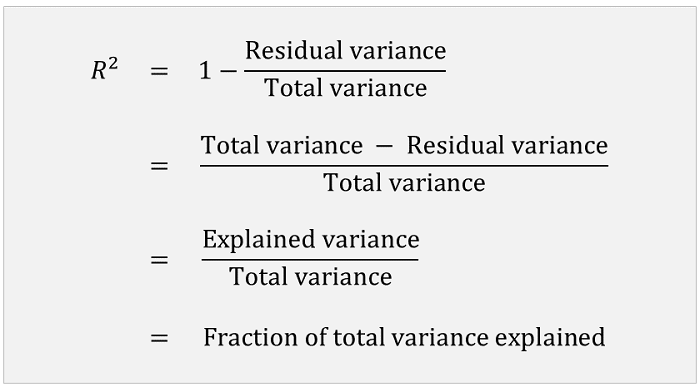

The definition of R-squared is fairly straightforward; it is the percentage of the response variable variation that is explained by a linear model. Or:

R-squared = Explained variation / Total variation

For a linear model fit by least squares with a constant term, R-squared is always between 0 and 100%:

- 0% indicates that the model explains none of the variability of the response data around its mean.

- 100% indicates that the model explains all the variability of the response data around its mean.
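As a sketch of the "explained variation / total variation" definition, the plain-Python snippet below (hypothetical data, illustrative names) fits a least-squares line with an intercept and computes R-squared two ways. For such a fit, the total variation splits exactly into explained plus unexplained variation, so both versions agree:

```python
def r_squared_two_ways(x, y):
    """Fit y = b0 + b1*x by least squares and return R-squared computed two ways."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    slope = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
             / sum((xi - x_bar) ** 2 for xi in x))
    intercept = y_bar - slope * x_bar
    fitted = [intercept + slope * xi for xi in x]

    ss_total = sum((yi - y_bar) ** 2 for yi in y)                   # total variation
    ss_explained = sum((fi - y_bar) ** 2 for fi in fitted)          # explained by the line
    ss_residual = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))  # left unexplained
    return ss_explained / ss_total, 1 - ss_residual / ss_total


# Hypothetical data for illustration only
ratio_form, residual_form = r_squared_two_ways([1, 2, 3, 4, 5, 6], [3, 5, 4, 8, 9, 12])
print(round(ratio_form, 4), round(residual_form, 4))
```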

In general, the higher the R-squared, the better the model fits your data. However, there are important conditions for this guideline that I’ll talk about both in this post and my next post.

Graphical Representation of R-squared

Plotting fitted values by observed values graphically illustrates different R-squared values for regression models.

The regression model on the left accounts for 38.0% of the variance while the one on the right accounts for 87.4%. The more variance that is accounted for by the regression model, the closer the data points will fall to the fitted regression line. Theoretically, if a model could explain 100% of the variance, the fitted values would always equal the observed values and, therefore, all the data points would fall on the fitted regression line.


Key Limitations of R-squared

R-squared cannot determine whether the coefficient estimates and predictions are biased, which is why you must assess the residual plots.

R-squared does not indicate whether a regression model is adequate. You can have a low R-squared value for a good model, or a high R-squared value for a model that does not fit the data!

The R-squared in your output is a biased estimate of the population R-squared.

Are Low R-squared Values Inherently Bad?

No! There are two major reasons why it can be just fine to have low R-squared values.

In some fields, it is entirely expected that your R-squared values will be low. For example, any field that attempts to predict human behavior, such as psychology, typically has R-squared values lower than 50%. Humans are simply harder to predict than, say, physical processes.

Furthermore, if your R-squared value is low but you have statistically significant predictors, you can still draw important conclusions about how changes in the predictor values are associated with changes in the response value. Regardless of the R-squared, the significant coefficients still represent the mean change in the response for one unit of change in the predictor while holding other predictors in the model constant. Obviously, this type of information can be extremely valuable.
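To make the point concrete, here is a small sketch with constructed, hypothetical data (not from the post): two datasets share the same underlying slope of 2 but have different amounts of noise. The least-squares slope is identical in both, while R-squared drops and the residual standard deviation, which drives the width of prediction intervals, grows. The helper name fit_line is illustrative:

```python
import math


def fit_line(x, y):
    """Least-squares fit of y = b0 + b1*x; returns slope, R-squared, residual SD."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    slope = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
             / sum((xi - x_bar) ** 2 for xi in x))
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    ss_res = sum(r ** 2 for r in residuals)
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    residual_sd = math.sqrt(ss_res / (n - 2))  # n - 2 degrees of freedom
    return slope, 1 - ss_res / ss_tot, residual_sd


x = [1, 2, 3, 4, 5, 6, 7, 8]
# A noise pattern that sums to zero and is uncorrelated with x,
# so it changes the scatter around the line but not the fitted slope
pattern = [1, -1, -1, 1, -1, 1, 1, -1]

results = {}
for scale in (1, 5):
    y = [2 * xi + scale * e for xi, e in zip(x, pattern)]
    results[scale] = fit_line(x, y)
    slope, r2, sd = results[scale]
    print(scale, round(slope, 3), round(r2, 3), round(sd, 3))
# 1 2.0 0.955 1.155
# 5 2.0 0.457 5.774
```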

See a graphical illustration of why a low R-squared doesn't affect the interpretation of significant variables.

A low R-squared is most problematic when you want to produce predictions that are reasonably precise (have a small enough prediction interval). How high should the R-squared be for prediction? Well, that depends on your requirements for the width of a prediction interval and how much variability is present in your data. While a high R-squared is required for precise predictions, it’s not sufficient by itself, as we shall see.

Are High R-squared Values Inherently Good?

No! A high R-squared does not necessarily indicate that the model has a good fit. That might be a surprise, but look at the fitted line plot and residual plot below. The fitted line plot displays the relationship between semiconductor electron mobility and the natural log of the density for real experimental data.

The fitted line plot shows that these data follow a nice tight function and the R-squared is 98.5%, which sounds great. However, look closer to see how the regression line systematically over- and under-predicts the data (bias) at different points along the curve. You can also see patterns in the Residuals versus Fits plot, rather than the randomness that you want to see. This indicates a bad fit, and serves as a reminder of why you should always check the residual plots.
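The semiconductor data from the post aren't reproduced here, but the same effect is easy to sketch with hypothetical data: fit a straight line to points that actually follow a curve (y = x², chosen only for illustration). R-squared comes out high, yet the residual signs run in long blocks instead of scattering randomly, which is the kind of pattern a Residuals versus Fits plot reveals:

```python
def fit_line(x, y):
    """Least-squares fit of y = b0 + b1*x; returns (intercept, slope)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    slope = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
             / sum((xi - x_bar) ** 2 for xi in x))
    return y_bar - slope * x_bar, slope


x = list(range(11))
y = [xi ** 2 for xi in x]  # curved relationship that a line cannot follow

intercept, slope = fit_line(x, y)
residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
y_bar = sum(y) / len(y)
r_squared = 1 - sum(r ** 2 for r in residuals) / sum((yi - y_bar) ** 2 for yi in y)

print(round(r_squared, 3))  # 0.928
# Over-predicts in the middle, under-predicts at both ends: systematic bias
print("".join("+" if r > 0 else "-" for r in residuals))  # ++-------++
```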

This example comes from my post about choosing between linear and nonlinear regression. In this case, the answer is to use nonlinear regression because linear models are unable to fit the specific curve that these data follow.

However, similar biases can occur when your linear model is missing important predictors, polynomial terms, and interaction terms. Statisticians call this specification bias, and it is caused by an underspecified model. For this type of bias, you can fix the residuals by adding the proper terms to the model.

For more information about how a high R-squared is not always a good thing, read my post Five Reasons Why Your R-squared Can Be Too High.

Closing Thoughts on R-squared

R-squared is a handy, seemingly intuitive measure of how well your linear model fits a set of observations. However, as we saw, R-squared doesn’t tell us the entire story. You should evaluate R-squared values in conjunction with residual plots, other model statistics, and subject area knowledge in order to round out the picture (pardon the pun).

While R-squared provides an estimate of the strength of the relationship between your model and the response variable, it does not provide a formal hypothesis test for this relationship. The F-test of overall significance determines whether this relationship is statistically significant.
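For a least-squares model with an intercept, the F-statistic of that overall test can be written in terms of R-squared, the number of observations n, and the number of predictors k. A minimal sketch with hypothetical inputs; turning F into a p-value needs the F distribution with k and n − k − 1 degrees of freedom (for example scipy.stats.f.sf, if SciPy is available), which this sketch leaves out:

```python
def overall_f_statistic(r_squared, n, k):
    """F = (R^2 / k) / ((1 - R^2) / (n - k - 1)) for k predictors plus an intercept."""
    if k < 1 or n - k - 1 < 1:
        raise ValueError("need at least one predictor and n > k + 1")
    return (r_squared / k) / ((1 - r_squared) / (n - k - 1))


# Hypothetical inputs: R-squared of 0.5 from 21 observations and 2 predictors
print(round(overall_f_statistic(0.5, 21, 2), 4))  # 9.0

# The same R-squared gives much weaker evidence with fewer observations
print(round(overall_f_statistic(0.5, 6, 2), 4))   # 1.5
```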

In my next blog post, we’ll continue with the theme that R-squared by itself is incomplete and look at two other types of R-squared: adjusted R-squared and predicted R-squared. These two measures overcome specific problems in order to provide additional information by which you can evaluate your regression model’s explanatory power.

For more about R-squared, learn the answer to this eternal question: How high should R-squared be?

If you're learning about regression, read my regression tutorial!


© 2023 Minitab, LLC. All Rights Reserved.


R squared of a linear regression

by Marco Taboga, PhD

How good is a linear regression model in predicting the output variable on the basis of the input variables?

How much of the variability in the output is explained by the variability in the inputs of a linear regression?

The R squared of a linear regression is a statistic that provides a quantitative answer to these questions.


Before defining the R squared of a linear regression, we warn our readers that several slightly different definitions can be found in the literature.

Usually, these definitions are equivalent in the special but important case in which the linear regression includes a constant among its regressors.

We choose a definition that is easy to understand, and then we make some brief comments about other definitions.

![reporting r squared regression [eq4]](https://statlect.com/images/R-squared-of-a-linear-regression__13.png)

Intuitively, when the predictions of the linear regression model are perfect, then the residuals are always equal to zero and their sample variance is also equal to zero.

On the contrary, the less accurate the predictions of the linear regression model are, the higher the sample variance of the residuals is.

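We are now ready to give a definition of R squared. Denote by $S_y^2$ the sample variance of the outputs and by $S_e^2$ the sample variance of the residuals. The R squared of the linear regression is

$$R^2 = 1 - \frac{S_e^2}{S_y^2}$$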

Thus, the R squared is a decreasing function of the sample variance of the residuals: the higher the sample variance of the residuals is, the smaller the R squared is.

Note that the R squared cannot be larger than 1: it is equal to 1 when the sample variance of the residuals is zero, and it is smaller than 1 when the sample variance of the residuals is strictly positive.

The R squared is equal to 0 when the variance of the residuals is equal to the variance of the outputs, that is, when predicting the outputs with the regression model is no better than using the sample mean of the outputs as a prediction.
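A minimal sketch of this sample-variance form of the definition, assuming R² = 1 − S_e²/S_y² with S_e² the sample variance of the residuals and S_y² the sample variance of the outputs (the divisor used for the variances cancels in the ratio). It checks the two boundary cases described above using hypothetical outputs and illustrative function names:

```python
def sample_variance(values):
    """Sample variance with divisor N (the divisor cancels in the R squared ratio)."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / n


def r_squared(outputs, residuals):
    """R squared as 1 minus the ratio of residual variance to output variance."""
    return 1 - sample_variance(residuals) / sample_variance(outputs)


outputs = [3.0, 5.0, 4.0, 8.0, 9.0, 12.0]  # hypothetical outputs

# Perfect predictions: every residual is zero, so R squared is 1
perfect = r_squared(outputs, [0.0] * len(outputs))

# Predicting every output with the sample mean: residual variance equals
# output variance, so R squared is 0 (up to floating-point rounding)
mean_y = sum(outputs) / len(outputs)
mean_only = r_squared(outputs, [y - mean_y for y in outputs])

print(perfect)                 # 1.0
print(abs(mean_only) < 1e-12)  # True
```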

![reporting r squared regression [eq9]](https://statlect.com/images/R-squared-of-a-linear-regression__30.png)

Check the Wikipedia article for other definitions.

![reporting r squared regression [eq10]](https://statlect.com/images/R-squared-of-a-linear-regression__32.png)

The intuition behind the adjustment is as follows.

The degrees-of-freedom adjustment allows us to take this fact into consideration and to avoid under-estimating the variance of the error terms.

The bias is downward; that is, the unadjusted sample variances tend to underestimate their population counterparts.

How to cite

Please cite as:

Taboga, Marco (2021). "R squared of a linear regression", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/fundamentals-of-statistics/R-squared-of-a-linear-regression.


How to interpret R Squared (simply explained)

R Squared is a common regression machine learning metric, but it can be confusing to know how to interpret the values. In this post, I explain what R Squared is, how to interpret the values and walk through an example.

What is R Squared

R Squared (also known as R2) is a metric for assessing the performance of regression machine learning models. Unlike other metrics, such as MAE or RMSE, it is not a measure of how accurate the predictions are, but instead a measure of fit. R Squared measures how much of the dependent variable variation is explained by the independent variables in the model.

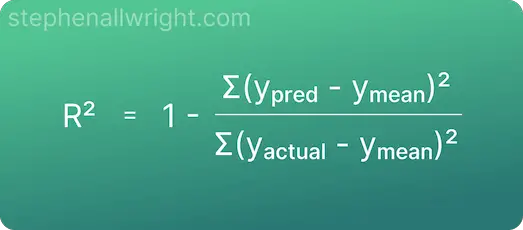

R Squared mathematical formula

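The formula for calculating R Squared is as follows:

$$R^2 = 1 - \frac{SS_{\text{res}}}{SS_{\text{tot}}}$$

where SS_res is the sum of the squared differences between the actual and predicted values, and SS_tot is the sum of the squared differences between the actual values and their mean.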

How to interpret R Squared

R Squared can be interpreted as the percentage of the dependent variable variance which is explained by the independent variables. Put simply, it measures the extent to which the model features can be used to explain the model target.

For example, an R Squared value of 0.9 would imply that 90% of the target variance can be explained by the model features, whilst a value of 0.2 would suggest that the model features are only able to account for 20% of the variance.
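One caveat worth sketching: the 0-to-1 range holds for a least-squares model with an intercept scored on the data it was fit to. When R Squared is computed on other predictions, such as a held-out test set, the same formula can drop below zero if the predictions are worse than simply predicting the mean; scikit-learn's r2_score documentation notes that the score can be negative. The hand-written function below is an illustrative stand-in, not scikit-learn's implementation, and the values are hypothetical:

```python
def r_squared_from_predictions(actual, predicted):
    """1 - SS_res / SS_tot, computed for an arbitrary set of predictions."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


actual = [1.0, 2.0, 3.0]
good = r_squared_from_predictions(actual, [1.1, 1.9, 3.2])  # close predictions
bad = r_squared_from_predictions(actual, [3.0, 2.0, 1.0])   # worse than the mean
print(round(good, 2), bad)  # 0.97 -3.0
```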

R Squared value interpretation

Now that we understand how to interpret the meaning of R Squared, let’s look at how to interpret the different values that it can produce. This depends on your use case and dataset, but a general rule that I follow is:

R Squared interpretation example

Let’s use our understanding from the previous sections to walk through an example. I will be calculating the R Squared value and subsequent interpretation for an example where we want to understand how much of the Height variance can be explained by Shoe Size.

The R Squared value for these predictions is:

R Squared = 0.88

The interpretation of this value is:

88% of the variance in Height is explained by Shoe Size, which is commonly seen as a large share of the variance being explained.


Stephen Allwright

I'm a Data Scientist currently working for Oda, an online grocery retailer, in Oslo, Norway. These posts are my way of sharing some of the tips and tricks I've picked up along the way.


How to Calculate R-Squared for glm in R

Often when we fit a linear regression model, we use R-squared as a way to assess how well a model fits the data.

R-squared represents the proportion of the variance in the response variable that can be explained by the predictor variables in a regression model.

This number ranges from 0 to 1, with higher values indicating a better model fit.

However, there is no directly equivalent R-squared value for generalized linear models such as logistic regression models and Poisson regression models.

Instead, we can calculate a metric known as McFadden’s R-Squared, which ranges from 0 to just under 1, with higher values indicating a better model fit.

We use the following formula to calculate McFadden’s R-Squared:

$$R^2_{\text{McFadden}} = 1 - \frac{\ln L_{\text{model}}}{\ln L_{\text{null}}}$$

- ln L_model: Log likelihood value of the current fitted model

- ln L_null: Log likelihood value of the null model (model with intercept only)
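A minimal sketch of the formula for a binary (0/1) outcome, assuming you already have fitted probabilities from a logistic regression. The probabilities below are hypothetical, not output from the Default model, and the function names are illustrative. The intercept-only model's fitted probability is simply the observed proportion of 1s:

```python
import math


def bernoulli_log_likelihood(y, p):
    """Log likelihood of 0/1 outcomes y under predicted probabilities p."""
    return sum(math.log(p_i) if y_i == 1 else math.log(1 - p_i) for y_i, p_i in zip(y, p))


def mcfadden_r_squared(y, p_model):
    """1 - (log likelihood of fitted model / log likelihood of intercept-only model)."""
    p_null = sum(y) / len(y)  # maximum likelihood estimate for the null model
    ll_model = bernoulli_log_likelihood(y, p_model)
    ll_null = bernoulli_log_likelihood(y, [p_null] * len(y))
    return 1 - ll_model / ll_null


y = [0, 0, 1, 1]
p_model = [0.2, 0.3, 0.7, 0.8]  # hypothetical fitted probabilities
print(round(mcfadden_r_squared(y, p_model), 4))  # 0.5817
```

For ungrouped 0/1 outcomes, the saturated model's log likelihood is zero, so the deviance equals −2 times the log likelihood and 1 − deviance/null deviance gives the same value; for grouped binomial counts or other model families, the two calculations need not agree.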

In practice, values over 0.40 indicate that a model fits the data very well.

The following example shows how to calculate McFadden’s R-Squared for a logistic regression model in R.

Example: Calculating McFadden’s R-Squared in R

For this example, we’ll use the Default dataset from the ISLR package. We can use the following code to load and view a summary of the dataset:

This dataset contains the following information about 10,000 individuals:

- default: Indicates whether or not an individual defaulted.

- student: Indicates whether or not an individual is a student.

- balance: Average balance carried by an individual.

- income: Income of the individual.

We will use student status, bank balance, and income to build a logistic regression model that predicts the probability that a given individual defaults:

Next, we’ll use the following formula to calculate McFadden’s R-squared value for this model:

McFadden’s R-squared value turns out to be 0.4619194. This value is fairly high, which indicates that our model fits the data well and has high predictive power.

Also note that we could use the pR2() function from the pscl package to calculate McFadden’s R-squared value for the model as well:

Notice that this value matches the one calculated earlier.


Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

3 Replies to “How to Calculate R-Squared for glm in R”

Hi, I get slightly different values when I calculate McFadden’s R-squared using the first method compared to the second for the same glm output. Method 1:

#calculate McFadden’s R-squared for model
with(summary(model), 1 - deviance/null.deviance)

Method 2:

#calculate McFadden’s R-squared for model
pR2(model)['McFadden']

Any idea why?

Hi, I’ve tried running both the pR2 and the with(summary(model) methods for 7 of my models and have got different results from the two methods for each model. Do you know why? My models are negative binomial and binomial GLMs.

Hi, Thank you for this wonderful source. I just have one question. Can I use the pR2() function you mentioned above for Poisson regression as well?

This is my model: model <- glm (UC ~ Pasture + Rainfall + Temp + offset(log(Pop)), family = poisson(link = "log"), data = data)

I installed the package and just typed: pR2(model)['McFadden'] and the result is McFadden: 0.6536465

Do you think this result is correct? I would be grateful if you let me know. Thanks a lot.


R-Squared: Definition, Calculator, Formula, Uses, and Pros & Cons (Finance)

R-squared is a statistical measure that indicates the extent to which data aligns with a regression model. It quantifies how much of the variance in the dependent variable can be accounted for by the model, with R-squared values spanning from 0 to 1—higher numbers typically signify superior fit.

Grasping R-squared is important for evaluating predictive accuracy and dependability within various disciplines such as finance, research, and data science.

The article explores how it’s calculated, its meaning, and its constraints to underscore why R-squared remains fundamental to understanding regression analysis.

Key Takeaways

- R-squared, or R², is a statistical measure in regression analysis that represents the proportion of the variance for a dependent variable explained by an independent variable or variables, with values ranging from 0 to 1.

- A high R-squared value suggests a better fit of the model to the data; however, it does not confirm causation, indicate the correctness of the model, or guarantee that the model is unbiased and without overfitting.

- Adjusted R-squared is a modified version of R-squared that accounts for the number of predictors in a model, penalizing the addition of irrelevant variables, and is more reliable when comparing models with different numbers of predictors.

R-squared Introduction

R-squared is a statistical measure in linear regression models that indicates how well the model fits the dependent variable. Essentially, it provides insight into the strength of association between our model and what we’re aiming to forecast or understand.

In regression analysis, R-squared quantifies what portion of the variance in the dependent variable can be explained by the independent variables. The independent variables are the predictors we use to forecast the dependent variable, which is ultimately at the core of our predictive analysis.

Put another way, R-squared measures how much of the variability in the dependent variable (the value we are trying to predict) can be accounted for by the predictors, known as independent variables. It reveals how much of the variation within your observed data points these predictors have managed to capture.

Numerically, R-squared ranges from 0%, indicating that the model explains none of the variability of the response data around its mean, to 100%, indicating that it explains all of that variability.

An R-squared value close to 1 (100%) signals close agreement between the model's fitted values and the actual observations: such a model accounts for a large share of the variation in the data.

What Is R-Squared?

R-Squared is a statistical measure. It quantifies the proportion of the variance in the dependent variable that is predictable from the independent variable(s).

A deeper look into R-squared, or R2, reveals that it quantifies the share of the dependent variable’s variance that can be predicted from an independent variable in a regression model. R-squared values fall between 0 and 1, frequently represented as percentages ranging from 0% to 100%.

What information does an R-squared value convey?

Primarily, R-squared communicates the extent to which the regression model explains the observed data.

For instance, an R-squared of 60% indicates that the model explains 60% of the variability in the target variable. While a high R-squared is typically seen as desirable, indicating that the model explains more variability, it does not automatically mean the model is good. The measure’s utility depends on various factors like the nature and units of the variables, and any data transformations applied.

Worth mentioning is that:

- A low R-squared is generally viewed as unfavorable for predictive models

- However, there can be situations where a competent model may still yield a low R-squared value

- The context in which R-squared is used is crucial, as its significance can vary depending on the specific application or scenario being considered.

Lastly, it’s key to understand that R-squared doesn’t inform us about the causal relationship between the independent and dependent variables, nor does it validate the accuracy of the regression model.

What is the Formula for R-Squared?

The formula for R-Squared is:

- R-Squared equals 1 minus (SSR divided by SST)

Now that we understand what R-squared is, we can proceed to its calculation:

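The formula for R-squared is expressed as R² = 1 − (sum of squared residuals (SSR) / total sum of squares (SST)).

The sum of squared residuals (SSR) is the sum of the squared differences between the actual values and the predicted values. Some texts use "SSR" for the regression (explained) sum of squares instead, which is a different quantity; in this formula it must be the residual sum.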

The total sum of squares (SST) represents the sum of the squares of the differences between each actual value and the overall mean of the data set.

In another common notation, the residual sum of squares (SS_res) is the sum of the squared residuals, where each residual is the difference between an observed value and the value predicted by the model; it is the same quantity as SSR above.

The total sum of squares (SS_tot) quantifies the variation in the observed data and is calculated as the sum of the squares of the differences between the observed values and their mean.
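Written out with y_i for the observed values, ŷ_i for the model's predictions, ȳ for the mean of the observed values and n for the number of observations, these definitions combine as:

$$
R^2 = 1 - \frac{SS_{\text{res}}}{SS_{\text{tot}}}
    = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}
$$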

R2 can also be seen as:

- The square of the correlation coefficient between the observed and modeled (predicted) data values of the dependent variable when the model includes an intercept term.

- In the case of a simple linear regression, R² is the square of the Pearson correlation coefficient between the independent variable and the dependent variable.

- When multiple regression analysis is performed, R² represents the square of the coefficient of multiple correlation.
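A quick numerical check of the first two statements, as a sketch with hypothetical data and an illustrative helper name: for a least-squares line with an intercept, R² from the sums of squares matches both the squared correlation between observed and predicted values and the squared Pearson correlation between x and y:

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / math.sqrt(var_a * var_b)


# Hypothetical data for illustration only
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [3.0, 5.0, 4.0, 8.0, 9.0, 12.0]

# Least-squares line with an intercept
x_bar, y_bar = sum(x) / len(x), sum(y) / len(y)
slope = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
intercept = y_bar - slope * x_bar
predicted = [intercept + slope * xi for xi in x]

ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, predicted))
ss_tot = sum((yi - y_bar) ** 2 for yi in y)
r_squared = 1 - ss_res / ss_tot

print(round(r_squared, 6), round(pearson(y, predicted) ** 2, 6), round(pearson(x, y) ** 2, 6))
```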


How is R-Squared Calculated?

R-squared is calculated by determining the sum of squared differences between the observed values and the predicted values of the dependent variable. Then, you calculate the total sum of squares, which represents the total variance in the dependent variable. Finally, divide the sum of squared differences by the total sum of squares and subtract the result from 1.

This yields the R-squared value, which indicates the proportion of variance in the dependent variable explained by the independent variable(s). In formula form:

R² = 1 − (sum of squared residuals (SSR) / total sum of squares (SST)).

To calculate R-squared, follow these steps:

- Find the residuals for each data point, which are the differences between the actual values and the predicted values obtained from the regression line equation.

- Square these residuals.

- Sum up the squared residuals to obtain the sum of squared residuals (SSR).

Calculating the total sum of squares (SST) requires finding the mean of the actual values (Y), and then summing up the squared differences between each actual value and the mean. The final step in calculating R-squared is to subtract the ratio of SSR to SST from 1, which yields the R-squared value indicating the proportion of variance in the dependent variable explained by the independent variables.

To put it simply: the unexplained variation is obtained by taking the residuals from the regression model, squaring them, and summing them. The total variation is obtained by subtracting the mean of the actual values from each actual value, squaring the results, and summing them.

Then, R-squared is computed by dividing the sum of errors (unexplained variance) by the sum of total variance, subtracting the result from one, and converting to a percentage if desired.
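The steps above can be followed in a few lines of Python. This sketch uses hypothetical actual values 10, 20, 30 and predicted values 12, 18, 32; the formula works for any set of predictions, not only ones produced by a fitted regression line:

```python
actual = [10.0, 20.0, 30.0]     # hypothetical observed values
predicted = [12.0, 18.0, 32.0]  # hypothetical model predictions

# Residuals, squared, then summed -> sum of squared residuals (SSR)
residuals = [a - p for a, p in zip(actual, predicted)]
ssr = sum(r ** 2 for r in residuals)

# Total sum of squares (SST): squared deviations of actual values from their mean
mean_actual = sum(actual) / len(actual)
sst = sum((a - mean_actual) ** 2 for a in actual)

# Final step: subtract the ratio SSR / SST from 1
r_squared = 1 - ssr / sst
print(ssr, sst, round(r_squared, 4))  # 12.0 200.0 0.94
```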

What is the difference between R-Squared vs. Adjusted R-Squared?

The difference between R-Squared and Adjusted R-Squared lies in how they account for the number of predictors in the model.

R-squared measures the proportion of variance explained by the independent variables, while Adjusted R-squared adjusts for the number of predictors, penalizing unnecessary variables to provide a more accurate reflection of the model’s goodness of fit.

After understanding R-squared, we now focus on adjusted R-squared, a related yet distinct measure. R-squared measures the variation explained by a regression model and never decreases when a new predictor is added, regardless of the predictor's relevance. Adjusted R-squared, on the other hand, increases only if the new predictor improves the fit enough to offset the lost degree of freedom, so it penalizes the addition of irrelevant predictors.

While R-squared is suitable for simple linear regression models, adjusted R-squared is a more reliable measure of goodness of fit in multiple regression models. R-squared can give a misleading indication of model performance because it tends to overstate the model’s explanatory power when irrelevant variables are included. In contrast, adjusted R-squared accounts for the number of predictors and rewards the model only when new predictors have a real impact.

The formula for adjusted R-squared incorporates the number of predictors (k, not counting the intercept) and the number of observations (n): Adjusted R-squared = 1 − [(1 − R²)(n − 1) / (n − k − 1)], where R² is the R-squared value.

Adjusted R-squared provides a more accurate measure for comparing the explanatory power of models with different numbers of predictors, making it more suitable for model selection in multiple regression scenarios.
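A minimal sketch of the adjusted R-squared formula, with hypothetical R-squared values. Adding a third predictor that nudges R-squared from 0.50 to 0.51 still lowers adjusted R-squared, because the small gain does not offset the lost degree of freedom:

```python
def adjusted_r_squared(r_squared, n, k):
    """Adjusted R-squared for n observations and k predictors (intercept not counted)."""
    if n - k - 1 < 1:
        raise ValueError("need n > k + 1")
    return 1 - (1 - r_squared) * (n - 1) / (n - k - 1)


two_predictors = adjusted_r_squared(0.50, n=21, k=2)
three_predictors = adjusted_r_squared(0.51, n=21, k=3)
print(round(two_predictors, 4), round(three_predictors, 4))  # 0.4444 0.4235
```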

What is the difference between R-Squared vs. Beta?

The difference between R-Squared and Beta lies in their respective functions. R-Squared assesses the goodness of fit of a regression model, indicating how well the independent variable explains the variation in the dependent variable.

Beta, on the other hand, measures the sensitivity of an asset’s returns to changes in the market returns, revealing the level of systematic risk or volatility associated with the asset.

Within investment analysis, two related measures commonly encountered are R-squared and beta. R-squared measures how much of the variation in a security's returns is explained by the market index returns, given the estimated alpha and beta. In contrast, beta measures the sensitivity of a security’s returns to the returns of a market index.

Beta is a numerical value that indicates the degree to which a security’s returns follow the market index. Here are some key points about beta.

- A beta of 1 suggests that the security’s price movement is aligned with the market index.

- A high beta indicates that the security is more volatile compared to the market index.

- A low beta suggests lower volatility relative to the market.

On the other hand, R-squared is also known as the coefficient of determination and shows the proportion of variation in the security’s return due to the market return, given the estimated values of alpha and beta.

The reliability of alpha and beta as performance measures is considered questionable for assets with R-squared figures below 50 (that is, 50%), because their returns are not closely enough correlated with the benchmark. So, while R-squared and beta are related, they offer different insights and are used for different purposes in investment analysis.
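To see how the two measures differ, here is a sketch with hypothetical monthly returns (not real market data) and an illustrative function name. Beta is the slope from regressing the asset's returns on the market's returns, cov(asset, market) / var(market), while R-squared is the squared correlation between them. Many analyses use excess returns over a risk-free rate; this simplified sketch uses raw returns:

```python
def beta_and_r_squared(asset, market):
    """Return (beta, R-squared) from a simple regression of asset on market returns."""
    n = len(asset)
    mean_a = sum(asset) / n
    mean_m = sum(market) / n
    cov = sum((a - mean_a) * (m - mean_m) for a, m in zip(asset, market))
    var_m = sum((m - mean_m) ** 2 for m in market)
    var_a = sum((a - mean_a) ** 2 for a in asset)
    beta = cov / var_m                      # sensitivity to market moves
    r_squared = cov ** 2 / (var_m * var_a)  # share of variation explained by the market
    return beta, r_squared


market = [0.010, -0.020, 0.015, 0.030, -0.010, 0.005]  # hypothetical returns
tracker = [1.5 * m for m in market]                     # moves exactly 1.5x the market
noisy = [0.020, -0.010, 0.000, 0.050, -0.030, 0.020]    # hypothetical, loosely related

for name, asset in (("tracker", tracker), ("noisy", noisy)):
    beta, r2 = beta_and_r_squared(asset, market)
    print(name, round(beta, 2), round(r2, 2))
```

The tracker has a beta of 1.5 and an R-squared of 1, while the noisy series can have a similar beta with a much lower R-squared, which is why a low R-squared makes its beta less trustworthy.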

What are the Limitations of R-Squared?

The limitations of R-squared include its inability to determine causation, failure to indicate bias in coefficient estimates and predictions, and its dependence on meeting the assumptions of linear regression, which may not always hold in real-world data.

As with any statistical measure, R-squared comes with its limitations. Although it measures the proportion of variance for a dependent variable explained by an independent variable, it does not indicate whether the chosen model is appropriate or whether the data and predictions are unbiased.

It is worth noting that a high R-squared value does not always indicate that the model is a good fit, an important consideration when evaluating the model’s accuracy. A model can have a high R-squared and still be a poor model. One common cause is overfitting, where the model fits the sample’s random quirks rather than the underlying relationship, so it predicts new data poorly.

Low R-squared values are not always problematic, as some fields have greater unexplainable variation and significant coefficients can still provide valuable insights. An overfit model or a model resulting from data mining can exhibit high R-squared values even for random data, which can be misleading and deceptive.

R-squared alone is not sufficient for making precise predictions and can be problematic if narrow prediction intervals are needed for the application at hand.

What is a ‘good’ R-squared value?

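A “good” R-squared value is often described as one that indicates a strong relationship between the dependent and independent variables, with values between 0.7 and 1 sometimes quoted as a rule of thumb. As explained below, however, no single threshold applies in every context.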

What then, constitutes a ‘good’ R-squared value? A good R-squared value accurately reflects the percentage of the dependent variable variation that the linear model explains, but there is no universal threshold that defines a ‘good’ value.

The appropriateness of an R-squared value is context-dependent; studies predicting human behavior often have R-squared values less than 50%, whereas physical processes with precise measurements might have values over 90%. Comparing an R-squared value to those from similar studies can provide insight into whether the R-squared is reasonable for a given context.

When using a regression model for prediction, R-squared is a consideration, as lower values correspond to more error and less precise predictions. To assess the precision of predictions, instead of focusing on R-squared, one should evaluate the prediction intervals to determine if they are narrow enough to be useful.
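The point about prediction intervals can be illustrated for the one-predictor case with the standard textbook formula. This is a minimal sketch on simulated data; the function name is our own. The interval width is driven by the residual standard error, which is what determines whether predictions are precise enough for a given application.

```python
import numpy as np
from scipy import stats


def prediction_interval(x, y, x_new, alpha=0.05):
    """Simple linear regression: point prediction and (1 - alpha) prediction interval at x_new."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    slope, intercept = np.polyfit(x, y, 1)
    resid = y - (intercept + slope * x)
    s = np.sqrt(resid @ resid / (n - 2))  # residual standard error
    sxx = np.sum((x - x.mean()) ** 2)
    y_hat = intercept + slope * x_new
    # The leading 1 inside the square root accounts for the scatter of a new observation.
    half_width = stats.t.ppf(1 - alpha / 2, n - 2) * s * np.sqrt(
        1 + 1 / n + (x_new - x.mean()) ** 2 / sxx
    )
    return y_hat, y_hat - half_width, y_hat + half_width


rng = np.random.default_rng(1)
x = np.linspace(0, 10, 40)
widths = {}
for noise_sd in (0.5, 3.0):
    y = 2.0 + 1.5 * x + rng.normal(0, noise_sd, size=x.size)
    r2 = np.corrcoef(x, y)[0, 1] ** 2
    y_hat, lo, hi = prediction_interval(x, y, x_new=5.0)
    widths[noise_sd] = hi - lo
    print(f"noise sd {noise_sd}: R^2 = {r2:.2f}, 95% PI at x = 5: [{lo:.1f}, {hi:.1f}]")
```

Both simulated datasets share the same true line; only the noise differs, and the noisier one yields a lower R² and a much wider interval.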

What does an R-squared value of 0.9 mean?

An R-squared value of 0.9 means that in the context of regression analysis, the independent variables account for 90% of the variability observed in the dependent variable.

This high R-squared value indicates that the data points lie close to the fitted regression line, suggesting that the model fits the observed dataset well. Even so, an R-squared as high as 0.9 does not by itself show that the model’s predictions will be precise or unbiased.

Despite such a high R-squared, problems such as non-linearity or anomalies within the data are not ruled out. Inspecting visual aids such as scatter plots and residual plots is therefore crucial for detecting underlying problems that an R-squared value alone cannot reveal.

Is a higher R-squared better?

A higher R-squared is not always better. A higher R-squared value does not necessarily mean a regression model is good; models with high R-squared values can still be biased.

In multiple regression models with several independent variables, R-squared should be supplemented by adjusted R-squared, because ordinary R-squared never decreases when a variable is added, regardless of its relevance. Overfitting can then produce a misleadingly high R-squared value even when the model does not predict new data well.
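The inflation effect is easy to reproduce. This minimal sketch (simulated data, hypothetical helper name) fits a sequence of nested models that keep one real predictor and add columns of pure noise, then reports R² and adjusted R² for each.

```python
import numpy as np


def r2_and_adjusted(X, y):
    """Fit OLS with an intercept; return (R^2, adjusted R^2)."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    r2 = 1 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    adj = 1 - (1 - r2) * (n - 1) / (n - k - 1)
    return r2, adj


rng = np.random.default_rng(0)
n = 50
x_real = rng.normal(size=n)
y = 1.0 + 0.5 * x_real + rng.normal(size=n)
noise = rng.normal(size=(n, 20))  # pure-noise predictors, unrelated to y

results = []
for extra in (0, 5, 10, 20):
    # Nested models: each keeps the real predictor and adds more noise columns.
    X = np.column_stack([x_real, noise[:, :extra]])
    r2, adj = r2_and_adjusted(X, y)
    results.append((extra, r2, adj))
    print(f"{extra:2d} noise predictors: R^2 = {r2:.3f}, adjusted R^2 = {adj:.3f}")
```

Because the models are nested, R² can only go up as noise columns are added. Adjusted R² rises only when a newly added term’s |t| exceeds 1, so it offers some protection against this kind of inflation, but it is not a guarantee against overfitting.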

R-squared alone is insufficient for making precise predictions and can be problematic if narrow prediction intervals are needed for the application.

Why is R-squared important in investing?

R-squared is important in investing because it helps investors understand the proportion of a portfolio’s variability that changes in a benchmark index can explain.

R-squared in investing represents the percentage of a fund’s or security’s movements that movements in a benchmark index can explain. This provides an insight into the performance in relation to market or benchmark movements.

A high R-squared value, ranging from 85% to 100%, suggests that the stock or fund’s performance closely matches the index, which can be particularly valuable for investors looking for investments that follow market trends. An R-squared of 100% indicates that the independent variable entirely explains the movements of a dependent variable.

A lower R-squared value, such as 70% or below, indicates that the stock or fund does not closely follow the index’s movements. R-squared can identify how well a mutual fund or ETF tracks its benchmark, which is crucial for funds designed to replicate the performance of a particular index.

R-squared provides investors with a thorough picture of an asset manager’s performance relative to market movements when used in conjunction with beta.

Can R-Squared Help Assess Risk in Investments?

Yes, R-squared can help assess risk in investments by indicating how much of an investment’s variability can be explained by changes in the market, thus providing insight into its relative stability or volatility.

A high R-squared value indicates a strong correlation between the fund’s performance and its benchmark, suggesting that the asset’s performance is closely tied to the benchmark’s. Investments with high R-Squared values, ranging from 85% to 100%, indicate that the performance of the stock or fund closely follows the index, making R-Squared analysis appropriate for these scenarios.

On the other hand, a low R-squared value indicates that the fund does not generally follow the movements of the index, which may appeal to investors seeking active management strategies that diverge from market trends. R-squared can be particularly beneficial in assessing the performance of asset managers and the trustworthiness of the beta of securities.

Is R-Squared Useful for Analyzing Mutual Funds or ETFs?

R-squared is useful to some extent for analyzing mutual funds or ETFs, but it’s not the sole determinant of their performance or suitability.

R-squared measures how closely the performance of a mutual fund or ETF can be attributed to a selected benchmark index. A high R-squared value, between 85 and 100, indicates a fund that is closely correlated with its benchmark, which makes R-squared useful for evaluating index-tracking mutual funds or ETFs.

When combined with beta and alpha, R-squared can provide a comprehensive picture of a fund’s performance in relation to its benchmark, aiding in the assessment of an asset manager’s effectiveness.

A mutual fund or ETF with a low R-squared is not necessarily a poor investment, but its performance is less related to its benchmark, which might make R-squared less useful in analysis for some investment strategies.

In summary, R-squared can offer valuable insights when analyzing mutual funds or ETFs, helping investors make informed decisions.

What are the pros of R-Squared?

The pros of R-Squared lie in its ability to provide a straightforward measure of how well the independent variables explain the variability of the dependent variable in a regression model.

After exploring the uses of R-squared, it’s time to highlight its advantages. R-squared measures the proportion of variance for a dependent variable that’s explained by an independent variable, providing a clear, quantitative value for the strength of the relationship.

In investment analysis, R-squared determines how well movements in a benchmark index can explain a fund or security’s price movements. A high R-squared value (from 85% to 100%) indicates strong correlation with the index, which can be useful for investors seeking performance that tracks an index closely.

R-squared provides investors with a thorough picture of an asset manager’s performance relative to market movements when used in conjunction with beta. It provides a simple measure that can be easily compared across different models or investments, facilitating easier decision-making based on the proportion of explained variability.

What are the cons of R-Squared?

The cons of R-Squared include its inability to determine causation and its susceptibility to misleading interpretations due to outliers or non-linear relationships in the data.

Despite its numerous advantages, R-squared is not without certain limitations. Although it measures the proportion of variance for a dependent variable that’s explained by an independent variable, it does not indicate whether the chosen model is appropriate or whether the data and predictions are unbiased.

R-squared cannot determine whether the coefficient estimates and predictions are biased, which is an important aspect of a good regression model. A high R-squared value does not always indicate that the model fits well. It is important to consider other factors as well. In fact, a model can have a high R-squared and still be poorly fitted to the data.

For what kind of investments are R-Squared best suited?

R-Squared is best suited for investments where the objective is to assess how much of a fund’s or security’s price movements can be explained by movements in a benchmark index.

Fixed-income securities, compared against bond indices, and stocks, compared against indices like the S&P 500, are common investments for applying R-squared analysis. For investors who are focused on value investing or long-term growth potential, R-Squared can help determine the influence of market movements on their investment strategies.

A high R-squared value indicates that a mutual fund’s performance is closely related to the benchmark, suggesting that the benchmark’s movements significantly impact the fund. So, as we can see, R-squared is well-suited for various investments, particularly when combined with other metrics for a thorough analysis.

Can R-squared be negative?

R-squared can be negative when the curve does a bad job of fitting the data. This can happen when you fit a badly chosen model or perhaps because the model was fit to a different data set.

It’s an intriguing and common question: can R-squared values drop below zero? Indeed, they can. An R-squared value turns negative when a model predicts worse than simply drawing a horizontal line at the mean of the response, which is the model described by the null hypothesis.

When a linear regression model yields a negative R-squared value, it signals that the model fails to capture the trend within the data. In other words, rather than using this poorly fitting model, you would have been better off assuming there was no relationship at all. Such scenarios often arise when constraints are imposed on a regression model, for instance by fixing the intercept, leading to outcomes less accurate than a simple horizontal line.

On another note, for unconstrained ordinary least squares regression with an intercept, evaluated on the data used to fit it, R-squared cannot be negative; its lowest possible value is zero. In simple linear regression it equals r, the correlation coefficient, squared. When you encounter a negative R-squared value, take heed: it does not signal a mathematical error or a computational glitch, but rather shows how poorly your chosen constrained model fits the data.
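Both cases can be checked numerically. The sketch below computes R² = 1 − SS_res/SS_tot, with SS_tot measured around the mean of y, for an ordinary fit with an intercept and for a fit forced through the origin. The data are simulated. Note that some software reports an uncentered R² for no-intercept models (R’s `summary.lm` does this, for example), and that version is never negative.

```python
import numpy as np


def r_squared(y, y_pred):
    """R^2 = 1 - SS_res / SS_tot, with SS_tot measured around the mean of y."""
    y = np.asarray(y, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y - y_pred) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1 - ss_res / ss_tot


rng = np.random.default_rng(3)
x = np.arange(1, 11, dtype=float)
y = 20 - x + rng.normal(0, 1, size=x.size)  # downward trend with a large intercept

# Unconstrained OLS with an intercept: R^2 equals the squared correlation r^2.
slope, intercept = np.polyfit(x, y, 1)
r2_ols = r_squared(y, intercept + slope * x)
r = np.corrcoef(x, y)[0, 1]

# Constrained fit: intercept fixed at zero (regression through the origin).
b = (x @ y) / (x @ x)
r2_origin = r_squared(y, b * x)

print(f"OLS R^2 = {r2_ols:.3f}, r^2 = {r ** 2:.3f}, through-origin R^2 = {r2_origin:.2f}")
```

The origin-constrained line cannot follow a downward trend that starts near 20, so its residuals are far larger than the spread of y around its mean, and R² comes out strongly negative.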

What does an R-squared value of 0.3 mean?

An R-squared value of 0.3 means that the model explains approximately 30% of the variance in the dependent variable, while the remaining 70% is unexplained.

An R-squared of 0.3 indicates a weak relationship between the model’s independent and dependent variables. However, a low R-squared value like 0.3 does not necessarily mean the model is inadequate; such values are common in fields with high inherent variability, such as studies of human behavior.

Even with an R-squared value as low as 0.3, it is still possible to draw important conclusions about the relationships between variables if the independent variables are statistically significant. This emphasizes the importance of considering statistical significance alongside the R-squared value.

What is R squared in regression?

R squared in regression is a statistical measure representing the proportion of the variance in the dependent variable that is predictable from the independent variable(s).

R-squared, also known as R² or the coefficient of determination, is a statistical measure in regression models that determines the proportion of variance in the dependent variable that is predictable from the independent variable(s).

For ordinary least squares regression with an intercept, R-squared values range from 0 to 1 and indicate how well the data fit the regression model, commonly referred to as the model’s goodness of fit. A higher R-squared value generally indicates that the model explains more variability in the dependent variable.

While a high R-squared is often seen as desirable, it should not be the sole measure to rely on for assessing a statistical model’s performance, as it does not indicate causation or the correctness of the regression model.


In conclusion, R-squared is a crucial statistical measure that offers valuable insights in regression analysis and investment. It provides an understanding of the relationship between independent and dependent variables and helps assess a model’s goodness-of-fit.

However, it’s important to remember that R-squared should not be used on its own to assess a model’s performance or the precision of its predictions. It should be used with other statistical measures and a thorough understanding of the subject matter for a comprehensive analysis. Ultimately, understanding and correctly interpreting R-squared can make the difference between a good model and a great one.

Frequently Asked Questions

What does R-squared tell you?

R-squared tells you the proportion of variance in the dependent variable that can be explained by the independent variable, indicating the goodness of fit of the data to the regression model.

What does an R2 value of 0.9 mean?

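An R² value of 0.9 means that the independent variable(s) account for 90% of the variability in the dependent variable. This suggests a close fit, although, as discussed above, it does not by itself show that the model is unbiased or that its predictions are precise.

Is a higher R-squared better?

A higher R-squared value indicates that the model explains more of the variation in the dependent variable, while a lower value indicates that it explains less. A higher value is not automatically better, however, because an overfit or biased model can also have a high R-squared.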

Why is R-squared value so low?

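A low R-squared value means that a large share of the variation in the dependent variable is not accounted for by the model. This can happen when the outcome is inherently noisy, as in many studies of human behavior, when important predictors are missing from the model, or when the relationship is not linear.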

What does R-squared measure in regression analysis?

In regression analysis, R-squared quantifies the proportion of the variance in the dependent variable that can be explained, or predicted, by the independent variable(s).


LEARN STATISTICS EASILY

Learn Data Analysis Now!

How to Report Results of Multiple Linear Regression in APA Style

You will learn how to report the results of multiple linear regression in APA style, including coefficients, significance levels, and assumption checks.

Introduction

Multiple linear regression is a fundamental statistical method to understand the relationship between one dependent variable and two or more independent variables. This approach allows researchers and analysts to predict the dependent variable’s outcome based on the independent variables’ values, providing insights into complex relationships within data sets. The power of multiple linear regression lies in its ability to control for various confounding factors simultaneously, making it an invaluable tool in fields ranging from social sciences to finance and health sciences.

Reporting the results of multiple linear regression analyses requires precision and adherence to established guidelines, such as those provided by the American Psychological Association (APA) style. The importance of reporting in APA style cannot be overstated, as it ensures clarity, uniformity, and comprehensiveness in research documentation. Proper reporting includes:

- Detailed information about the regression model used.

- The significance of the predictors.

- The fit of the model.

- Any assumptions or conditions that were tested.

Adhering to APA style enhances the readability and credibility of research findings, facilitating their interpretation and application by a broad audience.

This guide will equip you with the knowledge and skills to report multiple linear regression results effectively in APA style, so that your research communicates its findings clearly.

- Detail assumption checks, such as multicollinearity assessed with VIF scores.

- Report the adjusted R-squared to express model fit.

- Identify significant predictors with t-values and p-values in your regression model.

- Include confidence intervals for a comprehensive understanding of predictor estimates.

- Explain model diagnostics with residual plots for validity.


Step-by-Step Guide with Examples

1. Objective of Regression Analysis

Initiate by clearly stating the purpose of your multiple linear regression (MLR) analysis. For example, you might explore how environmental factors (X1, X2, X3) predict plant growth (Y). Example: “This study aims to assess the impact of sunlight exposure (X1), water availability (X2), and soil quality (X3) on plant growth rate (Y).”

2. Sample Size and Power

Discuss the significance of your sample size. A larger sample provides greater power for a robust MLR analysis. Example: “With a sample size of 200 plants, we ensure sufficient power to detect significant predictors of growth, minimizing type II errors.”

*Because statistical power matters, calculating the required sample size (a power analysis) before collecting data is a crucial step for determining how many observations are needed to detect the expected relationship.
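A power analysis for the overall F-test can be sketched with SciPy’s central and noncentral F distributions. This is a minimal illustration under stated assumptions: the effect size is Cohen’s f² = R²/(1 − R²), the noncentrality parameter follows Cohen’s convention λ = f²(u + v + 1), which equals f² × n, and the “medium” value f² = 0.15 is an assumed input, not a figure from the plant-growth example.

```python
from scipy import stats


def regression_f_power(n, k, f2, alpha=0.05):
    """Power of the overall F-test for k predictors and n observations (effect size f^2)."""
    df1, df2 = k, n - k - 1
    f_crit = stats.f.ppf(1 - alpha, df1, df2)
    # Noncentrality lambda = f^2 * (df1 + df2 + 1) = f^2 * n.
    return stats.ncf.sf(f_crit, df1, df2, f2 * n)


def minimum_sample_size(k, f2, target_power=0.80, alpha=0.05):
    """Smallest n whose power reaches target_power (simple linear search)."""
    n = k + 2  # smallest n that leaves at least one residual degree of freedom
    while regression_f_power(n, k, f2, alpha) < target_power:
        n += 1
    return n


# Assumed inputs: 3 predictors and a "medium" effect, f^2 = 0.15.
power_200 = regression_f_power(n=200, k=3, f2=0.15)
n_needed = minimum_sample_size(k=3, f2=0.15)
print(f"power at n = 200: {power_200:.3f}; n for 80% power: {n_needed}")
```

Dedicated tools such as G*Power perform the same kind of calculation. The main judgment call is the assumed effect size, which should come from prior studies or a pilot rather than a default.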

3. Checking and Reporting Model Assumptions

- Linearity: Verify that each independent variable’s relationship with the dependent variable is linear. Example: “Scatterplots of sunlight exposure, water availability, and soil quality against plant growth revealed linear trends.”

- Normality of Residuals: Assess using the Shapiro-Wilk test. Example: “The Shapiro-Wilk test did not indicate a departure from normality of the residuals, W = .98, p = .15.”

- Homoscedasticity: Evaluate with the Breusch-Pagan test. Example: “The Breusch-Pagan test did not indicate heteroscedasticity, χ² = 5.42, p = .14.”

- Independence of Errors: Use the Durbin-Watson statistic. Example: “The Durbin-Watson statistic of 1.92 suggests no autocorrelation, indicating independent errors.”
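The three residual checks above can be computed with NumPy and SciPy alone. This is a minimal sketch on simulated data. The Breusch-Pagan statistic here is the studentized (Koenker) variant, LM = n × R² from regressing squared residuals on the predictors; the original Breusch-Pagan form is scaled differently. In practice, statsmodels offers ready-made `het_breuschpagan` and `durbin_watson` functions.

```python
import numpy as np
from scipy import stats


def ols_residuals(design, target):
    """Least-squares residuals of target regressed on the columns of design."""
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return target - design @ coef


def residual_diagnostics(X, y):
    """Shapiro-Wilk, Breusch-Pagan (Koenker form), and Durbin-Watson for an OLS fit with intercept."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    resid = ols_residuals(design, y)

    # Normality of residuals: Shapiro-Wilk W and its p value.
    w_stat, w_p = stats.shapiro(resid)

    # Homoscedasticity: regress squared residuals on the predictors; LM = n * R^2 ~ chi2(k).
    e2 = resid ** 2
    aux = ols_residuals(design, e2)
    aux_r2 = 1 - np.sum(aux ** 2) / np.sum((e2 - e2.mean()) ** 2)
    bp_stat = n * aux_r2
    bp_p = stats.chi2.sf(bp_stat, df=k)

    # Independence of errors: Durbin-Watson; values near 2 suggest no lag-1 autocorrelation.
    # Only meaningful when the rows have a natural order (e.g., time).
    dw = np.sum(np.diff(resid) ** 2) / np.sum(resid ** 2)

    return {"W": w_stat, "W_p": w_p, "BP": bp_stat, "BP_p": bp_p, "DW": dw}


# Hypothetical data: 200 observations, 3 predictors, homoscedastic independent errors.
rng = np.random.default_rng(7)
X = rng.normal(size=(200, 3))
y = 2.5 + X @ np.array([0.8, 0.5, -0.2]) + rng.normal(size=200)

diag = residual_diagnostics(X, y)
print(f"W = {diag['W']:.2f}, p = {diag['W_p']:.2f}; "
      f"BP chi2({X.shape[1]}) = {diag['BP']:.2f}, p = {diag['BP_p']:.2f}; DW = {diag['DW']:.2f}")
```

A non-significant test result means the data show no clear evidence against the assumption; it does not prove the assumption holds, which is why residual plots remain part of the check.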

4. Statistical Significance of the Regression Model

Present the F-statistic, degrees of freedom, and its significance (p-value) to demonstrate the model’s overall fit. Example: “The model was significant, F(3, 196) = 12.57, p < .001, indicating that at least one predictor is significantly related to plant growth.”

5. Coefficient of Determination

Report the adjusted R² to show the variance explained by the model. Example: “The model explains 62% of the variance in plant growth, with an adjusted R² of .62.”
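The overall F-statistic and R² are computed from the same sums of squares, so one can be derived from the other. The sketch below uses the ordinary (unadjusted) R²; the input value of 0.25 is purely illustrative. Reporting both lets a reader check that they agree.

```python
from scipy import stats


def overall_f_test(r2, n, k):
    """Overall F-test of a regression with k predictors, from its (unadjusted) R^2."""
    df1, df2 = k, n - k - 1
    f_stat = (r2 / df1) / ((1 - r2) / df2)
    p_value = stats.f.sf(f_stat, df1, df2)
    return f_stat, df1, df2, p_value


f_stat, df1, df2, p = overall_f_test(r2=0.25, n=200, k=3)
print(f"F({df1}, {df2}) = {f_stat:.2f}")  # F(3, 196) = 21.78
```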

6. Statistical Significance of Predictors

Detail each predictor’s significance through t-tests. Example: “Sunlight exposure was a significant predictor, t(196) = 5.33, p < .001, indicating a positive effect on plant growth.”

7. Regression Coefficients and Equation

Provide the regression equation with unstandardized coefficients. Example: “The regression equation was Y = 2.5 + 0.8X1 + 0.5X2 – 0.2X3, where each hour of sunlight (X1) increases growth by 0.8 units…”
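The quantities in steps 6 and 7 (coefficients, standard errors, t values, p values, and confidence intervals) can be computed directly. The sketch below is a minimal ordinary least squares implementation. The data are simulated from the example equation and are therefore hypothetical, and `apa_p` is our own helper that formats p values without a leading zero, as APA style requires.

```python
import numpy as np
from scipy import stats


def ols_summary(X, y, alpha=0.05):
    """OLS with an intercept: coefficients, standard errors, t values, two-sided p values, CIs."""
    n, k = X.shape
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    df = n - k - 1
    sigma2 = (resid @ resid) / df  # residual variance estimate
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(design.T @ design)))
    t_vals = coef / se
    p_vals = 2 * stats.t.sf(np.abs(t_vals), df)
    t_crit = stats.t.ppf(1 - alpha / 2, df)
    ci = np.column_stack([coef - t_crit * se, coef + t_crit * se])
    return coef, se, t_vals, p_vals, ci, df


def apa_p(p):
    """Format a p value in APA style: no leading zero, and 'p < .001' for very small values."""
    return "p < .001" if p < 0.001 else "p = " + f"{p:.3f}".lstrip("0")


# Hypothetical data generated from the example equation Y = 2.5 + 0.8X1 + 0.5X2 - 0.2X3.
rng = np.random.default_rng(11)
X = rng.normal(size=(200, 3))
y = 2.5 + X @ np.array([0.8, 0.5, -0.2]) + rng.normal(size=200)

coef, se, t_vals, p_vals, ci, df = ols_summary(X, y)
for name, b, t, p, (lo, hi) in zip(["Intercept", "X1", "X2", "X3"], coef, t_vals, p_vals, ci):
    print(f"{name}: b = {b:.2f}, 95% CI [{lo:.2f}, {hi:.2f}], t({df}) = {t:.2f}, {apa_p(p)}")
```

With 200 observations and 3 predictors the residual degrees of freedom are 196, which matches the t(196) and F(3, 196) values used in the examples.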

8. Discussion of Model Fit and Limitations

Reflect on how well the model fits the data and its limitations. Example: “While the model fits well (adjusted R² = .62), it’s crucial to note that it does not prove causation, and external factors not included in the model may also affect plant growth.”

9. Additional Diagnostics and Visualizations

Incorporate diagnostics like VIF for multicollinearity and visual aids. Example: “VIF scores were below 5 for all predictors, indicating no multicollinearity concern. Residual plots showed random dispersion, affirming model assumptions.”
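The variance inflation factor for predictor j is VIF_j = 1 / (1 − R_j²), where R_j² comes from regressing predictor j on all the other predictors. A minimal NumPy sketch on simulated data follows. The cutoff of 5 mentioned above is a rule of thumb, and 10 is also commonly used.

```python
import numpy as np


def vif(X):
    """VIF for each column of X: 1 / (1 - R_j^2), regressing column j on the others plus an intercept."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    out = []
    for j in range(k):
        target = X[:, j]
        design = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        r2 = 1 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        out.append(1 / (1 - r2))
    return np.array(out)


# Hypothetical predictors: x3 is built to be strongly collinear with x1.
rng = np.random.default_rng(5)
x1 = rng.normal(size=500)
x2 = rng.normal(size=500)
x3 = x1 + 0.3 * rng.normal(size=500)
vifs = vif(np.column_stack([x1, x2, x3]))
print(np.round(vifs, 1))
```

Here x1 and x3 show large VIFs while x2, which is unrelated to the others, stays close to 1.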

In our exploration of the determinants of final exam scores in a university setting, we employed a multiple linear regression model to assess the contributions of study hours (X1), class attendance (X2), and student motivation (X3). The model, specified as Y = β0 + β1X1 + β2X2 + β3X3 + ε, where Y represents final exam scores, aimed to provide a comprehensive understanding of how these variables collectively influence academic performance.

Assumptions Check: Before examining the predictive power of our model, a thorough assessment of its foundational assumptions was undertaken to affirm the integrity of our analysis. Scatterplots of each predictor against the dependent variable revealed no deviations from linearity. The Shapiro-Wilk test did not indicate a departure from normality in the residuals (W = .98, p = .15), satisfying the normality criterion. Homoscedasticity, the uniform variance of residuals across the range of predicted values, was supported by the Breusch-Pagan test (χ² = 5.42, p = .14). Furthermore, the Durbin-Watson statistic of 1.92 indicated no meaningful autocorrelation among residuals, supporting the independence of errors. The Variance Inflation Factor (VIF) for each predictor was well below the threshold of 5, dispelling multicollinearity concerns. Collectively, these diagnostic tests supported the key assumptions underpinning our multiple linear regression model, providing a solid groundwork for the subsequent analysis.

Model Summary: The overall model was statistically significant, F(3, 196) = 53.24, p < .001, indicating that it explains a significant portion of the variance in exam scores. The adjusted R² value of .43 indicates that the model accounts for approximately 43% of the variability in final exam scores, highlighting the included predictors’ substantial impact.

Coefficients and Confidence Intervals:

- The intercept, β0, was estimated at 50 points, the predicted exam score when all independent variables equal zero.

- Study Hours (X1): Each additional hour of study was associated with a 2.5-point increase in exam scores (β1 = 2.5), with a 95% confidence interval of [1.9, 3.1], underscoring the value of dedicated study time.

- Class Attendance (X2): Regular attendance contributed an additional 1.8 points to exam scores per class attended (β2 = 1.8), with a confidence interval of [1.1, 2.5], reinforcing the importance of class participation.

- Student Motivation (X3): Motivation emerged as a significant factor, with a 3.2-point increase in scores per one-unit increase in motivation (β3 = 3.2) and a confidence interval of [2.4, 4.0], suggesting a profound influence on academic success.

Model Diagnostics: The diagnostic checks, including the analysis of residuals, supported the model’s adherence to the assumptions of linear regression. The absence of discernible patterns in the residual plots supported the model’s homoscedasticity and linearity, further strengthening the reliability of our findings.

In conclusion, our regression analysis elucidates the critical roles of study hours, class attendance, and student motivation in determining final exam scores. The robustness of the model, evidenced by the stringent checks and the significant predictive power of the included variables, provides compelling insights into effective academic strategies. These findings validate our initial hypotheses and offer valuable guidance for educational interventions to enhance student outcomes.

These results, particularly the point estimates and their associated confidence intervals, provide robust evidence supporting the hypothesis that study hours, class attendance, and student motivation are significant predictors of final exam scores. The confidence intervals offer a range of plausible values for the true effects of these predictors, reinforcing the reliability of the estimates.

In this comprehensive guide, we’ve navigated the intricacies of reporting multiple linear regression results in APA style, emphasizing the critical components that must be included to ensure clarity, accuracy, and adherence to standardized reporting conventions. Key points such as the importance of presenting a clear model specification, conducting thorough assumption checks, detailing model summaries and coefficients, and interpreting the significance of predictors have been highlighted to assist you in crafting a report that stands up to academic scrutiny and contributes valuable insights to your field of study.

Accurate reporting is paramount in scientific research. It not only conveys findings but also upholds the integrity and reproducibility of the research process. By meticulously detailing each aspect of your multiple linear regression analysis, from the initial model introduction to the final diagnostic checks, you provide a roadmap for readers to understand and potentially replicate your study. This level of transparency is crucial for fostering trust in your conclusions and encouraging further exploration and discussion within the scientific community.

Moreover, the practical example is a template for effectively applying these guidelines, illustrating how theoretical principles translate into practice. By following the steps outlined in this guide, researchers can enhance the impact and reach of their studies, ensuring that their contributions to knowledge are recognized, understood, and built upon.


Frequently Asked Questions (FAQs)

Multiple linear regression extends simple linear regression by incorporating two or more predictors to explain the variance in a dependent variable, offering a more comprehensive analysis of complex relationships.

Use multiple linear regression to understand the impact of several independent variables on a single outcome, estimating each variable’s effect while holding the others constant. If the variables are expected to interact in influencing the dependent variable, interaction terms can be added to the model.

Key steps include testing for linearity, examining residual plots for homoscedasticity and normality, checking VIF scores for multicollinearity, and using the Durbin-Watson statistic to assess the independence of residuals.

Coefficients represent the expected change in the dependent variable for a one-unit change in the predictor, holding all other predictors constant. Positive coefficients indicate a direct relationship, while negative coefficients suggest an inverse relationship.

Adjusted R-squared provides a more accurate measure of the model’s explanatory power by adjusting for the number of predictors, preventing overestimating variance explained in models with multiple predictors.

Confidence intervals offer a range of plausible values for each coefficient, providing insights into the precision of the estimates and the statistical significance of predictors.

Consider combining highly correlated variables, removing some, or using techniques like principal component analysis to reduce multicollinearity without losing critical information.

Residual analysis can reveal patterns that suggest violations of linear regression assumptions, guiding modifications to the model, such as transforming variables or adding interaction terms.

P-values can be misleading in the presence of multicollinearity, when sample sizes are very large or small, or when data do not meet the assumptions of linear regression, emphasizing the importance of comprehensive diagnostic checks.

Ensure your graphs are clear, labeled accurately, and include necessary details like confidence intervals or regression lines. Follow APA guidelines for figure presentation to maintain consistency and readability in your report.

The Stats Geek

R squared in logistic regression

In previous posts I’ve looked at R squared in linear regression, and argued that I think it is more appropriate to think of it as a measure of explained variation, rather than goodness of fit.

Of course, not all outcomes/dependent variables can be reasonably modelled using linear regression. Perhaps the second most common type of regression model is logistic regression, which is appropriate for binary outcome data. How is R squared calculated for a logistic regression model? Well, it turns out that it is not entirely obvious what its definition should be. Over the years, different researchers have proposed different measures for logistic regression, usually with the objective that the measure inherits the properties of the familiar R squared from linear regression. In this post I’m going to focus on one of them, McFadden’s R squared, which is the default ‘pseudo R2’ value reported by the Stata package. There are certain drawbacks to this measure. If you want to read more about these and some of the other measures, take a look at the 1996 Statistics in Medicine paper by Mittlbock and Schemper.

McFadden’s pseudo-R squared

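Logistic regression models are fitted using the method of maximum likelihood, i.e. the parameter estimates are those values which maximize the likelihood of the data which have been observed. McFadden’s R squared measure is defined as

$$R^2_{\text{McFadden}} = 1 - \frac{\log(L_c)}{\log(L_{\text{null}})}$$

where $L_c$ denotes the (maximized) likelihood value from the current fitted model, and $L_{\text{null}}$ denotes the corresponding value for the null model, which contains only an intercept and no covariates.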

Deterministic or inherently random?

McFadden’s R squared in R

In R, the glm (generalized linear model) command is the standard command for fitting logistic regression. As far as I am aware, the fitted glm object doesn’t directly give you any of the pseudo R squared values, but McFadden’s measure can be readily calculated. To do so, we first fit our model of interest, and then the null model which contains only an intercept. We can then calculate McFadden’s R squared using the fitted model log likelihood values:
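As a language-neutral illustration, here is a minimal Python sketch of the same calculation, 1 - log L_model / log L_null. It is not the R code used in this post, and it handles only the special case of a single binary covariate. There the maximum likelihood fitted probabilities are just the observed proportions within each covariate group, so no iterative fitting is needed. With general covariates you would need a proper logistic regression fit. In Python, for example, statsmodels' `Logit` results expose `llf`, `llnull` and `prsquared` (McFadden's measure). The function names and data below are hypothetical.

```python
import math


def bernoulli_loglik(successes, failures, p):
    """Log-likelihood of `successes` ones and `failures` zeros when P(Y=1) = p."""
    ll = 0.0
    if successes:
        ll += successes * math.log(p)
    if failures:
        ll += failures * math.log(1 - p)
    return ll


def mcfadden_r2_binary_x(x, y):
    """McFadden's R^2 for logistic regression of a 0/1 outcome y on one binary x.

    With an intercept plus one binary covariate, the fitted probabilities are the
    observed proportion of ones in each x group; the null model uses the overall
    proportion.
    """
    counts = {}
    for xi, yi in zip(x, y):
        s, f = counts.get(xi, (0, 0))
        counts[xi] = (s + yi, f + (1 - yi))
    ll_model = sum(bernoulli_loglik(s, f, s / (s + f)) for s, f in counts.values())
    total = sum(y)
    n = len(y)
    ll_null = bernoulli_loglik(total, n - total, total / n)
    if ll_null == 0:
        raise ValueError("all outcomes identical; McFadden's R^2 is undefined")
    return 1 - ll_model / ll_null


# Hypothetical data: 10 units with x=0 (3 ones) and 10 with x=1 (7 ones).
x = [0] * 10 + [1] * 10
y = [1] * 3 + [0] * 7 + [1] * 7 + [0] * 3
print(round(mcfadden_r2_binary_x(x, y), 3))  # 0.119
```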

Thanks to Brian Stucky for pointing out that the code used in the original version of this article only works for individual binary data.

To get a sense of how strong a predictor one needs to get a certain value of McFadden’s R squared, we’ll simulate data with a single binary predictor, X, with P(X=1)=0.5. Then we’ll specify values for P(Y=1|X=0) and P(Y=1|X=1). Bigger differences between these two values correspond to X having a stronger effect on Y. We’ll first try P(Y=1|X=0)=0.3 and P(Y=1|X=1)=0.7:

(The value printed is McFadden’s R squared, and not a log likelihood!) So, even with X affecting the probability of Y=1 reasonably strongly, McFadden’s R2 is only 0.13. To increase it, we must make P(Y=1|X=0) and P(Y=1|X=1) more different:

Even with X changing P(Y=1) from 0.1 to 0.9, McFadden’s R squared is only 0.55. Lastly we’ll try values of 0.01 and 0.99 – what I would call a very strong effect!

Now we have a value much closer to 1. Although this is just a series of simple simulations, the conclusion I draw is that one should really not be surprised if McFadden’s R2 from a fitted logistic regression is not particularly large: we need extremely strong predictors for it to get close to 1. I personally don’t interpret this as a problem. It merely illustrates that, in practice, it is difficult to predict a binary event with near certainty.
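The simulation above can be sketched in Python as well. This is a stand-in, not the code that produced the values reported in this post. It draws X with P(X=1)=0.5, draws Y given X, and computes McFadden's R² using the fact that, with one binary predictor, the fitted logistic probabilities are the group proportions. The sample size and seed are arbitrary assumptions. As n grows, the three scenarios approach roughly 0.12, 0.53 and 0.92. Those limits follow from the population log-likelihoods, so any single finite-sample run, including the ones above, can land somewhat above or below them.

```python
import math
import random


def loglik(successes: int, failures: int, p: float) -> float:
    """Bernoulli log-likelihood for the given counts at P(Y=1) = p."""
    ll = 0.0
    if successes:
        ll += successes * math.log(p)
    if failures:
        ll += failures * math.log(1 - p)
    return ll


def simulate_mcfadden(p0: float, p1: float, n: int = 100_000, seed: int = 1) -> float:
    """Simulate X ~ Bernoulli(0.5), Y | X ~ Bernoulli(p0 or p1); return McFadden's R^2."""
    rng = random.Random(seed)
    counts = {0: [0, 0], 1: [0, 0]}  # x -> [number of Y=1, number of Y=0]
    for _ in range(n):
        x = 1 if rng.random() < 0.5 else 0
        y = 1 if rng.random() < (p1 if x else p0) else 0
        counts[x][0 if y else 1] += 1
    # With one binary X, the logistic MLE fitted probabilities are the group proportions.
    ll_model = sum(loglik(s, f, s / (s + f)) for s, f in counts.values())
    total_s = counts[0][0] + counts[1][0]
    ll_null = loglik(total_s, n - total_s, total_s / n)  # intercept-only model
    return 1 - ll_model / ll_null


for p0, p1 in [(0.3, 0.7), (0.1, 0.9), (0.01, 0.99)]:
    print(p0, p1, round(simulate_mcfadden(p0, p1), 3))
```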

Grouped binomial data vs individual data

Sometimes binary data are stored in grouped binomial form. That is, each row in the data frame contains outcome data for a binomial random variable with n>1. This grouped binomial format can be used even when the data arise from single units, provided groups of units/individuals share the same values of the covariates (so-called covariate patterns). For example, if the only covariates collected in a study on people are gender and age category, the data can be stored in grouped form, with groups defined by the combinations of gender and age category. As is well known, one can fit a logistic regression model to such grouped data and obtain the same estimates and inferences as one would get if the data were instead expanded to individual binary data. To illustrate, we first simulate a grouped binomial data frame in R:

The simulated data are very simple, with a single covariate x which takes values 0 or 1. The column s records how many ‘successes’ there are and the column f records how many failures. There are 1,000 units in each of the two groups defined by the value of the variable x. To fit a logistic regression model to the data in R, we can pass to the glm function a response which is a matrix whose first column is the number of successes and whose second column is the number of failures:

We now convert the grouped binomial data to individual binary (Bernoulli) data, and fit the same logistic regression model. Rather than expanding the grouped data to the much larger individual data frame, we can instead create, separately for x=0 and x=1, two rows corresponding to y=0 and y=1, and create a variable recording the frequency. The frequency is then passed as a weight to the glm function:
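The conversion step amounts to a simple reshaping, shown here as a Python sketch rather than the post's R code. Each grouped row (x, s, f) becomes two individual-level rows, one for y=1 with frequency s and one for y=0 with frequency f. The counts and the tuple names are hypothetical.

```python
from collections import namedtuple

# One row per covariate pattern: x, number of successes s, number of failures f.
Grouped = namedtuple("Grouped", ["x", "s", "f"])
# Individual-level (Bernoulli) outcome y with a frequency weight.
Weighted = namedtuple("Weighted", ["x", "y", "freq"])


def to_weighted_bernoulli(rows):
    """Expand each grouped row into a y=1 row and a y=0 row carrying frequencies."""
    out = []
    for r in rows:
        out.append(Weighted(r.x, 1, r.s))
        out.append(Weighted(r.x, 0, r.f))
    return out


grouped = [Grouped(0, 700, 300), Grouped(1, 300, 700)]
weighted = to_weighted_bernoulli(grouped)
for row in weighted:
    print(row)
```

The frequencies are then supplied as case weights, so the 2,000 individual rows never have to be written out one by one.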

As expected, we obtain the same parameter estimates and inferences as from the grouped data frame. This is because the log likelihood functions are, up to a constant not involving the model parameters, identical. We might expect therefore that McFadden’s R squared would be the same from the two. To calculate this we fit the null models to the grouped data and then the individual data:

We see that the R squared from the grouped data model is 0.96, while the R squared from the individual data model is only 0.12. The explanation for the large difference is (I believe) that, in the grouped binomial setup, the model can predict the number of successes in a binomial observation with n=1,000 quite accurately. In contrast, for the individual binary data model, the observed outcomes are 0 or 1, while the predicted outcomes are 0.7 and 0.3 for the x=0 and x=1 groups. The low R squared for the individual binary data model reflects the fact that the covariate x does not enable accurate prediction of the individual binary outcomes. In contrast, x can give a good prediction for the number of successes in a large group of individuals.
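One concrete way to see the mechanics is to note that the grouped binomial log-likelihood contains the extra term log C(n, s) for every row. That term does not depend on the parameters, so the estimates are unchanged, but it shifts both log-likelihoods and therefore changes the ratio in McFadden's formula. The Python sketch below assumes hypothetical counts of exactly 700/300 and 300/700 successes/failures in the two groups and full binomial log-likelihoods including the coefficient. With these inputs it gives roughly 0.96 for the grouped calculation and 0.12 for the individual one.

```python
import math


def log_choose(n: int, k: int) -> float:
    """Log of the binomial coefficient C(n, k), computed via log-gamma."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)


def loglik(s: int, f: int, p: float, grouped: bool) -> float:
    """Log-likelihood of s successes and f failures at probability p.

    grouped=True: binomial likelihood, including the constant log C(s + f, s).
    grouped=False: the same counts treated as individual Bernoulli outcomes.
    """
    ll = s * math.log(p) + f * math.log(1 - p)
    if grouped:
        ll += log_choose(s + f, s)
    return ll


def mcfadden(groups, grouped: bool) -> float:
    """McFadden's R^2 with one binary covariate (fitted p = group proportions)."""
    total_s = sum(s for s, _ in groups)
    total_n = sum(s + f for s, f in groups)
    ll_model = sum(loglik(s, f, s / (s + f), grouped) for s, f in groups)
    ll_null = sum(loglik(s, f, total_s / total_n, grouped) for s, f in groups)
    return 1 - ll_model / ll_null


groups = [(700, 300), (300, 700)]  # hypothetical (successes, failures) for x=0, x=1
print("grouped:   ", round(mcfadden(groups, grouped=True), 3))
print("individual:", round(mcfadden(groups, grouped=False), 3))
```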

An article describing the same contrast as above, but comparing logistic regression with individual binary data and Poisson models for the event rate, can be found in the Journal of Clinical Epidemiology (my thanks to Brian Stucky, based at the University of Colorado, for very useful discussion on the above, and for pointing me to this paper).

Further reading

In their most recent edition of ‘Applied Logistic Regression’, Hosmer, Lemeshow and Sturdivant give fairly detailed coverage of different R squared measures for logistic regression.

8 thoughts on “R squared in logistic regression”

Sacha Varin asks by email:

1) I have fitted an ordinal logistic regression (with only 1 nominal independent variable and 1 response variable). I am not a big fan of the pseudo R2. Anyway, if I want to interpret the Nagelkerke pseudo R2 (=0.066), I can say that the nominal variable alone explains 6.6% of the total (100%) variability of the response variable (=ordinal variable). When I write “variability of the response variable” at the end of that sentence, I wonder about the word “variability”. Does “variability” refer to the fact that the response variable can vary, in my case, between 3 levels: not important, important and very important? If not, how could we explain/interpret the phrase “variability of the response variable”?

2) Regarding the best pseudo R2 value to use, which one would you recommend? I would recommend Nagelkerke’s index over Cox & Snell’s, as the rescaling results in proper lower and upper bounds (0 and 1). McFadden’s is also a sound index, and very intuitive. However, values of McFadden’s will typically be lower than Nagelkerke’s for a given data set (and both will be lower than OLS R2 values), so Nagelkerke’s index will be more in line with what most researchers are accustomed to seeing with OLS R2. There is, however, little theoretical or substantive reason other than this for preferring Nagelkerke’s index over McFadden’s, as both perform similarly across varied multicollinearity conditions. Nagelkerke’s index, however, might be somewhat more stable at low base rate conditions. Do you agree?

My answers:

1) For linear regression, R2 is defined in terms of the amount of variance explained. As I understand it, Nagelkerke’s pseudo R2 is an adaptation of Cox and Snell’s R2. The latter is defined (in terms of the likelihood function) so that it matches R2 in the case of linear regression, the idea being that it can be generalized to other types of model. However, once we move to, say, logistic regression, as far as I know Cox & Snell’s and Nagelkerke’s R2 (and indeed McFadden’s) are no longer proportions of explained variance. Nonetheless, I think one could still describe them as proportions of explained variation in the response, since if the model were able to perfectly predict the outcome (i.e. explain variation in the outcome between individuals), then Nagelkerke’s R2 value would be 1.

2) To be honest, I don’t know if I’d recommend one over the other. As you say, they have different properties, and I’m not sure it’s possible to say one is ‘better’ than all the others. In an excellent blog post (http://statisticalhorizons.com/r2logistic), Paul Allison explains why he no longer likes Nagelkerke’s adjustment to Cox and Snell’s R2: it is ad hoc. He then describes yet another recently proposed alternative. Personally, I use McFadden’s R2 as it’s reported in Stata, but I would generally just use it as a rough indicator of how well I am predicting the outcome.
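For reference, the three measures discussed here can all be computed from the same two log-likelihoods and the sample size n. Cox & Snell's is 1 - exp(2(log L_null - log L_model)/n). Nagelkerke's divides that by its maximum possible value, 1 - exp(2 log L_null / n). The Python sketch below uses a hypothetical data set of 2,000 individual binary outcomes, with fitted probabilities 0.7 and 0.3 in two groups of 1,000. In this example McFadden's value is the lowest and Nagelkerke's the highest, in line with the point above. It is a single illustration, not a general proof.

```python
import math


def pseudo_r2(ll_model: float, ll_null: float, n: int) -> dict:
    """McFadden, Cox & Snell, and Nagelkerke pseudo R^2 from log-likelihoods."""
    mcfadden = 1 - ll_model / ll_null
    cox_snell = 1 - math.exp(2 * (ll_null - ll_model) / n)
    max_cox_snell = 1 - math.exp(2 * ll_null / n)  # upper bound of Cox & Snell
    nagelkerke = cox_snell / max_cox_snell          # rescaled to reach 1
    return {"mcfadden": mcfadden, "cox_snell": cox_snell, "nagelkerke": nagelkerke}


# Hypothetical example: 1,000 units per group, 700 vs 300 ones, fitted exactly.
n = 2000
ll_model = 2 * (700 * math.log(0.7) + 300 * math.log(0.3))
ll_null = n * math.log(0.5)  # intercept-only model: overall P(Y=1) = 0.5
results = pseudo_r2(ll_model, ll_null, n)
for name, value in results.items():
    print(name, round(value, 3))
```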

Thank you for your detailed explanation. Although I am still a beginner in this area, I have difficulty understanding even the basic concepts of odds, odds ratios, log odds ratios and the different measures of goodness of fit of a logistic model. Could you explain the meaning of these terms in simpler language, please?

This post might be helpful: Interpreting odds and odds ratios

Dear Jonathan,

Thank you very much for your response. One last question. In a multiple linear regression we can get a negative R^2. Indeed, if the chosen model fits worse than a horizontal line (null hypothesis), then R^2 is negative. Now, I have fitted an ordinal logistic regression. I get a Nagelkerke pseudo R^2 = 0.066 (6.6%). I use the validate() function from the rms R package to obtain the population-corrected index (calculated by bootstrap) and I get a negative pseudo R^2 = -0.0473 (-4.73%). How can the pseudo R^2 be negative?

In linear regression, the standard R^2 cannot be negative. The adjusted R^2 can however be negative.

If the validate function does what I think (uses bootstrapping to estimate the optimism), then I guess it is just taking the naive Nagelkerke R^2 and subtracting the estimated optimism, and the result is not guaranteed to be non-negative. I suppose the interpretation of your negative value, then, is that your model doesn’t appear to have much (if any) predictive ability.

Does McFadden’s pseudo-R2 scale? As in, is a model with R2 = 0.25 2.5x as good as a model with R2 = 0.10?

Hi there 🙂 Thank you for these elaborate responses. For my model, Stata gave me a McFadden value of 0.03.

There is little information online about how to interpret McFadden values, one of the few recommendations being that values of 0.2 to 0.4 ‘would be excellent’. Other sources say to be wary of pseudo R-squared results and not to mistake small values for bad model fit. I am confused about: 1. what the value 0.03 tells me; 2. whether this value is so bad that I should revise my model; and/or 3. whether, despite this low value, I am still able to interpret the coefficients.

Thank you in advance!

Thanks, I have fixed this.

Demystifying R-Squared and Adjusted R-Squared

Adjusted R-squared is a reliable measure of goodness of fit for multiple regression problems. Discover the math behind it and how it differs from R-squared.

R-squared (or the coefficient of determination) measures the proportion of variation in the target that is explained by a regression model. For a multiple regression model, R-squared increases or stays the same as we add new predictors to the model, even if the newly added predictors are independent of the target variable and add nothing to the model’s predictive power. Adjusted R-squared addresses this drawback by penalizing each additional predictor. It increases only if a new predictor improves the fit enough to offset that penalty.
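To see this drawback concretely, the sketch below fits ordinary least squares by solving the normal equations. It compares a model with one real predictor against the same model plus a pure-noise predictor. All data are simulated and hypothetical. R² cannot go down when the noise column is added, while adjusted R² applies the penalty. A standard result is that adding a single predictor raises adjusted R² only when that predictor's |t| statistic exceeds 1. Solving the normal equations directly is fine for a toy example, but it is less numerically stable than the QR-based solvers statistical packages typically use.

```python
import random


def solve(a, b):
    """Solve a @ beta = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    beta = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[i][j] * beta[j] for j in range(i + 1, n))
        beta[i] = (m[i][n] - tail) / m[i][i]
    return beta


def r_squared(predictors, y):
    """OLS fit of y on an intercept plus the given predictor columns; returns R^2."""
    n = len(y)
    X = [[1.0] + [col[i] for col in predictors] for i in range(n)]
    k = len(X[0])
    xtx = [[sum(row[a] * row[b] for row in X) for b in range(k)] for a in range(k)]
    xty = [sum(row[a] * yi for row, yi in zip(X, y)) for a in range(k)]
    beta = solve(xtx, xty)  # normal equations: (X'X) beta = X'y
    fitted = [sum(bj * xj for bj, xj in zip(beta, row)) for row in X]
    ybar = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - ybar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 for n observations and p predictors (plus an intercept)."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


# Hypothetical simulated data: y depends on x1 only; `noise` is unrelated to y.
rng = random.Random(0)
n = 30
x1 = [rng.gauss(0, 1) for _ in range(n)]
noise = [rng.gauss(0, 1) for _ in range(n)]
y = [2 + 1.5 * a + rng.gauss(0, 1) for a in x1]

r2_small = r_squared([x1], y)
r2_big = r_squared([x1, noise], y)
print("R^2:         ", round(r2_small, 4), "->", round(r2_big, 4))
print("adjusted R^2:", round(adjusted_r_squared(r2_small, n, 1), 4), "->",
      round(adjusted_r_squared(r2_big, n, 2), 4))
```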

Adjusted R-Squared and R-Squared Explained