Methodological quality of case series studies: an introduction to the JBI critical appraisal tool

Affiliations.

- 1 JBI, Faculty of Health and Medical Sciences, The University of Adelaide, Adelaide, SA, Australia.

- 2 The George Institute for Global Health, Telangana, India.

- 3 Australian Institute of Health Innovation, Faculty of Medicine and Health Sciences, Sydney, NSW, Australia.

- PMID: 33038125

- DOI: 10.11124/JBISRIR-D-19-00099

Introduction: Systematic reviews provide a rigorous synthesis of the best available evidence regarding a certain question. Where high-quality evidence is lacking, systematic reviewers may choose to rely on case series studies to provide information in relation to their question. However, to date there has been limited guidance on how to incorporate case series studies within systematic reviews assessing the effectiveness of an intervention, particularly with reference to assessing the methodological quality or risk of bias of these studies.

Methods: An international working group was formed to review the methodological literature regarding case series as a form of evidence for inclusion in systematic reviews. The group then developed a critical appraisal tool based on the epidemiological literature relating to bias within these studies. This was then piloted, reviewed, and approved by JBI's international Scientific Committee.

Results: The JBI critical appraisal tool for case series studies includes 10 questions addressing the internal validity and risk of bias of case series designs, particularly confounding, selection, and information bias, in addition to the importance of clear reporting.

Conclusion: In certain situations, case series designs may represent the best available evidence to inform clinical practice. The JBI critical appraisal tool for case series offers systematic reviewers an approved method to assess the methodological quality of these studies.

- Research Design*

- Systematic Reviews as Topic

Mayo Clinic Libraries

Systematic Reviews: Critical Appraisal by Study Design


Tools for Critical Appraisal of Studies

“The purpose of critical appraisal is to determine the scientific merit of a research report and its applicability to clinical decision making.” 1 Conducting a critical appraisal of a study is imperative to any well-executed evidence review, but the process can be time-consuming and difficult. 2 As one guide puts it, “a methodological approach coupled with the right tools and skills to match these methods is essential for finding meaningful results.” 3 In short, critical appraisal is a method of differentiating good research from bad research.

Critical Appraisal by Study Design (featured tools)


Systematic Reviews

- AMSTAR 2 (A MeaSurement Tool to Assess systematic Reviews): The original AMSTAR was developed to assess the risk of bias in systematic reviews that included only randomized controlled trials. AMSTAR 2 was published in 2017 and allows researchers to “identify high quality systematic reviews, including those based on non-randomised studies of healthcare interventions.” 4

- ROBIS (Risk of Bias in Systematic Reviews): ROBIS is a tool designed specifically to assess the risk of bias in systematic reviews. “The tool is completed in three phases: (1) assess relevance (optional), (2) identify concerns with the review process, and (3) judge risk of bias in the review. Signaling questions are included to help assess specific concerns about potential biases with the review.” 5

- BMJ Framework for Assessing Systematic Reviews: This framework provides a checklist for evaluating the quality of a systematic review.

- CASP (Critical Appraisal Skills Programme) Checklist for Systematic Reviews: This checklist is not a scoring system but a method of appraising systematic reviews by considering: 1. Are the results of the study valid? 2. What are the results? 3. Will the results help locally?

- CEBM (Centre for Evidence-Based Medicine) Systematic Reviews Critical Appraisal Sheet: The CEBM’s critical appraisal sheets are designed to help you appraise the reliability, importance, and applicability of clinical evidence.

- JBI Critical Appraisal Tools, Checklist for Systematic Reviews: JBI Critical Appraisal Tools help you assess the methodological quality of a study and determine the extent to which a study has addressed the possibility of bias in its design, conduct and analysis.

- NHLBI (National Heart, Lung, and Blood Institute) Study Quality Assessment of Systematic Reviews and Meta-Analyses: The NHLBI’s quality assessment tools were designed to help reviewers focus on concepts that are key to critically appraising the internal validity of a study.

Randomized Controlled Trials (RCTs)

- RoB 2 (revised tool to assess Risk of Bias in randomized trials): RoB 2 “provides a framework for assessing the risk of bias in a single estimate of an intervention effect reported from a randomized trial,” rather than the entire trial. 6

- CASP (Critical Appraisal Skills Programme) Randomised Controlled Trials Checklist: This checklist considers various aspects of an RCT that require critical appraisal: 1. Is the basic study design valid for a randomized controlled trial? 2. Was the study methodologically sound? 3. What are the results? 4. Will the results help locally?

- CONSORT (Consolidated Standards of Reporting Trials) Statement: The CONSORT checklist includes 25 items for assessing how completely randomized controlled trials are reported. “Critical appraisal of the quality of clinical trials is possible only if the design, conduct, and analysis of RCTs are thoroughly and accurately described in the report.” 7

- NHLBI (National Heart, Lung, and Blood Institute) Study Quality Assessment of Controlled Intervention Studies: The NHLBI’s quality assessment tools were designed to help reviewers focus on concepts that are key to critically appraising the internal validity of a study.

- JBI Critical Appraisal Tools Checklist for Randomized Controlled Trials: JBI Critical Appraisal Tools help you assess the methodological quality of a study and determine the extent to which a study has addressed the possibility of bias in its design, conduct and analysis.

Non-RCTs or Observational Studies

- ROBINS-I (Risk Of Bias in Non-randomized Studies – of Interventions): ROBINS-I is a “tool for evaluating risk of bias in estimates of the comparative effectiveness… of interventions from studies that did not use randomization to allocate units… to comparison groups.” 8

- NOS (Newcastle-Ottawa Scale): This tool is used primarily to evaluate and appraise case-control or cohort studies.

- AXIS (Appraisal tool for Cross-Sectional Studies): Cross-sectional studies are frequently used as an evidence base for diagnostic testing, risk factors for disease, and prevalence studies. “The AXIS tool focuses mainly on the presented [study] methods and results.” 9

- NHLBI (National Heart, Lung, and Blood Institute) Study Quality Assessment Tools for Non-Randomized Studies: The NHLBI’s quality assessment tools were designed to help reviewers focus on concepts that are key to critically appraising the internal validity of a study. The set includes:
  - Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies
  - Quality Assessment of Case-Control Studies
  - Quality Assessment Tool for Before-After (Pre-Post) Studies With No Control Group
  - Quality Assessment Tool for Case Series Studies

- Case Series Studies Quality Appraisal Checklist: Developed by the Institute of Health Economics (Canada), the checklist comprises 20 questions to assess “the robustness of the evidence of uncontrolled, [case series] studies.” 10

- Methodological Quality and Synthesis of Case Series and Case Reports: In this paper, Dr. Murad and colleagues “present a framework for appraisal, synthesis and application of evidence derived from case reports and case series.” 11

- MINORS (Methodological Index for Non-Randomized Studies): The MINORS instrument contains 12 items and was developed for evaluating the quality of observational or non-randomized studies. 12 This tool may be of particular interest to researchers who want to critically appraise surgical studies.

- JBI Critical Appraisal Tools for Non-Randomized Trials: JBI Critical Appraisal Tools help you assess the methodological quality of a study and determine the extent to which a study has addressed the possibility of bias in its design, conduct and analysis. The set includes:
  - Checklist for Analytical Cross Sectional Studies
  - Checklist for Case Control Studies
  - Checklist for Case Reports
  - Checklist for Case Series
  - Checklist for Cohort Studies

Diagnostic Accuracy

- QUADAS-2 (a revised tool for the Quality Assessment of Diagnostic Accuracy Studies): The QUADAS-2 tool “is designed to assess the quality of primary diagnostic accuracy studies… [it] consists of 4 key domains that discuss patient selection, index test, reference standard, and flow of patients through the study and timing of the index tests and reference standard.” 13

- JBI Critical Appraisal Tools Checklist for Diagnostic Test Accuracy Studies: JBI Critical Appraisal Tools help you assess the methodological quality of a study and determine the extent to which a study has addressed the possibility of bias in its design, conduct and analysis.

- STARD 2015 (Standards for the Reporting of Diagnostic Accuracy Studies): The authors of the standards note that “[e]ssential elements of [diagnostic accuracy] study methods are often poorly described and sometimes completely omitted, making both critical appraisal and replication difficult, if not impossible.” The Standards for the Reporting of Diagnostic Accuracy Studies were developed “to help… improve completeness and transparency in reporting of diagnostic accuracy studies.” 14

- CASP (Critical Appraisal Skills Programme) Diagnostic Study Checklist: This checklist considers various aspects of diagnostic test studies, including: 1. Are the results of the study valid? 2. What were the results? 3. Will the results help locally?

- CEBM (Centre for Evidence-Based Medicine) Diagnostic Critical Appraisal Sheet: The CEBM’s critical appraisal sheets are designed to help you appraise the reliability, importance, and applicability of clinical evidence.

Animal Studies

- SYRCLE’s RoB (SYstematic Review Center for Laboratory animal Experimentation’s Risk of Bias): “[I]mplementation of [SYRCLE’s RoB tool] will facilitate and improve critical appraisal of evidence from animal studies. This may… enhance the efficiency of translating animal research into clinical practice and increase awareness of the necessity of improving the methodological quality of animal studies.” 15

- ARRIVE 2.0 (Animal Research: Reporting of In Vivo Experiments): “The [ARRIVE 2.0] guidelines are a checklist of information to include in a manuscript to ensure that publications [on in vivo animal studies] contain enough information to add to the knowledge base.” 16

- Critical Appraisal of Studies Using Laboratory Animal Models: This article provides “an approach to critically appraising papers based on the results of laboratory animal experiments,” and discusses various “bias domains” in the literature that critical appraisal can identify. 17

Qualitative Research

- CEBM (Centre for Evidence-Based Medicine) Critical Appraisal of Qualitative Studies Sheet: The CEBM’s critical appraisal sheets are designed to help you appraise the reliability, importance and applicability of clinical evidence.

- CASP (Critical Appraisal Skills Programme) Qualitative Studies Checklist: This checklist considers various aspects of qualitative research studies, including: 1. Are the results of the study valid? 2. What were the results? 3. Will the results help locally?

Tool Repository

- Quality Assessment and Risk of Bias Tool Repository: Created by librarians at Duke University, this extensive listing contains over 100 commonly used risk of bias tools that can be sorted by study type.

- Latitudes Network: A library of risk of bias tools for use in evidence syntheses, with selection help and training videos.

References & Recommended Reading

1. Portney LG. Foundations of clinical research: applications to evidence-based practice. 4th ed. Philadelphia: F A Davis; 2020.

2. Fowkes FG, Fulton PM. Critical appraisal of published research: introductory guidelines. BMJ (Clinical research ed). 1991;302(6785):1136-1140.

3. Singh S. Critical appraisal skills programme. Journal of Pharmacology and Pharmacotherapeutics. 2013;4(1):76-77.

4. Shea BJ, Reeves BC, Wells G, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ (Clinical research ed). 2017;358:j4008.

5. Whiting P, Savovic J, Higgins JPT, et al. ROBIS: A new tool to assess risk of bias in systematic reviews was developed. Journal of clinical epidemiology. 2016;69:225-234.

6. Sterne JAC, Savovic J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ (Clinical research ed). 2019;366:l4898.

7. Moher D, Hopewell S, Schulz KF, et al. CONSORT 2010 Explanation and Elaboration: Updated guidelines for reporting parallel group randomised trials. Journal of clinical epidemiology. 2010;63(8):e1-37.

8. Sterne JA, Hernan MA, Reeves BC, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ (Clinical research ed). 2016;355:i4919.

9. Downes MJ, Brennan ML, Williams HC, Dean RS. Development of a critical appraisal tool to assess the quality of cross-sectional studies (AXIS). BMJ open. 2016;6(12):e011458.

10. Guo B, Moga C, Harstall C, Schopflocher D. A principal component analysis is conducted for a case series quality appraisal checklist. Journal of clinical epidemiology. 2016;69:199-207.e2.

11. Murad MH, Sultan S, Haffar S, Bazerbachi F. Methodological quality and synthesis of case series and case reports. BMJ evidence-based medicine. 2018;23(2):60-63.

12. Slim K, Nini E, Forestier D, Kwiatkowski F, Panis Y, Chipponi J. Methodological index for non-randomized studies (MINORS): development and validation of a new instrument. ANZ journal of surgery. 2003;73(9):712-716.

13. Whiting PF, Rutjes AWS, Westwood ME, et al. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Annals of internal medicine. 2011;155(8):529-536.

14. Bossuyt PM, Reitsma JB, Bruns DE, et al. STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. BMJ (Clinical research ed). 2015;351:h5527.

15. Hooijmans CR, Rovers MM, de Vries RBM, Leenaars M, Ritskes-Hoitinga M, Langendam MW. SYRCLE's risk of bias tool for animal studies. BMC medical research methodology. 2014;14:43.

16. Percie du Sert N, Ahluwalia A, Alam S, et al. Reporting animal research: Explanation and elaboration for the ARRIVE guidelines 2.0. PLoS biology. 2020;18(7):e3000411.

17. O'Connor AM, Sargeant JM. Critical appraisal of studies using laboratory animal models. ILAR journal. 2014;55(3):405-417.

18. Kolaski K, Logan LR, Ioannidis JP. Guidance to best tools and practices for systematic reviews. British Journal of Pharmacology. 2024;181(1):180-210.


- Last Updated: May 10, 2024 7:59 AM

- URL: https://libraryguides.mayo.edu/systematicreviewprocess


Methodological quality and synthesis of case series and case reports

Volume 23, Issue 2


Mohammad Hassan Murad 1 (ORCID: http://orcid.org/0000-0001-5502-5975), Shahnaz Sultan 2, Samir Haffar 3, Fateh Bazerbachi 4

- 1 Evidence-Based Practice Center, Mayo Clinic, Rochester, Minnesota, USA

- 2 Division of Gastroenterology, Hepatology, and Nutrition, University of Minnesota, Center for Chronic Diseases Outcomes Research, Minneapolis Veterans Affairs Healthcare System, Minneapolis, Minnesota, USA

- 3 Digestive Center for Diagnosis and Treatment, Damascus, Syrian Arab Republic

- 4 Department of Gastroenterology and Hepatology, Mayo Clinic, Rochester, Minnesota, USA

- Correspondence to Dr Mohammad Hassan Murad, Evidence-Based Practice Center, Mayo Clinic, Rochester, MN 55905, USA; murad.mohammad{at}mayo.edu

https://doi.org/10.1136/bmjebm-2017-110853


- epidemiology

In 1904, Dr James Herrick evaluated a 20-year-old patient from Grenada who was studying in Chicago and suffered from anaemia and a multisystem illness. The patient was found to have ‘freakish’ elongated red cells that resembled a crescent or a sickle. Dr Herrick concluded that the red cells were not artefacts because their appearance was maintained regardless of how the smear slide was prepared. He followed the patient, who subsequently received care from other physicians, until 1907, and considered whether the illness was syphilis or a parasite from the tropics. Then in 1910, in a published case report, he concluded that this presentation strongly suggested a previously unrecognised change in the composition of the corpuscle itself. 1 Sickle cell disease became a diagnosis thereafter.

Case reports and case series have profoundly influenced the medical literature and continue to advance our knowledge today. In 1985, the American Medical Association reprinted 51 papers from its journal that had significantly changed the science and practice of medicine over the past 150 years, and five of these papers were case reports. 2 However, concerns about weak inferences and the high likelihood of bias associated with such reports have meant that little attention has been devoted to developing frameworks for approaching, appraising, synthesising and applying evidence derived from case reports/series. Nevertheless, such observations remain the bread and butter of learning by pattern recognition and are integral to advancing medical knowledge.

Guidance on how to write a case report is available (ie, a reporting guideline). The Case Report (CARE) guidelines 3 were developed following a three-phase consensus process and provide a 13-item checklist that can help researchers publish a complete and meaningful exposition of medical information. This checklist encourages the explicit presentation of patient information, clinical findings, timeline, diagnostic assessment, therapeutic interventions, follow-up and outcomes. 3 Yet systematic reviewers appraising the evidence for decision-makers also require tools to assess the methodological quality (risk of bias) of this evidence.

In this guide, we present a framework to evaluate the methodological quality of case reports/series and synthesise their results, which is particularly important when conducting a systematic review of a body of evidence that consists primarily of uncontrolled clinical observations.

Definitions

In the biomedical literature, a case report is the description of the clinical course of one individual, which may include particular exposures, symptoms, signs, interventions or outcomes. A case report is the smallest publishable unit in the literature, whereas a case series aggregates individual cases in one publication. 4

If a case series is prospective, differentiating it from a single-arm uncontrolled cohort study becomes difficult. In one clinical practice guideline, it was proposed that studies without internal comparisons can be labelled as case series unless they explicitly report having a protocol before commencement of data collection, a definition of inclusion and exclusion criteria, a standardised follow-up and clear reporting of the number of excluded patients and those lost to follow-up. 6

Evaluating methodological quality

Pierson 7 proposed an approach to evaluating the validity of a case report based on five components: documentation, uniqueness, objectivity, interpretation and educational value, resulting in a score with a maximum of 10 (a score above 5 was suggested to indicate a valid case report). This approach, however, was rarely used in subsequent work and seems to conflate methodological quality with other constructs. For case reports of adverse drug reactions, other systems classify an association as definite, probable, possible or doubtful based on leading questions. 8 9 These questions are derived from the causality criteria established in 1965 by the English epidemiologist Austin Bradford Hill. 10 Lastly, we have adapted the Newcastle-Ottawa Scale 11 for cohort and case–control studies by removing items that relate to comparability and adjustment (which are not relevant to non-comparative studies) and retaining items that focus on selection, representativeness of cases and ascertainment of outcomes and exposure. This tool has been applied in several published systematic reviews with good inter-rater agreement. 12–16
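The adverse-drug-reaction systems mentioned above end by mapping a score to a causality category. The sketch below illustrates only that final step for the Naranjo algorithm (cited as 8 above), assuming its commonly cited cut-offs: a total of 9 or more is definite, 5 to 8 probable, 1 to 4 possible, and 0 or less doubtful. The per-question points are supplied by the appraiser; the function names are hypothetical and the question weights themselves are not encoded here.

```python
from typing import Sequence


def naranjo_total(points: Sequence[int]) -> int:
    """Sum the points the appraiser assigned to each Naranjo question.

    Hypothetical helper: the per-question weights are not encoded here,
    so callers pass the points they have already scored.
    """
    return sum(points)


def naranjo_category(total: int) -> str:
    """Map a Naranjo total score to a causality category.

    Assumes the commonly cited cut-offs:
    >= 9 definite, 5-8 probable, 1-4 possible, <= 0 doubtful.
    """
    if total >= 9:
        return "definite"
    if total >= 5:
        return "probable"
    if total >= 1:
        return "possible"
    return "doubtful"


# Hypothetical points for a report in which the reaction followed the drug,
# resolved on withdrawal and recurred on rechallenge.
example_points = [1, 2, 1, 2, 0, 0, 0, 0, 0, 1]
print(naranjo_total(example_points), naranjo_category(naranjo_total(example_points)))
# prints: 7 probable
```

Keeping the summing and the categorisation separate makes it easy to audit which questions drove the final label.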

Proposed tool

The previous criteria from Pierson, 7 Bradford Hill 10 and the Newcastle-Ottawa Scale modifications 11 converge into eight items that can be categorised into four domains: selection, ascertainment, causality and reporting. The eight items, with leading explanatory questions, are summarised in table 1.


Table 1. Tool for evaluating the methodological quality of case reports and case series

For example, a study that explicitly describes all the cases who presented to a medical centre over a certain period of time would satisfy the selection domain. In contrast, a study that reports on several individuals with an unclear selection approach leaves the reader uncertain as to whether this is the whole experience of the researchers, and suggests possible selection bias. For the domain of ascertainment, self-report (of the exposure or the outcome) is less reliable than ascertainment using administrative and billing codes, which in turn is less reliable than clinical records. For the domain of causality, inference is stronger in a case report of an adverse drug reaction that resolved with cessation of the drug and recurred after reintroduction of the drug. Lastly, for the domain of reporting, a case report described in sufficient detail may allow readers to apply the evidence derived from the report in their practice, whereas an inadequately reported case will likely be unhelpful in the course of clinical care.

We suggest using this tool in systematic reviews of case reports/series. One option for summarising the results of this tool is to sum the scores of the eight binary responses into an aggregate score. A better option is to avoid an aggregate score, because a numeric representation of methodological quality may not be appropriate when one or two questions are deemed more critical to the validity of a report than the others. Therefore, we suggest making an overall judgement about methodological quality based on the questions deemed most critical in the specific clinical scenario.
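The difference between the two summary options can be made concrete. The sketch below assumes the eight items are recorded as yes/no answers; because the content of table 1 is not reproduced here, the item keys (and how many items each domain holds) are hypothetical placeholders grouped under the four domains named in the text. It computes the aggregate score and, separately, a judgement driven only by the items a reviewer has designated as critical.

```python
from typing import Dict, Iterable, List, Mapping

# Hypothetical item keys grouped under the four domains named in the text;
# the split of the eight items across domains is an assumption.
ITEMS: Dict[str, List[str]] = {
    "selection": ["whole_experience"],
    "ascertainment": ["exposure_ascertained", "outcome_ascertained"],
    "causality": [
        "alternatives_ruled_out",
        "challenge_rechallenge",
        "dose_response",
        "adequate_follow_up",
    ],
    "reporting": ["sufficient_detail"],
}
ALL_ITEMS = [item for items in ITEMS.values() for item in items]


def aggregate_score(answers: Mapping[str, bool]) -> int:
    """Option 1: count 'yes' answers across all eight items (0 to 8)."""
    return sum(1 for item in ALL_ITEMS if answers[item])


def overall_judgement(answers: Mapping[str, bool], critical: Iterable[str]) -> str:
    """Option 2: judge only on the items deemed critical for this scenario;
    a 'no' on any critical item flags a serious concern."""
    critical_items = list(critical)
    unknown = set(critical_items) - set(ALL_ITEMS)
    if unknown:
        raise ValueError(f"unknown items: {sorted(unknown)}")
    if all(answers[item] for item in critical_items):
        return "acceptable"
    return "serious concern"


# A report that satisfies seven of eight items but fails the one the
# reviewer considers critical (here, hypothetically, selection).
answers = {item: True for item in ALL_ITEMS}
answers["whole_experience"] = False
print(aggregate_score(answers))                          # 7
print(overall_judgement(answers, ["whole_experience"]))  # serious concern
```

A score of 7 out of 8 looks reassuring, yet in this hypothetical scenario the report fails the one item that matters most, which is the reason the text gives for preferring a judgement over a sum.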

Synthesis of case reports/series

A single patient case report does not allow the estimation of an effect size and would only provide descriptive or narrative results. Case series of more than one patient may allow narrative or quantitative synthesis.

Narrative synthesis

A systematic review of cases of the rare syndrome of lipodystrophy was able to suggest core and supportive clinical features and narratively summarised data on available treatment approaches. 17 Another systematic review, of 172 cases of the infrequently encountered glycogenic hepatopathy, was able to characterise for the first time the patterns of liver enzymes and hepatic injury in this disease. 18

Quantitative synthesis

Quantitative analysis of non-comparative series does not produce relative association measures such as ORs or relative risks, but it can provide estimates of prevalence or event rates in the form of a proportion (with associated precision). Proportions can be pooled using fixed-effect or random-effects models with any of the available meta-analysis software. For example, a meta-analysis of case series of patients presenting with aortic transection showed that mortality was significantly lower in patients who underwent endovascular repair, followed by open repair and non-operative management (9%, 19% and 46%, respectively, P<0.01). 19
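As a rough illustration of pooling proportions, the sketch below applies inverse-variance weighting to raw proportions, first with a fixed-effect model and then with a DerSimonian-Laird random-effects model. DerSimonian-Laird is one common estimator chosen here for brevity, not necessarily what the cited meta-analysis used, and the event counts are hypothetical. Each series contributes p = events/n with binomial variance p(1 - p)/n.

```python
import math
from typing import NamedTuple, Sequence, Tuple


class Pooled(NamedTuple):
    estimate: float
    ci_low: float
    ci_high: float
    tau2: float  # between-study variance (0 for the fixed-effect model)


def proportion_with_variance(events: int, n: int) -> Tuple[float, float]:
    """Proportion and its binomial variance p(1 - p) / n."""
    p = events / n
    return p, p * (1 - p) / n


def pool_inverse_variance(estimates: Sequence[float], variances: Sequence[float],
                          random_effects: bool = True) -> Pooled:
    """Inverse-variance pooling; DerSimonian-Laird tau^2 when random_effects is True.

    A variance of zero (0 or n events) raises ZeroDivisionError: raw
    proportions near 0 or 1 are exactly the problem the text turns to next.
    """
    weights = [1 / v for v in variances]
    sum_w = sum(weights)
    fixed = sum(w * y for w, y in zip(weights, estimates)) / sum_w
    tau2 = 0.0
    if random_effects and len(estimates) > 1:
        # Cochran's Q and the DerSimonian-Laird moment estimator of tau^2.
        q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, estimates))
        c = sum_w - sum(w * w for w in weights) / sum_w
        tau2 = max(0.0, (q - (len(estimates) - 1)) / c)
    star = [1 / (v + tau2) for v in variances]
    estimate = sum(w * y for w, y in zip(star, estimates)) / sum(star)
    se = math.sqrt(1 / sum(star))
    return Pooled(estimate, estimate - 1.96 * se, estimate + 1.96 * se, tau2)


# Hypothetical case series: (events, patients)
series = [(3, 30), (10, 50), (12, 40)]
props, variances = zip(*(proportion_with_variance(e, n) for e, n in series))
print(pool_inverse_variance(props, variances, random_effects=False))
print(pool_inverse_variance(props, variances))
```

When the series disagree more than sampling error allows, tau^2 becomes positive, the weights even out, and the random-effects interval widens accordingly.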

A common challenge, however, arises when proportions are very large or very small (close to 0 or to 1). In this situation, the variance of the proportion becomes very small, leading to an inappropriately large weight in the meta-analysis. One way to overcome this challenge is to transform the proportion to a variable that is not constrained to the 0–1 range and has an approximately normal distribution, conduct the meta-analysis, and then transform the pooled estimate back to a proportion. 20 This is done using the logit transformation or the Freeman-Tukey double arcsine transformation, 21 with the latter often being preferred. 20
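The transform, pool and back-transform steps can be sketched as follows. This is a minimal illustration under stated assumptions, not a reference implementation: it uses a fixed-effect inverse-variance pool for brevity, the logit variance 1/x + 1/(n - x) (undefined at 0 or n events without a continuity correction), the Freeman-Tukey variance 1/(n + 0.5), and Miller's back-transformation evaluated at the harmonic mean of the sample sizes, which is one common convention for returning a pooled value to the proportion scale. The counts are hypothetical.

```python
import math
from typing import Sequence, Tuple


def logit_transform(events: int, n: int) -> Tuple[float, float]:
    """Logit of the proportion and its approximate variance.
    Undefined for 0 or n events unless a continuity correction is added."""
    p = events / n
    return math.log(p / (1 - p)), 1 / events + 1 / (n - events)


def inverse_logit(y: float) -> float:
    return 1 / (1 + math.exp(-y))


def ft_transform(events: int, n: int) -> Tuple[float, float]:
    """Freeman-Tukey double arcsine value and its approximate variance.
    Defined even for 0 or n events."""
    t = (math.asin(math.sqrt(events / (n + 1)))
         + math.asin(math.sqrt((events + 1) / (n + 1))))
    return t, 1 / (n + 0.5)


def inverse_ft(t: float, n: float) -> float:
    """Miller's (1978) inversion of the double arcsine for sample size n."""
    s = math.sin(t)
    inner = 1 - (s + (s - 1 / s) / n) ** 2
    root = math.sqrt(max(inner, 0.0))
    return 0.5 * (1 - math.copysign(root, math.cos(t)))


def pooled_proportion_ft(series: Sequence[Tuple[int, int]]) -> float:
    """Fixed-effect pool on the double-arcsine scale, back-transformed
    at the harmonic mean sample size (an assumed convention)."""
    values = [ft_transform(e, n) for e, n in series]
    weights = [1 / v for _, v in values]
    t_pooled = sum(w * t for w, (t, _) in zip(weights, values)) / sum(weights)
    harmonic_n = len(series) / sum(1 / n for _, n in series)
    return inverse_ft(t_pooled, harmonic_n)


# Hypothetical series, including one with zero events (where the logit fails).
print(round(pooled_proportion_ft([(0, 40), (2, 35), (1, 60)]), 4))
```

Note that the double-arcsine variance depends only on n, so a series with zero events still receives a finite, sensible weight.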

Another type of quantitative analysis that may be used is regression. A meta-analysis of 47 published cases of hypocalcaemia and cardiac dysfunction used univariate linear regression analysis to demonstrate that both QT interval and left ventricular ejection fraction were significantly correlated with corrected total serum calcium level. 22 Meta-regression, a regression in which the unit of analysis is a study rather than a patient, can also be used to synthesise case series and control for study-level confounders. A meta-regression analysis of uncontrolled series of patients with uveal melanoma treated with proton beam therapy showed that this treatment was associated with better outcomes than brachytherapy. 23 It is very important, however, to recognise that meta-regression results can be severely affected by ecological bias: an association observed between study-level averages may not hold for individual patients.
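To make the idea of a study-level regression concrete, the sketch below fits a fixed-effect meta-regression by weighted least squares, weighting each study by the inverse of its variance. It is a simplification: a random-effects meta-regression would add a between-study variance to each weight, and the data (event proportions against a hypothetical mean patient age per series) are invented for illustration.

```python
import math
from typing import NamedTuple, Sequence


class MetaRegression(NamedTuple):
    intercept: float
    slope: float
    slope_se: float


def fixed_effect_meta_regression(effects: Sequence[float],
                                 variances: Sequence[float],
                                 covariate: Sequence[float]) -> MetaRegression:
    """Weighted least squares of study effects on one study-level covariate,
    with inverse-variance weights treated as known (fixed-effect model)."""
    w = [1 / v for v in variances]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, covariate)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, covariate))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, covariate, effects))
    if sxx == 0:
        raise ValueError("covariate does not vary across studies")
    slope = sxy / sxx
    # With known weights, Var(slope) = 1 / sum(w * (x - x_bar)^2).
    return MetaRegression(y_bar - slope * x_bar, slope, math.sqrt(1 / sxx))


# Hypothetical: event proportion per series against mean patient age per series.
effects = [0.10, 0.18, 0.25, 0.31]
variances = [0.003, 0.004, 0.005, 0.006]
mean_age = [45.0, 52.0, 60.0, 66.0]
fit = fixed_effect_meta_regression(effects, variances, mean_age)
print(fit)
```

Each data point here is a series average, so even a clear positive slope would not show that older patients within any one series fare worse; that gap is the ecological bias described above.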

From evidence to decision

Several authors have described various important reasons to publish case reports/series (table 2). 7 24 25

Table 2. Role of case reports/series in the medical literature

It is paramount to recognise that a systematic review and meta-analysis of case reports/series should not be placed at the top of the hierarchy in a pyramid that depicts validity. 26 The certainty of evidence derived from a meta-analysis is contingent on the design of the included studies and their risk of bias, as well as other factors such as imprecision, indirectness, inconsistency and likelihood of publication bias. 27 Commonly, the certainty of evidence derived from case series/reports will be very low. Nevertheless, inferences from such reports can be used for decision-making. In the example of the case series of aortic transection showing lower mortality with endovascular repair, a guideline recommendation was made stating ‘We suggest that endovascular repair be performed preferentially over open surgical repair or non-operative management’. This was graded as a weak recommendation based on low certainty evidence. 28 The strength of this recommendation acknowledged that it might not apply universally and that variability in decision-making was expected. The certainty of evidence rating implied that future research would likely yield different results that may change the recommendation. 28

The Grading of Recommendations, Assessment, Development and Evaluation (GRADE) approach clearly separates the certainty of evidence from the strength of a recommendation. This separation allows decision-making based on lower levels of evidence. For example, despite low certainty evidence (derived from case series) regarding the association between aspirin and Reye’s syndrome in febrile children, a strong recommendation for using acetaminophen over aspirin is possible. 29 The GRADE literature also describes five paradigmatic situations in which a strong recommendation can be made based on low-quality evidence. 30 One of these is a life-threatening condition; an example is hyperbaric oxygen therapy for purpura fulminans, which is supported only by case reports. 31

Guideline developers and decision-makers often struggle when dealing with case reports/case series. On occasion, they ignore such evidence and focus the scope of guidelines on areas with higher quality evidence. Sometimes they label recommendations based on case reports as expert opinion. 32 We propose an approach to evaluating the methodological quality of case reports/series based on the domains of selection, ascertainment, causality and reporting, and provide signalling questions to aid evidence-based practitioners and systematic reviewers in their assessment. We suggest incorporating case reports/series in decision-making based on the GRADE approach when no higher level of evidence is available.

In this guide, we have made the case for publishing case reports/series and proposed synthesis of their results in systematic reviews to facilitate using this evidence in decision-making. We have proposed a tool that can be used to evaluate the methodological quality in systematic reviews that examine case reports and case series.

- Gagnier JJ ,

- Altman DG , et al

- Grimes DA ,

- Abu-Zidan FM ,

- Schünemann HJ ,

- Naranjo CA ,

- Sellers EM , et al

- 9. The World Health Organization-Uppsala Monitoring Centre. The use of the WHO-UMC system for standardised case causality assessment. https://www.who-umc.org/media/2768/standardised-case-causality-assessment.pdf (accessed 20 Sep 2017).

- O’Connell D , et al

- Bazerbachi F ,

- Prokop L , et al

- Vargas EJ , et al

- Watt KD , et al

- Szarka LA , et al

- Hussain MT , et al

- Farah W , et al

- Leise MD , et al

- Malgor R , et al

- Barendregt JJ ,

- Lee YY , et al

- Freeman MF ,

- Newman DB ,

- Fidahussein SS ,

- Kashiwagi DT , et al

- Schild SE , et al

- Vandenbroucke JP

- Alsawas M , et al

- Matsumura JS ,

- Mitchell RS , et al

- Guyatt GH ,

- Vist GE , et al

- Domecq JP ,

- Murad MH , et al

- Mestrovic J , et al

- Alvarez-Villalobos N ,

- Shah R , et al

- Conboy EE ,

- Mounajjed T , et al

- Gottlieb MS

- Coodin FJ ,

- Uchida IA ,

- Goldfinger SE

- Lennox BR ,

- Jones PB , et al

Contributors MHM drafted the paper and all coauthors critically revised the manuscript. All the authors contributed to conceive the idea and approved the final submitted version.

Competing interests None declared.

Provenance and peer review Not commissioned; externally peer reviewed.


CASP Checklists


Critical Appraisal Checklists

We offer a number of free downloadable checklists to help you more easily and accurately perform critical appraisal across a number of different study types.

The CASP checklists are easy to understand, but if you need further guidance on how they are structured, take a look at our guide on how to use our CASP checklists.

CASP Checklist: Systematic Reviews with Meta-Analysis of Observational Studies

CASP Checklist: Systematic Reviews with Meta-Analysis of Randomised Controlled Trials (RCTs)

CASP Randomised Controlled Trial Checklist


CASP Systematic Review Checklist

CASP Qualitative Studies Checklist

CASP Cohort Study Checklist

CASP Diagnostic Study Checklist

CASP Case Control Study Checklist

CASP Economic Evaluation Checklist

CASP Clinical Prediction Rule Checklist

Checklist Archive

- CASP Randomised Controlled Trial Checklist 2018 fillable form

- CASP Randomised Controlled Trial Checklist 2018


Copyright 2024 CASP UK - OAP Ltd. All rights reserved.


Case Series Studies Quality Appraisal Tool


Case Series Studies Quality Appraisal Checklist

The checklist for quality appraisal of case series studies was developed using a modified Delphi technique by a group of researchers at the Institute of Health Economics (IHE), in collaboration with researchers from two other health technology assessment (HTA) agencies in Australia and Spain.

Checklist and Instructions

Current as of March 2nd, 2016.

- Case Series Studies Checklist (MS Word Doc)

- Case Series Studies Checklist with Instructions for Use (MS Word Doc)


Strengthening the reporting of observational studies in epidemiology

STROBE Checklists

- STROBE Checklist: cohort, case-control, and cross-sectional studies (combined) (PDF, Word)

- STROBE Checklist (fillable): cohort, case-control, and cross-sectional studies (combined) (PDF, Word)

- STROBE Checklist: cohort studies (PDF, Word)

- STROBE Checklist: case-control studies (PDF, Word)

- STROBE Checklist: cross-sectional studies (PDF, Word)

- STROBE Checklist: conference abstracts (PDF)


- Mil Med Res

Methodological quality (risk of bias) assessment tools for primary and secondary medical studies: what are they and which is better?

1 Center for Evidence-Based and Translational Medicine, Zhongnan Hospital, Wuhan University, 169 Donghu Road, Wuchang District, Wuhan, 430071 Hubei China

Yun-Yun Wang

2 Department of Evidence-Based Medicine and Clinical Epidemiology, The Second Clinical College, Wuhan University, Wuhan, 430071 China

Zhi-Hua Yang

Xian-Tao Zeng

3 Center for Evidence-Based and Translational Medicine, Wuhan University, Wuhan, 430071 China

4 Global Health Institute, Wuhan University, Wuhan, 430072 China

Associated Data

The data and materials used during the current review are all available in this review.

Methodological quality (risk of bias) assessment is an important step before a study is used. Therefore, accurately judging the study type is the first priority, and choosing the proper tool is also important. In this review, we introduced methodological quality assessment tools for randomized controlled trials (including individual and cluster), animal studies, non-randomized interventional studies (including follow-up studies, controlled before-and-after studies, before-after/pre-post studies, uncontrolled longitudinal studies, and interrupted time series studies), cohort studies, case-control studies, cross-sectional studies (including analytical and descriptive), observational case series and case reports, comparative effectiveness research, diagnostic studies, health economic evaluations, prediction studies (including predictor finding studies, prediction model impact studies, and prognostic prediction model studies), qualitative studies, outcome measurement instruments (including patient-reported outcome measure development, content validity, structural validity, internal consistency, cross-cultural validity/measurement invariance, reliability, measurement error, criterion validity, hypotheses testing for construct validity, and responsiveness), systematic reviews and meta-analyses, and clinical practice guidelines. Readers of our review can use it to distinguish the types of medical studies and choose appropriate tools. In short, comprehensive mastery of the relevant knowledge and regular practice are basic requirements for correctly assessing methodological quality.

In the twentieth century, pioneering work by distinguished professors Cochrane A [ 1 ], Guyatt GH [ 2 ], and Chalmers IG [ 3 ] led us into the evidence-based medicine (EBM) era. In this era, knowing how to search for, critically appraise, and use the best evidence is important. Moreover, systematic review and meta-analysis is the most widely used tool for scientifically summarizing primary data [ 4 – 6 ] and, according to the Institute of Medicine (IOM), also the basis for developing clinical practice guidelines [ 7 ]. Hence, when performing a systematic review and/or meta-analysis, assessing the methodological quality of the included primary studies is important; likewise, the methodological quality of the review itself should be assessed before it is used. Quality includes internal and external validity, while methodological quality usually refers to internal validity [ 8 , 9 ]. The Cochrane Collaboration refers to internal validity as “risk of bias (RoB)” [ 9 ].

There are three types of tools: scales, checklists, and items [ 10 , 11 ]. In 2015, Zeng et al. [ 11 ] investigated methodological quality tools for randomized controlled trials (RCTs), non-randomized clinical intervention studies, cohort studies, case-control studies, cross-sectional studies, case series, diagnostic accuracy studies (also called “diagnostic test accuracy (DTA)” studies), animal studies, systematic reviews and meta-analyses, and clinical practice guidelines (CPGs). Since then, pre-existing tools may have been revised and new tools may have emerged; research methods have also developed in recent years. Hence, it is necessary to systematically investigate commonly used tools for assessing methodological quality, especially those for economic evaluations, clinical prediction rules/models, and qualitative studies. Therefore, this narrative review presents methodological quality (including “RoB”) assessment tools for primary and secondary medical studies up to December 2019, and Table 1 presents their basic characteristics. We hope this review can help the producers, users, and researchers of evidence.

The basic characteristics of the included methodological quality (risk of bias) assessment tools

AMSTAR A measurement tool to assess systematic reviews, AHRQ Agency for healthcare research and quality, AXIS Appraisal tool for cross-sectional studies, CASP Critical appraisal skills programme, CAMARADES The collaborative approach to meta-analysis and review of animal data from experimental studies, COSMIN Consensus-based standards for the selection of health measurement instruments, DSU Decision support unit, EPOC The effective practice and organisation of care group, GRACE The good research for comparative effectiveness initiative, IHE Institute of health economics (Canada), JBI Joanna Briggs Institute, MINORS Methodological index for non-randomized studies, NOS Newcastle-Ottawa scale, NMA Network meta-analysis, NIH National institutes of health, NICE National institute for clinical excellence, PEDro Physiotherapy evidence database, PROBAST The prediction model risk of bias assessment tool, QUADAS Quality assessment of diagnostic accuracy studies, QUIPS Quality in prognosis studies, RoB Risk of bias, ROBINS-I Risk of bias in non-randomised studies - of interventions, ROBIS Risk of bias in systematic review, SYRCLE Systematic review center for laboratory animal experimentation, STAIR Stroke therapy academic industry roundtable, SIGN The Scottish intercollegiate guidelines network

Tools for intervention studies

Randomized controlled trial (individual or cluster)

The first RCT was designed by Hill BA (1897–1991), and the RCT has remained the “gold standard” of experimental study design ever since [ 12 , 13 ]. Nowadays, the Cochrane risk of bias tool for randomized trials (introduced in 2008 and revised on March 20, 2011), known as “RoB”, is the most commonly recommended tool for RCTs [ 9 , 14 ]. A revised version of this tool for assessing RoB in randomized trials (RoB 2.0), first introduced in 2016, was published on August 22, 2019 [ 15 ]. The RoB 2.0 tool is suitable for individually randomized, parallel-group, and cluster-randomized trials, and can be found on its dedicated website https://www.riskofbias.info/welcome/rob-2-0-tool . The RoB 2.0 tool consists of five bias domains and differs substantially from the original Cochrane RoB tool (Table S 1 A-B presents the major items of both versions).
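How RoB 2.0 domain judgments roll up into an overall judgment can be sketched as follows. This is a simplified reading of the published guidance, assuming the five domains are bias arising from the randomization process, due to deviations from intended interventions, due to missing outcome data, in measurement of the outcome, and in selection of the reported result. The guidance also lets reviewers rate a trial “high” when several domains have “some concerns”; that judgment call is deliberately not automated here, and the function name is hypothetical.

```python
# Simplified RoB 2.0 overall-judgment rule. The reviewer's option to escalate
# several "some concerns" domains to "high" is intentionally left out.
ROB2_DOMAINS = (
    "randomization process",
    "deviations from intended interventions",
    "missing outcome data",
    "measurement of the outcome",
    "selection of the reported result",
)
LEVELS = ("low", "some concerns", "high")


def rob2_overall(judgments: dict[str, str]) -> str:
    """Return the overall risk-of-bias level from five domain judgments."""
    missing = [d for d in ROB2_DOMAINS if d not in judgments]
    if missing:
        raise ValueError(f"missing domains: {missing}")
    values = [judgments[d] for d in ROB2_DOMAINS]
    for value in values:
        if value not in LEVELS:
            raise ValueError(f"unexpected level {value!r}")
    if "high" in values:  # any high-risk domain makes the trial high risk
        return "high"
    if "some concerns" in values:  # no high domain, at least one concern
        return "some concerns"
    return "low"  # every domain judged low


if __name__ == "__main__":
    trial = dict.fromkeys(ROB2_DOMAINS, "low")
    trial["missing outcome data"] = "some concerns"
    print(rob2_overall(trial))  # some concerns
```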

The Physiotherapy Evidence Database (PEDro) scale is a specialized methodological assessment tool for RCTs in physiotherapy [ 16 , 17 ]; it covers 11 items (Table S 1 C) and can be found at http://www.pedro.org.au/english/downloads/pedro-scale/ . The Effective Practice and Organisation of Care (EPOC) Group is a Cochrane Review Group that has also developed a tool (the “EPOC RoB Tool”) for randomized trials of complex interventions. This tool has 9 items (Table S 1 D) and can be found at https://epoc.cochrane.org/resources/epoc-resources-review-authors . The Critical Appraisal Skills Programme (CASP) is part of the Oxford Centre for Triple Value Healthcare Ltd. (3V) portfolio, which provides resources and learning and development opportunities to support the development of critical appraisal skills in the UK ( http://www.casp-uk.net/ ) [ 18 – 20 ]. The CASP checklist for RCTs consists of three sections involving 11 items (Table S 1 E). The National Institutes of Health (NIH) has also developed a quality assessment tool for controlled intervention studies (Table S 1 F) to assess the methodological quality of RCTs ( https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools ).
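To make the PEDro item count concrete: of the 11 items, item 1 (eligibility criteria specified) is conventionally not counted toward the total, so scores run from 0 to 10. The sketch below assumes answers are supplied in item order as booleans; the function name is hypothetical.

```python
def pedro_total(items: list[bool]) -> int:
    """Return the PEDro total score (0-10) from 11 yes/no item answers.

    items[0] is item 1 (eligibility criteria), which is excluded from the
    total; items[1:] are items 2-11, each worth one point when satisfied.
    """
    if len(items) != 11:
        raise ValueError(f"expected 11 items, got {len(items)}")
    return sum(1 for satisfied in items[1:] if satisfied)


if __name__ == "__main__":
    answers = [True] + [True] * 6 + [False] * 4
    print(pedro_total(answers))  # 6
```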

The Joanna Briggs Institute (JBI) is an independent, international, not-for-profit research and development organization based in the Faculty of Health and Medical Sciences at the University of Adelaide, South Australia ( https://joannabriggs.org/ ). It has developed many critical appraisal checklists addressing the feasibility, appropriateness, meaningfulness and effectiveness of healthcare interventions. Table S 1 G presents the JBI critical appraisal checklist for RCTs, which includes 13 items.

The Scottish Intercollegiate Guidelines Network (SIGN) was established in 1993 ( https://www.sign.ac.uk/ ). Its objective is to improve the quality of health care for patients in Scotland by reducing variations in practice and outcomes, through developing and disseminating national clinical guidelines containing recommendations for effective practice based on current evidence. SIGN has also developed critical appraisal checklists for assessing the methodological quality of different study types, including RCTs (Table S 1 H).

In addition, the Jadad Scale [ 21 ], Modified Jadad Scale [ 22 , 23 ], Delphi List [ 24 ], Chalmers Scale [ 25 ], National Institute for Clinical Excellence (NICE) methodology checklist [ 11 ], Downs & Black checklist [ 26 ], and other tools summarized by West et al. in 2002 [ 27 ] are not commonly used or recommended nowadays.

Animal study

Before clinical trials begin, the safety and effectiveness of new drugs are usually tested in animal models [ 28 ]; animal studies are therefore considered preclinical research and are of considerable importance [ 29 , 30 ]. Likewise, the methodological quality of animal studies also needs to be assessed [ 30 ]. In 1999, the initial Stroke Therapy Academic Industry Roundtable (STAIR) recommended criteria for assessing the quality of stroke animal studies [ 31 ]; this tool is also called “STAIR”. In 2009, the STAIR Group updated its criteria and developed “Recommendations for Ensuring Good Scientific Inquiry” [ 32 ]. In addition, in 2004 Macleod et al. [ 33 ] proposed a 10-point tool based on STAIR to assess the methodological quality of animal studies, which is also called “CAMARADES” (the Collaborative Approach to Meta-Analysis and Review of Animal Data from Experimental Studies); the “S” originally stood for “Stroke” and now stands for “Studies” ( http://www.camarades.info/ ). In the CAMARADES tool, each item scores at most one point, so the maximum total score is 10 points (Table S 1 J).
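The CAMARADES scoring rule described above (ten items, one point per satisfied item, maximum 10) amounts to a simple count. In this sketch the item keys are placeholders rather than the checklist's actual wording, and the function name is hypothetical.

```python
def camarades_score(items: dict[str, bool]) -> int:
    """Count satisfied items on a 10-item, one-point-per-item checklist."""
    if len(items) != 10:
        raise ValueError(f"expected 10 items, got {len(items)}")
    return sum(1 for met in items.values() if met)


if __name__ == "__main__":
    # Placeholder keys item_1..item_10; items 1-7 met, items 8-10 not met.
    study = {f"item_{i}": i <= 7 for i in range(1, 11)}
    print(f"{camarades_score(study)}/10")  # 7/10
```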

In 2008, the Systematic Review Center for Laboratory animal Experimentation (SYRCLE) was established in the Netherlands, and in 2014 this team developed and released an RoB tool for animal intervention studies, SYRCLE’s RoB tool, based on the original Cochrane RoB Tool [ 34 ]. The tool contains 10 items and has become the most recommended tool for assessing the methodological quality of animal intervention studies (Table S 1 I).

Non-randomised studies

In clinical research, RCTs are not always feasible [ 35 ]; therefore, non-randomized designs remain important. In a non-randomised study (also called a quasi-experimental study), investigators control the allocation of participants into groups but do not use randomization [ 36 ]; follow-up studies are one example. Depending on whether a comparison group is present, non-randomized clinical intervention studies can be divided into comparative and non-comparative sub-types. The Risk Of Bias In Non-randomised Studies - of Interventions (ROBINS-I) tool [ 37 ] is the preferred tool. It was developed to evaluate risk of bias in estimating the comparative effectiveness (harm or benefit) of interventions in studies that do not use randomization to allocate units (individuals or clusters of individuals) into comparison groups. The JBI critical appraisal checklist for quasi-experimental studies (non-randomized experimental studies), which includes 9 items, is also suitable. Moreover, the methodological index for non-randomized studies (MINORS) [ 38 ] tool can be used; it contains 12 methodological items, of which the first 8 apply to both non-comparative and comparative studies, while the last 4 apply only to studies with two or more groups. Each item is scored from 0 to 2, so the maximum total score is 16 for non-comparative studies and 24 for comparative studies. Table S 1 K-L-M presents the major items of these three tools.
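The MINORS arithmetic above can be expressed as a short check: 8 items for non-comparative studies and 12 for comparative ones, each scored 0, 1 or 2. The function below is a hypothetical helper that returns the total alongside the applicable maximum (16 or 24); it does not encode the wording of the items.

```python
def minors_score(item_scores: list[int]) -> tuple[int, int]:
    """Return (total, maximum) for a MINORS appraisal.

    8 scores -> non-comparative study (maximum 16);
    12 scores -> comparative study (maximum 24).
    """
    if len(item_scores) not in (8, 12):
        raise ValueError("MINORS uses 8 (non-comparative) or 12 (comparative) items")
    if any(score not in (0, 1, 2) for score in item_scores):
        raise ValueError("each item must be scored 0, 1 or 2")
    return sum(item_scores), 2 * len(item_scores)


if __name__ == "__main__":
    total, maximum = minors_score([2, 2, 1, 2, 0, 2, 1, 2, 2, 1, 2, 2])
    print(f"{total}/{maximum}")  # 19/24
```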

A non-randomized study with a separate control group may also be called a clinical controlled trial or a controlled before-and-after study. For this design, the EPOC RoB tool is suitable (see Table S 1 D). When using this tool, “random sequence generation” and “allocation concealment” should be scored as “High risk”, while the other items can be graded in the same way as for a randomized trial.
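The adjustment described above for controlled before-and-after studies can be sketched as a function that copies a reviewer's EPOC item judgments and forces the two randomization-related items to “High risk”. The dictionary keys other than those two items, the “Low risk”/“Unclear risk” labels, and the function name are assumptions for illustration.

```python
RANDOMIZATION_ITEMS = ("random sequence generation", "allocation concealment")


def epoc_for_controlled_before_after(judgments: dict[str, str]) -> dict[str, str]:
    """Return a copy of EPOC item judgments adapted for a non-randomized CBA study.

    The two randomization-related items are always "High risk"; every other
    item keeps the judgment the reviewer gave, as for a randomized trial.
    """
    adjusted = dict(judgments)  # leave the caller's dictionary untouched
    for item in RANDOMIZATION_ITEMS:
        adjusted[item] = "High risk"
    return adjusted


if __name__ == "__main__":
    reviewer = {"allocation concealment": "Unclear risk", "incomplete outcome data": "Low risk"}
    print(epoc_for_controlled_before_after(reviewer))
```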

A non-randomized study without a separate control group may be a before-after (pre-post) study, a case series (uncontrolled longitudinal study), or an interrupted time series study. A case series describes a series of individuals who usually receive the same intervention and includes no control group [ 9 ]. Several tools exist for assessing the methodological quality of case series studies. The most recent was developed in 2012 by Moga C et al. [ 39 ] at the Institute of Health Economics (IHE), Canada, using a modified Delphi technique; hence, it is also called the “IHE Quality Appraisal Tool” (Table S 1 N). The NIH has also developed a quality assessment tool for case series studies, which includes 9 items (Table S 1 O). For interrupted time series studies, the “EPOC RoB tool for interrupted time series studies” is recommended (Table S 1 P). For before-after studies, we recommend the NIH quality assessment tool for before-after (pre-post) studies with no control group (Table S 1 Q).
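The recommendations in this paragraph for non-randomized designs without a separate control group can be kept as a small lookup table, which makes the mapping explicit and easy to extend. The table keys and the function name are hypothetical; the tool names are taken from the text.

```python
UNCONTROLLED_DESIGN_TOOLS: dict[str, tuple[str, ...]] = {
    "case series": (
        "IHE Quality Appraisal Tool",
        "NIH quality assessment tool for case series studies",
    ),
    "interrupted time series": (
        "EPOC RoB tool for interrupted time series studies",
    ),
    "before-after": (
        "NIH quality assessment tool for before-after (pre-post) studies with no control group",
    ),
}


def suggest_tools(design: str) -> tuple[str, ...]:
    """Return the tools named in the text for an uncontrolled design."""
    key = design.strip().lower()
    if key not in UNCONTROLLED_DESIGN_TOOLS:
        known = ", ".join(sorted(UNCONTROLLED_DESIGN_TOOLS))
        raise ValueError(f"unknown design {design!r}; expected one of: {known}")
    return UNCONTROLLED_DESIGN_TOOLS[key]


if __name__ == "__main__":
    for tool in suggest_tools("Case series"):
        print(tool)
```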

In addition, for non-randomized intervention studies, the Reisch tool (Check List for Assessing Therapeutic Studies) [ 11 , 40 ], the Downs & Black checklist [ 26 ], and other tools summarized by Deeks et al. [ 36 ] are not commonly used or recommended nowadays.

Tools for observational studies and diagnostic study

Observational studies include cohort study, case-control study, cross-sectional study, case series, case reports, and comparative effectiveness research [ 41 ], and can be divided into analytical and descriptive studies [ 42 ].

Cohort study

Cohort studies include prospective, retrospective, and ambidirectional cohort studies [ 43 ]. Several tools are available for assessing the quality of cohort studies, such as the CASP cohort study checklist (Table S 2 A), SIGN critical appraisal checklists for cohort studies (Table S 2 B), NIH quality assessment tool for observational cohort and cross-sectional studies (Table S 2 C), Newcastle-Ottawa Scale (NOS; Table S 2 D) for cohort studies, and JBI critical appraisal checklist for cohort studies (Table S 2 E). However, the Downs & Black checklist [ 26 ] and the NICE methodology checklist for cohort studies [ 11 ] are not commonly used or recommended nowadays.

The NOS [ 44 , 45 ] came from an ongoing collaboration between the University of Newcastle, Australia, and the University of Ottawa, Canada. Of the tools mentioned above, the NOS is currently the most commonly used, and it can be modified to suit a specific topic.
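As an illustration of NOS star counting, the sketch below assumes the usual maximum star allocation of the published scale: Selection up to 4 stars, Comparability up to 2, and Outcome (cohort version) or Exposure (case-control version) up to 3, for a maximum of 9. Because the NOS may be modified for a specific topic, a modified version could use different caps; the function name is hypothetical.

```python
NOS_MAX_STARS = {
    "cohort": {"selection": 4, "comparability": 2, "outcome": 3},
    "case-control": {"selection": 4, "comparability": 2, "exposure": 3},
}


def nos_total(design: str, stars: dict[str, int]) -> int:
    """Return the total NOS stars after checking each category against its cap."""
    caps = NOS_MAX_STARS[design]  # KeyError for an unsupported design
    if set(stars) != set(caps):
        raise ValueError(f"expected categories {sorted(caps)}, got {sorted(stars)}")
    for category, count in stars.items():
        if not 0 <= count <= caps[category]:
            raise ValueError(f"{category}: {count} stars is outside 0..{caps[category]}")
    return sum(stars.values())


if __name__ == "__main__":
    print(nos_total("cohort", {"selection": 3, "comparability": 1, "outcome": 3}))  # 7
```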

Case-control study

A case-control study selects participants based on the presence of a specific disease or condition and looks back for earlier exposures that may have led to the disease or outcome [ 42 ]. Its advantage over the cohort study is that drop-out or loss to follow-up, a problem in cohort studies, does not arise. Several acceptable tools are now available for assessing the methodological quality of case-control studies, including the CASP case-control study checklist (Table S 2 F), SIGN critical appraisal checklists for case-control studies (Table S 2 G), NIH quality assessment tool for case-control studies (Table S 2 H), JBI critical appraisal checklist for case-control studies (Table S 2 I), and the NOS for case-control studies (Table S 2 J). Among them, the NOS for case-control studies is also the most frequently used tool nowadays and can be modified by users.

In addition, the Downs & Black checklist [ 26 ] and the NICE methodology checklist for case-control study [ 11 ] are also not commonly used or recommended nowadays.

Cross-sectional study (analytical or descriptive)

Cross-sectional study is used to provide a snapshot of a disease and other variables in a defined population at a time point. It can be divided into analytical and purely descriptive types. Descriptive cross-sectional study merely describes the number of cases or events in a particular population at a time point or during a period of time; whereas analytic cross-sectional study can be used to infer relationships between a disease and other variables [ 46 ].

For assessing the quality of analytical cross-sectional study, the NIH quality assessment tool for observational cohort and cross-sectional studies (Table S 2 C), JBI critical appraisal checklist for analytical cross-sectional study (Table S 2 K), and the Appraisal tool for Cross-Sectional Studies (AXIS tool; Table S 2 L) [ 47 ] are recommended tools. The AXIS tool is a critical appraisal tool that addresses study design and reporting quality as well as the risk of bias in cross-sectional study, which was developed in 2016 and contains 20 items. Among these three tools, the JBI checklist is the most preferred one.

A purely descriptive cross-sectional study is usually used to measure disease prevalence and incidence. Hence, critical appraisal tools for analytical cross-sectional studies are not appropriate for assessing it. Only a few quality assessment tools are suitable for descriptive cross-sectional studies, such as the JBI critical appraisal checklist for studies reporting prevalence data [ 48 ] (Table S 2 M), the Agency for Healthcare Research and Quality (AHRQ) methodology checklist for assessing the quality of cross-sectional/prevalence studies (Table S 2 N), and Crombie’s items for assessing the quality of cross-sectional studies [ 49 ] (Table S 2 O). Among them, the JBI tool is the newest.
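Judging whether a prevalence study's sample was adequate often involves a precision-based calculation. One standard formula is n = Z²P(1 − P)/d², where P is the expected prevalence, d the desired absolute precision, and Z the normal quantile for the chosen confidence level (1.96 for 95%). Whether a particular checklist expects this exact formula is an assumption here, and the function name is hypothetical.

```python
import math


def prevalence_sample_size(expected_prevalence: float, precision: float, z: float = 1.96) -> int:
    """Minimum sample size to estimate a prevalence within +/- precision.

    Uses n = z**2 * P * (1 - P) / d**2, rounded up to the next whole participant.
    """
    if not 0 < expected_prevalence < 1:
        raise ValueError("expected_prevalence must lie strictly between 0 and 1")
    if precision <= 0:
        raise ValueError("precision must be positive")
    n = z**2 * expected_prevalence * (1 - expected_prevalence) / precision**2
    return math.ceil(n)


if __name__ == "__main__":
    # P = 0.5 is the most conservative assumption; d = 0.05 means +/- 5 points.
    print(prevalence_sample_size(0.5, 0.05))  # 385
```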

Case series and case reports

Unlike the interventional case series mentioned above, observational case reports and case series are used to report novel occurrences of a disease or a unique finding [ 50 ]. Hence, they are descriptive studies. Only one tool is available for them: the JBI critical appraisal checklist for case reports (Table S 2 P).

Comparative effectiveness research

Comparative effectiveness research (CER) compares real-world outcomes [ 51 ] resulting from alternative treatment options that are available for a given medical condition. Its key elements are the study of effectiveness (effect in the real world) rather than efficacy (ideal effect), and comparisons among alternative strategies [ 52 ]. In 2010, the Good Research for Comparative Effectiveness (GRACE) Initiative was established and developed principles to help healthcare providers, researchers, journal readers, and editors evaluate the inherent quality of observational research studies of comparative effectiveness [ 41 ]. In 2016, a validated assessment tool, the GRACE Checklist v5.0 (Table S 2 Q), was released for assessing the quality of CER.

Diagnostic study

Diagnostic tests are used by clinicians to identify whether a condition is present in a patient, so that an appropriate treatment plan can be developed [ 53 ]; studies that evaluate them are called “diagnostic test accuracy (DTA)” studies. DTA studies have several unique design features that differ from standard intervention and observational evaluations. In 2003, Whiting et al. [ 53 , 54 ] developed a tool for assessing the quality of DTA studies, the Quality Assessment of Diagnostic Accuracy Studies (QUADAS) tool. In 2011, a revised tool, “QUADAS-2” (Table S 2 R), was launched [ 55 , 56 ]. In addition, the CASP diagnostic checklist (Table S 2 S), SIGN critical appraisal checklists for diagnostic studies (Table S 2 T), JBI critical appraisal checklist for diagnostic test accuracy studies (Table S 2 U), and the Cochrane risk of bias tool for diagnostic test accuracy (Table S 2 V) are also commonly used tools in this field.

Of these, the Cochrane risk of bias tool ( https://methods.cochrane.org/sdt/ ) is based on the QUADAS tool, and the SIGN and JBI tools are based on the QUADAS-2 tool. QUADAS-2 is the first-choice recommendation. Other relevant tools reviewed by Whiting et al. [ 53 ] in 2004 are no longer used.
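QUADAS-2 rates risk of bias in four domains (patient selection, index test, reference standard, and flow and timing). Its guidance suggests an overall “low risk of bias” only when every domain is judged low, while a “high” or “unclear” judgment in any domain may lead to an overall “at risk of bias”. The sketch encodes that rule and omits the separate applicability judgments; the function name is hypothetical.

```python
QUADAS2_DOMAINS = ("patient selection", "index test", "reference standard", "flow and timing")
RISK_LEVELS = {"low", "high", "unclear"}


def quadas2_overall(risk: dict[str, str]) -> str:
    """Summarize QUADAS-2 risk-of-bias judgments across the four domains."""
    values = []
    for domain in QUADAS2_DOMAINS:
        if domain not in risk:
            raise ValueError(f"missing domain: {domain}")
        if risk[domain] not in RISK_LEVELS:
            raise ValueError(f"{domain}: unexpected level {risk[domain]!r}")
        values.append(risk[domain])
    # Only an all-low profile is summarized as low risk of bias.
    return "low risk of bias" if all(v == "low" for v in values) else "at risk of bias"


if __name__ == "__main__":
    study = dict.fromkeys(QUADAS2_DOMAINS, "low")
    study["flow and timing"] = "unclear"
    print(quadas2_overall(study))  # at risk of bias
```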

Tools for other primary medical studies

Health economic evaluation

Health economic evaluation research comparatively analyses alternative interventions with regard to their resource use, costs and health effects [ 57 ]. It focuses on identifying, measuring, valuing and comparing resource use, costs and benefit/effect consequences for two or more alternative intervention options [ 58 ]. Health economic studies are increasingly popular, and their methodological quality also needs to be assessed before they are used. The first tool for such assessment was developed by Drummond and Jefferson in 1996 [ 59 ], and many tools have since been developed based on Drummond’s items or their revision [ 60 ], such as the SIGN critical appraisal checklists for economic evaluations (Table S 3 A), the CASP economic evaluation checklist (Table S 3 B), and the JBI critical appraisal checklist for economic evaluations (Table S 3 C). NICE retains only one methodology checklist for economic evaluation (Table S 3 D).

However, we regard the Consolidated Health Economic Evaluation Reporting Standards (CHEERS) statement [ 61 ] as a reporting tool rather than a methodological quality assessment tool, so we do not recommend it to assess the methodological quality of health economic evaluation.

Qualitative study

In healthcare, qualitative research aims to understand and interpret individual experiences, behaviours, interactions, and social contexts, so as to explain phenomena of interest, such as the attitudes, beliefs, and perspectives of patients and clinicians; the interpersonal nature of caregiver and patient relationships; illness experience; and the impact of human suffering [ 62 ]. Compared with quantitative studies, fewer assessment tools are available for qualitative studies. Nowadays, the CASP qualitative research checklist (Table S 3 E) is the most frequently recommended tool for this purpose. In addition, the JBI critical appraisal checklist for qualitative research [ 63 , 64 ] (Table S 3 F) and the Quality Framework: Cabinet Office checklist for social research [ 65 ] (Table S 3 G) are also suitable.

Prediction studies

Clinical prediction studies include predictor finding (prognostic factor) studies, prediction model studies (development, validation, and extending or updating), and prediction model impact studies [ 66 ]. For predictor finding studies, the Quality In Prognosis Studies (QUIPS) tool [ 67 ] can be used to assess methodological quality (Table S 3 H). For prediction model impact studies with a randomized comparative design, tools for RCTs can be used, especially the RoB 2.0 tool; for those with a non-randomized comparative design, tools for non-randomized studies can be used, especially the ROBINS-I tool. For diagnostic and prognostic prediction model studies, the Prediction model Risk Of Bias Assessment Tool (PROBAST; Table S 3 I) [ 68 ] and the CASP clinical prediction rule checklist (Table S 3 J) are suitable.
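The choices in this paragraph form a small decision rule, sketched below. The study-type labels and the function name are hypothetical; the tools are the ones named in the text.

```python
from typing import Optional


def prediction_study_tools(study_type: str, randomized: Optional[bool] = None) -> tuple[str, ...]:
    """Return the appraisal tools suggested in the text for a prediction study."""
    if study_type == "predictor finding":
        return ("Quality In Prognosis Studies tool",)
    if study_type == "prediction model":  # diagnostic or prognostic model studies
        return ("PROBAST", "CASP clinical prediction rule checklist")
    if study_type == "impact":
        if randomized is None:
            raise ValueError("impact studies need randomized=True or randomized=False")
        # Impact studies are appraised with the tool matching their comparative design.
        return ("RoB 2.0",) if randomized else ("ROBINS-I",)
    raise ValueError(f"unknown study type {study_type!r}")


if __name__ == "__main__":
    print(prediction_study_tools("impact", randomized=True))  # ('RoB 2.0',)
```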

Text and expert opinion papers

Text and expert opinion-based evidence (also called “non-research evidence”) comes from expert opinions, consensus, current discourse, comments, and assumptions or assertions that appear in various journals, magazines, monographs and reports [ 69 – 71 ]. Nowadays, only the JBI has a critical appraisal checklist for the assessment of text and expert opinion papers (Table S 3 K).

Outcome measurement instruments

An outcome measurement instrument is a “device” used to collect a measurement. The range covered by the term ‘instrument’ is broad and can refer to a questionnaire (e.g. a patient-reported outcome such as quality of life), an observation (e.g. the result of a clinical examination), a scale (e.g. a visual analogue scale), a laboratory test (e.g. a blood test) or an image (e.g. ultrasound or other medical imaging) [ 72 , 73 ]. Measurements can be subjective or objective, and either unidimensional (e.g. attitude) or multidimensional. Nowadays, only one tool, the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) Risk of Bias checklist [ 74 – 76 ] ( www.cosmin.nl/ ), is suitable for assessing the methodological quality of outcome measurement instruments. Table S 3 L presents its major items, including patient-reported outcome measure (PROM) development (Table S 3 LA), content validity (Table S 3 LB), structural validity (Table S 3 LC), internal consistency (Table S 3 LD), cross-cultural validity/measurement invariance (Table S 3 LE), reliability (Table S 3 LF), measurement error (Table S 3 LG), criterion validity (Table S 3 LH), hypotheses testing for construct validity (Table S 3 LI), and responsiveness (Table S 3 LJ).
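In the COSMIN Risk of Bias checklist, each standard within a box is rated on a four-point scale (very good, adequate, doubtful, inadequate), and the box takes the lowest rating given to any of its standards (“worst score counts”). A minimal sketch of that rule follows, assuming not-applicable standards have already been left out; the function name is hypothetical.

```python
# Ordered from worst to best so that tuple position doubles as rank.
COSMIN_RATINGS = ("inadequate", "doubtful", "adequate", "very good")


def cosmin_box_rating(standard_ratings: list[str]) -> str:
    """Apply the "worst score counts" rule to the standards of one box."""
    if not standard_ratings:
        raise ValueError("a box needs at least one rated standard")
    for rating in standard_ratings:
        if rating not in COSMIN_RATINGS:
            raise ValueError(f"unexpected rating {rating!r}")
    return min(standard_ratings, key=COSMIN_RATINGS.index)


if __name__ == "__main__":
    print(cosmin_box_rating(["very good", "adequate", "doubtful"]))  # doubtful
```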

Tools for secondary medical studies

Systematic review and meta-analysis

Systematic review and meta-analysis are popular methods for keeping up with the current medical literature [ 4 – 6 ]. Their ultimate purpose and value lie in improving healthcare [ 6 , 77 , 78 ]. Meta-analysis is a statistical process for combining results from several studies and is commonly part of a systematic review [ 11 ]. Critical appraisal is therefore necessary before a systematic review or meta-analysis is used.

In 1988, Sacks et al. developed the first tool for assessing the quality of meta-analyses of RCTs, the Sacks’ Quality Assessment Checklist (SQAC) [ 79 ]; then, in 1991, Oxman and Guyatt developed another tool, the Overview Quality Assessment Questionnaire (OQAQ) [ 80 , 81 ]. To overcome the shortcomings of these two tools, A Measurement Tool to Assess Systematic Reviews (AMSTAR) was developed from them in 2007 [ 82 ] ( http://www.amstar.ca/ ). However, the original AMSTAR instrument did not include an assessment of the risk of bias in non-randomised studies, and the expert group thought that revisions should address all aspects of the conduct of a systematic review. Hence, a new instrument for systematic reviews of randomised or non-randomised studies of healthcare interventions, AMSTAR 2, was released in 2017 [ 83 ], and Table S 4 A presents its major items.
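AMSTAR 2 is not meant to produce a summed score; instead, its developers propose rating overall confidence in a review's results from the number of critical flaws and non-critical weaknesses. The sketch below follows that scheme (high: at most one non-critical weakness; moderate: more than one non-critical weakness; low: one critical flaw; critically low: more than one critical flaw). Deciding which items count as critical, and whether many weaknesses justify moving from moderate to low, is left to the reviewer; the function name is hypothetical.

```python
def amstar2_confidence(critical_flaws: int, noncritical_weaknesses: int) -> str:
    """Map counts of flaws and weaknesses to an AMSTAR 2 confidence rating."""
    if critical_flaws < 0 or noncritical_weaknesses < 0:
        raise ValueError("counts must be non-negative")
    if critical_flaws > 1:
        return "critically low"  # more than one critical flaw
    if critical_flaws == 1:
        return "low"  # one critical flaw, with or without weaknesses
    if noncritical_weaknesses > 1:
        return "moderate"  # no critical flaw, more than one weakness
    return "high"  # no critical flaw, at most one weakness


if __name__ == "__main__":
    print(amstar2_confidence(critical_flaws=0, noncritical_weaknesses=3))  # moderate
```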

In addition, the CASP systematic review checklist (Table S 4 B), SIGN critical appraisal checklists for systematic reviews and meta-analyses (Table S 4 C), JBI critical appraisal checklist for systematic reviews and research syntheses (Table S 4 D), NIH quality assessment tool for systematic reviews and meta-analyses (Table S 4 E), the Decision Support Unit (DSU) network meta-analysis (NMA) methodology checklist (Table S 4 F), and the Risk of Bias in Systematic Review (ROBIS) [ 84 ] tool (Table S 4 G) are all suitable. Among them, AMSTAR 2 is the most commonly used and ROBIS is the most frequently recommended.

Among these tools, AMSTAR 2 is suitable for assessing systematic reviews and meta-analyses of randomised or non-randomised interventional studies, the DSU NMA methodology checklist for network meta-analyses, and ROBIS for meta-analyses of interventional, diagnostic test accuracy, clinical prediction, and prognostic studies.

Clinical practice guidelines

Clinical practice guidelines (CPGs) are well integrated into the thinking of practicing clinicians and professional clinical organizations [ 85 – 87 ] and help incorporate scientific evidence into clinical practice [ 88 ]. However, not all CPGs are evidence-based [ 89 , 90 ] and their quality is uneven [ 91 – 93 ]. To date, more than 20 appraisal tools have been developed [ 94 ]. Among them, the Appraisal of Guidelines for Research and Evaluation (AGREE) instrument has the greatest potential to serve as a basis for developing an appraisal tool for clinical pathways [ 94 ]. The AGREE instrument was first released in 2003 [ 95 ] and updated to the AGREE II instrument in 2009 [ 96 ] ( www.agreetrust.org/ ). The AGREE II instrument is now the most recommended tool for CPGs (Table S 4 H).
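AGREE II appraisers rate each item on a 7-point scale, and each domain is reported as a scaled score: (obtained score − minimum possible score) / (maximum possible score − minimum possible score), expressed as a percentage, with ratings summed over all items in the domain and all appraisers. The sketch assumes a complete rectangular table of ratings (appraisers by items); the function name is hypothetical.

```python
def agree2_scaled_domain_score(ratings: list[list[int]]) -> float:
    """Return an AGREE II scaled domain score (0-100).

    ratings[a][i] is appraiser a's 1-7 rating of item i within one domain.
    """
    if not ratings or not ratings[0]:
        raise ValueError("need at least one appraiser and one item")
    n_items = len(ratings[0])
    if any(len(row) != n_items for row in ratings):
        raise ValueError("every appraiser must rate every item")
    if any(not 1 <= r <= 7 for row in ratings for r in row):
        raise ValueError("ratings must be between 1 and 7")
    n_cells = len(ratings) * n_items
    obtained = sum(sum(row) for row in ratings)
    minimum, maximum = 1 * n_cells, 7 * n_cells  # all-1 and all-7 totals
    return 100 * (obtained - minimum) / (maximum - minimum)


if __name__ == "__main__":
    # Two appraisers, three items: obtained 30, minimum 6, maximum 42.
    print(round(agree2_scaled_domain_score([[5, 6, 4], [5, 5, 5]]), 1))  # 66.7
```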

In addition, the AGREE Global Rating Scale (AGREE GRS) instrument [97] was developed from AGREE II as a short tool for evaluating the quality and reporting of CPGs.

Discussion and conclusions

EBM is now widely accepted, and the main concern of healthcare workers lies in "going from evidence to recommendations" [98, 99]. Critical appraisal of evidence before it is used is therefore a key step in this process [100, 101]. In 1987, Mulrow [102] pointed out that medical reviews needed to use scientific methods routinely to identify, assess, and synthesize information. Methodological quality assessment is therefore necessary before a study is used. However, although more than 20 years have passed since the first tool appeared, many users still confuse methodological quality with reporting quality. Some use reporting checklists to assess methodological quality. For example, they apply the Consolidated Standards of Reporting Trials (CONSORT) statement [103] to assess the methodological quality of RCTs, or the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement [104] to assess the methodological quality of cohort studies. This indicates that broader education in clinical epidemiology is needed for medical students and professionals.

Methodological quality tools should be developed according to the characteristics of each study type. In this review, we searched the NICE, SIGN, Cochrane Library, and JBI websites using the terms "methodological quality", "risk of bias", "critical appraisal", "checklist", "scale", "items", and "assessment tool". We then searched PubMed with these terms combined with "systematic review", "meta-analysis", "overview", and "clinical practice guideline". Compared with our previous systematic review [11], we found that some tools remain recommended and in use, some are used without being recommended, and some have been abandoned [10, 29, 30, 36, 53, 94, 105–107]. These tools provide significant impetus for clinical practice [108, 109].
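The PubMed step of this search strategy can be sketched as a small query builder. The review lists the terms but not the exact Boolean syntax. The grouping used here is therefore an assumption: phrases are joined with OR inside each concept group, and the two groups are joined with AND. `build_pubmed_query` is a hypothetical helper, not part of any PubMed tool.

```python
from typing import List

# Search terms named in the review; how they are combined is assumed.
TOOL_TERMS: List[str] = [
    "methodological quality", "risk of bias", "critical appraisal",
    "checklist", "scale", "items", "assessment tool",
]
REVIEW_TERMS: List[str] = [
    "systematic review", "meta-analysis", "overview",
    "clinical practice guideline",
]


def or_group(terms: List[str]) -> str:
    """Quote each phrase and join the phrases with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_pubmed_query(tool_terms: List[str], review_terms: List[str]) -> str:
    """Combine the appraisal-tool concept and the review-type concept with AND."""
    return f"{or_group(tool_terms)} AND {or_group(review_terms)}"


if __name__ == "__main__":
    print(build_pubmed_query(TOOL_TERMS, REVIEW_TERMS))
```

Quoting each phrase keeps multi-word terms such as "risk of bias" together. Field tags, date limits, or MeSH terms could be added to each group, but the review does not report them.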

In addition, compared with our previous systematic review [11], this review covers more tools, especially those developed after 2014, as well as the latest revisions. We also adjusted the method of classifying study types. First, NICE provided 7 methodology checklists in 2014 but now retains and updates only the checklist for economic evaluations. The Cochrane RoB 2.0 tool, AMSTAR 2, the CASP checklists, and most of the JBI critical appraisal checklists are the latest revisions. The NIH quality assessment tools, ROBINS-I, the EPOC RoB tool, AXIS, the GRACE checklist, PROBAST, the COSMIN Risk of Bias checklist, and ROBIS are newly released tools. Second, we introduced tools for network meta-analyses, outcome measurement instruments, text and expert opinion papers, prediction studies, qualitative studies, health economic evaluations, and CER. Third, we classified interventional studies into randomized and non-randomized subtypes and further divided non-randomized studies into those with and without a control group. We also classified cross-sectional studies into analytic and purely descriptive subtypes, and case series into interventional and observational subtypes. This classification is more objective and comprehensive.

RCTs have the largest number of appropriate tools, followed by cohort studies. The JBI checklists have the widest applicable range [63, 64], with CASP following closely. However, further effort is still needed to develop appraisal tools. For some study types, only one assessment tool is suitable: CER, outcome measurement instruments, text and expert opinion papers, case reports, and CPGs. For many other study types, such as overviews, genetic association studies, and cell studies, no proper assessment tool exists. Existing tools have also not been fully accepted. Developing well-accepted tools therefore remains significant and important future work [11].

Our review can help producers of systematic reviews, meta-analyses, and guidelines, as well as evidence users, choose the best tool when producing or using evidence. Methodologists can also identify research topics for developing new tools. Most importantly, all assessment tools are subjective, and the results of applying them depend on the user's skills and knowledge. Users must therefore receive formal training, including the relevant epidemiological knowledge, and maintain a rigorous academic attitude. At least two independent reviewers should be involved in the evaluation and cross-checking to avoid performance bias [110].
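The cross-checking step can be illustrated with a minimal sketch. It compares two independent reviewers' item-level judgements and lists every item that needs consensus discussion. The item IDs, the "yes"/"no"/"unclear" answer labels, and the `cross_check` function are hypothetical. Real checklists define their own items and response options.

```python
from typing import Dict, List, Tuple

# Item ID -> judgement; the answer labels are assumed for illustration.
Ratings = Dict[str, str]


def cross_check(reviewer_a: Ratings, reviewer_b: Ratings) -> List[Tuple[str, str, str]]:
    """List (item, rating A, rating B) for every item on which the reviewers differ.

    An item rated by only one reviewer is reported with "missing" for the
    other, so no item escapes the consensus discussion.
    """
    conflicts = []
    for item in sorted(set(reviewer_a) | set(reviewer_b)):
        a = reviewer_a.get(item, "missing")
        b = reviewer_b.get(item, "missing")
        if a != b:
            conflicts.append((item, a, b))
    return conflicts


if __name__ == "__main__":
    a = {"Q1": "yes", "Q2": "no", "Q3": "unclear"}
    b = {"Q1": "yes", "Q2": "yes", "Q4": "no"}
    for item, ra, rb in cross_check(a, b):
        print(f"{item}: reviewer A={ra}, reviewer B={rb} -> resolve by discussion")
```

The sketch only surfaces disagreements. How they are resolved (discussion, or referral to a third reviewer) is left to the review protocol.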

Supplementary information

Acknowledgements

The authors thank all the authors and technicians for their hard work in developing methodological quality assessment tools.

Abbreviations

Authors’ contributions

XTZ was responsible for the study design and reviewed the manuscript; LLM, ZHY, YYW, and DH contributed to data collection; LLM, YYW, and HW contributed to preparing the article. All authors read and approved the final manuscript.

This work was supported (in part) by the Entrusted Project of the National Health Commission of China (No. [2019]099), the National Key Research and Development Plan of China (2016YFC0106300), and the Natural Science Foundation of Hubei Province (2019FFB03902). The funders had no role in study design, data collection and analysis, the decision to publish, or preparation of the manuscript. The authors declare that there are no conflicts of interest in this study.

Availability of data and materials

Ethics approval and consent to participate

Not applicable.

Consent for publication

Competing interests

The authors declare that they have no competing interests.

Contributor Information

Lin-Lu Ma, Email: 13598615285@163.com

Yun-Yun Wang, Email: 13545027094@163.com

Zhi-Hua Yang, Email: yangzhihuaxx@126.com

Di Huang, Email: 13163248347@163.com

Hong Weng, Email: wengh92@163.com

Xian-Tao Zeng, Email: zengxiantao1128@163.com, Email: zengxiantao@whucebtm.com

Supplementary information accompanies this paper at 10.1186/s40779-020-00238-8.

Beckett News


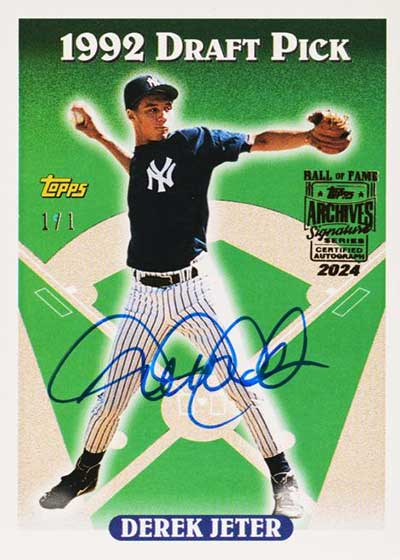

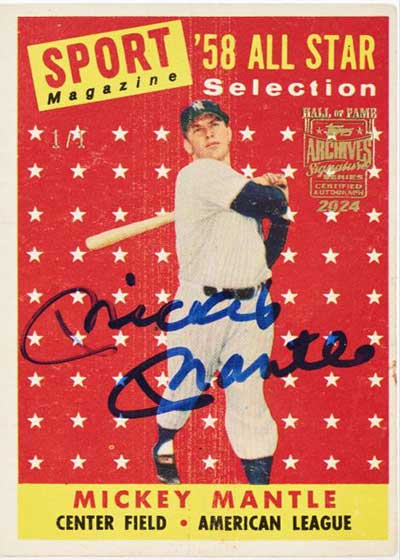

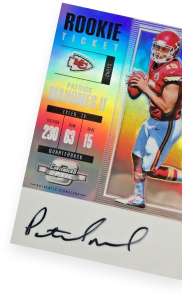

2024 Topps Archives Signature Series Baseball – Retired Edition Checklist and Details


2024 Topps Archives Signature Series Baseball – Retired Edition is all about the past. Every card in the product features not only players from yesteryear but also cards from past years.

Like the similar Active Player Edition, boxes come with one card: an autographed buyback. It’s a simple concept that brings with it a lot of variety.

Every individual card is numbered to 99 or fewer copies, and historically print runs have often been much lower. That said, a player may sign several different cards. Because of the nature of the product, the checklist is typically limited to a list of signers rather than a full card-by-card breakdown.

What differentiates these buybacks from originals that may simply have been signed at an event or through the mail is a foil stamp. The stamp not only confirms that the card came from Topps but also notes a year, in case the same card was used in a previous Archives Signature Series release.

Cards also come already in a one-touch holder.

2024 Topps Archives Signature Series Baseball – Retired Edition at a glance:

- Cards per pack: Hobby – 1

- Packs per box: Hobby – 1

- Boxes per case: Hobby – 20

- Release date: May 17, 2024


What to expect in a hobby box:

- Buyback Autographs – 1

2024 Topps Archives Signature Series Baseball – Retired Edition Checklist

The following is a list of signers in the product. A list of specific cards and print runs has not been released.

Autograph Signers

134 signers.

Luis Aparicio, Jeff Bagwell, Harold Baines, Carlos Beltran, Johnny Bench, Craig Biggio, Bert Blyleven, Wade Boggs, George Brett, Mark Buehrle, Jose Canseco, Steve Carlton, Joe Carter, Eric Chavez, Will Clark, Roger Clemens, Bartolo Colon, David Cone, Coco Crisp, Chili Davis, Eric Davis, Andre Dawson, Bucky Dent, Dennis Eckersley, David Eckstein, Jim Edmonds, Andre Ethier, Cecil Fielder, Prince Fielder, Rollie Fingers, Carlton Fisk, Julio Franco, Nomar Garciaparra, Brett Gardner, Tom Glavine, Juan Gonzalez, Dwight Gooden, Alex Gordon, Goose Gossage, Mark Grace, Ken Griffey Jr., Ken Griffey Sr., Vladimir Guerrero Sr., Ron Guidry, Keith Hernandez, Trevor Hoffman, Matt Holliday, Ryan Howard, Torii Hunter, Brandon Inge, Bo Jackson, Reggie Jackson, Derek Jeter, Randy Johnson, Adam Jones, Andruw Jones, Chipper Jones, David Justice, Jason Kendall, Jimmy Key, Paul Konerko, John Kruk, Barry Larkin, Cliff Lee, Derrek Lee, Kenny Lofton, Greg Maddux, Bill Madlock, Juan Marichal, Edgar Martinez, Pedro Martinez, Hideki Matsui, Don Mattingly, Mark McGwire, Paul Molitor, Justin Morneau, Eddie Murray, Graig Nettles, Paul O’Neill, Tony Oliva, Al Oliver, Rafael Palmeiro, Jim Palmer, Dave Parker, Dustin Pedroia, Hunter Pence, Andy Pettitte, Mike Piazza, Lou Piniella, Jorge Posada, Buster Posey, Albert Pujols, Tim Raines, Aramis Ramirez, Hanley Ramirez, Willie Randolph, Jim Rice, Cal Ripken Jr., Mariano Rivera, Alex Rodriguez, Francisco Rodriguez, Ivan Rodriguez, Scott Rolen, Jimmy Rollins, Nolan Ryan, Freddy Sanchez, Ryne Sandberg, Mike Scott, Gary Sheffield, Ozzie Smith, John Smoltz, Sammy Sosa, Dave Stieb, Darryl Strawberry, Ichiro Suzuki, Mark Teixeira, Gene Tenace, Frank Thomas, Alan Trammell, Chase Utley, Mo Vaughn, Tim Wallach, Chien-Ming Wang, Jered Weaver, Jayson Werth, Bernie Williams, Matt Williams, Dontrelle Willis, Brian Wilson, Dave Winfield, Rick Wise, Kerry Wood, David Wright, Robin Yount

Legendary Autographs Checklist

18 cards. 1:2,235 packs.

Sparky Anderson, Jim Bunning, Gary Carter, Monte Irvin, George Kell, Harmon Killebrew, Ralph Kiner, Don Larsen, Bob Lemon, Mickey Mantle, Eddie Mathews, Willie McCovey, Phil Niekro, Brooks Robinson, Red Schoendienst, Bruce Sutter, Don Sutton, Dick Williams


Ryan Cracknell

A collector for much of his life, Ryan focuses primarily on building sets, Montreal Expos and interesting cards. He's also got one of the most comprehensive collections of John Jaha cards in existence (not that there are a lot of them). Got a question, story idea or want to get in touch? You can reach him by email and through Twitter @tradercracks.
