- En español – ExME

- Em português – EME

Case-control and Cohort studies: A brief overview

Posted on 6th December 2017 by Saul Crandon

Introduction

Case-control and cohort studies are observational studies that lie near the middle of the hierarchy of evidence . These types of studies, along with randomised controlled trials, constitute analytical studies, whereas case reports and case series define descriptive studies (1). Although these studies are not ranked as highly as randomised controlled trials, they can provide strong evidence if designed appropriately.

Case-control studies

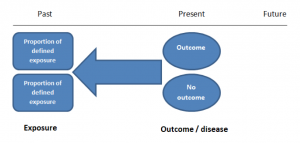

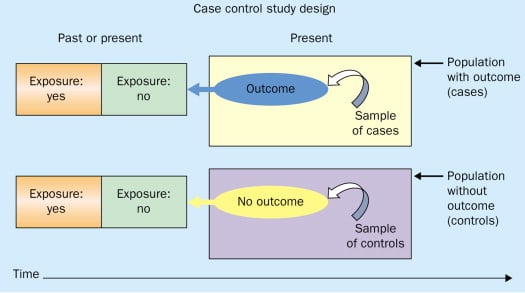

Case-control studies are retrospective. They clearly define two groups at the start: one with the outcome/disease and one without the outcome/disease. They look back to assess whether there is a statistically significant difference in the rates of exposure to a defined risk factor between the groups. See Figure 1 for a pictorial representation of a case-control study design. This can suggest associations between the risk factor and development of the disease in question, although no definitive causality can be drawn. The main outcome measure in case-control studies is odds ratio (OR) .

Figure 1. Case-control study design.

Cases should be selected based on objective inclusion and exclusion criteria from a reliable source such as a disease registry. An inherent issue with selecting cases is that a certain proportion of those with the disease would not have a formal diagnosis, may not present for medical care, may be misdiagnosed or may have died before getting a diagnosis. Regardless of how the cases are selected, they should be representative of the broader disease population that you are investigating to ensure generalisability.

Case-control studies should include two groups that are identical EXCEPT for their outcome / disease status.

As such, controls should also be selected carefully. It is possible to match controls to the cases selected on the basis of various factors (e.g. age, sex) to ensure these do not confound the study results. It may even increase statistical power and study precision by choosing up to three or four controls per case (2).

Case-controls can provide fast results and they are cheaper to perform than most other studies. The fact that the analysis is retrospective, allows rare diseases or diseases with long latency periods to be investigated. Furthermore, you can assess multiple exposures to get a better understanding of possible risk factors for the defined outcome / disease.

Nevertheless, as case-controls are retrospective, they are more prone to bias. One of the main examples is recall bias. Often case-control studies require the participants to self-report their exposure to a certain factor. Recall bias is the systematic difference in how the two groups may recall past events e.g. in a study investigating stillbirth, a mother who experienced this may recall the possible contributing factors a lot more vividly than a mother who had a healthy birth.

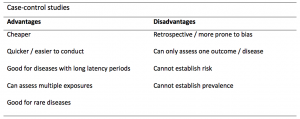

A summary of the pros and cons of case-control studies are provided in Table 1.

Table 1. Advantages and disadvantages of case-control studies.

Cohort studies

Cohort studies can be retrospective or prospective. Retrospective cohort studies are NOT the same as case-control studies.

In retrospective cohort studies, the exposure and outcomes have already happened. They are usually conducted on data that already exists (from prospective studies) and the exposures are defined before looking at the existing outcome data to see whether exposure to a risk factor is associated with a statistically significant difference in the outcome development rate.

Prospective cohort studies are more common. People are recruited into cohort studies regardless of their exposure or outcome status. This is one of their important strengths. People are often recruited because of their geographical area or occupation, for example, and researchers can then measure and analyse a range of exposures and outcomes.

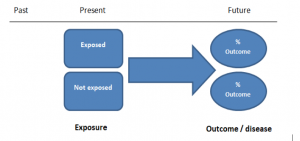

The study then follows these participants for a defined period to assess the proportion that develop the outcome/disease of interest. See Figure 2 for a pictorial representation of a cohort study design. Therefore, cohort studies are good for assessing prognosis, risk factors and harm. The outcome measure in cohort studies is usually a risk ratio / relative risk (RR).

Figure 2. Cohort study design.

Cohort studies should include two groups that are identical EXCEPT for their exposure status.

As a result, both exposed and unexposed groups should be recruited from the same source population. Another important consideration is attrition. If a significant number of participants are not followed up (lost, death, dropped out) then this may impact the validity of the study. Not only does it decrease the study’s power, but there may be attrition bias – a significant difference between the groups of those that did not complete the study.

Cohort studies can assess a range of outcomes allowing an exposure to be rigorously assessed for its impact in developing disease. Additionally, they are good for rare exposures, e.g. contact with a chemical radiation blast.

Whilst cohort studies are useful, they can be expensive and time-consuming, especially if a long follow-up period is chosen or the disease itself is rare or has a long latency.

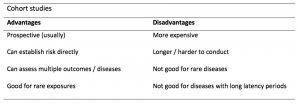

A summary of the pros and cons of cohort studies are provided in Table 2.

The Strengthening of Reporting of Observational Studies in Epidemiology Statement (STROBE)

STROBE provides a checklist of important steps for conducting these types of studies, as well as acting as best-practice reporting guidelines (3). Both case-control and cohort studies are observational, with varying advantages and disadvantages. However, the most important factor to the quality of evidence these studies provide, is their methodological quality.

- Song, J. and Chung, K. Observational Studies: Cohort and Case-Control Studies . Plastic and Reconstructive Surgery.  2010 Dec;126(6):2234-2242.

- Ury HK. Efficiency of case-control studies with multiple controls per case: Continuous or dichotomous data . Biometrics . 1975 Sep;31(3):643–649.

- von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP; STROBE Initiative. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies.  Lancet 2007 Oct;370(9596):1453-14577. PMID: 18064739.

Saul Crandon

Leave a reply cancel reply.

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

No Comments on Case-control and Cohort studies: A brief overview

Very well presented, excellent clarifications. Has put me right back into class, literally!

Very clear and informative! Thank you.

very informative article.

Thank you for the easy to understand blog in cohort studies. I want to follow a group of people with and without a disease to see what health outcomes occurs to them in future such as hospitalisations, diagnoses, procedures etc, as I have many health outcomes to consider, my questions is how to make sure these outcomes has not occurred before the “exposure disease”. As, in cohort studies we are looking at incidence (new) cases, so if an outcome have occurred before the exposure, I can leave them out of the analysis. But because I am not looking at a single outcome which can be checked easily and if happened before exposure can be left out. I have EHR data, so all the exposure and outcome have occurred. my aim is to check the rates of different health outcomes between the exposed)dementia) and unexposed(non-dementia) individuals.

Very helpful information

Thanks for making this subject student friendly and easier to understand. A great help.

Thanks a lot. It really helped me to understand the topic. I am taking epidemiology class this winter, and your paper really saved me.

Happy new year.

Wow its amazing n simple way of briefing ,which i was enjoyed to learn this.its very easy n quick to pick ideas .. Thanks n stay connected

Saul you absolute melt! Really good work man

am a student of public health. This information is simple and well presented to the point. Thank you so much.

very helpful information provided here

really thanks for wonderful information because i doing my bachelor degree research by survival model

Quite informative thank you so much for the info please continue posting. An mph student with Africa university Zimbabwe.

Thank you this was so helpful amazing

Apreciated the information provided above.

So clear and perfect. The language is simple and superb.I am recommending this to all budding epidemiology students. Thanks a lot.

Great to hear, thank you AJ!

I have recently completed an investigational study where evidence of phlebitis was determined in a control cohort by data mining from electronic medical records. We then introduced an intervention in an attempt to reduce incidence of phlebitis in a second cohort. Again, results were determined by data mining. This was an expedited study, so there subjects were enrolled in a specific cohort based on date(s) of the drug infused. How do I define this study? Thanks so much.

thanks for the information and knowledge about observational studies. am a masters student in public health/epidemilogy of the faculty of medicines and pharmaceutical sciences , University of Dschang. this information is very explicit and straight to the point

Very much helpful

Subscribe to our newsletter

You will receive our monthly newsletter and free access to Trip Premium.

Related Articles

Cluster Randomized Trials: Concepts

This blog summarizes the concepts of cluster randomization, and the logistical and statistical considerations while designing a cluster randomized controlled trial.

Expertise-based Randomized Controlled Trials

This blog summarizes the concepts of Expertise-based randomized controlled trials with a focus on the advantages and challenges associated with this type of study.

An introduction to different types of study design

Conducting successful research requires choosing the appropriate study design. This article describes the most common types of designs conducted by researchers.

What Is A Case Control Study?

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Learn about our Editorial Process

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

On This Page:

A case-control study is a research method where two groups of people are compared – those with the condition (cases) and those without (controls). By looking at their past, researchers try to identify what factors might have contributed to the condition in the ‘case’ group.

Explanation

A case-control study looks at people who already have a certain condition (cases) and people who don’t (controls). By comparing these two groups, researchers try to figure out what might have caused the condition. They look into the past to find clues, like habits or experiences, that are different between the two groups.

The “cases” are the individuals with the disease or condition under study, and the “controls” are similar individuals without the disease or condition of interest.

The controls should have similar characteristics (i.e., age, sex, demographic, health status) to the cases to mitigate the effects of confounding variables .

Case-control studies identify any associations between an exposure and an outcome and help researchers form hypotheses about a particular population.

Researchers will first identify the two groups, and then look back in time to investigate which subjects in each group were exposed to the condition.

If the exposure is found more commonly in the cases than the controls, the researcher can hypothesize that the exposure may be linked to the outcome of interest.

Figure: Schematic diagram of case-control study design. Kenneth F. Schulz and David A. Grimes (2002) Case-control studies: research in reverse . The Lancet Volume 359, Issue 9304, 431 – 434

Quick, inexpensive, and simple

Because these studies use already existing data and do not require any follow-up with subjects, they tend to be quicker and cheaper than other types of research. Case-control studies also do not require large sample sizes.

Beneficial for studying rare diseases

Researchers in case-control studies start with a population of people known to have the target disease instead of following a population and waiting to see who develops it. This enables researchers to identify current cases and enroll a sufficient number of patients with a particular rare disease.

Useful for preliminary research

Case-control studies are beneficial for an initial investigation of a suspected risk factor for a condition. The information obtained from cross-sectional studies then enables researchers to conduct further data analyses to explore any relationships in more depth.

Limitations

Subject to recall bias.

Participants might be unable to remember when they were exposed or omit other details that are important for the study. In addition, those with the outcome are more likely to recall and report exposures more clearly than those without the outcome.

Difficulty finding a suitable control group

It is important that the case group and the control group have almost the same characteristics, such as age, gender, demographics, and health status.

Forming an accurate control group can be challenging, so sometimes researchers enroll multiple control groups to bolster the strength of the case-control study.

Do not demonstrate causation

Case-control studies may prove an association between exposures and outcomes, but they can not demonstrate causation.

A case-control study is an observational study where researchers analyzed two groups of people (cases and controls) to look at factors associated with particular diseases or outcomes.

Below are some examples of case-control studies:

- Investigating the impact of exposure to daylight on the health of office workers (Boubekri et al., 2014).

- Comparing serum vitamin D levels in individuals who experience migraine headaches with their matched controls (Togha et al., 2018).

- Analyzing correlations between parental smoking and childhood asthma (Strachan and Cook, 1998).

- Studying the relationship between elevated concentrations of homocysteine and an increased risk of vascular diseases (Ford et al., 2002).

- Assessing the magnitude of the association between Helicobacter pylori and the incidence of gastric cancer (Helicobacter and Cancer Collaborative Group, 2001).

- Evaluating the association between breast cancer risk and saturated fat intake in postmenopausal women (Howe et al., 1990).

Frequently asked questions

1. what’s the difference between a case-control study and a cross-sectional study.

Case-control studies are different from cross-sectional studies in that case-control studies compare groups retrospectively while cross-sectional studies analyze information about a population at a specific point in time.

In cross-sectional studies , researchers are simply examining a group of participants and depicting what already exists in the population.

2. What’s the difference between a case-control study and a longitudinal study?

Case-control studies compare groups retrospectively, while longitudinal studies can compare groups either retrospectively or prospectively.

In a longitudinal study , researchers monitor a population over an extended period of time, and they can be used to study developmental shifts and understand how certain things change as we age.

In addition, case-control studies look at a single subject or a single case, whereas longitudinal studies can be conducted on a large group of subjects.

3. What’s the difference between a case-control study and a retrospective cohort study?

Case-control studies are retrospective as researchers begin with an outcome and trace backward to investigate exposure; however, they differ from retrospective cohort studies.

In a retrospective cohort study , researchers examine a group before any of the subjects have developed the disease, then examine any factors that differed between the individuals who developed the condition and those who did not.

Thus, the outcome is measured after exposure in retrospective cohort studies, whereas the outcome is measured before the exposure in case-control studies.

Boubekri, M., Cheung, I., Reid, K., Wang, C., & Zee, P. (2014). Impact of windows and daylight exposure on overall health and sleep quality of office workers: a case-control pilot study. Journal of Clinical Sleep Medicine: JCSM: Official Publication of the American Academy of Sleep Medicine, 10 (6), 603-611.

Ford, E. S., Smith, S. J., Stroup, D. F., Steinberg, K. K., Mueller, P. W., & Thacker, S. B. (2002). Homocyst (e) ine and cardiovascular disease: a systematic review of the evidence with special emphasis on case-control studies and nested case-control studies. International journal of epidemiology, 31 (1), 59-70.

Helicobacter and Cancer Collaborative Group. (2001). Gastric cancer and Helicobacter pylori: a combined analysis of 12 case control studies nested within prospective cohorts. Gut, 49 (3), 347-353.

Howe, G. R., Hirohata, T., Hislop, T. G., Iscovich, J. M., Yuan, J. M., Katsouyanni, K., … & Shunzhang, Y. (1990). Dietary factors and risk of breast cancer: combined analysis of 12 case—control studies. JNCI: Journal of the National Cancer Institute, 82 (7), 561-569.

Lewallen, S., & Courtright, P. (1998). Epidemiology in practice: case-control studies. Community eye health, 11 (28), 57–58.

Strachan, D. P., & Cook, D. G. (1998). Parental smoking and childhood asthma: longitudinal and case-control studies. Thorax, 53 (3), 204-212.

Tenny, S., Kerndt, C. C., & Hoffman, M. R. (2021). Case Control Studies. In StatPearls . StatPearls Publishing.

Togha, M., Razeghi Jahromi, S., Ghorbani, Z., Martami, F., & Seifishahpar, M. (2018). Serum Vitamin D Status in a Group of Migraine Patients Compared With Healthy Controls: A Case-Control Study. Headache, 58 (10), 1530-1540.

Further Information

- Schulz, K. F., & Grimes, D. A. (2002). Case-control studies: research in reverse. The Lancet, 359(9304), 431-434.

- What is a case-control study?

Related Articles

Research Methodology

Qualitative Data Coding

What Is a Focus Group?

Cross-Cultural Research Methodology In Psychology

What Is Internal Validity In Research?

Research Methodology , Statistics

What Is Face Validity In Research? Importance & How To Measure

Criterion Validity: Definition & Examples

Overview of Analytic Studies

- 1

- | 2

- | 3

- | 4

- | 5

- | 6

Epi_Tools.XLSX

All Modules

Case-Control Studies

Cohort studies have an intuitive logic to them, but they can be very problematic when:

- The outcomes being investigated are rare;

- There is a long time period between the exposure of interest and the development of the disease; or

- It is expensive or very difficult to obtain exposure information from a cohort.

In the first case, the rarity of the disease requires enrollment of very large numbers of people. In the second case, the long period of follow-up requires efforts to keep contact with and collect outcome information from individuals. In all three situations, cost and feasibility become an important concern.

A case-control design offers an alternative that is much more efficient. The goal of a case-control study is the same as that of cohort studies, i.e. to estimate the magnitude of association between an exposure and an outcome. However, case-control studies employ a different sampling strategy that gives them greater efficiency. As with a cohort study, a case-control study attempts to identify all people who have developed the disease of interest in the defined population. This is not because they are inherently more important to estimating an association, but because they are almost always rarer than non-diseased individuals, and one of the requirements of accurate estimation of the association is that there are reasonable numbers of people in both the numerators (cases) and denominators (people or person-time) in the measures of disease frequency for both exposed and reference groups. However, because most of the denominator is made up of people who do not develop disease, the case-control design avoids the need to collect information on the entire population by selecting a sample of the underlying population.

To illustrate this consider the following hypothetical scenario in which the source population is Plymouth County in Massachusetts, which has a total population of 6,647 (hypothetical). Thirteen people in the county have been diagnosed with an unusual disease and seven of them have a particular exposure that is suspected of being an important contributing factor. The chief problem here is that the disease is quite rare.

If I somehow had exposure and outcome information on all of the subjects in the source population and looked at the association using a cohort design, it might look like this:

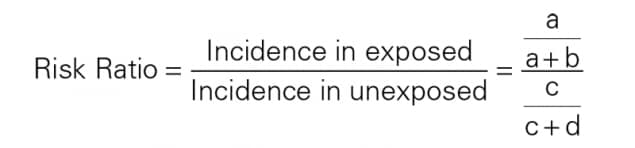

Therefore, the incidence in the exposed individuals would be 7/1,007 = 0.70%, and the incidence in the non-exposed individuals would be 6/5,640 = 0.11%. Consequently, the risk ratio would be 0.70/0.11=6.52, suggesting that those who had the risk factor (exposure) had 6.5 times the risk of getting the disease compared to those without the risk factor. This is a strong association.

In this hypothetical example, I had data on all 6,647 people in the source population, and I could compute the probability of disease (i.e., the risk or incidence) in both the exposed group and the non-exposed group, because I had the denominators for both the exposed and non-exposed groups.

The problem , of course, is that I usually don't have the resources to get the data on all subjects in the population. If I took a random sample of even 5-10% of the population, I might not have any diseased people in my sample.

An alternative approach would be to use surveillance databases or administrative databases to find most or all 13 of the cases in the source population and determine their exposure status. However, instead of enrolling all of the other 5,634 residents, suppose I were to just take a sample of the non-diseased population. In fact, suppose I only took a sample of 1% of the non-diseased people and I then determined their exposure status. The data might look something like this:

With this sampling approach I can no longer compute the probability of disease in each exposure group, because I no longer have the denominators in the last column. In other words, I don't know the exposure distribution for the entire source population. However, the small control sample of non-diseased subjects gives me a way to estimate the exposure distribution in the source population. So, I can't compute the probability of disease in each exposure group, but I can compute the odds of disease in the case-control sample.

The Odds Ratio

The odds of disease among the exposed sample are 7/10, and the odds of disease in the non-exposed sample are 6/56. If I compute the odds ratio, I get (7/10) / (5/56) = 6.56, very close to the risk ratio that I computed from data for the entire population. We will consider odds ratios and case-control studies in much greater depth in a later module. However, for the time being the key things to remember are that:

- The sampling strategy for a case-control study is very different from that of cohort studies, despite the fact that both have the goal of estimating the magnitude of association between the exposure and the outcome.

- In a case-control study there is no "follow-up" period. One starts by identifying diseased subjects and determines their exposure distribution; one then takes a sample of the source population that produced those cases in order to estimate the exposure distribution in the overall source population that produced the cases. [In cohort studies none of the subjects have the outcome at the beginning of the follow-up period.]

- In a case-control study, you cannot measure incidence, because you start with diseased people and non-diseased people, so you cannot calculate relative risk.

- The case-control design is very efficient. In the example above the case-control study of only 79 subjects produced an odds ratio (6.56) that was a very close approximation to the risk ratio (6.52) that was obtained from the data in the entire population.

- Case-control studies are particularly useful when the outcome is rare is uncommon in both exposed and non-exposed people.

The Difference Between "Probability" and "Odds"?

- The odds are defined as the probability that the event will occur divided by the probability that the event will not occur.

If the probability of an event occurring is Y, then the probability of the event not occurring is 1-Y. (Example: If the probability of an event is 0.80 (80%), then the probability that the event will not occur is 1-0.80 = 0.20, or 20%.

The odds of an event represent the ratio of the (probability that the event will occur) / (probability that the event will not occur). This could be expressed as follows:

Odds of event = Y / (1-Y)

So, in this example, if the probability of the event occurring = 0.80, then the odds are 0.80 / (1-0.80) = 0.80/0.20 = 4 (i.e., 4 to 1).

- If a race horse runs 100 races and wins 25 times and loses the other 75 times, the probability of winning is 25/100 = 0.25 or 25%, but the odds of the horse winning are 25/75 = 0.333 or 1 win to 3 loses.

- If the horse runs 100 races and wins 5 and loses the other 95 times, the probability of winning is 0.05 or 5%, and the odds of the horse winning are 5/95 = 0.0526.

- If the horse runs 100 races and wins 50, the probability of winning is 50/100 = 0.50 or 50%, and the odds of winning are 50/50 = 1 (even odds).

- If the horse runs 100 races and wins 80, the probability of winning is 80/100 = 0.80 or 80%, and the odds of winning are 80/20 = 4 to 1.

NOTE that when the probability is low, the odds and the probability are very similar.

On Sept. 8, 2011 the New York Times ran an article on the economy in which the writer began by saying "If history is a guide, the odds that the American economy is falling into a double-dip recession have risen sharply in recent weeks and may even have reached 50 percent." Further down in the article the author quoted the economist who had been interviewed for the story. What the economist had actually said was, "Whether we reach the technical definition [of a double-dip recession] I think is probably close to 50-50."

Question: Was the author correct in saying that the "odds" of a double-dip recession may have reached 50 percent?

return to top | previous page | next page

Content ©2023. All Rights Reserved. Date last modified: August 15, 2023. Wayne W. LaMorte, MD, PhD, MPH

- Chapter 8. Case-control and cross sectional studies

Case-control studies

Selection of cases, selection of controls, ascertainment of exposure, cross sectional studies.

- Chapter 1. What is epidemiology?

- Chapter 2. Quantifying disease in populations

- Chapter 3. Comparing disease rates

- Chapter 4. Measurement error and bias

- Chapter 5. Planning and conducting a survey

- Chapter 6. Ecological studies

- Chapter 7. Longitudinal studies

- Chapter 9. Experimental studies

- Chapter 10. Screening

- Chapter 11. Outbreaks of disease

- Chapter 12. Reading epidemiological reports

- Chapter 13. Further reading

Follow us on

Content links.

- Collections

- Health in South Asia

- Women’s, children’s & adolescents’ health

- News and views

- BMJ Opinion

- Rapid responses

- Editorial staff

- BMJ in the USA

- BMJ in South Asia

- Submit your paper

- BMA members

- Subscribers

- Advertisers and sponsors

Explore BMJ

- Our company

- BMJ Careers

- BMJ Learning

- BMJ Masterclasses

- BMJ Journals

- BMJ Student

- Academic edition of The BMJ

- BMJ Best Practice

- The BMJ Awards

- Email alerts

- Activate subscription

Information

Official websites use .gov

A .gov website belongs to an official government organization in the United States.

Secure .gov websites use HTTPS

A lock ( ) or https:// means you've safely connected to the .gov website. Share sensitive information only on official, secure websites.

Designing and Conducting Analytic Studies in the Field

Brendan R. Jackson And Patricia M. Griffin

Analytic studies can be a key component of field investigations, but beware of an impulse to begin one too quickly. Studies can be time- and resource-intensive, and a hastily constructed study might not answer the correct questions. For example, in a foodborne disease outbreak investigation, if the culprit food is not on your study’s questionnaire, you probably will not be able to implicate it. Analytic studies typically should be used to test hypotheses, not generate them. However, in certain situations, collecting data quickly about patients and a comparison group can be a way to explore multiple hypotheses. In almost all situations, generating hypotheses before designing a study will help you clarify your study objectives and ask better questions.

- Generating Hypotheses

- Study Designs for Testing Hypotheses

- Types of Observational Studies for Testing Hypotheses

- Selection of Controls in Case–Control Studies

- Matching in Case–Control Studies

- Example: Using an Analytic Study to Solve an Outbreak at a Church Potluck Dinner (But Not That Church Potluck)

- Outbreaks with Universal Exposure

The initial steps of an investigation, described in previous chapters, are some of your best sources of hypotheses. Key activities include the following:

- By examining the sex distribution among persons in outbreaks, US enteric disease investigators have learned to suspect a vegetable as the source when most patients are women. (Of course, generalizations do not always hold true!)

- In an outbreak of bloodstream infections caused by Serratia marcescens among patients receiving parenteral nutrition (food administered through an intravenous catheter), investigators had a difficult time finding the source until they noted that none of the 19 cases were among children. Further investigation of the parenteral nutrition administered to adults but not children in that hospital identified contaminated amino acid solution as the source ( 1 ).

- Focus on outliers. Give extra attention to the earliest and latest cases on an epidemic curve and to persons who recently visited the neighborhood where the outbreak is occurring. Interviews with these patients can yield important clues (e.g., by identifying the index case, secondary case, or a narrowed list of common exposures).

- Determine sources of similar outbreaks. Consult health department records, review the literature, and consult experts to learn about previous sources. Be mindful that new sources frequently occur, given ever-changing social, behavioral, and commercial trends.

- Conduct a small number of in-depth, open-ended interviews. When a likely source is not quickly evident, conducting in-depth (often >1 hour), open-ended interviews with a subset of patients (usually 5 to 10) or their caregivers can be the best way to identify possible sources. It helps to begin with a semistructured list of questions designed to help the patient recall the events and exposures of every day during the incubation period. The interview can end with a “shotgun” questionnaire (see activity 6) ( Box 7.1 ). A key component of this technique is that one investigator ideally conducts, or at least participates in, as many interviews as possible (five or more) because reading notes from others’ interviews is no substitute for soliciting and hearing the information first-hand. For example, in a 2009 Escherichia coli O157 outbreak, investigators were initially unable to find the source through general and targeted questionnaires. During open-ended interviews with five patients, the interviewer noted that most reported having eaten strawberries, a particular type of candy, and uncooked prepackaged cookie dough. An analytic study was then conducted that included questions about these exposures; it confirmed cookie dough as the source ( 3 ).

- Ask patients what they think. Patients can have helpful thoughts about the source of their illness. However, be aware that patients often associate their most recent food exposure (e.g., a meal) with illness, whereas the inciting exposure might have been long before.

- Consider administering a shotgun questionnaire. Such questionnaires, which typically ask about hundreds of possible exposures, are best used on a limited number of patients as part of hypothesis-generating interviews. After generating hypotheses, investigators can create a questionnaire targeted to that investigation. Although not an ideal method, shotgun questionnaires can be used by multiple interviewers to obtain data about large numbers of patients ( Box 7.1 ).

In November 2014, a US surveillance system for foodborne diseases (PulseNet) detected a cluster (i.e., a possible outbreak) of listeriosis cases based on similar-appearing Listeria monocytogenes isolates by pulsed-field gel electrophoresis of the isolates. No suspected foods were identified through routine patient interviews by using a Listeria -specific questionnaire with approximately 40 common food sources of listeriosis (e.g., soft cheese and deli meat). The outbreak’s descriptive epidemiology offered no clear leads: the sex distribution was nearly even, the age spectrum was wide, and the case-fatality rate of approximately 20% was typical. Notably, however, 3 of the 35 cases occurred among previously healthy school-aged children, which is highly unusual for listeriosis. Most cases occurred during late October and early November.

Investigators began reinterviewing patients by using a hypothesis-generating shotgun questionnaire with more than 500 foods, but it did not include caramel apples. By comparing the first nine patient responses with data from a published survey of food consumption, strawberries and ice cream emerged as hypotheses. However, several interviewed patients denied having eaten these foods during the month before illness. An investigator then conducted lengthy, open-ended interviews with patients and their family members. During one interview, he asked about special foods eaten during recent holidays, and the patient’s wife replied that her husband had eaten prepackaged caramel apples around Halloween. Although produce items had been implicated in past listeriosis outbreaks, caramel apples seemed an unlikely source. However, the interviewer took note of this connection because he had previously interviewed another patient who reported having eaten caramel apples. This event underscores the importance of one person conducting multiple interviews because that person might make subtle mental connections that may be missed when reviewing other interviewers’ notes. In fact, several other investigators listening to the interview noted this exposure—among hundreds of others—but thought little of it.

In this investigation, the finding of high strawberry and ice cream consumption among patients, coupled with the timing of the outbreak during a holiday period, helped make a sweet food (i.e., caramel apples) seem more plausible as the possible source.

To explore the caramel apple hypothesis, investigators asked five other patients about this exposure, and four reported having eaten them. On the basis of these initial results, investigators designed and administered a targeted questionnaire to patients involved in the outbreak, as well as to patients infected with unrelated strains of L. monocytogenes (i.e., a case–case study). This study, combined with testing of apples and the apple packing facility, confirmed that caramel apples were the source (2). Had a single interviewer performed multiple open-ended interviews to generate hypotheses before the shotgun questionnaire, the outbreak might have been solved sooner.

As evident in public health and clinical guidelines, randomized controlled trials (e.g., trials of drugs, vaccines, and community-level interventions) are the reference standard for epidemiology, providing the highest level of evidence. However, such studies are not possible in certain situations, including outbreak investigations. Instead, investigators must rely on observational studies, which can provide sufficient evidence for public health action. In observational studies, the epidemiologist documents rather than determines the exposures, quantifying the statistical association between exposure and disease. Here again, the key when designing such studies is to obtain a relevant comparison group for the patients ( Box 7.2 ).

Because field analytic studies are used to quantify the association between exposure and disease, defining what is meant by exposure and disease is essential. Exposure is used broadly, meaning demographic characteristics, genetic or immunologic makeup, behaviors, environmental exposures, and other factors that might influence a person’s risk for disease. Because precise information can help accurately estimate an exposure’s effect on disease, exposure measures should be as objective and standard as possible. Developing a measure of exposure can be conceptually straightforward for an exposure that is a relatively discrete event or characteristic—for example, whether a person received a spinal injection with steroid medication compounded at a specific pharmacy or whether a person received a typhoid vaccination during the year before international travel. Although these exposures might be straightforward in theory, they can be subject to interpretation in practice. Should a patient injected with a medication from an unknown pharmacy be considered exposed? Whatever decision is made should be documented and applied consistently.

Additionally, exposures often are subject to the whims of memory. Memory aids (e.g., restaurant menus, vaccination cards, credit card receipts, and shopper cards) can be helpful. More than just a binary yes or no, the dose of an exposure can also be enlightening. For example, in an outbreak of fungal bloodstream infections linked to contaminated intravenous saline flushes administered at an oncology clinic, affected patients had received a greater number of flushes than unaffected patients ( 4 ). Similarly, in an outbreak of Listeria monocytogenes infections, the association with deli meat became apparent only when the exposure evaluated was consumption of deli meat more than twice a week ( 5 ).

Defining disease (e.g., does a person have botulism?) might sound simple, but often it is not; read more about making and applying disease case definitions in Chapter 3 .

Three types of observational studies are commonly used in the field. All are best performed by using a standard questionnaire specific for that investigation, developed on the basis of hypothesis-generating interviews.

Observational Study Type 1: Cohort

In concept, a cohort study, like an experimental study, begins with a group of persons without the disease under study, but with different exposure experiences, and follows them over time to find out whether they experience the disease or health condition of interest. However, in a cohort study, each person’s exposure is merely recorded rather than assigned randomly by the investigator. Then the occurrence of disease among persons with different exposures is compared to assess whether the exposures are associated with increased risk for disease. Cohort studies can be prospective or retrospective.

Prospective Cohort Studies

A prospective cohort study enrolls participants before they experience the disease or condition of interest. The enrollees are then followed over time for occurrence of the disease or condition. The unexposed or lowest exposure group serves as the comparison group, providing an estimate of the baseline or expected amount of disease. An example of a prospective cohort study is the Framingham Heart Study. By assessing the exposures of an original cohort of more than 5,000 adults without cardiovascular disease (CVD), beginning in 1948 and following them over time, the study was the first to identify common CVD risk factors ( 6 ). Each case of CVD identified after enrollment was counted as an incident case. Incidence was then quantified as the number of cases divided by the sum of time that each person was followed (incidence rate) or as the number of cases divided by the number of participants being followed (attack rate or risk or i ncidence proportion). In field epidemiology, prospective cohort studies also often involve a group of persons who have had a known exposure (e.g., survived the World Trade Center attack on September 11, 2001 [ 7 ]) and who are then followed to examine the risk for subsequent illnesses with long incubation or latency periods.

Retrospective Cohort Studies

A retrospective cohort study enrolls a defined participant group after the disease or condition of interest has occurred. In field epidemiology, these studies are more common than prospective studies. The population affected is often well-defined (e.g., banquet attendees, a particular school’s students, or workers in a certain industry). Investigators elicit exposure histories and compare disease incidence among persons with different exposures or exposure levels.

Observational Study Type 2: Case–Control

In a case–control study, the investigator must identify a comparison group of control persons who have had similar opportunities for exposure as the case-patients. Case–control studies are commonly performed in field epidemiology when a cohort study is impractical (e.g., no defined cohort or too many non-ill persons in the group to interview). Whereas a cohort study proceeds conceptually from exposure to disease or condition, a case–control study begins conceptually with the disease or condition and looks backward at exposures. Excluding controls by symptoms alone might not guarantee that they do not have mild cases of the illness under investigation. Table 7.1 presents selected key differences between a case–control and retrospective cohort study.

Observational Study Type 3: Case–Case

In case–case studies, a group of patients with the same or similar disease serve as a comparison group (8). This method might require molecular subtyping of the suspected pathogen to distinguish outbreak-associated cases from other cases and is especially useful when relevant controls are difficult to identify. For example, controls for an investigation of Listeria illnesses typically are patients with immunocompromising conditions (e.g., cancer or corticosteroid use) who might be difficult to identify among the general population. Patients with Listeria isolates of a different subtype than the outbreak strain can serve as comparisons to help reduce bias when comparing food exposures. However, patients with similar illnesses can have similar exposures, which can introduce a bias, making identifying the source more difficult. Moreover, other considerations should influence the choice of a comparison group. If most outbreak-associated case-patients are from a single neighborhood or are of a certain race/ethnicity, other patients with listeriosis from across the country will serve as an inadequate comparison group.

Considerations for Selecting Controls

Selecting relevant controls is one of the most important considerations when designing a case–control study. Several key considerations are presented here; consult other resources for in-depth discussion ( 9,10 ). Ideally, controls should

- Thoroughly reflect the source population from which case-patients arose, and

- Provide a good estimate of the level of exposure one would expect from that population. Sometimes the source population is not so obvious, and a case–control study using controls from the general population might be needed to implicate a general exposure (e.g., visiting a specific clinic, restaurant, or fair). The investigation can then focus on specific exposures among persons with the general exposure (see also next section).

Controls should be chosen independently of any specific exposure under evaluation. If you select controls on the basis of lack of exposure, you are likely to find an association between illness and that exposure regardless of whether one exists. Also important is selecting controls from a source population in a way that minimizes confounding (see Chapter 8 ), which is the existence of a factor (e.g., annual income) that, by being associated with both exposure and disease, can affect the associations you are trying to examine.

When trying to enroll controls who reflect the source population, try to avoid overmatching (i.e., enrolling controls who are too similar to case-patients, resulting in fewer differences among case-patients and controls than ought to exist and decreased ability to identify exposure–disease associations). When conducting case–control studies in hospitals and other healthcare settings, ensure that controls do not have other diseases linked to the exposure under study.

Commonly Used Control Selection Methods

When an outbreak does not affect a defined population (e.g., potluck dinner attendees) but rather the community at large, a range of options can be used to determine how to select controls from a large group of persons.

- Random-digit dialing . This method, which involves selecting controls by using a system that randomly selects telephone numbers from a directory, has been a staple of US outbreak investigations. In recent years, however, declining response rates because of increasing use of caller identification and cellular phones and lack of readily available directory listings of cellular phone numbers by geographic area have made this method increasingly difficult. Even when this method was most useful, often 50 or more numbers needed to be dialed to reach one household or person who both answered and provided a usable match for the case-patient. Commercial databases that include cellular phone numbers have been used successfully to partially address this problem, but the method remains time-consuming ( 11 ).

- Random or systematic sampling from a list . For investigations in settings where a roster is available (e.g., attendees at a resort on certain dates), controls can be selected by either random or systematic sampling. Government records (e.g., motor vehicle, voter, or tax records) can provide lists of possible controls, but they might not be representative of the population being studied ( 11 ). For random sampling, a table or computer-generated list of random numbers can be used to select every n th persons to contact (e.g., every 12th or 13th).

- Neighborhood . Recruiting controls from the same neighborhood as case-patients (i.e., neighborhood matching) has commonly been used during case–control studies, particularly in low-and middle-income countries. For example, during an outbreak of typhoid fever in Tajikistan ( 12 ), investigators recruited controls by going door-to-door down a street, starting at a case-patient’s house; a study of cholera in Haiti used a similar method ( 13 ). Typically, the immediately neighboring households are skipped to prevent overmatching.

- Patients’ friends or relatives . Using friends and relatives as controls can be an effective technique when the characteristics of case-patients (e.g., very young children) make finding controls by a random method difficult. Typically, the investigator interviews a patient or his or her parent, then asks for the names and contact information for more friends or relatives who are needed as controls. One advantage is that the friends of an ill person are usually willing to participate, knowing their cooperation can help solve the puzzle. However, because they can have similar personal habits and preferences as patients, their exposures might be similar. Such overmatching can decrease the likelihood of finding the source of the illness or condition.

- Databases of persons with exposure information . Sources of data on persons with exposure information include survey data (e.g., FoodNet Population Survey [ 14 ]), public health databases of patients with other illnesses or a different subtype of the same illness, and previous studies. ( Chapter 4 describes additional sources.)

When considering outside data sources, investigators must determine whether those data provide an appropriate comparison group. For example, persons in surveys might differ from case-patients in ways that are impossible to determine. Other patients might be so similar to case-patients that risky exposures are unidentifiable, or they might be so different that exposures identified as risks are not true risks.

To help control for confounding, controls can be matched to case-patients on characteristics specified by investigators, including age group, sex, race/ethnicity, and neighborhood. Such matching does not itself reduce confounding, but it enables greater efficiency when matched analyses are performed that do ( 15 ). When deciding to match, however, be judicious. Matching on too many characteristics can make controls difficult to find (making a tough process even harder). Imagine calling hundreds of random telephone numbers trying to find a man of a particular ethnicity aged 50–54 years who is then willing to answer your questions. Also, remember not to match on the exposure of interest or on any other characteristic you wish to examine. Matched case–control study data typically necessitate a matched analysis (e.g., conditional logistic regression) ( 15 ).

Matching Types

The two main types of matching are pair matching and frequency matching.

Pair Matching

In pair matching, each control is matched to a specific case-patient. This method can be helpful logistically because it allows matching by friends or relatives, neighborhood, or telephone exchange, but finding controls who meet specific criteria can be burdensome.

Frequency Matching

In frequency matching, also called category matching , controls are matched to case-patients in proportion to the distribution of a characteristic among case-patients. For example, if 20% of case-patients are children aged 5–18 years, 50% are adults aged 19–49 years, and 30% are adults 50 years or older, controls should be enrolled in similar proportions. This method works best when most case-patients have been identified before control selection begins. It is more efficient than pair matching because a person identified as a possible control who might not meet the criteria for matching a particular case-patient might meet criteria for one of the case-patient groups.

Number of Controls

Most field case–control studies use control-to-case-patient ratios of 1:1, 2:1, or 3:1. Enrolling more than one control per case-patient can increase study power, which might be needed to detect a statistically significant difference in exposure between case-patients and controls, particularly when an outbreak involves a limited number of cases. The incremental gain of adding more controls beyond three or four is small because study power begins to plateau. Note that not all case-patients need to have the same number of controls. Sample size calculations can help in estimating a target number of controls to enroll, although sample sizes in certain field investigations are limited more by time and resource constraints. Still, estimating study power under a range of scenarios is wise because an analytic study might not be worth doing if you have little chance of detecting a statistically significant association. Sample size calculators for unmatched case–control studies are available at http://www.openepi.com and in the StatCalc function of Epi Info ( https://www.cdc.gov/epiinfo ).

More than One Control Group

Sometimes the choice of a control group is so vexing that investigators decide to use more than one type of control group (e.g., a hospital-based group and a community group). If the two control groups provide similar results and conclusions about risk factors for disease, the credibility of the findings is increased. In contrast, if the two control groups yield conflicting results, interpretation becomes more difficult.

Since the 1940s, field epidemiology students have studied a classic outbreak of gastrointestinal illness at a church potluck dinner in Oswego, New York ( 16 ). However, the case study presented here, used to illustrate study designs, is a different potluck dinner.

In April 2015, an astute neurologist in Lancaster, Ohio, contacted the local health department about a patient in the emergency department with a suspected case of botulism. Within 2 hours, four more patients arrived with similar symptoms, including blurred vision and shortness of breath. Health officials immediately recognized this as a botulism outbreak.

- If the source is a widely distributed commercial product, then the population to study is persons across the United States and possibly abroad.

- If the source is airborne, then the population to study is residents of a single city or area.

- If the source is food from a restaurant, then the population to study is predominantly local residents and some travelers.

- If the source is a meal at a workplace or social setting, then the population to study is meal attendees.

- If the source is a meal at home, then the population to study is household members and any guests.

Descriptive epidemiology and questioning of the case-patients revealed that all had eaten at the same church potluck dinner and had no other common exposures, making the potluck the likely exposure site and attendees the likely source population. Thus, an analytic study would be targeted at potluck attendees, although investigators must remain alert to case-patients among nonattendees. As initial interviews were conducted, more cases of botulism were being diagnosed, quickly increasing to more than 25. The source of the outbreak needed to be identified rapidly to halt further exposure and illness.

- List of foods served at the potluck.

- Approximate number of attendees.

- A case definition.

- Information from 5–10 hypothesis-generating interviews with a few case-patients or their family members.

- A cohort study would be a reasonable option because a defined group exists (i.e., a cohort) of exposed persons who could be interviewed in a reasonable amount of time. The study would be retrospective because the outcome (i.e., botulism) has already occurred, and investigators could assess exposures retrospectively (i.e., foods eaten at the potluck) by interviewing attendees.

- In a cohort study, investigators can calculate the attack rate for botulism among potluck attendees who reported having eaten each food and for those who had not. For example, if 20 of the 30 attendees who had eaten a particular food (e.g., potato salad) had botulism, you would calculate the attack rate by dividing 20 (corresponding to cell a in Handout 7.1 ) by 30 (total exposed, or a + b), yielding approximately 67%. If 5 of the 45 attendees who had not eaten potato salad had botulism, the attack rate among the unexposed—5 / 45, corresponding to c/ (c + d)—would be approximately 11%. The risk ratio would be 6, which is calculated by dividing the attack rate among the exposed (67%) by the attack rate among the unexposed (11%).

- A case–control study would be the most feasible option because the entire cohort could not be identified and because the large number of attendees could make interviewing them all difficult. Rather than interview all non-ill persons, a subset could be interviewed as control subjects.

- The method of control subject selection should be considered carefully. If all attendees are not interviewed, determining the risk for botulism among the exposed and unexposed is impossible because investigators would not know the exposures for all non-ill attendees. Instead of risk, investigators calculate the odds of exposure, which can approximate risk. For example, if 20 (80%) of 25 case-patients had eaten potato salad, the odds of potato salad exposure among case-patients would be 20/ 5 = 4 (exposed/ unexposed, or a/ c in Handout 7.2 ). If 10 (20%) of 50 selected controls had eaten potato salad, the odds of exposure among control subjects would be 10/ 40 = 0.25 (or b/ d in Handout 7.2). Dividing the odds of exposure among the case-patients (a/ c) by the odds of exposure among control subjects (b / d) yields an odds ratio of 16 (4/ 0.25). The odds ratio is not a true measure of risk, but it can be used to implicate a food. An odds ratio can approximate a risk ratio when the outcome or disease is rare (e.g., roughly <5% of a population). In such cases, a/ b is similar to a/ (a + b). The odds ratio is typically higher than the risk ratio when >5% of exposed persons in the analysis have the illness.

In the actual outbreak, 29 (38%) of 77 potluck attendees had botulism. The investigators performed a cohort study, interviewing 75 of the 77 attendees about 52 foods served ( 17 ). The attack rate among persons who had eaten potato salad was significantly and substantially higher than the attack rate among those who had not, with a risk ratio of 14 (95% confidence interval 5–42). One of the potato salads served was made with incorrectly home-canned potatoes (a known source of botulinum toxin), and samples of discarded potato salad tested positive for botulinum toxin, supporting the findings of the analytic study. (Of note, persons often blame potato salad for causing illness when, in fact, it rarely is a source. This outbreak was a notable exception.)

In field epidemiology, the link between exposure and illness is often so strong that it is evident despite such inherent study limitations as small sample size and exposure misclassification. In this outbreak, a few of the patients with botulism reported not having eaten potato salad, and some of the attendees without botulism reported having eaten it. In epidemiologic studies, you rarely find 100% concordance between exposure and outcome for various reasons, including incomplete or erroneous recall because remembering everything eaten is difficult. Here, cross-contamination of potato salad with other foods might have helped explain cases among patients who had not eaten potato salad because only a small amount of botulinum toxin is needed to produce illness.

Two-by-Two Table to Calculate the Relative Risk, or Risk Ratio, in Cohort Studies

Two- by- two tables are covered in more detail in Chapter 8 .

Two-by-Two Table to Calculate the Odds Ratio in Case–Control Studies

A risk ratio cannot be calculated from a case–control study because true attack rates cannot be calculated.

What kind of study would you design if your hypothesis-generating interviews lead you to believe that everyone, or nearly everyone, was exposed to the same suspected infection source? How would you test hypotheses if all barbecue attendees, ill and non-ill, had eaten the chicken or if all town residents had drunk municipal tap water, and no unexposed group exists for comparison? A few factors that might be of help are the exposure timing (e.g., a particularly undercooked batch of barbeque), the exposure place (e.g., a section of the water system more contaminated than others), and the exposure dose (e.g., number of chicken pieces eaten or glasses of water drunk). Including questions about the time, place, and frequency of highly suspected exposures in a questionnaire can improve the chances of detecting a difference ( 18 ).

Cohort, case–control, and case–case studies are the types of analytic studies that field epidemiologists use most often. They are best used as mechanisms for evaluating—quantifying and testing—hypotheses identified in earlier phases of the investigation. Cohort studies, which are oriented conceptually from exposure to disease, are appropriate in settings in which an entire population is well-defined and available for enrollment (e.g., guests at a wedding reception). Cohort studies are also appropriate when well-defined groups can be enrolled by exposure status (e.g., employees working in different parts of a manufacturing plant). Case–control studies, in contrast, are useful when the population is less clearly defined. Case–control studies, oriented from disease to exposure, identify persons with disease and a comparable group of persons without disease (controls). Then the exposure experiences of the two groups are compared. Case–case studies are similar to case–control studies, except that controls have an illness not linked to the outbreak. Case–control studies are probably the type most often appropriate for field investigations. Although conceptually straightforward, the design of an effective epidemiologic study requires many careful decisions. Taking the time needed to develop good hypotheses can result in a questionnaire that is useful for identifying risk factors. The choice of an appropriate comparison group, how many controls per case-patient to enroll, whether to match, and how best to avoid potential biases are all crucial decisions for a successful study.

This chapter relies heavily on the work of Richard C. Dicker, who authored this chapter in the previous edition.

- Gupta N, Hocevar SN, Moulton-Meissner HA, et al. Outbreak of Serratia marcescens bloodstream infections in patients receiving parenteral nutrition prepared by a compounding pharmacy. Clin Infect Dis. 2014;59:1–8.

- Angelo K, Conrad A, Saupe A, et al. Multistate outbreak of Listeria monocytogenes infections linked to whole apples used in commercially produced, prepackaged caramel apples: United States, 2014–2015. Epidemiol Infect. 2017;145:848–56.

- Neil KP, Biggerstaff G, MacDonald JK, et al. A novel vehicle for transmission of Escherichia coli O157: H7 to humans: multistate outbreak of E. coli O157: H7 infections associated with consumption of ready-to-bake commercial prepackaged cookie dough—United States, 2009. Clin Infect Dis. 2012;54:511–8.

- Vasquez AM, Lake J, Ngai S, et al. Notes from the field: fungal bloodstream infections associated with a compounded intravenous medication at an outpatient oncology clinic—New York City, 2016. MMWR. 2016;65:1274–5.

- Gottlieb SL, Newbern EC, Griffin PM, et al. Multistate outbreak of listeriosis linked to turkey deli meat and subsequent changes in US regulatory policy. Clin Infect Dis. 2006;42:29–36.

- Framingham Heart Study: A Project of the National Heart, Lung, and Blood Institute and Boston University. Framingham, MA: Framingham Heart Study; 2017. https://www.framinghamheartstudy.org/

- Jordan HT, Brackbill RM, Cone JE, et al. Mortality among survivors of the Sept 11, 2001, World Trade Center disaster: results from the World Trade Center Health Registry cohort. Lancet. 2011;378:879–87.

- McCarthy N, Giesecke J. Case– case comparisons to study causation of common infectious diseases. Int J Epidemiol. 1999;28:764–8.

- Rothman KJ, Greenland S. Modern epidemiology . 3rd ed. Philadelphia: Lippincott Williams & Wilkins; 2008.

- Wacholder S, McLaughlin JK, Silverman DT, Mandel JS. Selection of controls in case–control studies. I. Principles. Am J Epidemiol. 1992;135:1019–28.

- Chintapalli S, Goodman M, Allen M, et al. Assessment of a commercial searchable population directory as a means of selecting controls for case–control studies. Public Health Rep. 2009;124:378–83.

- Centers for Disease Control and Prevention. Epidemiologic case studies: typhoid in Tajikistan. http://www.cdc.gov/epicasestudies/classroom_typhoid.html

- Dunkle SE, Mba-Jonas A, Loharikar A, Fouche B, Peck M, Ayers T. Epidemic cholera in a crowded urban environment, Port-au-Prince, Haiti. Emerg Infect Dis. 2011;17:2143–6.

- Centers for Disease Control and Prevention. Foodborne Diseases Active Surveillance Network (FoodNet): population survey. http://www.cdc.gov/foodnet/surveys/population.html

- Pearce N. Analysis of matched case–control studies. BMJ. 2016;352:1969.

- Centers for Disease Control and Prevention. Case studies in applied epidemiology: Oswego: an outbreak of gastrointestinal illness following a church supper. http://www.cdc.gov/eis/casestudies.html

- McCarty CL, Angelo K, Beer KD, et al. Notes from the field.: large outbreak of botulism associated with a church potluck meal—Ohio, 2015. MMWR. 2015;64:802–3.

- Tostmann A, Bousema JT, Oliver I. Investigation of outbreaks complicated by universal exposure. Emerg Infect Dis. 2012;18:1717–22.

< Previous Chapter 6: Describing Epidemiologic Data

Next Chapter 8: Analayzing and Interpreting Data >

The fellowship application period will be open March 1-June 5, 2024.

The host site application period is closed.

For questions about the EIS program, please contact us directly at [email protected] .

- Laboratory Leadership Service (LLS)

- Fellowships and Training Opportunities

- Division of Workforce Development

Exit Notification / Disclaimer Policy

- The Centers for Disease Control and Prevention (CDC) cannot attest to the accuracy of a non-federal website.

- Linking to a non-federal website does not constitute an endorsement by CDC or any of its employees of the sponsors or the information and products presented on the website.

- You will be subject to the destination website's privacy policy when you follow the link.

- CDC is not responsible for Section 508 compliance (accessibility) on other federal or private website.

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- My Account Login

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Open access

- Published: 29 May 2024

A case–control study on pre-, peri-, and neonatal risk factors associated with autism spectrum disorder among Armenian children

- Meri Mkhitaryan 1 ,

- Tamara Avetisyan 2 , 3 ,

- Anna Mkhoyan 4 ,

- Larisa Avetisyan 2 , 5 &

- Konstantin Yenkoyan 1

Scientific Reports volume 14 , Article number: 12308 ( 2024 ) Cite this article

Metrics details

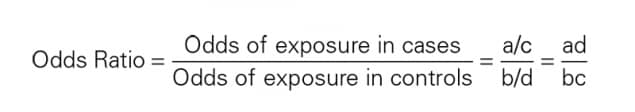

- Autism spectrum disorders

- Public health

- Risk factors

We aimed to investigate the role of pre-, peri- and neonatal risk factors in the development of autism spectrum disorder (ASD) among Armenian children with the goal of detecting and addressing modifiable risk factors to reduce ASD incidence. For this purpose a retrospective case–control study using a random proportional sample of Armenian children with ASD to assess associations between various factors and ASD was conducted. The study was approved by the local ethical committee, and parental written consent was obtained. A total of 168 children with ASD and 329 controls were included in the analysis. Multivariable logistic regression analysis revealed that male gender, maternal weight gain, use of MgB6, self-reported stress during the pregnancy, pregnancy with complications, as well as use of labor-inducing drugs were associated with a significant increase in the odds of ASD, whereas Duphaston use during pregnancy, the longer interpregnancy interval and birth height were associated with decreased odds of ASD. These findings are pertinent as many identified factors may be preventable or modifiable, underscoring the importance of timely and appropriate public health strategies aimed at disease prevention in pregnant women to reduce ASD incidence.

Introduction

Autism spectrum disorder is a neurodevelopmental disorder by the Diagnostic and Statistical Manual of Mental Disorders, the 5th Edition (DSM-5). It is identified by limited repeating patterns of behavior, activities, and interests, as well as impaired social interaction and communication 1 . A systematic review of research articles spanning from 2012 to 2021 indicates that the worldwide median prevalence of ASD in children stands at 1% 2 . Nevertheless, this reported percentage may not fully capture the actual prevalence of ASD in low- and middle-income nations, potentially leading to underestimations. In 2016, data compiled by the CDC's Autism and Developmental Disabilities Monitoring (ADDM) Network revealed that approximately one out of 54 children in the United States (one out of 34 boys and one out of 144 girls) received a diagnosis of ASD. This marks a ten percent increase from the reported rate of one out of 59 in 2014, a 105 percent increase from one out of 110 in 2006, and a 176 percent increase from one out of 150 in 2000 3 . According to the most recent update from the CDC’s ADDM Network, one out of 36 (2.8%) 8-year-old children has been diagnosed with ASD. These latest statistics exceed the 2018 findings, which indicated a rate of 1 in 44 (2.3%) 4 . To our understanding, there is no existing registry for ASD in the Republic of Armenia (RA). Additionally, there is no available data concerning the incidence and prevalence of ASD in the country.

The etiology of ASD remains unclear despite substantial research on the disorder; yet, important advances have been made in identifying some of the disorder's genetic and neurobiological underpinnings. It has been discovered that ASD is heritable, with environmental variables also being involved 5 , 6 , 7 . According to certain research, ASD is associated with both hereditary and environmental factors 5 , 8 , 9 . It is especially important to identify environmental risk factors because, unlike genetic risk factors, they can be prevented.

There are more than 20 pre-, peri- and neonatal risk factors associated with ASD 10 , 11 , 12 . Prenatal risk factors that have been associated with ASD involve parental age 13 , interpregnancy interval 14 , 15 , immune factors (such as autoimmune diseases, both viral and bacterial infections during pregnancy) 16 , 17 , medication use (especially antidepressants, anti-asthmatics, and anti-epileptics) 18 , 19 , 20 , maternal metabolic conditions (such as diabetes, gestational weight gain, and hypertension) 21 , 22 , 23 , and maternal dietary factors (such as folic acid and other supplement use, maternal iron (Fe) intake, as well as maternal vitamin D levels) 24 , 25 , 26 , 27 , 28 , 29 .

Numerous studies indicate that an increased risk of ASD is linked to several perinatal and neonatal factors. These factors include small gestational age or preterm birth, gestational small or large size, the use of labor and delivery drugs 30 , 31 , 32 , 33 . The risk of ASD associated with cesarean delivery is also a subject of continuous discussion 34 , 35 , 36 . Overall, there is no apparent link between assisted conception and a notably higher risk of ASD, however some particular therapies might make ASD more likely.

This study aimed to determine main pre-, peri- and neonatal risk factors linked to ASD among Armenian children. The following research questions were derived to address the objectives of the study:

What are the primary prenatal risk factors associated with the development of ASD among Armenian children?

How do perinatal factors such as maternal complications during childbirth, labor mode, labor interventions, use of labor-inducing drugs, contribute to the risk of ASD in Armenian children?

What neonatal factors, such as birth weight and gestational age, are linked to the likelihood of ASD diagnosis among Armenian children?

How do socio-demographic factors, such as parental education, gender of the child, number of kids in the family, sequence of the kid, influence the relationship between pre-, peri-, and neonatal risk factors and risk of ASD among Armenian children?

To the best of our knowledge, this was the first study of its kind conducted in Armenia that focused on a variety of factors linked to ASD.

The analysis encompassed a total sample of 497 participants, consisting of 168 children diagnosed with ASD and 329 children without ASD. The descriptive analysis revealed significant differences between the cases and controls on several socio demographic variables as well as prenatal, peri- and neonatal risk factors (see Tables 1 , 2 , 3 and 4 ).

The summary of socio demographic characteristics (Table 1 ). Among the cases (the ASD group), the distribution of gender of the child was significantly different to that in the control group. More specifically, while the distribution of male and female were balanced in the control group (52.89% and 47.11% respectively), the proportion of male children was significantly higher in the ASD group (82.14% and 17.86% respectively, p < 0.01). Furthermore, the number of children in the families of cases and controls were slightly different. While the proportion of cases and controls who had two children were similar, families with one child were slightly higher in the ASD group compared to the control group (29.94% and 17.02% respectively, p < 0.01). This picture is reversed with respect to the number of families with more than two children (16.17% and 29.79% respectively). A higher percentage of ASD cases are the first child in the family compared to controls (67.86% vs. 49.54%, p < 0.01). The proportion of non-married families (those that reported to be single, widowed, divorced etc.) were higher in the ASD group compared to the control group (10.24% and 4.28% respectively, p < 0.05). The distribution of the level of educational attainment of the parents were also different between the groups. More specifically, the prevalence of university degree among the cases were somewhat lower compared to that in the control group.

The summary of prenatal risk factors (Table 2 ). With respect to prenatal risk factors, there were significant differences between the cases and controls in interpregnancy intervals, self-reported complications and diseases, medication use, vitamin D levels, maternal weight gain, and the self-reported stress during pregnancy. More specifically, the cases had on average lower interpregnancy intervals compared to the controls (M = 12.9 and M = 23.7 months respectively, p < 0.01). The cases more frequently reported to have had complications during the pregnancy compared to the controls (42.86% and 8.54% respectively, p < 0.01). The prevalence of reported infectious diseases, other diseases and anemia during the pregnancy were also somewhat higher among the cases compared to the control group. The use of medications was higher among the cases compared to the control group (41.67% and 17.74% respectively, p < 0.01). Various medications including vitamins, anticoagulants, Paracetamol, MgB6, Duphaston, iron preparation, No-spa, calcium preparation, antibiotics, and Utrogestan showed significant differences in usage between cases and controls (all p < 0.05) (Table 3 ). The maternal weight gain among the cases was on average higher among the cases compared to the control group (M = 15.4 and M = 13.9 kg respectively, p < 0.05). The self-reported stress was also more frequent among the cases compared to the controls (56.02 and 10.98% respectively, p < 0.01). Specifically, comparing data on self-reported stress during different pregnancy periods, it was obvious that 47.06% of mothers of cases and 91.25% of mothers in the control group reported no stress experienced during pregnancy. During the first trimester, 14.38% of mothers with cases of autism reported stress, whereas only 0.94% of mothers in the control group reported stress. In the second trimester, 11.11% of mothers with cases of autism reported stress, compared to 4.06% of mothers in the control group. During the third trimester, 8.50% of mothers with cases of autism reported stress, while 1.88% of mothers in the control group reported stress. Across the entire pregnancy, 18.95% of mothers with cases of autism reported stress, compared to 1.88% of mothers in the control group. The differences in stress levels between the two groups were statistically significant, indicating a potential link between maternal stress during pregnancy and the odds of autism spectrum disorder in offspring.