- Conferences

- Last updated June 29, 2022

- In AI Mysteries

XOR problem with neural networks: An explanation for beginners

- Published on June 29, 2022

- by Darshan M

Among various logical gates, the XOR or also known as the “exclusive or” problem is one of the logical operations when performed on binary inputs that yield output for different combinations of input, and for the same combination of input no output is produced. The outputs generated by the XOR logic are not linearly separable in the hyperplane. So In this article let us see what is the XOR logic and how to integrate the XOR logic using neural networks.

Table of Contents

What is xor operating logic, the linear separability of points, why can’t perceptrons solve the xor problem, how to solve the xor problem with neural networks.

Let us try to understand the XOR operating logic using a truth table.

From the below truth table it can be inferred that XOR produces an output for different states of inputs and for the same inputs the XOR logic does not produce any output. The Output of XOR logic is yielded by the equation as shown below.

Output= X.Y’+X’.Y

The XOR gate can be usually termed as a combination of NOT and AND gates and this type of logic finds its vast application in cryptography and fault tolerance. The logical diagram of an XOR gate is shown below.

Are you looking for a complete repository of Python libraries used in data science, check out here .

Linear separability of points is the ability to classify the data points in the hyperplane by avoiding the overlapping of the classes in the planes. Each of the classes should fall above or below the separating line and then they are termed as linearly separable data points. With respect to logical gates operations like AND or OR the outputs generated by this logic are linearly separable in the hyperplane

The linear separable data points appear to be as shown below.

So here we can see that the pink dots and red triangle points in the plot do not overlap each other and the linear line is easily separating the two classes where the upper boundary of the plot can be considered as one classification and the below region can be considered as the other region of classification.

Need for linear separability in neural networks

Linear separability is required in neural networks is required as basic operations of neural networks would be in N-dimensional space and the data points of the neural networks have to be linearly separable to eradicate the issues with wrong weight updation and wrong classifications Linear separability of data is also considered as one of the prerequisites which help in the easy interpretation of input spaces into points whether the network is positive and negative and linearly separate the data points in the hyperplane.

Perceptrons are mainly termed as “linear classifiers” and can be used only for linear separable use cases and XOR is one of the logical operations which are not linearly separable as the data points will overlap the data points of the linear line or different classes occur on a single side of the linear line.

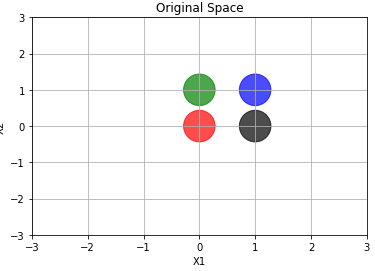

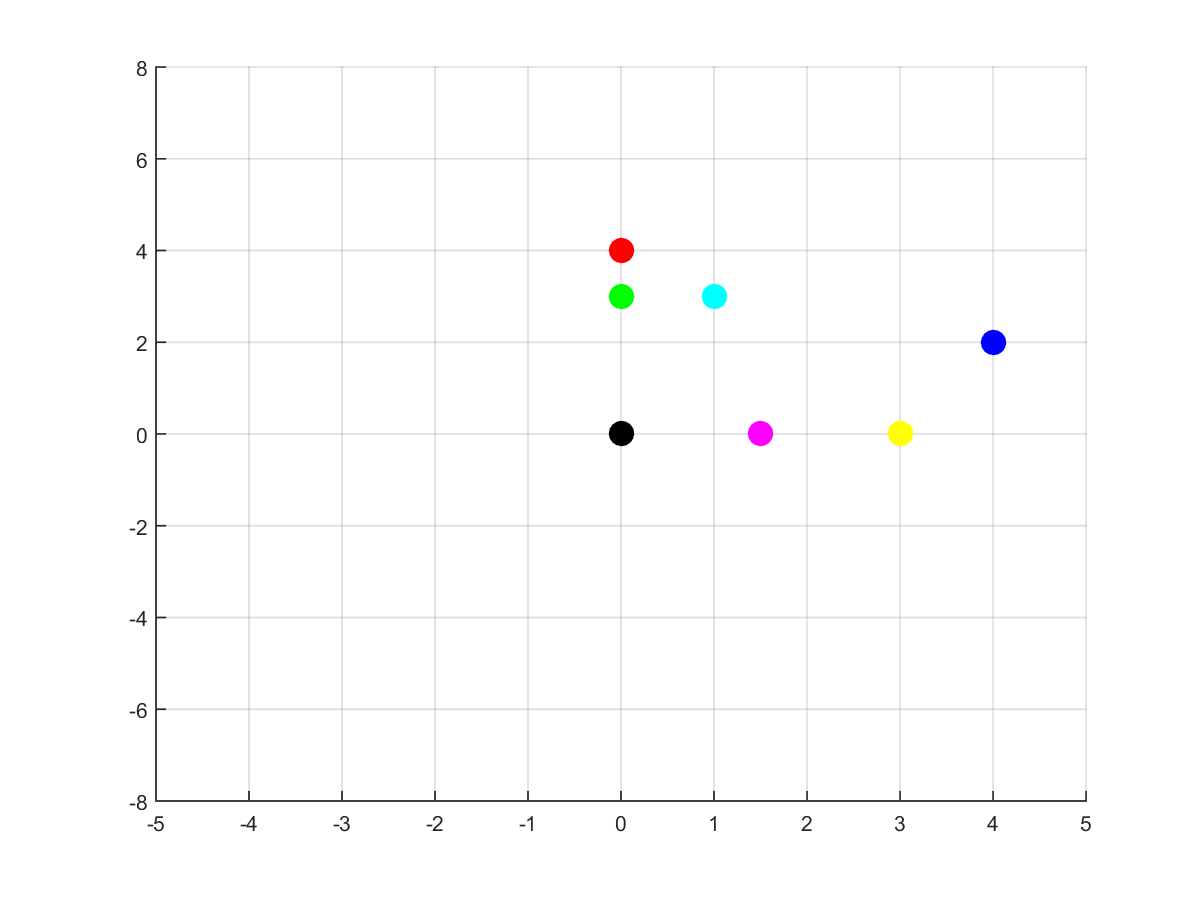

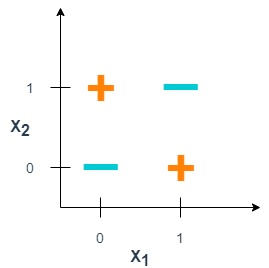

Let us understand why perceptrons cannot be used for XOR logic using the outputs generated by the XOR logic and the corresponding graph for XOR logic as shown below.

In the above figure, we can see that above the linear separable line the red triangle is overlapping with the pink dot and linear separability of data points is not possible using the XOR logic. So this is where multiple neurons also termed as Multi-Layer Perceptron are used with a hidden layer to induce some bias while weight updation and yield linear separability of data points using the XOR logic. So now let us understand how to solve the XOR problem with neural networks.

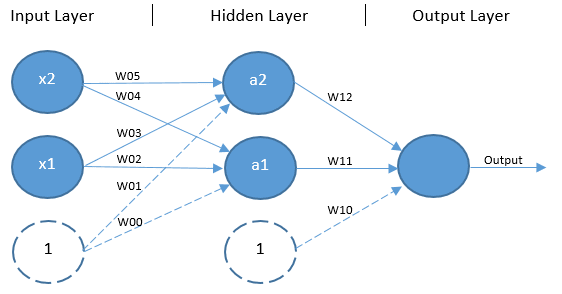

The XOR problem with neural networks can be solved by using Multi-Layer Perceptrons or a neural network architecture with an input layer, hidden layer, and output layer. So during the forward propagation through the neural networks, the weights get updated to the corresponding layers and the XOR logic gets executed. The Neural network architecture to solve the XOR problem will be as shown below.

So with this overall architecture and certain weight parameters between each layer, the XOR logic output can be yielded through forward propagation. The overall neural network architecture uses the Relu activation function to ensure the weights updated in each of the processes to be 1 or 0 accordingly where for the positive set of weights the output at the particular neuron will be 1 and for a negative weight updation at the particular neuron will be 0 respectively. So let us understand one output for the first input state

Example : For X1=0 and X2=0 we should get an input of 0. Let us solve it.

Solution: Considering X1=0 and X2=0

H1=RELU(0.1+0.1+0) = 0

H2=RELU(0.1+0.1+0)=0

So now we have obtained the weights that were propagated from the input layer to the hidden layer. So now let us propagate from the hidden layer to the output layer

Y=RELU(0.1+0.(-2))=0

This is how multi-layer neural networks or also known as Multi-Layer perceptrons (MLP) are used to solve the XOR problem and for all other input sets the architecture provided above can be verified and the right outcome for XOR logic can be yielded.

So among the various logical operations, XOR logical operation is one such problem wherein linear separability of data points is not possible using single neurons or perceptrons. So for solving the XOR problem for neural networks it is necessary to use multiple neurons in the neural network architecture with certain weights and appropriate activation functions to solve the XOR problem with neural networks.

Access all our open Survey & Awards Nomination forms in one place

Can LNNs Replace Transformers?

Stop Confusing Calculators with GPT-4

Google introduces ktn to solve label scarcity in heterogeneous graphs, chatgpt privacy threat is real and we are late.

Going Beyond Large Language Models (LLMs)

Think Like Yoshua

Do AI Models Really Understand The Human Brain?

Is Reinforcement Learning Still Relevant?

The announcement comes right after OpenAI disbanded its super alignment team led by Ilya Sutskever and Jan Leike.

Top Editorial Picks

Google is Giving Away a Custom Electric 1981 DeLorean as Grand Prize in ‘Gemini API Developer Competition’ Siddharth Jindal

Bhashini Launches ‘Be our Sahayogi’ for Multilingual AI Innovation Focused on Voice Mohit Pandey

Jivi’s Medical LLM Beats OpenAI at the Open Medical LLM Leaderboard Pritam Bordoloi

CtrlS Launches 13-MW, AI-ready Data Center in Hyderabad Shyam Nandan Upadhyay

Agnikul Cosmos Launches India’s Second Private Rocket, Agnibaan SOrTeD Shyam Nandan Upadhyay

UiPath Stock Plunges Nearly 30% as CEO Rob Enslin Abruptly Resigns Shyam Nandan Upadhyay

Meta Introduces Vision Language Models, Shows Superior Performance Over Traditional CNNs Sukriti Gupta

Subscribe to The Belamy: Our Weekly Newsletter

Biggest ai stories, delivered to your inbox every week., "> "> flagship events.

Explore the transformative journey of Global Capability Centers at MachineCon GCC Summit 2024, where innovation meets strategic growth.

© Analytics India Magazine Pvt Ltd & AIM Media House LLC 2024

- Terms of use

- Privacy Policy

Subscribe to Our Newsletter

The Belamy, our weekly Newsletter is a rage. Just enter your email below.

There must be a reason why +150K people have chosen to follow us on LinkedIn. 😉

Stay in the know with our linkedin page. follow us and never miss an update on ai.

- Master Data Science

- Generative Adversarial Networks (GANs)

- Linear Algebra

- Shallow Neural Networks

- Deep Learning

- Master TensorFlow 2.0

- Camera Calibration and Stereo Vision

- OpenCV for Hackers

- Master PyTorch

- Advanced Computer Vision

- Machine Learning

- OpenCV projects

- 7Hours sprint to Data Science

- Computer Vision OpenCV Book

- Popular News

#006 PyTorch – Solving the famous XOR problem using Linear classifiers with PyTorch

Highlights : One of the most historical problems in the Neural Network arena is the classic XOR problem where predicting the output of the ‘Exclusive OR’ gate becomes increasingly difficult using traditional linear classifier methods.

In this post, we will study the expressiveness and limitations of Linear Classifiers, and understand how to solve the XOR problem in two different ways. So let’s begin.

Tutorial Overview:

- Logistic Regression Model

The OR Problem

The and problem, the nand problem.

- Historical Research on XOR

Linear Classifier with Non-Linear Features

- The XOR Problem: Formal Solution

Solving the AND problem

Solving the or problem, solving the nand problem, solving the xor problem, creating the third dimension.

This post is inspired by the following YouTube video [ 1 ]

1. Logistic Regression Model

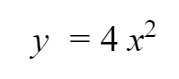

Let’s start by refreshing our memory with the basic mathematical representation of the Logistic Regression Model as seen below.

$$ \hat{y}=\sigma\left(\mathbf{w}^{\top} \mathbf{x}\right) $$

$$ \sigma(z)=\frac{1}{1+e^{-z}} $$

The above expression shows that in the Linear Regression Model, we have a linear or affine transformation between an input vector \(x \) and a weight vector \(w \). The input vector \(x \) is then turned to scalar value and passed into a non-linear sigmoid function. This sigmoid function compresses the whole infinite range into a more comprehensible range between 0 and 1.

Using the output values between this range of 0 and 1, we can determine whether the input \(x\) belongs to Class 1 or Class 0.

Let’s understand this better using an example of 2D Logistic Regression.

Example: 2D Logistic Regression

For better understanding, let us assume that all our input values are in the 2-dimensional domain. Observe the expression below, where we have a vector \(x\) that contains two elements, \(x_1\) and \(x_2\) respectively.

$$ \hat{y}=\sigma\left(\mathbf{w}^{\top} \mathbf{x}+w_{0}\right) $$

$$ \text { Let } \mathbf{x} \in \mathbb{R}^{2} $$

For each of the element of the input vector \(x\), i.e., \(x_1\) and \(x_2\), we will multiply a weight vector \(w\) and a bias \(w_0\). We, then, convert the vector \(x\) into a scalar value represented by \(z\) and passed onto the sigmoid function.

Let us represent this example using a graph.

Notice how the Decision Boundary line for this example is exactly at 0. This is due to the fact that when \(z = 0 \), the sigmoid function changes from being below 0.5 to being above 0.5. In simpler words, the probability of the input belonging to Class 1 is higher on the right-hand side of the Decision Boundary line. And, the probability of the input belonging to Class 0 is higher on the left-hand side of the Decision Boundary line. The equation for this Decision Boundary line is written as follows.

$$ \mathbf{w}^{\top} \mathbf{x}+w_{0}=0 $$

To better visualize the above classification, let’s see the graph below.

A total of three scenarios arise as we can see above.

- If \(z<0\), where \(z= w^Tx+w_0\), we decide for Class 0

- If \(z=0\), where \(z= w^Tx+w_0\), we are at the Decision Boundary and uncertain of the Class

- If \(z>0\), where \(z= w^Tx+w_0\), we decide for Class 1

Now that we have a fair recall of the logistic regression models, let us use some linear classifiers to solve some simpler problems before moving onto the more complex XOR problem.

2. Simple Logical Boolean Operator Problems

Before we head over to understanding the problems associated with predicting the XOR operator, let us see how simple linear classifiers can be used to solve lesser complex Boolean operators.

As seen above, the classification rule from our 2D logistic regression model is written as follows.

$$ \text { Class } 1 \Leftrightarrow \mathbf{w}^{\top} \mathbf{x}>-w_{0} $$

Now, in order to implement our classifier as seen above, we need to determine our two important parameters, the weight \(w\) and the bias \(w_0\) such that our decision boundary line clearly separates Class 1 (red) and Class 0 (green). Intuitively, it is not difficult to imagine a line that will separate the two classes.

So, we have successfully classified the OR operator. Let’s see how we can perform the same procedure for the AND operator.

Again, our main task is to determine the optimal parameters, the weight \(w\) and the bias \(w_0\) to draw a decision boundary line separating the two classes. Using trial and error, and some intuition of course, we come to a conclusion that our parameter values should be \(w = (1,1)\) and \(w_0 = -1.5\) respectively. Let’s see how our decision boundary line appears on the graph.

Great! Let’s look at NAND operation, next.

The values for our parameters that we can arrive at here are \(w = (-1,-1)\) and \(w_0 = 1.5\) such that the decision boundary line looks something like this.

As we saw above, simple linear classifiers can easily solve the basic Boolean operator problems. However, when it comes to the XOR operator, things start to become a little complex. Let’s move on to the classic XOR problem.

3. The XOR Problem: Intuitive Solution

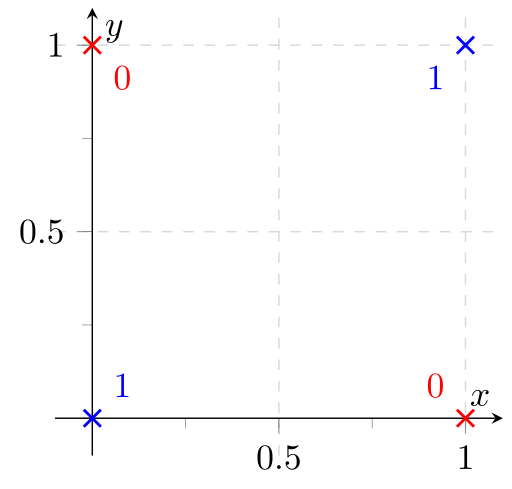

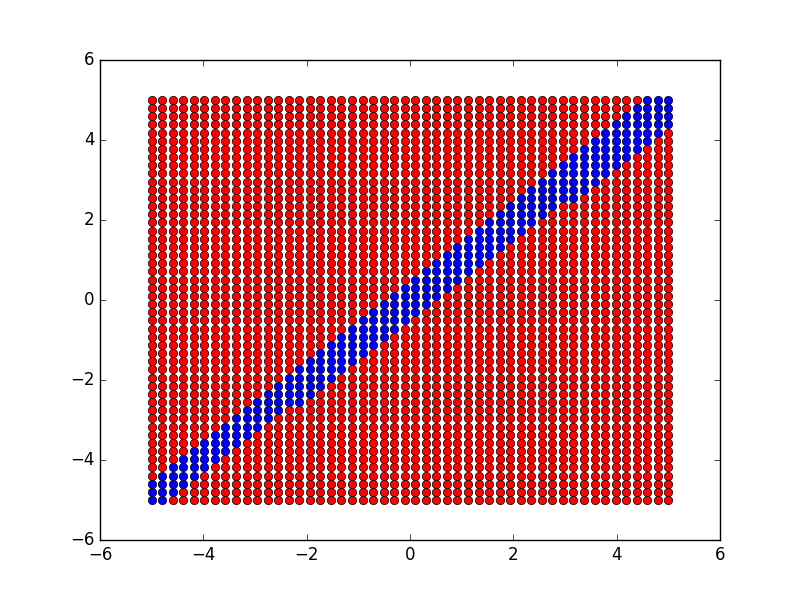

Observe the points on the graph above. Our intuition fails to recognize a way to draw a decision boundary line that will clearly separate the two classes.

Similarly, if we were to use the decision boundary line for the NAND operator here, it will also classify 2 out of 3 points correctly.

It is evident that the XOR problem CAN NOT be solved linearly . This is the reason why this XOR problem has been a topic of interest among researchers for a long time.

However, more than just intuitively, we should also prove this theory more formally. We will take the help of Convex Sets to be able to prove that the XOR operator is not linearly separable. Let’s see how.

Convex Sets

Let’s consider a set \(S\).

The set \(S\) is said to be ‘convex’ if any line segment that joins two points within this set \(S\) lies entirely within the set \(S\). We can represent a convex set mathematically as follows:

$$ \mathbf{x}_{1}, \mathbf{x}_{2} \in \mathcal{S} \Rightarrow \lambda \mathbf{x}_{1}+(1-\lambda) \mathbf{x}_{2} \in \mathcal{S} $$

$$ \text { for } \quad \lambda \in[0,1] $$

The image on the left cannot be considered to be a convex set as some of the points on the line joining two points from \(S\) lie outside of the set \(S\). On the right-hand side, however, we can see a convex set where the line segment joining the two points from \(S\) lies completely inside the set \(S\).

Now, convex sets can be used to better visualize our decision boundary line, which divides the graph into two halves that extend infinitely in the opposite directions. We can intuitively say that these half-spaces are nothing but convex sets such that no two points within these half-spaces lie outside of these half-spaces.

This is clearly a wrong hypothesis and doesn’t hold valid simply because the intersection point of the green and the red line segment cannot lie in both the half-spaces. This means that the intersection point of the two lines cannot be classified in both Class 0 as well as Class 1. Therefore, we have arrived at a contradiction .

This provides formal proof of the fact that the XOR operators cannot be solved linearly.

Historical Research On XOR

In the 1950s and the 1960s, linear classification was widely used and in fact, showed significantly good results on simple image classification problems such as the Perceptron.

Perceptron – an electronic device that was constructed in accordance with biological principles and showed an ability to learn [2]

However, with the 1969 book named ‘Perceptrons’, written by Minsky and Paper, the limitations of using linear classification became more apparent. It became evident that even a problem like the XOR operator, which looks simple on the face of it with just 4 data points, cannot be solved easily since the classifier models available to us at that time were only capable of making linear decisions.

Naturally, due to rising limitations, the use of linear classifiers started to decline in the 1970s and more research time was being devoted to solving non-linear problems.

Moving ahead, let’s study a Linear Classifier with non-linear features and see if it can help us in solving our XOR problem.

In this case, we will consider an extended feature space such as the following:

$$ \mathbf{w}^{\top} \underbrace{\left(\begin{array}{c}x_{1} \\x_{2} \\x_{1} x_{2}\end{array}\right)}_{\psi(\mathbf{x})}>-w_{0} $$

Observe how the green points are below the plane and the red points are above the plane. This plane is nothing but the XOR operator’s decision boundary.

So, by shifting our focus from a 2-dimensional visualization to a 3-dimensional one, we are able to classify the points generated by the XOR operator far more easily.

This exercise brings to light the importance of representing a problem correctly. If we represent the problem at hand in a more suitable way, many difficult scenarios become easy to solve as we saw in the case of the XOR problem. Let’s understand this better.

Representational Learning

Notice the left-hand side image which is based on the Cartesian coordinates. There is no intuitive way to distinguish or separate the green and the blue points from each other such that they can be classified into respective classes.

However, when we transform the dataset to Polar coordinates, i.e., represent them in terms of radius and angle, we can notice how it becomes intuitively possible to draw a linear decision boundary line that classifies each point separately.

Representing data to help you make better decisions while creating your models is what Deep Learning is all about. But, how does one choose the right transformation?

Until the 2000s, choosing the transformation was done manually for problems such as vision, speech, etc. using histogram of gradients to study regions of interest. Having said that, today, we can safely say that rather than doing this manually, it is always better to have your model or computer learn, train, and decide which transformation to use automatically. This is what Representational Learning is all about, wherein, instead of providing the exact function to our model, we provide a family of functions for the model to choose the appropriate function itself.

Now that we have seen how we can solve the XOR problem using an observational, representational, and intuitive approach, let’s look at the formal solution for the XOR problem.

4. The XOR Problem: Formal Solution

One solution for the XOR problem is by extending the feature space and using a more non-linear feature approach. This can be termed as more of an intuitive solution. However, we must understand how we can solve the XOR problem using the traditional linear approach as well.

By combining the two decision boundaries of OR operator and NAND operator respectively using an AND operation, we can obtain the exact XOR classifier.

We can write this mathematically as follows:

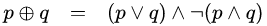

$$ \begin{array}{c}\text { XOR }\left(x_{1}, x_{2}\right)= \\\text { AND(OR }\left(x_{1}, x_{2}\right), \text { NAND } \left.\left(x_{1}, x_{2}\right)\right)\end{array} $$

Now, the above expression can be rewritten as a program of multiple logistic regressions. Have a look.

$$ h_{1}=\sigma\left(\mathbf{w}_{O R}^{\top} \mathbf{x}+w_{O R}\right) $$

$$ h_{2}=\sigma\left(\mathbf{w}_{N A N D}^{\prime} \mathbf{x}+w_{N A N D}\right) $$

$$ \hat{y}=\sigma\left(\mathbf{w}_{A N D}^{\top} \mathbf{h}+w_{A N D}\right) $$

This multi-later ‘perceptron’ has two hidden layers represented by \(h_1\) and \(h_2\), where \(h(x)\) is a non-linear feature of \(x\). We can represent this network visually as follows.

We can, further, simplify the above 1D mappings as a single 2D mapping as follows.

$$ \mathbf{h}=\sigma\underbrace{\left(\begin{array}{c}\mathbf{w}_{O R}^{\top}\\\left.\mathbf{w}_{NAND}^{\top}\right)\end{array}\right)}_{\mathbf{W}}\mathbf{x}+\underbrace{\left(\begin{array}{c}w_{OR}\\w_{NAND}\end{array}\right)}_{\mathbf{w}} $$

The biggest advantage of using the above solution for the XOR problem is that now, we don’t have non-differentiable step-functions anymore. Instead, we have differential equations sigmoid activation functions which means we can start with a random guess for each of the parameters in our model and later, optimize according to our dataset. This parametric learning is done using gradient descent where the gradients are calculated using the back-propagation algorithm.

Let’s now see how we can solve the XOR problem in Python using PyTorch.

5. Solving The XOR Problem in Python using PyTorch

Let’s start by importing the necessary libraries.

First, we’ll create the data for the logical operator AND. First, we will create our decision table were x1 and x2 are two NumPy arrays consisting of four numbers. These arrays will represent the binary input for the AND operator. Then, we will create an output array y , and we will set the data type to be equal to np.float32 .

Now, we can scatter our data points. Here, x1 and x2 will be the coordinates of the points and color will depend on y . Let’s have a look at the following graph.

We can see that just the yellow point will have a value of 1, while the remaining three points will have a value of 0.

The next step would be to create a data set because we cannot just train our data on these four points. So, we will create a function create_dataset() that will accept x1 , x2 and y as our input parameters. Then, we will use the function np. repeat() to repeat every number in x1 , x2 , and y 50 times. In that way, we will have 200 numbers in each array.

After this, we also need to add some noise to x1 and x2 arrays. We can do that by using the np.random.rand() function and pass width of an array multiplied with some small number (in our case it is 0.05).

The next step is to shuffle the data. We will create an index_shuffle variable and apply np.arrange() function on x1.shape[0] . This function will return 200 numbers from zero to 200. Next, we will use the function np.random.shuffle() on the variable index_shuffle .

After we set the data type to be equal to np.float32 , we can apply this index shuffle variable on x1 , x2 and y .

Now, remember, because we are using PyTorch we need to convert our data to tensors. Once we do that we will combine x1 and x2 . We will create a variable X and apply the function torch.hstack() to stack horizontally x1_torch and x2_torch tensors.

The next step is to create a training and testing data set for X and y . So, we will create X_train , X_test , y_train and y_test . Then for the X_train and y_tarin, we will take the first 150 numbers, and then for the X_test and y_test, we will take the last 150 numbers. Finally, we will return X_train, X_test, y_train, and y_test.

Now we can call the function create_dataset() and plot our data.

The next step is to create the LogisticRegression() class. To be able to use it as a PyTorch model, we will pass torch. nn.Module as a parameter. Then, we will define the init() function by passing the parameter self .

Now that we have created the class for the Logistic regression, we need to create the model for AND logical operator. So, we will create the variable model_AND which will be equal to LogisticRegression() class. As parameters we will pass number 2 and 1 because our x now has two features, and we want one output for the y . Then, we will create a criterion where we will calculate the loss using the function torch.nn.BCELoss() ( Binary Cross Entropy Loss). Also we need to define an optimizer by using the Stochastic Gradient descent. As parameters we will pass model_AND.parameters() , and we will set the learning rate to be equal to 0.01 .

Now that we have defined everything we need, we’ll create a training function. As an input, we will pass model , criterion , optimizer , X , y and a number of iterations. Then, we will create a list where we will store the loss for each epoch. We will create a for loop that will iterate for each epoch in the range of iteration.

The next step is to apply the forward propagation. We will define prediction y_hat and we will calculate the loss which will be equal to criterion of the y_hat and the original y . Then we will store loss inside this all_loss list that we have created.

Now, we can apply the backward pass. To calculate the gradients and optimize the weight and the bias we will use the optimizer.step() function. Remember that we need to make sure that calculated gradients are equal to 0 after each epoch. To do that, we’ll just call optimizer.zero_grad() function. Finally, we will just return a list all_loss .

Now, we can train our data. We will call our train() function and set 50.000 for the number of iterations. After our data are trained, we can scatter the results.

As you can see, the classifier classified one set of points to belong to class 0 and another set of points to belong to class 1 . Now, we can also plot the loss that we already saved in the variable all_loss .

Now we will conduct a similar experiment. The only difference is that we will train our data for the logical operator OR. Here the x1 and the x2 will be the same, we will just change the output y .

This is how the graph looks like for the OR model. We can see that now only one point with coordinates (0,0) belongs to class 0 , while the other points belong to class 1 .

After those steps, we can create the data for NAND. Again, we just change the y data, and all the other steps will be the same as for the last two models.

Now we can finally create the XOR data set. Everything is the same as before. We will just change the y array to be equal to (0,1,1,0).

Here, we can see our data set. Now we can test our result on one number. For example, we can take the second number of the data set. Next, we will create two hidden layers h1 and h2 . The hidden layer h1 is obtained after applying model OR on x_test , and h2 is obtained after applying model NAND on x_test . Then, we will obtain our prediction h3 by applying model AND on h1 and h2 .

After printing our result we can see that we get a value that is close to zero and the original value is also zero. On the other hand, when we test the fifth number in the dataset we get the value that is close to 1 and the original value is also 1. So, obviously, this is correct.

Now, we can test our results on the whole dataset. To do that we will just remove indexes. Also in the output h3 we will just change torch.tensor to hstack in order to stack our data horizontally.

Just by looking at this graph, we can say that the data was almost perfectly classified.

Another very useful approach to solve the XOR problem would be engineering a third dimension. The first and second features will remain the same, we will just engineer the third feature.

For this step, we will take a part of the data_set function that we created earlier. However, we have to make some modifications. The only difference is that we have engineered the third feature x3_torch which is equal to element-wise product of the first feature x1_torch and the second feature x2_torch .

Now comes the part where we create our logistic model. It will be the same logistic regression as before, with addition of a third feature. The rest of the code will be identical to the previous one.

We can see that our model made pretty much good predictions. They are not as accurate as before, but if we change the iteration number the result will get even better.

That’s it! By understanding the limitations of traditional logistic regression techniques and deriving intuitive as well as formal solutions, we have made the XOR problem quite easy to understand and solve. In this process, we have also learned how to create a Multi-Layer Perceptron and we will continue to learn more about those in our upcoming post. Before we end this post, let’s do a quick recap of what we learned today.

Solving The XOR Problem

- Logistic Regression works by classifying operator points into Class 0 and Class 1 based on the decision boundary line

- Simple logistic regression methods easily solve OR, AND, and NAND operator problems

- Due to the unintuitive outcome of the decision boundary line in the XOR operator, simple logistic regression becomes difficult for XOR problems

- An intuitive solution for XOR involves working with a 3D feature plane rather than a 2D setup

- Formal solution for XOR involves a combination of decision boundary lines of OR and NAND operators

- Multi-layer perceptron can be built using two hidden layers and differentiable sigmoid activation functions

So how did you like this post on the classic XOR problem? By understanding the past and current research, you have come at par with researchers who are finding new ways to solve problems such as the XOR problems more efficiently. If you would like to practice solving other operator problems or even customized operator problems, do share your results with us. We’ll see you soon. Till then, keep having multi-layer fun! 🙂

[1] Deep Learning – Lecture 3.2

[2] Frank Rosenblatt with a Mark I Perceptron computer in 1960

Pytorch

Recent Posts

- #006 Advanced Computer Vision – Object tracking with Mean-Shift and CAMShift algorithms

- #007 Advanced Computer Vision – Video Stabilization

- #027 R-CNN, Fast R-CNN, and Faster R-CNN explained with a demonstration in PyTorch

- #005 Advanced Computer Vision – Basketball Player Tracking with Open CV

- #004 Advanced Computer Vision – YOLO Object Detection

The hundred-page Computer Vision book

What are morphological transformations?

Learn how to align faces in opencv in python.

© 2024 Master Data Science. Built using WordPress and the Mesmerize Theme

Mateus de Assis Silva

Mechatronics Engineering student at Universidade Federal do Rio Grande do Norte. AI Enthusiast. Computer Vision Researcher.

- Custom Social Profile Link

An Introduction do Neural Networks: Solving the XOR problem

16 minute read

When I started AI, I remember one of the first examples I watched working was MNIST(or CIFAR10, I don’t remember very well). Looking for online tutorials, this example appears over and over, so I suppose it is a common practice to start DL courses with such idea. Although a very exciting starting point (come on, we are literally seeing a classifer recognizing images!), it kind of masks the math behind it and makes it harder to understand what is going under the hood (if you are a beginner). That is why I would like to “start” with a different example.

This example may actually look too simple to us all because we already know how to tackle it, but in reality it stunned very good mathematitians and AI theorists some time ago.

The 2-Variable XOR Problem

What is the XOR logical gate? Imagine two inputs that can assume only binary values each (0 or 1). The output goes to 1 only when both inputs are different. That is:

Now we must propose an artificial neural network that can overfit the dataset. That is, we should design a network that takes x1 and x2 as inputs and successfully outputs y .

Following the development proposed by Ian Goodfellow et al , let’s use the mean squared error function (just like a regression problem) for the sake of simplicity.

We already know what we should do. Now we should choose the model f(\(x\);\(\theta\)).

Theoretical Modelling (Let’s think for a while…)

Only one neuron (a linear model).

Our first attempt will consider a linear model. Such model implements the following equation:

\(ŷ = f(\vec{x};\vec{w},b) = \vec{x}^{T}\vec{w}+b\).

Seems nice, isn’t it? Will it work? Well… unfortunatelly, no.

How can I now it beforehand? Let’s take a closer look to the expression. It is implementing a linear relation. Imagine f is a surface over the \(\vec{x}\) plane, and its height equals the output. The surface must have height equalling 1 over the points \([0, 1]\) and \([1, 0]\) and 0 height (it would be touching the plane) at points \([0, 0]\) and \([1, 1]\). Could a hyperplane behave this way? No, it cannot.

Another way of think about it is to imagine the network trying to separate the points. The points labeled with 1 must remain together in one side of line. The other ones (labelled with 0) stay on the other side of the line.

Take a look at the following image. I plotted each point with a different color. Notice the artificial neural net has to output ‘1’ to the green and black point, and ‘0’ to the remaining ones. In other words, it need to separate the green and black points from the purple and red points. It cannot do such a task. The net will ultimately fail.

More than only one neuron (network)

Let’s add a linear hidden layer with only two neurons.

\(ŷ = f^{(2)}(\vec{h};\vec{w},b)\) , such that \(\vec{h} = f^{(1)}(\vec{x};W,\vec{c})\).

Sounds like we are making real improvements here, but a linear function of a linear function makes the whole thing still linear.

We are going nowhere!

Notice what we are doing here: we are stacking linear layers. What does a linear layer do? How can we visualize its effect mathematically?

Before I explain the layer, let’s simplify a little bit by ignoring the bias term in each neuron (\(\vec{c}\) and \(b\)), alright?

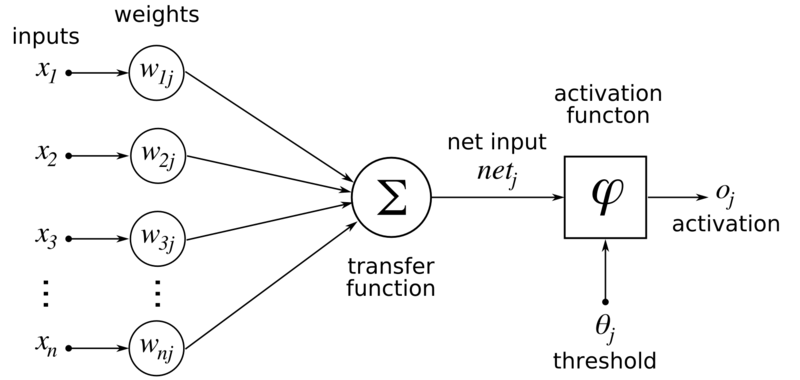

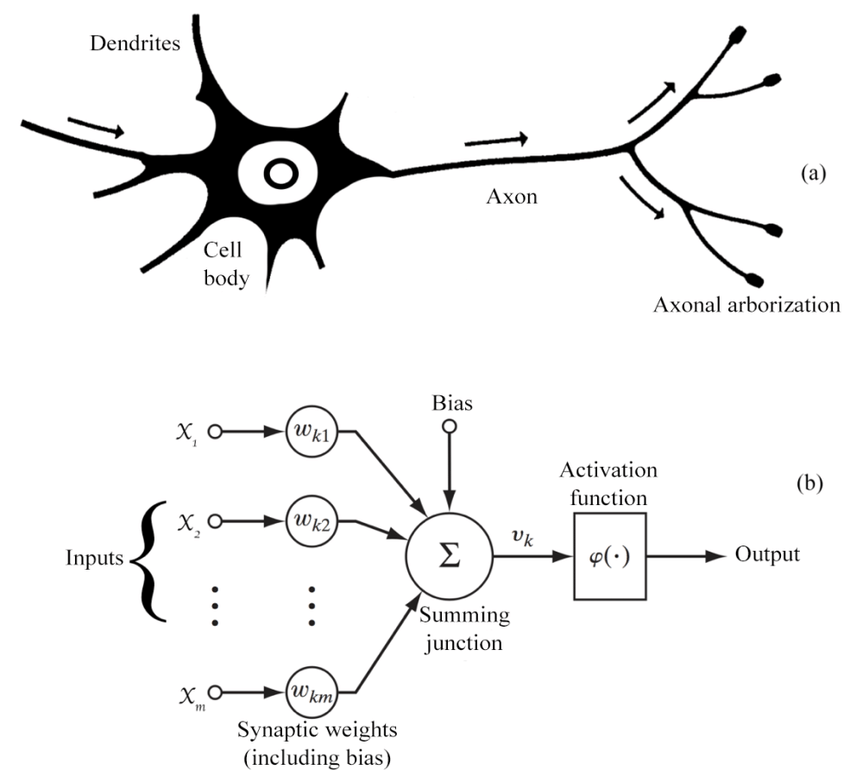

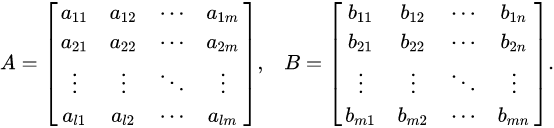

Ok, now consider the following image (which can be found here ):

It is not our own net. Remember: We stacked layers with 2 neurons only, and here we have a hidden layer with 3 neurons. Even though it is not our neural network, it’ll be useful to mathematically visualize what’s going on.

Let’s focus only on the input and hidden layers. We can be sure this network was designed to a 2D input (like our example data), because there is two neurons in the input layer. Let’s call our inputs neurons using the following subscripts: \(i_{1}\) and \(i_{2}\). That means the first and the second input neurons. Watch out! When I say “the first” I mean “the higher”, “the second” then means “the lower”, ok?

The architecture consideration of the hidden layer chose three neurons. That is ok. There is not too much to talk about this choose. I will call the output of the three hidden neurons: \(h_1\),\(h_2\) and \(h_3\). And again, \(h_1\) is the output of the highest hidden layer neuron, \(h_2\) is the output of the hidden layer neuron in the middle and \(h_3\) is the output of the last hidden layer neuron.

I am repeating myself several times about the neurons’ positions because I want to be clear about which neuron I’m talking about.

Now let’s see the output of the first hidden layer neuron, that is, let’s see \(h_1\). We now \(h_1\) is a weighted sum of the inputs, which are written as \(\vec{x}\) in the original formulation, but we’ll use \(i\) so we can relate to input . In one equation:

\(h_1 = w_{1,1} * i_1 + w_{1,2} * i_2\).

don’t you forget we’re ignoring the bias!

In this representation, the first subscript of the weight means “what hidden layer neuron output I’m related to?”, then “1” means “the output of the first neuron”. The second subscript of the weight means “what input will multiply this weight?”. Then “1” means “this weight is going to multiply the first input” and “2” means “this weight is going to multiply the second input”.

The same reasoning can be applied to the following hidden layer neurons, what leads to:

\(h_3 = w_{3,1} * i_1 + w_{3,2} * i_2\).

Now we should pay attention to the fact we have 3 linear equations. If you have ever enrolled in a Linear Algebra class, you know we can arrange these equations in a grid-like structure. If you guessed “a matrix equation”, you’re right!

The matrix structure looks like this:

To simplify even further, let’s shorten our equation by representing the hidden layer output vector by \(\vec{h}\), the input vector by \(\vec{i}\) and the weight matrix by \(W\):

\(\vec{h} = W \vec{i}\).

If we connect the output neuron to the hidden layer, we have:

\(\vec{o} = M \vec{h}\), where \(\vec{o}\) is a 2D vector (each position contains the output of the output neurons) and \(M\) is the matrix that maps the hidden layer representation to the output values (the \(\vec{w}\) in the original formulation). Here, \(ŷ = \vec{o}\). Expanding it we have:

\(\vec{o} = M W \vec{i}\),

where \(MW\) gives another matrix, because this is just a matrix multiplication. Let’s call it A . Then:

Now suppose a different neural network, like the following (you can find it here ):

This network has only one output neuron and two hidden layers (the first one with 4 neurons and the second one with three neurons). The input is a 6-D vector. Again we are ignoring bias terms. Let’s see the shortened matrix equation of this net:

\(o = M H_1 H_2 \vec{i}\).

Here , the output o is a scalar (we have only one output neuron), and two hidden layers (\(H_2\) is the matrix of weights that maps the input to the hidden layer with 4 neurons and \(H_1\) maps the 4 neurons output to the 3 hidden layer neurons outputs). M maps the internal representation to the output scalar.

Notice \(M H_1 H_2\) is a matrix multiplication that results in a matrix again. Let’s call it B . Then:

\(o = B \vec{i}\).

Can you see where we’re going? It doesn’t matter how many linear layers we stack, they’ll always be matrix in the end. To our Machine Learning perspective, it means it doesn’t mean how many layers we stack, we’ll never learn a non linear behaviour in the data, because the model can only learn linear relations (the model itself is a linear function anyway).

I hope I convinced you that stacking linear layers will get us nowhere, but trust me: all is not lost. We just need another mechanism to learn non-linear relationships in the data. This “mechanism” I’ll introduce is called Activation Functions .

If you want to read another explanation on why a stack of linear layers is still linear, please access this Google’s Machine Learning Crash Course page .

Activation Functions!

“Activation Function” is a function that generates an output to the neuron, based on its inputs. The name comes from the neuroscience heirloom. Although there are several activation functions, I’ll focus on only one to explain what they do. Let’s meet the ReLU (Rectified Linear Unit) activation function.

In the figure above we have, on the left (red function), the ReLU. As can be seen in the image, it is defined by the max operation between the input and ‘0’. It means the ReLU looks to the input and thinks: is it greater than ‘0’? If yes, the output is the input itself. If it is not, the output is zero. That said, we see every input point greater than ‘0’ has an height equaling its distance to the origin of the graph. That’s why the positive graph’s half is a perfect diagonal straight line. When we look to the other half, all x’s are negative, so all the outputs are zero. That’s why we have a perfect horizontal line.

Now imagine that we have a lot of points distributed along a line: some of them lie on the negative side of the line, and some of them lie on the positive side. Suppose I apply the ReLU function on them. What happens to the distribution? The points on the positive side remains in the same place, they don’t move because their position is greater than 0. On the other hand, the points from the negative side will crowd on the origin.

Another nice property of the ReLU is its slope (or derivative, or even tangent ). If you have a little background on Machine/Deep Learning, you know this concept is fundamental for the neural nets algorithms. On the graph’s left side we have an horizontal line: it has no slope, so the derivative is 0. On the other side we’ve got a perfect diagonal line: the slope is 1 (tangent of 45º).

Here we have sort of a problem… what’s the slope at x=0 ? Is it 0 (like on the left side) or 1 (right side slope)? That’s called a non-differentiable point . Due to this limitation, people developed the softplus function , which is defined as \(\ln(1+e^{x})\). The softplus function can be seen below :

Empirically, it is better to use the ReLU instead of the softplus. Furthermore, the dead ReLU is a more important problem than the non-differentiability at the origin. Then, at the end, the pros (simple evaluation and simple slope) outweight the cons (dead neuron and non-differentiability at the origin).

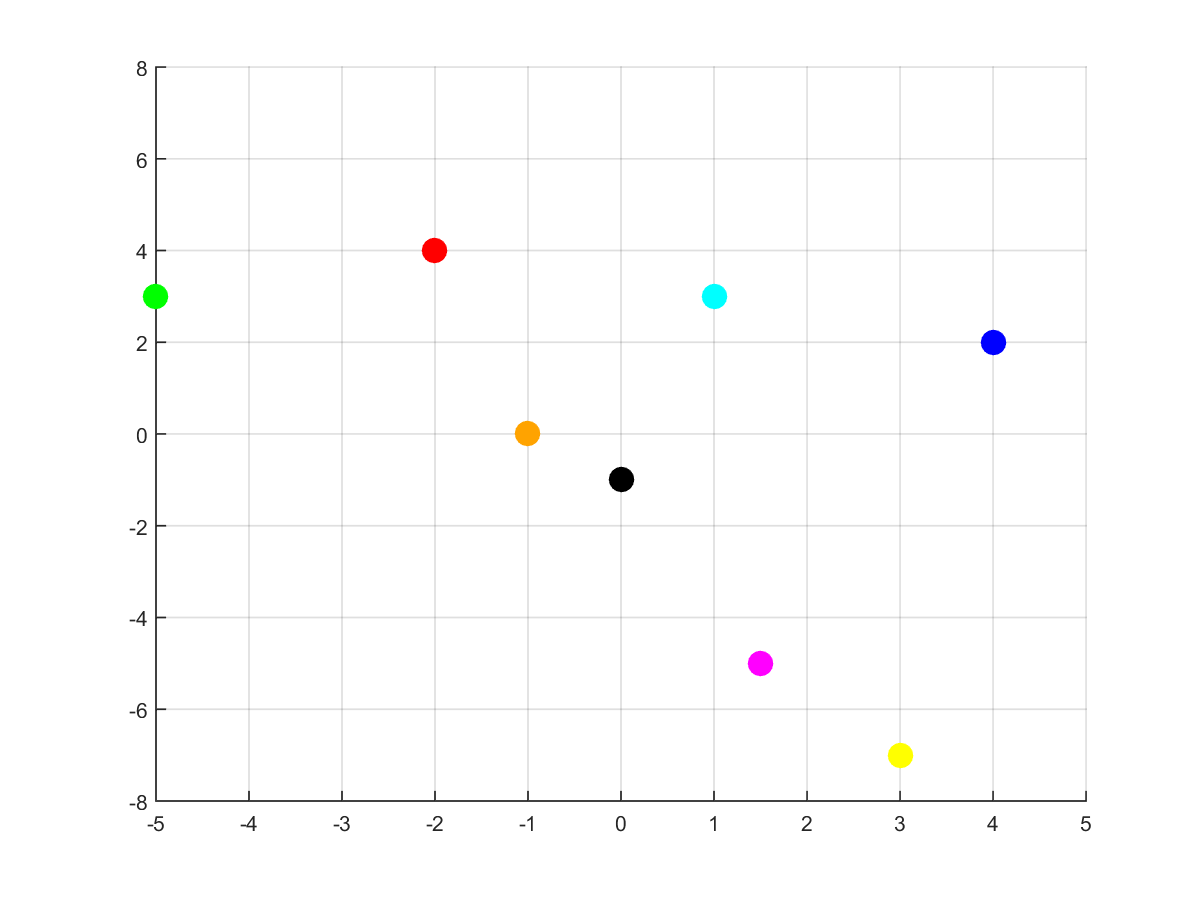

Ok… so far we’ve discussed the 1D effect of ReLU. What happens when we apply ReLU to a set of 2D points?

First, consider this set of 8 colorful points. Pay attention to their x, y positions: the blue ones have positive coordinates both; the green, red and orange ones have negative x coordinates; the remaining ones have positive x coordinates, but negative y ones. Suppose we applied ReLU to the points (to each coordinate). What happens?

As we can see, the blue points didn’t move. Why? Because their coordinates are positive, so the ReLU does not change their values. The pink and yellow points were moved upwards. It happened because their negative coordinates were the y ones. The red and green points were moved rightwards. It happened due to the fact their x coordinates were negative. What about the orange point? Did it disappear? Well… no. Note every moved coordinate became zero (ReLU effect, right?) and the orange’s non negative coordinate was zero (just like the black’s one). The black and orange points ended up in the same place (the origin), and the image just shows the black dot.

The most important thing to remember from this example is the points didn’t move the same way (some of them did not move at all). That effect is what we call “non linear” and that’s very important to neural networks. Some paragraphs above I explained why applying linear functions several times would get us nowhere. Visually what’s happening is the matrix multiplications are moving everybody sorta the same way (you can find more about it here ).

Now we have a powerful tool to help our network with the XOR problem (and with virtually every problem): nonlinearities help us to bend and distort space! The neural network that uses it can move examples more freely so it can learn better relationships in the data!

You can read more about “space-bender” neural networks in Colah’s amazing blog post

More than only one neuron , the return (let’s use a non-linearity)

Ok, we know we cannot stack linear functions. It will lead us anywhere. The solution? ReLU activation function. But maybe something is still confusing: where it goes ?

I believe the following image will help. This is the artificial neuron “classic” model ( classic here means we always see it when we start doing Machine/Deep Learning ):

Recall our previous formulation: \(ŷ = f^{(2)}(\vec{h};\vec{w},b)\) , such that \(\vec{h} = f^{(1)}(\vec{x};W,\vec{c})\).

Here, a “neuron” can be seen as the process which produces a particular output \(h_i\) or \(ŷ_i\). Let’s focus on the \(h_i\). Previously it was explained that, in our context, it equals :

\(h_i = w_{i,1} * i_1 + w_{i,2} * i_2\).

Here, \(w_{i,j}\) are the weights that produces the i-th hidden-layer output. The i elements are the inputs (the x in the image). The transfer function comprises the two products and the sum. Actually, it can be written as \(h_i = \vec{w_i} \vec{i}\) either, which means the inner product between the i-th weights and the input (here is clearer the transfer function is the inner product itself). The input \(net_j\) is \(h_i\), and we’ll finally deal with the activation function!

In the original formulation, there’s no non-linear activation function. Notice I wrote: \(\vec{o} = ŷ = M * \vec{h}\) .

The transformation is linear, right? What we are going to do now is to add the ReLU, such that: \(\vec{o} = ŷ = M * ReLU( \vec{h} )\). Here, the threshold \(\theta_j\) does not exist.

So far it was said the activation function occurs after each inner product. If we think the layer as outputing a vector, the activation funcion is applied point-wise.

Visualizing Results (Function Composition)

The model we chose to use has a hidden layer followed by ReLU nonlinearity. It implements the global function (considering the bias):

\(f(\vec{x};W,\vec{c},\vec{w},b) = \vec{w}^{T} max\{0,W^{T}\vec{x}+\vec{c}\}+b\) .

A specified solution to the XOR problem has the following parameters:

W= \(\begin{bmatrix} 1 & 1 \\ 1 & 1 \\ \end{bmatrix}\),

\(\vec{c} = \begin{bmatrix} 0 \\ -1 \\ \end{bmatrix}\),

\(\vec{w} = \begin{bmatrix} 1 \\ -2 \\ \end{bmatrix}\),

Let’s visualize what’s going on step-by-step.

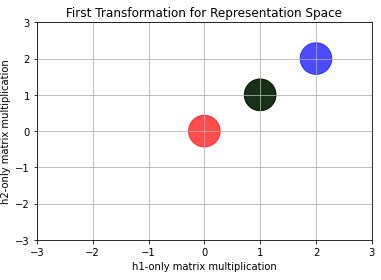

First Transformation for Representation Space

What means Representation Space in the Deep Learning context? We can think the hidden layer as providing features describing \(\vec{x}\), or as providing a new representation for \(\vec{x}\).

When we apply the transformation \(W^{T}\vec{x}\) to all four inputs, we have the following result

Notice this representation space (or, at least, this step towards it) makes some points’ positions look different. While the red-ish one remained at the same place, the blue ended up at \([2,2]\). But the most important thing to notice is that the green and the black points (those labelled with ‘1’) colapsed into only one (whose position is \([1,1]\)).

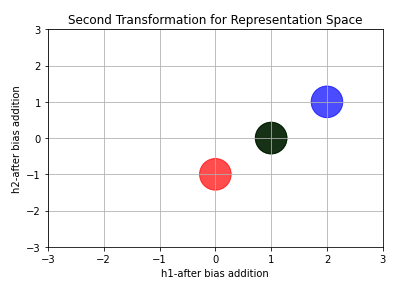

Second Transformation for Representation Space

Now let’s add vector \(\vec{c}\). What do we obtain?

Again, the position of points changed! All points moved downward 1 unit (due to the -1 in \(\vec{c}\)).

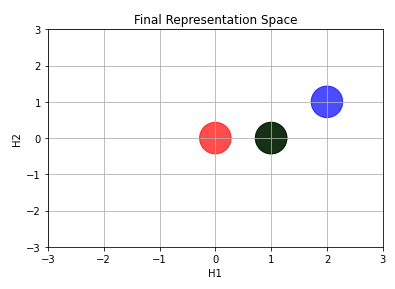

Final Representation Space

We now apply the nonlinearity ReLU . It will gives us “the real” Representation Space. I mean… the Representationa Space itself is this one. All the previous images just shows the modifications occuring due to each mathematical operation ( Matrix Multiplication followed by Vector Sum ).

Now we can draw a line to separate the points!

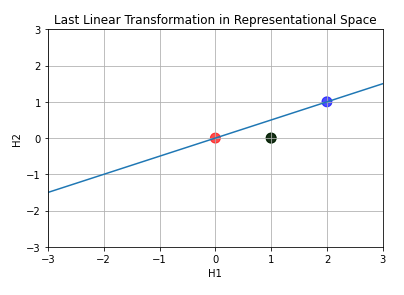

Last Linear Transformation in Representational Space

The last layer ‘draws’ the line over representation-space points.

This line means “here label equals 0”. As we move downwards the line, the classification (a real number) increases. When we stops at the collapsed points, we have classification equalling 1.

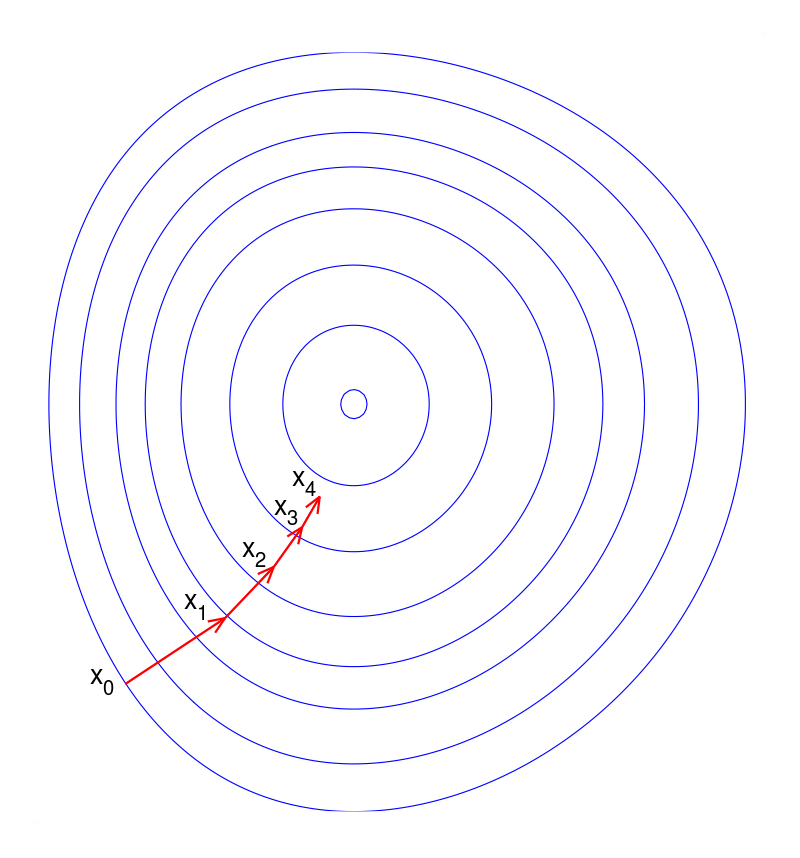

Visualizing Results (Iterative Training)

We saw how to get the correct classification using function composition. Although useful for visualizing , Deep Learning practice is all about backprop and gradient descent, right?

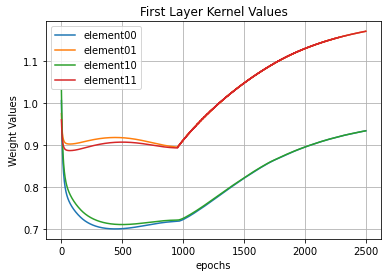

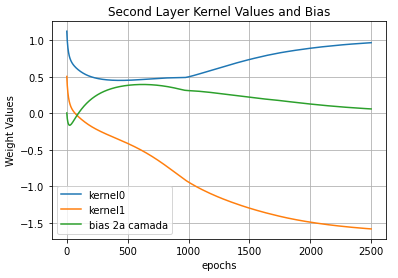

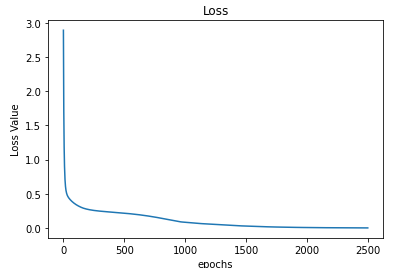

Let’s see what happens when we use such learning algorithms. The images below show the evolution of the parameters values over training epochs.

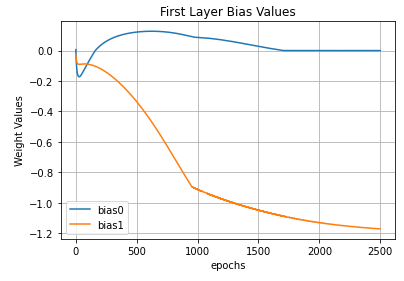

Parameters Evolution

In the image above we see the evolution of the elements of \(W\). Notice also how the first layer kernel values changes, but at the end they go back to approximately one. I believe they do so because the gradient descent is going around a hill (a n-dimensional hill, actually), over the loss function.

The first layer bias values (aka \(\vec{c}\)) behave like the first layer kernel kinda .

The main lesson to be understood from the three images above is: the parameters show a trend. I mean… they sorta goes to a stable value. Paying close attention we see they’re going to stabilize near the hand-defined values I showed during the Visualizing topic .

“The solution we described to the XOR problem is at a global minimum of the loss function, so gradient descent could converge to this point.” - Goodfellow et al .

Below we see the evolution of the loss function. It abruptely falls towards a small value and over epochs it slowly decreases.

Representation Space Evolution

Finally we can see how the transformed space evolves over epochs: how the line described by \(\vec{w}\) turns around trying to separate the samples and how these move.

Brief Words for the Reader

Thank you for reading it all along. It is one of the longest posts I wrote, but I wanted to comment as many things as possible so you could have a better view of what’s going on under the hood of Neural Nets. If any question come up please let me know: you can find how to get in touch with me using any means available here on the left (or on the top of the page, if you’re on a smartphone). The code I created to demo all these stuff can be found here . If you want to know more about my other projects, please check them out clicking on any of the links at the end of this page.

You may also enjoy

Aplication of multiple filters over images.

1 minute read

Digital Image Processing, OpenCV, C++

Tiltshift on Video

4 minute read

Motion Detector

2 minute read

8 minute read

DEV Community

Posted on Apr 3, 2020

Demystifying the XOR problem

In my previous post on Extreme learning machines I told that the famous pioneers in AI Marvin Minsky and Seymour Papert claimed in their book Perceptron [1969] , that the simple XOR cannot be resolved by two-layer of feedforward neural networks, which "drove research away from neural networks in the 1970s, and contributed to the so-called AI winter".[Wikipedia 2013]

Let's explore what is this XOR problem...

The XOR Problem

The XOR, or “exclusive or”, problem is a classic problem in ANN research. It is the problem of using a neural network to predict the outputs of XOR logic gates given two binary inputs. An XOR function should return a true value if the two inputs are not equal and a false value if they are equal. All possible inputs and predicted outputs are shown in figure 1.

XOR is a classification problem and one for which the expected outputs are known in advance. It is therefore appropriate to use a supervised learning approach.

On the surface, XOR appears to be a very simple problem, however, Minksy and Papert (1969) showed that this was a big problem for neural network architectures of the 1960s, known as perceptrons.

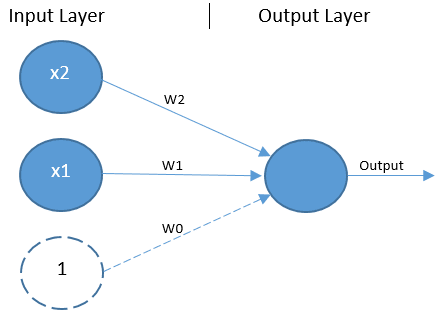

Perceptrons

Like all ANNs, the perceptron is composed of a network of *units *, which are analagous to biological neurons. A unit can receive an input from other units. On doing so, it takes the sum of all values received and decides whether it is going to forward a signal on to other units to which it is connected. This is called activation. The activation function uses some means or other to reduce the sum of input values to a 1 or a 0 (or a value very close to a 1 or 0) in order to represent activation or lack thereof. Another form of unit, known as a bias unit, always activates, typically sending a hard coded 1 to all units to which it is connected.

Perceptrons include a single layer of input units — including one bias unit — and a single output unit (see figure 2). Here a bias unit is depicted by a dashed circle, while other units are shown as blue circles. There are two non-bias input units representing the two binary input values for XOR. Any number of input units can be included.

The perceptron is a type of feed-forward network, which means the process of generating an output — known as forward propagation — flows in one direction from the input layer to the output layer. There are no connections between units in the input layer. Instead, all units in the input layer are connected directly to the output unit.

A simplified explanation of the forward propagation process is that the input values X1 and X2, along with the bias value of 1, are multiplied by their respective weights W0..W2, and parsed to the output unit. The output unit takes the sum of those values and employs an activation function — typically the Heavside step function — to convert the resulting value to a 0 or 1, thus classifying the input values as 0 or 1.

It is the setting of the weight variables that gives the network’s author control over the process of converting input values to an output value. It is the weights that determine where the classification line, the line that separates data points into classification groups, is drawn. If all data points on one side of a classification line are assigned the class of 0, all others are classified as 1.

A limitation of this architecture is that it is only capable of separating data points with a single line. This is unfortunate because the XOR inputs are not linearly separable . This is particularly visible if you plot the XOR input values to a graph. As shown in figure 3, there is no way to separate the 1 and 0 predictions with a single classification line.

Multilayer Perceptrons

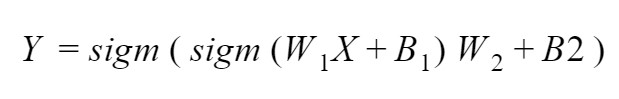

The solution to this problem is to expand beyond the single-layer architecture by adding an additional layer of units without any direct access to the outside world, known as a hidden layer. This kind of architecture — shown in Figure 4 — is another feed-forward network known as a multilayer perceptron (MLP).

It is worth noting that an MLP can have any number of units in its input, hidden and output layers. There can also be any number of hidden layers. The architecture used here is designed specifically for the XOR problem.

Similar to the classic perceptron, forward propagation begins with the input values and bias unit from the input layer being multiplied by their respective weights, however, in this case there is a weight for each combination of input (including the input layer’s bias unit) and hidden unit (excluding the hidden layer’s bias unit). The products of the input layer values and their respective weights are parsed as input to the non-bias units in the hidden layer. Each non-bias hidden unit invokes an activation function — usually the classic sigmoid function in the case of the XOR problem — to squash the sum of their input values down to a value that falls between 0 and 1 (usually a value very close to either 0 or 1). The outputs of each hidden layer unit, including the bias unit, are then multiplied by another set of respective weights and parsed to an output unit. The output unit also parses the sum of its input values through an activation function — again, the sigmoid function is appropriate here — to return an output value falling between 0 and 1. This is the predicted output.

This architecture, while more complex than that of the classic perceptron network, is capable of achieving non-linear separation. Thus, with the right set of weight values, it can provide the necessary separation to accurately classify the XOR inputs.

Backpropagation

The elephant in the room, of course, is how one might come up with a set of weight values that ensure the network produces the expected output. In practice, trying to find an acceptable set of weights for an MLP network manually would be an incredibly laborious task. In fact, it is NP-complete (Blum and Rivest, 1992). However, it is fortunately possible to learn a good set of weight values automatically through a process known as backpropagation. This was first demonstrated to work well for the XOR problem by Rumelhart et al. (1985).

The backpropagation algorithm begins by comparing the actual value output by the forward propagation process to the expected value and then moves backward through the network, slightly adjusting each of the weights in a direction that reduces the size of the error by a small degree. Both forward and back propagation are re-run thousands of times on each input combination until the network can accurately predict the expected output of the possible inputs using forward propagation.

For the XOR problem, 100% of possible data examples are available to use in the training process. We can therefore expect the trained network to be 100% accurate in its predictions and there is no need to be concerned with issues such as bias and variance in the resulting model.

In this post, we explored the classic ANN XOR problem. The problem itself was described in detail, along with the fact that the inputs for XOR are not linearly separable into their correct classification categories. A non-linear solution — involving an MLP architecture — was explored at a high level, along with the forward propagation algorithm used to generate an output value from the network and the backpropagation algorithm, which is used to train the network.

The next post in this series will feature a implementation of the MLP architecture described here, including all of the components necessary to train the network to act as an XOR logic gate.

Blum, A. Rivest, R. L. (1992). Training a 3-node neural network is NP-complete. Neural Networks, 5(1), 117–127.

Minsky, M. Papert, S. (1969). Perceptron: an introduction to computational geometry. The MIT Press, Cambridge, expanded edition, 19(88), 2.

Rumelhart, D. Hinton, G. Williams, R. (1985). Learning internal representations by error propagation (No. ICS-8506). California University San Diego LA Jolla Inst. for Cognitive Science.

Top comments (3)

Templates let you quickly answer FAQs or store snippets for re-use.

- Joined Jun 17, 2021

Hey Jayesh. Nice post thank you! I would love to read the follow up with the implementation because I have problems of teaching MLP's simple relationships. I could not find that one here yet, so if you could provide me a link I would be more than happy.

- Joined Apr 13, 2024

- Joined Jun 11, 2022

Are you sure you want to hide this comment? It will become hidden in your post, but will still be visible via the comment's permalink .

Hide child comments as well

For further actions, you may consider blocking this person and/or reporting abuse

The Future of Information Retrieval: RAG Models vs. Generalized AI

Asad - May 31

How to Use ChatGPT to Kickstart Your Project and Begin Your Journey as a Programmer

Homayoun - Jun 1

CodeBehind Framework - Add Model in View

elanatframework - May 31

Instagram AI policy

Luke Cartwright - May 31

We're a place where coders share, stay up-to-date and grow their careers.

- Your cart is currently empty.

- Unlocking the Power of Neural Networks: Solving the XOR Problem with Ease

Neural networks have been proven to solve complex problems, and one of the most challenging ones is the XOR problem. In this article, we will explore how neural networks can solve this problem and provide a better understanding of their capabilities.

Understanding the XOR Problem in Neural Networks

As an AI expert, I have come across various problems that neural networks struggle to solve. One such problem is the XOR problem. The XOR (Exclusive OR) problem involves classifying input data into two classes based on their features. This may sound simple, but it’s not for traditional neural networks.

The XOR problem is a binary classification problem where the output is 1 if the inputs are different and 0 if they are the same. For example, if we have two inputs A and B, we want to classify them as follows:

Revolutionize Your Blogging Strategy with Content Automation Tools

Revolutionizing Content Creation: How AI Writers are Transforming the Blogging Industry

– If A = 0 and B = 0, then output = 0 – If A = 0 and B = 1, then output = 1 – If A = 1 and B = 0, then output = 1 – If A = 1 and B = 1, then output = 0

This problem may seem easy to solve manually, but it poses a challenge for traditional neural networks because they lack the ability to capture non-linear relationships between input variables.

Why Traditional Neural Networks Struggle to Solve the XOR Problem

Traditional neural networks use linear activation functions that can only model linear relationships between input variables. In other words, they can only learn patterns that are directly proportional or inversely proportional to each other.

For example, if we have two inputs X and Y that are directly proportional (i.e., as X increases, Y also increases), a traditional neural network can learn this relationship easily. However, when there is a non-linear relationship between input variables like in the case of the XOR problem, traditional neural networks fail to capture this relationship.

Traditional neural networks also use a single layer of neurons which makes it difficult for them to learn complex patterns in data. To solve complex problems like the XOR problem with traditional neural networks, we would need to add more layers and neurons which can lead to overfitting and slow learning.

Approaching the XOR Problem with Feedforward Neural Networks

One way to solve the XOR problem is by using feedforward neural networks. Feedforward neural networks are a type of artificial neural network where the information flows in one direction, from input to output.

Revolutionize Your Content with Our AI-Powered Blog Writing Service

Revolutionizing Content Creation: Multilingual AI Blog Service Launches

To solve the XOR problem with feedforward neural networks, we need to use non-linear activation functions such as sigmoid or ReLU (Rectified Linear Unit) that can capture non-linear relationships between input variables. We also need to use multiple layers of neurons to learn complex patterns in data.

Steps for Solving the XOR Problem with Feedforward Neural Networks

Step 1: define the network architecture.

The first step is to define the network architecture. For the XOR problem, we can use a network with two input neurons, two hidden neurons, and one output neuron.

Step 2: Initialize Weights and Biases

The next step is to initialize weights and biases randomly. This is important because it allows the network to start learning from scratch.

Revolutionizing Blogging: How Automated Content Creation is Changing the Game

Revolutionizing Blogging: How AI is Transforming Content Generation

Step 3: train the network.

The third step is to train the network using backpropagation algorithm. During training, we adjust weights and biases based on the error between predicted output and actual output until we achieve a satisfactory level of accuracy.

The Limitations of Single-Layer Feedforward Networks for Solving the XOR Problem

Although single-layer feedforward networks can solve some simple problems like linear regression, they are not suitable for solving complex problems like image recognition or natural language processing.

In fact, single-layer feedforward networks cannot solve problems that require non-linear decision boundaries like in the case of XOR problem. This is because they lack the ability to capture non-linear relationships between input variables.

Single-layer feedforward networks are also limited in their capacity to learn complex patterns in data. They have a fixed number of neurons which means they can only learn a limited number of features. To overcome this limitation, we need to use multi-layer feedforward networks.

Solving the XOR Problem with Multi-Layer Feedforward Neural Networks

Multi-layer feedforward neural networks, also known as deep neural networks, are artificial neural networks that have more than one hidden layer. These networks can learn complex patterns in data by using multiple layers of neurons.

To solve the XOR problem with multi-layer feedforward neural networks, we need to use multiple layers of non-linear activation functions such as sigmoid or ReLU. We also need to use backpropagation algorithm for training.

Steps for Solving the XOR Problem with Multi-Layer Feedforward Neural Networks

The first step is to define the network architecture. For the XOR problem, we can use a network with two input neurons, two hidden layers each with two neurons and one output neuron.

By using multi-layer feedforward neural networks, we can solve complex problems like image recognition or natural language processing that require non-linear decision boundaries.

How Backpropagation Helps in Solving the XOR Problem with Multi-Layer Feedforward Networks

Backpropagation is a supervised learning algorithm used to train neural networks. It is based on the chain rule of calculus and allows us to calculate the error at each layer of the network and adjust weights and biases accordingly.

In the case of multi-layer feedforward networks, backpropagation helps in solving the XOR problem by adjusting weights and biases at each layer based on the error between predicted output and actual output. This allows the network to learn complex patterns in data by using multiple layers of neurons.

Can Convolutional Neural Networks Solve the XOR Problem?

Convolutional neural networks (CNNs) are a type of artificial neural network that is commonly used for image recognition tasks. They use convolutional layers to extract features from images and pooling layers to reduce their size.

Although CNNs are not commonly used for solving simple problems like XOR problem, they can be adapted for this task by using one-dimensional convolutional layers instead of two-dimensional convolutional layers.

However, it’s important to note that CNNs are designed for tasks like image recognition where there is spatial correlation between pixels. For simple problems like XOR problem, traditional feedforward neural networks are more suitable.

Revolutionize Your Blogging with the Latest AI Writing Tool!

Revolutionizing Content Creation: AI-Powered Blogging Platform Takes the Internet by Storm

Differences between recurrent and feedforward neural network approaches to solving the xor problem.

Recurrent neural networks (RNNs) are a type of artificial neural network that can process sequential data such as time-series or natural language data. Unlike feedforward neural networks, RNNs have feedback connections that allow them to store information from previous time steps.

To solve the XOR problem with RNNs, we need to use a special type of RNN called Long Short-Term Memory (LSTM). LSTMs have memory cells that can store information over long periods of time which makes them suitable for processing sequential data.

The main difference between feedforward and recurrent approaches to solving XOR problem is that feedforward networks use fixed-size input and output vectors while RNNs can process sequences of varying length.

Challenges Associated with Using Recurrent Neural Networks for Solving the XOR Problem

Although RNNs are suitable for processing sequential data, they pose a challenge when it comes to solving the XOR problem. This is because XOR problem requires memorizing information over long periods of time which is difficult for RNNs.

RNNs suffer from the vanishing gradient problem which occurs when the gradient becomes too small to update weights and biases during backpropagation. This makes it difficult for them to learn long-term dependencies in data.

To overcome this challenge, we need to use LSTM architecture which has memory cells that can store information over long periods of time.

The Role of Long Short-Term Memory (LSTM) Architecture in Solving the XOR Problem with Recurrent Neural Networks

Long Short-Term Memory (LSTM) is a special type of recurrent neural network that has memory cells that can store information over long periods of time. LSTMs are designed to solve problems where there are long-term dependencies in data.

To solve the XOR problem with LSTMs, we need to use a network with one input neuron, two hidden layers each with four LSTM neurons, and one output neuron. During training, we adjust weights and biases based on the error between predicted output and actual output until we achieve a satisfactory level of accuracy.

By using LSTMs, we can solve complex problems like natural language processing or speech recognition that require memorizing information over long periods of time.

Other Types of Neural Network Architectures for Solving the XOR Problem

Apart from feedforward, convolutional, and recurrent neural networks, there are other types of neural network architectures that can be used for solving the XOR problem. These include:

– Autoencoder neural networks: These are neural networks that are trained to reconstruct their input data. They can be used for feature extraction and anomaly detection.

– Radial basis function (RBF) neural networks: These are neural networks that use radial basis functions as activation functions. They can be used for clustering and classification tasks.

– Self-organizing maps (SOMs): These are unsupervised learning algorithms that can be used for clustering and visualization of high-dimensional data.

The Role of Transfer Learning in Solving Complex Problems Like the XOR Problem

Transfer learning is a technique where we use pre-trained models to solve new problems. It involves using the knowledge learned from one task to improve the performance of another related task.

In the case of XOR problem, transfer learning can be applied by using pre-trained models on similar binary classification tasks. For example, if we have a pre-trained model on classifying images as cats or dogs, we can use this model as a starting point for solving the XOR problem.

Revolutionizing Content Creation: The Rise of Automated Blog Writing Software

Revolutionizing Content Creation: How AI is Streamlining Blog Post Automation

Transfer learning reduces the amount of training data required and speeds up the training process. It also improves the accuracy of models by leveraging knowledge learned from related tasks.

Using Unsupervised Learning Techniques to Solve the XOR Problem Without Labeled Data

Unsupervised learning is a type of machine learning where we train models on unlabeled data. It involves finding patterns in data without any prior knowledge about their labels or categories.

In the case of XOR problem, unsupervised learning techniques like clustering or dimensionality reduction can be used to find patterns in data without any labeled examples. For example, we can use k-means clustering algorithm to group input data into two clusters based on their features.

However, unsupervised learning techniques may not always provide accurate results compared to supervised learning techniques that rely on labeled examples.

Practical Applications of Solving the XOR Problem Using Neural Networks

Although XOR problem may seem like a simple problem, it has practical applications in various fields such as:

– Cryptography: XOR is commonly used in encryption algorithms to scramble data.

– Robotics: XOR can be used to control robotic arms and legs based on sensory input.

– Finance: XOR can be used for fraud detection by classifying transactions as fraudulent or non-fraudulent based on their features.

The Limitations and Drawbacks of Using Neural Networks for Solving Problems Like the XOR Problem

Although neural networks have shown great promise in solving complex problems, they have limitations and drawbacks that need to be considered. Some of these include:

– Overfitting: Neural networks can overfit training data which leads to poor generalization on new data.

– Slow learning: Training large neural networks can take a long time due to the large number of parameters involved.

– Black box models: Neural networks are often considered black box models because it’s difficult to interpret how they arrive at their predictions.

– Lack of transparency: Due to their complexity, neural networks lack transparency which makes it difficult for humans to understand how they work.

Despite these limitations, neural networks remain an important tool for solving complex problems in various fields.

In conclusion, neural networks have proven to be a powerful tool in solving the XOR problem. With their ability to learn and adapt, they can tackle complex tasks that traditional programming methods struggle with. If you’re interested in exploring the possibilities of AI for your business or project, don’t hesitate to get in touch with us and check out our AI services. We’re always happy to help!

https://www.researchgate.net/publication/346707273/figure/fig2/AS:11431281104506106@1670094751465/The-Running-Time-on-Random-S-boxes_Q320.jpg

How neural network solves the XOR problem?

To solve the XOR problem using neural networks, one can use either Multi-Layer Perceptrons or a neural network that consists of an input layer, a hidden layer, and an output layer. As the neural network processes data through forward propagation, the weights of each layer are adjusted accordingly and the XOR logic is executed.

Why is the XOR problem interesting to neural network researchers?

Neural network researchers find the XOR problem particularly intriguing because it is a complicated binary function that cannot be resolved by a neural network.

https://www.researchgate.net/publication/346705804/figure/fig1/AS:11431281104839310@1670267196273/Schematics-of-Arbiter-PUF-XOR-Arbiter-PUF-and-Interpose-PUF_Q320.jpg

How many neurons does it take to solve XOR?

The XOR problem can be solved using just two neurons, according to a statement made on January 18th, 2017.

How do neural networks solve problems?

Artificial neural networks are a type of machine learning algorithm inspired by the structure of the human brain. They can solve problems using trial and error, without being explicitly programmed with rules to follow. These algorithms are part of a larger category of machine learning techniques.

Can decision trees solve the XOR problem?

It is feasible to utilize decision trees to execute the XOR operation, as of October 4th, 2019.

Why can t the XOR problem be solved by a one layer perceptron?

The perceptron is limited to being able to handle only linearly separable data and cannot replicate the XOR function.

- Martin Thoma

XOR tutorial with TensorFlow

The XOR-Problem is a classification problem, where you only have four data points with two features. The training set and the test set are exactly the same in this problem. So the interesting question is only if the model is able to find a decision boundary which classifies all four points correctly.

Neural Network basics

I think of neural networks as a construction kit for functions. The basic building block - called a "neuron" - is usually visualized like this:

It gets a variable number of inputx \(x_0, x_1, \dots, x_n\) , they get multiplied with weights \(w_0, w_1, \dots, w_n\) , summed and a function \(\varphi\) is applied to it. The weights is what you want to "fine tune" to make it actually work. When you have more of those neurons, you visualize it like this:

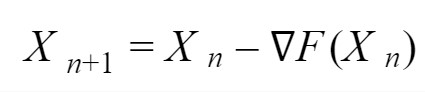

In this example, it is only one output and 5 inputs, but it could be any number. The number of inputs and outputs is usually defined by your problem, the intermediate is to allow it to fit more exact to what you need (which comes with some other implications).

Now you have some structure of the function set, you need to find weights which work. This is where backpropagation 3 comes into play. The idea is the following: You took functions ( \(\varphi\) ) which were differentiable and combined them in a way which makes sure the complete function is differentiable. Then you apply an error function (e.g. the euclidean distance of the output to the desired output, Cross-Entropy) which is also differentiable. Meaning you have a completely differentiable function. Now you see the weights as variables and the data as given parameters of a HUGE function. You can differentiate (calculate the gradient) and go from your random weights "a step" in the direction where the error gets lower. This adjusts your weights. Then you repeat this steepest descent step and hopefully end up some time with a good function.

For two weights, this awesome image by Alec Radford visualizes how different algorithms based on gradient descent find a minimum ( Source with even more of those):

So think of back propagation as a shortsighted hiker trying to find the lowest point on the error surface: He only sees what is directly in front of him. As he makes progress, he adjusts the direction in which he goes.

Targets and Error function

First of all, you should think about how your targets look like. For classification problems, one usually takes as many output neurons as one has classes. Then the softmax function is applied. 1 The softmax function makes sure that the output of every single neuron is in \([0, 1]\) and the sum of all outputs is exactly \(1\) . This means the output can be interpreted as a probability distribution over all classes.

Now you have to adjust your targets. It is likely that you only have a list of labels, where the \(i\) -th element in the list is the label for the \(i\) -th element in your feature list \(X\) (or the \(i\) -th row in your feature matrix \(X\) ). But the tools need a target value which fits to the error function. The usual error function for classification problems is cross entropy (CE). When you have a list of \(n\) features \(x\) , the target \(t\) and a classifier \(clf\) , then you calculate the cross entropy loss for this single sample by:

Now we need a target value for each single neuron for every sample \(x\) . We get those by so called one hot encoding : The \(k\) classes all have their own neuron. If a sample \(x\) is of class \(i\) , then the \(i\) -th neuron should give \(1\) and all others should give \(0\) . 2

sklearn provides a very useful OneHotEncoder class. You first have to fit it on your labels (e.g. just give it all of them). In the next step you can transform a list of labels to an array of one-hot encoded targets:

Install Tensorflow

The documentation about the installation makes a VERY good impression. Better than anything I can write in a few minutes, so ... RTFM 😜

For Linux systems with CUDA and without root privileges, you can install it with:

But remember you have to set the environment variable LD_LIBRARY_PATH and CUDA_HOME . For many configurations, adding the following lines to your .bashrc will work: